This article is contributed. See the original author and article here.

NaN stands for Not a Number. This scenario was a customer trying to insert a parquet file into SQL, but he was not able to do it.

That’s because of the following error in one column: Error converting values NaN or Infinity to type ‘FLOAT’. NaN and Infinity are not supported.

The example bellow is based on this piece of documentation:

https://docs.microsoft.com/en-us/azure/synapse-analytics/spark/synapse-spark-sql-pool-import-export

I added some customization and also I have some nice discussion with my colleague Diya Mothafar. So my colleague mentioned to do the same using Pandas which also valid. My demo will not use Pandas, but again it also does the job.

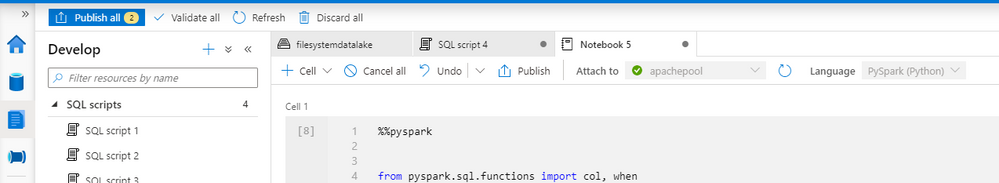

First open Synapse Studio -> Notebook ->PySpark

Fig 1 PySpark

%%pyspark

from pyspark.sql.functions import col, when

data_path = spark.read.load('abfss://filesystemdatalake@mystorage.dfs.core.windows.net/test/filetest.snappy.parquet', format='parquet')

#here we handle the NaN values

data_path = data_path.replace(float('nan'), None)

data_path.createOrReplaceTempView("pysparkdftemptable")

Add a Scala cell into the Notebook. Note the table will be created with the JOB you do not need to create in advanced.

Fig 2 Add Cell

%%spark

val scala_df = spark.sqlContext.sql ("select * from pysparkdftemptable")

//scala_df.show(100)

scala_df.write.sqlanalytics("YourDatabaseName.dbo.PySparkTable", Constants.INTERNAL)

Your new cell should look like this

Fig 3 Cell

Once the Job is complete. You can check the results by opening SSMS and querying the table.

That is it!

Liliam

Uk Engineer

Brought to you by Dr. Ware, Microsoft Office 365 Silver Partner, Charleston SC.

Recent Comments