Acceleration of Data-Driven App Development with Copilot



At Microsoft Build, 50 updates were announced, including several for Power Platform. This article looks at one of them: Excel to App with Copilot. The feature uses Copilot to add data processing capabilities, helping you clean and prepare your data before you even start building the app. Copilot can now ingest any Excel file, no matter how loosely structured, and create robust structured tables with a variety of data types.

Find out more about the Microsoft Build announcements in the Microsoft Build 2023 Book of News.

Earn a free certification voucher by completing at least one Cloud Skills Challenge from the Microsoft Build Cloud Skills Challenge.

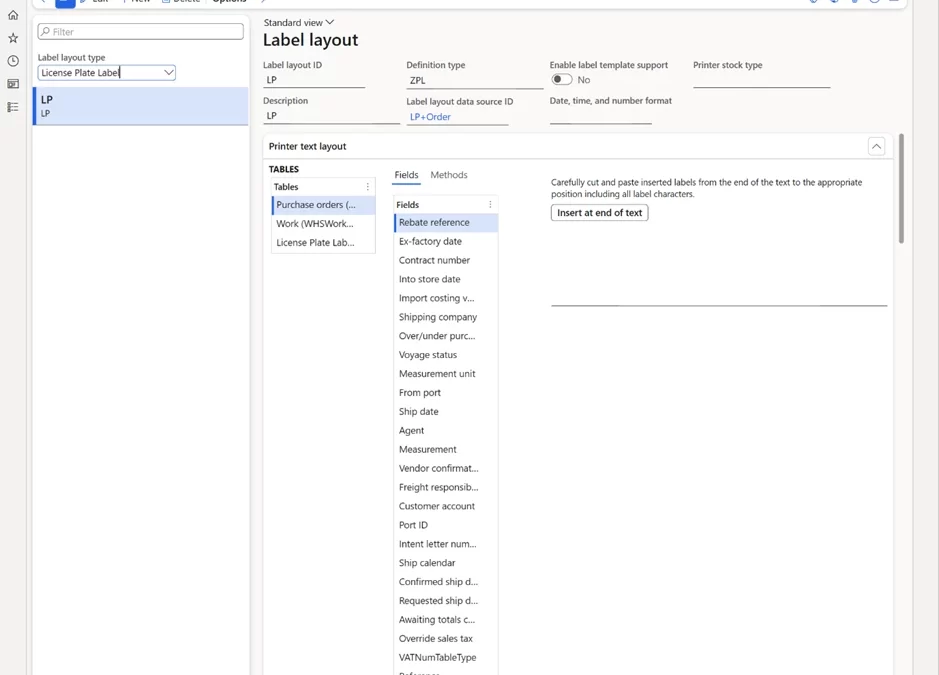

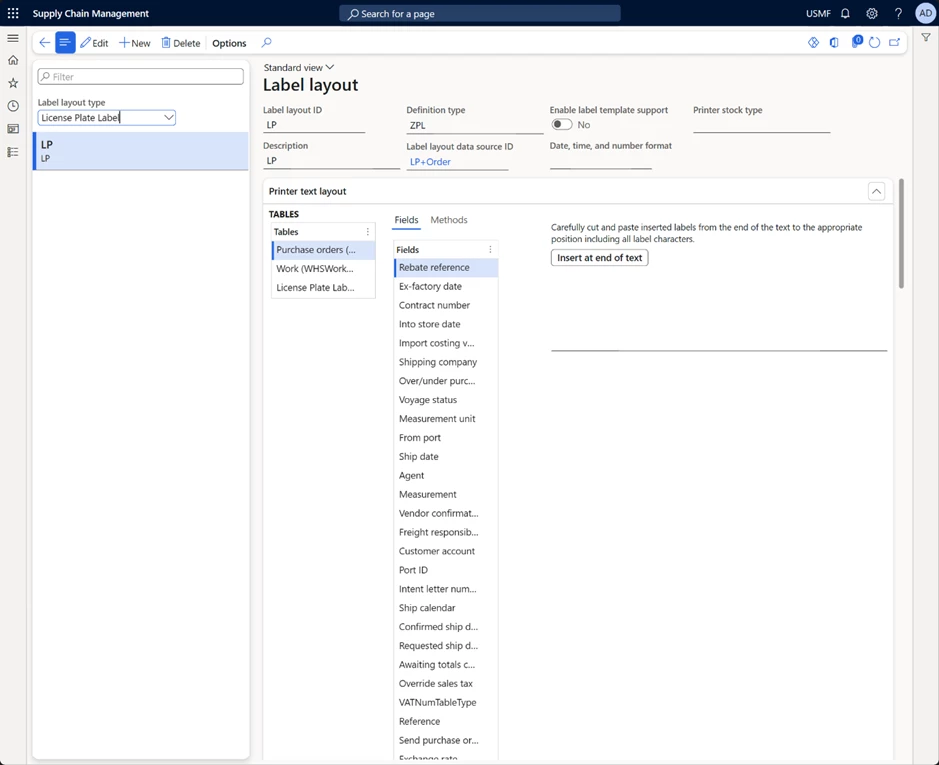

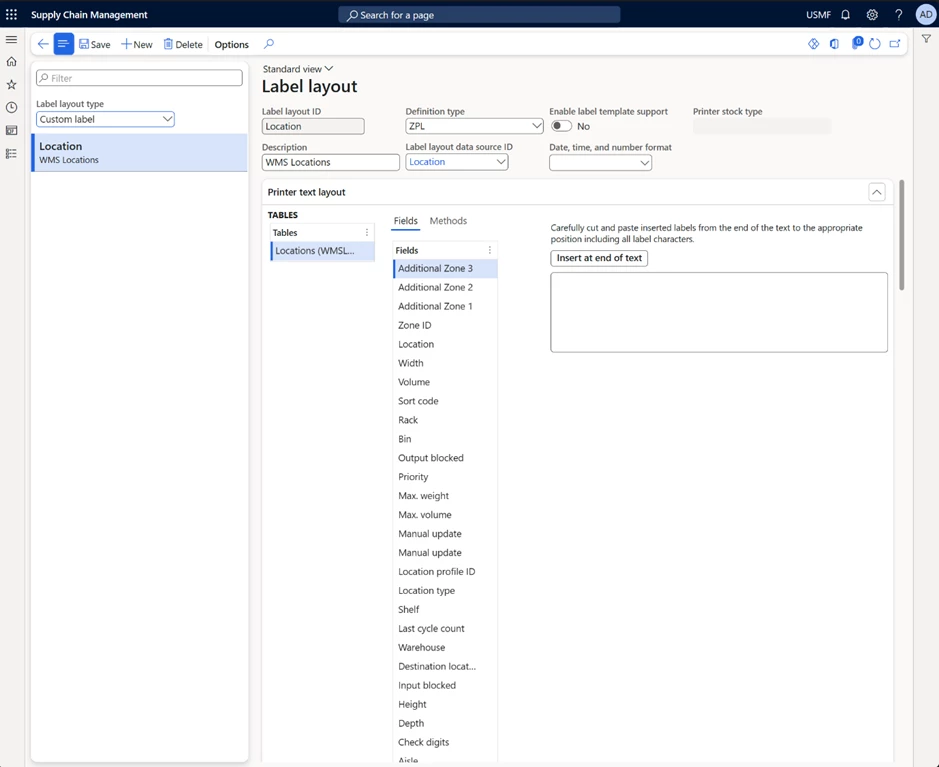

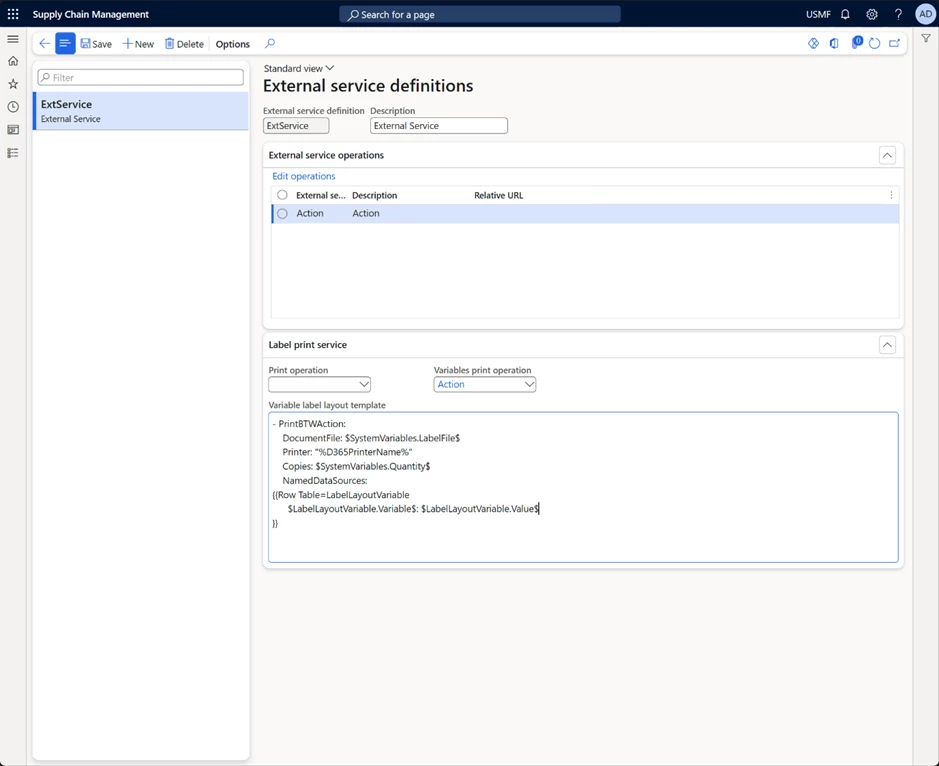

Excel to App

Students, rising developers, and professional developers can rapidly build solutions in Power Apps by dragging and dropping or linking to a data source such as Excel, and then building the UI on top of that data.

Things to consider

- You can import your Excel file as it is, and Power Apps will create a custom Dataverse table and a Canvas App for you.

- You do not need to format your table within Excel (for example, you do not have to use Format as Table in your spreadsheet).

- Your Canvas App and Dataverse table will be built from the first sheet of your Excel spreadsheet.

- The Excel file needs to be closed when you import it into Power Apps.
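Because only the first sheet is used, it can be worth confirming which sheet comes first before uploading. The following is a minimal sketch, not part of Power Apps: it assumes a standard `.xlsx` file (a zip archive whose `xl/workbook.xml` lists sheets in tab order) and uses only the Python standard library. The function names `sheet_names` and `first_sheet` are hypothetical helpers introduced for illustration.

```python
import zipfile
import xml.etree.ElementTree as ET

# Namespace of the main SpreadsheetML schema used in xl/workbook.xml.
# Assumption: the workbook was saved in the default (transitional) .xlsx format.
MAIN_NS = "{http://schemas.openxmlformats.org/spreadsheetml/2006/main}"


def sheet_names(xlsx_path):
    """Return the worksheet names of an .xlsx file in tab order."""
    with zipfile.ZipFile(xlsx_path) as archive:
        root = ET.fromstring(archive.read("xl/workbook.xml"))
    return [sheet.get("name") for sheet in root.iter(MAIN_NS + "sheet")]


def first_sheet(xlsx_path):
    """Return the name of the first worksheet, the one the import is based on."""
    names = sheet_names(xlsx_path)
    if not names:
        raise ValueError("workbook has no sheets")
    return names[0]
```

Calling `first_sheet` with the path to your own workbook returns the name of the sheet that the table and app would be built from. If it is not the sheet you expect, reorder the tabs in Excel, save, and close the file before importing.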

Short Demo

Animation showing a short demo of the Excel to App feature with Copilot in Power Apps

Practical steps to follow

- Prepare the Excel file you want to use, or create a new one.

- Go to Power Apps and sign in with your account.

- On the homepage, click Start with data.

- In the Start with data wizard, click Upload an Excel file.

- Click the Select from device button, then choose your Excel file from your device.

- You will get a preview of the custom Dataverse table that will be created for you. To change the table name, click Edit Table Properties.

- The columns of your table are assigned appropriate data types. To change a column, click the drop-down next to it and choose Edit Column. Once done, click Create app.

- Once you have clicked Create app, Copilot builds a Canvas App from a standard template that you can modify to fit your needs.

Let’s add more AI capabilities with Copilot

- Within your app, click Settings, then choose Upcoming Features.

- Search for Copilot and turn on the toggle to enable it. Once enabled, close the dialog box.

- Once the Copilot component is enabled, click Insert and choose Copilot (preview).

- Once the Copilot component is added to the screen, select your data source.

- Once you have chosen your data source, the full Copilot component is added to your app. This allows your app users to use Copilot to understand and analyze their data using suggested prompts.

- Play the app and see Copilot in action. Choose one of the suggested prompts, or type your own, for example asking how many tickets are open.
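To sanity-check an answer such as "how many tickets are open", you can compute the same count directly from your data. The sketch below is only an illustration of what that question corresponds to, not a description of how Copilot works internally. The sample rows and the column names `Title` and `Status` are hypothetical; substitute the columns from your own table.

```python
import csv
import io

# Hypothetical sample data; the column names "Title" and "Status" are assumptions.
SAMPLE = """Title,Status
Printer offline,Open
Password reset,Closed
VPN drops,Open
"""


def count_by_value(rows, column, value):
    """Count rows whose `column` equals `value`, ignoring case and surrounding spaces."""
    target = value.strip().lower()
    return sum(
        1 for row in rows if (row.get(column) or "").strip().lower() == target
    )


rows = list(csv.DictReader(io.StringIO(SAMPLE)))
open_tickets = count_by_value(rows, "Status", "Open")
print(open_tickets)  # prints 2 for the sample rows above
```

If the number Copilot reports differs from a direct count like this, check whether the status values in your spreadsheet are spelled consistently.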

Congratulations! You built a Canvas App from your Excel file using Copilot.
