FedEx and Dynamics 365 reimagine commerce experiences


The pandemic has accelerated the adoption of digital technologies for obtaining data insights. The multi-year collaboration between FedEx and Microsoft, announced in May 2020, aims to reinvent commerce and provide businesses with actionable insights to win in an increasingly competitive landscape. On January 24, we announced a new cross-platform “logistics as a service” solution as the next phase of this collaboration, which will help transform commerce by combining the global digital and logistics network of FedEx with the power of Microsoft’s cloud, including Microsoft Dynamics 365. This post explores how this next step brings a unique integration between FedEx and Dynamics 365 Intelligent Order Management. We are making this pre-built connector available in preview for all applicable markets during the second half of 2022.

Faster and more cost-effective delivery

According to McKinsey & Company, a positive customer experience is hugely meaningful to a retailer’s success: it yields 20 percent higher customer-satisfaction rates, a 10 to 15 percent boost in sales conversion rates, and an increase in employee engagement of 20 to 30 percent.[1] Consumers’ expectations for fast delivery, combined with the business need to maintain profit margins, make it even more challenging for organizations to offer faster, cost-effective delivery options.

The FedEx integration with Dynamics 365 Intelligent Order Management tackles this challenge by pairing orders with near real-time transportation network data and inventory insights so that brands can optimize fulfillment and deliver on their order promise with increased precision. Retailers can also predict shipment delays and proactively work around them by selecting alternative ways to fulfill the order on time and in full while staying profitable.

Near real-time delivery status communications

Manufacturers, distributors, consumer packaged goods (CPG) companies, and retailers understand that success depends on their ability to consistently deliver a delightful customer experience, which is increasingly a function of the retail supply chain. A recent Gartner survey found that 83 percent of companies demand that supply chains improve customer experience (CX) as part of the digital business strategy.[2] Retail supply chains can improve the customer experience by offering near real-time delivery status communications for customer orders. This is one of the enhancements that customers can look forward to as part of our collaboration with FedEx.

Through the integration of Dynamics 365 Intelligent Order Management with FedEx, brands will be able to provide the near real-time delivery status communications that consumers desire and expect, helping ensure a delightful customer experience.

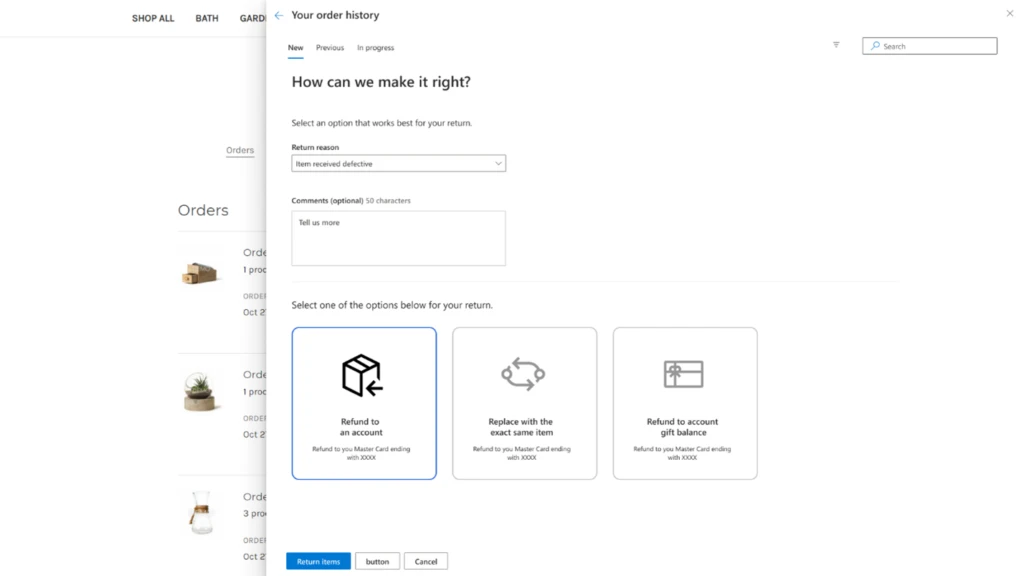

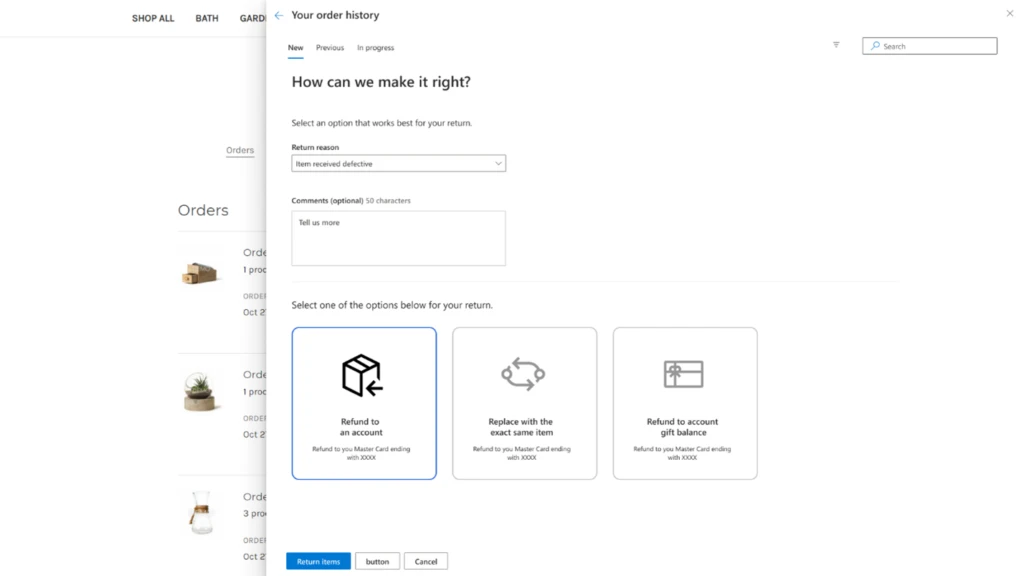

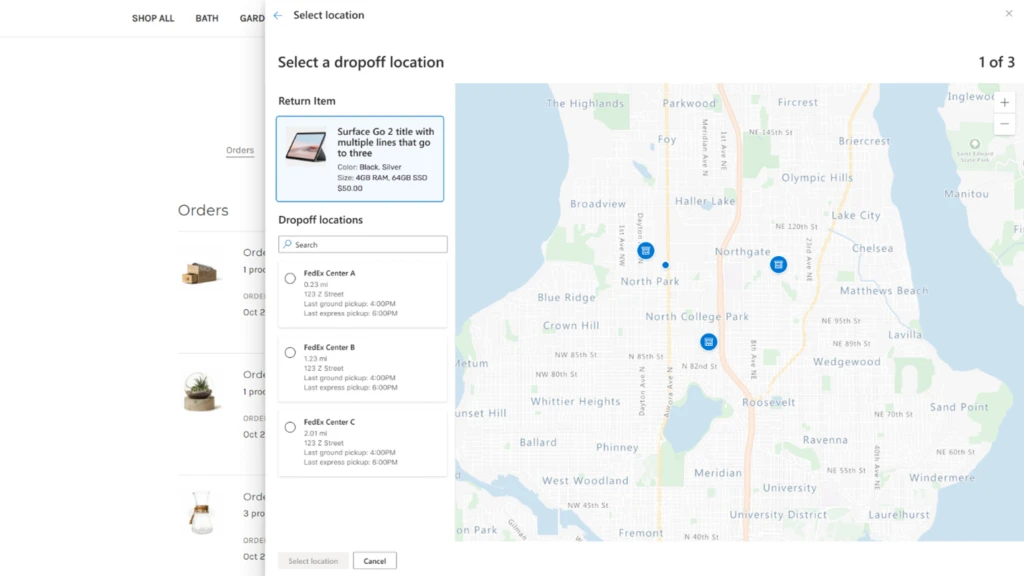

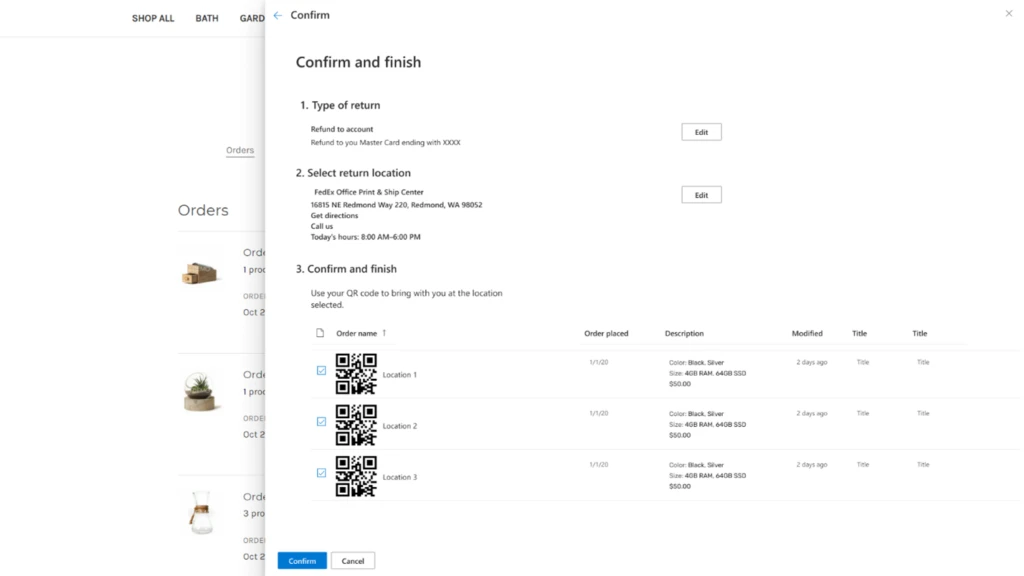

Convenient and frictionless returns

Providing easy returns is no longer optional for retailers. In fact, according to Statista, 86 percent of global consumers look for easy returns when deciding where to buy, and 81 percent are likely to switch to a competitor if they have a bad return experience.[3] With so much at stake, it is not surprising that retailers are looking for ways to leverage technology to offer convenient, frictionless returns. By partnering with FedEx, Dynamics 365 Intelligent Order Management further enables brands to reliably provide free two-day shipping options to reduce shopping cart abandonment and effectively compete in the increasingly digital commerce landscape.

Through the partnership, organizations can also offer a better returns experience for their customers. End customers will enjoy hassle-free return options with the 60,000+ FedEx drop-off locations, convenient at-home pickups, and eco-friendly alternatives that support sustainability initiatives, such as printer-less QR code return labels and no-box returns.

In addition to the enhancements that our partnership with FedEx will bring to Dynamics 365 Intelligent Order Management, customers also benefit from the ability to get up and running quickly without a costly rip-and-replace of existing enterprise resource planning (ERP) systems. Because Dynamics 365 Intelligent Order Management is built on a modern and open platform with out-of-the-box, pre-built connectors to a large ecosystem of order intake, shipping, and tax calculation partners, organizations can scale their business. It also allows companies to accept orders from any order source, such as online e-commerce, marketplaces, mobile apps, or traditional sources such as electronic data interchange (EDI). Users can fulfill those orders from a mix of internal warehouses, third-party logistics providers, retail stores, or drop-ship partner locations.

What’s next

We have seen that Dynamics 365 Intelligent Order Management is driving improvements in retail supply chains through its FedEx collaboration. We have also shown how the upcoming integration with FedEx will help brands deliver modern, more delightful experiences directly to customers, including faster, more cost-effective delivery, near real-time communications on delivery status, and convenient, frictionless returns. If you are ready to apply an intelligent order management solution to drive improvement in these areas, we invite you to take our guided tour or get started today with the Dynamics 365 Intelligent Order Management free trial.

Sources:

1. McKinsey & Company, Personalizing the customer experience: Driving differentiation in retail, April 28, 2020.

2. Gartner, Four Steps to Become a Customer-Centric Supply Chain, 2021. GARTNER is the registered trademark and service mark of Gartner Inc., and/or its affiliates in the U.S. and internationally and has been used herein with permission. All rights reserved.

3. Statista, Consumer attitudes towards return policy of retailers and its influence on their purchasing decision worldwide 2020.

The post FedEx and Dynamics 365 reimagine commerce experiences appeared first on Microsoft Dynamics 365 Blog.
