Zero Trust for Endpoints and Applications – Essentials Series – Episode 3

See how you can apply Zero Trust principles and policies to your endpoints and apps, the conduits for users to access your data, network, and resources. Jeremy Chapman walks through your options, controls, and recent updates to implement the Zero Trust security model.

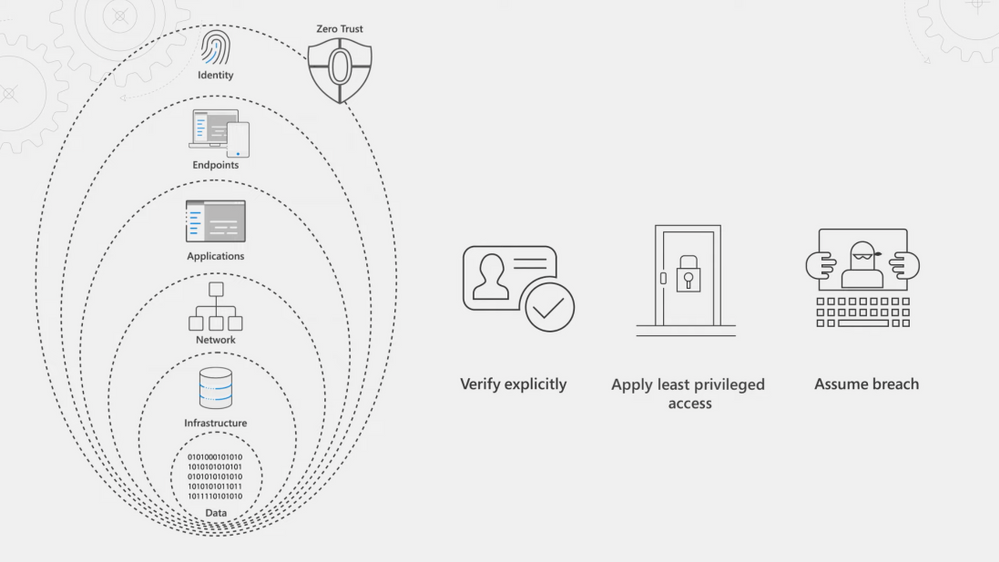

Our Essentials episode gave a high-level overview of the principles of the Zero Trust security model, spanning identity, endpoints, applications, networks, infrastructure, and data. For Zero Trust, endpoints refer to the devices people use every day: both corporate and personally owned computers and mobile devices. The prevalence of remote work means devices can connect from anywhere, so the controls you apply should be correlated to the level of risk those endpoints pose. Even for corporate-managed endpoints that run within your firewall or your VPN, you will still want to apply the principles of Zero Trust: verify explicitly, apply least privileged access, and assume breach.

We’ve thought about the endpoint attack vectors holistically and have solutions to help you protect your endpoints and the resources that they’re accessing.

QUICK LINKS:

01:16 — Register your endpoints

01:49 — Configure and enforce compliance

02:31 — Search policies with new settings catalog

03:15 — Group Policy analytics

04:00 — Microsoft Defender for Endpoint

04:36 — Microsoft Cloud App Security (MCAS)

06:36 — Reverse proxy

07:06 — Authentication context

08:44 — Anomaly detection policies

09:21 — Wrap up

Link References:

For more on our series, keep checking back to https://aka.ms/ZeroTrustMechanics

Watch our Zero Trust Identity episode at https://aka.ms/IdentityMechanics

Learn more about the Zero Trust approach at https://aka.ms/zerotrust

Unfamiliar with Microsoft Mechanics?

We are Microsoft’s official video series for IT. You can watch and share valuable content and demos of current and upcoming tech from the people who build it at Microsoft.

- Subscribe to our YouTube: https://www.youtube.com/c/MicrosoftMechanicsSeries?sub_confirmation=1

- Join us on the Microsoft Tech Community: https://techcommunity.microsoft.com/t5/microsoft-mechanics-blog/bg-p/MicrosoftMechanicsBlog

- Watch or listen via podcast here: https://microsoftmechanics.libsyn.com/website

Keep getting this insider knowledge, join us on social:

- Follow us on Twitter: https://twitter.com/MSFTMechanics

- Follow us on LinkedIn: https://www.linkedin.com/company/microsoft-mechanics/

- Follow us on Facebook: https://facebook.com/microsoftmechanics/

-Welcome back to our series on Zero Trust on Microsoft Mechanics. In our Essentials episode, we gave a high-level overview of the principles of the Zero Trust security model, spanning identity, endpoints, applications, networks, infrastructure and data. Now in this episode we’re going to take a closer look at how you can apply Zero Trust principles and policies to your endpoints and apps. And these are the conduits for users to access your data, your network and resources. We’re going to walk you through all your options, your controls and even recent updates as you implement the Zero Trust security model.

-So in Zero Trust, endpoints refer to the devices that people use every day. Now these can be both corporate and personally owned computers and mobile devices. And in an era of remote work, they can also be connected from anywhere. This means that the controls that you apply should be correlated to the level of risk that those endpoints pose. And even for corporate-managed endpoints that are running within your firewall or your VPN, you’ll still want to apply the principles of Zero Trust: verify explicitly, apply least privileged access, and assume breach. Now, the good news here is that we’ve thought about the endpoint attack vectors holistically and have solutions to help you protect your endpoints and the resources that they’re accessing.

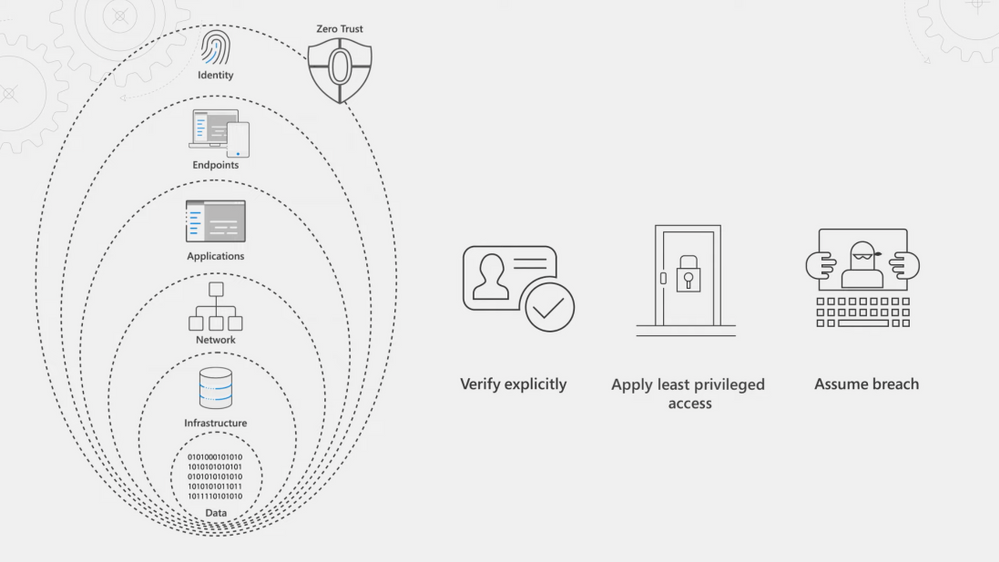

-First, as we highlighted in the identity episode that you can watch at aka.ms/IdentityMechanics, your endpoints should be registered with your centralized identity provider. Here, Azure Active Directory serves as the front door for your device endpoints. Beyond assessing managed and unmanaged devices at sign-in with conditional access, it also enables devices to register with or join your directory service. This relationship between the endpoint and the identity provider ultimately allows for deeper policy management and control.

-Next, to configure and enforce device compliance, Microsoft Endpoint Manager includes the services and tools that you need to manage and monitor mobile devices, desktop computers, virtual machines and even servers. It comprises Microsoft Intune, a cloud-based mobile device management service, and Configuration Manager, an on-premises management solution. Microsoft Endpoint Manager offers a comprehensive set of policies, spanning MDM policies and the ADMX-backed policies that power Active Directory group policy, as you can see here with the policies labeled administrative templates, as well as deep integration with Azure Active Directory and Microsoft Defender for Endpoint for defense-in-depth controls. In fact, now when you create a device configuration profile, you can search across all policy providers supported by Microsoft Endpoint Manager using the new settings catalog.

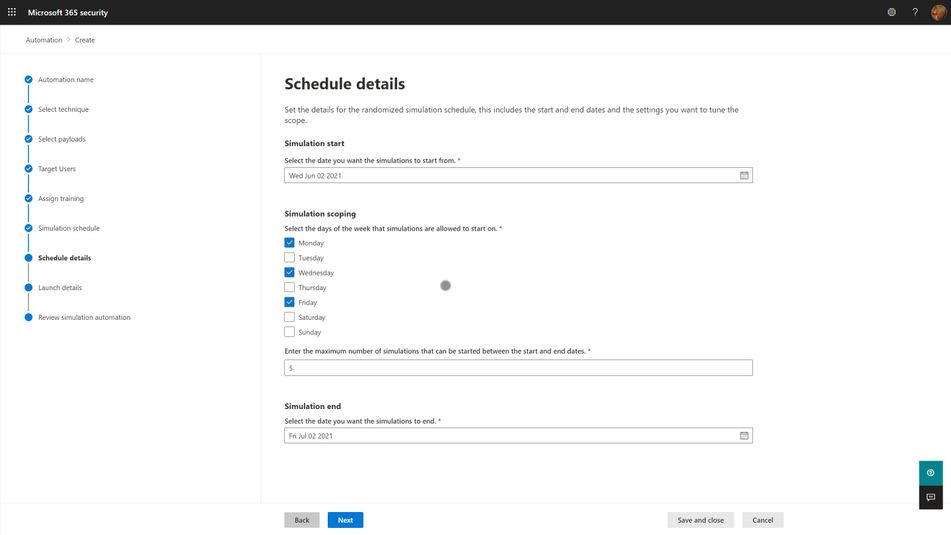

-For example, if I search here for script, I’m going to find all the policies related to scripting. You’ll see the policies are from multiple providers. I’ll choose Defender here and select all of them. Then I’ll go ahead and set each of them to block, one by one, and move to the next step. And now with the new device filters, we can even choose to scope the policy only to corporate-owned devices. Additionally, Microsoft Endpoint Manager includes policies to require local drive encryption across platforms, along with secure boot and code integrity on Windows, to keep your devices safe. If you’re using Active Directory group policy, Endpoint Manager’s Group Policy analytics assesses your GPOs, then helps you migrate your settings to the cloud.

-And this even works for third-party policy templates. So for example, here with this one called Chrome GPO, I’ve already imported the policies in my XML file and you can see that Endpoint Manager’s already matched those policies, in this case matched them to Edge, and everything looks good. So I’m going to go ahead and migrate them. And I’ll select each of the items one by one. There we go, and I’ll give it a name. And now I can assign the policy to my groups and I’ll add all devices in my case. And now I can publish out that policy.
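To make the device filter and compliance requirements from this section more concrete, here is a minimal Python sketch of the underlying logic: scope a policy to corporate-owned devices, then check drive encryption, secure boot and code integrity. It is an illustration only and does not call Endpoint Manager. The `Device` record, its field names and the result strings are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Device:
    """Hypothetical device record; field names are illustrative only."""
    name: str
    ownership: str        # assumed values: "corporate" or "personal"
    disk_encrypted: bool
    secure_boot: bool
    code_integrity: bool


def in_scope(device: Device) -> bool:
    """Device filter: apply the policy to corporate-owned devices only."""
    return device.ownership == "corporate"


def failed_checks(device: Device) -> list[str]:
    """Return the names of the compliance requirements the device fails."""
    checks = {
        "disk_encrypted": device.disk_encrypted,
        "secure_boot": device.secure_boot,
        "code_integrity": device.code_integrity,
    }
    return [name for name, passed in checks.items() if not passed]


def evaluate(device: Device) -> str:
    """Classify a device as out of scope, compliant, or non-compliant."""
    if not in_scope(device):
        return "not_applicable"
    return "compliant" if not failed_checks(device) else "non_compliant"


laptop = Device("laptop-01", "corporate", True, True, False)
print(evaluate(laptop), failed_checks(laptop))
```

In a real deployment the management service reports these signals; the point of the sketch is only that the filter decides who the policy applies to, and the checks decide whether an in-scope device passes.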

-Now with devices under management, Microsoft Endpoint Manager enables you to install required apps on devices across most common platforms. Next, with your devices under management, in the Endpoint security blade of MEM, under Microsoft Defender for Endpoint, you’ll see the service can be distributed to your desktop and mobile platforms. This will provide preventative protection, post-breach detection, automated investigation and response. With Defender in place, if devices are impacted by security incidents, your SecOps team can easily identify how to remediate issues and even, as you can see here, pass the required configuration changes to you, the device management team, using Endpoint Manager. You can then make the changes, and that status will even get passed back to your SecOps team.

-So those are just some of the controls for endpoints and locally installed applications. But for comprehensive management of app experiences, the Zero Trust security model needs to be applied to all of your apps. Now Microsoft Cloud App Security, or MCAS, is a cloud access security broker that helps you extend real-time controls to any app in any browser, as well as comprehensively discover, secure, control and provide threat protection and detection across your app ecosystem.

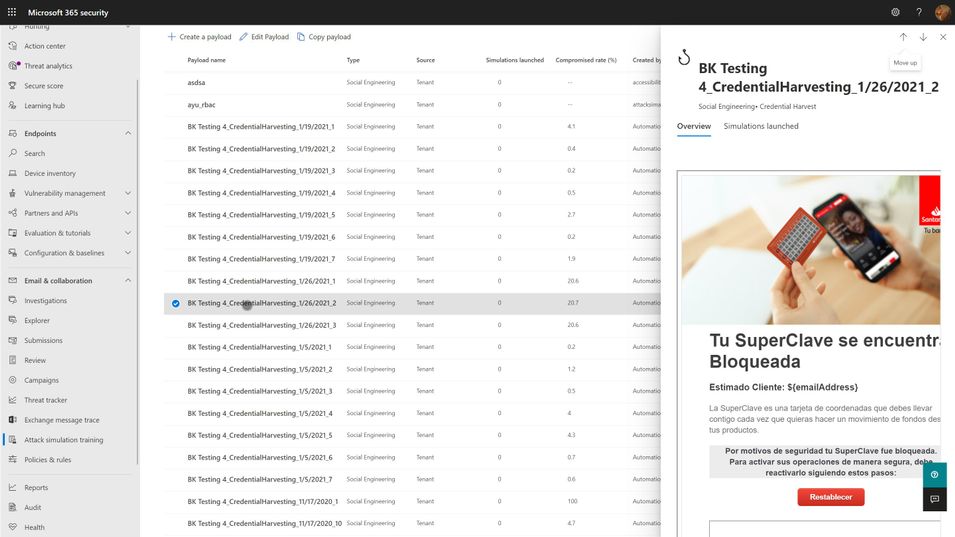

-Let’s start with Shadow IT and how MCAS can help you apply the Zero Trust principles of verify explicitly and assume breach to avoid the use of unsanctioned apps that have not been verified for your organization and that may introduce risk. Now, MCAS gives you visibility into the cloud apps and services used in your organization, assesses them for risk and provides sophisticated analytics. You can then use this to make informed decisions about whether you want to sanction the apps that you discover or block them from being accessed. MCAS discovery will continually assess the apps and services that people are using, and it also enables discovery of apps running on your endpoints via its integration with Microsoft Defender for Endpoint.

-So here I’ll take a look at an app, File Dropper, and you can see that it has a score of two out of 10. I’m going to click into it and you can see all the details about the app and what it is, security related considerations, compliance and legal information. Additionally, if I drill into users, you’ll see that each discovered app gives specifics on users, their IP addresses, total data transacted, as well as the risk level by area. Now, once you’ve discovered your apps and assessed their risks, you can then take action. You can sanction apps and manage them more tightly with the controls that we’ll show in the next section or block unsanctioned apps outright.

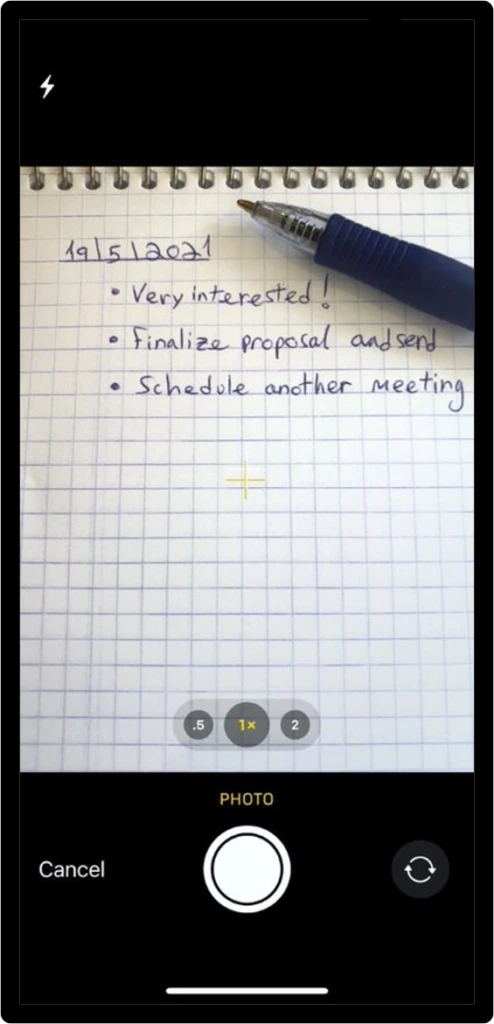

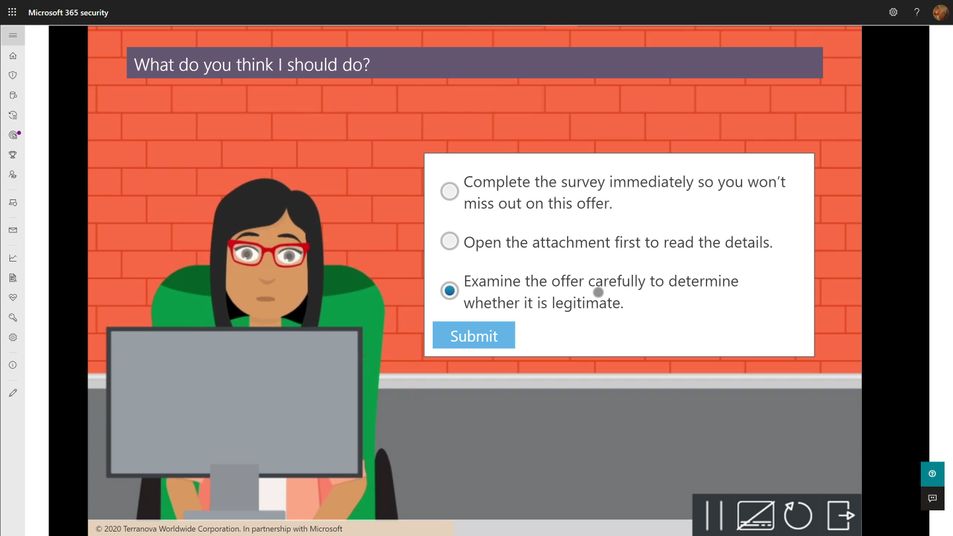
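The sanction-or-block decision described above can be sketched as a simple triage over discovered apps. This is a toy illustration, not how MCAS computes or applies its scores. It assumes a 0 to 10 score where a lower number means higher risk (consistent with File Dropper's two out of 10), and the thresholds and the `DiscoveredApp` type are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DiscoveredApp:
    """Hypothetical record for an app found by discovery."""
    name: str
    score: int  # assumed scale: 0 (riskiest) to 10 (least risky)


def triage(app: DiscoveredApp, block_below: int = 4, sanction_from: int = 8) -> str:
    """Suggest an action for a discovered app based on its score.

    The thresholds are illustrative assumptions; each organization
    would pick its own and usually review borderline apps by hand.
    """
    if app.score < block_below:
        return "block"
    if app.score >= sanction_from:
        return "sanction"
    return "review"


for app in [DiscoveredApp("File Dropper", 2), DiscoveredApp("Example Notes", 6)]:
    print(app.name, "->", triage(app))
```

The middle "review" band reflects the point made in the transcript: the score informs a decision that a person still makes.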

-Once you’ve sanctioned the applications that you want employees to use, you can layer on the Zero Trust principle of least privileged access. You’ll then use policy to protect the information that resides in those apps and detect potential threats in your environment. Now, web apps configured with an identity provider can be connected via reverse proxy to enable real-time in-session controls. And applications with enterprise-grade APIs can also be connected to Cloud App Security to monitor files and activities in those cloud apps.

-The reverse proxy service in MCAS is easily integrated with conditional access policies that you set in Azure Active Directory to enable granular control over in-session activities, for example, the ability to download or upload sensitive information. Additionally, features like auth context, which are natively integrated with Office 365 apps like SharePoint Online, are extended to any web app. Now this enforces controls like step-up multi-factor authentication in session while attempting to download sensitive data, like you can see here with our policy for Google Workspace. This means that you can work as usual and access content from any expected location or device.

-But, for example, if I change location to a coffee shop and try to download a sensitive file, then authentication context will kick in. It’ll reevaluate my session due to the new security risk that’s associated with the IP address change and, as you can see here, require another factor of authentication with this message: “Security check required.” Popular third-party applications can also be directly integrated with MCAS through our API-based app connectors. MCAS also leverages Microsoft Information Protection as part of its data loss prevention capabilities.
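The coffee shop scenario above can be sketched as a small in-session decision function: downloads of sensitive files are allowed from the sign-in location, but an IP address change triggers a step-up prompt unless the extra factor has already been provided. This is a simplified model under stated assumptions, not the Azure Active Directory or MCAS implementation. The `Session` type, its fields and the result strings are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """Hypothetical in-session state tracked for a signed-in user."""
    user: str
    sign_in_ip: str
    stepped_up: bool = False  # True once the extra factor was provided


def authorize_download(session: Session, current_ip: str, file_is_sensitive: bool) -> str:
    """Decide whether a download may proceed or needs step-up MFA."""
    if not file_is_sensitive:
        return "allow"
    # Assumed risk signal: the request comes from a different IP address
    # than the one used at sign-in.
    ip_changed = current_ip != session.sign_in_ip
    if ip_changed and not session.stepped_up:
        return "require_mfa"  # corresponds to "Security check required"
    return "allow"


session = Session(user="user@example.com", sign_in_ip="203.0.113.10")
print(authorize_download(session, "203.0.113.10", True))   # same location
print(authorize_download(session, "198.51.100.7", True))   # new location
```

The design choice worth noting is that the check runs per action inside the session, rather than only once at sign-in.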

-So let’s configure another policy, and this time a file policy for Google Workspace. In the policy, you can set different kinds of filters, such as access level and the application that you want to scope the policy to, and you can choose to apply this to all files, selected folders or more. Now in this case, our goal is to make sure that any files that have sensitive information are labeled for our company’s compliance policies. So for inspection, we’ll use the same DLP engine that Office 365 uses, the data classification service. And that’s going to look for sensitive content like Social Security numbers, for example. And we’ll create an alert for each policy match, and in governance actions we’ll apply a sensitivity label if the file matches our policy.
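As a rough illustration of the file policy just described (inspect content, alert on each match, apply a label as a governance action), here is a minimal Python sketch. It uses a naive regular expression for values formatted like U.S. Social Security numbers; the real data classification service is considerably more sophisticated, and this pattern will produce false positives. The function name, the `"Confidential"` label and the result shape are hypothetical.

```python
import re

# Naive pattern for values formatted like 123-45-6789. This is an
# illustrative stand-in, not the detection logic of any real DLP engine.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def apply_file_policy(files: dict, label: str = "Confidential") -> dict:
    """Return the governance outcome for each file that matches the policy.

    `files` maps a file name to its text content. Files without a match
    are left out of the result, meaning no action is taken on them.
    """
    outcomes = {}
    for name, text in files.items():
        matches = SSN_PATTERN.findall(text)
        if matches:
            outcomes[name] = {
                "label": label,          # governance action: apply a label
                "alert": True,           # one alert per matching file
                "match_count": len(matches),
            }
    return outcomes


sample = {
    "payroll.txt": "Employee SSN: 123-45-6789",
    "menu.txt": "Lunch is at noon.",
}
print(apply_file_policy(sample))
```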

-And finally, MCAS has built-in anomaly detection policies to monitor user interactions with individual applications regardless of how you’ve connected those apps to Cloud App Security. Now these detections range from suspicious admin activity to mass downloads. MCAS establishes baseline patterns for user behavior and can trigger alerts or actions once an anomaly is detected. Additionally, MCAS offers Cloud Security Posture Management, or CSPM, and here the security configuration screen helps you improve the security posture across clouds with recommendations.
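The idea of establishing a baseline and alerting on deviations can be sketched with a simple statistical check on a user's daily download counts. This is a toy stand-in for the concept only; it is not the detection model MCAS uses. The function name, the three-sigma threshold and the minimum spread are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def is_mass_download(history: list, today: int,
                     threshold: float = 3.0, min_spread: float = 1.0) -> bool:
    """Flag today's download count if it is far above the user's baseline.

    `history` holds the user's previous daily download counts. The count
    is flagged when it exceeds the baseline mean by more than `threshold`
    standard deviations. `min_spread` keeps a very steady history from
    making tiny changes look anomalous.
    """
    if len(history) < 2:
        return False  # not enough data to establish a baseline
    baseline = mean(history)
    spread = max(stdev(history), min_spread)
    return (today - baseline) / spread > threshold


usual_days = [4, 6, 5, 7, 5]
print(is_mass_download(usual_days, 7))    # within the normal range
print(is_mass_download(usual_days, 60))   # far above the baseline
```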

-So that was a tour of your endpoint and application management options and your considerations when moving to the Zero Trust security model. Up next, we’re going to explore your options for networks, infrastructure and data. And keep checking back to aka.ms/ZeroTrustMechanics for more on our series where I share tips and hands-on demonstrations of the tools for implementing the Zero Trust security model across all six layers of defense. And you can learn more at aka.ms/zerotrust. Thanks for watching.
