by Contributed | May 26, 2021 | Technology

This article is contributed. See the original author and article here.

Are you running multiple applications in your HCI cluster? Do you see users complaining about intermittent connectivity issues to certain apps? Do you observe a particular application taking up a lot of network bandwidth thus preventing access to other applications? If yes, please read on to understand how you can resolve such issues in your HCI clusters.

Problem

If the networks configured on your HCI cluster have plenty of bandwidth and no traffic bursts above what they can handle, you are in a good place and probably do not have a problem with packet loss, delay, or jitter. But generally, that is not the case: we usually work with a finite amount of network bandwidth.

Some applications running in your network can be sensitive to delay, and they may be hosted alongside other applications on the same HCI host machine. If a neighboring application consumes most of the host's bandwidth, your delay-sensitive applications are going to suffer.

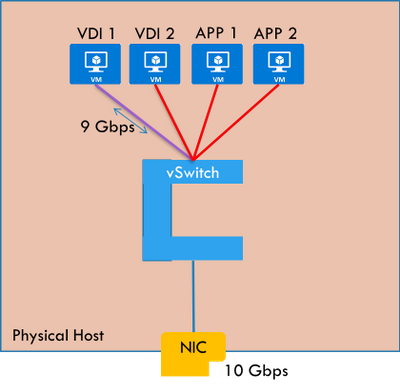

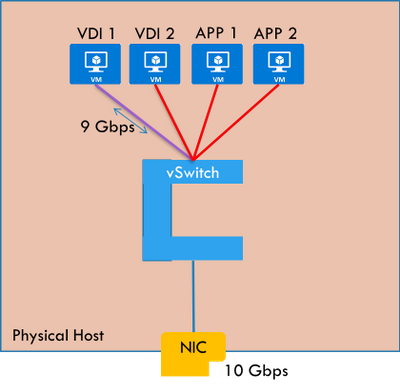

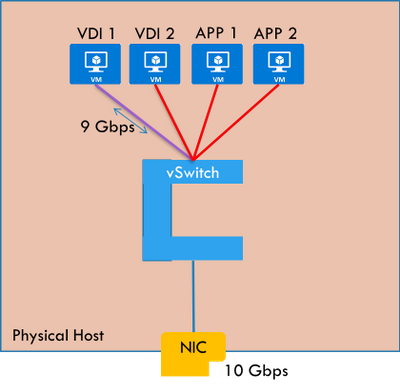

Figure: A VDI host pool may hog the network bandwidth of the host, starving other apps

Solution: Quality of Service (QoS) Policies

What if you could control the network bandwidth of application workloads? This would prevent certain applications from over-consuming bandwidth, even during traffic bursts.

Azure Stack HCI supports configuring the maximum permitted send-side or receive-side bandwidth for virtual machines. This is supported for virtual machines connected to a traditional VLAN network as well as virtual machines connected to a Software Defined Networking (SDN) virtual network. Once set, your virtual machine will not be able to send or receive traffic above the configured maximum limits. For a virtual machine, you can choose to configure a send-side limit, a receive-side limit, or both.

NOTE: There are other ways to give priority to specific network traffic, such as DSCP markings. While you can use them as your requirements dictate, the SDN QoS described above is a generic way to limit traffic to and from a virtual machine.

Configure and manage QoS policies in Azure Stack HCI

There are two high-level steps to configure QoS policies for HCI. First, you set up the Network Controller, and then you configure the QoS policies.

Set up Network Controller

Network Controller can be set up using the SDN Express PowerShell scripts, Windows Admin Center (WAC), or System Center Virtual Machine Manager (SCVMM). Please refer to my previous blog post on microsegmentation for details on how to set up Network Controller.

Configure Quality of Service Policies

Once Network Controller is set up, you can go ahead and deploy your QoS policies. Today, you can do this using the Network Controller PowerShell cmdlets.

NOTE: Configuration support through Windows Admin Center is coming soon.

Step 1: Configure global QoS settings.

You can perform the below steps on a Network Controller machine or a management client of Network Controller. This will enable the global setting to configure QoS policies through Network Controller.

$vswitchConfig=[Microsoft.Windows.NetworkController.VirtualSwitchManagerProperties]::new()

$qos=[Microsoft.Windows.NetworkController.VirtualSwitchQosSettings]::new()

$qos.EnableSoftwareReservations=$true

$vswitchConfig.QosSettings =$qos

Set-NetworkControllerVirtualSwitchConfiguration -ConnectionUri $uri -Properties $vswitchConfig

# ConnectionUri is the REST URI of the Network Controller, for example https://nc.contoso.com

Step 2: Configure QoS policies on a workload VM network interface.

First, you will need to identify the Network Interface where you want to apply the policy.

$NwInterface=Get-NetworkControllerNetworkInterface -ConnectionUri $uri -ResourceId Vnet-VM2_Net_Adapter_0

# ConnectionUri is the REST URI of the Network Controller, for example https://nc.contoso.com

Then, you can configure the inbound and/or outbound maximum throughput allowed on the network interface.

$NwInterface.Properties.PortSettings.QosSettings= [Microsoft.Windows.NetworkController.VirtualNetworkInterfaceQosSettings]::new()

$NwInterface.Properties.PortSettings.QosSettings.InboundMaximumMbps = 20

New-NetworkControllerNetworkInterface -ConnectionUri $uri -ResourceId $NwInterface.ResourceId -Properties $NwInterface.Properties
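To get a feel for what a limit such as the 20 Mbps inbound cap above means for a workload, the following minimal Python sketch estimates the best-case transfer time for a payload under a given cap. The function name and the 500 MB payload are illustrative assumptions, not part of the Network Controller API, and real transfers will be slower because of protocol overhead.

```python
def transfer_seconds(size_megabytes: float, limit_mbps: float) -> float:
    """Best-case time to move a payload through a bandwidth cap.

    size_megabytes: payload size in megabytes (hypothetical workload).
    limit_mbps: configured maximum throughput in megabits per second.
    """
    if limit_mbps <= 0:
        raise ValueError("limit_mbps must be positive")
    megabits = size_megabytes * 8  # 1 byte = 8 bits
    return megabits / limit_mbps


# A 500 MB download through a 20 Mbps inbound cap needs at least 200 seconds.
print(transfer_seconds(500, 20))
```

Running the same calculation with the uncapped link speed of your hosts gives a quick sense of how much headroom the policy leaves for other applications.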

As you can see, with SDN QoS policies you can prevent network-intensive applications from hogging the entire bandwidth of your HCI cluster hosts. Please try this out and give us feedback at sdn_feedback@microsoft.com. Feel free to reach out with any questions as well.

We’re glad to announce the release of Log Analytics Workspace Insights (preview) – a new experience providing comprehensive monitoring of your Log Analytics Workspace, through a central view of the workspace usage, performance, health, agents, run queries, and change log.

Accessing Log Analytics Workspace Insights

- Overview at scale – You can launch Log Analytics Workspace Insights either through Azure Monitor's list of insights or from a workspace itself. Opening it through Azure Monitor first shows an overview of all your workspaces across the globe:

Overview at scale

Select a workspace from the list to reach the more detailed workspace-specific view.

- Workspace-specific insights – open a Log Analytics Workspace and select Insights from its menu. This opens a multi-tabbed view, where you can deep dive into different aspects of your workspace. Below, we review in detail what insights this view provides.

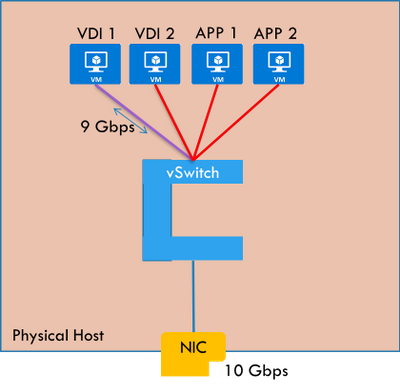

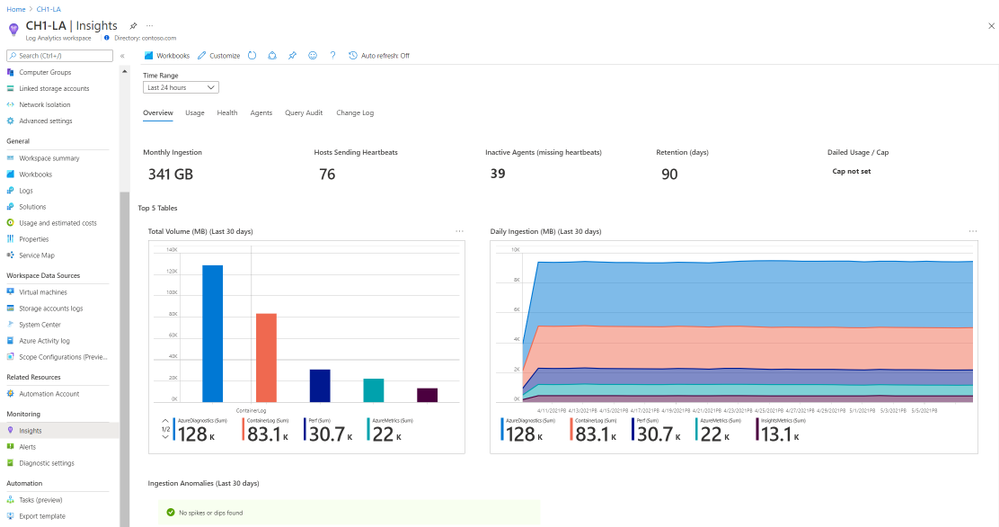

Workspace Overview

The Overview section surfaces the main workspace settings and statistics, such as the total monthly ingestion volume, the data retention period, and the daily cap (if set) along with how much of it has already been used.

It also shows the top 5 most used tables and information on each: how much data was ingested, the daily pattern, and anomalies, if any were found.

Workspace overview

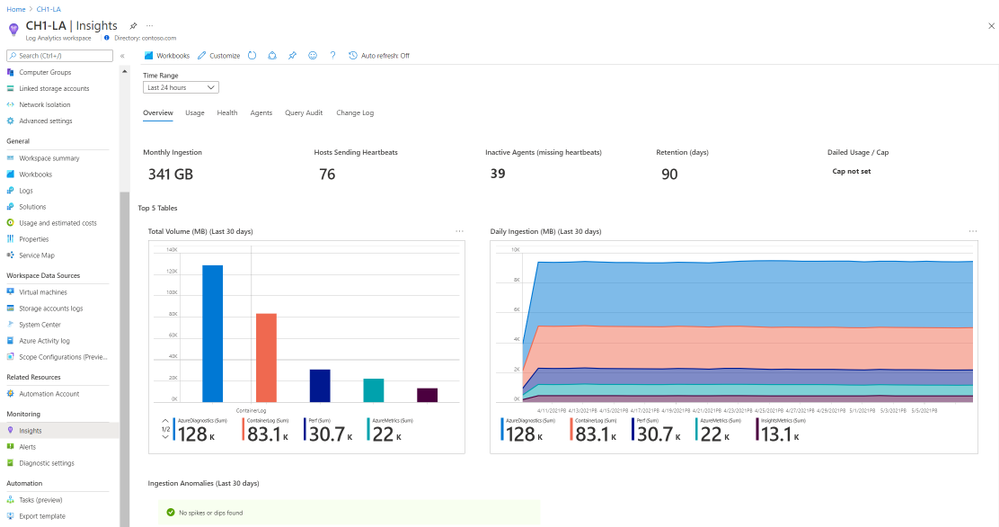

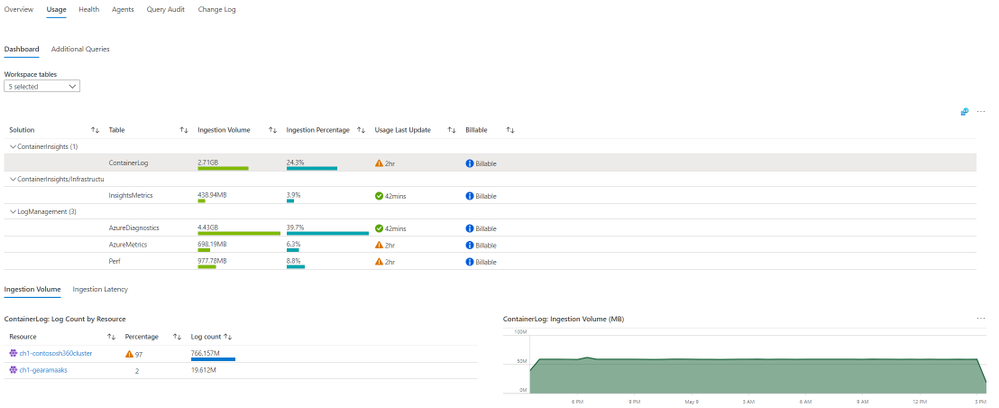

Workspace Usage

Here you can explore in detail the usage of each table in the workspace. Click a row in the top grid to see table-specific information: how much data was ingested into the table, its percentage of the total workspace volume, which resources sent the most data, and latency charted over time and split into agent and pipeline latency.

Additionally, you can switch from the Dashboard to the Additional Queries tab to run queries and learn which resources, subscriptions, and resource groups ingested the most data across the workspace. That information could help you identify “spamming” resources and save costs.

Workspace Usage

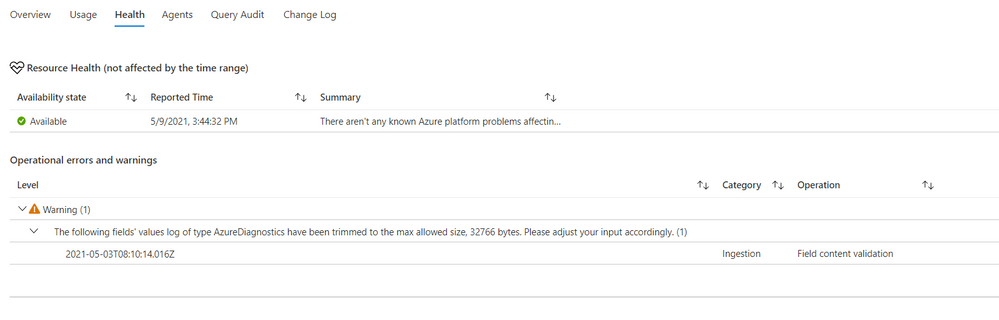

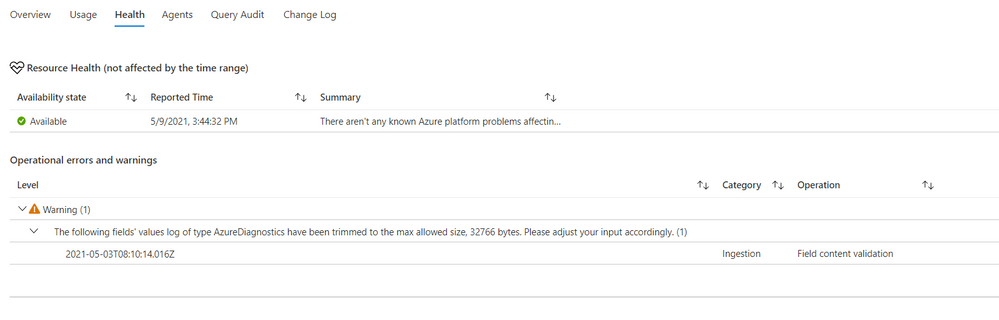

Workspace Health

The Health section shows the workspace health state, and known operational errors and warnings you should take note of. The table of operational events is based on the _LogOperation table.

Workspace health

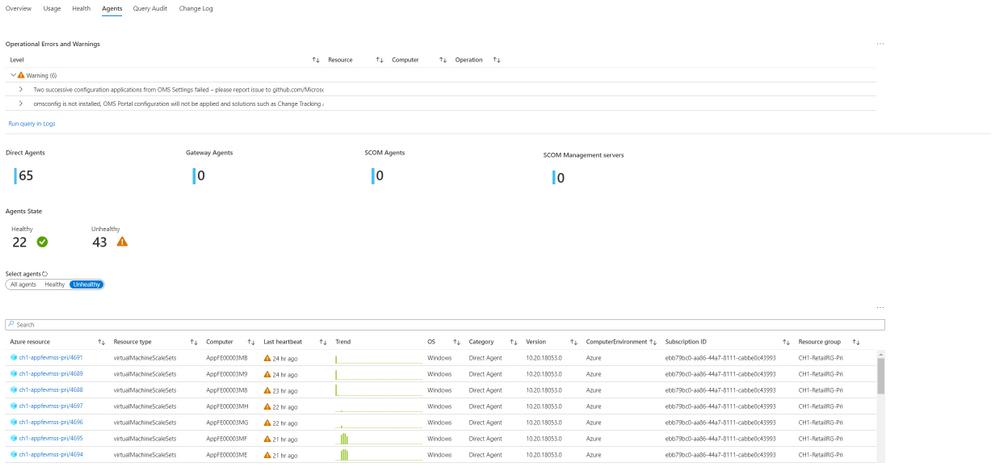

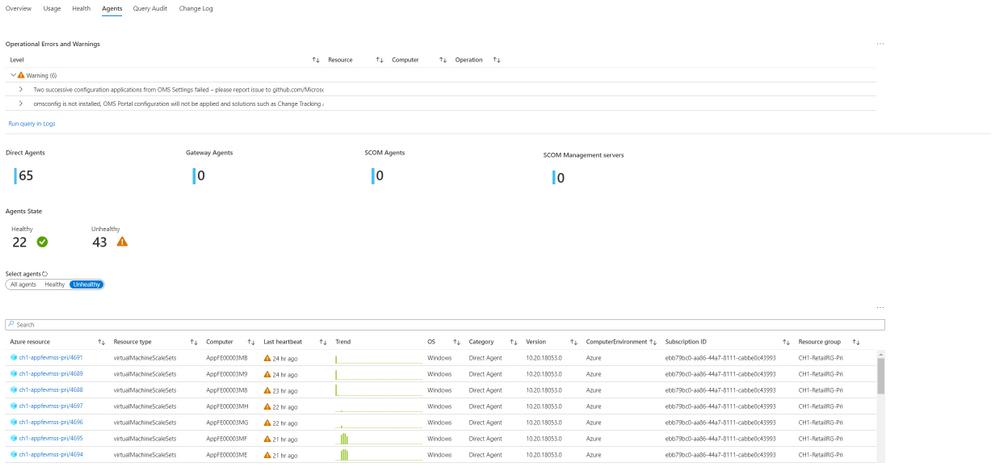

Workspace Agents

The top area of this page shows operational errors and warnings related to your agents. The events are grouped by their description, but you can expand each type of event to see which resources were affected, and at which times.

Below it, you can review your agents in more detail – agent types, count, health and connectivity to the workspace over time.

Workspace agents

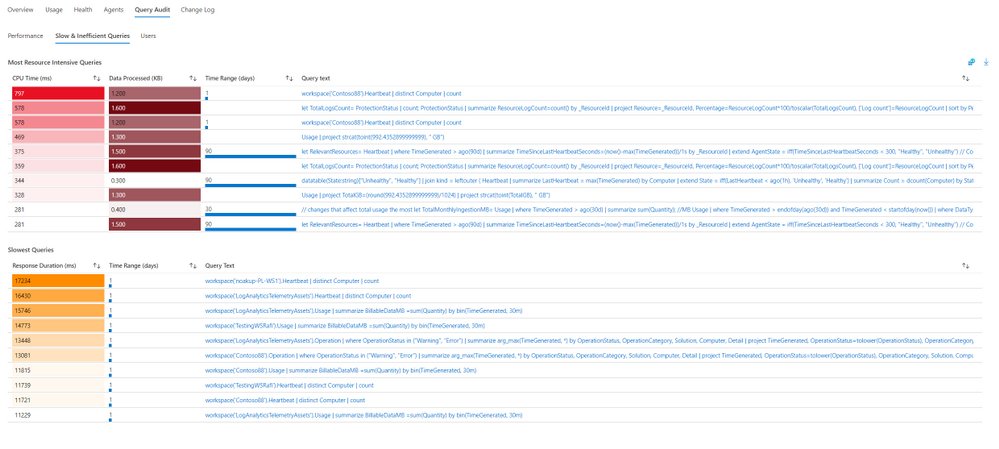

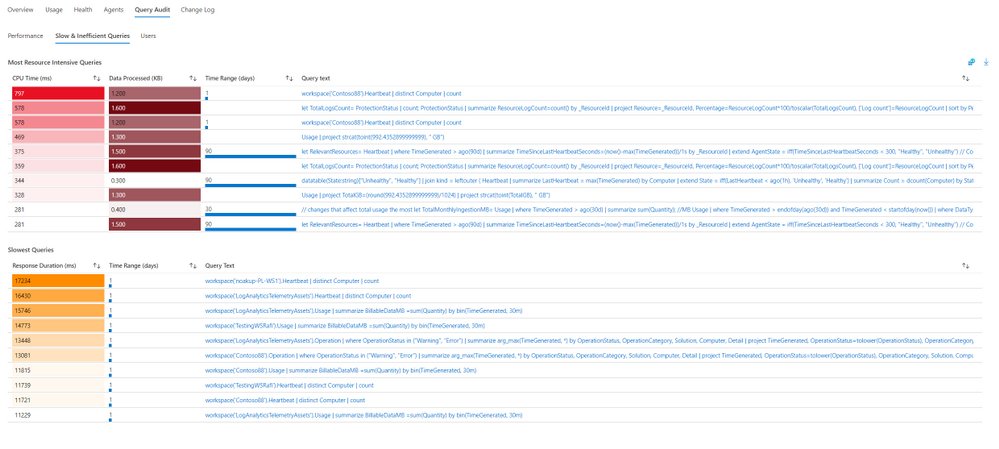

Workspace Query Audit

The insights regarding workspace queries rely on query auditing logs. If query auditing is enabled on your workspace, this data can help you understand and improve query performance and load, identify the most inefficient queries, and see which users query the most or experience query throttling. To enable query auditing on your workspace or learn more about it, see Audit queries in Azure Monitor Logs.

Workspace query audit

Workspace Change Log

This tab shows configuration changes made on the workspace during the last 90 days (regardless of the time range selected), and who performed them, to help you monitor who changes important workspace settings, such as data capping or workspace license.

Feedback

We appreciate your feedback! Comment on this blog post and let us know what you think of this feature.

In this month’s edition of the What’s New blog, we’re excited to share news regarding the availability of Microsoft Office Long Term Servicing Channel (LTSC), OneDrive Sync Admin Reports, and updated Configuration Manager Automatic Deployment Rules (ADRs). We also point you to the latest admin-focused Microsoft Docs articles for Microsoft 365, as well as Amesh Mansukhani’s appearance in two new videos on the Office Deployment Insiders YouTube channel and an interview on the Practical 365 Podcast!

Faster at-a-glance views with OneDrive Sync Admin Reports

In April, we announced the public preview for OneDrive Sync Admin Reports. Available in the Microsoft 365 Apps admin center, these reports give you an at-a-glance view of everything happening with OneDrive Sync across the organization, including visibility into who is running the OneDrive Sync client and any errors they might be experiencing. Insights like these can help you proactively reach out to educate people and resolve common issues quickly to improve user experience and increase OneDrive adoption.

Easier targeting for Microsoft 365 Apps updates with updated Configuration Manager ADRs

Coming in June, we’re releasing an update to Automatic Deployment Rules (ADR) for Microsoft 365 Apps in Microsoft Endpoint Configuration Manager that adds release type to the Title property in the update catalog. You will be able to use the Title property within the search criteria of your ADR definition to easily target the necessary updates for your environment. In addition, you’ll no longer need to continually update your search criteria with each new release. The version number and architecture values will also trade places.

Amesh Mansukhani talks about the Microsoft 365 Apps Admin Center on Practical 365 Podcast

At the end of April, Amesh Mansukhani, Office Deployment Insiders lead at Microsoft, joined the Practical 365 Podcast to talk about the Microsoft 365 Apps Admin Center. Listen to hear Amesh talk about the importance of keeping your Microsoft 365 Apps up to date and how the Admin Center can help ensure your users and devices get the latest updates while giving you better visibility and control over application health. You can watch the video podcast on the Practical 365 YouTube channel or listen to the audio-only version to learn more.

We’re excited to bring you two brand new videos on the Office Deployment Insiders YouTube Channel!

Get the most out of Microsoft OneDrive with brand new insight capabilities into your overall OneDrive deployment. Explore these new features with Amesh as he dives into fresh ways to analyze OneDrive client reports, sync issues, Known Folder Move (KFM) for known folders, and much more.

Introducing Microsoft 365 Apps Inventory, the Apps Inventory service recently added to the Microsoft 365 Apps Admin Center. Join Amesh as he shows how Inventory can help you gain deep insights and a real-time view of Office Apps in your organization.

Commercial Preview of Microsoft Office LTSC

Recently, we announced the Commercial Preview of Microsoft Office LTSC, which is built specifically for organizations running regulated devices that cannot accept feature updates for long periods, devices that are not connected to the internet, and specialty systems that must stay locked in time and require a long-term servicing channel.

Catching up: New Microsoft Docs articles for April

You can catch up on some of the latest Microsoft 365 Apps best practices from the field in these articles:

Network guidance for deploying and servicing Microsoft 365 Apps – This article covers topics such as available options for managing Microsoft 365 apps for remote workers or employees in the office, split tunneling for workforces that frequently connect using VPN, deploying Microsoft 365 Apps using Intune, using Servicing Profiles to manage monthly app updates, and optimizing your network via Configuration Manager. You can also read further guidance on deploying Microsoft 365 Apps.

Build dynamic collections for Microsoft 365 Apps with Configuration Manager – This article shares best practices for using Microsoft Endpoint Configuration Manager’s dynamic collections to simplify management. This month we added a new best practice for setting up a collection that captures all devices running outdated builds, so you can quickly identify devices that lack updates or must be updated to a certain minimum build.

Continue the conversation by joining us in the Microsoft 365 Tech Community! Whether you have product questions or just want to stay informed with the latest updates on new releases, tools, and blogs, Microsoft 365 Tech Community is your go-to resource to stay connected!

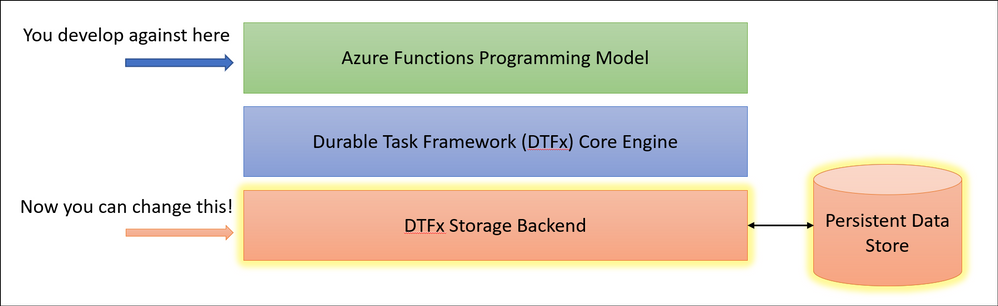

This week at Microsoft’s annual Build conference we made two announcements related to Azure Durable Functions: two new backend storage providers, and the GA of Durable Functions for PowerShell. In this post, we’ll go into more detail about the new storage providers and what they mean for Durable Functions developers.

New Storage Providers

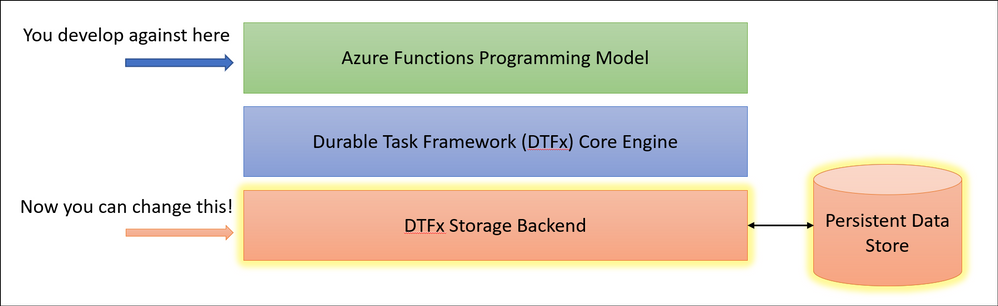

Azure Durable Functions now supports two new backend storage providers for storing durable runtime state, “Netherite” and Microsoft SQL Server (including full support for Azure SQL Database). These new storage options allow you to run at higher scale, with greater price-performance efficiency, and more portability compared to the default Azure Storage configuration. Any of these three storage providers can now be configured without making any code changes to your existing apps.

To learn more, read the Durable Functions storage providers documentation, which also contains a side-by-side comparison of all three supported storage providers.

Durable Functions enables you to write long-running, reliable, event-driven, and stateful logic on the serverless Azure Functions platform using everyday imperative code. Since its GA release in 2018, the Durable Functions extension has transparently saved execution state into an Azure Storage account, ensuring that functions can recover automatically from any infrastructure failure. The convenience and ubiquity of Azure Storage accounts made it easy to get up and running in production with Durable Functions apps in a matter of minutes.

Limitations of the Azure Storage provider

Azure Storage is and will continue to be the default storage provider for Durable Functions. It uses queues, tables, and blobs to persist orchestration and entity state. It also uses blobs and blob leases to manage partitions across a distributed set of nodes. While the Azure Storage provider is the most convenient and lowest-cost option for persisting runtime state, it also has some notable limitations that may prevent it from being usable in certain scenarios.

- Azure Storage has limits on the number of transactions per second for a storage account, limiting the maximum scalability of a Durable Functions app.

- Azure Storage has strict data size limits for queue messages and Azure Table entities, requiring slow and expensive workarounds when handling large payloads.

- Azure Storage costs can be hard to predict since they are per-transaction and have very limited support for batching.

- Azure Storage can’t easily support certain enterprise business continuity requirements, such as backup/restore and disaster recovery without data loss.

- Azure Storage can’t be used outside of the Azure cloud.

After speaking with customers who were impacted by some of these limitations, it became clear to us that we needed to invest in alternative storage providers to ensure the needs of all Durable Functions customers could be met.

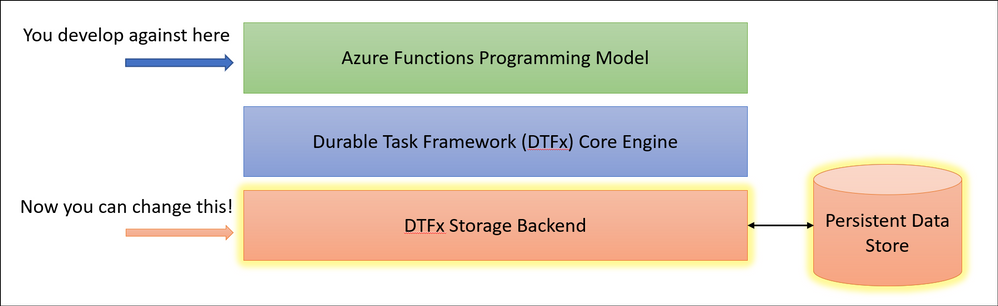

Fortunately, the architecture of Durable Functions and the underlying Durable Task Framework made it simple for us to enable swapping out backend storage providers without requiring customers to make any code changes. Starting in Durable Functions v2.4.3, we allow you to swap providers by adding a new extension and making a simple configuration change in your host.json file.
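As a rough illustration of the configuration change described above, the Python sketch below builds a host.json-shaped document that selects a storage provider. The `storageProvider` key names and the app setting names (`SQLDB_Connection`, `AzureWebJobsStorage`, `EventHubsConnection`) are assumptions based on the providers' public documentation at the time and should be checked against the current docs. The helper `build_host_json` is hypothetical, and each provider's extension package still has to be added to the app separately.

```python
import json


def build_host_json(provider_type: str, **provider_settings: str) -> dict:
    """Return a host.json-shaped dict selecting a Durable Functions storage provider."""
    storage_provider = {"type": provider_type}
    storage_provider.update(provider_settings)
    return {
        "version": "2.0",
        "extensions": {"durableTask": {"storageProvider": storage_provider}},
    }


# Assumed setting names: each value names an app setting holding a connection string.
mssql = build_host_json("mssql", connectionStringName="SQLDB_Connection")
netherite = build_host_json(
    "Netherite",
    StorageConnectionName="AzureWebJobsStorage",
    EventHubsConnectionName="EventHubsConnection",
)

print(json.dumps(mssql, indent=2))
print(json.dumps(netherite, indent=2))
```

The point of the sketch is that only the `storageProvider` section differs between providers; the orchestrator and activity code stays untouched.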

Introducing “Netherite” for maximum orchestration throughput

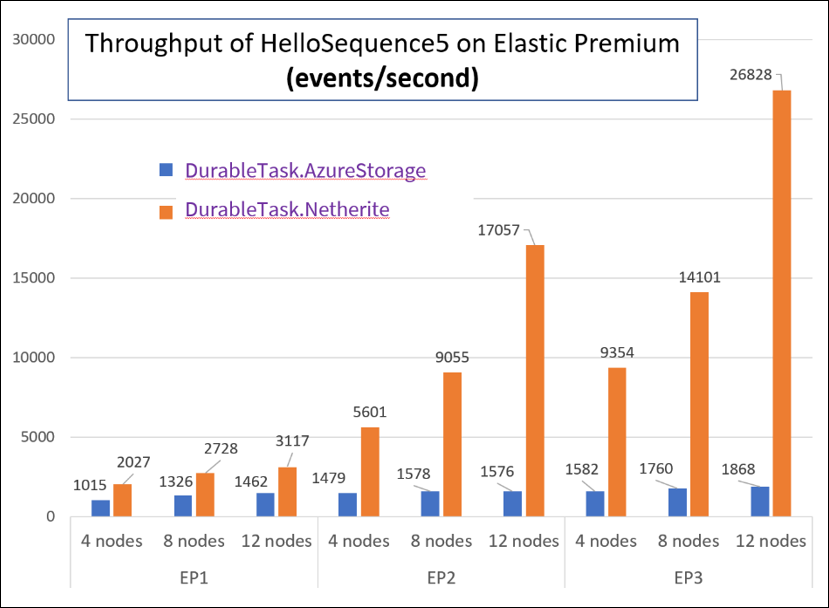

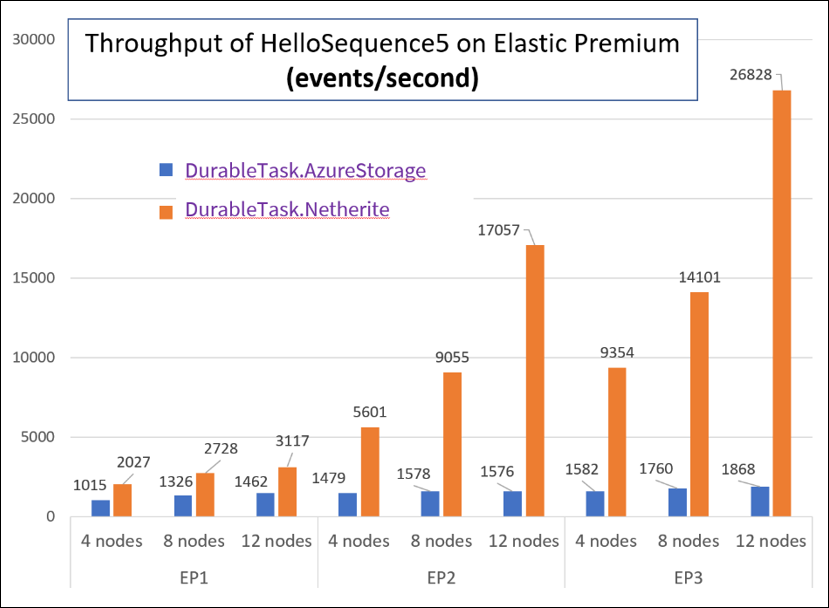

If you’re a fan of Minecraft, you’ll recognize that “Netherite” is the name of a rare material that is more durable than diamond, can float in lava, and cannot burn. The Netherite storage provider aspires to have similar qualities, but in the context of Durable Functions. It was designed and developed in collaboration with Microsoft Research. It combines the high-throughput messaging capabilities of Azure Event Hubs with the FASTER database technology on top of Azure Page Blobs. The design of Netherite enables significantly higher-throughput processing of orchestrations and entities compared to other Durable storage providers. In some benchmark scenarios, throughput was shown to increase by more than an order of magnitude when compared to the default Azure Storage provider!

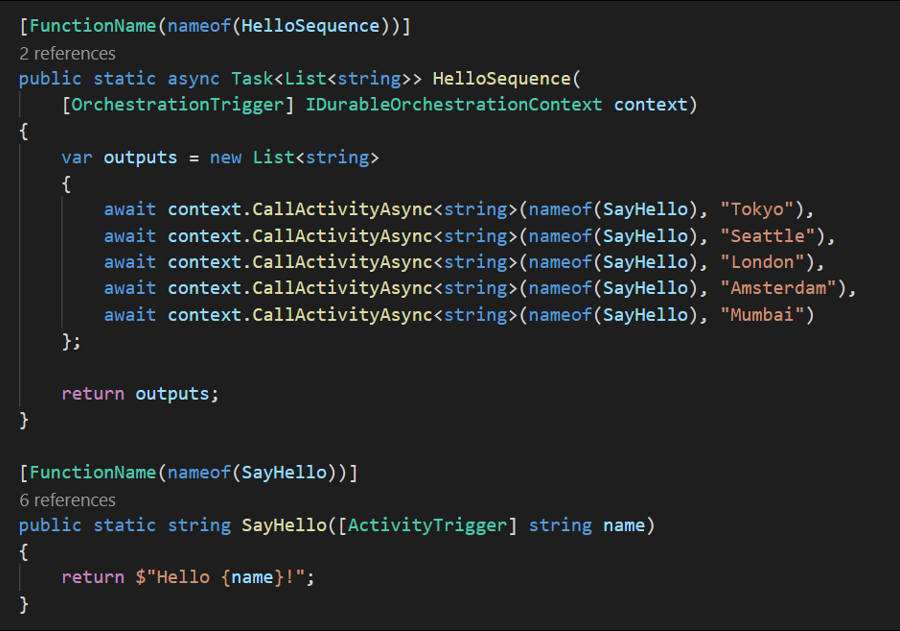

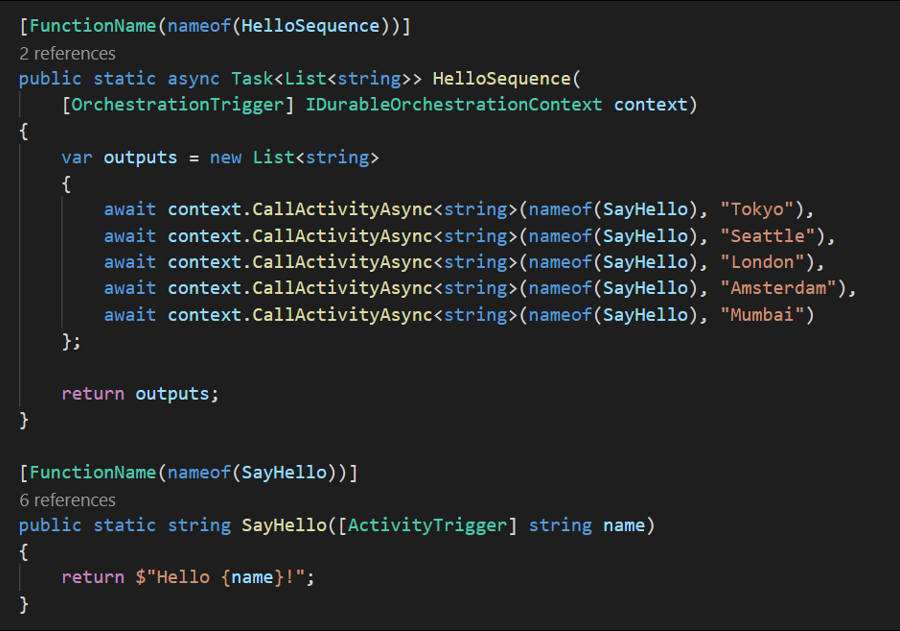

The orchestrator used in the above test is a simple function-chaining sample with 5 activity calls, running on the Azure Functions Elastic Premium plan.
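The benchmark code itself is not reproduced here. To show what "function chaining with 5 activity calls" means, here is a small, self-contained Python simulation of the pattern. It deliberately does not use the Durable Functions SDK: `FakeContext`, `run_orchestration`, and the `AddOne` activity are hypothetical stand-ins that only mimic how an orchestrator yields one activity call at a time and resumes with its result.

```python
from typing import Any, Callable, Dict, Generator, Tuple


class FakeContext:
    """Hypothetical stand-in for an orchestration context."""

    def call_activity(self, name: str, payload: Any) -> Tuple[str, Any]:
        # Describe the requested activity; the driver below executes it.
        return (name, payload)


def chaining_orchestrator(context: FakeContext) -> Generator[Tuple[str, Any], Any, Any]:
    """Call one activity five times in sequence, feeding each result into the next call."""
    value = 0
    for _ in range(5):
        value = yield context.call_activity("AddOne", value)
    return value


def run_orchestration(orchestrator: Callable, activities: Dict[str, Callable[[Any], Any]]) -> Any:
    """Drive the generator: run each requested activity and send its result back in."""
    generator = orchestrator(FakeContext())
    try:
        request = next(generator)
        while True:
            name, payload = request
            request = generator.send(activities[name](payload))
    except StopIteration as finished:
        return finished.value


print(run_orchestration(chaining_orchestrator, {"AddOne": lambda x: x + 1}))
```

In a real Durable Functions app, the runtime plays the role of `run_orchestration` and persists state between the yields, which is where the choice of storage provider affects throughput.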

The significant increase in throughput shown in the above chart can be achieved using a single Azure Event Hubs throughput unit (1 TU), costing approximately $22/month USD (~€18) on the Standard plan (at the time of writing). Much of this performance gain can be attributed to advanced techniques, such as asynchronous snapshotting and speculative communication, as described in the Serverless Workflows with Durable Functions and Netherite research paper.

For more information and getting-started instructions for the Netherite provider, see the Netherite documentation.

Microsoft SQL for maximum control and portability

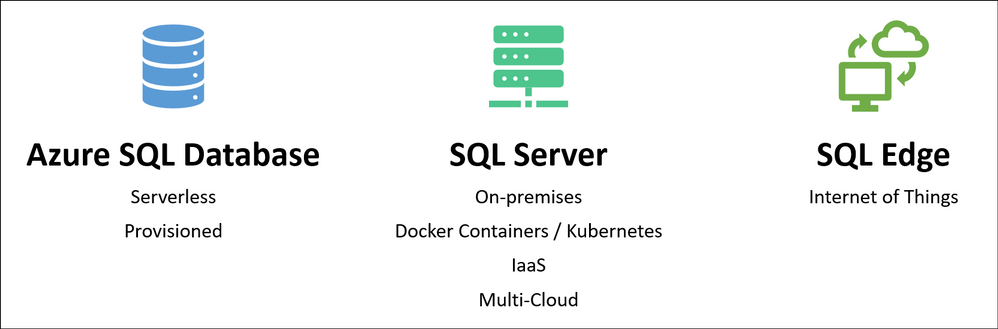

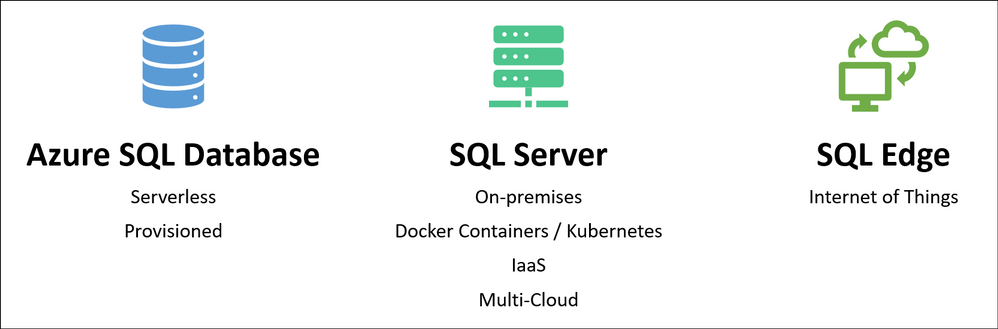

While the Netherite provider was designed for maximum throughput, the Microsoft SQL (MSSQL) provider for Durable Functions was designed for the needs of the enterprise, including the ability to decouple from the Azure cloud.

Microsoft SQL can run anywhere, including on-premises servers, Edge devices, Linux Docker containers, on the Azure SQL Database serverless tier, and even on competitor cloud providers like AWS and GCP. This means you can run Durable Functions anywhere that Azure Functions can run, including your own Azure Arc-enabled Kubernetes clusters. In fact, the Azure Functions Core Tools and the Azure Arc App Service extension have been updated to support automatically configuring Durable Functions apps on a Kubernetes cluster with the MSSQL KEDA scaler for elastic scale-out.

In addition to portability, you also get many other benefits of using Azure SQL database or Microsoft SQL for storing runtime state, including its long-established support for backup/restore, business continuity (high availability and disaster recovery), and data encryption.

The design of the Microsoft SQL storage provider for Durable Functions also makes it easy to integrate with existing SQL-based applications. When your function app starts up, it automatically provisions a set of tables, SQL functions, and stored procedures in the target database within a “dt” schema (“dt” stands for Durable Tasks). You can easily monitor your orchestrations and entities by running SELECT queries against these tables. You can also start new orchestrations from T-SQL by invoking the dt.CreateInstance stored procedure. This is especially useful if you want to extend an existing line-of-business application that already uses SQL Server or Azure SQL Database by incorporating database triggers.

For more information and getting-started instructions for the Microsoft SQL provider, see the Durable Task SQL Provider documentation.

Concluding thoughts

We’re really excited about the new possibilities for customers building solutions using Durable Functions. With the availability of the two new storage backends, we hope to see new types of serverless apps get built which may not have been possible before. To be clear, the default Azure Storage provider option isn’t going anywhere, and we’ll continue to promote it as the easiest and lowest cost option for Durable Functions. Customers simply have new options which weren’t previously available.

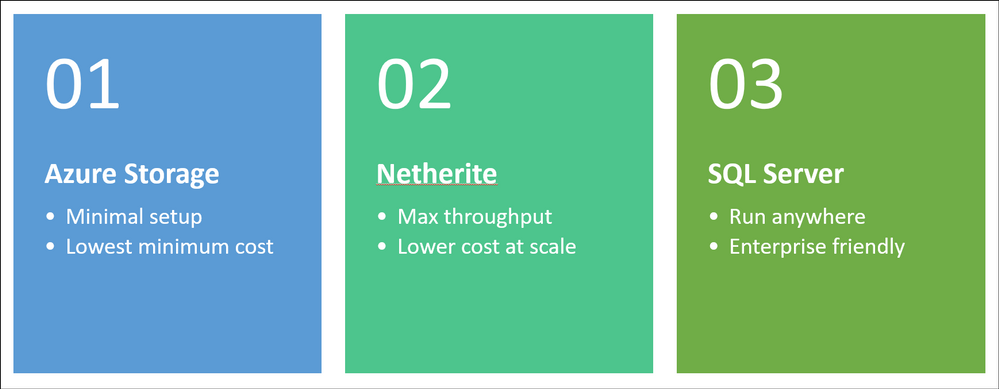

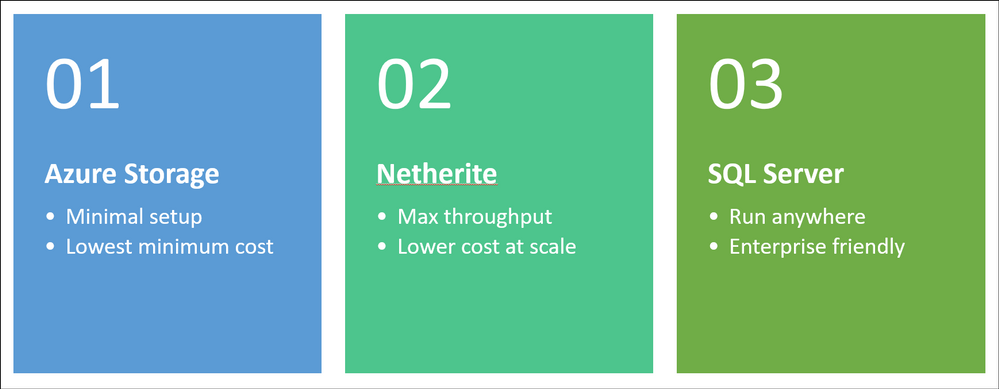

So which one should you choose? I made a simple graphic to help you decide.

You can find a more comprehensive comparison of the three storage providers here.

As always, the development for Durable Functions happens in the open on GitHub and the new backends are no exception. You can find the Netherite provider at microsoft/durabletask-netherite and the Microsoft SQL provider at microsoft/durabletask-mssql. We encourage you to open issues in these repos and contribute PRs if you have ideas for how we can make them better (we’ve already accepted a few external contributions). Also, don’t forget to give us a star and subscribe for notifications of new releases using the “Watch” button.

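-- Generate statements that take each tablespace offline.
select 'ALTER TABLESPACE '||tablespace_name||' OFFLINE NORMAL;' from DBA_TABLESPACES;

-- Generate rename statements for each datafile. The target is emitted as the same path, so edit it to the new location before running the output.
select 'ALTER DATABASE RENAME FILE '''||NAME||''' TO '''||NAME||''';' from V$DATAFILE;

-- Generate statements that bring each tablespace back online.
select 'ALTER TABLESPACE '||tablespace_name||' ONLINE;' from DBA_TABLESPACES;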