by Contributed | Feb 17, 2021 | Technology

This article is contributed. See the original author and article here.

First published on February 12, 2018

Howdy folks,

I hope you’ll find today’s post as interesting as I do. It’s a bit of brain candy and outlines an exciting vision for the future of digital identities.

Over the last 12 months we’ve invested in incubating a set of ideas for using Blockchain (and other distributed ledger technologies) to create new types of digital identities, identities designed from the ground up to enhance personal privacy, security and control. We’re pretty excited by what we’ve learned and by the new partnerships we’ve formed in the process. Today we’re taking the opportunity to share our thinking and direction with you. This blog is part of a series and follows on Peggy Johnson’s blog post announcing that Microsoft has joined the ID2020 initiative. If you haven’t already read Peggy’s post, I would recommend reading it first.

I’ve asked Ankur Patel, the PM on my team leading these incubations, to kick off our discussion on Decentralized Digital Identities. His post focuses on sharing some of the core things we’ve learned and some of the resulting principles we’re using to drive our investments in this area going forward.

And as always, we’d love to hear your thoughts and feedback.

Best Regards,

Alex Simons (Twitter: @Alex_A_Simons)

Director of Program Management

Microsoft Identity Division

———————————————–

Greetings everyone, I’m Ankur Patel from Microsoft’s Identity Division. It is an awesome privilege to have this opportunity to share some of our learnings and future directions based on our efforts to incubate Blockchain/distributed ledger based Decentralized Identities.

What we see

As many of you experience every day, the world is undergoing a global digital transformation where digital and physical reality are blurring into a single integrated modern way of living. This new world needs a new model for digital identity, one that enhances individual privacy and security across the physical and digital world.

Microsoft’s cloud identity systems already empower thousands of developers, organizations and billions of people to work, play, and achieve more. And yet there is so much more we can do to empower everyone. We aspire to a world where the billions of people living today with no reliable ID can finally realize the dreams we all share like educating our children, improving our quality of life, or starting a business.

To achieve this vision, we believe it is essential for individuals to own and control all elements of their digital identity. Rather than grant broad consent to countless apps and services, and have their identity data spread across numerous providers, individuals need a secure encrypted digital hub where they can store their identity data and easily control access to it.

Each of us needs a digital identity we own, one which securely and privately stores all elements of our digital identity. This self-owned identity must be easy to use and give us complete control over how our identity data is accessed and used.

We know that enabling this kind of self-sovereign digital identity is bigger than any one company or organization. We’re committed to working closely with our customers, partners and the community to unlock the next generation of digital identity-based experiences and we’re excited to partner with so many people in the industry who are making incredible contributions to this space.

What we’ve learned

To that end today we are sharing our best thinking based on what we’ve learned from our decentralized identity incubation, an effort which is aimed at enabling richer experiences, enhancing trust, and reducing friction, while empowering every person to own and control their Digital Identity.

- Own and control your Identity. Today, users grant broad consent to countless apps and services for collection, use and retention beyond their control. With data breaches and identity theft becoming more sophisticated and frequent, users need a way to take ownership of their identity. After examining decentralized storage systems, consensus protocols, blockchains, and a variety of emerging standards we believe blockchain technology and protocols are well suited for enabling Decentralized IDs (DID).

- Privacy by design, built in from the ground up.

Today, apps, services, and organizations deliver convenient, predictable, tailored experiences that depend on control of identity-bound data. We need a secure encrypted digital hub (ID Hubs) that can interact with user’s data while honoring user privacy and control.

- Trust is earned by individuals, built by the community.

Traditional identity systems are mostly geared toward authentication and access management. A self-owned identity system adds a focus on authenticity and how community can establish trust. In a decentralized system trust is based on attestations: claims that other entities endorse – which helps prove facets of one’s identity.

- Apps and services built with the user at the center.

Some of the most engaging apps and services today are ones that offer experiences personalized for their users by gaining access to their user’s Personally Identifiable Information (PII). DIDs and ID Hubs can enable developers to gain access to a more precise set of attestations while reducing legal and compliance risks by processing such information, instead of controlling it on behalf of the user.

- Open, interoperable foundation.

To create a robust decentralized identity ecosystem that is accessible to all, it must be built on standard, open source technologies, protocols, and reference implementations. For the past year we have been participating in the Decentralized Identity Foundation (DIF) with individuals and organizations who are similarly motivated to take on this challenge. We are collaboratively developing the following key components:

- Decentralized Identifiers (DIDs) – a W3C spec that defines a common document format for describing the state of a Decentralized Identifier

- Identity Hubs – an encrypted identity datastore that features message/intent relay, attestation handling, and identity-specific compute endpoints.

- Universal DID Resolver – a server that resolves DIDs across blockchains

- Verifiable Credentials – a W3C spec that defines a document format for encoding DID-based attestations.
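To make the identifier and document format from the list above a little more concrete, here is a minimal Python sketch. It assumes the general `did:<method>:<method-specific-id>` shape and the `@context` value from the current W3C DID Core specification, which may differ from the draft available when this post was written. The identifier `did:example:123abc` and the helper names are hypothetical and are not part of any Microsoft or DIF implementation.

```python
import json
import re

# General shape of a DID: did:<method>:<method-specific-id>
DID_PATTERN = re.compile(r"^did:([a-z0-9]+):(.+)$")


def parse_did(did: str) -> dict:
    """Split a DID string into its method and method-specific identifier."""
    match = DID_PATTERN.match(did)
    if match is None:
        raise ValueError(f"not a DID: {did!r}")
    return {"method": match.group(1), "id": match.group(2)}


def minimal_did_document(did: str) -> dict:
    """Build the smallest document that describes a DID (illustrative only)."""
    parse_did(did)  # raises ValueError if the identifier is malformed
    return {"@context": "https://www.w3.org/ns/did/v1", "id": did}


if __name__ == "__main__":
    print(json.dumps(minimal_did_document("did:example:123abc"), indent=2))
```

A real DID document would also carry public keys and service endpoints; those are left out here because their exact layout depends on the DID method.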

- Ready for world scale:

To support a vast world of users, organizations, and devices, the underlying technology must be capable of scale and performance on par with traditional systems. Some public blockchains (Bitcoin [BTC], Ethereum, Litecoin, to name a select few) provide a solid foundation for rooting DIDs, recording DPKI operations, and anchoring attestations. While some blockchain communities have increased on-chain transaction capacity (e.g. blocksize increases), this approach generally degrades the decentralized state of the network and cannot reach the millions of transactions per second the system would generate at world-scale. To overcome these technical barriers, we are collaborating on decentralized Layer 2 protocols that run atop these public blockchains to achieve global scale, while preserving the attributes of a world class DID system.

- Accessible to everyone:

The blockchain ecosystem today is still mostly early adopters who are willing to spend time, effort, and energy managing keys and securing devices. This is not something we can expect mainstream people to deal with. We need to make key management challenges, such as recovery, rotation, and secure access, intuitive and fool-proof.

Our next steps

New systems and big ideas often make sense on a whiteboard. All the lines connect, and assumptions seem solid. However, product and engineering teams learn the most by shipping.

Today, the Microsoft Authenticator app is already used by millions of people to prove their identity every day. As a next step we will experiment with Decentralized Identities by adding support for them to Microsoft Authenticator. With consent, Microsoft Authenticator will be able to act as your User Agent to manage identity data and cryptographic keys. In this design, only the ID is rooted on chain. Identity data is stored in an off-chain ID Hub (that Microsoft can’t see) encrypted using these cryptographic keys.

Once we have added this capability, apps and services will be able to interact with user’s data using a common messaging conduit by requesting granular consent. Initially we will support a select group of DID implementations across blockchains and we will likely add more in the future.

Looking ahead

We are humbled and excited to take on such a massive challenge, but also know it can’t be accomplished alone. We are counting on the support and input of our alliance partners, members of the Decentralized Identity Foundation, and the diverse Microsoft ecosystem of designers, policy makers, business partners, hardware and software builders. Most importantly we will need you, our customers, to provide feedback as we start testing this first set of scenarios.

This is our first post about our work on Decentralized Identity. In upcoming posts we will share information about our proofs of concept as well as technical details for key areas outlined above.

We look forward to you joining us on this venture!


Regards,

Ankur Patel (@_AnkurPatel)

Principal Program Manager

Microsoft Identity Division

![[Guest Blog] Living a (Mixed Reality) Life Beyond Imagination](https://www.drware.com/wp-content/uploads/2021/02/medium-266)


This article was written by Collaboration Application Analyst and Business Applications MVP Anj Cerbolles as part of our Humans of Mixed Reality Guest Blogger Series. Anj, who is based in Singapore, shares about her path to Mixed Reality in the Business Applications space.

Me :)


In a room with a full audience and an atmosphere full of excitement and admiration, a man drinking coffee on stage is wearing a device on his head. He kept walking around “his house” and watching a movie while a screen keeps on tagging along with him. He uses “gaze and air tap” gestures to resize the screen. After watching, he checks his calendar to see if he has a scheduled meeting for today and pins his contacts on the wall. When he demonstrates what he could do with the device on his head, a woman wearing a similar device on her head enters the stage.

She displays a corpse on the scene using the same gestures the man did earlier. By interacting with the human anatomy and studying what could be the problem in the body, she screens it out. A hologram of a snapped femur bone suddenly displays, concluding that it could help her with the body’s diagnosis. After interacting with the human body anatomy using the device, her time is up. Another presenter comes up on stage, and this time, a woman enters the scene with a robot. The robot is named B15. It says “Hello” to the audience when the woman, wearing the same head-mounted device as the previous presenters, instructs it and shows the pin location. This guides B15 to move accordingly. While B15 moves to the next pin, the presenter obstructs the path where B15 will move next, but that doesn’t stop B15 from moving. It finds a new route to get around the obstruction, carrying on with its tasks successfully. When B15 says “Goodbye” to the audience, everyone claps with great excitement, and I, too, kept clapping while watching that magic show live at home.

Oh wait, it’s not a magic show!

It was the Build 2015 Keynote with Alex Kipman. As he introduced a truly magical device called “Microsoft HoloLens,” I was in the audience at home, clapping excitedly and amazed at what I had just witnessed. A human interacting with the digital world in the real world by simply wearing the HoloLens, isn’t it impressive? By wearing the device and moving your fingertips, you can easily visualize 3D objects, interact with maps and structures, and experience virtual items being overlaid onto your real world.

When I was in college, I used to interact with 3D objects by hand using the “Right-Hand Rule” Method and draw them using the drawing tools. When using the “Right-Hand Rule,” you rotate your right hand in the direction x, y, z, or merely horizontal and vertical to visualize better the objects (even your head is turning to imagine the 3D object). Now imagine making a building structure in 3D, and you simply have to use your fingers to seamlessly flip or twist objects, or rotate your head to visualize what it would look like in the real world. Imagine having to call your team member in another part of the country or even across the world, asking for help and advice to fix/inspect a machine in your factory. It will be time-consuming since you have to wait for them to book a flight and get to your location, battle jet lag and even after they’ve arrived, you can’t easily visualize the complex piece of machinery.

However, with Dynamics 365 Remote Assist on HoloLens 2, a new world has opened and the possibilities are endless. Gone is the need to book flights and spend an exorbitant amount of money to have overseas experts come to your site. Now, you can call them directly via the HoloLens, and they can see exactly what you’re seeing, in REAL-TIME and even draw mixed reality annotations right into your physical environment. It’s hard to simply live in a 2D, paper-based environment once you’ve experienced the magic of mixed reality.

At the last “Future Now Conference 2018” #MicrosoftAI event I attended in Singapore, I got a chance to experience the Microsoft HoloLens 2. That experience was life-changing, since I don’t usually buy such devices for development and tinkering, and they are not in my direct line of work. However, I was deeply curious and wanted to explore this fascinating piece of technology for my own learning. I knew there was so much we could do with this technology, and that we’re only at the tip of the iceberg of what’s possible!

When I tried on the HoloLens, a thought came to my mind. I love the HoloLens, but what if you wanted to experience virtual objects in the real world but had no access to an AR wearable device? Could we make mixed reality a possibility for these people too? If you are like me and interested in mixed reality but may not have the budget to procure an expensive AR device, what are your options?

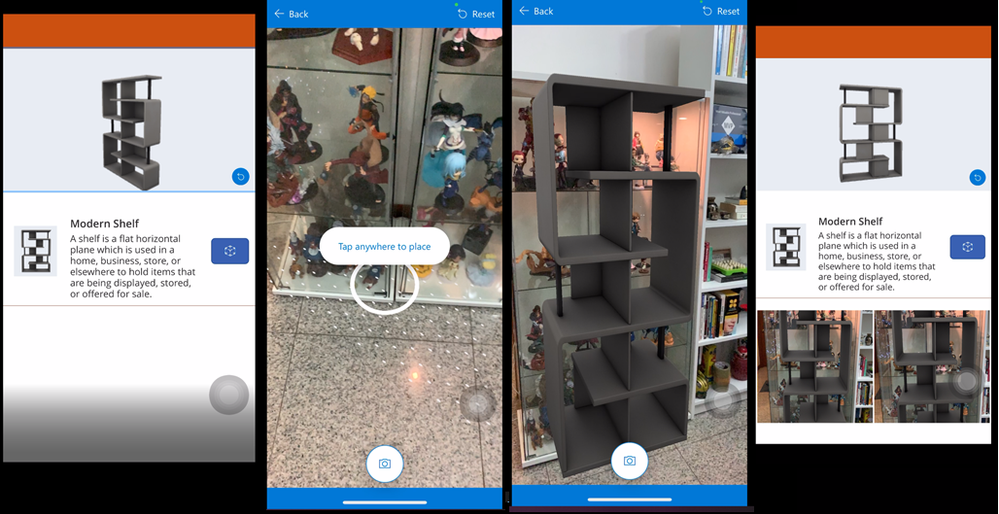

Enter Power Platform. Its fan base has been growing rapidly as it makes it super easy to create an application. Even developers or app makers can quickly build one with minimal coding required. Infusing AI with Power Platform application is as easy as a point-and-click; what about Mixed Reality? For me, I originally thought that making an application with AI would be complex to build and use simultaneously, and significantly more so if I added Mixed Reality into the equation.

Yet, Power Platform has been a great help in removing the impostor syndrome inside of me. I got to meet many people in the community and was even able to build an AI application using Power Platform quickly. I could make an app with AI and my goal back then was to make one with Mixed Reality next. My wishful desire was granted when Power Platform rapidly evolved; In April 2020, Mixed Reality technology was released as a Private Preview in Power Platform. I was so excited and thrilled about it that I immediately signed up for the private preview, and truly ecstatic that my application was accepted.

I started looking for inspiration to do some demos and business case scenarios to help the customers and the company, so I configured my development tenant to experience and try the preview of Mixed Reality in Power Apps. Even though it’s not currently part of my line of work, I still had the chance to explore and enjoy it in my leisure time without sacrificing my job. I was impressed by its capabilities and possibilities with Mixed Reality in Power Apps and so excited to share it with everyone in the community.

Mixed Reality in Power Apps


The first time I shared it was during the ASEAN Microsoft BizApps UG Women in Tech online event. I shared the same stage with Dona Sarkar, Soyoung Lee, April Dunnam, and other amazing women speakers worldwide. But it didn’t end there. My speaking career with Mixed Reality in Power Apps continued until I reached Kuala Lumpur, Germany, and Paris through online speaking engagements. I get to share great things and ways of what we could do with Mixed Reality in Power Apps.

My Mixed Reality in Power Apps Presentations


While we’re still in the midst of a global pandemic where social distancing is observed, the possibility of traveling and meeting with other people is almost zero. Everything is online now: buying products, engaging with colleagues, training, and even classes all use online platforms. With Mixed Reality, we can easily view the products we order online, take measurements, and visualize them from our home or business area instead. Even if you can’t get your hands on a HoloLens, you can still make use of your phone with Mixed Reality in Power Apps. Dynamics 365 Remote Assist also works on iOS and Android mobile devices, which most of us likely already have! I highly encourage all of you to experiment with it using devices you already have, and you might just be inspired to use mixed reality in new ways as well.

There is excellent potential for the device in life, health, and safety aspects to help you visualize objects, experience, and engage with other humans. The possibilities of this technology are endless; the only limitation you have is your imagination. What will you create with mixed reality next?

Editor’s note:

Anj Cerbolles will be hosting a Table Talk on “Intro to Mixed Reality Business Applications” in the Connection Zone along with Daniel Christian and Adityo Setyonugroho at Microsoft Ignite 2021. If you are interested in this topic and want to connect with her LIVE, be sure to register for Microsoft Ignite and add their session to your schedule! Microsoft Ignite 2021 is free to attend and open to all.

Helpful Links:

- Microsoft Dynamics 365 Remote Assist and Guides

- Microsoft Learn paths for Remote Assist and Guides

- Introducing Mixed Reality in Power Apps

- Power Apps Mixed Reality 3D Control

- Power Apps Mixed Reality Measurements

#MixedReality #CareerJourneys


During the pandemic, we all found ourselves in isolation, relying more and more on effective electronic means of communication. The number of digital conversations increased dramatically, and we need to rely on bots to help us handle some of those conversations. In this blog post, I give a brief overview of conversational AI on the Microsoft platform and show you how to build a simple educational bot to help students learn.

Do We Need Bots, and When?

Many people believe that in the future we will be interacting with computers using speech, in the same way we interact with each other. While the future is still vague, we can already benefit from conversational interfaces in many areas, for example:

- In user support, which has traditionally been based on interpersonal communication, automated chat-bots can solve a lot of routine problems for the users, leaving human specialists for solving only unusual cases.

- In situations where hands-free interaction is essential, such as during a surgical operation. From personal experience, I also find it more convenient to set a morning alarm and “good night” music through a voice assistant before going to sleep.

- Automating some functions in interpersonal communication. My favorite example is a chat-bot that you can add to a group chat when organizing a party, and it will track how much money each of the participants spent on the preparation.

At the current state of development of conversational AI technologies, a chat bot will not replace a human, and it will not pass the Turing test.

In practice, chat bots act as an advanced version of a command line, in which you do not need to know exact commands to perform an action.

Thus, successful bot applications will not try to pretend to be a human, because such behavior is likely to cause some user dissatisfaction in the future. It is one of the responsible conversational AI principles, which you need to consider when designing a bot.

Educational Bots

During the pandemic, one of the areas that is being transformed the most is education. We can envision educational bots that help students answer the most common questions, or act as a virtual teaching assistant. In this blog post, I will show you how to create a simple assistant bot that will be able to handle several questions from the field of Geography.

Before we jump to this task, let’s talk about the Microsoft conversational AI stack in general, and consider different development options.

Conversational AI Development Stack

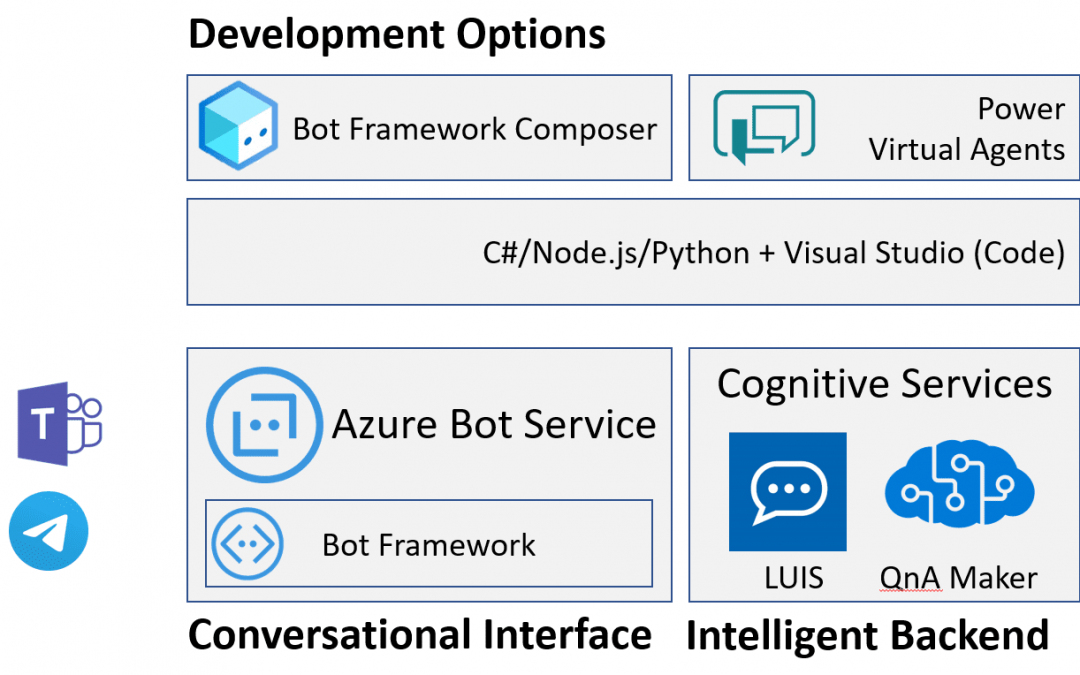

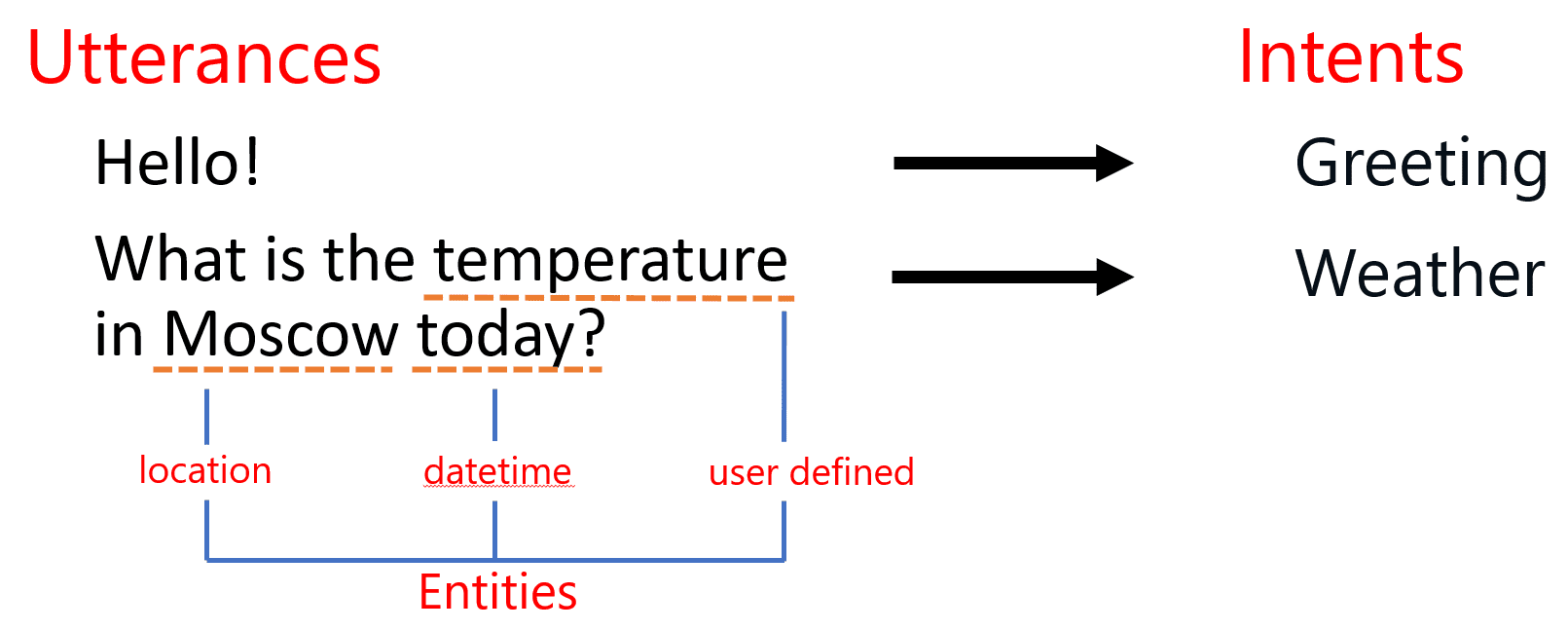

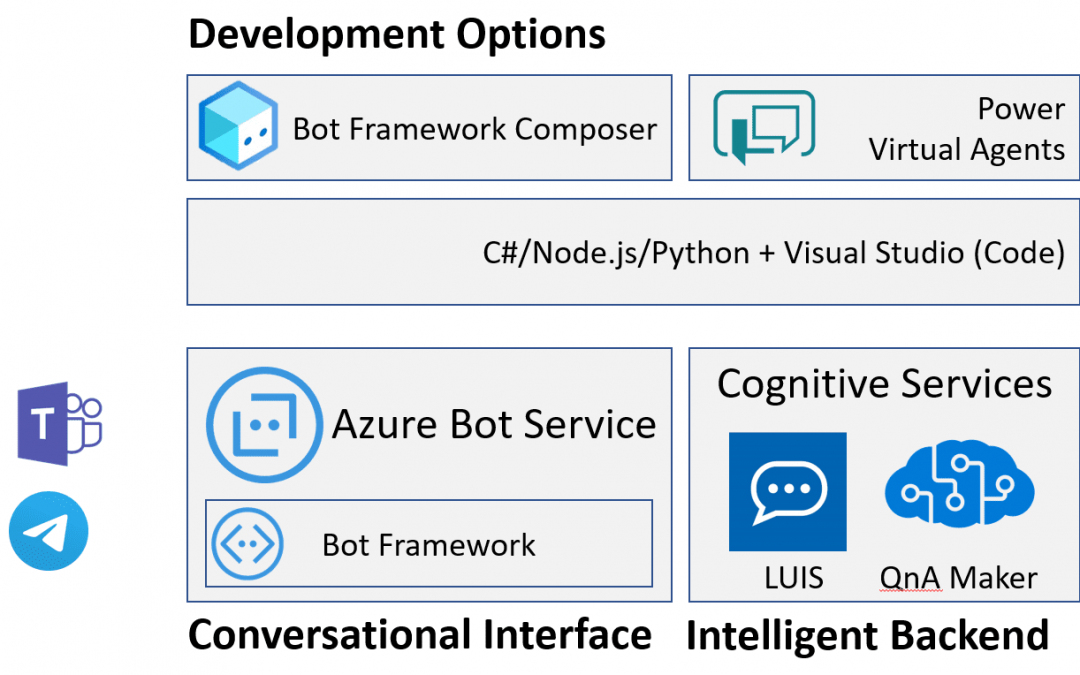

When it comes to conversational AI, we can logically think of a conversational agent having two main parts:

- Conversational interface handles passing messages from the user to the bot and back. It takes care of communication between the user’s messaging agent (such as Microsoft Teams, Skype or Telegram) and our application logic, and includes code to handle request-response logic.

- Intelligent backend adds some AI functionality to your bot, such as recognizing the user’s phrases, or finding the best possible answer.

A bot can exist without any intelligent backend, but it would not be smart. Still, bots like that are useful for automating simple tasks, such as form filling, or handling some pre-defined workflow.

Here I present a slightly simplified view of the whole Bot Ecosystem, but this way it is easier to get the picture.

Conversational Interface: Microsoft Bot Framework and Azure Bot Services

At the heart of the conversational interface is Microsoft Bot Framework – an open-source development framework (with source code available on GitHub), which contains useful abstractions for bot development. The main idea of Bot Framework is to abstract the communication channel, and develop bots as web endpoints that asynchronously handle request-response communication.

Decoupling of bot logic and communication channel allows you to develop bot code once, and then connect it easily to different platforms, such as Skype, Teams or Telegram. Omnichannel bots are now made simple!
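The decoupling idea can be sketched in a few lines of Python. This is not the Bot Framework SDK API: it is a simplified illustration in which the bot logic is a plain function from an incoming activity to a reply, and the channel is just a field on that activity. The function name `echo_bot` and the channel names used in the loop are assumptions made for the example.

```python
def echo_bot(activity: dict) -> dict:
    """Channel-agnostic bot logic: one incoming message in, one reply out."""
    text = activity.get("text", "").strip()
    reply_text = f"You said: {text}" if text else "Please type a message."
    return {
        "type": "message",
        # The reply goes back to whichever channel the message came from.
        "channelId": activity.get("channelId", "unknown"),
        "text": reply_text,
    }


if __name__ == "__main__":
    # The same logic serves every channel; only the envelope differs.
    for channel in ("msteams", "telegram"):
        incoming = {"type": "message", "channelId": channel, "text": "hello"}
        reply = echo_bot(incoming)
        print(channel, "->", reply["text"])
```

In the real framework, the hosting service takes care of translating between each platform’s message format and this kind of common activity, which is why the bot code itself does not need to change per channel.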

Bot Framework SDK supports primarily C#, Node.js, Python and Java, although C# or Node.js are highly recommended.

To host bots developed with Bot Framework on Azure, you use Azure Bot Services. It hosts the bot logic itself (either as a web application or as an Azure Function), and also allows you to declaratively define the physical channels that your bot will be connected to. You can connect your bot to Skype or Telegram through Azure Portal with a few simple steps.

Intelligent Backend: LUIS and QnA Maker

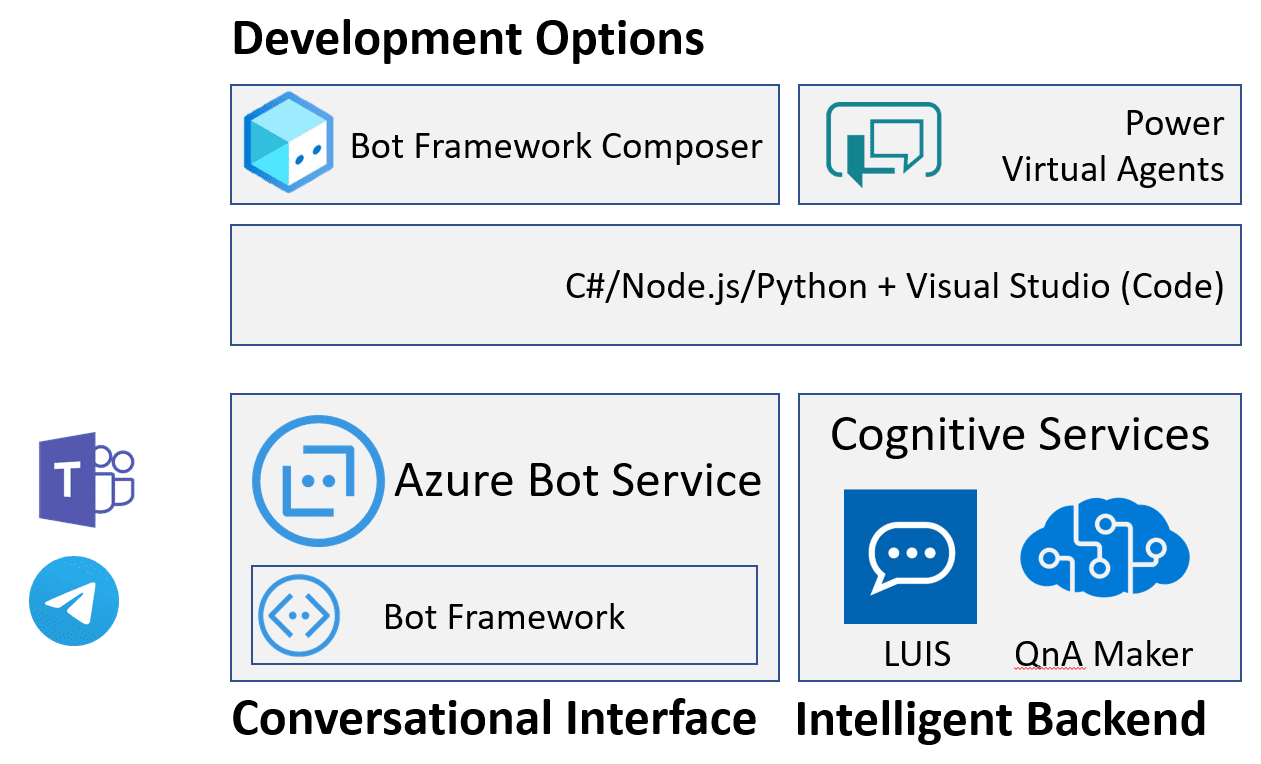

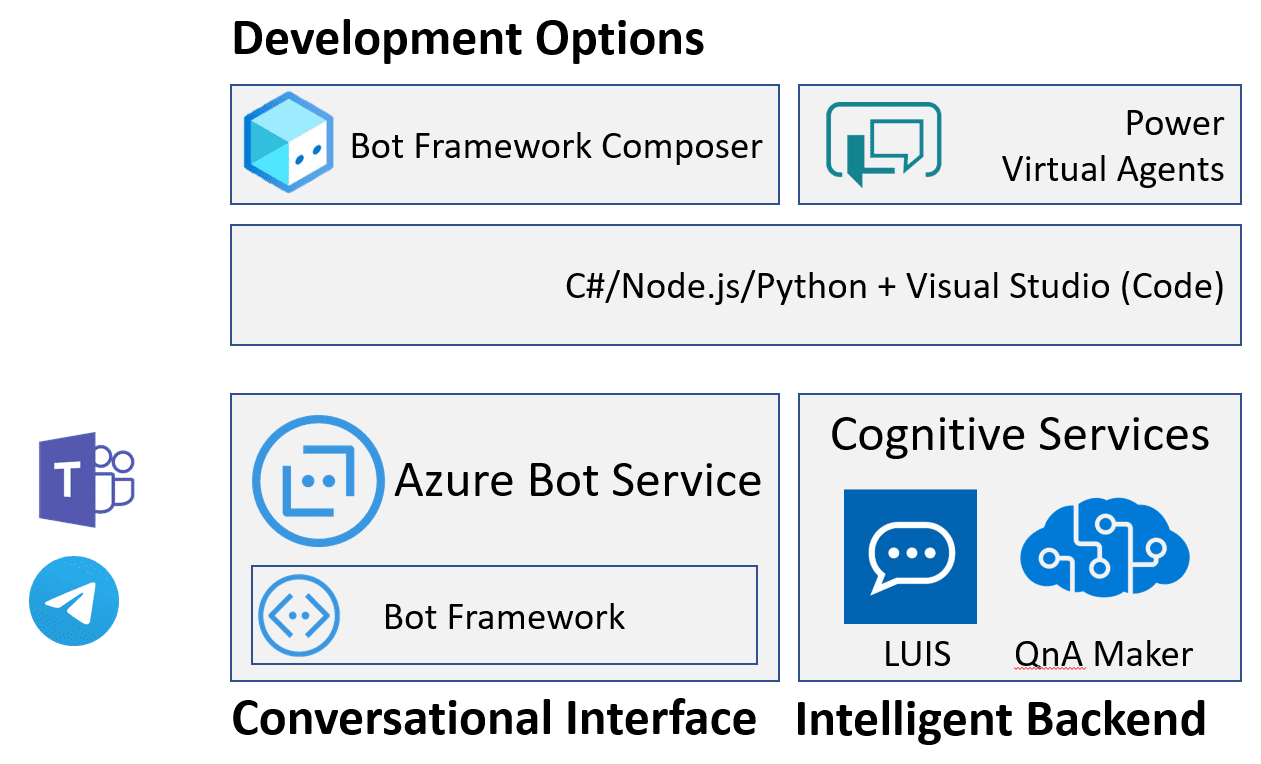

Many modern bots support some form of natural language interaction. To do it, the bot needs to understand the user’s phrase, which is typically done through intent classification. We define a number of possible intents or actions that the bot can support, and then map an input phrase to one of the intents.

This mapping is typically done using a neural network trained on some dataset of sample phrases. To take away the complexity of training your own neural network model, Microsoft provides Language Understanding Intelligent Service, or LUIS, which allows you to train a model either through a web interface or an API.
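To illustrate what mapping a phrase to an intent means, here is a deliberately naive Python sketch. It does not use a neural network and is not how LUIS works internally: it simply scores word overlap (Jaccard similarity) between the input and a few sample phrases per intent. The intent names and sample phrases are hypothetical.

```python
import re

# Hypothetical intents, each with a few sample phrases.
INTENT_EXAMPLES = {
    "GetCapital": [
        "what is the capital of russia",
        "i want to know the capital of italy",
        "give me the capital of greece",
    ],
    "Greeting": ["hello", "hi there", "how are you today"],
}


def tokenize(text: str) -> set:
    """Lower-case the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def classify_intent(phrase: str, threshold: float = 0.3) -> str:
    """Return the intent whose sample phrase overlaps most with the input."""
    words = tokenize(phrase)
    best_intent, best_score = "None", 0.0
    for intent, examples in INTENT_EXAMPLES.items():
        for example in examples:
            example_words = tokenize(example)
            union = words | example_words
            score = len(words & example_words) / len(union) if union else 0.0
            if score > best_score:
                best_intent, best_score = intent, score
    # Fall back to "None" when nothing is similar enough.
    return best_intent if best_score >= threshold else "None"


if __name__ == "__main__":
    print(classify_intent("What is the capital of France?"))
```

A trained model generalizes far better than this word-overlap heuristic, but the contract is the same: a phrase goes in, and one of a fixed set of intents (or none) comes out.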

In addition to intent classification, LUIS also performs named entity recognition (or NER). It can automatically extract some entities of well-known types, such as geolocations or references to date and time, and can learn to extract some user-defined entities as well.

With entities extracted and the intent correctly determined, it should be much easier to program the logic of your bot. This is often done using the slot filling technique: extracted entities from the user’s input populate some slots in a dictionary, and if more values are required to perform the task, an additional dialog is initiated to ask the user for the missing information.
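A minimal Python sketch of slot filling might look like the following. The slot names `country` and `topic` are hypothetical, chosen only to show a task that needs more than one value; the prompts are likewise invented for the example.

```python
from typing import Optional

# Hypothetical slots that must be filled before the task can run.
REQUIRED_SLOTS = ("country", "topic")


def fill_slots(slots: dict, entities: dict) -> dict:
    """Copy recognized entities into the matching empty or stale slots."""
    updated = dict(slots)
    for name, value in entities.items():
        if name in REQUIRED_SLOTS and value:
            updated[name] = value
    return updated


def next_prompt(slots: dict) -> Optional[str]:
    """Return a question for the first missing slot, or None when complete."""
    for name in REQUIRED_SLOTS:
        if name not in slots:
            return f"Which {name} do you mean?"
    return None


if __name__ == "__main__":
    slots = fill_slots({}, {"country": "Italy"})
    print(next_prompt(slots))          # asks for the missing topic
    slots = fill_slots(slots, {"topic": "capital"})
    print(next_prompt(slots))          # None: all slots are filled
```

The dialog keeps calling `next_prompt` after each user turn, so the conversation ends as soon as every required value has been collected, regardless of the order in which the user supplied them.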

Another type of bot behavior that often comes up is the ability to find the best matching phrase or piece of information in some table, i.e. to do an intelligent lookup. It is useful if you want to provide a FAQ-style bot that can answer users’ questions based on some database of answers, or if you just want to program chit-chat behavior with some common responses. To implement this functionality, you can use QnA Maker – a complex service that encapsulates Azure Cognitive Search and provides a simple way to build question-answering functionality. You can index any existing FAQ document, or provide question-answer pairs through the web interface, and then hook up QnA Maker to your bot with a few lines of code.
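The idea of an intelligent lookup can be approximated with Python’s standard `difflib` module. This is only a sketch of the concept: QnA Maker uses a search index and ranking rather than character-level similarity, and the question-answer pairs and the `min_score` threshold below are made up for illustration.

```python
from difflib import SequenceMatcher
from typing import Optional

# Hypothetical knowledge base: alternative phrasings mapped to one answer.
KNOWLEDGE_BASE = [
    (
        ["How are you?", "How are you today?", "How is it going?"],
        "I am fine, thanks for asking!",
    ),
    (
        ["What is a capital?", "What does capital mean?"],
        "A capital is the city where a country's government is located.",
    ),
]


def similarity(a: str, b: str) -> float:
    """Case-insensitive similarity ratio between two strings (0.0 to 1.0)."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def best_answer(question: str, min_score: float = 0.6) -> Optional[str]:
    """Return the answer whose phrasing is closest to the question."""
    best_score, best = 0.0, None
    for phrasings, answer in KNOWLEDGE_BASE:
        for phrasing in phrasings:
            score = similarity(question, phrasing)
            if score > best_score:
                best_score, best = score, answer
    # No answer at all is better than a poorly matching one.
    return best if best_score >= min_score else None


if __name__ == "__main__":
    print(best_answer("what is a capital"))
```

Returning `None` below the threshold lets the bot fall through to another handler instead of replying with an unrelated answer.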

Bot Development: Composer, Power Virtual Agents or Code?

As I mentioned above, bots can be developed using your favorite programming language. However, this approach requires you to write some boilerplate code, understand asynchronous calls, and therefore has a significant learning curve. There are some simpler options that are good for a start!

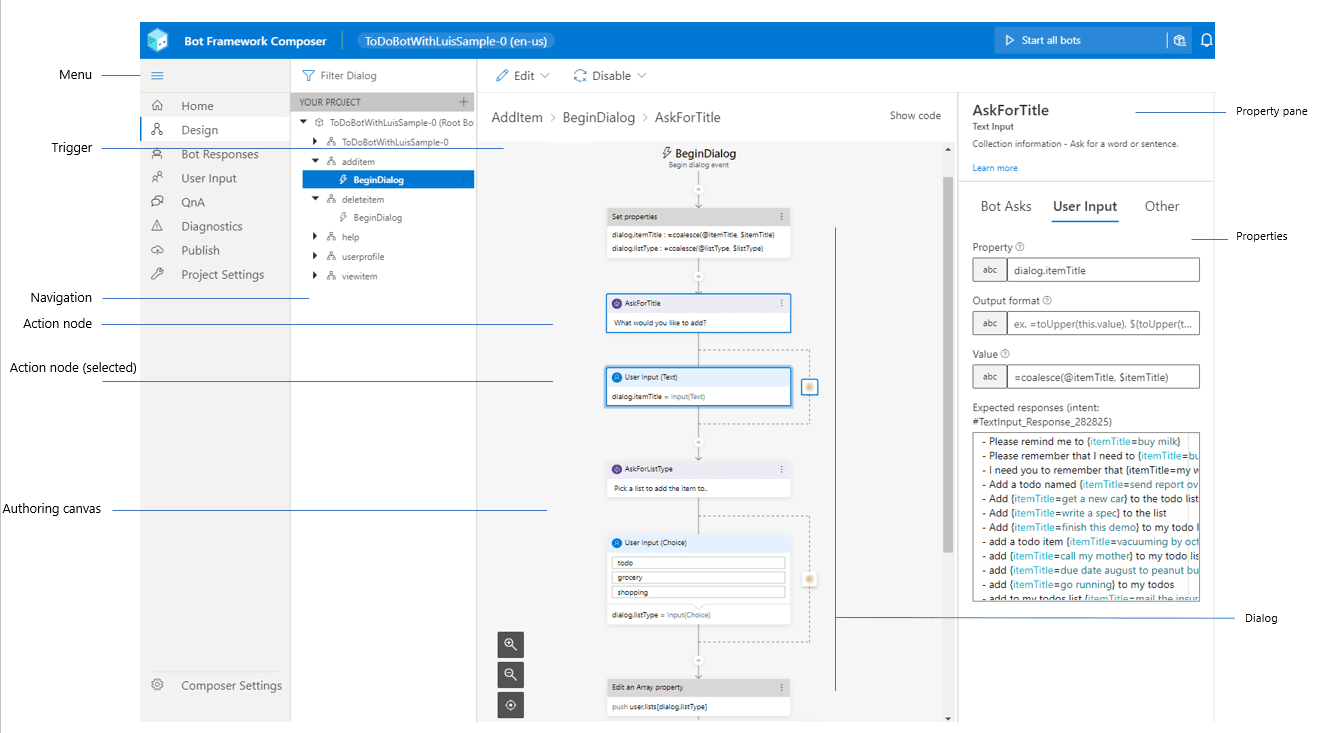

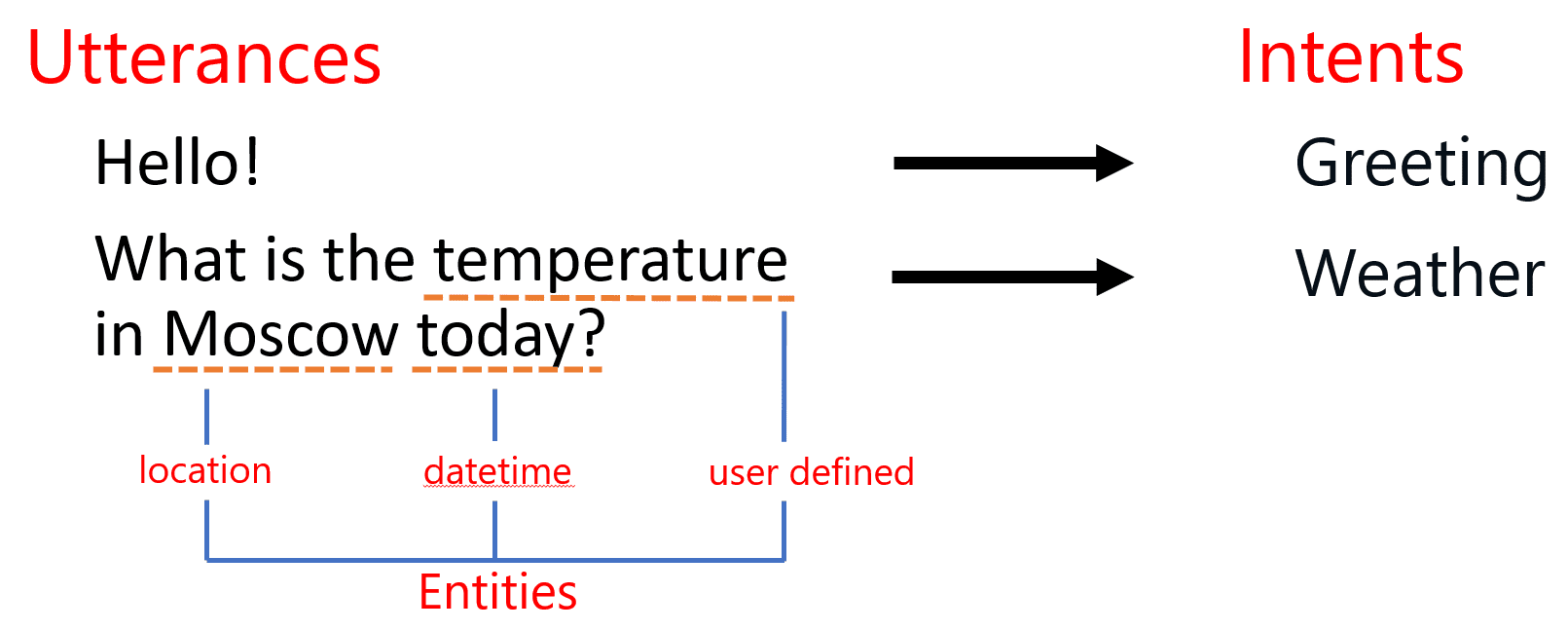

It is recommended to start developing your bot using a low-code approach through Bot Framework Composer – an interactive visual tool that allows you to design your bot by drawing dialog diagrams.

Composer integrates LUIS and QnA Maker out of the box, so that you do not need to train those services through the web interface first, and then worry about integrating them into your bot. From the same UI, you are able to specify events triggered by some user phrases, and dialogs that respond to them.

Another similar low-code option would be to use Power Virtual Agents (PVA), a tool from the Power Platform family of tools for business automation. It would be especially useful if you are already familiar with Power Platform and use any of its tools to enhance productivity. In this case, PVA will be a natural choice, and it will integrate nicely into all your data points and business processes. In short – Composer is a great low-code tool for developers, while PVA is more for business users.

Getting Started with Bot Development

Let me show you how we can start the development of a simple educational bot that will help K-12 students with their geography classes. We will develop a simple bot, which you can later host on Microsoft Azure and connect to most popular communication channels, such as Teams, Slack or Telegram. If you do not have an Azure account, you can get a free trial (or here, if you are a student).

To begin with, we will implement three simple functions in our bot:

- Being able to tell a capital city for a country (What is the capital of Russia?).

- Giving definitions of the most useful terms, e.g. answering questions like What is a capital?

- Support for simple chit-chat (How are you today?)

Those three functions cover the two most important elements of our intelligent backend: QnA Maker (which can be used to implement the last two points) and LUIS.

Starting with Bot Composer

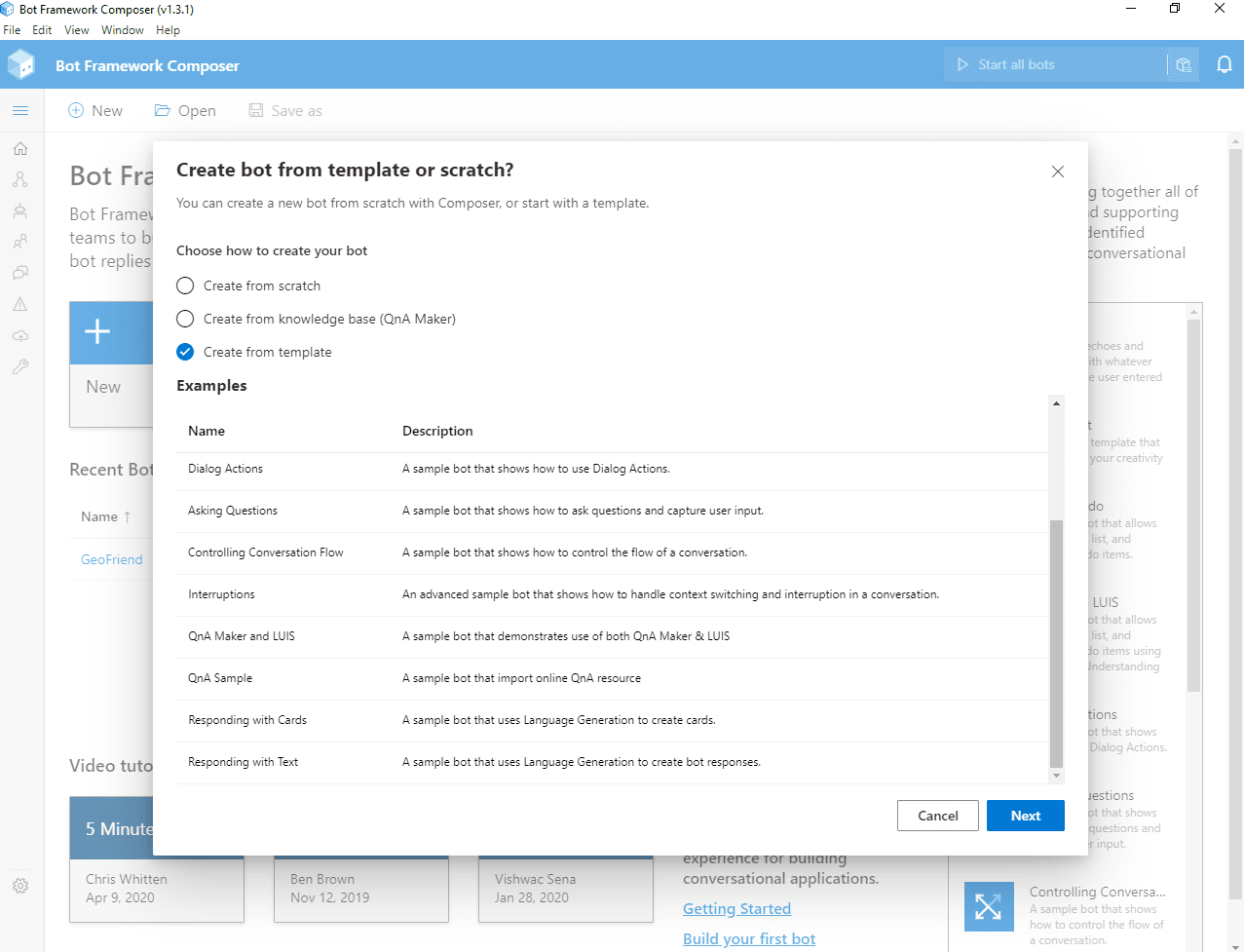

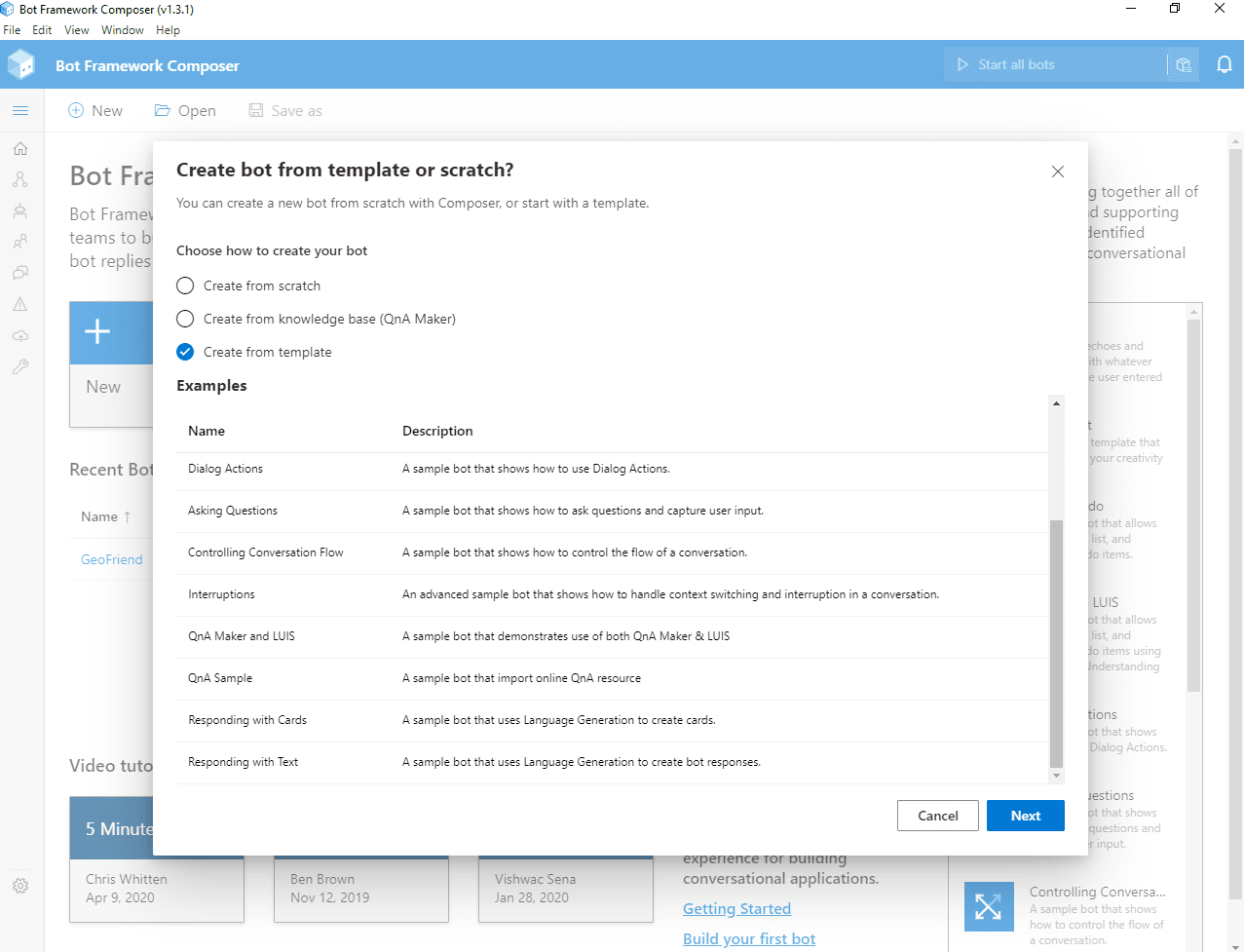

To begin development, you need to install Bot Framework Composer – I recommend installing it as a desktop application. Then, after starting it, click the New button, and choose the starting template for your bot: QnA Maker and LUIS:

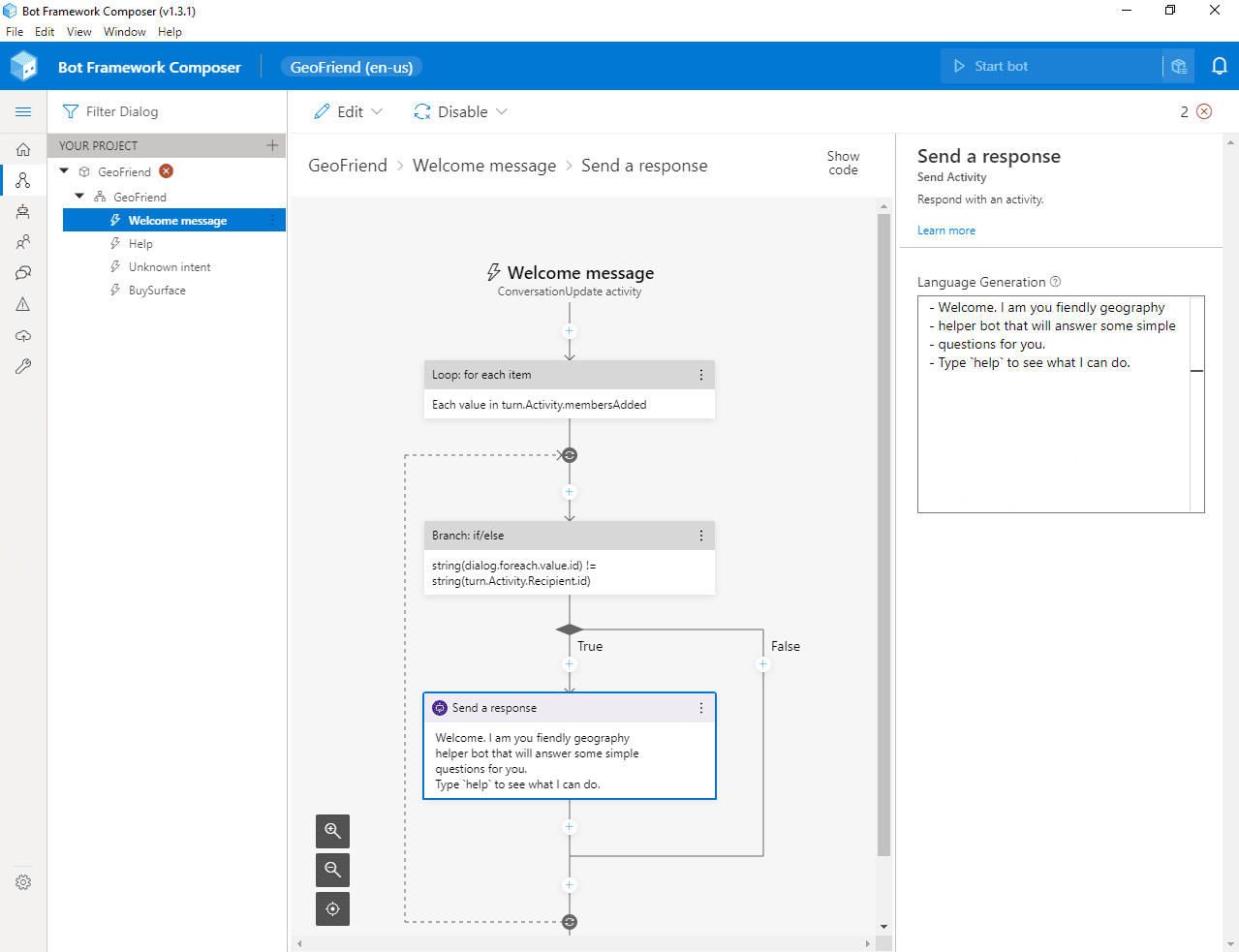

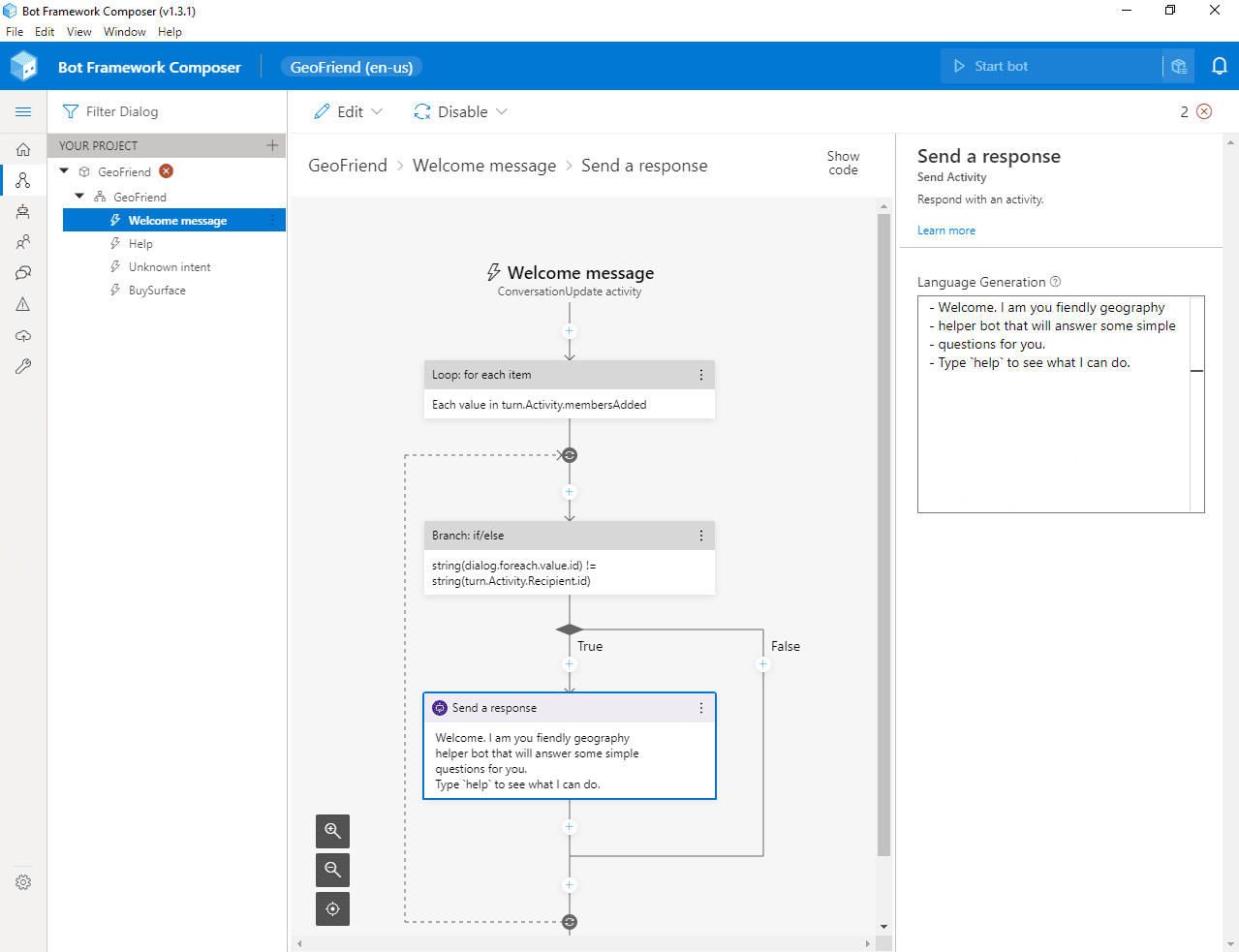

Once you do that, you will see the main screen of composer, with a list of triggers on the left, and the main pane to design dialogs:

Here, you can delete the unused trigger BuySurface (which is left over from the demo), and go to Welcome message to customize the phrase that the bot says to a new user. The logic of the Welcome Message trigger is a bit complex, so you need to look for a box called Send a response, and change the message in the right pane.

The language used to define phrases is called Language generation, or lg. A few useful syntax rules to know:

- A phrase starts with `-`. If you want to choose from a number of replies, specify several phrases, and one of them will be selected randomly. For example:

```
- Hello, I am geography helper bot!
- Hey, welcome!
- Hi, looking forward to chat with you!
```

- Comments start with `>`

- Some additional definitions start with `@`

- You can use `${…}` syntax for variable substitution (we will see an example of this later)
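The two behaviors described in these rules, random selection among variations and `${…}` substitution, can be mimicked in Python as a rough mental model. This sketch is not the Language Generation library: it only handles plain variable names inside `${…}`, whereas the real syntax also accepts expressions. The helper names `render` and `pick_variation` are hypothetical.

```python
import random
import re

WELCOME_VARIATIONS = [
    "Hello, I am geography helper bot!",
    "Hey, welcome!",
    "Hi, looking forward to chat with you!",
]

# Matches ${name} and captures the name between the braces.
PLACEHOLDER = re.compile(r"\$\{([^}]+)\}")


def render(template: str, variables: dict) -> str:
    """Replace each ${name} with its value; unknown names are left as-is."""
    def replace(match):
        name = match.group(1).strip()
        return str(variables.get(name, match.group(0)))
    return PLACEHOLDER.sub(replace, template)


def pick_variation(variations: list, variables: dict = None) -> str:
    """Choose one variation at random, then fill in its placeholders."""
    return render(random.choice(variations), variables or {})


if __name__ == "__main__":
    print(pick_variation(WELCOME_VARIATIONS))
    print(render("The capital of ${country} is ${capital}.",
                 {"country": "Italy", "capital": "Rome"}))
```

Listing several variations is a cheap way to make a bot feel less repetitive, since the user sees a different greeting from one session to the next.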

Connecting to Azure Services

To use intelligent backend, you need to create Azure resources for LUIS and QnA Maker and provide corresponding keys to Composer:

- Create LUIS Authoring Resource, and make sure to remember the region in which it was created, and copy key from Keys and Endpoint page in Azure Portal.

- Create QnA Maker Service, and copy corresponding key

- In Composer, go to bot settings by pressing Project Settings button in the left menu (look for a wrench icon, or expand the menu if unsure). Under settings, fill in LUIS Authoring Key, LUIS region and QnA Maker Subscription key.

Starting the Bot

At this point, you can already start chatting with your bot. Click Start bot in the upper right corner, and allow some time for the magic to happen. When starting a bot, Composer actually creates and trains the underlying LUIS model, builds the Bot Framework project, and starts a local web server with a copy of the bot, ready to serve your requests.

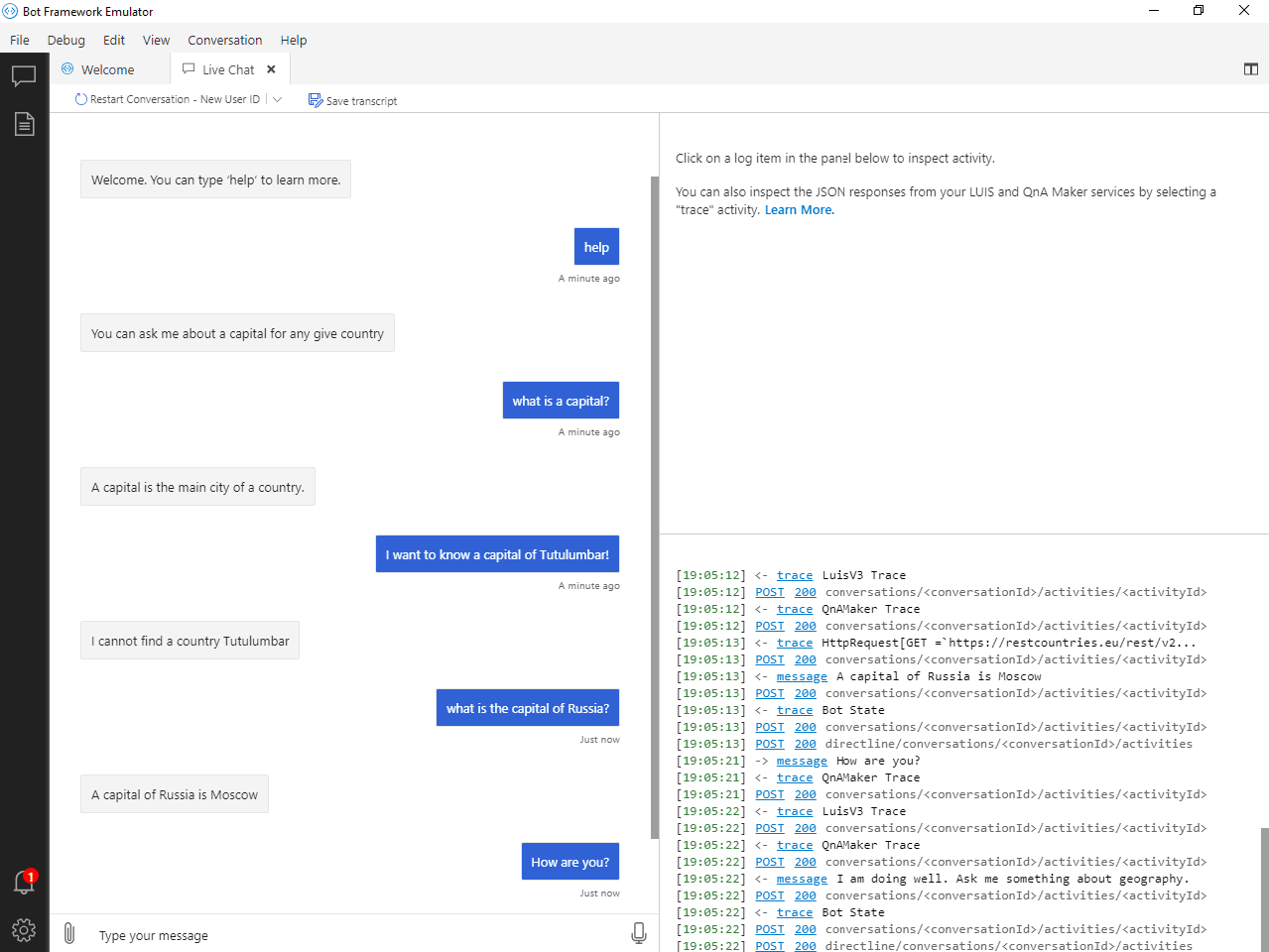

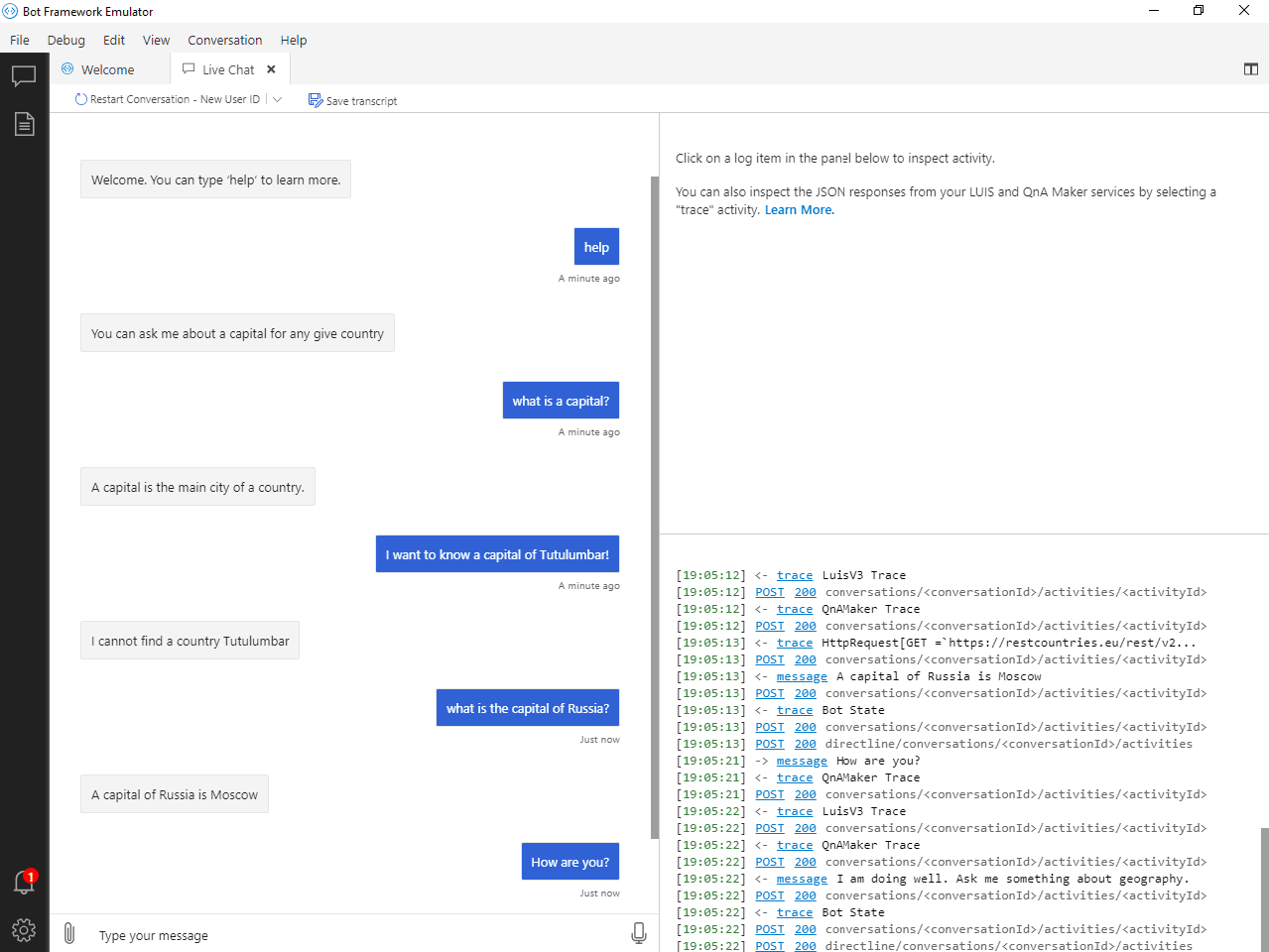

To chat with the bot, click the Test in emulator button (you need to have Bot Framework Emulator installed for this to work). This automatically opens up the chat window with all required settings, and you can start talking to your bot right away.

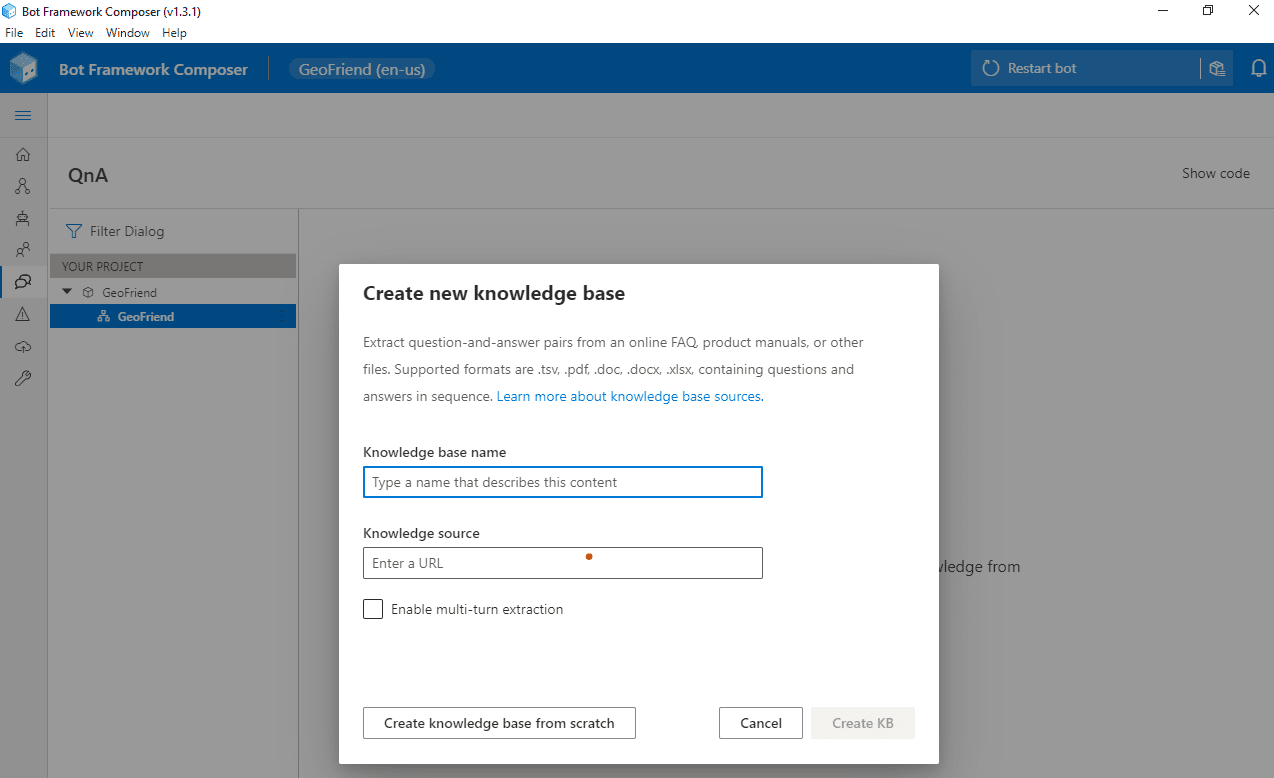

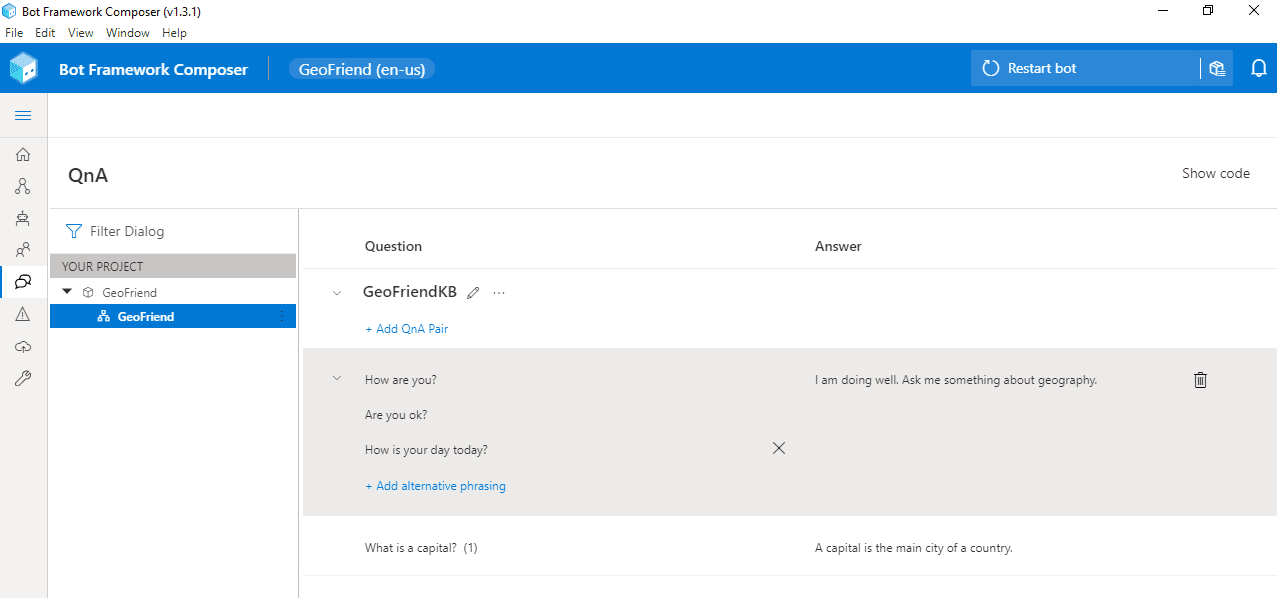

Creating QnA Maker Knowledge base

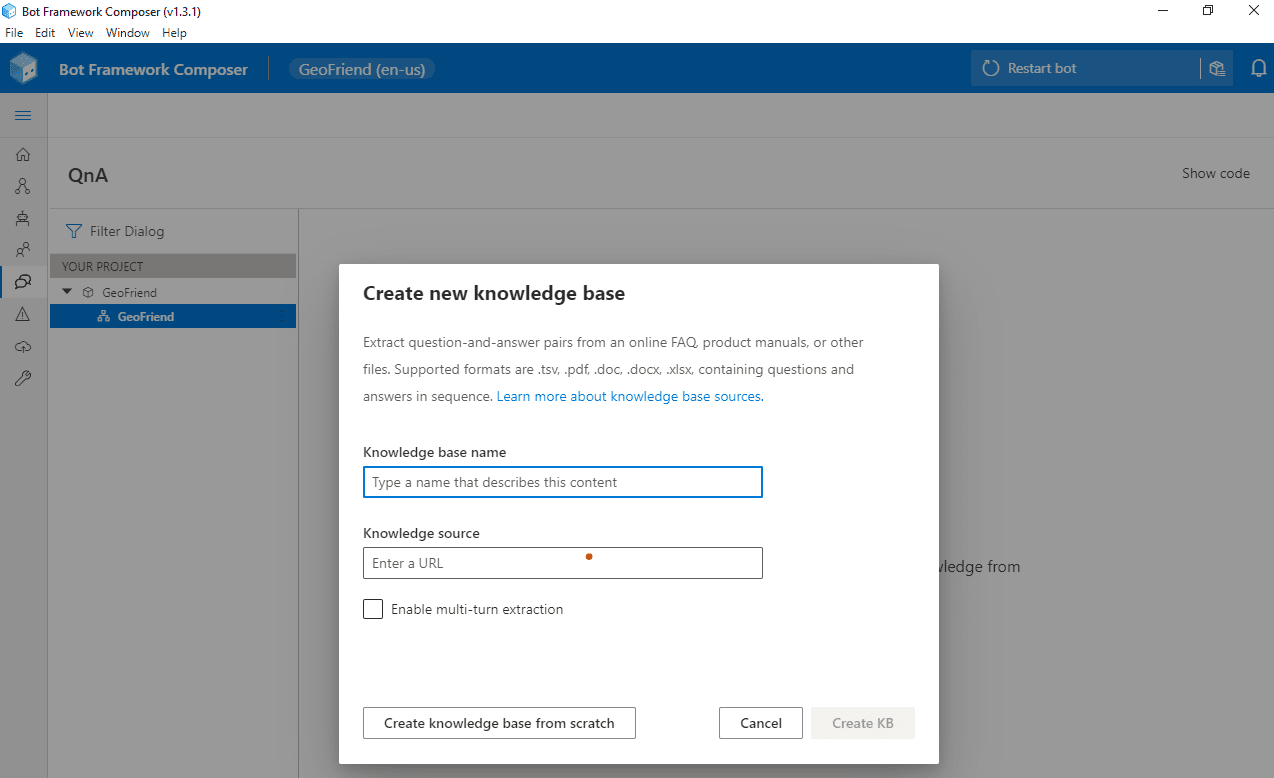

Let’s start by creating a term dictionary using QnA Maker. Click QnA in the left menu, and then Create new KB.

Here you can either start from some existing data (provided as a URL to an HTML, PDF, Word or Excel document), or start creating phrases from scratch.

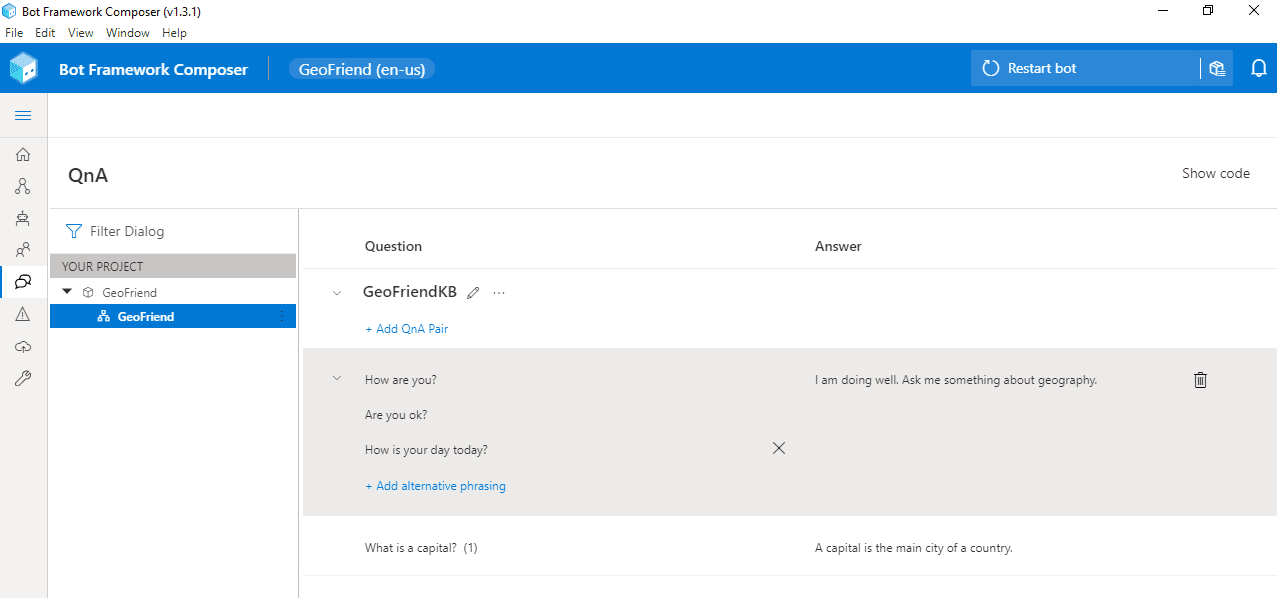

In most cases, you would have a document to start with, but in our case we will start from scratch. After creating a knowledge base, you can click Add QnA Pair to add all the question-answer combinations you need. Note that you can add several variants of the question by using the Add alternative phrasing link.

In our case, I have added a how are you phrase (with several options), and a phrase to explain the meaning of the word capital.

Having added the phrases, we can start the bot and make sure that it reacts correctly to the given phrases, or to similar versions of those phrases. QnA Maker does not require an exact match; it looks for similar phrases to decide which answer to provide.

Adding Specific Actions with LUIS

To give our bot an ability to give capitals of countries, we need to add some specific functionality to look for a capital, triggered by a certain phrase. We definitely do not want to type all 200+ countries and their capitals into QnA Maker!

A functionality to get information about a country is openly available via REST Countries API. For example, if we make GET request to https://restcountries.eu/rest/v2/name/Russia, we will get JSON response like this:

[ {"name":"Russian Federation",
"topLevelDomain":[".ru"],
"capital":"Moscow", … } ]
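For readers who want to try the same lookup outside Composer, here is a minimal Python sketch using only the standard library. It assumes the endpoint and response shape shown above; the service may have moved or changed since this post was written, so treat the URL as an assumption. The function names are hypothetical.

```python
import json
import urllib.error
import urllib.parse
import urllib.request
from typing import Optional

# Endpoint taken from the article; it may no longer be available.
API_ROOT = "https://restcountries.eu/rest/v2/name/"


def capital_from_body(body: str) -> Optional[str]:
    """Extract the capital of the first country in a JSON response body."""
    countries = json.loads(body)
    if isinstance(countries, list) and countries:
        return countries[0].get("capital")
    return None  # e.g. an error object instead of a list of countries


def fetch_capital(country: str, timeout: float = 10.0) -> Optional[str]:
    """Call the API for one country; return None on any HTTP/network error."""
    url = API_ROOT + urllib.parse.quote(country)
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return capital_from_body(response.read().decode("utf-8"))
    except urllib.error.URLError:  # also covers HTTPError such as 404
        return None


if __name__ == "__main__":
    sample = '[{"name":"Russian Federation","topLevelDomain":[".ru"],"capital":"Moscow"}]'
    print(capital_from_body(sample))
```

Keeping the parsing in `capital_from_body` separate from the network call makes the logic easy to check against a saved response, without depending on the service being reachable.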

To ask for the capital of a given country, a user will say something like What is a capital of Russia?, or I want to know the capital of Italy. LUIS can recognize the intent of such a phrase and also extract the name of the country.

To add a LUIS trigger, from the Design page of Composer, select your bot dialog and press “…” next to it. You will see the Add a trigger option in the drop-down box. Select it, and then choose Intent recognized as the trigger type.

Intent recognized is the most common trigger type. However, you can also specify Dialog events, which allow you to structure part of the conversation as a separate dialog, or conversational activities, such as Handoff to human.

Then, specify trigger phrases using the .lu file format. In our case, we will use the following:

- what is a capital of {country=Russia}?

- I want to know a capital of {country=Italy}.

- Give me a capital of {country=Greece}!

Here, we specify a number of trigger phrases, each starting with -, and we indicate that we want to extract part of the phrase as an entity called country. LUIS will automatically train a model to extract entities based on the provided utterances, so make sure to provide a variety of possible phrases.
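To make the labelling notation concrete, here is a small Python sketch that splits one labelled trigger phrase into the plain utterance and its entity labels. It only illustrates how the {entity=value} markup reads. It is not how LUIS or Composer process the file, and parse_utterance is a hypothetical helper.

```python
import re

# Matches the {entity=value} labels used in the trigger phrases.
LABEL = re.compile(r"\{(\w+)=([^}]+)\}")


def parse_utterance(line: str):
    """Split a labelled trigger phrase into plain text and entity labels."""
    # Drop the leading list marker (a hyphen, or an en dash in some renderings).
    text = line.lstrip("-\u2013 ").strip()
    entities = {name: value for name, value in LABEL.findall(text)}
    # Replace each label with the example value it wraps.
    plain = LABEL.sub(lambda m: m.group(2), text)
    return plain, entities
```

For the first phrase, this gives the training utterance "what is a capital of Russia?" together with the label country = Russia, which is the pairing the model learns from.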

There are some pre-defined entity types, such as datetimeV2, number, etc. Using pre-defined types is recommended, and the entity type can be specified using the @ <entity_type> <entity_name> notation. In our case, we can use the geographyV2 entity type, which extracts geographic locations, including countries.

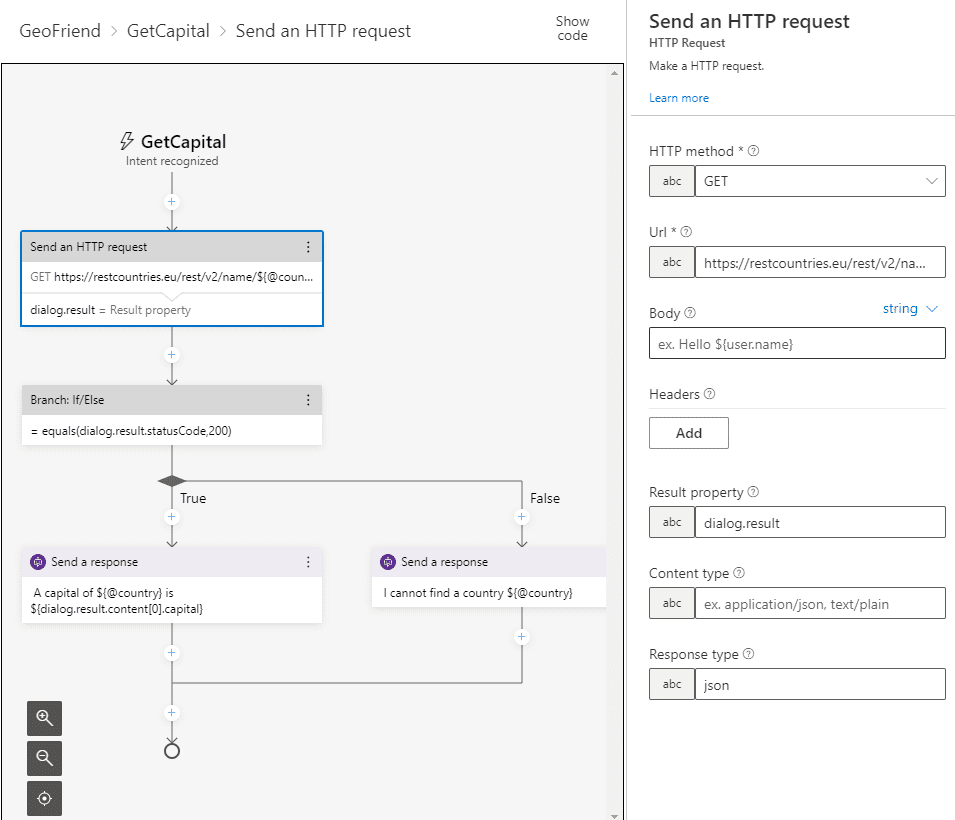

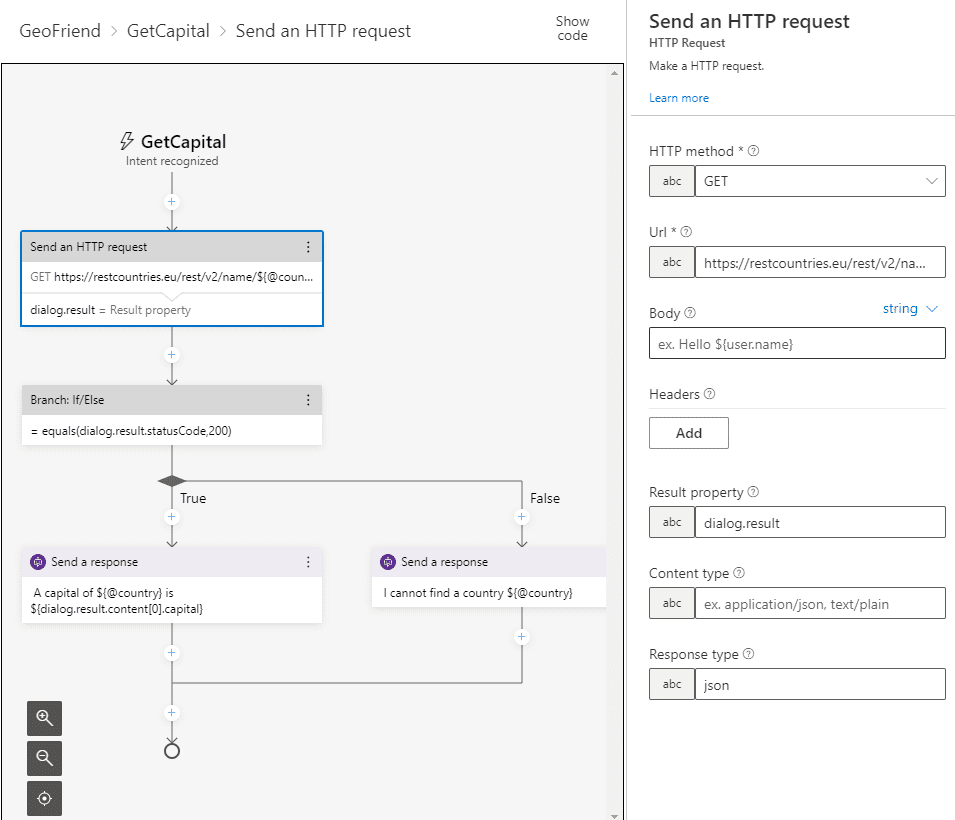

Once we have defined the phrase recognizer, we need to add a block that will make the actual REST call and fetch information on the given country. Use the Send HTTP Request block, and specify the following parameters:

This makes the REST call to the API and stores the result in the dialog.result property. If we provided a valid country, the JSON result will be parsed automatically; otherwise, an error status code will be recorded in dialog.result.statusCode (in our case, 404).

To test whether the call was successful and define different logic based on the result, we insert a Branch: If/Else block, and specify the following condition: = equals(dialog.result.statusCode,200). The True condition corresponds to the left branch, where we insert a Send a response block with the following text:

- A capital of ${@country} is ${dialog.result.content[0].capital}

If the result code is not 200, the right branch will be executed, where we will insert an error message. Our final dialog should look like this:

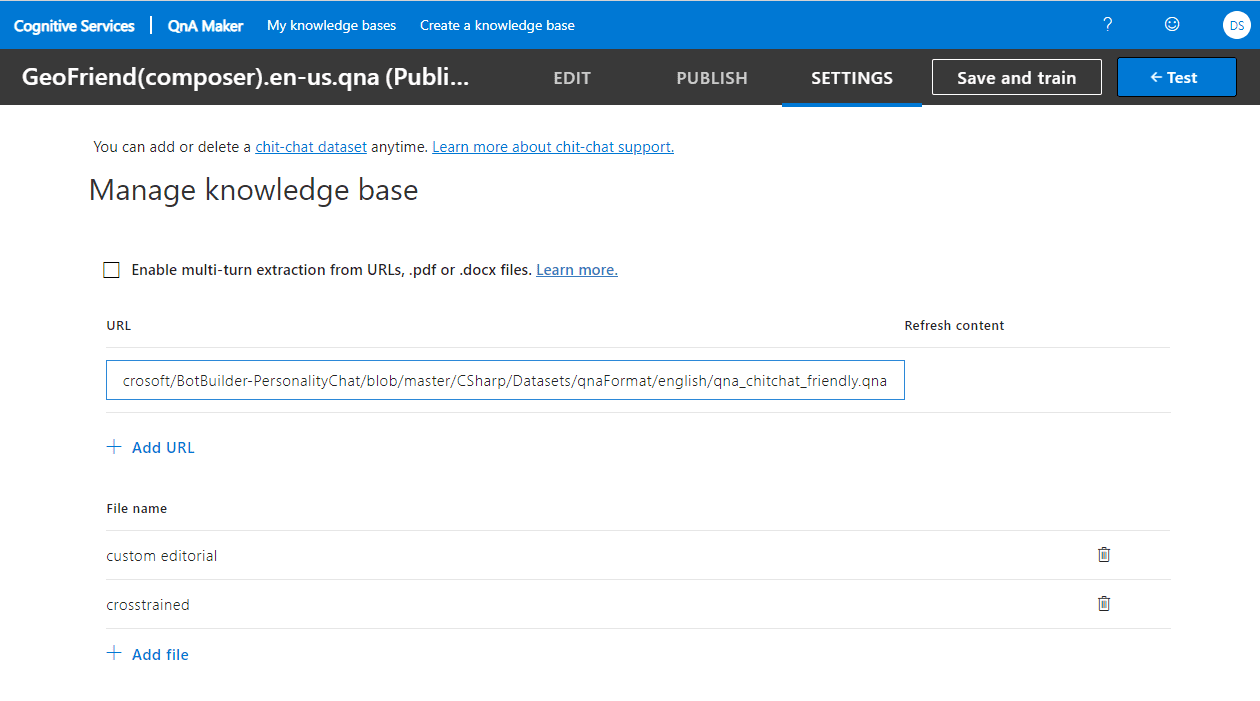
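For readers who think in code, the dialog's branching logic can be sketched roughly as follows in Python. This is only an analogy for the Composer blocks: build_reply is a hypothetical function, its arguments stand in for @country, dialog.result.statusCode and dialog.result.content, and the wording of the error message is an assumption.

```python
def build_reply(country: str, status_code: int, content) -> str:
    """Mirror the If/Else branch: answer on status 200, error message otherwise."""
    if status_code == 200:
        # Same lookup as dialog.result.content[0].capital in the response template.
        capital = content[0]["capital"]
        return f"A capital of {country} is {capital}"
    # Any other status code (for example 404 for an unknown country).
    return f"Sorry, I could not find a country called {country}."
```

For example, build_reply("Russia", 200, [{"capital": "Moscow"}]) produces the same sentence as the response template, while a 404 result takes the error branch.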

Adding Preconfigured Chit-Chat Functionality

It would be nice if your bot could respond to more everyday phrases, such as How old are you?, or Do you enjoy being a bot? We could define all those phrases in QnA Maker, but that would take quite some time. Luckily, Project Personality Chat contains a number of pre-defined QnA Maker knowledge bases for several languages and for a number of personalities:

- Professional

- Friendly

- Witty

- Caring

- Enthusiastic

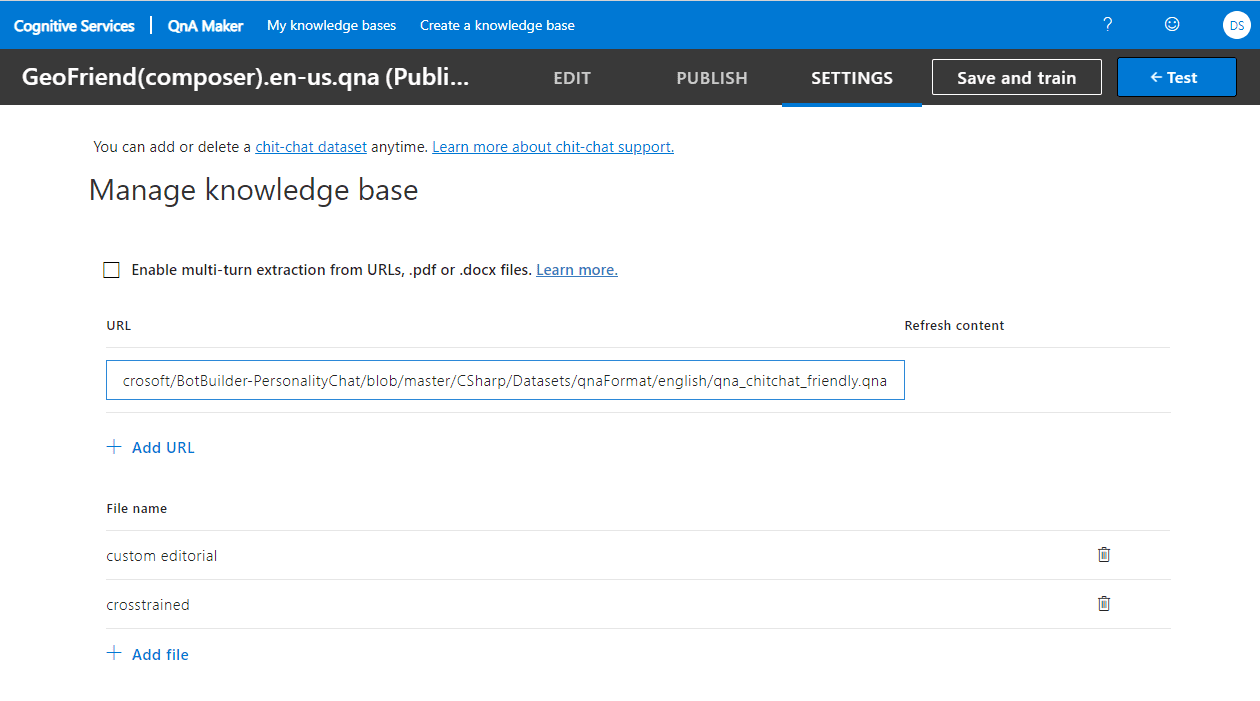

You can grab a link to the knowledge base from here, then go to QnA Maker Portal, find your knowledge base, and add this URL link to your service:

Having done that, click Save and Train, and enjoy talking to your bot! You can even try asking it about life, the universe and everything!

Testing the Bot and Publishing to Azure

Now that our basic bot functionality is complete, we can test the bot in Bot Framework Emulator:

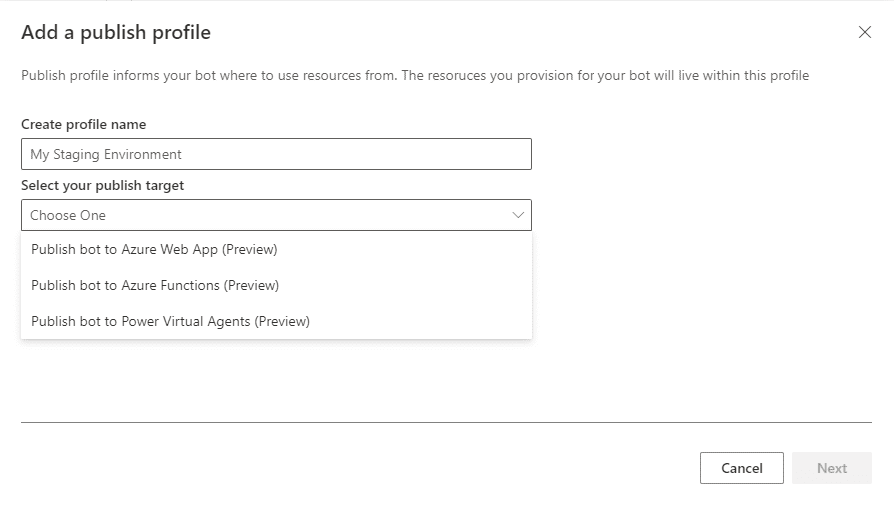

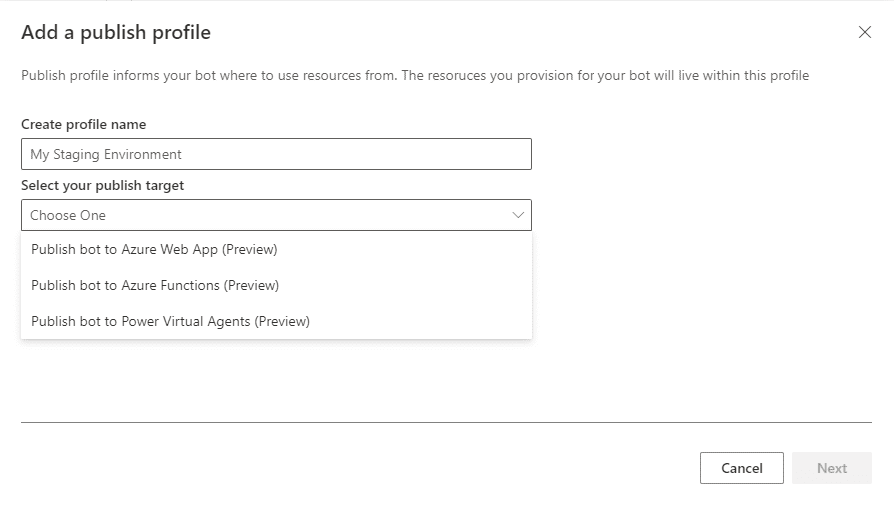

Once the bot is running locally, we can deploy it to Azure right from Composer. If you go to Publish in the left menu, you will be able to define a publishing profile for your bot. Select Define new publishing profile, and choose one of the following:

The most standard way to deploy is to use Azure Web App. Composer will only require you to provide an Azure subscription and a resource group name, and it will take care of creating all the required resources (including bot-specific LUIS/QnA Maker instances) automatically. It may take a while, but it will save you a lot of the time and hassle of manual deployment.

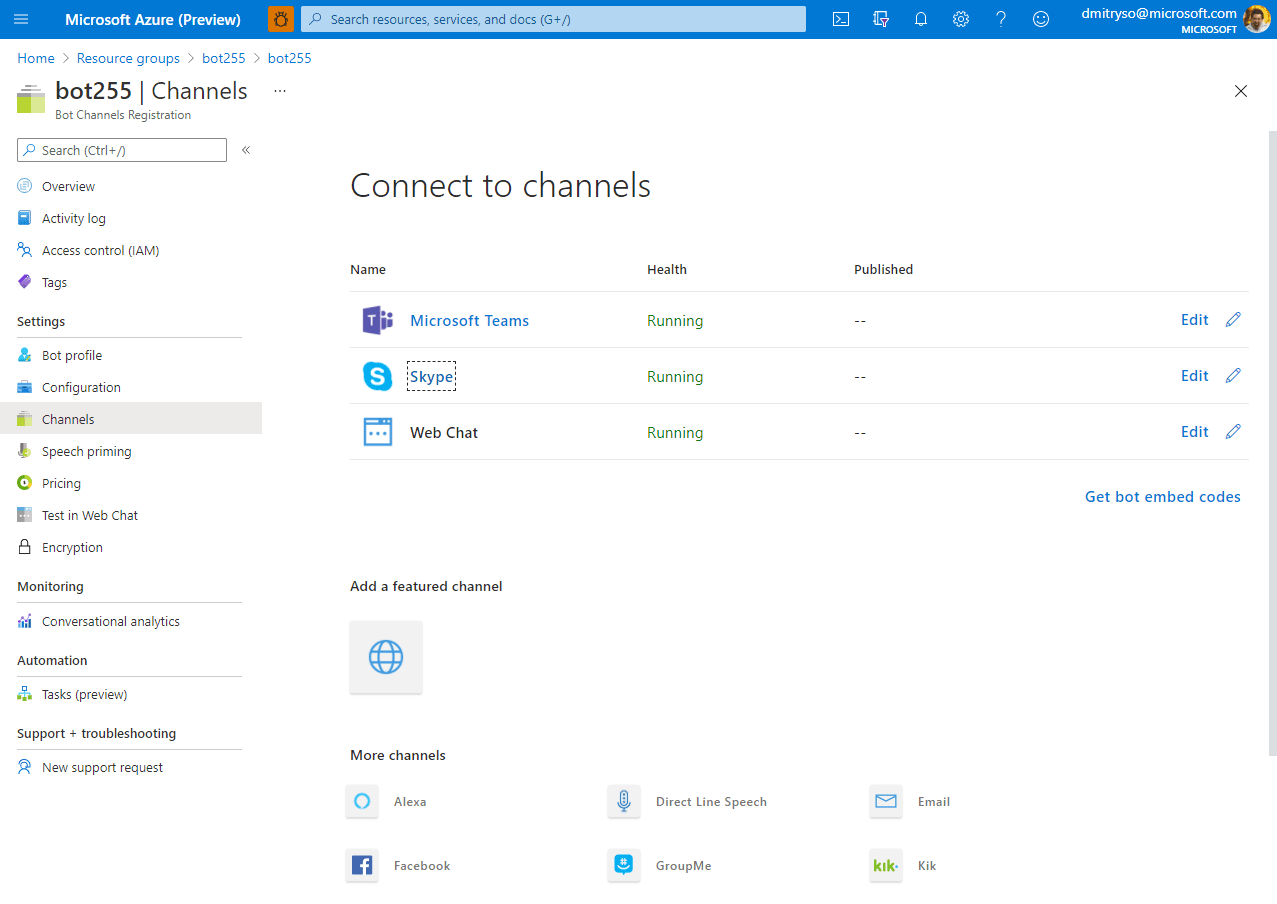

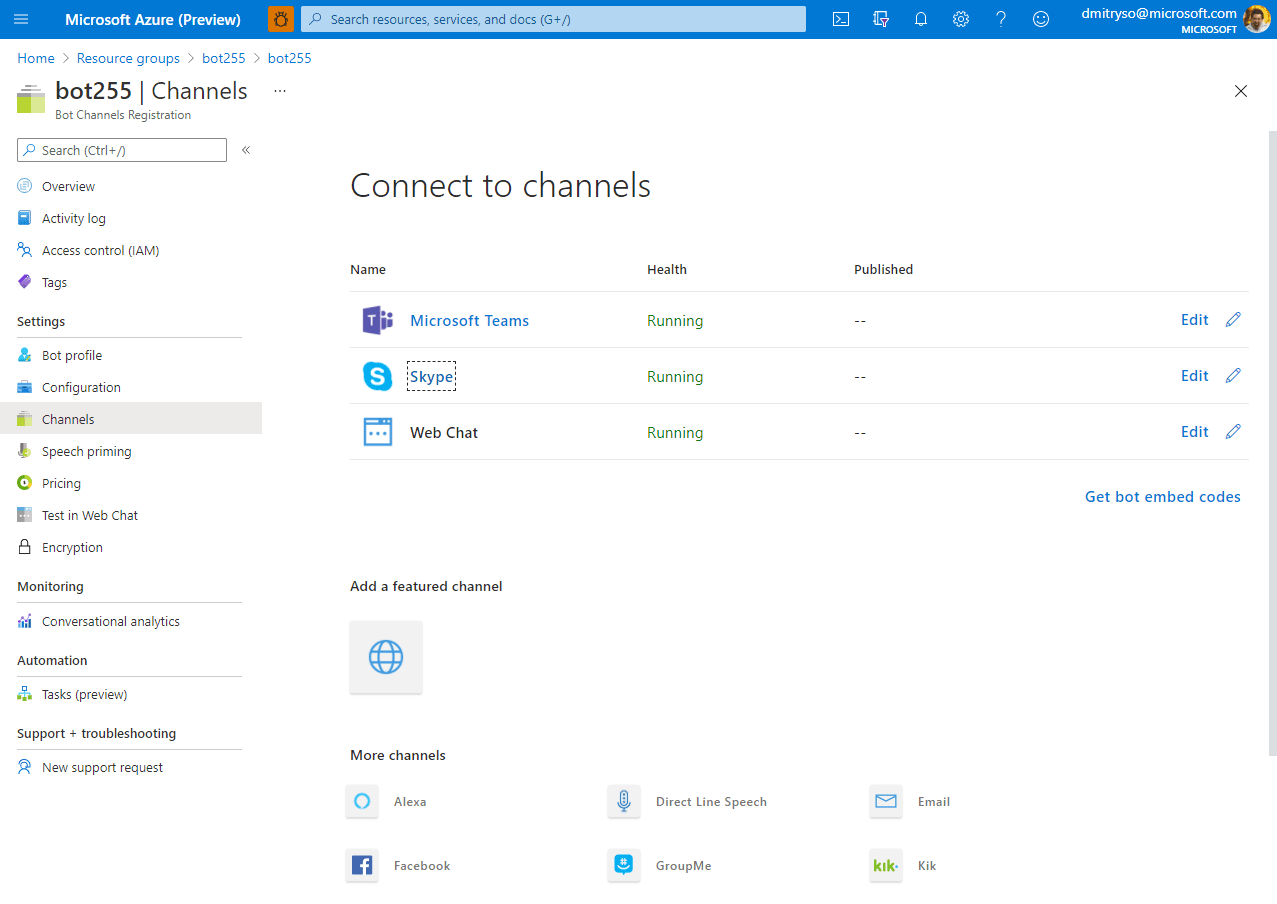

Once the bot is published to Azure, you can go to the Azure portal and configure the Channels through which your bot will be available to the outside world, such as Telegram, Microsoft Teams, Slack or Skype.

Conclusion

Creating a bot using Bot Composer seems like an easy thing to do. In fact, you can create quite powerful bots almost without any code! And you can also hook them up to your enterprise endpoints using features such as HTTP REST APIs and OAuth authorization.

However, there are cases when you need to significantly extend bot functionality using code. In this case, you have several options:

- Keep main bot authoring in Bot Composer, and develop Custom Actions in C#

- Export the complete bot code in C# or JavaScript using the Custom Runtime feature of Composer, and then fully customize it. This approach is not ideal, because you will lose the ability to maintain the source of your bot in Composer.

- Write a bot from the beginning in one of the supported languages (C#, JS, Python or Java) using Bot Framework.

If you want to explore how the same Educational bot for Geography can be written in C#, check out this Microsoft Learn Module: Create a chat bot to help students learn with Azure Bot Service.

I am sure a conversational approach to UI can prove useful in many cases, and the Microsoft Conversational Platform offers you a wide variety of tools to support all your scenarios.

by Contributed | Feb 17, 2021 | Technology

On November 10, 2020, Microsoft announced the general availability of Microsoft Endpoint DLP (Data Loss Prevention). Endpoint DLP is a native integrated experience that identifies and protects sensitive information accessed by information workers in the applications they use every day. It is part of Microsoft Information Protection, an intelligent, unified, and extensible solution to know your data, protect your data, and prevent data loss across all the touchpoints within an enterprise – including Microsoft 365 apps and services, on-premises file stores, endpoint devices, and third-party SaaS applications and services.

Seamless Endpoint DLP onboarding for Microsoft Defender for Endpoint customers

As a Microsoft Defender for Endpoint customer, you can take advantage of a seamless onboarding to Endpoint DLP.

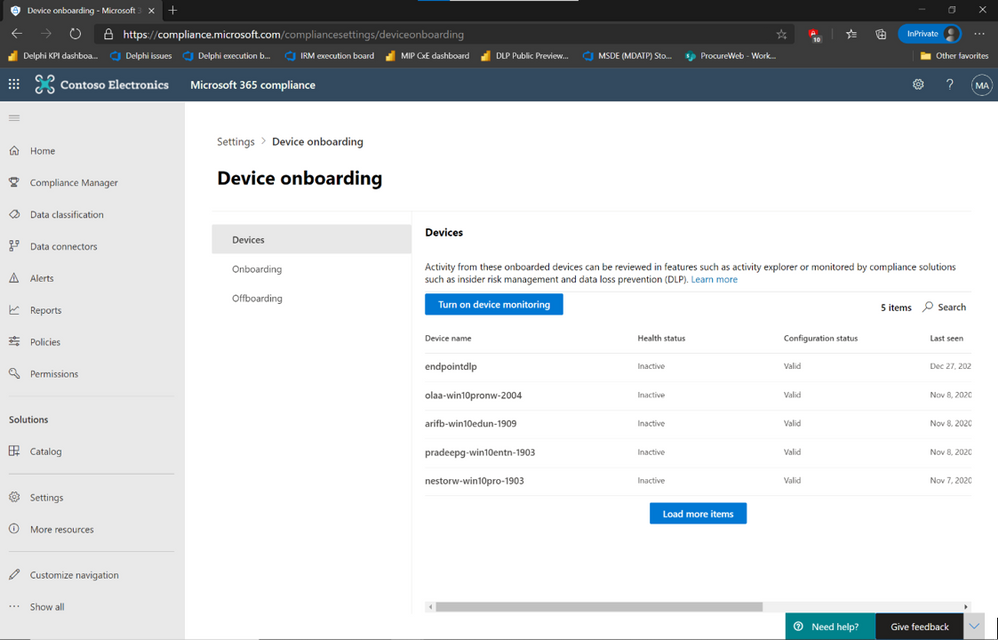

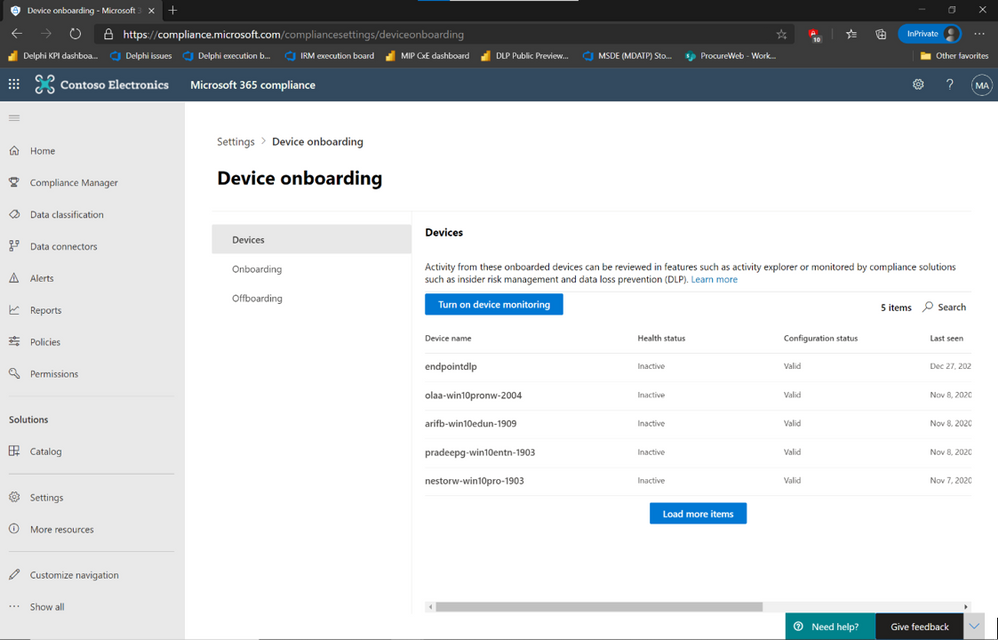

If you own the required licenses, all it takes is a single click in the device onboarding section of the Microsoft 365 Compliance portal to enable Endpoint DLP across all your Windows 10 devices that are onboarded to Defender for Endpoint.

If you don’t own the appropriate license, we encourage you to try out our Endpoint DLP capabilities by signing up for a free trial of Microsoft 365 E5 Compliance.

For more information, read our official documentation on Endpoint DLP onboarding.

Once a device is onboarded, Endpoint DLP automatically provides telemetry information and data discovery capabilities for sensitive data out of the box. Without requiring any policy configuration, Endpoint DLP monitors sensitive data in Office 365, PDF, and CSV files for file access, copy, paste, print, saving to removable media or file shares, and uploads via browsers. Endpoint DLP also analyzes files for sensitivity-related information by parsing the file content, extracting sensitive information types, and reading the assigned sensitivity label, if one exists. This telemetry is available in Activity Explorer, alongside similar telemetry from other Microsoft workloads.

This telemetry data provides a direct view into information workers’ regular interactions with sensitive information. It can be used to streamline the identification and deployment of the DLP policies that would most improve the organization’s overall security posture by reducing the risk of sensitive data loss.

Deprecating Azure Information Protection integration with Microsoft Defender for Endpoint (preview)

Microsoft Defender for Endpoint has an integration with Azure Information Protection (AIP) that shares sensitive data user activity and device risk data. This information is stored in the Log Analytics workspace and is displayed in the AIP Analytics screens, along with the other AIP audit logs. This integration has been available to customers as part of a Public Preview.

Endpoint DLP incorporates an improved discovery and protection solution for sensitive data stored on endpoint devices that facilitates greater visibility and integration between solutions. On March 29, 2021, the current integration between Microsoft Defender for Endpoint and AIP will be deprecated. Existing Microsoft Defender for Endpoint customers who have been using the Public Preview of the AIP integration are encouraged to move to Endpoint DLP and enjoy the improved security capabilities and activity visibility. For more information about Endpoint DLP, please read our documentation as well as our announcement blog.

The integration is controlled by an on/off toggle in the Microsoft Defender Security Center under Settings -> Advanced features:

Once the integration is deprecated, this setting will be removed, and Microsoft Defender for Endpoint will no longer forward signals to Azure Information Protection.

If you haven’t yet tried out Endpoint DLP, sign up for a free trial in the Microsoft 365 admin center.

If you’re not yet taking advantage of Microsoft’s industry-leading optics and endpoint security capabilities, sign up for a free trial of Microsoft Defender for Endpoint today.

Additional resources:

- For more information on Data Loss Prevention, please see our blog and our documentation

- For videos on Microsoft’s Unified DLP approach and Endpoint DLP, watch the following:

- For more information on DLP alerts and event management, check out the documentation
