by Scott Muniz | Jan 4, 2021 | Security, Technology

This article is contributed.

aminocom — cpe_wan_management_protocol

Command Injection in the CPE WAN Management Protocol (CWMP) registration in Amino Communications AK45x series, AK5xx series, AK65x series, Aria6xx series, Aria7/AK7Xx series and Kami7B allows man-in-the-middle attackers to execute arbitrary commands with root level privileges. |

2020-12-30 |

not yet calculated |

CVE-2020-10209

MISC |

aminocom — entonewebengine

Command Injection in EntoneWebEngine in Amino Communications AK45x series, AK5xx series, AK65x series, Aria6xx series, Aria7/AK7Xx series and Kami7B allows authenticated remote attackers to execute arbitrary commands with root user privileges. |

2020-12-30 |

not yet calculated |

CVE-2020-10208

MISC |

aminocom — entonewebengine

Use of Hard-coded Credentials in EntoneWebEngine in Amino Communications AK45x series, AK5xx series, AK65x series, Aria6xx series, Aria7/AK7Xx series and Kami7B allows remote attackers to retrieve and modify the device settings. |

2020-12-29 |

not yet calculated |

CVE-2020-10207

MISC |

aminocom — ssh_keys

Because of hard-coded SSH keys for the root user in Amino Communications AK45x series, AK5xx series, AK65x series, Aria6xx series, Aria7/AK7Xx series and Kami7B, an attacker may remotely log in through SSH. |

2020-12-29 |

not yet calculated |

CVE-2020-10210

MISC |

aminocom — vncserver

Use of a Hard-coded Password in VNCserver in Amino Communications AK45x series, AK5xx series, AK65x series, Aria6xx series, Aria7/AK7Xx series and Kami7B allows local attackers to view and interact with the video output of the device. |

2020-12-30 |

not yet calculated |

CVE-2020-10206

MISC |

arista — eos

An issue with ARP packets in Arista’s EOS on the 7800R3, 7500R3, and 7280R3 series of products may cause a kernel crash, followed by a device reload. The affected Arista EOS versions are: 4.24.2.4F and below releases in the 4.24.x train; 4.23.4M and below releases in the 4.23.x train; 4.22.6M and below releases in the 4.22.x train. |

2020-12-28 |

not yet calculated |

CVE-2020-24360

CONFIRM |

arista — eos

In EVPN VxLAN setups in Arista EOS, specific malformed packets can lead to incorrect MAC to IP bindings and as a result packets can be incorrectly forwarded across VLAN boundaries. This can result in traffic being discarded on the receiving VLAN. This affects versions: 4.21.12M and below releases in the 4.21.x train; 4.22.7M and below releases in the 4.22.x train; 4.23.5M and below releases in the 4.23.x train; 4.24.2F and below releases in the 4.24.x train. |

2020-12-28 |

not yet calculated |

CVE-2020-26569

CONFIRM |

arista — eos

In Arista EOS, malformed packets can be incorrectly forwarded across VLAN boundaries in one direction. This vulnerability can only be exploited with unidirectional traffic (e.g., UDP), not with bidirectional traffic (e.g., TCP). This affects: EOS 7170 platforms version 4.21.4.1F and below releases in the 4.21.x train; EOS X-Series versions 4.21.11M and below releases in the 4.21.x train; 4.22.6M and below releases in the 4.22.x train; 4.23.4M and below releases in the 4.23.x train; 4.24.2.1F and below releases in the 4.24.x train. |

2020-12-28 |

not yet calculated |

CVE-2020-15898

CONFIRM |

bolt — bolt

Bolt before 3.7.2 does not restrict filter options in a Request in the Twig context, and is therefore inconsistent with the “How to Harden Your PHP for Better Security” guidance. |

2020-12-30 |

not yet calculated |

CVE-2020-28925

MISC

MISC |

cbor — cbor

An issue was discovered in the serde_cbor crate before 0.10.2 for Rust. The CBOR deserializer can cause stack consumption via nested semantic tags. |

2020-12-31 |

not yet calculated |

CVE-2019-25001

MISC |
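
The description points to unbounded recursion: each nested semantic tag costs the deserializer another stack frame. A common mitigation is a nesting-depth limit. The Python sketch below illustrates that idea on a tiny CBOR subset (small unsigned integers and one-byte tag headers only). It is not serde_cbor's code, and `MAX_DEPTH`, `decode`, and `NestingTooDeep` are illustrative names with an arbitrary limit.

```python
MAX_DEPTH = 128  # illustrative limit, not taken from serde_cbor


class NestingTooDeep(ValueError):
    """Raised when semantic tags are nested more deeply than MAX_DEPTH."""


def decode(data: bytes, pos: int = 0, depth: int = 0):
    """Decode one item from a tiny CBOR subset and return (value, next_pos).

    Supported: unsigned integers 0..23 (major type 0) and semantic tags with
    numbers 0..23 (major type 6). A tagged item decodes to (tag, value).
    """
    if depth > MAX_DEPTH:
        raise NestingTooDeep(f"nesting exceeds {MAX_DEPTH} levels")
    if pos >= len(data):
        raise ValueError("truncated input")
    major, info = data[pos] >> 5, data[pos] & 0x1F
    if major == 0 and info < 24:
        return info, pos + 1
    if major == 6 and info < 24:
        # Each tag adds one level of recursion; the depth check above bounds
        # stack use no matter how many tags an attacker chains together.
        inner, end = decode(data, pos + 1, depth + 1)
        return (info, inner), end
    raise ValueError("item type not supported by this sketch")
```

For example, the bytes `C1 05` (tag 1 wrapping the integer 5) decode to `((1, 5), 2)`, while a long run of `C0` bytes stops with `NestingTooDeep` instead of exhausting the stack.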

charging_limit_current_write — charging_limit_current_write

The functions charging_limit_current_write and charging_limit_time_write in /SM8250_Q_Master/android/vendor/oppo_charger/oppo/oppo_charger.c do not check their parameters, which causes a vulnerability. |

2020-12-31 |

not yet calculated |

CVE-2020-11832

MISC |

cockpit-project.org — cockpit_234

** DISPUTED ** An SSRF issue was discovered in cockpit-project.org Cockpit 234. NOTE: this is unrelated to the Agentejo Cockpit product. NOTE: the vendor states “I don’t think [it] is a big real-life issue.” |

2020-12-30 |

not yet calculated |

CVE-2020-35850

MISC

MISC |

d-link — dap-1650_devices

An issue was discovered on D-Link DAP-1650 devices through v1.03b07 before 1.04B02_J65H Hot Fix. Attackers can bypass authentication via forceful browsing. |

2020-12-30 |

not yet calculated |

CVE-2019-12768

MISC |

dotcms — dotcms

dotCMS before 20.10.1 allows SQL injection, as demonstrated by the /api/v1/containers orderby parameter. The PaginatorOrdered classes that are used to paginate the results of REST endpoints do not sanitize the orderBy parameter, and in some cases this makes them vulnerable to SQL injection attacks. A user must be an authenticated manager in the dotCMS system to exploit this vulnerability. |

2020-12-30 |

not yet calculated |

CVE-2020-27848

CONFIRM

MISC |
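
Column names cannot be passed as bound query parameters, which is why a sort parameter such as orderBy is a common SQL injection vector. One conventional defense is to map the user-supplied sort key onto a fixed allowlist of columns. The Python/sqlite3 sketch below assumes a hypothetical `containers` table and hypothetical sort keys; it does not reflect dotCMS's schema or its actual fix.

```python
import sqlite3

# Hypothetical mapping from API sort keys to trusted column names.
ALLOWED_ORDER_BY = {"title": "title", "modDate": "mod_date"}


def list_containers(conn: sqlite3.Connection, order_by: str, direction: str = "asc"):
    # The user-supplied value is only used as a lookup key; the SQL text is
    # built from allowlisted column names and a fixed ASC/DESC keyword.
    column = ALLOWED_ORDER_BY.get(order_by)
    if column is None:
        raise ValueError(f"unsupported orderby value: {order_by!r}")
    dir_sql = "DESC" if direction.lower() == "desc" else "ASC"
    sql = f"SELECT title FROM containers ORDER BY {column} {dir_sql}"
    return [row[0] for row in conn.execute(sql)]
```

A payload such as `title; DROP TABLE containers` is not in the allowlist, so it is rejected before any SQL is built.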

draytek — vigor2960

DrayTek Vigor2960 1.5.1 allows remote command execution via shell metacharacters in a toLogin2FA action to mainfunction.cgi. |

2020-12-31 |

not yet calculated |

CVE-2020-19664

MISC

MISC |
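
Shell metacharacters only matter when a string is handed to a shell. A minimal Python sketch of the usual alternative is below: pass an argument list to `subprocess.run` and leave `shell=True` unset. The child process is a stand-in Python one-liner that echoes its argument. It is not anything from the Vigor2960 firmware, and `run_helper` is a hypothetical name.

```python
import subprocess
import sys


def run_helper(user_value: str) -> str:
    # With an argument list and no shell, characters such as ";", "|" or
    # "$(...)" reach the child process as literal text and are never parsed
    # as commands.
    result = subprocess.run(
        [sys.executable, "-c", "import sys; print(sys.argv[1])", user_value],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.rstrip("\n")
```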

dropbear — dropbear

Dropbear 2011.54 through 2018.76 has an inconsistent failure delay that may lead to revealing valid usernames, a different issue than CVE-2018-15599. |

2020-12-30 |

not yet calculated |

CVE-2019-12953

MISC |
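
Timing-based username enumeration works when a failed login for an unknown user takes a different amount of time than a wrong password for a real user. One way to remove that signal is to pad every failure to the same minimum duration. This Python sketch assumes a hypothetical in-memory credential store and an arbitrary 0.25-second floor. It is not Dropbear's implementation.

```python
import time

FAILURE_FLOOR_SECONDS = 0.25  # illustrative value


def check_login(username, password, users, floor=FAILURE_FLOOR_SECONDS):
    start = time.monotonic()
    # Toy credential check; real code would compare password hashes.
    ok = users.get(username) == password
    if not ok:
        # Pad every failure to the same minimum duration so that an unknown
        # user and a wrong password for a known user take equally long.
        remaining = floor - (time.monotonic() - start)
        if remaining > 0:
            time.sleep(remaining)
    return ok
```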

drupal — aes_encryption

The AES encryption project 7.x and 8.x for Drupal does not sufficiently prevent attackers from decrypting data, aka SA-CONTRIB-2017-027. NOTE: This project is not covered by Drupal’s security advisory policy. |

2021-01-01 |

not yet calculated |

CVE-2017-20001

MISC |

drupal — kcfinder_integration

uploader.php in the KCFinder integration project through 2018-06-01 for Drupal mishandles validation, aka SA-CONTRIB-2018-024. NOTE: This project is not covered by Drupal’s security advisory policy. |

2021-01-01 |

not yet calculated |

CVE-2018-25002

MISC

MISC

MISC |

drupal — rest/json

The REST/JSON project 7.x-1.x for Drupal allows comment access bypass, aka SA-CONTRIB-2016-033. NOTE: This project is not covered by Drupal’s security advisory policy. |

2021-01-01 |

not yet calculated |

CVE-2016-20002

MISC |

drupal — rest/json

The REST/JSON project 7.x-1.x for Drupal allows node access bypass, aka SA-CONTRIB-2016-033. NOTE: This project is not covered by Drupal’s security advisory policy. |

2021-01-01 |

not yet calculated |

CVE-2016-20001

MISC |

drupal — rest/json

The REST/JSON project 7.x-1.x for Drupal allows session enumeration, aka SA-CONTRIB-2016-033. NOTE: This project is not covered by Drupal’s security advisory policy. |

2021-01-01 |

not yet calculated |

CVE-2016-20008

MISC |

drupal — rest/json

The REST/JSON project 7.x-1.x for Drupal allows user enumeration, aka SA-CONTRIB-2016-033. NOTE: This project is not covered by Drupal’s security advisory policy. |

2021-01-01 |

not yet calculated |

CVE-2016-20003

MISC |

drupal — rest/json

The REST/JSON project 7.x-1.x for Drupal allows field access bypass, aka SA-CONTRIB-2016-033. NOTE: This project is not covered by Drupal’s security advisory policy. |

2021-01-01 |

not yet calculated |

CVE-2016-20004

MISC |

drupal — rest/json

The REST/JSON project 7.x-1.x for Drupal allows user registration bypass, aka SA-CONTRIB-2016-033. NOTE: This project is not covered by Drupal’s security advisory policy. |

2021-01-01 |

not yet calculated |

CVE-2016-20005

MISC |

drupal — rest/json

The REST/JSON project 7.x-1.x for Drupal allows blockage of user logins, aka SA-CONTRIB-2016-033. NOTE: This project is not covered by Drupal’s security advisory policy. |

2021-01-01 |

not yet calculated |

CVE-2016-20006

MISC |

drupal — rest/json

The REST/JSON project 7.x-1.x for Drupal allows session name guessing, aka SA-CONTRIB-2016-033. NOTE: This project is not covered by Drupal’s security advisory policy. |

2021-01-01 |

not yet calculated |

CVE-2016-20007

MISC |

drupal — webform_report

The Webform Report project 7.x-1.x-dev for Drupal allows remote attackers to view submissions by visiting the /rss.xml page. NOTE: This project is not covered by Drupal’s security advisory policy. |

2021-01-01 |

not yet calculated |

CVE-2019-25012

MISC |

egavilanmedia — egavilanmedia

EGavilanMedia User Registration and Login System With Admin Panel 1.0 is affected by cross-site scripting (XSS) in the Admin Panel – Manage User tab via the Full Name of the user. An attacker can inject the XSS payload in the User Registration section; each time the admin visits the Manage User section of the admin panel, the XSS triggers, and the attacker can steal the cookie, depending on the crafted payload. |

2020-12-30 |

not yet calculated |

CVE-2020-29230

MISC

MISC |

egavilanmedia — egavilanmedia

EGavilanMedia User Registration and Login System With Admin Panel 1.0 is affected by cross-site scripting (XSS) in the Admin Profile Page. An attacker can inject the XSS payload in the Admin Full Name field, and each time the admin visits the Profile page from the admin panel, the XSS triggers. |

2020-12-30 |

not yet calculated |

CVE-2020-29231

MISC

MISC |

egavilanmedia — egavilanmedia

EGavilanMedia User Registration and Login System With Admin Panel 1.0 is affected by SQL injection in the User Login Page. |

2020-12-30 |

not yet calculated |

CVE-2020-29228

MISC

MISC |

electron — zonote

zonote through 0.4.0 allows XSS via a crafted note, with resultant Remote Code Execution (because nodeIntegration in webPreferences is true). |

2021-01-01 |

not yet calculated |

CVE-2020-35717

MISC

MISC

MISC |

exponentcms — exponent_cms

Exponent CMS before 2.6.0 has improper input validation in cron/find_help.php. |

2020-12-31 |

not yet calculated |

CVE-2016-9023

MISC

MISC |

exponentcms — exponent_cms

Exponent CMS before 2.6.0 has improper input validation in storeController.php. |

2020-12-31 |

not yet calculated |

CVE-2016-9021

MISC

MISC |

exponentcms — exponent_cms

Exponent CMS before 2.6.0 has improper input validation in usersController.php. |

2020-12-31 |

not yet calculated |

CVE-2016-9022

MISC

MISC |

exponentcms — exponent_cms

Exponent CMS before 2.6.0 has improper input validation in purchaseOrderController.php. |

2020-12-31 |

not yet calculated |

CVE-2016-9025

MISC

MISC |

exponentcms — exponent_cms

Exponent CMS before 2.6.0 has improper input validation in fileController.php. |

2020-12-31 |

not yet calculated |

CVE-2016-9026

MISC

MISC |

foxitsoftware — foxit_reader

An issue was discovered in Foxit Reader before 10.1.1 (and before 4.1.1 on macOS) and PhantomPDF before 9.7.5 and 10.x before 10.1.1 (and before 4.1.1 on macOS). An attacker can spoof a certified PDF document via an Evil Annotation Attack because the products fail to consider a null value for a Subtype entry of the Annotation dictionary, in an incremental update. |

2020-12-31 |

not yet calculated |

CVE-2020-35931

MISC |

golang — docker_engine

util/binfmt_misc/check.go in Builder in Docker Engine before 19.03.9 calls os.OpenFile with a potentially unsafe qemu-check temporary pathname, constructed with an empty first argument in an ioutil.TempDir call. |

2020-12-30 |

not yet calculated |

CVE-2020-27534

MISC

MISC

MISC

MISC

MISC |

golang — go

In x/text in Go 1.15.4, an “index out of range” panic occurs in language.ParseAcceptLanguage while parsing the -u- extension. (x/text/language is supposed to be able to parse an HTTP Accept-Language header.) |

2021-01-02 |

not yet calculated |

CVE-2020-28851

MISC |

golang — go

In x/text in Go 1.15.4, a “slice bounds out of range” panic occurs in language.ParseAcceptLanguage while processing a BCP 47 tag. (x/text/language is supposed to be able to parse an HTTP Accept-Language header.) |

2021-01-02 |

not yet calculated |

CVE-2020-28852

MISC |

google — flatbuffers

An issue was discovered in the flatbuffers crate before 0.6.1 for Rust. Arbitrary bytes can be reinterpreted as a bool, defeating soundness. |

2020-12-31 |

not yet calculated |

CVE-2019-25004

MISC |
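
In Rust, a `bool` whose underlying byte is anything other than 0 or 1 is undefined behavior. That is why reinterpreting arbitrary bytes as a bool defeats soundness. A defensive decoder validates the byte before converting it. The Python sketch below shows only that check; `read_bool` is a hypothetical helper, not the flatbuffers API.

```python
def read_bool(buf: bytes, offset: int) -> bool:
    # A bool field occupies one byte, and only 0 and 1 are valid encodings.
    # Any other value is rejected instead of being treated as a bool.
    byte = buf[offset]
    if byte not in (0, 1):
        raise ValueError(f"invalid bool byte 0x{byte:02x} at offset {offset}")
    return byte == 1
```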

google — flatbuffers

An issue was discovered in the flatbuffers crate through 2020-04-11 for Rust. read_scalar (and read_scalar_at) can transmute values without unsafe blocks. |

2020-12-31 |

not yet calculated |

CVE-2020-35864

MISC |

green_packet — wimax_dv-360_devices

Green Packet WiMax DV-360 2.10.14-g1.0.6.1 devices allow Command Injection, with unauthenticated remote command execution, via a crafted payload to the HTTPS port, because lighttpd listens on all network interfaces (including the external Internet) by default. NOTE: this may overlap CVE-2017-9980. |

2020-12-31 |

not yet calculated |

CVE-2018-14067

MISC |

gssproxy — gssproxy

gssproxy (aka gss-proxy) before 0.8.3 does not unlock cond_mutex before pthread exit in gp_worker_main() in gp_workers.c. |

2020-12-31 |

not yet calculated |

CVE-2020-12658

MISC

MISC

MISC |
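
The gssproxy bug pattern is a worker thread that exits while it still holds a mutex, which can leave other threads blocked on that mutex. In Python, acquiring the lock with a `with` block avoids this risk because the lock is released on every exit path. This sketch uses `threading.Condition` as a stand-in for `cond_mutex`. The queue and worker are hypothetical and do not mirror gp_worker_main().

```python
import threading

cond = threading.Condition()  # stand-in for cond_mutex plus its condition variable
work_queue = []  # hypothetical work items; None requests shutdown
processed = []


def worker():
    while True:
        with cond:
            while not work_queue:
                cond.wait()
            item = work_queue.pop(0)
            if item is None:
                # Returning from inside "with" still releases the lock, so
                # the thread never exits while holding it.
                return
        processed.append(item)  # do the real work without holding the lock
```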

hgiga — mailsherlock

The “view the source code” function of HGiga MailSherlock does not validate specific characters. Remote attackers can use this flaw to download arbitrary system files. |

2020-12-31 |

not yet calculated |

CVE-2020-25850

MISC |

hgiga — mailsherlock

HGiga MailSherlock does not properly validate specific URL parameters, which allows attackers to inject JavaScript for XSS attacks. |

2020-12-31 |

not yet calculated |

CVE-2020-35740

MISC |

hgiga — mailsherlock

HGiga MailSherlock contains a SQL injection flaw. Attackers can inject and launch SQL commands in a URL parameter of specific cgi pages. |

2020-12-31 |

not yet calculated |

CVE-2020-35743

MISC |

hgiga — mailsherlock

HGiga MailSherlock contains a SQL injection vulnerability. Attackers can inject and launch SQL commands in a URL parameter. |

2020-12-31 |

not yet calculated |

CVE-2020-35742

MISC |

hgiga — mailsherlock

HGiga MailSherlock does not validate user parameters on multiple login pages. Attackers can use the vulnerability to inject JavaScript syntax for XSS attacks. |

2020-12-31 |

not yet calculated |

CVE-2020-35741

MISC |

hgiga — mailsherlock

HGiga MailSherlock does not properly validate specific parameters. Attackers can use this vulnerability to launch command injection attacks remotely and execute arbitrary system commands. |

2020-12-31 |

not yet calculated |

CVE-2020-35851

MISC |

hgiga — mailsherlock

HGiga MailSherlock contains a weak authentication flaw: because of its default password generation mechanism, remote attackers can gain privileges. |

2020-12-31 |

not yet calculated |

CVE-2020-25848

MISC |

hyperium — http

An issue was discovered in the http crate before 0.1.20 for Rust. HeaderMap::reserve() has an integer overflow that allows attackers to cause a denial of service. |

2020-12-31 |

not yet calculated |

CVE-2019-25008

MISC |

hyperium — hyper

An issue was discovered in the hyper crate before 0.12.34 for Rust. HTTP request smuggling can occur. Remote code execution can occur in certain situations with an HTTP server on the loopback interface. |

2020-12-31 |

not yet calculated |

CVE-2020-35863

MISC |

invision_community — invision_community

Invision Community 4.5.4 is affected by cross-site scripting (XSS) in the Field Name field. An attacker can inject an XSS payload into Field Name; each time any user opens it, the XSS triggers, and the attacker may be able to steal the cookie, depending on the crafted payload. |

2020-12-30 |

not yet calculated |

CVE-2020-29477

MISC

MISC |

italoantunes — vidyo

Vidyo 02-09-/D allows clickjacking via the portal/ URI. |

2020-12-29 |

not yet calculated |

CVE-2020-35735

MISC

MISC |

jira — xwiki

XWiki Platform before 12.8 mishandles escaping in the property displayer. |

2020-12-31 |

not yet calculated |

CVE-2020-13654

MISC

CONFIRM

MISC |

libsecp256k1 — libsecp256k1

An issue was discovered in the libsecp256k1 crate before 0.3.1 for Rust. Scalar::check_overflow allows a timing side-channel attack; consequently, attackers can obtain sensitive information. |

2020-12-31 |

not yet calculated |

CVE-2019-25003

MISC |

limesurvey — limesurvey

LimeSurvey 3.21.1 is affected by cross-site scripting (XSS) in the Quota component of the Survey page. When the survey quota is viewed, e.g., by an administrative user, the JavaScript code is executed in the browser. |

2020-12-31 |

not yet calculated |

CVE-2020-25799

MISC

MISC |

limesurvey — limesurvey

LimeSurvey 3.21.1 is affected by cross-site scripting (XSS) in the Add Participants function (first and last name parameters). When the survey participant is edited, e.g., by an administrative user, the JavaScript code is executed in the browser. |

2020-12-31 |

not yet calculated |

CVE-2020-25797

MISC

MISC |

linbit — csync2

An issue was discovered in LINBIT csync2 through 2.0. It does not correctly check for the return value GNUTLS_E_WARNING_ALERT_RECEIVED of the gnutls_handshake() function. It neglects to call this function again, as required by the design of the API. |

2020-12-30 |

not yet calculated |

CVE-2019-15523

MISC |

mantisbt — mantisbt

An issue was discovered in MantisBT before 2.24.4. An incorrect access check in bug_revision_view_page.php allows an unprivileged attacker to view the Summary field of private issues, as well as bugnotes revisions, gaining access to potentially confidential information via the bugnote_id parameter. |

2020-12-30 |

not yet calculated |

CVE-2020-35849

MISC |

mantisbt — mantisbt

In MantisBT 2.24.3, SQL injection can occur in the “access” parameter of the mc_project_get_users function through the SOAP API. |

2020-12-30 |

not yet calculated |

CVE-2020-28413

MISC |

matrixssl — matrixssl

In MatrixSSL before 4.2.2 Open, the DTLS server can encounter an invalid pointer free (leading to memory corruption and a daemon crash) via a crafted incoming network message, a different vulnerability than CVE-2019-14431. |

2020-12-30 |

not yet calculated |

CVE-2019-16747

CONFIRM

MISC

CONFIRM |

nayutaco — ptarmigan

Ptarmigan before 0.2.3 lacks API token validation, e.g., an “if (token === apiToken) {return true;} return false;” code block. |

2020-12-30 |

not yet calculated |

CVE-2019-16281

MISC

MISC

MISC |
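
The quoted check compares the presented token with the configured one and fails closed otherwise. A Python equivalent is sketched below. It also uses `hmac.compare_digest` for a constant-time comparison and rejects empty tokens; both of these go beyond what the advisory text states. `is_valid_token` is a hypothetical name.

```python
import hmac


def is_valid_token(presented: str, expected: str) -> bool:
    # Reject missing or empty values explicitly, then compare in constant
    # time so response timing does not reveal how many leading bytes match.
    if not presented or not expected:
        return False
    return hmac.compare_digest(presented.encode(), expected.encode())
```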

netbox_community — netbox

NetBox through 2.6.2 allows an Authenticated User to conduct an XSS attack against an admin via a GFM-rendered field, as demonstrated by /dcim/sites/add/ comments. |

2020-12-31 |

not yet calculated |

CVE-2019-25011

MISC

MISC |

netgear — multiple_devices

Certain NETGEAR devices are affected by command injection by an unauthenticated attacker. This affects R6400v2 before 1.0.4.84, R6700v3 before 1.0.4.84, R6900P before 1.3.2.124, R7000 before 1.0.11.100, R7000P before 1.3.2.124, R7800 before 1.0.2.74, R7850 before 1.0.5.60, R7900 before 1.0.4.26, R7960P before 1.4.1.50, R8000 before 1.0.4.52, R7900P before 1.4.1.50, R8000P before 1.4.1.50, RAX15 before 1.0.1.64, RAX20 before 1.0.1.64, RAX200 before 1.0.1.12, RAX45 before 1.0.2.66, RAX50 before 1.0.2.66, RAX75 before 1.0.3.102, RAX80 before 1.0.3.102, RBK752 before 3.2.16.6, RBR750 before 3.2.16.6, RBS750 before 3.2.16.6, RBK852 before 3.2.15.25, RBR850 before 3.2.15.25, RBS850 before 3.2.15.25, RBK842 before 3.2.15.25, RBR840 before 3.2.15.25, RBS840 before 3.2.15.25, RS400 before 1.5.0.48, and XR300 before 1.0.3.50. |

2020-12-30 |

not yet calculated |

CVE-2020-35798

MISC |

netgear — multiple_devices

Certain NETGEAR devices are affected by Stored XSS. This affects D7800 before 1.0.1.56, R7500v2 before 1.0.3.46, R7800 before 1.0.2.68, R8900 before 1.0.4.28, R9000 before 1.0.4.28, XR500 before 2.3.2.56, XR700 before 1.0.1.10, and RAX120 before 1.0.0.78. |

2020-12-30 |

not yet calculated |

CVE-2020-35839

MISC |

netgear — multiple_devices

Certain NETGEAR devices are affected by stored XSS. This affects D7800 before 1.0.1.56, R7800 before 1.0.2.74, R8900 before 1.0.4.28, R9000 before 1.0.4.28, RAX120 before 1.0.0.78, RBK20 before 2.3.5.26, RBR20 before 2.3.5.26, RBS20 before 2.3.5.26, RBK40 before 2.3.5.30, RBR40 before 2.3.5.30, RBS40 before 2.3.5.30, RBK50 before 2.3.5.30, RBR50 before 2.3.5.30, RBS50 before 2.3.5.30, XR500 before 2.3.2.56, and XR700 before 1.0.1.10. |

2020-12-30 |

not yet calculated |

CVE-2020-35829

MISC |

netgear — multiple_devices

Certain NETGEAR devices are affected by stored XSS. This affects D7800 before 1.0.1.56, R7800 before 1.0.2.74, R8900 before 1.0.4.28, R9000 before 1.0.4.28, RAX120 before 1.0.0.78, RBK20 before 2.3.5.26, RBR20 before 2.3.5.26, RBS20 before 2.3.5.26, RBK40 before 2.3.5.30, RBR40 before 2.3.5.30, RBS40 before 2.3.5.30, RBK50 before 2.3.5.30, RBR50 before 2.3.5.30, RBS50 before 2.3.5.30, XR500 before 2.3.2.56, and XR700 before 1.0.1.10. |

2020-12-30 |

not yet calculated |

CVE-2020-35814

MISC |

netgear — multiple_devices

Certain NETGEAR devices are affected by stored XSS. This affects D7800 before 1.0.1.56, R7500v2 before 1.0.3.46, R7800 before 1.0.2.68, R8900 before 1.0.4.28, R9000 before 1.0.4.28, RAX120 before 1.0.0.78, RBK50 before 2.3.5.30, RBR50 before 2.3.5.30, RBS50 before 2.3.5.30, XR500 before 2.3.2.56, and XR700 before 1.0.1.10. |

2020-12-30 |

not yet calculated |

CVE-2020-35831

MISC |

netgear — multiple_devices

Certain NETGEAR devices are affected by stored XSS. This affects D7800 before 1.0.1.56, R7500v2 before 1.0.3.46, R7800 before 1.0.2.68, R8900 before 1.0.4.28, R9000 before 1.0.4.28, RAX120 before 1.0.0.78, RBK20 before 2.3.5.26, RBR20 before 2.3.5.26, RBS20 before 2.3.5.26, RBK40 before 2.3.5.30, RBR40 before 2.3.5.30, RBS40 before 2.3.5.30, RBK50 before 2.3.5.30, RBR50 before 2.3.5.30, RBS50 before 2.3.5.30, XR500 before 2.3.2.56, and XR700 before 1.0.1.10. |

2020-12-30 |

not yet calculated |

CVE-2020-35832

MISC |

netgear — multiple_devices

Certain NETGEAR devices are affected by stored XSS. This affects D7800 before 1.0.1.56, R7500v2 before 1.0.3.46, R7800 before 1.0.2.68, R8900 before 1.0.4.28, R9000 before 1.0.4.28, RAX120 before 1.0.0.78, RBK20 before 2.3.5.26, RBR20 before 2.3.5.26, RBS20 before 2.3.5.26, RBK40 before 2.3.5.30, RBR40 before 2.3.5.30, RBS40 before 2.3.5.30, RBK50 before 2.3.5.30, RBR50 before 2.3.5.30, RBS50 before 2.3.5.30, XR500 before 2.3.2.56, and XR700 before 1.0.1.10. |

2020-12-30 |

not yet calculated |

CVE-2020-35833

MISC |

netgear — multiple_devices

Certain NETGEAR devices are affected by stored XSS. This affects D7800 before 1.0.1.56, RBK50 before 2.3.5.30, RBR50 before 2.3.5.30, RBS50 before 2.3.5.30, RBK40 before 2.3.5.30, RBR40 before 2.3.5.30, RBS40 before 2.3.5.30, RBK20 before 2.3.5.26, RBR20 before 2.3.5.26, RBS20 before 2.3.5.26, XR700 before 1.0.1.10, R7500v2 before 1.0.3.46, R7800 before 1.0.2.74, R8900 before 1.0.4.28, R9000 before 1.0.4.28, XR500 before 2.3.2.56, and RAX120 before 1.0.0.78. |

2020-12-30 |

not yet calculated |

CVE-2020-35813

MISC |

netgear — multiple_devices

Certain NETGEAR devices are affected by stored XSS. This affects D6200 before 1.1.00.38, D7000 before 1.0.1.78, JNR1010v2 before 1.1.0.62, JR6150 before 1.0.1.24, JWNR2010v5 before 1.1.0.62, R6020 before 1.0.0.42, R6050 before 1.0.1.24, R6080 before 1.0.0.42, R6120 before 1.0.0.66, R6220 before 1.1.0.100, R6260 before 1.1.0.76, R6700v2 before 1.2.0.62, R6800 before 1.2.0.62, R6900v2 before 1.2.0.62, R7450 before 1.2.0.62, WNR1000v4 before 1.1.0.62, WNR2020 before 1.1.0.62, and WNR2050 before 1.1.0.62. |

2020-12-30 |

not yet calculated |

CVE-2020-35841

MISC |

netgear — multiple_devices

Certain NETGEAR devices are affected by stored XSS. This affects D7800 before 1.0.1.56, R7500v2 before 1.0.3.46, R7800 before 1.0.2.68, R8900 before 1.0.4.28, R9000 before 1.0.4.28, RAX120 before 1.0.0.78, RBK40 before 2.3.5.30, RBR40 before 2.3.5.30, RBS40 before 2.3.5.30, RBK20 before 2.3.5.26, RBR20 before 2.3.5.26, RBS20 before 2.3.5.26, RBK50 before 2.3.5.30, RBR50 before 2.3.5.30, RBS50 before 2.3.5.30, XR500 before 2.3.2.56, and XR700 before 1.0.1.10. |

2020-12-30 |

not yet calculated |

CVE-2020-35812

MISC |

netgear — multiple_devices

Certain NETGEAR devices are affected by disclosure of sensitive information. This affects D6200 before 1.1.00.40, D7000 before 1.0.1.78, R6020 before 1.0.0.46, R6080 before 1.0.0.46, R6120 before 1.0.0.72, R6220 before 1.1.0.100, R6230 before 1.1.0.100, R6260 before 1.1.0.76, R6700v2 before 1.2.0.74, R6800 before 1.2.0.74, R6900v2 before 1.2.0.74, R7450 before 1.2.0.74, AC2100 before 1.2.0.74, AC2400 before 1.2.0.74, and AC2600 before 1.2.0.74. |

2020-12-30 |

not yet calculated |

CVE-2020-35803

MISC |

netgear — multiple_devices

Certain NETGEAR devices are affected by stored XSS. This affects D7800 before 1.0.1.56, R7500v2 before 1.0.3.46, R7800 before 1.0.2.68, R8900 before 1.0.4.28, R9000 before 1.0.4.28, RAX120 before 1.0.0.78, XR500 before 2.3.2.56, and XR700 before 1.0.1.10. |

2020-12-30 |

not yet calculated |

CVE-2020-35834

MISC |

netgear — multiple_devices

Certain NETGEAR devices are affected by stored XSS. This affects D7800 before 1.0.1.56, R7500v2 before 1.0.3.46, R7800 before 1.0.2.74, R8900 before 1.0.4.28, R9000 before 1.0.4.28, RAX120 before 1.0.0.78, RBK40 before 2.3.5.30, RBR40 before 2.3.5.30, RBS40 before 2.3.5.30, RBK20 before 2.3.5.26, RBR20 before 2.3.5.26, RBS20 before 2.3.5.26, RBK50 before 2.3.5.30, RBR50 before 2.3.5.30, RBS50 before 2.3.5.30, XR500 before 2.3.2.56, and XR700 before 1.0.1.10. |

2020-12-30 |

not yet calculated |

CVE-2020-35810

MISC |

netgear — multiple_devices

Certain NETGEAR devices are affected by stored XSS. This affects D6100 before 1.0.0.63, DM200 before 1.0.0.61, R7800 before 1.0.2.52, R8900 before 1.0.4.12, R9000 before 1.0.4.12, WN3000RPv2 before 1.0.0.68, and WNR2000v5 before 1.0.0.66. |

2020-12-30 |

not yet calculated |

CVE-2020-35808

MISC |

netgear — multiple_devices

Certain NETGEAR devices are affected by stored XSS. This affects D7800 before 1.0.1.56, R7500v2 before 1.0.3.46, R7800 before 1.0.2.74, R8900 before 1.0.4.28, R9000 before 1.0.4.28, XR500 before 2.3.2.56, XR700 before 1.0.1.10, and RAX120 before 1.0.0.78. |

2020-12-30 |

not yet calculated |

CVE-2020-35836

MISC |

netgear — multiple_devices

Certain NETGEAR devices are affected by stored XSS. This affects D7800 before 1.0.1.56, R7800 before 1.0.2.68, RAX120 before 1.0.0.78, RBK22 before 2.3.5.26, RBR20 before 2.3.5.26, RBS20 before 2.3.5.26, RBK40 before 2.3.5.30, RBR40 before 2.3.5.30, RBS40 before 2.3.5.30, RBK50 before 2.3.5.30, RBR50 before 2.3.5.30, RBS50 before 2.3.5.30, and WN3000RPv2 before 1.0.0.78. |

2020-12-30 |

not yet calculated |

CVE-2020-35807

MISC |

netgear — multiple_devices

Certain NETGEAR devices are affected by stored XSS. This affects D7800 before 1.0.1.56, RBK20 before 2.3.5.26, RBR20 before 2.3.5.26, RBS20 before 2.3.5.26, RBK40 before 2.3.5.30, RBR40 before 2.3.5.30, RBS40 before 2.3.5.30, RBK50 before 2.3.5.30, RBR50 before 2.3.5.30, RBS50 before 2.3.5.30, R7800 before 1.0.2.74, R8900 before 1.0.4.28, R9000 before 1.0.4.28, XR500 before 2.3.2.56, XR700 before 1.0.1.10, RAX120 before 1.0.0.78, and R7500v2 before 1.0.3.46. |

2020-12-30 |

not yet calculated |

CVE-2020-35828

MISC |

netgear — multiple_devices

Certain NETGEAR devices are affected by stored XSS. This affects D7800 before 1.0.1.56, R7500v2 before 1.0.3.46, R7800 before 1.0.2.68, RAX120 before 1.0.0.78, RBK22 before 2.3.5.26, RBR20 before 2.3.5.26, RBS20 before 2.3.5.26, RBK40 before 2.3.5.30, RBR40 before 2.3.5.30, RBS40 before 2.3.5.30, RBK50 before 2.3.5.30, RBR50 before 2.3.5.30, RBS50 before 2.3.5.30, and WN3000RPv2 before 1.0.0.78. |

2020-12-30 |

not yet calculated |

CVE-2020-35806

MISC |

netgear — multiple_devices

Certain NETGEAR devices are affected by stored XSS. This affects D7800 before 1.0.1.56, R7500v2 before 1.0.3.46, R7800 before 1.0.2.74, R8900 before 1.0.4.28, R9000 before 1.0.4.28, RAX120 before 1.0.0.78, RBR20 before 2.3.5.26, RBR20 before 2.3.5.26, RBS20 before 2.3.5.26, RBK40 before 2.3.5.30, RBR40 before 2.3.5.30, RBS40 before 2.3.5.30, RBK50 before 2.3.5.30, RBR50 before 2.3.5.30, RBS50 before 2.3.5.30, XR500 before 2.3.2.56, and XR700 before 1.0.1.10. |

2020-12-30 |

not yet calculated |

CVE-2020-35818

MISC |

netgear — multiple_devices

Certain NETGEAR devices are affected by stored XSS. This affects D7800 before 1.0.1.56, R7500v2 before 1.0.3.46, R7800 before 1.0.2.68, R8900 before 1.0.4.28, R9000 before 1.0.4.28, RAX120 before 1.0.0.78, RBK20 before 2.3.5.26, RBR20 before 2.3.5.26, RBS20 before 2.3.5.26, RBK40 before 2.3.5.30, RBR40 before 2.3.5.30, RBS40 before 2.3.5.30, RBK50 before 2.3.5.30, RBR50 before 2.3.5.30, RBS50 before 2.3.5.30, XR500 before 2.3.2.56, and XR700 before 1.0.1.10. |

2020-12-30 |

not yet calculated |

CVE-2020-35811

MISC |

newgen — egov

In Correspondence Management System (corms) in Newgen eGov 12.0, an attacker can modify other users’ profile information by manipulating the unvalidated UserIndex parameter, aka Insecure Direct Object Reference. |

2020-12-30 |

not yet calculated |

CVE-2020-35737

MISC |
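
Insecure Direct Object Reference means the server trusts an identifier from the request (here UserIndex) without checking that the caller is allowed to act on it. The Python sketch below shows the missing ownership check. `Session`, `update_profile`, and the admin override are hypothetical and are not taken from Newgen eGov.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """Hypothetical server-side session, established at login."""
    user_index: int
    is_admin: bool = False


def update_profile(session: Session, target_user_index: int, changes: dict, profiles: dict) -> None:
    # Never trust the client-supplied UserIndex on its own: the caller must
    # own the record or hold an explicit admin role.
    if target_user_index != session.user_index and not session.is_admin:
        raise PermissionError("not allowed to modify another user's profile")
    profiles[target_user_index].update(changes)
```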

nhiservisignadapter — nhiservisignadapter

NHIServiSignAdapter does not verify the length of the path to digital credential files, which leads to a heap overflow. Remote attackers can exploit this flaw to execute code without privileges. |

2020-12-31 |

not yet calculated |

CVE-2020-25843

MISC |

nhiservisignadapter — nhiservisignadapter

The digest generation function of NHIServiSignAdapter does not verify the length of its parameters, which leads to a stack overflow. Remote attackers can exploit this flaw to execute code without privileges. |

2020-12-31 |

not yet calculated |

CVE-2020-25844

MISC |

nhiservisignadapter — nhiservisignadapter

Multiple functions of NHIServiSignAdapter do not verify the file path supplied by users, which allows SMB requests to be redirected to a malicious host and leaks the user’s credentials. |

2020-12-31 |

not yet calculated |

CVE-2020-25845

MISC |
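
On Windows, opening a UNC path such as `\\host\share\file` can make the client connect to that host over SMB and try to authenticate. This is how an unvalidated path can leak credentials. A minimal Python check that rejects UNC paths before any file is opened is sketched below. `is_unc_path` is a hypothetical helper, and a real fix would likely also restrict paths to expected locations.

```python
from pathlib import PureWindowsPath


def is_unc_path(path: str) -> bool:
    # PureWindowsPath reports "\\host\share" as the drive of a UNC path and
    # normalizes forward slashes, so "//host/share" is caught as well.
    return PureWindowsPath(path).drive.startswith("\\\\")
```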

nhiservisignadapter — nhiservisignadapter

The digest generation function of NHIServiSignAdapter does not verify the source file path, which allows SMB requests to be redirected to a malicious host and leaks the user’s credentials. |

2020-12-31 |

not yet calculated |

CVE-2020-25846

MISC |

nhiservisignadapter — nhiservisignadapter

The encryption function of NHIServiSignAdapter does not verify the file path input by users. Remote attackers can exploit this flaw to access arbitrary files without privileges. |

2020-12-31 |

not yet calculated |

CVE-2020-25842

MISC |
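
A common defense against arbitrary file access is to resolve the user-supplied path and require the result to stay inside an expected base directory. This Python sketch assumes such a base directory exists. `resolve_inside` is a hypothetical helper, not part of NHIServiSignAdapter.

```python
import os


def resolve_inside(base_dir: str, user_path: str) -> str:
    # Resolve symlinks and ".." first, then require the result to stay under
    # base_dir. Absolute paths replace base in os.path.join, so they fail too.
    base = os.path.realpath(base_dir)
    target = os.path.realpath(os.path.join(base, user_path))
    try:
        inside = os.path.commonpath([base, target]) == base
    except ValueError:  # e.g. paths on different drives on Windows
        inside = False
    if not inside:
        raise PermissionError(f"path escapes {base_dir!r}: {user_path!r}")
    return target
```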

nokogiri — nokogiri

Nokogiri is a Rubygem providing HTML, XML, SAX, and Reader parsers with XPath and CSS selector support. In Nokogiri before version 1.11.0.rc4 there is an XXE vulnerability. XML Schemas parsed by Nokogiri::XML::Schema are trusted by default, allowing external resources to be accessed over the network, potentially enabling XXE or SSRF attacks. This behavior is counter to the security policy followed by Nokogiri maintainers, which is to treat all input as untrusted by default whenever possible. This is fixed in Nokogiri version 1.11.0.rc4. |

2020-12-30 |

not yet calculated |

CVE-2020-26247

MISC

MISC

CONFIRM

MISC

MISC |

npmjs — parse_server

Parse Server is an open source backend that can be deployed to any infrastructure that can run Node.js. It is an npm package “parse-server”. In Parse Server before version 4.5.0, user passwords involved in LDAP authentication are stored in cleartext. This is fixed in version 4.5.0 by stripping password after authentication to prevent cleartext password storage. |

2020-12-30 |

not yet calculated |

CVE-2020-26288

MISC

MISC

CONFIRM

MISC |

npmjs — uri.js

|

URI.js is a JavaScript URL mutation library (npm package urijs). In URI.js before version 1.19.4, the hostname can be spoofed by using a backslash (`\`) character followed by an at (`@`) character. If the hostname is used in security decisions, the decision may be incorrect. Depending on library usage and attacker intent, impacts may include allow/block list bypasses, SSRF attacks, open redirects, or other undesired behavior. For example, the URL `https://expected-example.com\@observed-example.com` will incorrectly return `observed-example.com` if using an affected version. Patched versions correctly return `expected-example.com`. Patched versions match the behavior of other parsers which implement the WHATWG URL specification, including web browsers and Node’s built-in URL class. Version 1.19.4 is patched against all known payload variants. Version 1.19.3 has a partial patch but is still vulnerable to a payload variant. |

2020-12-31 |

not yet calculated |

CVE-2020-26291

MISC

MISC

CONFIRM

MISC |
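The difference comes down to where the authority (userinfo, host, port) ends. Parsers that follow the WHATWG URL specification treat `\` like `/` in URLs with special schemes such as `https`. The authority therefore stops at the backslash, and the `@` after it becomes part of the path. The Python sketch below contrasts the two behaviors. It is a deliberately simplified, hypothetical host extractor (`extract_host` is not URI.js code), and it ignores IPv6 literals, percent-encoding, and the many other details a real parser handles.

```python
def extract_host(url: str, treat_backslash_as_slash: bool) -> str:
    """Return the host of an absolute URL (hypothetical, simplified helper)."""
    _, sep, rest = url.partition("://")
    if not sep:
        raise ValueError("not an absolute URL")
    # Characters that terminate the authority component.
    terminators = "/?#" + ("\\" if treat_backslash_as_slash else "")
    end = len(rest)
    for i, ch in enumerate(rest):
        if ch in terminators:
            end = i
            break
    authority = rest[:end]
    # Userinfo, if present, precedes the last "@" in the authority.
    host_port = authority.rpartition("@")[2]
    # Drop a trailing ":port" (IPv6 literals are not handled here).
    return host_port.partition(":")[0]


spoof = "https://expected-example.com\\@observed-example.com"
print(extract_host(spoof, treat_backslash_as_slash=False))  # observed-example.com
print(extract_host(spoof, treat_backslash_as_slash=True))   # expected-example.com
```

Security decisions such as allow-list checks should rely on the host returned by a specification-conforming parser, not on a hand-rolled split like the naive branch above.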

npmjs — vega

|

Vega is a visualization grammar, a declarative format for creating, saving, and sharing interactive visualization designs. Vega is an npm package. In Vega before version 5.17.3 there is an XSS vulnerability in Vega expressions. Through a specially crafted Vega expression, an attacker could execute arbitrary JavaScript on a victim’s machine. This is fixed in version 5.17.3. |

2020-12-30 |

not yet calculated |

CVE-2020-26296

MISC

MISC

MISC

CONFIRM

MISC |

| nukeviet — nukeviet |

modules/banners/funcs/click.php in NukeViet before 4.3.04 has a SQL INSERT statement with raw header data from an HTTP request (e.g., Referer and User-Agent). |

2020-12-31 |

not yet calculated |

CVE-2019-7726

MISC

MISC

CONFIRM

MISC |

nukeviet — nukeviet

|

includes/core/is_user.php in NukeViet before 4.3.04 deserializes the untrusted nvloginhash cookie (i.e., the code relies on PHP’s serialization format when JSON can be used to eliminate the risk). |

2020-12-31 |

not yet calculated |

CVE-2019-7725

MISC

MISC

CONFIRM

CONFIRM |

openemr — openemr

|

OpenEMR 5.0.1.3 allows Cross-Site Request Forgery (CSRF) via library/ajax and interface/super, as demonstrated by use of interface/super/manage_site_files.php to upload a .php file. |

2020-12-31 |

not yet calculated |

CVE-2018-16795

MISC

MISC |

oppo_charger — sm8250_q_master

|

In /SM8250_Q_Master/android/vendor/oppo_charger/oppo/charger_ic/oppo_da9313.c, the function proc_work_mode_write does not check the parameter buf, which causes a vulnerability. |

2020-12-31 |

not yet calculated |

CVE-2020-11835

MISC |

oppo_charger — sm8250_q_master

|

In /SM8250_Q_Master/android/vendor/oppo_charger/oppo/oppo_vooc.c, the function proc_fastchg_fw_update_write does not check the parameter len, resulting in a vulnerability. |

2020-12-31 |

not yet calculated |

CVE-2020-11834

MISC |

oppo_charger — sm8250_q_master

|

In /SM8250_Q_Master/android/vendor/oppo_charger/oppo/charger_ic/oppo_mp2650.c, the function mp2650_data_log_write does not check the parameter len, which causes a vulnerability. |

2020-12-31 |

not yet calculated |

CVE-2020-11833

MISC |

plone — plone

|

Plone before 5.2.3 allows SSRF attacks via the tracebacks feature (only available to the Manager role). |

2020-12-30 |

not yet calculated |

CVE-2020-28735

CONFIRM

MISC

MISC |

plone — plone

|

Plone before 5.2.3 allows XXE attacks via a feature that is explicitly only available to the Manager role. |

2020-12-30 |

not yet calculated |

CVE-2020-28734

CONFIRM

MISC

MISC |

plone — plone

|

Plone before 5.2.3 allows XXE attacks via a feature that is protected by an unapplied permission of plone.schemaeditor.ManageSchemata (therefore, only available to the Manager role). |

2020-12-30 |

not yet calculated |

CVE-2020-28736

CONFIRM

MISC

MISC |

qdpm — qdpm

|

qdPM through 9.1 allows PHP Object Injection via timeReportActions::executeExport in core/apps/qdPM/modules/timeReport/actions/actions.class.php because unserialize is used. |

2020-12-31 |

not yet calculated |

CVE-2020-26165

MISC

MISC

FULLDISC |

qemu — qemu

|

iscsi_aio_ioctl_cb in block/iscsi.c in QEMU 4.1.0 has a heap-based buffer over-read that may disclose unrelated information from process memory to an attacker. |

2020-12-31 |

not yet calculated |

CVE-2020-11947

MISC |

qemu — qemu

|

In QEMU 4.1.0, an out-of-bounds read flaw was found in the ATI VGA implementation. It occurs in the ati_cursor_define() routine while handling MMIO write operations through the ati_mm_write() callback. A malicious guest could abuse this flaw to crash the QEMU process, resulting in a denial of service. |

2020-12-31 |

not yet calculated |

CVE-2019-20808

MISC

MISC |

qnap — qts

|

A vulnerability has been reported to affect QNAP NAS. If exploited, this vulnerability allows an attacker to access sensitive information stored in cleartext inside cookies via certain widely available tools. QNAP have already fixed this vulnerability in the following versions: QTS 4.5.1.1456 build 20201015 (and later); QuTS hero h4.5.1.1472 build 20201031 (and later); QuTScloud c4.5.2.1379 build 20200730 (and later). |

2020-12-31 |

not yet calculated |

CVE-2018-19941

MISC |

qnap — qts

|

A cleartext transmission of sensitive information vulnerability has been reported to affect certain QTS devices. If exploited, this vulnerability allows a remote attacker to gain access to sensitive information. QNAP have already fixed this vulnerability in the following versions: QTS 4.4.3.1354 build 20200702 (and later) |

2020-12-31 |

not yet calculated |

CVE-2018-19944

MISC |

qnap — qts

|

A vulnerability has been reported to affect earlier QNAP devices running QTS 4.3.4 to 4.3.6. Caused by improper limitation of a pathname to a restricted directory, this vulnerability, if exploited, allows an attacker to rename arbitrary files on the target system. QNAP have already fixed this vulnerability in the following versions: QTS 4.3.6.0895 build 20190328 (and later); QTS 4.3.4.0899 build 20190322 (and later). This issue does not affect QTS 4.4.x or QTS 4.5.x. |

2020-12-31 |

not yet calculated |

CVE-2018-19945

MISC |

rocket_chat — rocket_chat

|

Rocket.Chat before 0.74.4, 1.x before 1.3.4, 2.x before 2.4.13, 3.x before 3.7.3, 3.8.x before 3.8.3, and 3.9.x before 3.9.1 mishandles SAML login. |

2020-12-30 |

not yet calculated |

CVE-2020-29594

MISC

MISC |

| rusqlite — rusqlite |

An issue was discovered in the rusqlite crate before 0.23.0 for Rust. Memory safety can be violated via create_module. |

2020-12-31 |

not yet calculated |

CVE-2020-35867

MISC

MISC |

| rusqlite — rusqlite |

An issue was discovered in the rusqlite crate before 0.23.0 for Rust. Memory safety can be violated via UnlockNotification. |

2020-12-31 |

not yet calculated |

CVE-2020-35868

MISC

MISC |

| rusqlite — rusqlite |

An issue was discovered in the rusqlite crate before 0.23.0 for Rust. Memory safety can be violated because rusqlite::trace::log mishandles format strings. |

2020-12-31 |

not yet calculated |

CVE-2020-35869

MISC

MISC |

| rusqlite — rusqlite |

An issue was discovered in the rusqlite crate before 0.23.0 for Rust. Memory safety can be violated via an Auxdata API use-after-free. |

2020-12-31 |

not yet calculated |

CVE-2020-35870

MISC

MISC |

| rusqlite — rusqlite |

An issue was discovered in the rusqlite crate before 0.23.0 for Rust. Memory safety can be violated via an Auxdata API data race. |

2020-12-31 |

not yet calculated |

CVE-2020-35871

MISC

MISC |

| rusqlite — rusqlite |

An issue was discovered in the rusqlite crate before 0.23.0 for Rust. Memory safety can be violated via the repr(Rust) type. |

2020-12-31 |

not yet calculated |

CVE-2020-35872

MISC

MISC |

rusqlite — rusqlite

|

An issue was discovered in the rusqlite crate before 0.23.0 for Rust. Memory safety can be violated via VTab / VTabCursor. |

2020-12-31 |

not yet calculated |

CVE-2020-35866

MISC

MISC |

rust — http

|

An issue was discovered in the http crate before 0.1.20 for Rust. The HeaderMap::Drain API can use a raw pointer, defeating soundness. |

2020-12-31 |

not yet calculated |

CVE-2019-25009

MISC |

| rust — rust |

An issue was discovered in the thex crate through 2020-12-08 for Rust. Thex<T> allows cross-thread data races of non-Send types. |

2020-12-31 |

not yet calculated |

CVE-2020-35927

MISC |

rust — rust

|

An issue was discovered in the dync crate before 0.5.0 for Rust. VecCopy allows misaligned element access because u8 is not always the type in question. |

2020-12-31 |

not yet calculated |

CVE-2020-35903

MISC |

rust — rust

|

An issue was discovered in the crossbeam-channel crate before 0.4.4 for Rust. It has incorrect expectations about the relationship between the memory allocation and how many iterator elements there are. |

2020-12-31 |

not yet calculated |

CVE-2020-35904

MISC |

rust — rust

|

An issue was discovered in the mio crate before 0.7.6 for Rust. It has false expectations about the std::net::SocketAddr memory representation. |

2020-12-31 |

not yet calculated |

CVE-2020-35922

MISC |

rust — rust

|

An issue was discovered in the actix-codec crate before 0.3.0-beta.1 for Rust. There is a use-after-free in Framed. |

2020-12-31 |

not yet calculated |

CVE-2020-35902

MISC |

rust — rust

|

An issue was discovered in the actix-http crate before 2.0.0-alpha.1 for Rust. There is a use-after-free in BodyStream. |

2020-12-31 |

not yet calculated |

CVE-2020-35901

MISC |

rust — rust

|

An issue was discovered in the actix-service crate before 1.0.6 for Rust. The Cell implementation allows obtaining more than one mutable reference to the same data. |

2020-12-31 |

not yet calculated |

CVE-2020-35899

MISC |

rust — rust

|

An issue was discovered in the actix-utils crate before 2.0.0 for Rust. The Cell implementation allows obtaining more than one mutable reference to the same data. |

2020-12-31 |

not yet calculated |

CVE-2020-35898

MISC |

rust — rust

|

An issue was discovered in the atom crate before 0.3.6 for Rust. An unsafe Send implementation allows a cross-thread data race. |

2020-12-31 |

not yet calculated |

CVE-2020-35897

MISC |

rust — rust

|

An issue was discovered in the ws crate through 2020-09-25 for Rust. The outgoing buffer is not properly limited, leading to a remote memory-consumption attack. |

2020-12-31 |

not yet calculated |

CVE-2020-35896

MISC |

rust — rust

|

An issue was discovered in the concread crate before 0.2.6 for Rust. Attackers can cause an ARCache<K,V> data race by sending types that do not implement Send/Sync. |

2020-12-31 |

not yet calculated |

CVE-2020-35928

MISC |

rust — rust

|

An issue was discovered in the stack crate before 0.3.1 for Rust. ArrayVec has an out-of-bounds write via element insertion. |

2020-12-31 |

not yet calculated |

CVE-2020-35895

MISC |

rust — rust

|

An issue was discovered in the obstack crate before 0.1.4 for Rust. Unaligned references can occur. |

2020-12-31 |

not yet calculated |

CVE-2020-35894

MISC |

rust — rust

|

An issue was discovered in the simple-slab crate before 0.3.3 for Rust. remove() has an off-by-one error, causing memory leakage and a drop of uninitialized memory. |

2020-12-31 |

not yet calculated |

CVE-2020-35893

MISC |

rust — rust

|

An issue was discovered in the simple-slab crate before 0.3.3 for Rust. index() allows an out-of-bounds read. |

2020-12-31 |

not yet calculated |

CVE-2020-35892

MISC |

rust — rust

|

An issue was discovered in the array-queue crate through 2020-09-26 for Rust. A pop_back() call may lead to a use-after-free. |

2020-12-31 |

not yet calculated |

CVE-2020-35900

MISC |

rust — rust

|

An issue was discovered in the futures-task crate before 0.3.6 for Rust. futures_task::waker may cause a use-after-free in a non-static type situation. |

2020-12-31 |

not yet calculated |

CVE-2020-35906

MISC |

rust — rust

|

An issue was discovered in the futures-util crate before 0.3.7 for Rust. MutexGuard::map can cause a data race for certain closure situations (in safe code). |

2020-12-31 |

not yet calculated |

CVE-2020-35905

MISC |

rust — rust

|

An issue was discovered in the image crate before 0.23.12 for Rust. A mutable reference has immutable provenance. (In the case of LLVM, the IR may always be correct.) |

2020-12-31 |

not yet calculated |

CVE-2020-35916

MISC |

rust — rust

|

An issue was discovered in the try-mutex crate before 0.3.0 for Rust. TryMutex<T> allows cross-thread sending of a non-Send type. |

2020-12-31 |

not yet calculated |

CVE-2020-35924

MISC |

rust — rust

|

An issue was discovered in the magnetic crate before 2.0.1 for Rust. MPMCConsumer and MPMCProducer allow cross-thread sending of a non-Send type. |

2020-12-31 |

not yet calculated |

CVE-2020-35925

MISC |

rust — rust

|

An issue was discovered in the miow crate before 0.3.6 for Rust. It has false expectations about the std::net::SocketAddr memory representation. |

2020-12-31 |

not yet calculated |

CVE-2020-35921

MISC |

rust — rust

|

An issue was discovered in the nanorand crate before 0.5.1 for Rust. It caused any random number generator (even ChaCha) to return all zeroes because integer truncation was mishandled. |

2020-12-31 |

not yet calculated |

CVE-2020-35926

MISC |

rust — rust

|

An issue was discovered in the socket2 crate before 0.3.16 for Rust. It has false expectations about the std::net::SocketAddr memory representation. |

2020-12-31 |

not yet calculated |

CVE-2020-35920

MISC |

rust — rust

|

An issue was discovered in the net2 crate before 0.2.36 for Rust. It has false expectations about the std::net::SocketAddr memory representation. |

2020-12-31 |

not yet calculated |

CVE-2020-35919

MISC |

rust — rust

|

An issue was discovered in the branca crate before 0.10.0 for Rust. Decoding tokens (with invalid base62 data) can panic. |

2020-12-31 |

not yet calculated |

CVE-2020-35918

MISC

MISC |

rust — rust

|

An issue was discovered in the pyo3 crate before 0.12.4 for Rust. There is a reference-counting error and use-after-free in From<Py<T>>. |

2020-12-31 |

not yet calculated |

CVE-2020-35917

MISC |

rust — rust

|

An issue was discovered in the ordnung crate through 2020-09-03 for Rust. compact::Vec violates memory safety via out-of-bounds access for large capacity. |

2020-12-31 |

not yet calculated |

CVE-2020-35890

MISC |

rust — rust

|

An issue was discovered in the ordered-float crate before 1.1.1 and 2.x before 2.0.1 for Rust. A NotNan value can contain a NaN. |

2020-12-31 |

not yet calculated |

CVE-2020-35923

MISC |
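The entry does not say how the NaN gets in. As we understand the upstream advisory, the in-place arithmetic operators (such as `+=`) stored their result before checking it for NaN. A caught panic could therefore leave a NaN inside a value whose type promises it cannot hold one. The Python sketch below shows the general fix pattern: validate the result first, then commit it. The `NotNan` class here is a hypothetical stand-in and not the crate's API.

```python
import math


class NotNan:
    """Hypothetical float wrapper whose invariant is: the value is never NaN."""

    __slots__ = ("_value",)

    def __init__(self, value: float) -> None:
        if math.isnan(value):
            raise ValueError("NotNan cannot hold NaN")
        self._value = value

    @property
    def value(self) -> float:
        return self._value

    def __iadd__(self, other: float) -> "NotNan":
        result = self._value + other
        # Validate first, then commit: on error, self is left unchanged.
        if math.isnan(result):
            raise ValueError("addition produced NaN")
        self._value = result
        return self


x = NotNan(math.inf)
try:
    x += -math.inf  # inf + (-inf) is NaN, so this raises
except ValueError:
    pass
print(x.value)  # inf (the invariant still holds)
```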

rust — rust

|

An issue was discovered in the futures-intrusive crate before 0.4.0 for Rust. GenericMutexGuard allows cross-thread data races of non-Sync types. |

2020-12-31 |

not yet calculated |

CVE-2020-35915

MISC |

rust — rust

|

An issue was discovered in the lock_api crate before 0.4.2 for Rust. A data race can occur because of RwLockWriteGuard unsoundness. |

2020-12-31 |

not yet calculated |

CVE-2020-35914

MISC |

rust — rust

|

An issue was discovered in the lock_api crate before 0.4.2 for Rust. A data race can occur because of RwLockReadGuard unsoundness. |

2020-12-31 |

not yet calculated |

CVE-2020-35913

MISC |

rust — rust

|

An issue was discovered in the lock_api crate before 0.4.2 for Rust. A data race can occur because of MappedRwLockWriteGuard unsoundness. |

2020-12-31 |

not yet calculated |

CVE-2020-35912

MISC |

rust — rust

|

An issue was discovered in the lock_api crate before 0.4.2 for Rust. A data race can occur because of MappedRwLockReadGuard unsoundness. |

2020-12-31 |

not yet calculated |

CVE-2020-35911

MISC |

rust — rust

|

An issue was discovered in the lock_api crate before 0.4.2 for Rust. A data race can occur because of MappedMutexGuard unsoundness. |

2020-12-31 |

not yet calculated |

CVE-2020-35910

MISC |

rust — rust

|

An issue was discovered in the multihash crate before 0.11.3 for Rust. The from_slice parsing code can panic via unsanitized data from a network server. |

2020-12-31 |

not yet calculated |

CVE-2020-35909

MISC |

rust — rust

|

An issue was discovered in the futures-util crate before 0.3.2 for Rust. FuturesUnordered can lead to data corruption because Sync is mishandled. |

2020-12-31 |

not yet calculated |

CVE-2020-35908

MISC |

rust — rust

|

An issue was discovered in the futures-task crate before 0.3.5 for Rust. futures_task::noop_waker_ref allows a NULL pointer dereference. |

2020-12-31 |

not yet calculated |

CVE-2020-35907

MISC |

rust — rust

|

An issue was discovered in the ordnung crate through 2020-09-03 for Rust. compact::Vec violates memory safety via a remove() double free. |

2020-12-31 |

not yet calculated |

CVE-2020-35891

MISC |

rust — rust

|

An issue was discovered in the mozwire crate through 2020-08-18 for Rust. A ../ directory-traversal situation allows overwriting local files that have .conf at the end of the filename. |

2020-12-31 |

not yet calculated |

CVE-2020-35883

MISC |
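A common mitigation for this class of bug is to accept only a bare file name, never a path, before joining it onto a fixed directory. The sketch below is hypothetical: the function name, the `.conf` rule, and the example directory are illustrative assumptions, not mozwire's code. It uses `PurePosixPath` so the check works on the string alone, without touching the filesystem.

```python
from pathlib import PurePosixPath


def safe_conf_path(base_dir: str, name: str) -> PurePosixPath:
    """Join an untrusted name onto base_dir, rejecting anything but a plain file name."""
    candidate = PurePosixPath(name)
    # Exactly one component: no "/" separators, no absolute paths, no empty name.
    if candidate.is_absolute() or len(candidate.parts) != 1:
        raise ValueError(f"not a plain file name: {name!r}")
    # "." and ".." are single components but still refer to directories.
    if candidate.name in (".", ".."):
        raise ValueError(f"reserved name: {name!r}")
    if candidate.suffix != ".conf":
        raise ValueError(f"expected a .conf file: {name!r}")
    return PurePosixPath(base_dir) / candidate


print(safe_conf_path("/etc/wireguard", "se-got-wg-001.conf"))  # /etc/wireguard/se-got-wg-001.conf
```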

rust — rust

|

An issue was discovered in the crayon crate through 2020-08-31 for Rust. A TOCTOU issue has a resultant memory safety violation via HandleLike. |

2020-12-31 |

not yet calculated |

CVE-2020-35889

MISC |

rust — rust

|

An issue was discovered in the tokio-rustls crate before 0.13.1 for Rust. Excessive memory usage may occur when data arrives quickly. |

2020-12-31 |

not yet calculated |

CVE-2020-35875

MISC |

rust — rust

|

An issue was discovered in the arr crate through 2020-08-25 for Rust. Uninitialized memory is dropped by Array::new_from_template. |

2020-12-31 |

not yet calculated |

CVE-2020-35888

MISC |

rust — rust

|

An issue was discovered in the libpulse-binding crate before 2.5.0 for Rust. proplist::Iterator can cause a use-after-free. |

2020-12-31 |

not yet calculated |

CVE-2018-25001

MISC |

rust — rust

|

An issue was discovered in the trust-dns-server crate before 0.18.1 for Rust. DNS MX and SRV null targets are mishandled, causing stack consumption. |

2020-12-31 |

not yet calculated |

CVE-2020-35857

MISC |

rust — rust

|

An issue was discovered in the prost crate before 0.6.1 for Rust. There is stack consumption via a crafted message, causing a denial of service (e.g., x86) or possibly remote code execution (e.g., ARM). |

2020-12-31 |

not yet calculated |

CVE-2020-35858

MISC |

rust — rust

|

An issue was discovered in the lucet-runtime-internals crate before 0.5.1 for Rust. It mishandles sigstack allocation. Guest programs may be able to obtain sensitive information, or guest programs can experience memory corruption. |

2020-12-31 |

not yet calculated |

CVE-2020-35859

MISC |

rust — rust

|

An issue was discovered in the cbox crate through 2020-03-19 for Rust. The CBox API allows dereferencing raw pointers without a requirement for unsafe code. |

2020-12-31 |

not yet calculated |

CVE-2020-35860

MISC |

rust — rust

|

An issue was discovered in the bumpalo crate before 3.2.1 for Rust. The realloc feature allows the reading of unknown memory. Attackers can potentially read cryptographic keys. |

2020-12-31 |

not yet calculated |

CVE-2020-35861

MISC |

rust — rust

|

An issue was discovered in the bitvec crate before 0.17.4 for Rust. BitVec to BitBox conversion leads to a use-after-free or double free. |

2020-12-31 |

not yet calculated |

CVE-2020-35862

MISC |

rust — rust

|

An issue was discovered in the os_str_bytes crate before 2.0.0 for Rust. It has false expectations about char::from_u32_unchecked behavior. |

2020-12-31 |

not yet calculated |

CVE-2020-35865

MISC |

rust — rust

|

An issue was discovered in the internment crate through 2020-05-28 for Rust. ArcIntern::drop has a race condition and resultant use-after-free. |

2020-12-31 |

not yet calculated |

CVE-2020-35874

MISC |

rust — rust

|

An issue was discovered in the failure crate through 2019-11-13 for Rust. Type confusion can occur when __private_get_type_id__ is overridden. |

2020-12-31 |

not yet calculated |

CVE-2019-25010

MISC

MISC |

rust — rust

|

An issue was discovered in the rio crate through 2020-05-11 for Rust. A struct can be leaked, allowing attackers to obtain sensitive information, cause a use-after-free, or cause a data race. |

2020-12-31 |

not yet calculated |

CVE-2020-35876

MISC |

rust — rust

|

An issue was discovered in the ozone crate through 2020-07-04 for Rust. Memory safety is violated because of the dropping of uninitialized memory. |

2020-12-31 |

not yet calculated |

CVE-2020-35878

MISC |

rust — rust

|

An issue was discovered in the rulinalg crate through 2020-02-11 for Rust. There are incorrect lifetime-boundary definitions for RowMut::raw_slice and RowMut::raw_slice_mut. |

2020-12-31 |

not yet calculated |

CVE-2020-35879

MISC |

rust — rust

|

An issue was discovered in the bigint crate through 2020-05-07 for Rust. It allows a soundness violation. |

2020-12-31 |

not yet calculated |

CVE-2020-35880

MISC |

rust — rust

|

An issue was discovered in the traitobject crate through 2020-06-01 for Rust. It has false expectations about fat pointers, possibly causing memory corruption in, for example, Rust 2.x. |

2020-12-31 |

not yet calculated |

CVE-2020-35881

MISC |

rust — rust

|

An issue was discovered in the rocket crate before 0.4.5 for Rust. LocalRequest::clone creates more than one mutable reference to the same object, possibly causing a data race. |

2020-12-31 |

not yet calculated |

CVE-2020-35882

MISC |

rust — rust

|

An issue was discovered in the tiny_http crate through 2020-06-16 for Rust. HTTP Request smuggling can occur via a malformed Transfer-Encoding header. |

2020-12-31 |

not yet calculated |

CVE-2020-35884

MISC |
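Request smuggling usually arises when two HTTP processors disagree about where a request body ends. One defensive approach, following the message-length rules in RFC 7230 section 3.3.3, is to reject ambiguous framing outright rather than guess. The sketch below is a hypothetical helper, not tiny_http code. It assumes the headers have already been split into (name, value) pairs.

```python
class BadRequest(Exception):
    """Raised when a request's body framing is ambiguous or malformed."""


def _is(token: str, expected: str) -> bool:
    # ASCII-only, case-insensitive match; avoids Unicode case-folding surprises
    # such as the Kelvin sign (U+212A) lower-casing to "k".
    return token.isascii() and token.lower() == expected


def body_framing(headers: list[tuple[str, str]]) -> str:
    """Decide how the request body is delimited, or raise BadRequest."""
    te_values = [v for k, v in headers if _is(k, "transfer-encoding")]
    cl_values = [v.strip(" \t") for k, v in headers if _is(k, "content-length")]

    if te_values:
        if cl_values:
            # Both headers present: a classic smuggling signal, so refuse it.
            raise BadRequest("Transfer-Encoding together with Content-Length")
        # Strip only HTTP optional whitespace (SP and HTAB), nothing else.
        codings = [c.strip(" \t") for v in te_values for c in v.split(",")]
        if not _is(codings[-1], "chunked"):
            # RFC 7230 3.3.3: if chunked is not the final coding, the length
            # cannot be determined reliably and the server must answer 400.
            raise BadRequest(f"unsupported Transfer-Encoding: {te_values!r}")
        return "chunked"

    if cl_values:
        # Every Content-Length value must agree and be plain ASCII digits.
        if len(set(cl_values)) != 1:
            raise BadRequest("conflicting Content-Length values")
        if not (cl_values[0].isascii() and cl_values[0].isdigit()):
            raise BadRequest(f"invalid Content-Length: {cl_values[0]!r}")
        return "content-length"

    return "no-body"
```

A caller would map `BadRequest` to a 400 response and close the connection instead of trying to read a body.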

rust — rust

|

An issue was discovered in the alpm-rs crate through 2020-08-20 for Rust. StrcCtx performs improper memory deallocation. |

2020-12-31 |

not yet calculated |

CVE-2020-35885

MISC |

rust — rust

|

An issue was discovered in the arr crate through 2020-08-25 for Rust. An attacker can smuggle non-Sync/Send types across a thread boundary to cause a data race. |

2020-12-31 |

not yet calculated |

CVE-2020-35886

MISC |

rust — rust

|

An issue was discovered in the ozone crate through 2020-07-04 for Rust. Memory safety is violated because of out-of-bounds access. |

2020-12-31 |

not yet calculated |

CVE-2020-35877

MISC |

rust — rust

|

An issue was discovered in the arr crate through 2020-08-25 for Rust. There is a buffer overflow in Index and IndexMut. |

2020-12-31 |

not yet calculated |

CVE-2020-35887

MISC |

rustcrypto — chacha20

|

An issue was discovered in the chacha20 crate before 0.2.3 for Rust. A ChaCha20 counter overflow makes it easier for attackers to determine plaintext. |

2020-12-31 |

not yet calculated |

CVE-2019-25005

MISC |
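ChaCha20 as specified in RFC 8439 uses a 32-bit block counter. One key/nonce pair therefore yields at most 2^32 blocks of 64 bytes (256 GiB) of keystream. If the counter silently wraps, the keystream repeats, and two ciphertexts produced under the same keystream XOR to the XOR of their plaintexts. The Python sketch below shows only the counter-handling pattern, which is to refuse to wrap. It is a hypothetical class and does not implement the ChaCha20 block function.

```python
class BlockCounter:
    """Hypothetical 32-bit ChaCha20 block counter that refuses to wrap around."""

    MAX = 2**32 - 1

    def __init__(self, start: int = 0) -> None:
        if not 0 <= start <= self.MAX:
            raise ValueError("counter out of range")
        self._next = start

    def take(self) -> int:
        """Return the counter value for the next 64-byte keystream block."""
        if self._next > self.MAX:
            # A wrapping 32-bit add would yield 0 here and repeat the keystream.
            raise OverflowError("keystream exhausted for this key/nonce")
        value = self._next
        self._next += 1
        return value


counter = BlockCounter(BlockCounter.MAX)
print(counter.take())  # 4294967295, the last usable block
# A further counter.take() raises OverflowError instead of returning 0.
```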

rustcrypto — hashes

|

An issue was discovered in the streebog crate before 0.8.0 for Rust. The Streebog hash function can cause a panic. |

2020-12-31 |

not yet calculated |

CVE-2019-25007

MISC |

rustcrypto — hashes

|

An issue was discovered in the streebog crate before 0.8.0 for Rust. The Streebog hash function can produce the wrong answer. |

2020-12-31 |

not yet calculated |

CVE-2019-25006

MISC |

sentrifugo — sentrifugo

|

** UNSUPPORTED WHEN ASSIGNED ** Sentrifugo 3.2 is vulnerable to stored cross-site scripting (XSS): an attacker can insert a payload in the X-Forwarded-For HTTP header during the login process, and the payload is executed when an administrator views the logs. NOTE: This vulnerability only affects products that are no longer supported by the maintainer. |

2020-12-30 |

not yet calculated |

CVE-2020-28365

MISC

MISC |

seopanel — seopanel

|

Seo Panel 4.8.0 allows stored XSS by an Authenticated User via the url parameter, as demonstrated by the seo/seopanel/websites.php URI. |

2020-12-31 |

not yet calculated |

CVE-2020-35930

MISC |

seopanel — seopanel

|

Seo Panel 4.8.0 allows reflected XSS via the seo/seopanel/login.php?sec=forgot email parameter. |

2021-01-01 |

not yet calculated |

CVE-2021-3002

MISC

MISC |

smsecgroup — sm-vul

|

An issue was discovered in a smart contract implementation for MORPH Token through 2019-06-05, an Ethereum token. A typo in the constructor of the Owned contract (which is inherited by MORPH Token) allows attackers to acquire contract ownership. A new owner can subsequently obtain MORPH Tokens for free and can perform a DoS attack. |

2020-12-30 |

not yet calculated |

CVE-2019-15080

MISC

MISC |

smsecgroup — sm-vul

|

A typo exists in the constructor of a smart contract implementation for EAI through 2019-06-05, an Ethereum token. This vulnerability could be used by an attacker to acquire EAI tokens for free. |

2020-12-30 |

not yet calculated |

CVE-2019-15079

MISC |

smsecgroup — sm-vul

|

An issue was discovered in a smart contract implementation for AIRDROPX BORN through 2019-05-29, an Ethereum token. The name of the constructor has a typo (wrong case: XBornID versus XBORNID) that allows an attacker to change the owner of the contract and obtain cryptocurrency for free. |

2020-12-30 |

not yet calculated |

CVE-2019-15078

MISC |

sodiumoxide — sodiumoxide

|

An issue was discovered in the sodiumoxide crate before 0.2.5 for Rust. generichash::Digest::eq compares itself to itself and thus has degenerate security properties. |

2020-12-31 |

not yet calculated |

CVE-2019-25002

MISC |
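A self-comparison like this makes every equality check succeed. Any code that uses it to verify a hash will accept any digest. The Python sketch below reproduces the bug pattern with hypothetical `BuggyDigest` and `Digest` classes, then shows the corrected comparison. The fix compares against the other value using the standard library's constant-time `hmac.compare_digest`. libsodium's generic hash is BLAKE2b, so the example uses `hashlib.blake2b`.

```python
import hashlib
import hmac


class BuggyDigest:
    def __init__(self, raw: bytes) -> None:
        self.raw = raw

    def __eq__(self, other: object) -> bool:
        # Bug pattern: compares self with self, so the result is always True.
        return isinstance(other, BuggyDigest) and hmac.compare_digest(self.raw, self.raw)


class Digest:
    def __init__(self, raw: bytes) -> None:
        self.raw = raw

    def __eq__(self, other: object) -> bool:
        # Fixed: compare against the other digest, in constant time.
        return isinstance(other, Digest) and hmac.compare_digest(self.raw, other.raw)


a = hashlib.blake2b(b"message one").digest()
b = hashlib.blake2b(b"message two").digest()
print(BuggyDigest(a) == BuggyDigest(b))  # True (wrong)
print(Digest(a) == Digest(b))            # False
```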

sysdream — usvn

|

USVN (aka User-friendly SVN) before 1.0.9 allows remote code execution via shell metacharacters in the number_start or number_end parameter to LastHundredRequest (aka lasthundredrequestAction) in the Timeline module. NOTE: this may overlap CVE-2020-25069. |

2020-12-31 |

not yet calculated |

CVE-2020-17363

MISC |

team_amaze — amaze_file_manager

|

The Amaze File Manager application before 3.4.2 for Android does not properly restrict intents for controlling the FTP server (aka services.ftpservice.FTPReceiver.ACTION_START_FTPSERVER and services.ftpservice.FTPReceiver.ACTION_STOP_FTPSERVER). |

2020-12-30 |

not yet calculated |

CVE-2020-35173

MISC

CONFIRM

MISC |

tenda — ac1200

|

On Tenda AC1200 (Model AC6) 15.03.06.51_multi devices, a large HTTP POST request sent to the change password API will trigger the router to crash and enter an infinite boot loop. |

2020-12-30 |

not yet calculated |

CVE-2020-28095

MISC |

tenda — n300

|

Tenda N300 F3 12.01.01.48 devices allow remote attackers to obtain sensitive information (possibly including an http_passwd line) via a direct request for cgi-bin/DownloadCfg/RouterCfm.cfg, a related issue to CVE-2017-14942. NOTE: the vulnerability report may suggest that either a ? character must be placed after the RouterCfm.cfg filename, or that the HTTP request headers must be unusual, but it is not known why these are relevant to the device’s HTTP response behavior. |

2021-01-01 |

not yet calculated |

CVE-2020-35391

MISC |

| umbraco — umbraco |

A stored XSS vulnerability exists in Umbraco CMS <= 8.9.1 or current. An authenticated user authorized to upload media can upload a malicious .svg file that acts as a stored XSS payload. |

2020-12-30 |

not yet calculated |

CVE-2020-5810

MISC |

| umbraco — umbraco |

A stored XSS vulnerability exists in Umbraco CMS <= 8.9.1 or current. An authenticated user can inject arbitrary JavaScript code into iframes when editing content using the TinyMCE rich-text editor, as TinyMCE is configured to allow iframes by default in Umbraco CMS. |

2020-12-30 |

not yet calculated |

CVE-2020-5809

MISC |

umbraco — umbraco

|

An authenticated path traversal vulnerability exists during package installation in Umbraco CMS <= 8.9.1 or current, which could result in arbitrary files being written outside of the site home and expected paths when installing an Umbraco package. |

2020-12-30 |

not yet calculated |

CVE-2020-5811

MISC |

webswing — jslink

|

JsLink in Webswing before 2.6.12 LTS, and 2.7.x and 20.x before 20.1, allows remote code execution. |

2020-12-30 |

not yet calculated |

CVE-2020-11103

CONFIRM

CONFIRM |

wondercms — wondercms

|

WonderCMS 3.1.3 is affected by cross-site scripting (XSS) in the Page description component. An attacker can inject an XSS payload in the Page description; each time any user visits the website, the payload triggers, and the attacker can steal the user’s cookie, depending on the crafted payload. |

2020-12-30 |

not yet calculated |

CVE-2020-29233

MISC |

wondercms — wondercms

|

WonderCMS 3.1.3 is affected by cross-site scripting (XSS) in the Menu component. An attacker can inject an XSS payload in Setting – Menu; each time any user visits the website directory, the payload triggers, and the attacker can steal the user’s cookie, depending on the crafted payload. |

2020-12-30 |

not yet calculated |

CVE-2020-29469

MISC |

| wordpress — wordpress |

The Advanced Access Manager plugin before 6.6.2 for WordPress displays the unfiltered user object (including all metadata) upon login via the REST API (aam/v1/authenticate or aam/v2/authenticate). This is a security problem if this object stores information that the user is not supposed to have (e.g., custom metadata added by a different plugin). |

2021-01-01 |

not yet calculated |

CVE-2020-35934

MISC |

| wordpress — wordpress |

The Advanced Access Manager plugin before 6.6.2 for WordPress allows privilege escalation on profile updates via the aam_user_roles POST parameter if Multiple Role support is enabled. (The mechanism for deciding whether a user was entitled to add a role did not work in various custom-role scenarios.) |

2021-01-01 |

not yet calculated |

CVE-2020-35935

MISC |

| wordpress — wordpress |

An issue was discovered in the Quiz and Survey Master plugin before 7.0.1 for WordPress. It allows users to delete arbitrary files such as wp-config.php file, which could effectively take a site offline and allow an attacker to reinstall with a WordPress instance under their control. This occurred via qsm_remove_file_fd_question, which allowed unauthenticated deletions (even though it was only intended for a person to delete their own quiz-answer files). |

2021-01-01 |

not yet calculated |

CVE-2020-35951

MISC

MISC |

| wordpress — wordpress |

An issue was discovered in the XCloner Backup and Restore plugin before 4.2.153 for WordPress. It allows CSRF (via almost any endpoint). |

2021-01-01 |

not yet calculated |

CVE-2020-35950

MISC

MISC |

| wordpress — wordpress |

An issue was discovered in the Quiz and Survey Master plugin before 7.0.1 for WordPress. It made it possible for unauthenticated attackers to upload arbitrary files and achieve remote code execution. If a quiz question could be answered by uploading a file, only the Content-Type header was checked during the upload, and thus the attacker could use text/plain for a .php file. |

2021-01-01 |

not yet calculated |

CVE-2020-35949

MISC

MISC |
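The Content-Type header is chosen by the client, so it cannot establish what an uploaded file actually is. A more robust server-side check derives the type from the file name against an allowlist and ignores the client's Content-Type entirely. The sketch below shows such a check as a hypothetical helper; `validate_upload` and the allowlist are illustrative assumptions, not the plugin's code.

```python
import os

# Hypothetical allowlist for quiz-answer uploads; adjust per deployment.
ALLOWED_EXTENSIONS = {".pdf", ".png", ".jpg", ".jpeg", ".txt"}


def validate_upload(filename: str) -> str:
    """Return the lower-cased extension if allowed, else raise ValueError.

    The client's Content-Type header is intentionally not consulted: the
    attacker chooses it, which is exactly what this flaw relied on.
    """
    # Keep only the final path component, treating "\" as a separator too.
    base = os.path.basename(filename.replace("\\", "/"))
    _, ext = os.path.splitext(base)
    ext = ext.lower()
    if ext not in ALLOWED_EXTENSIONS:
        raise ValueError(f"file type not allowed: {base!r}")
    return ext


print(validate_upload("answer.PDF"))  # .pdf
# validate_upload("shell.php") raises ValueError, whatever Content-Type was sent.
```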

| wordpress — wordpress |

An issue was discovered in the XCloner Backup and Restore plugin before 4.2.13 for WordPress. It gave authenticated attackers the ability to modify arbitrary files, including PHP files. Doing so would allow an attacker to achieve remote code execution. The xcloner_restore.php write_file_action could overwrite wp-config.php, for example. Alternatively, an attacker could create an exploit chain to obtain a database dump. |

2021-01-01 |

not yet calculated |

CVE-2020-35948

MISC

MISC |

| wordpress — wordpress |

An issue was discovered in the PageLayer plugin before 1.1.2 for WordPress. Nearly all of the AJAX action endpoints lacked permission checks, allowing these actions to be executed by anyone authenticated on the site. This happened because nonces were used as a means of authorization, but a nonce was present in a publicly viewable page. The greatest impact was the pagelayer_save_content function that allowed pages to be modified and allowed XSS to occur. |

2021-01-01 |

not yet calculated |

CVE-2020-35947

MISC

MISC |

| wordpress — wordpress |

An issue was discovered in the All in One SEO Pack plugin before 3.6.2 for WordPress. The SEO Description and Title fields are vulnerable to unsanitized input from a Contributor, leading to stored XSS. |

2021-01-01 |

not yet calculated |

CVE-2020-35946

MISC

MISC |

| wordpress — wordpress |

An issue was discovered in the Divi Builder plugin, Divi theme, and Divi Extra theme before 4.5.3 for WordPress. Authenticated attackers, with contributor-level or above capabilities, can upload arbitrary files, including .php files. This occurs because the check for file extensions is on the client side. |

2021-01-01 |

not yet calculated |

CVE-2020-35945

MISC

MISC |

| wordpress — wordpress |

An issue was discovered in the PageLayer plugin before 1.1.2 for WordPress. The pagelayer_settings_page function is vulnerable to CSRF, which can lead to XSS. |

2021-01-01 |

not yet calculated |

CVE-2020-35944

MISC

MISC |

| wordpress — wordpress |

PHP Object injection vulnerabilities in the Team Showcase plugin before 1.22.16 for WordPress allow remote authenticated attackers to inject arbitrary PHP objects due to insecure unserialization of data supplied in a remotely hosted crafted payload in the source parameter via AJAX. The action must be set to team_import_xml_layouts. |

2021-01-01 |

not yet calculated |

CVE-2020-35939

MISC |

| wordpress — wordpress |

PHP Object injection vulnerabilities in the Post Grid plugin before 2.0.73 for WordPress allow remote authenticated attackers to inject arbitrary PHP objects due to insecure unserialization of data supplied in a remotely hosted crafted payload in the source parameter via AJAX. The action must be set to post_grid_import_xml_layouts. |

2021-01-01 |

not yet calculated |

CVE-2020-35938

MISC |

| wordpress — wordpress |

Stored Cross-Site Scripting (XSS) vulnerabilities in the Team Showcase plugin before 1.22.16 for WordPress allow remote authenticated attackers to import layouts including JavaScript supplied via a remotely hosted crafted payload in the source parameter via AJAX. The action must be set to team_import_xml_layouts. |

2021-01-01 |

not yet calculated |

CVE-2020-35937

MISC |

| wordpress — wordpress |

Stored Cross-Site Scripting (XSS) vulnerabilities in the Post Grid plugin before 2.0.73 for WordPress allow remote authenticated attackers to import layouts including JavaScript supplied via a remotely hosted crafted payload in the source parameter via AJAX. The action must be set to post_grid_import_xml_layouts. |

2021-01-01 |

not yet calculated |

CVE-2020-35936

MISC |

| wordpress — wordpress |

Insecure Deserialization in the Newsletter plugin before 6.8.2 for WordPress allows authenticated remote attackers with minimal privileges (such as subscribers) to use the tnpc_render AJAX action to inject arbitrary PHP objects via the options[inline_edits] parameter. NOTE: exploitability depends on PHP objects that might be present with certain other plugins or themes. |

2021-01-01 |

not yet calculated |

CVE-2020-35932

MISC |

| wordpress — wordpress |

A Reflected Authenticated Cross-Site Scripting (XSS) vulnerability in the Newsletter plugin before 6.8.2 for WordPress allows remote attackers to trick a victim into submitting a tnpc_render AJAX request containing either JavaScript in an options parameter, or a base64-encoded JSON string containing JavaScript in the encoded_options parameter. |

2021-01-01 |

not yet calculated |

CVE-2020-35933

MISC |

| zyxel — zyxel |

Certain Zyxel products allow command injection by an admin via an input string to chg_exp_pwd during a password-change action. This affects VPN On-premise before ZLD V4.39 week38, VPN Orchestrator before SD-OS V10.03 week32, USG before ZLD V4.39 week38, USG FLEX before ZLD V4.55 week38, ATP before ZLD V4.55 week38, and NSG before 1.33 patch 4. |

2020-12-27 |

not yet calculated |

CVE-2020-29299

MISC

MISC |
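Two of the entries above, CVE-2020-35949 (Quiz and Survey Master) and CVE-2020-35945 (Divi), come down to upload validation that the attacker controls: a client-supplied Content-Type header in one case and a client-side extension check in the other. The Python sketch below contrasts the two patterns. It is not code from either plugin; the allowlists and function names are hypothetical, and a server-side extension allowlist is only one layer of defense rather than a complete fix.

```python
from pathlib import PurePosixPath

# Hypothetical allowlists for a file-upload answer field.
ALLOWED_EXTENSIONS = {".pdf", ".png", ".jpg", ".txt"}
ALLOWED_TYPES = {"application/pdf", "image/png", "image/jpeg", "text/plain"}


def header_only_check(filename: str, content_type: str) -> bool:
    """Flawed pattern: trusts the Content-Type header, which the client fully controls."""
    return content_type in ALLOWED_TYPES


def server_side_check(filename: str, content_type: str) -> bool:
    """Also require an allowlisted extension, evaluated on the server."""
    suffix = PurePosixPath(filename).suffix.lower()  # "" when there is no extension
    return suffix in ALLOWED_EXTENSIONS and content_type in ALLOWED_TYPES


# A .php file sent as text/plain passes the header-only check but not the server-side one.
print(header_only_check("shell.php", "text/plain"))   # True
print(server_side_check("shell.php", "text/plain"))   # False
```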

by Contributed | Jan 4, 2021 | Technology

This article is contributed. See the original author and article here.

Overview

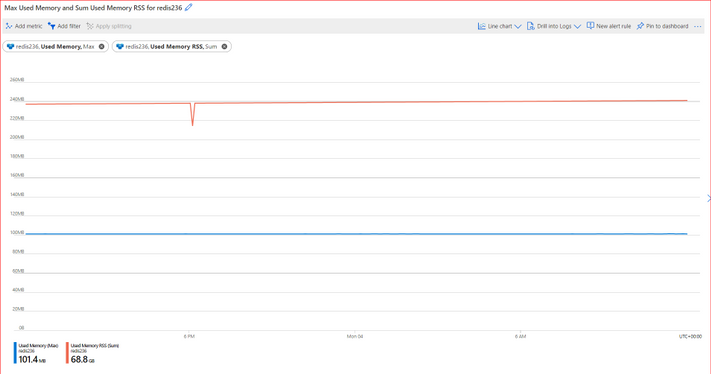

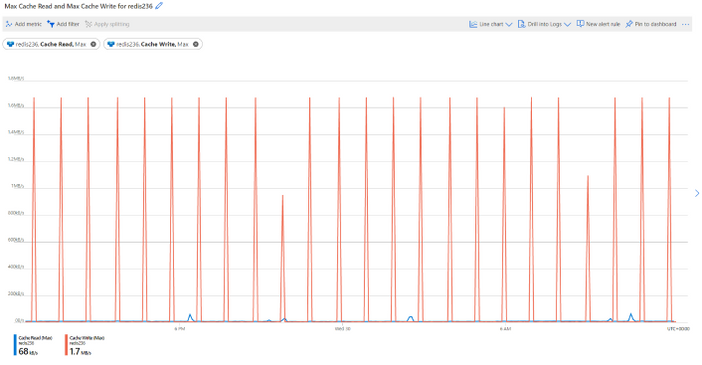

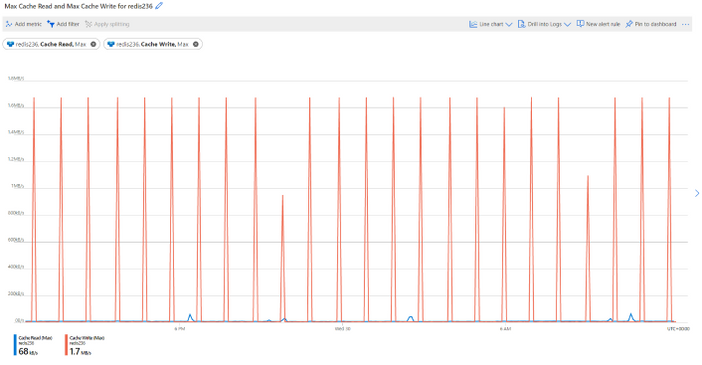

Redis timeouts on the client side can have many causes, originating on the client, on the network, or on the server side, and the error message may also differ depending on the client library used.

Timeouts in Azure Cache for Redis occur on the client side when the client application does not receive the response from the Redis server in time.

Whenever the client application doesn't receive a response before one of its timeout values expires, a timeout occurs and is logged in the client application logs as a Redis timeout error message.

Some conditions on the Azure Cache for Redis server, or on the VM hosting it, can also cause Redis timeouts on the client side. If the delay originates on the server side, the client application will not receive the response from the Redis server in time, and a timeout may occur.
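The sketch below illustrates that mechanism using only the Python standard library: a hypothetical stand-in server delays its reply to a RESP-encoded PING, and a client whose socket timeout is shorter than that delay gives up and logs a timeout. It does not use a real Redis client library or Azure Cache for Redis; the function names and delay values are illustrative assumptions.

```python
import logging
import socket
import threading
import time

logging.basicConfig(level=logging.WARNING)
log = logging.getLogger("redis-client-demo")


def fake_redis_server(listener: socket.socket, delay: float) -> None:
    """Hypothetical stand-in for a Redis server: answers one PING after `delay` seconds."""
    conn, _ = listener.accept()
    with conn:
        conn.recv(1024)        # read the PING request
        time.sleep(delay)      # simulated server-side delay (patching, long command, high CPU)
        try:
            conn.sendall(b"+PONG\r\n")  # RESP simple-string reply
        except OSError:
            pass               # the client may already have given up and closed the socket


def ping_with_timeout(server_delay: float, client_timeout: float) -> str:
    """Send PING and wait at most `client_timeout` seconds for the reply."""
    with socket.socket() as listener:
        listener.bind(("127.0.0.1", 0))  # any free local port
        listener.listen(1)
        port = listener.getsockname()[1]
        server = threading.Thread(
            target=fake_redis_server, args=(listener, server_delay), daemon=True)
        server.start()
        try:
            with socket.create_connection(("127.0.0.1", port), timeout=client_timeout) as client:
                client.sendall(b"*1\r\n$4\r\nPING\r\n")  # RESP-encoded PING command
                try:
                    return client.recv(1024).decode().strip()
                except socket.timeout:
                    # No reply in time: this surfaces as a client-side timeout.
                    log.warning("timeout: no reply within %.2f s", client_timeout)
                    return "TIMEOUT"
        finally:
            server.join(timeout=server_delay + 1)


print(ping_with_timeout(server_delay=0.0, client_timeout=2.0))  # +PONG
print(ping_with_timeout(server_delay=0.5, client_timeout=0.1))  # TIMEOUT
```

The client never sees the server-side cause of the delay; from its point of view, a slow server and a lost response look the same, which is why server-side causes also show up as client-side timeouts.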

Ordered from most to least common, the main server-side causes are:

1- Server Update / Patch

2- Long Running Commands

3- Server High CPU Load

4- Server High Memory Usage

5- Redis Server Bandwidth Limit Exceeded

For client-side or network-side causes of Redis timeouts, see these Tech Community articles:

Azure Redis Timeouts – Client Side Issues

Azure Redis Timeouts – Network Issues

Whenever a Redis server update occurs, some Redis timeouts may occur on the client side.

This is expected and by design, as described below.

Standard and Premium tier caches in Azure Cache for Redis are made up of two Redis nodes, each running a single Redis server process on a dedicated VM.

One node is the “primary” and the other is the “replica”. The primary node replicates data to the replica node more or less continuously.

If the primary node goes down unexpectedly, the replica will promote itself to primary, typically within 10-15 seconds. When the old primary node comes back up, it will become a replica and replicate data from the current primary node.
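As a rough illustration of the primary/replica roles described above, the following toy Python model promotes the replica when the primary fails and brings the recovered node back as a replica that resyncs from the current primary. The class and method names are hypothetical and this is not the Azure implementation. It deliberately simplifies: replication here is an immediate full copy, whereas real Redis replication is asynchronous, and the 10-15 second promotion delay is not modeled.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Node:
    name: str
    role: str                                   # "primary" or "replica"
    up: bool = True
    data: Dict[str, str] = field(default_factory=dict)


class TwoNodeCache:
    """Toy model of a two-node (primary + replica) cache; names are hypothetical."""

    def __init__(self) -> None:
        self.nodes = [Node("node-0", "primary"), Node("node-1", "replica")]

    def _by_role(self, role: str) -> Node:
        return next(n for n in self.nodes if n.role == role)

    def primary(self) -> Node:
        return self._by_role("primary")

    def replica(self) -> Node:
        return self._by_role("replica")

    def write(self, key: str, value: str) -> None:
        self.primary().data[key] = value
        self._replicate()

    def _replicate(self) -> None:
        # Simplification: copy the full dataset immediately if the replica is up.
        replica = self.replica()
        if replica.up:
            replica.data = dict(self.primary().data)

    def primary_fails(self) -> None:
        old, new = self.primary(), self.replica()
        old.up = False
        old.role, new.role = "replica", "primary"  # the replica promotes itself

    def node_recovers(self, name: str) -> None:
        node = next(n for n in self.nodes if n.name == name)
        node.up = True        # the demoted node comes back as a replica...
        self._replicate()     # ...and resyncs from the current primary


cache = TwoNodeCache()
cache.write("k", "v")
cache.primary_fails()
print(cache.primary().name)   # node-1
cache.node_recovers("node-0")
print(cache.replica().name)   # node-0
```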

For an update/patch, the replica is proactively promoted to primary, and the client should be able to reconnect immediately without any significant delay.
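On the client side, riding out a switchover usually comes down to reconnecting and retrying. The helper below is a hypothetical, library-agnostic sketch of retry with exponential backoff around an operation that may raise a connection or timeout error; many Redis client libraries offer their own retry or reconnect settings, so check your library's documentation before rolling your own.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def call_with_retry(op: Callable[[], T], attempts: int = 5,
                    base_delay: float = 0.1, max_delay: float = 2.0,
                    retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError)) -> T:
    """Hypothetical helper: retry `op` with exponential backoff on transient errors."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return op()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error to the caller
            # Back off 0.1 s, 0.2 s, 0.4 s, ... capped at max_delay.
            time.sleep(min(max_delay, base_delay * 2 ** attempt))
    raise AssertionError("unreachable")


# Simulated operation that fails twice (e.g. during a switchover), then succeeds.
calls = {"n": 0}

def flaky_ping() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("primary switching over")
    return "PONG"

print(call_with_retry(flaky_ping, base_delay=0.01))  # PONG
```

Against a Basic tier cache, retries like these only help if the patching window is shorter than the total backoff, because there is no replica to take over.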

A Basic tier cache has only one node and no replica to take over during patching, so during an update/patch a Basic tier Redis instance goes down and is not accessible during the patching process.

This is one of the reasons why Basic caches are good for test/development environments but not recommended for production.

Redis updates:

On Azure Redis service, there are three types of updates:

- Redis updates/patches applied to the binaries used by the Redis service;