by Contributed | Dec 2, 2020 | Technology

This article is contributed. See the original author and article here.

Users configuring a management feature such as Vulnerability Assessment, Auditing, or Threat Protection for their Azure SQL Database/server occasionally find that the operation fails with HTTP status code 400 (Bad Request) and an error message like `{"code":"PrincipalNotFound","message":"Principal ***** does not exist in the directory ****."}`.

This error means that no Azure AD identity is assigned to your Azure SQL logical server. To resolve it, create an Azure AD identity and assign it to the server with the steps below.

- Open a new Cloud Shell window from the top-right corner of the Azure portal, or use PowerShell with the Az module to connect to your Azure subscription (for example, with `Connect-AzAccount`).

- Paste the PowerShell code below and run it. It creates a function, Assign-AzSQLidentity, for the current PowerShell session.

```powershell
Function Assign-AzSQLidentity
{
    Param
    (
        [parameter(Mandatory=$true)][string]$ResourceGroup,
        [parameter(Mandatory=$true)][string]$ServerName
    )

    "Checking if server identity exists..."

    # A system-assigned identity appears in Azure AD as a service principal named after the server
    if (Get-AzADServicePrincipal -DisplayName $ServerName)
    {
        "Server identity already exists"
        Get-AzADServicePrincipal -DisplayName $ServerName
    }
    else
    {
        "Server identity for server " + $ServerName + " does not exist"
        "Assigning identity to server " + $ServerName
        # Creates a system-assigned managed identity for the logical server
        Set-AzSqlServer -ResourceGroupName $ResourceGroup -ServerName $ServerName -AssignIdentity
    }
}
```

- Run the function in the same session. When prompted, provide the resource group name and the SQL server name.

```powershell
Assign-AzSQLidentity
```
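To confirm the assignment before retrying, you can read the identity back from the server. The following is a minimal sketch, assuming the Az.Sql module is loaded and you are already signed in. The helper name Get-AzSqlServerPrincipalId is hypothetical and not part of any module; it relies on the Identity property of the object that Get-AzSqlServer returns.

```powershell
# Hypothetical helper (not part of Az.Sql): returns the PrincipalId of the
# server's managed identity, or $null when no identity is assigned.
function Get-AzSqlServerPrincipalId
{
    Param
    (
        [parameter(Mandatory=$true)][string]$ResourceGroup,
        [parameter(Mandatory=$true)][string]$ServerName
    )

    $server = Get-AzSqlServer -ResourceGroupName $ResourceGroup -ServerName $ServerName

    # Identity stays empty until an identity is assigned (for example with -AssignIdentity)
    if ($server.Identity) { return $server.Identity.PrincipalId }
    return $null
}
```

For example, `Get-AzSqlServerPrincipalId -ResourceGroup "myResourceGroup" -ServerName "mysqlserver"` (placeholder names) should return a GUID once the identity exists.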

Once the identity is assigned, retry the management operation (configuring Auditing, VA, etc.). It should now succeed.

I hope this helps. Please let me know in the comments section if you have any feedback or questions.

Thank you @Yochanan Rachamim for the guidance.

by Contributed | Dec 2, 2020 | Technology

We are getting ready to update the SQL Server Replication Management Pack. This release brings support for older SQL Server versions (2012, 2014, and 2016) into the version-agnostic MP. Going forward, just like the SQL Server MP, the Replication MP will be version-agnostic and able to monitor all supported SQL Server versions. It is the last MP in the SQL Server MP family to move to a fully version-agnostic mode.

Please install and use this public preview and send us your feedback. We appreciate the time and effort you spend on these previews which make the final product so much better.

Microsoft System Center Management Pack (Community Technology Preview) for SQL Server Replication

What’s New

- Updated MP to support SQL Server 2012 through 2019

- Updated the “Replication Agents failed on the Distributor” unit monitor so that it also detects Log Reader and Queue Reader agents

- Added new property ‘DiskFreeSpace’ for “Publication Snapshot Available Space” unit monitor

- Removed the “One or more of the Replication Agents are retrying on the Distributor” monitor because it was not useful

- Updated display strings

Issues Fixed

- Fixed discovery issue on SQL Server 2019

- Fixed issue with the incorrect definition of ‘MachineName’ property of DB Engine in some discoveries and replication agent-state unit monitors

- Fixed issue with wrong property-bag key initialization on case-sensitive DB Engine instances in some unit monitors and performance rules

- Fixed issue with the critical state of “Replication Log Reader Agent State for the Distributor” and “Replication Queue Reader Agent State for Distributor” unit monitors

- Fixed wrong space calculation in “Publication Snapshot Available Space”

- Fixed duplication of securable detection for monitor “Subscriber Securables Configuration Status”

We are looking forward to your feedback.

by Contributed | Dec 2, 2020 | Azure, Microsoft, Technology

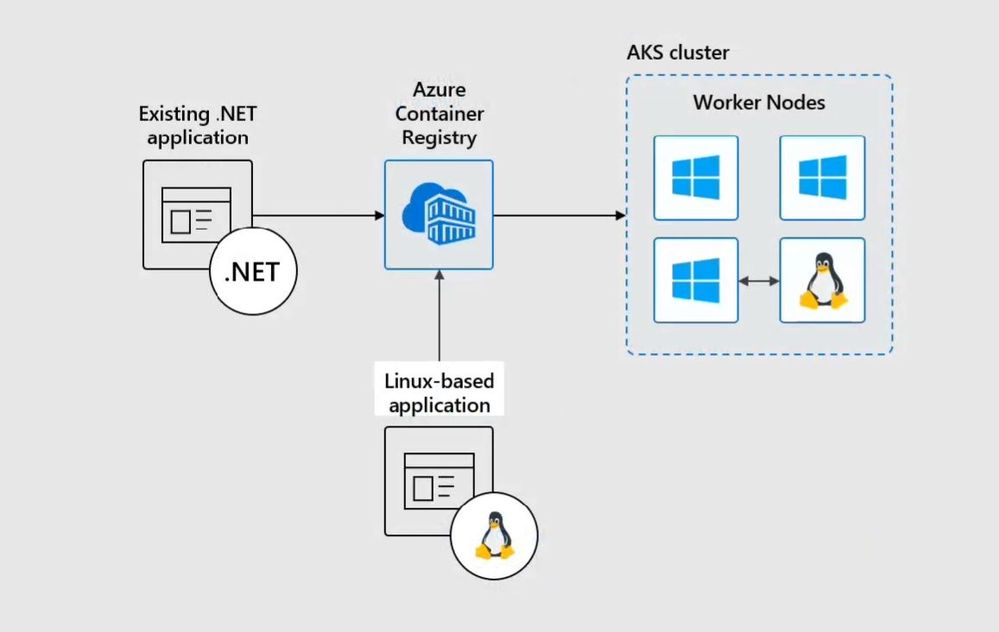

Today we are looking at how you can modernize Windows Server apps on Microsoft Azure using containers with Windows Admin Center and Azure Kubernetes Service (AKS). We will see how to create a new custom Docker container image using Windows Admin Center, push it to Azure Container Registry, and deploy it to our Azure Kubernetes Service cluster.

The video starts with a quick introduction to Windows and Hyper-V containers in general. After that, we use Windows Admin Center with the new container extension to manage our Windows Server container host and create a new Docker container image.

Windows Admin Center Container Extension and Windows Server Container Host

@Vinicius Apolinario and his team released a new version of the container extension for Windows Admin Center in November 2020, which makes it simple to create a new Windows Server container host. You can find more about the latest features here.

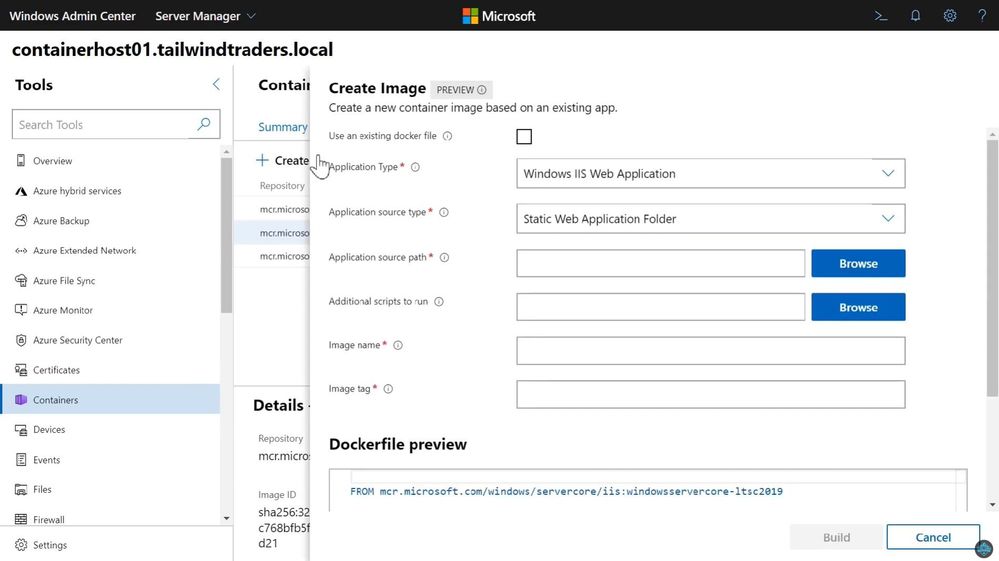

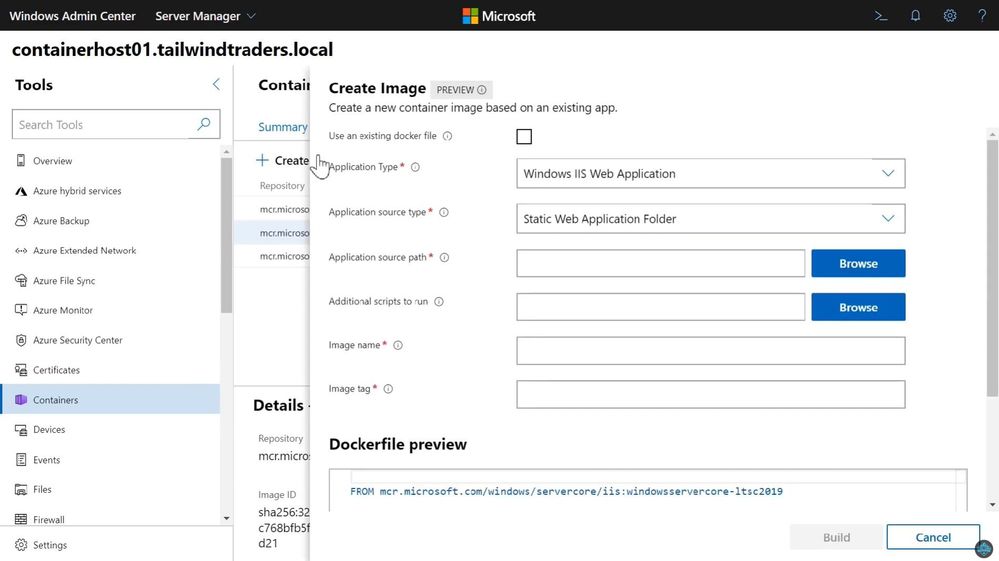

Create a new Docker Container Image using Windows Admin Center

You can use the Windows Admin Center container extension to create a new Docker container image. It helps you create the basic Dockerfile your container image needs.

Create a new Docker Container Image using Windows Admin Center

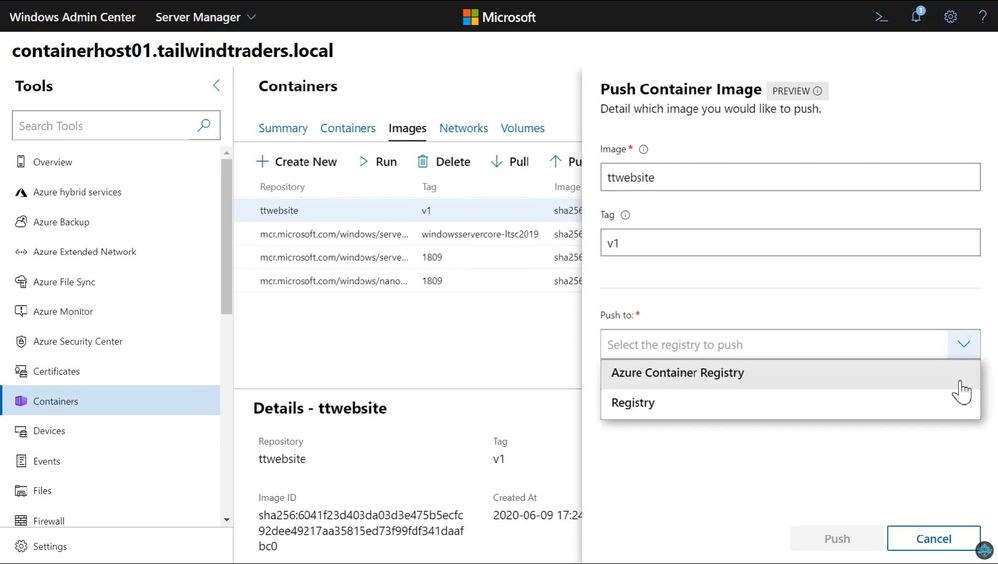
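The extension generates the Dockerfile for you. For reference, a basic Dockerfile for an IIS-hosted ASP.NET app might look like the one produced by the sketch below. This is an illustration under stated assumptions, not the extension's exact output: the helper New-BasicDockerfile is hypothetical, and the assumed base image (ASP.NET 4.8 on Windows Server Core ltsc2019) should be swapped for a tag that matches your container host's Windows version.

```powershell
# Hypothetical helper: returns the text of a minimal Dockerfile for an IIS-hosted ASP.NET app.
function New-BasicDockerfile {
    param(
        # Assumed base image; choose a tag compatible with your container host's Windows version
        [string]$BaseImage = "mcr.microsoft.com/dotnet/framework/aspnet:4.8-windowsservercore-ltsc2019",
        # Folder (relative to the build context) that holds the published site
        [string]$SourcePath = "."
    )
    # Copy the site into IIS's default web root inside the container
    @"
FROM $BaseImage
WORKDIR /inetpub/wwwroot
COPY $SourcePath .
"@
}
```

For example, `New-BasicDockerfile -SourcePath "./publish" | Set-Content -Path Dockerfile` followed by `docker build -t mywebapp:v1 .` on the container host builds a local image (the folder and image names are placeholders).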

Push Windows Container Image to Azure Container Registry

After you have created your custom container image, you can push it to a container registry. This can be Azure Container Registry (ACR) or any other container registry you want to use.

Push Container image to ACR

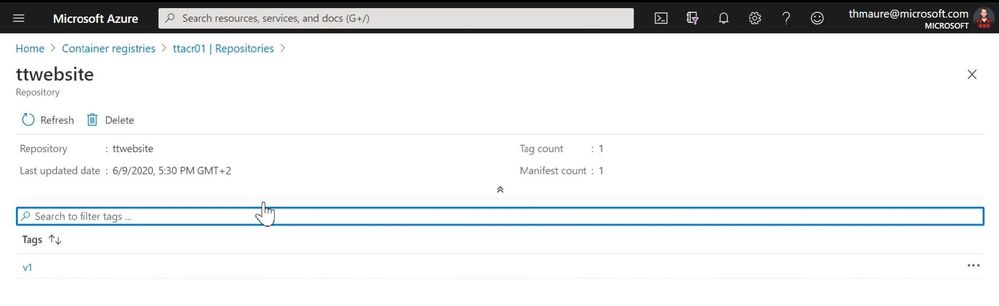
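Windows Admin Center handles the push from its UI. If you prefer the command line, the same step can be sketched in PowerShell as below, assuming the Azure CLI and Docker are installed on the container host and you have signed in with `az login`. The function names and the registry, repository, and tag values are placeholders, not part of the extension.

```powershell
# Hypothetical helper: builds the registry-qualified image reference.
function Get-AcrImageReference {
    param(
        [Parameter(Mandatory = $true)][string]$Registry,   # ACR name, e.g. "myregistry"
        [Parameter(Mandatory = $true)][string]$Repository, # e.g. "mywebapp"
        [string]$Tag = "v1"
    )
    # In the public Azure cloud, ACR login servers have the lowercase form <name>.azurecr.io
    "$($Registry.ToLower()).azurecr.io/${Repository}:$Tag"
}

# Hypothetical helper: tags a local image and pushes it to ACR.
function Push-ImageToAcr {
    param(
        [Parameter(Mandatory = $true)][string]$LocalImage, # e.g. "mywebapp:latest"
        [Parameter(Mandatory = $true)][string]$Registry,
        [Parameter(Mandatory = $true)][string]$Repository,
        [string]$Tag = "v1"
    )
    $target = Get-AcrImageReference -Registry $Registry -Repository $Repository -Tag $Tag
    az acr login --name $Registry   # Azure CLI: authenticates the local Docker client to the registry
    docker tag $LocalImage $target  # give the local image its registry-qualified name
    docker push $target             # upload the image layers to ACR
}
```

For example: `Push-ImageToAcr -LocalImage "mywebapp:latest" -Registry "myregistry" -Repository "mywebapp" -Tag "v1"`.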

You can now find your container image on your container registry.

Azure Container Registry ACR

You can now deploy your Windows Server container image to your Azure Kubernetes Service (AKS) cluster, to other container offerings on Azure, to AKS on Azure Stack HCI, to Azure Stack Hub, or to any other container platform that has access to the ACR.

Windows Server Containers on AKS
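To run the image on AKS, the cluster needs a Windows Server node pool (for example, one added with `az aks nodepool add --os-type Windows`) and permission to pull from the registry (for example, granted with `az aks update --attach-acr`). With those in place, a deployment can be sketched as below. The helper New-WindowsDeploymentManifest and all names are hypothetical. The important detail is the `kubernetes.io/os: windows` node selector, which keeps Windows pods from being scheduled onto Linux nodes.

```powershell
# Hypothetical helper: returns a Kubernetes Deployment manifest (YAML) that
# schedules a Windows container image onto Windows nodes only.
function New-WindowsDeploymentManifest {
    param(
        [Parameter(Mandatory = $true)][string]$Name,  # used for the Deployment, label, and container
        [Parameter(Mandatory = $true)][string]$Image, # e.g. "myregistry.azurecr.io/mywebapp:v1"
        [int]$Replicas = 1,
        [int]$Port = 80
    )
    # YAML lines start at column 0 so the here-string keeps the indentation YAML expects
    @"
apiVersion: apps/v1
kind: Deployment
metadata:
  name: $Name
spec:
  replicas: $Replicas
  selector:
    matchLabels:
      app: $Name
  template:
    metadata:
      labels:
        app: $Name
    spec:
      nodeSelector:
        kubernetes.io/os: windows
      containers:
      - name: $Name
        image: $Image
        ports:
        - containerPort: $Port
"@
}
```

For example, `New-WindowsDeploymentManifest -Name "mywebapp" -Image "myregistry.azurecr.io/mywebapp:v1" | kubectl apply -f -` applies the manifest to the cluster your current kubectl context points to.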

I hope this blog post helped show some of the tooling available to modernize Windows Server applications on Microsoft Azure using containers with Windows Admin Center and AKS! If you have any questions, feel free to leave a comment.

by Contributed | Dec 2, 2020 | Azure, Microsoft, Technology

All Around Azure is the amazing show you may already know for learning everything about Azure services and how they can be used with different technologies, operating systems, and devices. Now, the show is expanding! All Around Azure: Developers Guide to IoT is the next event in our Worldwide Online Learning Days event series and will focus on topics ranging from IoT device connectivity, IoT data communication strategies, use of artificial intelligence at the edge, data processing considerations for IoT data, and IoT solutioning based on the Azure IoT reference architecture.

Join us on a guided journey into IoT learning and certification options. We will be hosting 2.5 hours of live content and Q&A sessions in your local time zone, so you can get all your questions answered in real time by our speakers. No matter where you are and what time zone you are streaming from, we will see you on January 19th.

Internet of Things Event Learning Path

This event follows the Internet of Things Event Learning Path. A learning path is a carefully curated set of technical sessions that provide a view into a particular area of Azure and often help you prepare for a certification. Each session in a learning path is business-scenario focused, so you can see how the technology could be used in the real world. Each session is independent; however, viewing them as a series builds a clearer picture of when and how to use each technology.

The Internet of Things Event Learning Path is designed for Solution Architects, Business Decision Makers, and Development teams that are interested in building IoT solutions with Azure services. The content consists of five video-based modules covering topics ranging from IoT device connectivity, IoT data communication strategies, use of artificial intelligence at the edge, data processing considerations for IoT data, and IoT solutioning based on the Azure IoT reference architecture.

Each session includes a curated selection of associated modules from Microsoft Learn that can provide an interactive learning experience for the topics covered and may also contribute toward preparedness for the official AZ-220 IoT Developer Certification.

The video resources and presentation decks are open-source and can be found within the associated module’s folder in this repository.

Session 1: Connecting Your Physical Environment to a Digital World – A Roadmap to IoT Solutioning

With 80% of the world’s data collected in the last two years, it is estimated that there are currently 32 billion connected devices generating that data. Many organizations are looking to capitalize on this for automation or estimation and need a starting point to do so.

This session shares a real-world IoT adoption scenario and how the team went about incorporating Azure IoT services.

Session 2: Deciphering Data – Optimizing Data Communication to Maximize your ROI

Data collection by itself does not provide business value. IoT solutions must ingest, process, make decisions, and take actions to create value. This module focuses on data acquisition, data ingestion, and the data processing aspects of IoT solutions to maximize value from data.

As a device developer, you will learn about message types, approaches to serializing messages, the value of metadata and IoT Plug and Play to streamline data processing on the edge or in the cloud.

As a solution architect, you will learn about approaches to stream processing on the edge or in the cloud with Azure Stream Analytics, selecting the right storage based on the volume and value of data to balance performance and costs, as well as an introduction to IoT reporting with Power BI.

Check out more on Learn!

Session 3: Adding Intelligence – Unlocking New Insights with AI & ML

For many scenarios, the cloud is used as a way to process data and apply business logic with nearly limitless scale. However, processing data in the cloud is not always the optimal way to run computational workloads: either because of connectivity issues, legal concerns, or because you need to respond in near-real time with processing at the Edge.

In this session we dive into how Azure IoT Edge can help in this scenario. We will train a machine learning model in the cloud using the Microsoft AI Platform and deploy this model to an IoT Edge device using Azure IoT Hub.

At the end, you will understand how to develop and deploy AI & Machine Learning workloads at the Edge. Check out more on Learn!

Session 4: Big Data 2.0 as your New Operational Data Source

A large part of the value provided by IoT deployments comes from data. However, getting this data into the existing data landscape is often overlooked. In this session, we will start by introducing the existing Big Data solutions that can be part of your data landscape.

We will then look at how you can easily ingest IoT data into traditional BI systems like data warehouses or into Big Data stores like data lakes. Once the data is ingested, we see how your data analysts can gain new insights on your existing data by augmenting your Power BI reports with IoT data. Looking back at historical data from a new angle is a common scenario. Finally, we’ll see how to run real-time analytics on IoT data to power real-time dashboards or take actions with Azure Stream Analytics and Logic Apps. By the end of the presentation, you’ll understand all the related data components of the IoT reference architecture. Check out more on Learn!

Session 5: Get to Solutioning – Strategy & Best Practices when Mapping Designs from Edge to Cloud

by Contributed | Dec 2, 2020 | Technology

Project for the web comes with some great out-of-the-box tools for keeping your team on track. App features like orange and red highlights for late and overdue tasks give you visual cues for quickly finding tasks that need attention. There’s also Power BI reporting, which gives you visually rich report pages for your portfolio, resource, and project overviews.

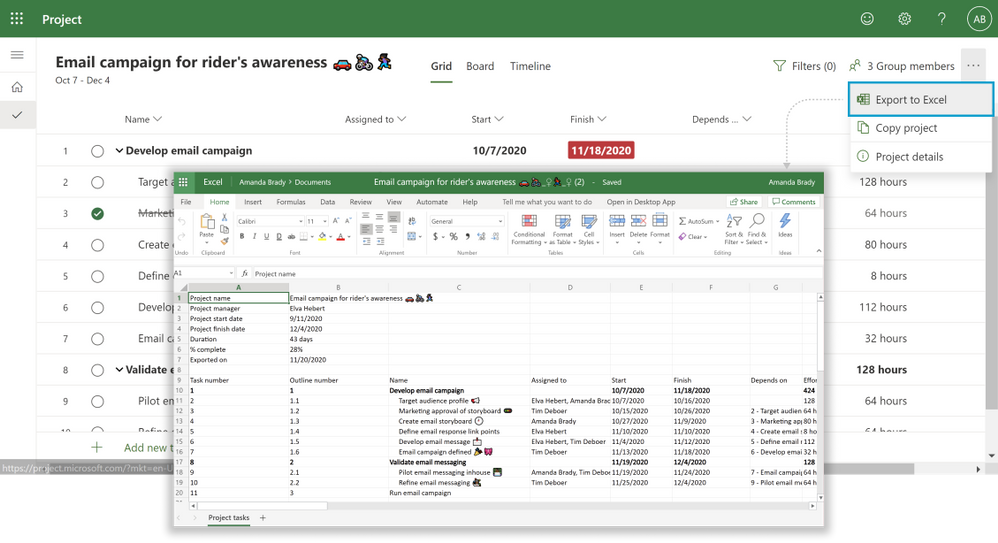

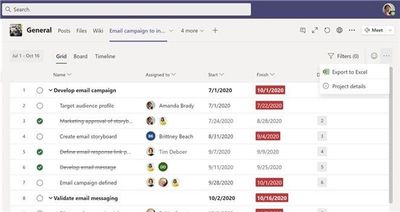

And now we have added Export to Excel, one of the top requests we have received over the past months. You can now export your project to Microsoft Excel, where you can customize the data in whatever way your team finds most useful.

To export a project, simply click on the overflow menu at the top right corner and then click the Export to Excel button.

This feature is also available in the Project app in Microsoft Teams.

When to export a project to Excel

There are many scenarios for Export to Excel. Here are some to help you get started:

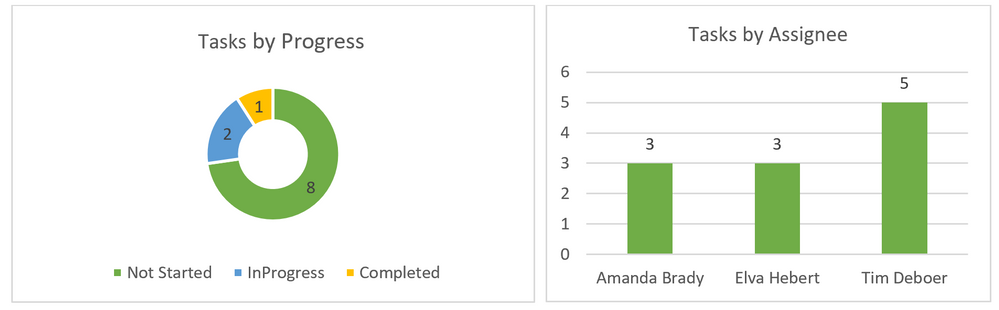

- For reporting: You can now use the data you export from Project to create charts, pivot tables, and more in Excel. You can quickly sort tasks by their finish dates or filter the data to find completed tasks. You can then share this information with your team or drop it into presentations. Some snapshots of charts created in Excel are shown above.

- For sharing: Use Export to Excel to share your project status with guest users, vendors, or clients who do not have access to Project. Easily delete information you do not wish to share, for example to provide a high-level executive summary without unnecessary details.

- For archiving: Archive your completed projects in Excel to quickly share them for compliance purposes and maintain an external record of your work.

- For printing: Print your project from Excel in a format that is easier for senior management to consume.

These are just a few of our ideas for how Export to Excel can help you get more out of Project, but we want to hear how you are using it! Leave us a comment below about your experiences so far. Please share your ideas for future integration capabilities or enhancements to current ones, such as the ability to import items from Excel back into Project, through our UserVoice site.
