by Contributed | Nov 17, 2020 | Technology

This article is contributed. See the original author and article here.

Migrating, intact, from one platform to another is no easy task – unless you work with a trusted partner who knows how to get you from here to there, and who doesn’t treat the move as the end of the journey.

The Intrazone continues to spotlight Microsoft partners, the people and companies who deliver solutions and services to empower our customers to achieve more. In our 8th partner episode, we talk with Tewabe “Joro” Ayenew (Microsoft 365 migrations Executive | Verinon) and his customer, Gary Moss (Director, Application Services Assurance | Stanley Black & Decker).

In this episode, we dig into the breadth of SharePoint at Stanley Black & Decker over the last 15 years. We then look at one of their recent successes: moving 9TB of content from OpenText Documentum eRoom to SharePoint in Microsoft 365. The result is updated and advanced productivity for the 9,400 users of this content, which is now more closely aligned with much of their active content.

And… on with the show.

https://html5-player.libsyn.com/embed/episode/id/16811675/height/90/theme/custom/thumbnail/yes/direction/backward/render-playlist/no/custom-color/247bc1/

Subscribe to The Intrazone podcast! Listen to this partner episode on Verinon now + show links and more below.

![IZ-P008_Joro-and-Gary.jpg Left-to-right: Tewabe “Joro” Ayenew (Microsoft 365 migrations Executive | Verinon) and Gary Moss (Director, Application Services Assurance | Stanley Black & Decker). [The Intrazone guests]](https://techcommunity.microsoft.com/t5/image/serverpage/image-id/233973i09064276AAB55246/image-size/large?v=1.0&px=999)

Left-to-right: Tewabe “Joro” Ayenew (Microsoft 365 migrations Executive | Verinon) and Gary Moss (Director, Application Services Assurance | Stanley Black & Decker). [The Intrazone guests]

Links to articles mentioned in the show:

- Hosts and guests

- Articles and sites

- Events

Subscribe today!

Listen to the show! If you like what you hear, we’d love for you to Subscribe, Rate and Review it on iTunes or wherever you get your podcasts.

Be sure to visit our show page to hear all the episodes, access the show notes, and get bonus content. And stay connected to the SharePoint community blog, where we’ll share more information per episode, guest insights, and take any questions from our listeners and SharePoint users (TheIntrazone@microsoft.com). We also welcome your ideas for future episode topics and segments. Keep the discussion going in the comments below; we’re here to listen and grow.

Subscribe to The Intrazone podcast! And listen to this partner episode on Verinon now.

Thanks for listening!

The SharePoint team wants you to unleash your creativity and productivity. And we will do this, together, one partner at a time.

The Intrazone links

![Chris-Mark_in-studio.jpg Left to right [The Intrazone co-hosts]: Chris McNulty, director (SharePoint, #ProjectCortex – Microsoft) and Mark Kashman, senior product manager (SharePoint – Microsoft).](https://www.drware.com/wp-content/uploads/2020/11/large-375)

Left to right [The Intrazone co-hosts]: Chris McNulty, director (SharePoint, #ProjectCortex – Microsoft) and Mark Kashman, senior product manager (SharePoint – Microsoft).

The Intrazone, a show about the Microsoft 365 intelligent intranet (aka.ms/TheIntrazone)


by Contributed | Nov 17, 2020 | Technology


In this series on DevOps for Data Science, I’m covering the Team Data Science Process, a definition of DevOps, and why Data Scientists need to think about DevOps. It’s interesting that most definitions of DevOps deal more with what it isn’t than what it is. Mine, as you recall, is quite simple:

DevOps is including all parties involved in getting an application deployed and maintained to think about all the phases that follow and precede their part of the solution

Now, to do that, there are defined processes, technologies, and professionals involved – something DevOps calls “People, Process and Products”. And there are a LOT of products to choose from, for each phase of the software development life-cycle (SDLC).

But some folks tend to focus too much on the technologies – referred to as the DevOps “Toolchain”. Understanding each of these technologies is indeed useful, although there are a LOT of them out there from software vendors (including Microsoft) and of course the playing field for Open Source Software (OSS) is at least as large, and contains multiple forks.

While knowing a set of technologies is important, it’s not the primary issue. I tend to focus on what I need to do first, then on how I might accomplish it. I let the problem select the technology, and then I go off and learn that as well as I need to so that I can get my work done. I try not to get too focused on a given technology stack – I grab what I need, whether that’s Microsoft or OSS. I choose the requirements and constraints for my solution, and pick the best fit. Sometimes one of those constraints is that everything needs to work together well, so I may stay in a “family” of technologies for a given area. In any case, it’s the problem that we are trying to solve, not the choice of tech.

That being said, knowing the tech is a very good thing. It will help you “shift left” as you work through the process – even as a Data Scientist. It wouldn’t be a bad idea to work through some of these technologies to learn the process.

I have a handy learning plan you can use to start with here: https://github.com/BuckWoody/LearningPaths/blob/master/IT%20Architect/Learning%20Path%20-%20Devops%20for%20Data%20Science.md. (Careful – working through all the references I have here could take a while – but it’s a good list). There are some other more compact references at the end of this article.

See you in the next installment on the DevOps for Data Science series.

For Data Science, I find this progression works best – taking these one step at a time, and building on the previous step – the entire series is here:

- Infrastructure as Code (IaC)

- Continuous Integration (CI) and Automated Testing

- Continuous Delivery (CD)

- Release Management (RM)

- Application Performance Monitoring

- Load Testing and Auto-Scale

In the articles that follow in this series, I’ll help you implement each of these in turn.

(If you’d like to implement DevOps, Microsoft has a site to assist. You can even get a free offering for Open-Source and other projects: https://azure.microsoft.com/en-us/pricing/details/devops/azure-devops-services/)

by Contributed | Nov 17, 2020 | Azure, Microsoft, Technology


Follow the steps described in Publish a bot to Azure – Bot Composer | Microsoft Docs. However, to publish an Azure Bot Framework Composer bot to an existing Resource Group, follow the steps below.

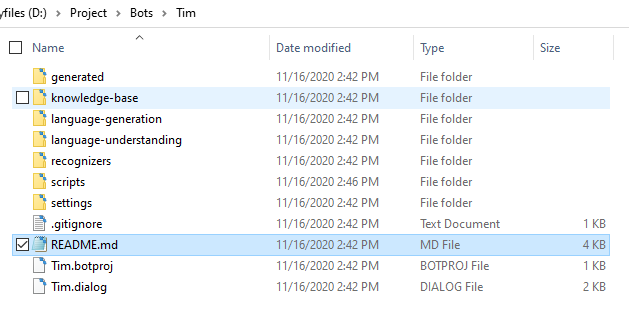

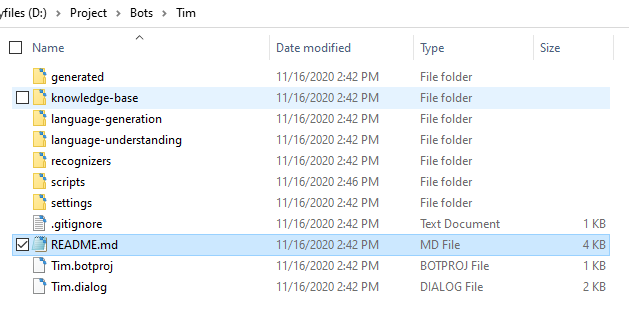

You can find the provisioning steps in the readme.md file which is automatically created when you create a new Bot in Bot Composer.

By default, the provisioning script currently creates a new Resource Group, appending the environment value to its name, and does not deploy the services to an existing Resource Group.

Find the line

    const resourceGroupName = `${name}-${environment}`;

and change it to

    const resourceGroupName = `${name}`;

in the file provisionComposer.js, then save. With this simple change, the script no longer appends the given environment value to the Resource Group name.
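To make the effect of that one-line change concrete, here is a minimal sketch of the two naming behaviours side by side. The helper `resourceGroupNameFor` and its `appendEnvironment` flag are hypothetical and exist only for illustration; they are not part of provisionComposer.js, and the sample names are made up.

```javascript
// Hypothetical helper (not in provisionComposer.js): reproduces the two
// naming expressions discussed above so they can be compared directly.
function resourceGroupNameFor(name, environment, appendEnvironment) {
  // Default script behaviour: append the environment to the name.
  // Modified behaviour: use the name exactly as given.
  return appendEnvironment ? `${name}-${environment}` : `${name}`;
}

// Sample values are assumptions for illustration only.
console.log(resourceGroupNameFor("my-bot-rg", "dev", true));  // my-bot-rg-dev
console.log(resourceGroupNameFor("my-bot-rg", "dev", false)); // my-bot-rg
```

With the suffix removed, the name you pass to the script is the name that reaches the resource group call, so it can match a Resource Group that already exists.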

Why does this work?

In the provisionComposer.js file, the code

    const createResourceGroup = async (client, location, resourceGroupName) => {
      logger({
        status: BotProjectDeployLoggerType.PROVISION_INFO,
        message: "> Creating resource group ...",
      });
      const param = {
        location: location,
      };
      return await client.resourceGroups.createOrUpdate(resourceGroupName, param);
    };

uses the function client.resourceGroups.createOrUpdate. This function checks whether the Resource Group exists: if it does, the existing Resource Group is used; if not, a new one is created. Provide the same location as the existing Resource Group, because Azure does not let you change a Resource Group’s location once it has been created.

by Contributed | Nov 17, 2020 | Azure, Microsoft, Technology


Today, Azure is proud to take the next step toward our commitment to enabling customers to harness the power of AI (Artificial Intelligence) at scale. For AI, the bar for innovation has never been higher with hardware requirements for training models far outpacing Moore’s Law. Technology leaders across industries are discovering new ways to apply the power of machine learning, accelerated analytics and AI to make sense of unstructured data. The natural language models of today are exponentially larger than the largest models of four short years ago.

OpenAI’s GPT-3 model, for instance, has three orders of magnitude more parameters than the ResNet-50 image classification model that was at the forefront of AI in the mid-2010s. These kinds of demanding workloads required the development of a new class of system within Microsoft Azure, from the ground-up using the latest hardware innovations.

The Azure team has built on our experience virtualizing the latest GPU technology, and building the public cloud industry’s leading InfiniBand-enabled HPC virtual machines, to offer something totally new for AI in the cloud. Each deployment of an ND A100 v4 cluster rivals the largest AI supercomputers in the industry in terms of raw scale and advanced technology. These VMs enjoy the same unprecedented 1.6 Tb/s of total dedicated InfiniBand bandwidth per VM, plus AMD Rome-powered compute cores behind every NVIDIA A100 GPU, as used by the most powerful dedicated on-premises HPC systems. Azure adds massive scale, elasticity, and versatility of deployment, as expected by Microsoft’s customers and internal AI engineering teams.

This unparalleled scale and capability of interconnect in a cloud offering, with each GPU directly paired with a high-throughput low-latency InfiniBand interface, offers our customers a unique dimension of scaling on demand without managing their own datacenters.

Today, at SC20, we’re announcing the public preview of the ND A100 v4 VM family, available from one virtual machine to world-class supercomputer scale, with each individual VM featuring:

- Eight of the latest NVIDIA A100 Tensor Core GPUs with 40 GB of HBM2 memory, offering a typical per-GPU performance improvement of 1.7x – 3.2x compared to V100 GPUs (or up to 20x by layering features like new mixed-precision modes, sparsity, and MIG) for significantly lower total cost of training with improved time-to-solution

- VM system-level GPU interconnect based on NVLink 3.0 + NVSwitch

- One 200 Gigabit InfiniBand HDR link per GPU with full NCCL2 support and GPUDirect RDMA for 1.6 Tb/s per virtual machine

- 40 Gb/s front-end Azure networking

- 6.4 TB of local NVMe storage

- InfiniBand-connected job sizes in the thousands of GPUs, featuring any-to-any and all-to-all communication without requiring topology aware scheduling

- 96 physical AMD Rome vCPU cores with 900 GB of DDR4 RAM

- PCIe Gen 4 for the fastest possible connections between GPU, network and host CPUs, with up to twice the I/O performance of PCIe Gen 3-based platforms
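The 1.6 Tb/s per-VM InfiniBand figure in the list follows directly from the per-GPU link rate. As a quick sanity check, the sketch below multiplies the two numbers taken from the list (eight GPUs, one 200 Gb/s HDR link each); the function name `totalInfinibandTbps` is an assumption introduced for illustration.

```javascript
// Figures taken from the specification list above.
const gpusPerVm = 8;        // NVIDIA A100 GPUs per ND A100 v4 VM
const linkGbpsPerGpu = 200; // one InfiniBand HDR link per GPU, in Gb/s

// Hypothetical helper: aggregate InfiniBand bandwidth per VM in Tb/s.
function totalInfinibandTbps(gpus, gbpsPerLink) {
  return (gpus * gbpsPerLink) / 1000; // convert Gb/s to Tb/s
}

console.log(totalInfinibandTbps(gpusPerVm, linkGbpsPerGpu)); // 1.6
```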

Like other Azure GPU virtual machines, ND A100 v4 is also available with Azure Machine Learning (AML) service for interactive AI development, distributed training, batch inferencing, and automation with ML Ops. Customers can choose to deploy through AML or traditional VM Scale Sets, and soon many other Azure-native deployment options such as Azure Kubernetes Service. With all of these, optimized configuration of the systems and InfiniBand backend network is taken care of automatically.

Azure Machine Learning provides a tuned virtual machine (pre-installed with the required drivers and libraries) and container-based environments optimized for the ND A100 v4 family. Sample recipes and Jupyter Notebooks help users get started quickly with multiple frameworks including PyTorch, TensorFlow, and training state of the art models like BERT. With Azure Machine Learning, customers have access to the same tools and capabilities in Azure as our AI engineering teams.

Accelerate your innovation and unlock your AI potential with the ND A100 v4.

Preview sign-up is open. Request access now.

Additional Links

by Contributed | Nov 17, 2020 | Azure, Microsoft, Technology


Organizations are leveraging artificial intelligence (AI) and machine learning (ML) to derive insight and value from their data and to improve the accuracy of forecasts and predictions. In rapidly changing environments, Azure Databricks enables organizations to spot new trends, respond to unexpected challenges and predict new opportunities. Data teams are using Delta Lake to accelerate ETL pipelines and MLflow to establish a consistent ML lifecycle.

Solving the complexity of ML frameworks, libraries and packages

Customers frequently struggle to manage all of the libraries and frameworks for machine learning on a single laptop or workstation. There are so many libraries and frameworks to keep in sync (H2O, PyTorch, scikit-learn, MLlib). In addition, you often need to bring in other Python packages, such as Pandas, Matplotlib, numpy and many others. Mixing and matching versions and dependencies between these libraries can be incredibly challenging.

Figure 1. Databricks Runtime for ML enables ready-to-use clusters with built-in ML Frameworks

With Azure Databricks, these frameworks and libraries are packaged so that you can select the versions you need as a single dropdown. We call this the Databricks Runtime. Within this runtime, we also have a specialized runtime for machine learning which we call the Databricks Runtime for Machine Learning (ML Runtime). All these packages are pre-configured and installed so you don’t have to worry about how to combine them all together. Azure Databricks updates these every 6-8 weeks, so you can simply choose a version and get started right away.

Establishing a consistent ML lifecycle with MLflow

The goal of machine learning is to optimize a metric such as forecast accuracy. Machine learning algorithms are run on training data to produce models. These models can be used to make predictions as new data arrive. The quality of each model depends on the input data and tuning parameters. Creating an accurate model is an iterative process of experiments with various libraries, algorithms, data sets and models. The MLflow open source project started about two years ago to manage each phase of the model management lifecycle, from input through hyperparameter tuning. MLflow recently joined the Linux Foundation. Community support has been tremendous, with 250 contributors, including large companies. In June, MLflow surpassed 2.5 million monthly downloads.

Diagram: MLflow unifies data scientists and data engineers

Ease of infrastructure management

Data scientists want to focus on their models, not infrastructure. With Azure Databricks, you don’t have to manage dependencies and versions, and the platform scales to meet your needs. As your data science team begins to process bigger data sets, you don’t have to do capacity planning or requisition more hardware. It’s also easy to onboard new team members and grant them access to the data, tools, frameworks, libraries and clusters they need.

Alignment Healthcare

Alignment Healthcare, a rapidly growing Medicare insurance provider, serves one of the most at-risk groups of the COVID-19 crisis—seniors. While many health plans rely on outdated information and siloed data systems, Alignment processes a wide variety and large volume of near real-time data into a unified architecture to build a revolutionary digital patient ID and comprehensive patient profile by leveraging Azure Databricks. This architecture powers more than 100 AI models designed to effectively manage the health of large populations, engage consumers, and identify vulnerable individuals needing personalized attention—with a goal of improving members’ well-being and saving lives.

Building your first machine learning model with Azure Databricks
