by Scott Muniz | Jul 29, 2020 | Uncategorized

This article is contributed. See the original author and article here.

Abstract

With the Azure Machine Learning service, hundreds of thousands of models can be trained and scored efficiently on large amounts of data by using pipelines in which steps such as model training and model scoring run in parallel on large scale-out compute clusters. To help organizations get a head start on building such pipelines, the Many Models Solution Accelerator has been created. It provides two primary examples, one using custom machine learning and the other using AutoML. Give it a try today!

Executive Overview

Many executives are looking to machine learning to improve their business. As the world becomes more digital, data is being generated faster than ever. Companies are also purchasing third-party datasets to combine with internal data to gain further insight and make better predictions, and sophisticated machine learning models are being built to leverage this large pool of data. Further, as companies expand into a variety of markets and environments, general machine learning models no longer suffice; more specific machine learning models are needed. Here, “general” and “specific” refer to the granularity at which a model is built: a demand forecast model for a product at the country level is a “general machine learning model,” whereas one at the city level is a “specific machine learning model.” Building more specific models can easily result in hundreds of thousands of specific models instead of a handful of general ones. Combining large datasets with the need to build hundreds of thousands of models is not a trivial task and requires very large compute power. The task benefits greatly from parallel compute, where multiple compute instances work simultaneously to build the models. Once the models are trained, using them to score large amounts of data presents the same problem, and again a compute cluster in which multiple compute instances make predictions simultaneously can greatly reduce the time required.

With the Azure Machine Learning service, this training and scoring can be completed efficiently by using pipelines in which steps such as model training and model scoring run in parallel on large scale-out compute clusters. The Many Models Solution Accelerator was created to help organizations get a head start on building such pipelines. It provides two primary examples, one using custom machine learning and the other using AutoML.

In Azure Machine Learning, AutoML automates the building of the most common categories of machine learning models in a robust and sophisticated manner. For example, a very common machine learning problem is demand forecasting. A more accurate demand forecast can increase revenues and reduce waste. Traditionally, many statistical methods have been used for this. More modern techniques, however, leverage machine learning, including deep learning, to provide a more accurate demand forecast. The forecast can be improved further by moving from a broader scope (general machine learning model) to a more granular scope (specific machine learning model). This means, for example, building a forecast for each product at the city level instead of one forecast for each product at the country level. Moving to more specific models results in building hundreds of thousands of forecasts using large amounts of data, which, as discussed above, can be handled using the Many Models Solution Accelerator.

Technical Overview

As data scientists move from building a handful of general machine learning models to hundreds of thousands of more specific machine learning models (e.g. by geography or product scope), the model training and model scoring tasks require parallel compute power to finish in a timely manner. In the Azure Machine Learning service SDK, this is accomplished using Pipelines and specifically a ParallelRunStep, which runs on a multi-node compute cluster. The data scientist provides the ParallelRunStep with a custom script, an input dataset, a compute cluster, and the degree of parallelism they would like. This concept can be applied to a custom Python script and to Automated Machine Learning (AutoML).
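To make the idea concrete, here is a minimal local sketch of the same fan-out pattern. It is not the Azure ML API: a list of per-group inputs is cut into mini-batches and a pool of workers processes them concurrently. The names `make_mini_batches`, `train_group`, `run_mini_batch`, and `worker_count` are hypothetical, and a thread pool merely stands in for the nodes and processes of a compute cluster.

```python
from concurrent.futures import ThreadPoolExecutor


def make_mini_batches(items, mini_batch_size):
    """Cut a list of inputs into consecutive mini-batches."""
    return [items[i:i + mini_batch_size]
            for i in range(0, len(items), mini_batch_size)]


def train_group(group_name):
    """Stand-in for training one model; returns a label for the group."""
    return f"model_for_{group_name}"


def run_mini_batch(mini_batch):
    """Process every group in one mini-batch, as a single worker would."""
    return [train_group(name) for name in mini_batch]


def train_all(groups, mini_batch_size, worker_count):
    """Fan the mini-batches out across workers and flatten the results."""
    batches = make_mini_batches(groups, mini_batch_size)
    with ThreadPoolExecutor(max_workers=worker_count) as pool:
        results = pool.map(run_mini_batch, batches)  # keeps batch order
        return [model for batch in results for model in batch]


# Six made-up groups, two per mini-batch, three concurrent workers.
groups = [f"Store{s}_Brand{b}" for s in range(1, 4) for b in range(1, 3)]
models = train_all(groups, mini_batch_size=2, worker_count=3)
```

Raising `worker_count` plays the role of adding nodes or processes per node, while `mini_batch_size` controls how much work each call receives.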

Automated Machine Learning (AutoML) uses over ten algorithms (including deep learning algorithms) with varying hyperparameters to build classification, regression, and forecasting models. Pipelines automate the invocation of AutoML across multiple nodes using ParallelRunStep, both to train the models in parallel and to batch score new data. Pipelines can be scheduled to run within Azure Machine Learning or invoked through their REST endpoint from various Azure services (e.g. Azure Data Factory, Azure DevOps, Azure Functions, Azure Logic Apps). When invoked, the parallel pipelines run on compute clusters within Azure Machine Learning, which can be scaled up and out to perform the training and scoring. Each node in a compute cluster can have terabytes of RAM, over 100 cores, and multiple GPUs. Finally, the scored data can be stored in a datastore in Azure, such as Azure Data Lake Storage Gen2, and then copied to a specific location for an application to consume the results.

In order to provide a jump start in leveraging Pipelines with the new ParallelRunStep, the Many Models Solution Accelerator has been created. This solution accelerator showcases both a custom python script and an AutoML script.

Major Components

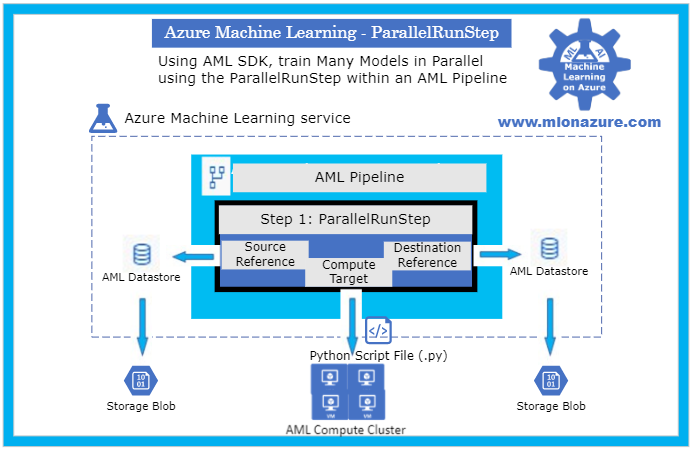

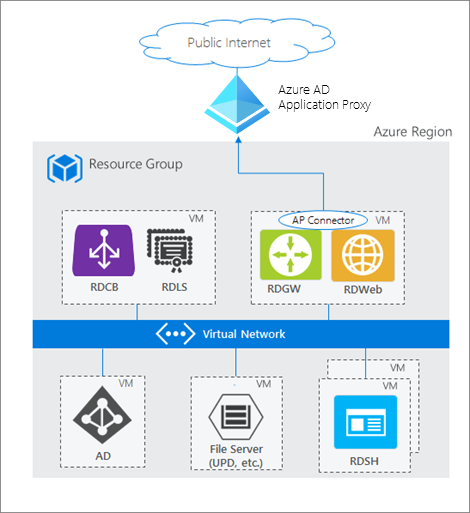

The main components of the Many Models Solution Accelerator include an Azure Machine Learning Workspace, a Pipeline, a ParallelRunStep, a Compute Target, a Datastore, and a Python script file, as depicted in Figure 1.

Figure 1. The architecture of a Pipeline with a ParallelRunStep

For an overview of getting started with the Azure Machine Learning service, please see the blog article MLonAzure: Getting Started with Azure Machine Learning service for the Data Scientist.

For an overview of Pipelines, please see the blog article MLonAzure: Azure Machine Learning service Pipelines.

Major Steps

1. Prerequisites

2. Data Prep

- Data needs to be split into multiple files (.csv or .parquet), one for each group that a model is to be created for. Each file must contain one or more entire time series for the given group.

- The data must be placed in Azure Storage (e.g. ADL Gen 2, Blob Storage). The storage will then be registered as a Datastore from which two FileDatasets will be registered, one pointing to the folder containing the training data and the other to the folder containing the data to be scored.

- For example, to build a forecast model for each brand within a store, the training sales data would be split to create files StoreXXXX_BrandXXXX.
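The splitting step described above can be sketched with the standard library alone. This is an illustration under assumptions, not the accelerator's own data prep code: the `split_by_group` helper, the file naming, and the sample rows are hypothetical, and the column names are borrowed from the AutoML settings shown later in this article.

```python
import csv
import os
import tempfile
from collections import defaultdict


def split_by_group(source_csv, output_dir, group_columns):
    """Write one CSV per unique combination of group_columns.

    Returns the list of file paths written, sorted by name.
    """
    rows_by_group = defaultdict(list)
    with open(source_csv, newline="") as handle:
        reader = csv.DictReader(handle)
        fieldnames = reader.fieldnames
        for row in reader:
            key = tuple(row[col] for col in group_columns)
            rows_by_group[key].append(row)

    os.makedirs(output_dir, exist_ok=True)
    written = []
    for key, rows in rows_by_group.items():
        # e.g. ("1000", "A") becomes Store1000_BrandA.csv
        name = "_".join(f"{col}{val}" for col, val in zip(group_columns, key))
        path = os.path.join(output_dir, name + ".csv")
        with open(path, "w", newline="") as handle:
            writer = csv.DictWriter(handle, fieldnames=fieldnames)
            writer.writeheader()
            writer.writerows(rows)
        written.append(path)
    return sorted(written)


# Tiny demonstration with made-up sales rows.
work_dir = tempfile.mkdtemp()
source = os.path.join(work_dir, "sales.csv")
with open(source, "w", newline="") as handle:
    handle.write("WeekStarting,Store,Brand,Quantity\n"
                 "2020-01-06,1000,A,12\n"
                 "2020-01-06,1000,B,7\n"
                 "2020-01-13,1000,A,15\n")
files = split_by_group(source, os.path.join(work_dir, "train"), ["Store", "Brand"])
```

Because every row of a given store and brand lands in the same file, each file holds a whole time series, which is the property the training step relies on.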

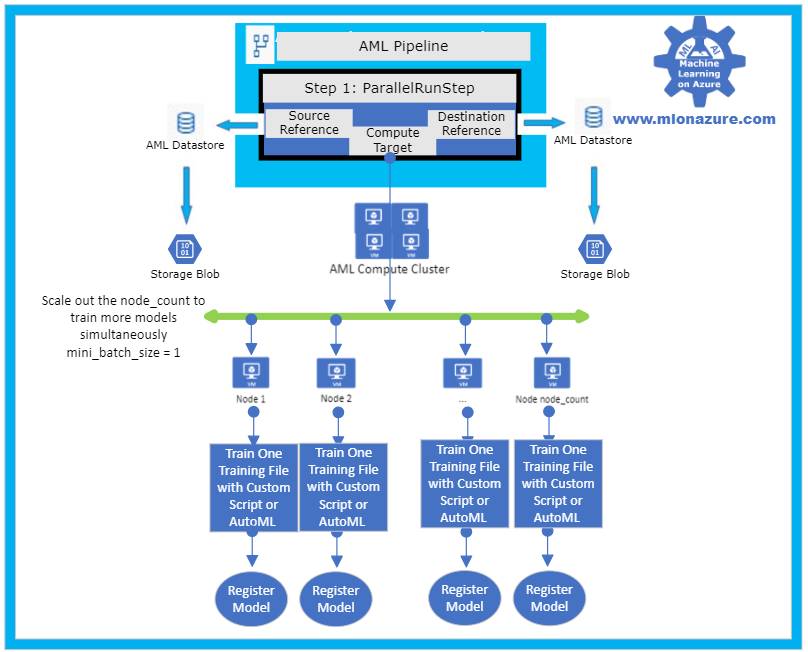

3. Model Training

The solution accelerator showcases model training with a custom python script and with AutoML which are orchestrated using a Pipeline. Please see solution-accelerator-manymodels-customscript and solution-accelerator-manymodels-AutoML. Putting it all together results in the architecture depicted in Figure 2, below.

Figure 2: Solution Accelerator Model Training

3. a. Pipeline

The solution accelerator leverages the Pipeline object to train the models. Specifically, a ParallelRunStep is used, which requires a configuration object, ParallelRunConfig.

ParallelRunConfig has many parameters; below are the typical ones used for the Many Models Solution Accelerator. For a complete list of ParallelRunConfig parameters, please see the ParallelRunConfig Class.

| Parameter | Explanation |
| --- | --- |
| environment | Provides the configuration for the Python environment. |
| entry_script | The Python file (.py extension only) that will run in parallel. Note that the Many Models Solution Accelerator contains one custom entry_script that leverages AutoML and one that leverages custom code. |
| compute_target | The AML compute cluster to run the step on. |
| node_count | The number of nodes to use within the training cluster. Increase this number to increase parallelism. |
| process_count_per_node | The number of worker processes started on each node; on CPU nodes this usually corresponds to the number of cores used. |
| mini_batch_size | For FileDatasets, the number of files processed at a time; for TabularDatasets, the approximate size of data processed at a time. |
| run_invocation_timeout | The time allowed, in seconds, for each invocation of the entry script's run() method. |
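The entry script that ParallelRunStep calls is expected to expose an `init()` function, run once per worker process, and a `run(mini_batch)` function, called once per mini-batch; with a FileDataset input the mini-batch is a list of file paths. The sketch below follows that contract but is not the accelerator's actual script: the "model" is simply the mean of the `Quantity` column, and the `train_one_file` helper and `model_store` variable are hypothetical.

```python
import csv
import os
import tempfile

model_store = None  # populated by init(); holds trained "models" in memory


def init():
    """Called once per worker process before any mini-batch arrives."""
    global model_store
    model_store = {}


def train_one_file(path):
    """Hypothetical trainer: the 'model' is the mean of the Quantity column."""
    with open(path, newline="") as handle:
        quantities = [float(row["Quantity"]) for row in csv.DictReader(handle)]
    return sum(quantities) / len(quantities)


def run(mini_batch):
    """Called once per mini-batch; returns one result line per input file."""
    results = []
    for path in mini_batch:
        group = os.path.splitext(os.path.basename(path))[0]
        model_store[group] = train_one_file(path)
        results.append(f"{group},{model_store[group]:.2f}")
    return results


# Local smoke test with one made-up file standing in for a mini-batch.
demo_dir = tempfile.mkdtemp()
demo_file = os.path.join(demo_dir, "Store1000_BrandA.csv")
with open(demo_file, "w", newline="") as handle:
    handle.write("WeekStarting,Quantity\n2020-01-06,10\n2020-01-13,20\n")
init()
output = run([demo_file])
```

Keeping the per-file work inside `run()` is what lets the step scale: `node_count` and `process_count_per_node` decide how many copies of this script run at once, and `mini_batch_size` decides how many files each call receives.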

3. b. Training script with AutoML

The solution accelerator showcases AutoML forecasting. AutoML has many parameters; below are the typical ones used for a forecasting task within the Many Models Solution Accelerator. For a complete list of AutoML configuration parameters, please see the AutoMLConfig Class.

    import logging  # needed for the "verbosity" setting below

    automl_settings = {
        "task": 'forecasting',
        "primary_metric": 'normalized_root_mean_squared_error',
        "iteration_timeout_minutes": 10,
        "iterations": 15,
        "experiment_timeout_hours": 1,
        "label_column_name": 'Quantity',
        "n_cross_validations": 3,
        "verbosity": logging.INFO,
        "debug_log": 'DebugFileName.txt',
        "time_column_name": 'WeekStarting',
        "max_horizon": 6,
        "group_column_names": ['Store', 'Brand'],
        "grain_column_names": ['Store', 'Brand']
    }

| Parameter | Explanation |
| --- | --- |
| task | The type of AutoML task: classification, regression, or forecasting. |
| primary_metric | The metric that AutoML should optimize. |
| iteration_timeout_minutes | How long each individual iteration is allowed to run. |
| iterations | The number of models to try (combinations of various algorithms and hyperparameters). |
| experiment_timeout_hours | How long the overall AutoML experiment can take. Note: the experiment may time out before all iterations are complete. |
| label_column_name | The column that is being predicted. |
| n_cross_validations | The number of cross-validations to perform within the training dataset. |
| verbosity | The level of detail written to the log. |
| debug_log | The location of the debug log. |
| time_column_name | The name of the time column. Note that the training dataset can contain multiple time series. |
| max_horizon | How far into the future the forecast will go. |
| group_column_names | The names of the columns used to group your models. For time series, the groups must not split up individual time series; that is, each group must contain one or more whole time series. |
| grain_column_names | The column names used to uniquely identify a time series in data that has multiple rows with the same timestamp. |
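The note on `experiment_timeout_hours` can be checked with simple arithmetic. The sketch below assumes, for illustration only, that iterations run one after another and that each one uses its full time allowance; the helper names are hypothetical and are not part of AutoML.

```python
def worst_case_minutes(settings):
    """Upper bound on run time if every iteration hits its timeout, one at a time."""
    return settings["iterations"] * settings["iteration_timeout_minutes"]


def may_time_out_early(settings):
    """True when the experiment budget is smaller than the worst-case total."""
    budget_minutes = settings["experiment_timeout_hours"] * 60
    return worst_case_minutes(settings) > budget_minutes


# The three timing values from the settings shown above.
timeouts = {
    "iteration_timeout_minutes": 10,
    "iterations": 15,
    "experiment_timeout_hours": 1,
}
total = worst_case_minutes(timeouts)   # 15 * 10 = 150 minutes
early = may_time_out_early(timeouts)   # 150 minutes exceeds the 60-minute budget
```

With these values the worst case is 150 minutes against a 60-minute budget, so the experiment can stop before all 15 iterations have been tried unless the iterations finish quickly.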

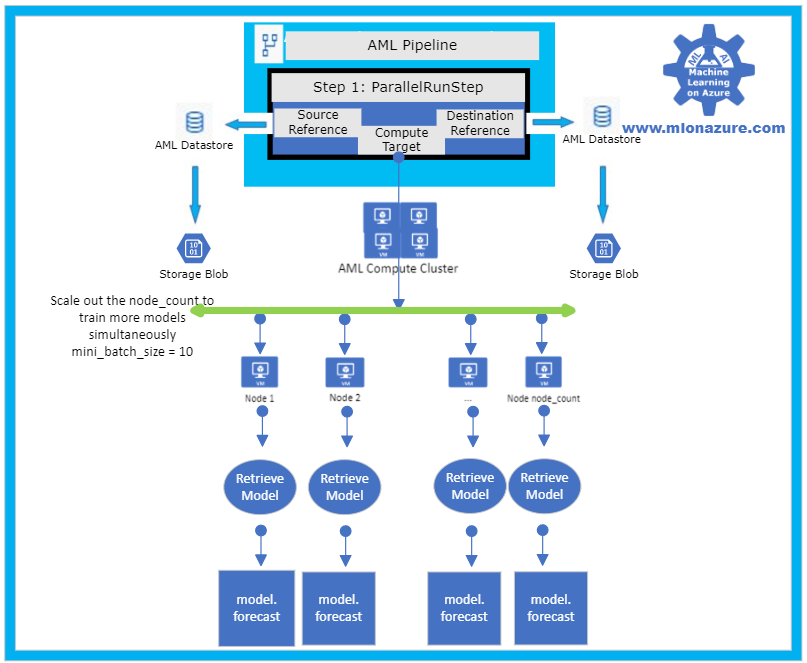

4. Model Forecasting

The solution accelerator showcases model forecasting with a custom python script and with AutoML which are orchestrated using a Pipeline. Please see solution-accelerator-manymodels-customscript and solution-accelerator-manymodels-AutoML. Putting it all together results in the architecture depicted in Figure 3, below.

Figure 3: Solution Accelerator Model Scoring

5. Automation

To automate the solution, the training and scoring pipelines must be published and a PipelineEndpoint must be created. Once that’s done, the PipelineEndpoint can be invoked from Azure Data Factory using the Azure Machine Learning Pipeline Activity. Note that the training and scoring pipelines can be collapsed into one pipeline if training and scoring occur consecutively.
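Outside Azure Data Factory, a published pipeline can also be triggered through its REST endpoint with an authenticated POST. The sketch below only builds such a request and does not send it. The URL, token, and experiment name are placeholders, the `build_pipeline_request` helper is hypothetical, and the body shape (`ExperimentName` plus optional `ParameterAssignments`) is an assumption that should be verified against the Azure Machine Learning documentation for your SDK version.

```python
import json
import urllib.request


def build_pipeline_request(endpoint_url, access_token, experiment_name, parameters=None):
    """Prepare (but do not send) a POST that would submit a pipeline run."""
    body = {"ExperimentName": experiment_name}
    if parameters:
        body["ParameterAssignments"] = parameters
    return urllib.request.Request(
        endpoint_url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values only; a real call needs a real endpoint and Azure AD token.
request = build_pipeline_request(
    "https://example.invalid/pipelines/placeholder-id",
    "placeholder-token",
    "many-models-training",
)
# urllib.request.urlopen(request) would send it; omitted so the sketch stays offline.
```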

Next Steps

Azure Machine Learning Documentation

Many Models Solution Accelerator

Many Models Solution Accelerator Video

Azure Data Factory: Azure Machine Learning Pipeline Activity

MLOnAzure GitHub: Getting started with Pipelines

MLonAzure Blog: Getting Started with Azure Machine Learning for the Data Scientist

MLonAzure Blog: Azure Machine Learning service Pipelines

by Scott Muniz | Jul 29, 2020 | Uncategorized

This article is contributed. See the original author and article here.

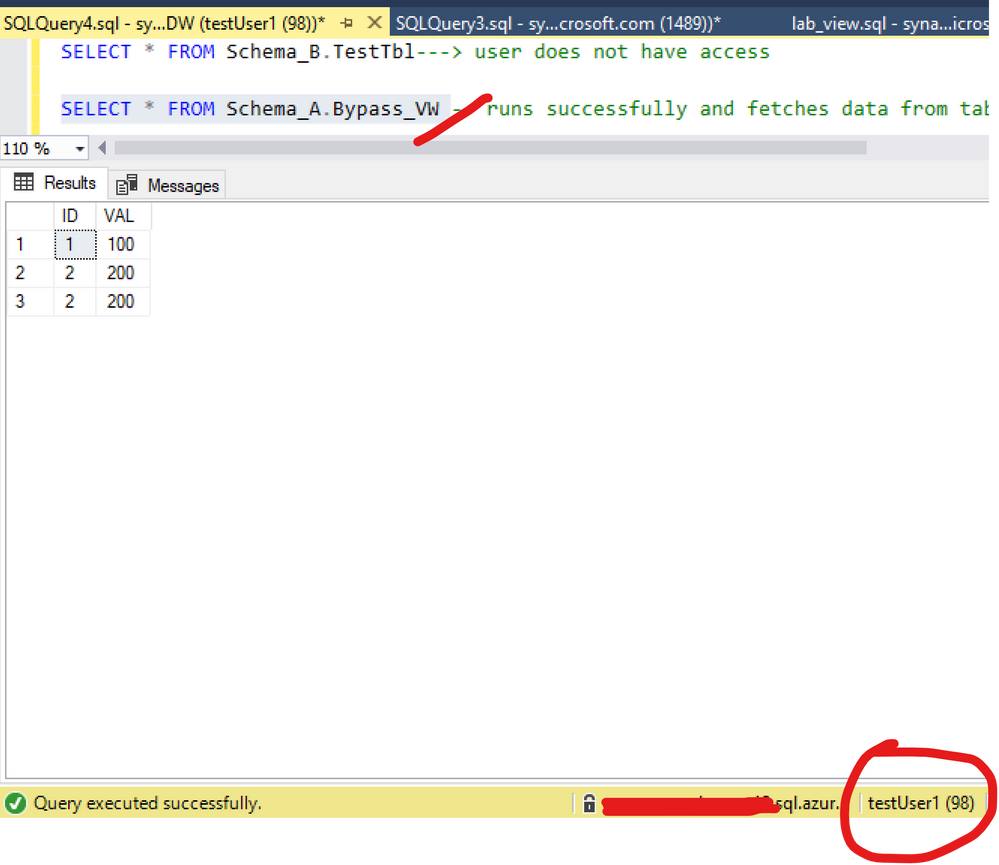

Scenario: There are two schemas, A and B, created by the same owner. A test user has access to everything under Schema A but has no permissions on Schema B.

A view was created under Schema A that selects from a table under Schema B. The user cannot query the table under Schema B directly, but a select on the view under Schema A returns the data from Schema B. Weird, isn’t it?

    -- Master database
    CREATE LOGIN testUser1
    WITH PASSWORD = 'Lalala!0000'

    -- Change to SQLDW
    CREATE USER testUser1 FROM LOGIN testUser1

    CREATE SCHEMA Schema_B;
    GO
    CREATE SCHEMA Schema_A;
    GO

    GRANT CREATE SCHEMA ON DATABASE :: [SQL_DW_database_name] TO testUser1
    GRANT SELECT, INSERT, DELETE, UPDATE, ALTER ON Schema::Schema_A TO testUser1

    CREATE TABLE Schema_B.TestTbl
    WITH(DISTRIBUTION=ROUND_ROBIN)
    AS
    SELECT 1 AS ID, 100 AS VAL UNION ALL
    SELECT 2 AS ID, 200 AS VAL UNION ALL
    SELECT 2 AS ID, 200 AS VAL
    GO

    CREATE VIEW Schema_A.Bypass_VW
    AS -- runs successfully
    SELECT * FROM Schema_B.TestTbl
    GO

    -- Log into SQLDW as testUser1; the statements that follow are executed as this user.
    GO

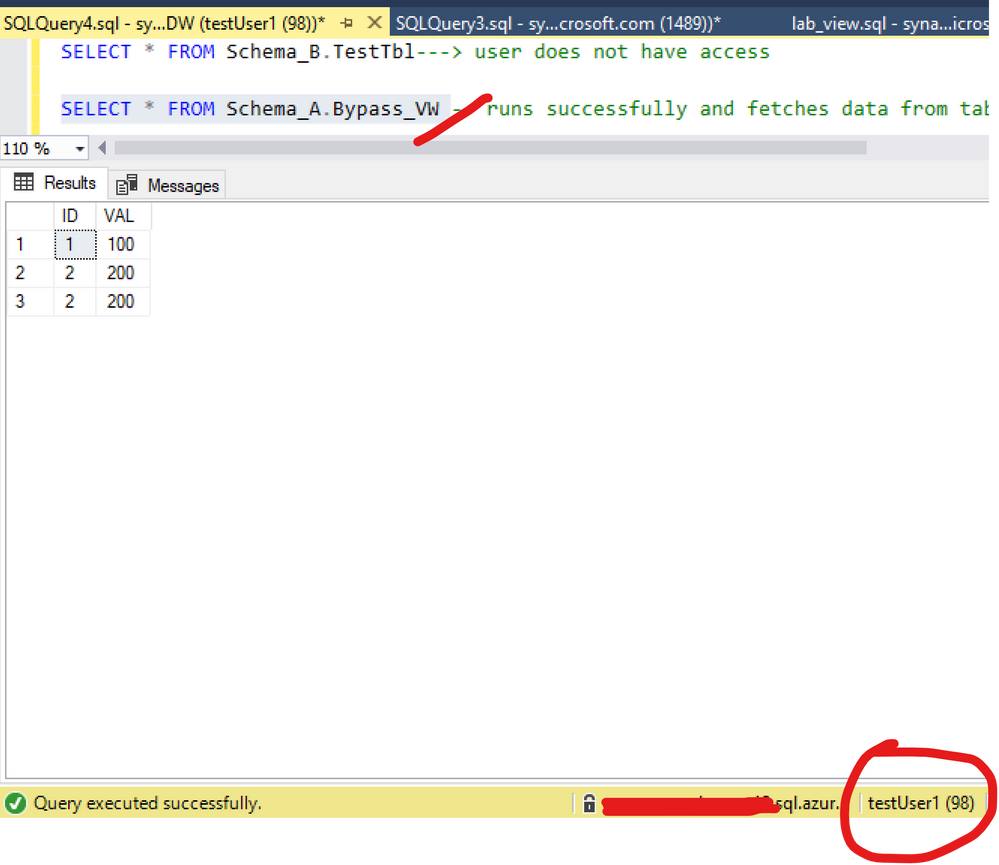

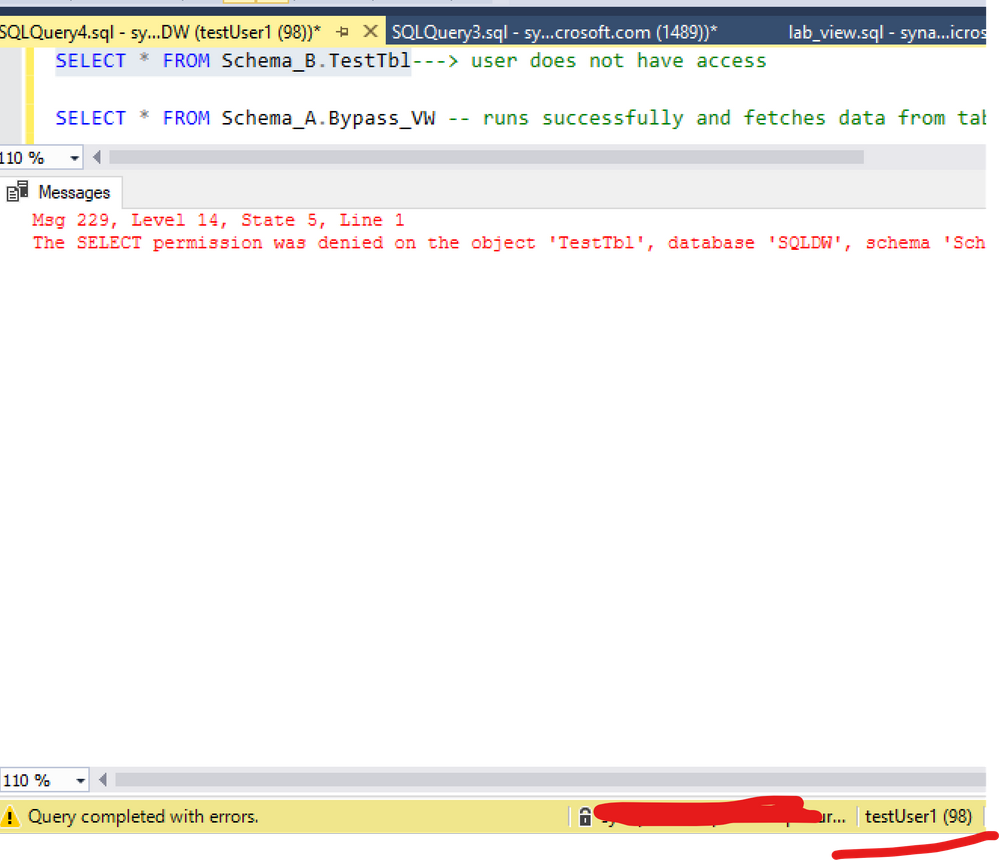

SELECT * FROM Schema_B.TestTbl -- fails: the user does not have access

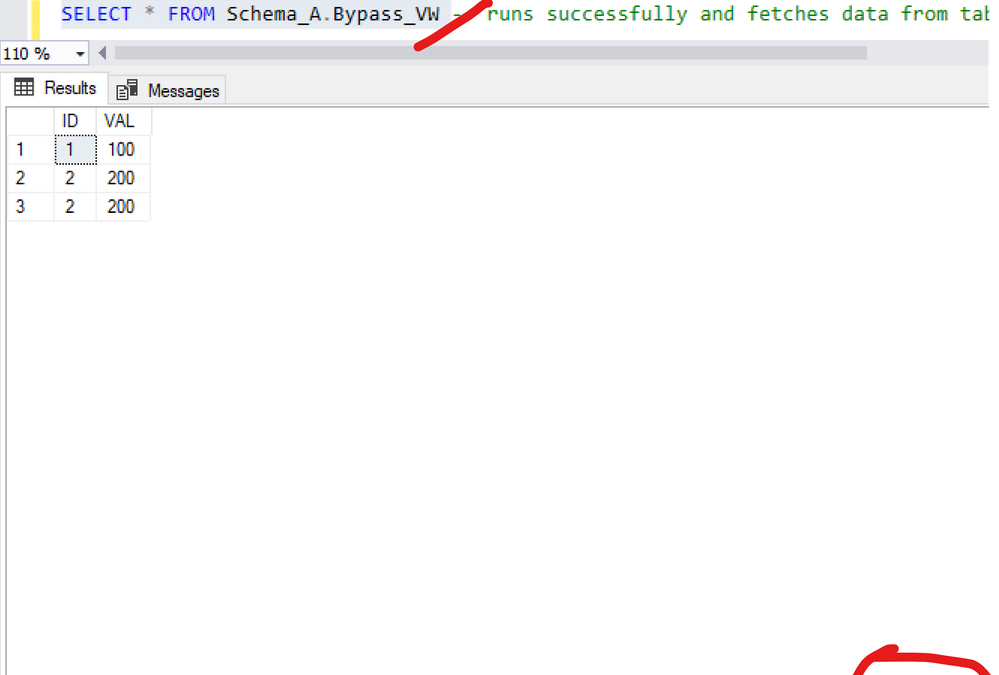

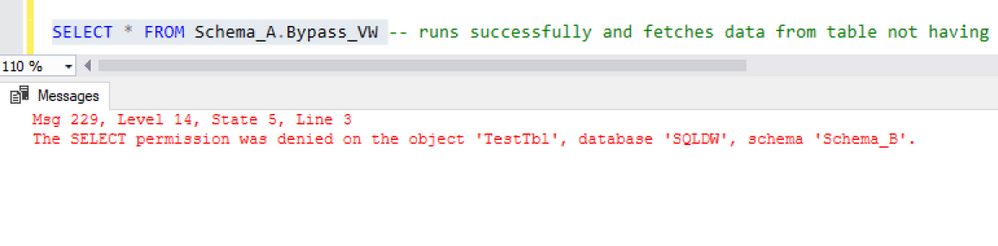

SELECT * FROM Schema_A.Bypass_VW -- runs successfully and returns data from a table the user has no SELECT permission on

Figures 1 and 2 illustrate this:

Figure 1: Query view

Figure 2: Query table

Here is the documentation about this:

A user with ALTER permission on a schema can use ownership chaining to access securables in other schemas, including securables to which that user is explicitly denied access. This is because ownership chaining bypasses permissions checks on referenced objects when they are owned by the principal that owns the objects that refer to them. A user with ALTER permission on a schema can create procedures, synonyms, and views that are owned by the schema’s owner. Those objects will have access (via ownership chaining) to information in other schemas owned by the schema’s owner. When possible, you should avoid granting ALTER permission on a schema if the schema’s owner also owns other schemas.

For example, this issue may occur in the following scenarios. These scenarios assume that a user, referred as U1, has the ALTER permission on the S1 schema. The U1 user is denied to access a table object, referred as T1, in the schema S2. The S1 schema and the S2 schema are owned by the same owner.

The U1 user has the CREATE PROCEDURE permission on the database and the EXECUTE permission on the S1 schema. Therefore, the U1 user can create a stored procedure, and then access the denied object T1 in the stored procedure.

The U1 user has the CREATE SYNONYM permission on the database and the SELECT permission on the S1 schema. Therefore, the U1 user can create a synonym in the S1 schema for the denied object T1, and then access the denied object T1 by using the synonym.

The U1 user has the CREATE VIEW permission on the database and the SELECT permission on the S1 schema. Therefore, the U1 user can create a view in the S1 schema to query data from the denied object T1, and then access the denied object T1 by using the view.

(https://docs.microsoft.com/en-us/sql/t-sql/statements/grant-schema-permissions-transact-sql?view=sql-server-ver15)
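The rule quoted above can be modeled in a few lines of Python. This is a deliberately simplified toy, not how SQL Server evaluates permissions: it assumes every object is owned by its schema's owner, tracks only schema-level SELECT grants, and ignores explicit DENY. The owner name `owner1` and both function names are hypothetical; `testowner` is the login created in the workaround later in this post.

```python
def schema_of(obj):
    """Return the schema part of a two-part name such as 'Schema_A.Bypass_VW'."""
    return obj.split(".")[0]


def can_select(user, obj, grants):
    """Direct access: the user needs SELECT on the object's schema."""
    return (user, schema_of(obj)) in grants


def can_select_via_view(user, view, table, grants, schema_owner):
    """Access through a view, applying the ownership chaining rule."""
    if not can_select(user, view, grants):
        return False
    same_owner = schema_owner[schema_of(view)] == schema_owner[schema_of(table)]
    # Unbroken chain: the permission check on the referenced table is skipped.
    return same_owner or can_select(user, table, grants)


grants = {("testUser1", "Schema_A")}
owners = {"Schema_A": "owner1", "Schema_B": "owner1"}

direct = can_select("testUser1", "Schema_B.TestTbl", grants)
chained = can_select_via_view(
    "testUser1", "Schema_A.Bypass_VW", "Schema_B.TestTbl", grants, owners)

# Giving Schema_B a different owner breaks the chain.
owners_after = dict(owners, Schema_B="testowner")
broken = can_select_via_view(
    "testUser1", "Schema_A.Bypass_VW", "Schema_B.TestTbl", grants, owners_after)
```

In this model `direct` is false and `chained` is true, matching the behavior in the demo, while `broken` is false once the two schemas have different owners.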

Note that this behavior is the same across the SQL Server family: SQLDB, SQLDW, and SQL Server.

Workaround:

I changed my demo based on the documentation. The key point is that the two schemas have the same owner, so let’s change that and give the schemas different owners.

List the ownership and keep this information:

    -- Create a new login
    -- Master database
    CREATE LOGIN testowner
    WITH PASSWORD = 'Lalala!0000'

    -- SQLDW
    CREATE USER testowner FROM LOGIN testowner

    -- List object ownership
    SELECT 'OBJECT' AS entity_type
    ,USER_NAME(OBJECTPROPERTY(object_id, 'OwnerId')) AS owner_name
    ,name
    FROM sys.objects

    -- Keep the result. Once the ownership of a schema changes, any permissions
    -- granted on that schema are reset (if there were any).
    Select USER_NAME(principal_id),* from sys.schemas

    -- Change the owner of Schema_B, because my schema permissions are only on Schema_A
    ALTER AUTHORIZATION ON SCHEMA::Schema_B TO testowner;

    -- List object ownership again and check that the new owner appears
    SELECT 'OBJECT' AS entity_type
    ,USER_NAME(OBJECTPROPERTY(object_id, 'OwnerId')) AS owner_name
    ,name
    FROM sys.objects

As Figure 3 shows, the ownership chaining issue is resolved.

Another way to solve this is to deny the user SELECT on the view (Schema_A.Bypass_VW) or on the whole schema, for example:

    DENY SELECT ON Schema_A.Bypass_VW TO testUser1

    DENY SELECT ON SCHEMA :: Schema_A TO testUser1

Thanks Joao Polisel and Moises Romero for the advice on this.

That is it!

Liliam

UK Engineer

by Scott Muniz | Jul 29, 2020 | Uncategorized

This article is contributed. See the original author and article here.

This is the second in a series of posts based on interviews with experienced Dynamics 365 Microsoft Certified Trainers (MCTs). The premier technical and instructional experts in Microsoft technologies, MCTs offer live virtual instructor-led training (VILT) that provides in-depth, hands-on experience tailored to learners’ needs. We talked with three MCTs about their approach to teaching Dynamics 365 skills and preparing partners and customers for Microsoft Certification: Julian Sharp on Microsoft Power Platform, Beth Burrell on customer engagement, and Brandon Ahmad on finance and operations. Whether you’re a business owner in search of training for your company or someone who wants to build your skills and get certified in Dynamics 365—or if your goal is to become an MCT yourself—you’ll find their take on Dynamics 365 training as inspiring as it is valuable.

________

Microsoft Dynamics 365, a set of integrated business applications, long ago surpassed previous Microsoft CRM and ERP solutions, explains Beth Burrell, MCT and CRM Senior Client Engagement Manager at Microsoft Learning Partner DXC Technology. In fact, the functionality of Dynamics 365 has expanded and deepened so much over the past few years—now covering sales, marketing, commerce, customer service, finance, operations, supply chain management, human resources, and AI—that it’s hard to keep up with all the new capabilities without expert training. There’s no longer one set of skills to know, but many, so you can’t be proficient after just one course. You need to traverse all the functionality of Dynamics 365 to see what it does, what it can do. And since the IT or tech world isn’t always the real world of business, you also need a different approach to training than familiarizing yourself with the documentation. Now training isn’t geared to just IT techs but also to low-code or no-code businesspeople who need to know how to create and use Dynamics 365 apps.

The good news, Burrell says, is that the opportunities for and ways to train in Dynamics 365 have also improved over the past years. Dynamics 365 training, which once had its own separate portal, is now part of Microsoft Learn. All Dynamics 365 documentation is linked to Microsoft Learn, too, which makes it much more accessible. The free, online Dynamics 365 learning paths and modules available on Microsoft Learn can help you build your skills and work toward certification—on your own schedule. And virtual instructor-led training (VILT) offered by Microsoft Learning Partners and taught by MCTs helps you dig deep into Dynamics 365 capabilities, keep up to date with new releases, and prepare for certification. As a Microsoft Most Valuable Professional (MVP) for Dynamics 365 who continues to advocate for the latest releases and works closely with the Dynamics 365 team, Burrell enjoys this ever-expanding and advancing functionality and the challenge of training people in it.

Although the hands-on labs and walk-throughs available on Microsoft Learn are invaluable, the kind of intensive, tailored training you get in a course led by an MCT takes learning to another level. MCTs are highly trained and experienced, and they have access to the best tools and labs as well as techniques. And, she’s quick to add, they form a genuine community. They share teaching tips, how to’s and fixes, and new ways to access tasks, so when you take an MCT-led course, you’re benefiting from the wisdom of not just one instructor but many.

Take Course 55260A: Microsoft Dynamics 365 for Customer Service, which prepares you for Exam MB-230: Microsoft Dynamics 365 Customer Service. This is the course Burrell often starts with for people and organizations, because it covers the functionality of Dynamics 365 from the start of a business process to its end. She also begins here because everyone, no matter what their background, has had experience with customer service or a help desk and is familiar with calls, cases, and tickets. That way she can build on what learners already know.

Burrell tailors this course to a business that learners are likely to be familiar with, like a bike shop or retail store, so the tools and tasks she focuses on resonate with them and correlate with real-life situations. Real-world learning, she explains, is a hallmark of MCT training. And, if an organization or team wants to focus the training in a specific area, like sales, Burrell focuses her teaching that way. She uses Microsoft Official Courseware (MOC) as the “backbone” of her teaching, to make sure everything is covered to prepare students for the certification exam. But she’s also always looking for ways to help them connect the technology, tasks, and terminology with their experience in the world so they learn how to use the business apps skillfully, regardless of whether they plan to take the exam and get certified.

She starts with a focused review of what participants have already learned on their own using the modules and learning paths on Microsoft Learn, tailoring the language she uses to class members or to the industry broadly. She uses this material as a baseline. Instead of reading from a screen, she uses a product or task to create views, flows, and more, so participants see the technology in action before she asks them to create a task and work it through end to end, from bot to chat to case number, and so on. Next she jumps into MOC, to “show them on the map where they’re going to drive,” and then moves into the labs to walk through the functionality. In the labs, she explains each task rather than using step-by-step instruction, because in her experience as a teacher she’s found that simply following instructions does not equal learning. Burrell’s commitment to helping students learn to use the technology in real-life situations is evident in the way she supplements the course materials. For example, she might ask students in pairs to role-play a customer interaction, to help them see where things can break down and how to respond. She even gives homework that challenges them to use their skills to solve a specific business problem they’ve experienced.

“Cookie-cutter training doesn’t work,” she notes. Not everyone learns the same way, so the combination of visuals, walk-throughs, reading, hands-on exercises, and interaction with the instructor is key. “And it’s fun to use a different approach,” she adds. Throughout, she focuses on proficiency not abstract terms. She tells learners the official name of a functionality but doesn’t insist that they use those terms. Instead, she encourages class members to focus on performing the task and calling it whatever works best for them. Communication is one of Burrell’s strong suits. When she started out as an IT person working with servers, she discovered she had a knack for talking in laypeople’s terms and gradually found her way from doing informal one-on-one training to more formal group training.

Burrell also tailors her teaching to the experience of each learner, making sure to keep a good pace for the class. She does this by building in 5 to 10 minutes at the end of each section to review, let the learners play with the technology on their own, or catch up, which gives her time to shadow participants and find out whether they have questions or need help. Classes range from 10 to 15 participants, so she’s able to give people this individual attention.

What she enjoys most is when learners experience an Aha! moment. “That’s the fun of teaching for me,” she says, “when I can see students getting it.” Fun is a word Burrell uses often when talking about the training she leads. Getting students engaged is what makes it fun for her. “Some people may think they’re too old to learn, or they may be in the class because their employer requested they take it. I love seeing all students, even these, get engaged and enjoy the learning. They gain a sense of accomplishment and leave eager to show peers or an employer their new skills.” After a training, some students write her to let her know about something they achieved using Dynamics 365, and she finds their excitement as well as their proficiency rewarding.

It’s clear listening to Burrell that she’s an excellent instructor, creative and passionate about both Dynamics 365 and teaching. Train with Burrell or another MCT and enjoy building real-world customer engagement skills that can help make valuable contributions to your business and your career.

Explore MCT-led training for more Dynamics 365 certifications:

Browse MCT-led Dynamics 365 exam- and certification-prep courses offered by Learning Partners.

_______

by Scott Muniz | Jul 29, 2020 | Uncategorized

This article is contributed. See the original author and article here.

I’m excited to showcase a digital transformation story that shows what can happen when you give your brightest IT talent room to focus on strategic innovation.

SA Power Networks, an energy company I’ve partnered with here in Australia the past couple of years, is using Microsoft Managed Desktop to move endpoint management and security to the cloud for Microsoft experts to manage.

This approach to modernization has made desktop management evergreen, dynamic, and better able to keep pace with evolving business needs.

But there are even more dramatic impacts to user satisfaction, IT agility, and even a “digital utility of the year” award – all of which directly stem from the transition to Microsoft Managed Desktop. If your business needs modernization or you have an increasingly mobile workforce, I hope you’ll read and share the case study, and then reach out to your Microsoft account team to request more information about Microsoft Managed Desktop.

What endpoint management challenges are holding you back from achieving your innovation roadmap? Please share in the comments and be sure to subscribe to our blog to keep current with the latest information.

by Scott Muniz | Jul 29, 2020 | Alerts, Microsoft, Technology, Uncategorized

This article is contributed. See the original author and article here.

Howdy folks!

Today we’re announcing the public preview of Azure AD Application Proxy (App Proxy) support for the Remote Desktop Services (RDS) web client. Many of you are already using App Proxy for applications hosted on RDS and we’ve seen a lot of requests for extending support to the RDS web client as well.

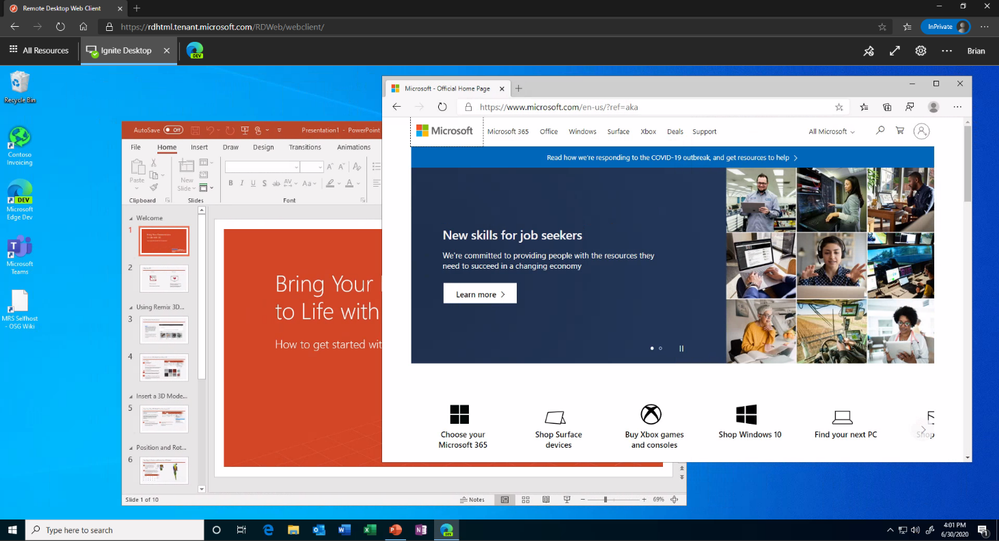

With this preview, you can now use the RDS web client even when App Proxy provides secure remote access to RDS. The web client works on any HTML5-capable browser such as Microsoft Edge, Internet Explorer 11, Google Chrome, Safari, or Mozilla Firefox (v55.0 and later). You can push full desktops or remote apps to the Remote Desktop web client. The remote apps are hosted on the virtualized machine but appear as if they’re running on the user’s desktop like local applications. The apps also have their own taskbar entry and can be resized and moved across monitors.

Launch rich client apps with a full desktop like experience

Why use App Proxy with RDS?

RDS allows you to extend virtual desktops and applications to any device while helping keep critical intellectual property secure. By using this virtualization platform, you can deploy all types of applications such as Windows apps and other rich client apps as-is with no re-writing required. By using App Proxy with RDS you can reduce the attack surface of your RDS deployment by enforcing pre-authentication and Conditional Access policies like requiring Multi-Factor Authentication (MFA) or using a compliant device before users can access RDS. App Proxy also doesn’t require you to open inbound connections through your firewall.

Getting started

To use the RDS web client with App Proxy, first make sure to update your App Proxy connectors to the latest version, 1.5.1975.0. If you haven’t already, you will need to configure RDS to work with App Proxy. In this configuration, App Proxy will handle the internet facing component of your RDS deployment and protect all traffic with pre-authentication and any Conditional Access policies in place. For steps on how to do this, see Publish Remote Desktop with Azure AD Application Proxy.

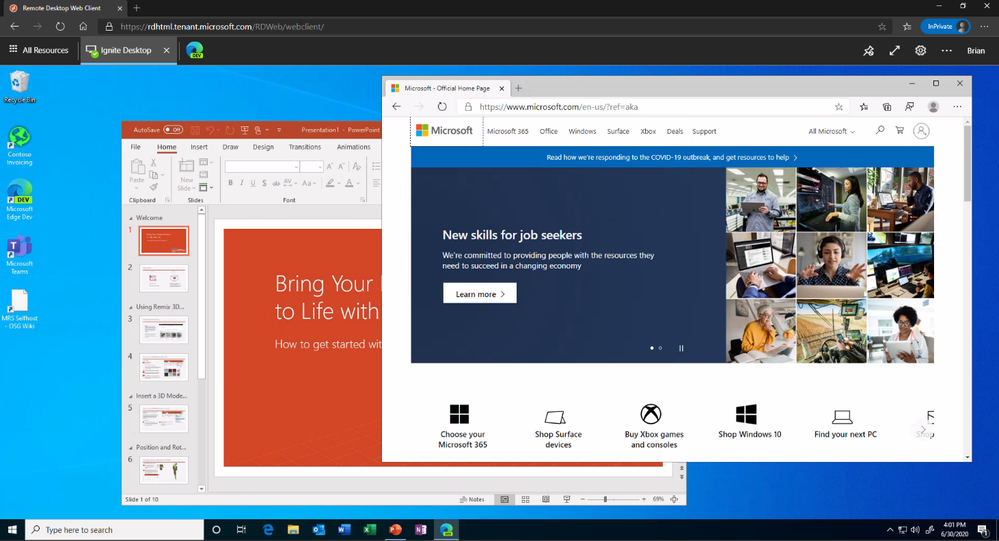

How Azure AD App Proxy works in an RDS deployment

Configure the Remote Desktop web client

Next, complete setup by enabling the Remote Desktop web client for user access. See details on how to do this at Set up the Remote Desktop web client for your users. Now your users can use the external URL to access the client from their browser, or they can launch the app from the My Apps portal.

As always, we’d love to hear any feedback or suggestions you may have. Please let us know what you think in the comments below or on the Azure AD feedback forum.

Best regards,

Alex Simons (@alex_a_simons)

Corporate Vice President Program Management

Microsoft Identity Division
