by Scott Muniz | Jun 24, 2020 | Uncategorized

This article is contributed. See the original author and article here.

Security professionals know that major malware strains such as Duqu 2.0, NotPetya, and even Stuxnet include some form of lateral movement capability.

According to MITRE ATT&CK™, there are at least 20 known types of lateral movement techniques that adversaries use to enter and control remote systems on a network.

In Active Directory, attackers use lateral movement paths (LMPs) to identify and gain access to the sensitive accounts and machines in your network that share stored logon credentials across accounts, groups, and machines. Once an attacker has made successful lateral moves toward their key targets, they often try to gain access to domain controllers to achieve domain dominance. Lateral movement attacks are carried out using many of the methods described in Azure ATP’s security alerts guide.

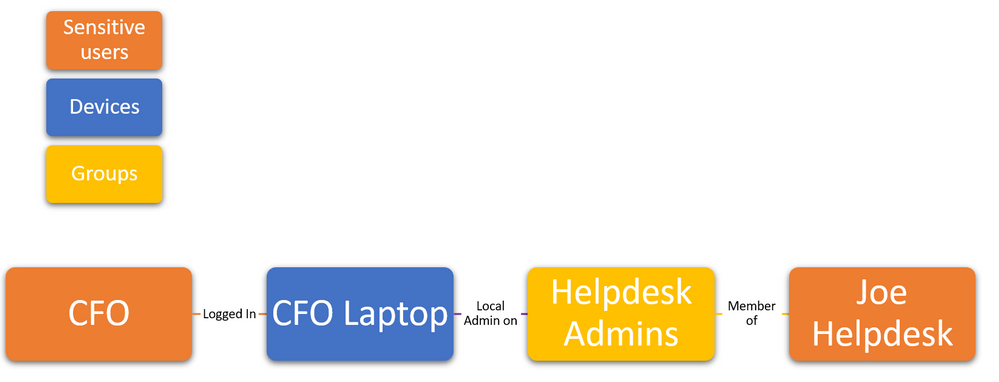

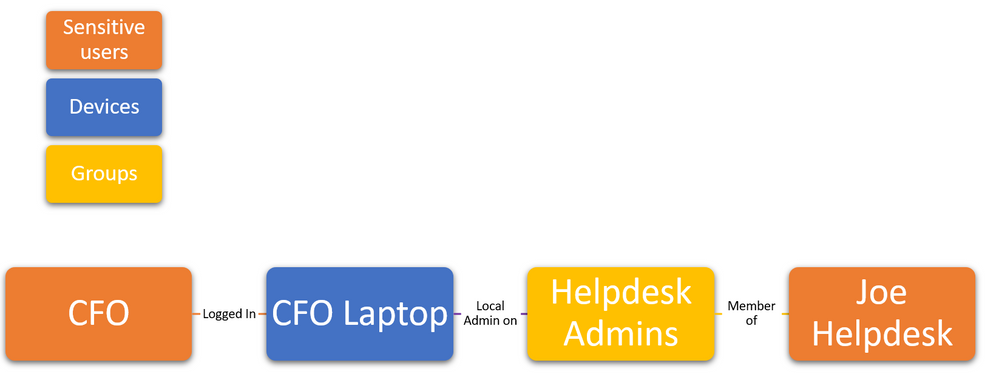

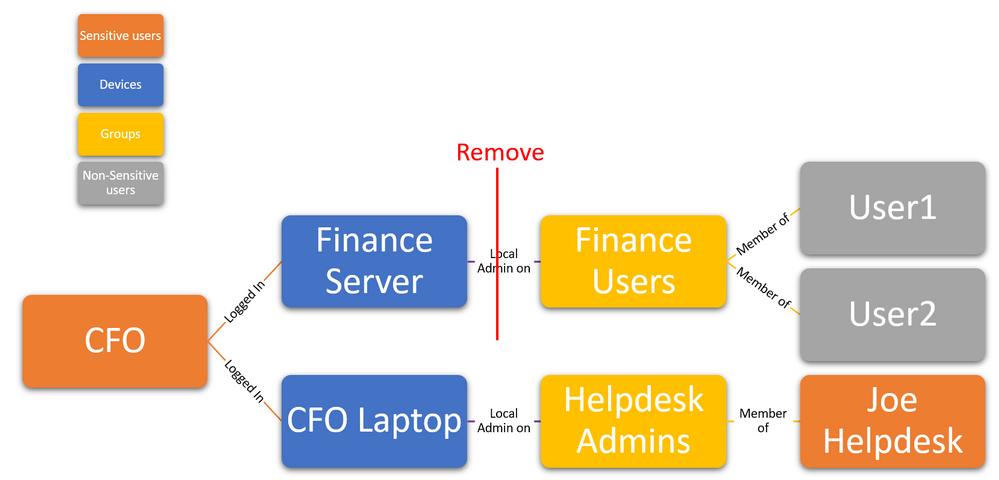

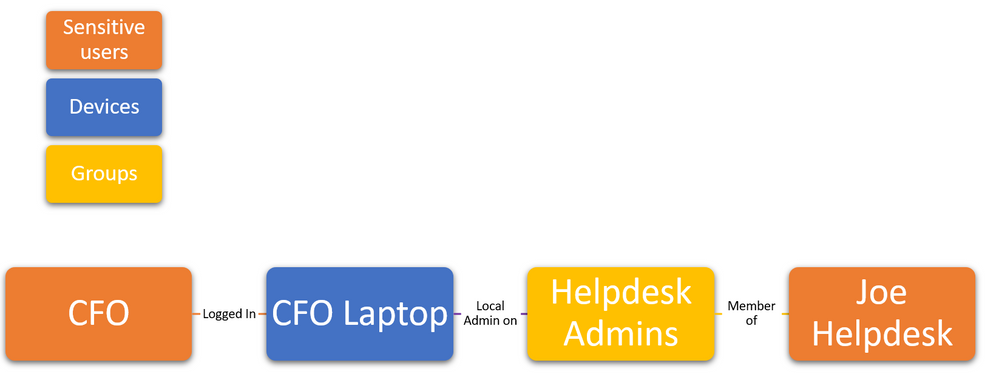

LMPs are a natural side effect of every identity hierarchy, as shown in the following example:

In this example, Joe Helpdesk is a well-known user in the CFO’s LMP. Joe Helpdesk is considered sensitive because they can obtain the CFO’s credentials stored on the CFO’s laptop, on which Joe Helpdesk is a local administrator.

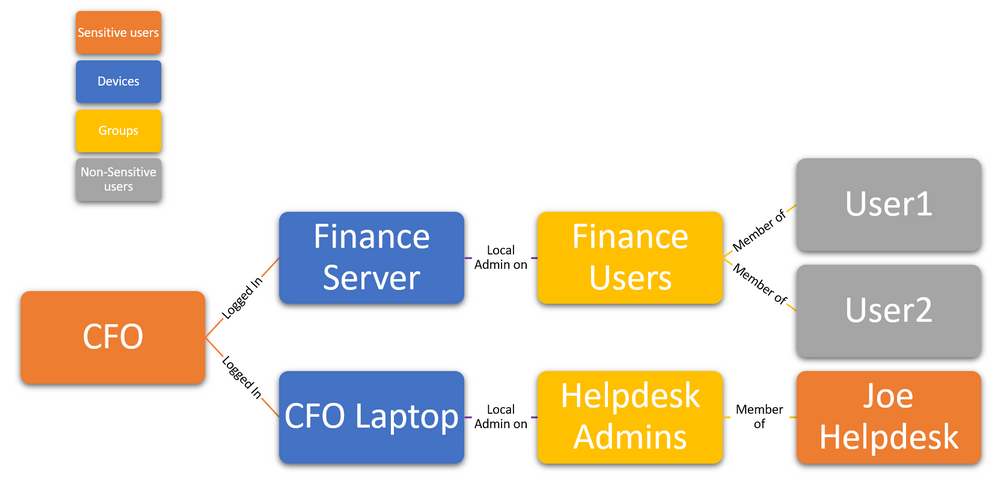

The risk created by LMPs increases as the organization grows and additional (often overlooked) logged-in sessions, group memberships, and local administrator privileges are introduced into the identity hierarchy, for example:

In this case, both User1 and User2 can be leveraged to obtain the CFO’s credentials, because they have local administrator privileges on a device (Finance Server) that the CFO is logged into. From the Finance Server, these LMPs can grow exponentially and often become overwhelming to assess or remediate. This is where Azure ATP comes in.
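
To make the example concrete, here is a minimal Python sketch that treats the identity hierarchy as a directed graph and enumerates every path that ends at the CFO’s credentials. The edge list, node names, and the `paths_to` helper are hypothetical illustrations of the scenario above, not how Azure ATP models or computes LMPs.

```python
from collections import defaultdict

# Hypothetical edges for the example. "A -> B" reads "whoever controls A can take
# control of B": group membership (user -> group), local-admin rights
# (principal -> device), and cached logon credentials (device -> logged-in user).
EDGES = [
    ("Joe Helpdesk", "CFO Laptop"),
    ("User1", "Finance Users"),
    ("User2", "Finance Users"),
    ("Finance Users", "Finance Server"),
    ("CFO Laptop", "CFO"),
    ("Finance Server", "CFO"),
]


def paths_to(target, edges):
    """Return every simple path that ends at `target`, found by walking edges backwards."""
    preds = defaultdict(list)
    for src, dst in edges:
        preds[dst].append(src)
    found = []

    def walk(node, suffix):
        path = [node] + suffix
        # Predecessors not already on this path (skipping them avoids cycles).
        starts = [p for p in preds[node] if p not in path]
        if not starts and suffix:
            found.append(path)  # reached an entry point of the path
        for prev in starts:
            walk(prev, path)

    walk(target, [])
    return found


if __name__ == "__main__":
    for path in paths_to("CFO", EDGES):
        print(" -> ".join(path))
```

Each extra group member or local-admin grant adds edges, and the number of distinct paths multiplies accordingly, which is why manual assessment quickly becomes impractical.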

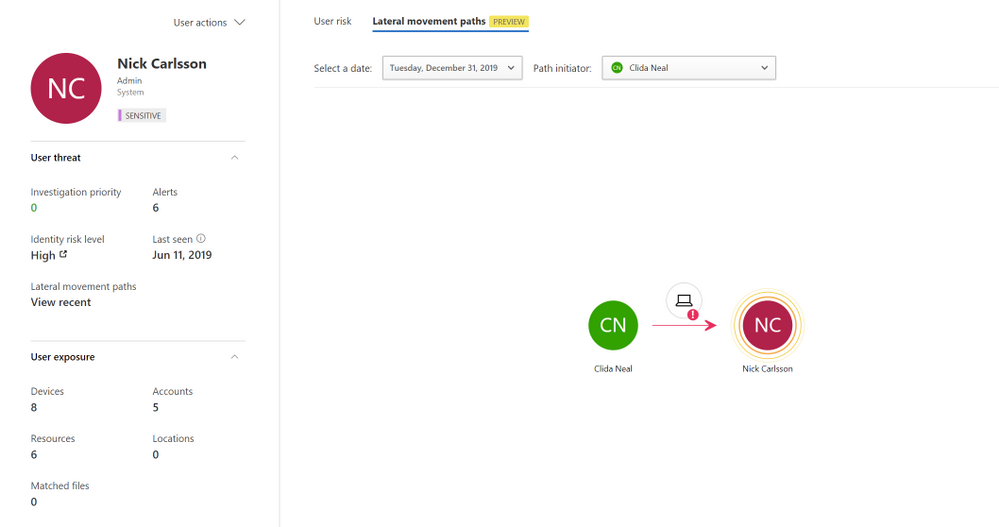

When investigating a user or responding to a suspicious activity alert, Azure ATP already shows security analysts whether that user is part of an existing LMP, helping them understand whether any immediate action is required. Beyond reactive LMP hunting, taking proactive measures to reduce large LMPs before a potential breach occurs reduces risk and improves the security posture of the entire organization.

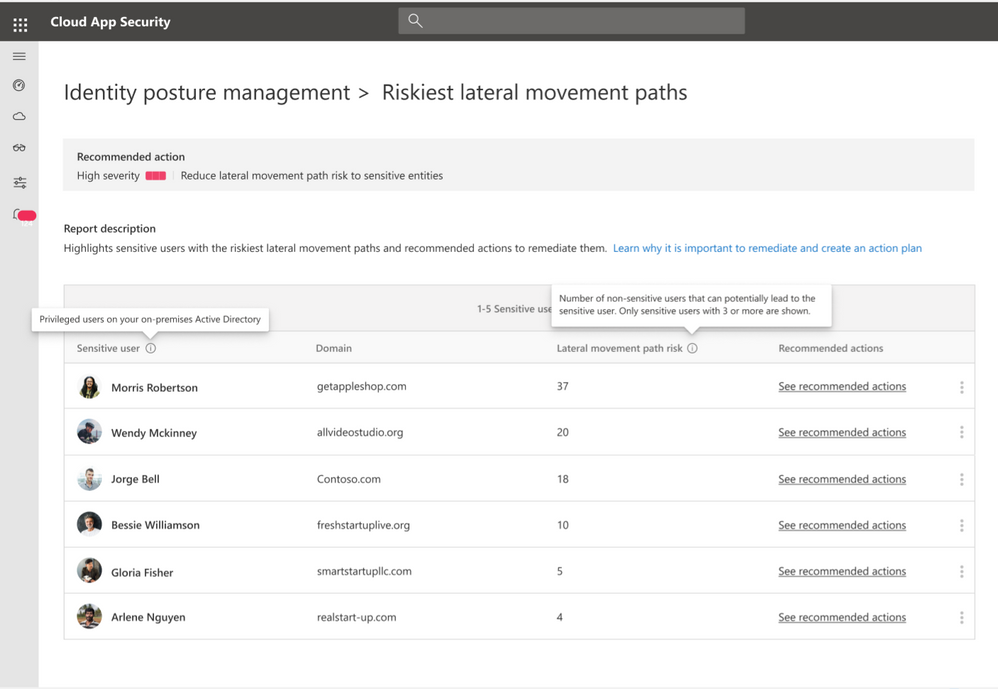

Azure ATP’s Riskiest Lateral Movement Path security assessment brings proactive LMP hunting from theory to reality.

In this example, removing the Finance Users group’s local administrator privileges on the Finance Server reduces the CFO’s LMP by 2 non-sensitive users.

To identify, proactively hunt, and remediate risky lateral movement paths like this one, we used the Riskiest Lateral Movement Paths assessment report, a component of the Azure ATP identity security posture feature and part of the new Identity Threat Investigation experience.

In this security posture assessment, we analyze all potential LMPs for every sensitive user and calculate their risk and the number of unique non-sensitive users in each entity’s path, along with the recommended actions an identity administrator can take to reduce the LMPs.

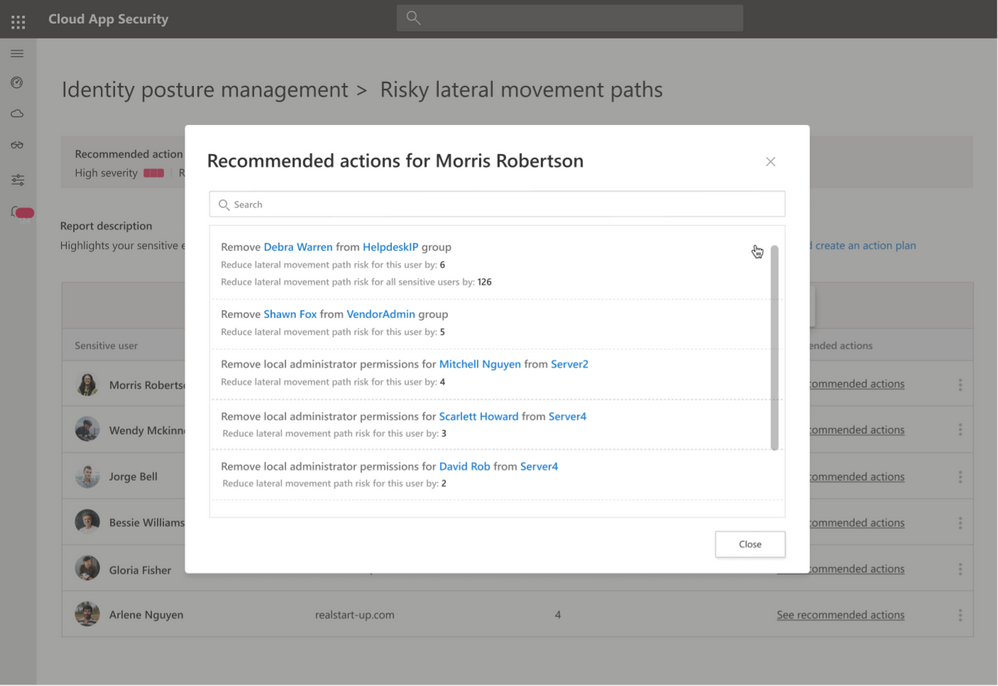

Each action provides two main types of data:

- What should you do?
  - Remove an entity from a group
  - Remove an entity from the Local administrators group on a device
- What is the resulting impact on the LMP risk?
  - On the specific sensitive user’s LMP
  - On all sensitive users’ LMPs (the action may be part of several paths)
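
The two kinds of data above can be approximated with a small sketch: compute each sensitive user’s LMP, then score a candidate action by how many unique non-sensitive users it removes, both per sensitive user and summed across all of them. This is a hedged illustration only. The toy data (including a hypothetical second sensitive account, `CEO`), the helper names, and the scoring rule (a plain count of removed users) are assumptions; it models only the “remove an entity from the Local administrators group on a device” action; and it is not Azure ATP’s actual risk calculation.

```python
# Hypothetical toy data: an illustrative model, not Azure ATP's internal representation.
GROUPS = {"Finance Users": {"User1", "User2"}}
LOCAL_ADMINS = {"CFO Laptop": {"Joe Helpdesk"}, "Finance Server": {"Finance Users"}}
SESSIONS = {"CFO Laptop": {"CFO"}, "Finance Server": {"CFO", "CEO"}}  # "CEO" is hypothetical
SENSITIVE = {"CFO", "CEO"}


def members(principal, groups, seen=frozenset()):
    """Expand a principal into user accounts, following nested groups."""
    if principal in seen:
        return set()
    if principal not in groups:
        return {principal}
    return set().union(*(members(m, groups, seen | {principal}) for m in groups[principal]))


def lmp(target, groups, local_admins, sessions):
    """Accounts that can chain local-admin rights to reach `target`'s cached credentials."""
    reached, frontier = set(), [target]
    while frontier:
        victim = frontier.pop()
        for device, logged_on in sessions.items():
            if victim not in logged_on:
                continue
            # Every local admin of a device holding the victim's session reaches the victim.
            for principal in local_admins.get(device, ()):
                for user in members(principal, groups) - reached - {target}:
                    reached.add(user)
                    frontier.append(user)
    return reached


def action_impact(device, principal, sensitive, groups, local_admins, sessions):
    """Per sensitive user, how many non-sensitive users leave its LMP if `principal`
    is removed from the Local administrators group on `device`."""
    after_admins = {d: set(p) for d, p in local_admins.items()}
    after_admins[device].discard(principal)
    impact = {}
    for target in sorted(sensitive):
        before = lmp(target, groups, local_admins, sessions) - sensitive
        after = lmp(target, groups, after_admins, sessions) - sensitive
        impact[target] = len(before - after)
    return impact


def rank_actions(sensitive, groups, local_admins, sessions):
    """Score each local-admin removal by its total impact across all sensitive users."""
    scored = []
    for device, principals in local_admins.items():
        for principal in sorted(principals):
            per_user = action_impact(device, principal, sensitive, groups, local_admins, sessions)
            scored.append((sum(per_user.values()), device, principal, per_user))
    return sorted(scored, key=lambda s: s[0], reverse=True)


if __name__ == "__main__":
    for total, device, principal, per_user in rank_actions(SENSITIVE, GROUPS, LOCAL_ADMINS, SESSIONS):
        print(f"Remove {principal} from Local administrators on {device}: total {total}, per user {per_user}")
```

With this toy data, removing Finance Users from the Finance Server’s Local administrators group ranks first and removes 2 non-sensitive users from the CFO’s LMP, consistent with the example earlier in this post; because the hypothetical CEO shares that server, the same action also shrinks a second sensitive user’s path.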

With this assessment, identity teams can view and take immediate proactive measures on existing risky lateral movement paths, reducing the ways an attacker could compromise sensitive entities and directly improving the organization’s security posture.

For more information about lateral movement paths, see:

* Security assessment: Riskiest lateral movement paths (LMP)

* Azure ATP Lateral Movement Paths

* Tutorial: Use Lateral Movement Paths

* Reduce your potential attack surface using Azure ATP Lateral Movement Paths

* MITRE ATT&CK™ – Lateral movement

Or Tsemah, Product Manager, Azure ATP

This post was written by MVP James Novak as part of the Humans of IT Guest Blogger series. In this article, James discusses how the Power Platform has been a game changer for enterprise application development, and how the impact goes far beyond developers and the IT community.

Back in MY day…

Several years ago, I gave a presentation titled “Dynamics CRM as an xRM Development Platform”, an overview of the benefits of building enterprise line-of-business applications on the Dynamics CRM platform. Some of the key benefits from the presentation:

- Authentication – Dynamics CRM offered built-in authentication with Active Directory.

- Core capabilities – Dynamics CRM shipped with several components, such as system data entities, activity tracking, and email capabilities.

- Customizations – Core Dynamics CRM platform capabilities around data entities were customizable: extending core data entities, creating and editing data entry forms, deploying custom dashboards, and so on.

- Relational data – Dynamics CRM was built on Microsoft SQL Server, so from the start, we worked on an enterprise data platform.

- Extensibility – Beyond customizations, we could extend Dynamics CRM with custom code via Plugins and Custom Workflow Activities or through the built-in service endpoints offered by the platform.

- Deployment – Dynamics CRM was a web-based solution, so even with on-premises deployments, users had no client-side requirements for deployment.

Building solutions on this platform meant a focus on solving business problems rather than building infrastructure and “plumbing” for enterprise applications. Those might be fun challenges for a developer, but they often distract from rolling out useful solutions to my customers.

This flavor of development was often called XRM, meaning “anything relationship management” – business applications built on the relational data platform offered by Dynamics CRM. As powerful as the system was, it still took work to get past the products shipped with the platform, such as Customer Engagement and Sales. XRM development meant removing loads of product elements and only THEN starting to build your solution. Basically, we had to buy a product called CRM only to throw away loads of its capabilities.

Well, not anymore.

Welcome to the new XRM – Power Platform

Over the last few years, Microsoft has implemented a massive architectural overhaul to the Dynamics platform and the result is the Power Platform. That is a bit of an oversimplification, but Dynamics CRM played a key role in the Power Platform evolution.

Power Platform defined – “Powerful data service and app platform to quickly build enterprise-grade apps with automated business processes”. All capabilities described in the list above are now part of the core Power Platform. Put simply, the underlying customizations, relational data modeling, and extensibility capabilities were lifted from the Dynamics CRM platform and made available in the Power Platform.

We can now build “enterprise-grade” applications using these core capabilities. In fact, what we knew as Customer Service, Sales, and Marketing are all now Power Apps built on the Power Platform. Discussing the full list of Power Platform capabilities and features is far beyond what we can cover in a single post, but I want to highlight a few key platform elements relevant to building line-of-business solutions.

- Common Data Service (CDS) – CDS is the new name for the underlying data services offered with the Power Platform. If you have worked with Dynamics CRM, you will recognize Entities and their Attributes, Relationships, Views, and Forms. CDS is also Microsoft’s implementation of the Common Data Model (CDM), “a standard and extensible collection of schemas (entities, attributes, relationships) that represents business concepts and activities with well-defined semantics, to facilitate data interoperability.”

- Model Driven Apps – Model Driven Apps are the presentation layer for CDS, “a component-focused approach to app development.” If you are familiar with Apps in Dynamics 365 CRM, you are already familiar with Model Driven apps. Model Driven Apps allow us to deliver role-based business applications based on the Entities defined in our CDS.

- Canvas Apps – Canvas Apps are the low code, no code platform for building pixel-perfect apps that integrate with a variety of connected and disconnected data sources. These applications can be accessed via the desktop browser or your mobile phone or tablet device. I see Canvas Apps as an excellent platform for targeted user experiences for users that may not work within our Model Driven Apps.

- Power Automate – Power Automate is the next-generation workflow engine for the Power Platform. With Power Automate, we can create automated Flows that interact with our CDS data and literally hundreds of connectors to internal and external data sources. Over time, Power Automate will likely replace our familiar Workflows from Dynamics CRM.

- Power Apps Portals – Power Apps Portals are “external-facing websites that allow users outside their organizations to sign in with a wide variety of identities, create and view data in Common Data Service, or even browse content anonymously.” Formerly Adxstudio Portals, the Portals platform allows makers to interact with users who do not have a Power Platform or Dynamics 365 license. As with Model Driven and Canvas Apps, Power Apps Portals are intended for developers and non-developers alike.

This is not a comprehensive list of the Power Platform capabilities, but all items on this list share two key characteristics – they are “low code, no code”, and they allow for rapid development and deployment. This means that we can build and deploy our enterprise-grade applications rapidly and without the need for experienced developers. This is a big deal, but not just for developers, corporations, consultancies, or the IT community. Rapid application development with the Power Platform can have a huge impact on all of us.

Using Power Platform for Good during COVID-19

A prime example of the Power Platform in action is a solution built by Microsoft in response to the COVID-19 crisis. Microsoft has provided several templates and accelerators that make Power Platform development easier and faster, but one solution is an excellent demonstration of rapid development and deployment that has made an immediate impact.

An internal Microsoft team built a self-screening and test scheduling tool using several Power Platform technologies. The solution provides a public-facing Power Apps Portal where residents register for a COVID-19 screening test by filling out a simple questionnaire. The Portal registration is saved in CDS, and users are then emailed a QR code that they present to a mobile lab technician. When the resident arrives at the testing location, the lab technician uses a mobile Canvas App to scan the QR code and link the resident with their sample. The sample is then sent back to the lab for processing. Once the lab results are complete, a Power Automate Flow pulls them back into CDS for agency users to review.

So, this overall solution includes the following Power Platform components:

- Common Data Service

- Model Driven App

- Canvas App

- Power Apps Portals

- Power Automate
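
The record-linking logic in this workflow can be sketched in a few lines of Python. This is only an illustrative model under stated assumptions: the real solution was built with Power Apps Portals, a Canvas App, CDS, and Power Automate rather than custom code, and the class names, the random token standing in for the QR code contents, and the sample IDs are all hypothetical.

```python
import uuid
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Registration:
    """Hypothetical stand-in for the registration record the Portal saves."""
    resident: str
    answers: Dict[str, object]
    # Opaque value that would be encoded in the QR code emailed to the resident.
    token: str = field(default_factory=lambda: uuid.uuid4().hex)
    sample_id: Optional[str] = None
    result: Optional[str] = None


class ScreeningStore:
    """In-memory stand-in for the data store (CDS in the real solution)."""

    def __init__(self) -> None:
        self.by_token: Dict[str, Registration] = {}
        self.by_sample: Dict[str, Registration] = {}

    def register(self, resident: str, answers: Dict[str, object]) -> str:
        """Portal step: save the questionnaire and return the QR code payload."""
        reg = Registration(resident, answers)
        self.by_token[reg.token] = reg
        return reg.token

    def link_sample(self, scanned_token: str, sample_id: str) -> None:
        """Mobile app step: the scanned QR code identifies the resident's record."""
        reg = self.by_token[scanned_token]
        reg.sample_id = sample_id
        self.by_sample[sample_id] = reg

    def import_results(self, lab_results: Dict[str, str]) -> None:
        """Automated flow step: attach lab outcomes to records by sample ID."""
        for sample_id, outcome in lab_results.items():
            self.by_sample[sample_id].result = outcome


if __name__ == "__main__":
    store = ScreeningStore()
    token = store.register("Resident A", {"has_symptoms": False})
    store.link_sample(token, "S-001")
    store.import_results({"S-001": "negative"})
    print(store.by_token[token])
```

The design point this illustrates is that the QR code only needs to carry an opaque token: the technician’s scan maps it back to the stored registration, and the sample ID then acts as the join key when results come back from the lab.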

The impressive bit about this solution is that it was built in approximately 2 weeks. Yes, I said 2 weeks. Coming from a custom development background, I know that rolling out a new enterprise database, three custom user interfaces, a mobile solution, and a back-end continuous integration, all deployed with secure authentication and self-service registration, would have been impossible in two weeks.

The Impact

This self-screening and test scheduling solution was initially rolled out to Washington, DC and then to the state of New York. You can read an article about the New York rollout at the end of this post. This solution is currently being rolled out to states across the US and locations overseas.

Another impressive bit about this solution is the speed at which it can be rolled out and customized for new locations. The average time to deploy each of these new instances has been about 5 days, from acquiring the environment through go-live. The ability to package and deploy the majority of our solution components is built into the Power Platform, and those application lifecycle management capabilities are being expanded with each release.

This is not just another cool website or widget that Microsoft built. According to the article, “More than 100,000 New Yorkers have already utilized the self-screening and scheduling tool”. That is 100,000 people in New York alone that have been able to register for testing because this solution was built and deployed so quickly. I do believe it’s not an exaggeration to say that this solution can help save lives.

What’s next?

Now that we have all these tools for rapid enterprise development, I think we can build even more solutions that make an impact. So how can you get involved? One avenue is to join a community event, like the work being done by the Hack4Good group. Chris Huntingford recently posted on the Humans of IT blog about the most recent Hack4Good at Microsoft Business Applications Summit:

https://techcommunity.microsoft.com/t5/humans-of-it-blog/guest-blog-hack4good-mbas-combining-community-amp-technology-for/ba-p/1450706

This is one of the best examples of rapid development and making a positive impact – people all over the world sharing ideas and talent for a day to produce workable solutions while raising money for charities.

Further reading

I had just started writing this post when Charles Lamanna published the post linked below. Charles is a CVP at Microsoft and one of the key players in the development of the Power Platform, and his post goes into excellent detail about leveraging the Power Platform for rapid application development.

Rapid application development with Microsoft Power Platform & Azure: https://powerapps.microsoft.com/en-us/blog/rapid-application-development-with-microsoft-power-platform-azure/

You can read the recent article about the New York rollout of the Self Screening and Scheduling solution here, New York State’s Self-Screening and Scheduling Tool Helping to Fight COVID-19: https://www.ny.gov/updates-new-york-state-covid-19-technology-swat-teams/new-york-states-self-screening-and-scheduling

And lastly, here is a list of links to Power Platform documentation. Microsoft has done an excellent job consolidating this documentation and keeping it up to date:

I hope this inspires you to begin your own Power Platform for good journey and help build solutions for our world!

#HumansofIT

#TechforGood

We’ve been putting together guides for classes based on scenarios we hear from customers. We call these ‘class types’. The latest addition to our class type collection covers how to set up a lab for teaching with MATLAB. The article covers both the license server setup and the client software installation.

MATLAB, which stands for Matrix laboratory, is a programming platform from MathWorks. It combines computational power and visualization, making it a popular tool in the fields of math, engineering, physics, and chemistry.

Check it out! https://docs.microsoft.com/azure/lab-services/class-type-matlab

Happy programming,

Lab Services Team

Please join us for another webinar happening on July 7th, 2020 at 8:00 am PST. Chris Jackson and Dominique Kilman, Principal Program Managers at Microsoft, will share their experiences and provide recommendations on how to get started with Microsoft Defender ATP using a phased roadmap. Working with a variety of customers, they have documented a path to enable the many capabilities in Microsoft Defender ATP: what you should enable in the first 30 days, the first 90 days, and what you can look to enable beyond that!

Microsoft Defender ATP is a unified endpoint security platform for preventative protection, post-breach detection, automated investigation, and response. It is a complete solution providing security teams across the organization with threat protection, detection and response, deep and wide optics, and the tools needed to better protect the organization. This webinar will help you get started and get value from the platform as quickly and effectively as possible.

Register for the webinar today: Microsoft Defender ATP: Deploy Microsoft Defender ATP capabilities using a phased roadmap – July 7th, 2020 at 8:00 am PST. Sign up here!

We look forward to seeing you there!

The webcast will be recorded for later viewing if you cannot make the live session.
