by Contributed | Jun 3, 2023 | Technology

This article is contributed. See the original author and article here.

Introduction

Today there is a lot of interest in generative AI, specifically in training and inferencing large language models and the services built on them (e.g., OpenAI GPT-4, DALL·E 2, GitHub Copilot, Azure OpenAI Service). Training these large language models requires a lot of floating-point performance and high interconnect network bandwidth. The Azure NDm_v4 virtual machine is an ideal choice for these demanding jobs because it has 8 A100 GPUs, and each GPU has 200 Gbps of HDR InfiniBand. Kubernetes is a popular choice to deploy and manage containerized workloads on compute/GPU resources, and the Azure Kubernetes Service (AKS) simplifies Kubernetes cluster deployments. We show how to deploy an optimal NDm_v4 (A100) AKS cluster, making sure that all 8 GPUs and 8 InfiniBand devices on each virtual machine come up correctly and are available to deliver optimal performance. A multi-node NCCL allreduce benchmark job is then executed on the NDm_v4 AKS cluster to verify that it is deployed and configured correctly.

Procedure to deploy an NDmv4 (A100) AKS Cluster

We will deploy an AKS cluster from the Azure Cloud Shell using the Azure command-line interface (azcli). The Azure Cloud Shell has azcli preinstalled, but if you prefer to work from your local workstation, instructions to install azcli are here.

Note: There are many other ways to deploy an AKS cluster (e.g., the Azure Portal, ARM templates, Bicep and Terraform are also popular choices).

First we need to install the aks-preview azcli extension, to be able to deploy AKS and control AKS via azcli.

az extension add --name aks-preview

It is also necessary to register InfiniBand support, to make sure all nodes in your pool can communicate over the same InfiniBand network.

az feature register --name AKSInfinibandSupport --namespace Microsoft.ContainerService

Create a resource group for the AKS cluster.

az group create --resource-group <resource-group> --location <location>

For simplicity we will use the default kubenet networking, in which case AKS deploys the VNET and subnet (you could also deploy AKS using CNI and choose your own VNET). A system-assigned managed identity will be used for authentication, and Ubuntu is chosen for the host OS (at the time of writing, the default AKS version deployed was 1.25.6 and the default Ubuntu host OS was Ubuntu 22.04).

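az aks create -g <resource-group> --node-resource-group <node-resource-group> -n <cluster-name> --enable-managed-identity --node-count 2 --generate-ssh-keys -l <location> --node-vm-size Standard_D2s_v3 --nodepool-name <system-pool-name> --os-sku Ubuntu --attach-acr <acr-name>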

Then deploy the NDmv4 AKS pool. (Initially only one NDmv4 VM, later we will scale up the AKS cluster).

Note: Make sure you have sufficient NDmv4 quota in your subscription/location.

A specific tag (SkipGPUDriverInstall=true) needs to be set to prevent the GPU driver from being installed automatically (we will use the NVIDIA GPU operator to install the GPU driver instead). Some container images can be quite large, so we use a larger OS disk size (128 GB).

az aks nodepool add --resource-group <resource-group> --cluster-name <cluster-name> --name <nodepool-name> --node-count 1 --node-vm-size Standard_ND96amsr_A100_v4 --node-osdisk-size 128 --os-sku Ubuntu --tags SkipGPUDriverInstall=true

Get credentials to connect and interact with the AKS Cluster.

az aks get-credentials --overwrite-existing --resource-group <resource-group> --name <cluster-name>

Check that the AKS pools are ready.

kubectl get nodes

Install the NVIDIA network and GPU operators. They will be used to install specific InfiniBand and GPU drivers (in this case OFED 5.8-1.0.1.1.2 and GPU driver 525.60.13).

#! /bin/bash

# Apply required manifests

kubectl get namespace nvidia-operator 2>/dev/null || kubectl create namespace nvidia-operator

# Install node feature discovery

helm upgrade -i --wait \
  -n nvidia-operator node-feature-discovery node-feature-discovery \
  --repo https://kubernetes-sigs.github.io/node-feature-discovery/charts \
  --set-json master.nodeSelector='{"kubernetes.azure.com/mode": "system"}' \
  --set-json worker.nodeSelector='{"kubernetes.azure.com/accelerator": "nvidia"}' \
  --set-json worker.config.sources.pci.deviceClassWhitelist='["02","03","0200","0207"]' \
  --set-json worker.config.sources.pci.deviceLabelFields='["vendor"]'

# Install the network-operator

helm upgrade -i --wait \
  -n nvidia-operator network-operator network-operator \
  --repo https://mellanox.github.io/network-operator \
  --set deployCR=true \
  --set nfd.enabled=false \
  --set ofedDriver.deploy=true \
  --set ofedDriver.version="5.8-1.0.1.1.2" \
  --set secondaryNetwork.deploy=false \
  --set sriovDevicePlugin.deploy=true \
  --set-json sriovDevicePlugin.resources='[{"name": "infiniband", "vendors": ["15b3"], "devices": ["101c"]}]' \
  --set sriovNetworkOperator.enabled=false

# If you want to enable IPoIB, change secondaryNetwork.deploy to true and add the following flags:

# --set secondaryNetwork.multus.deploy=true

# --set secondaryNetwork.cniPlugins.deploy=true

# --set secondaryNetwork.ipamPlugin.deploy=true

# Install the gpu-operator

helm upgrade -i --wait \
  -n nvidia-operator gpu-operator gpu-operator \
  --repo https://helm.ngc.nvidia.com/nvidia \
  --set nfd.enabled=false \
  --set driver.enabled=true \
  --set driver.version="525.60.13" \
  --set driver.rdma.enabled=true \
  --set toolkit.enabled=true

Verify that the InfiniBand and GPU drivers have been installed. You should see 8 InfiniBand devices and 8 GPUs per NDm_v4 VM.

kubectl describe node | grep -e "nvidia.com/infiniband" -e "nvidia.com/gpu"
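
The same check can be scripted. The following is a minimal sketch, not part of the original procedure: it assumes you have saved the output of `kubectl get nodes -o json` and loaded it into a Python dict, and it assumes GPU nodes carry the `kubernetes.azure.com/accelerator: nvidia` label used in the node selector above. The function name `nodes_missing_devices` and the sample node names are hypothetical.

```python
# Sketch: flag GPU nodes that do not expose 8 GPUs and 8 InfiniBand devices.
# Input is the parsed JSON from `kubectl get nodes -o json` (an assumption).

EXPECTED = {"nvidia.com/gpu": 8, "nvidia.com/infiniband": 8}
GPU_LABEL = ("kubernetes.azure.com/accelerator", "nvidia")  # assumed node label


def nodes_missing_devices(node_list, expected=EXPECTED):
    """Return {node_name: {resource: allocatable_count}} for GPU nodes that
    do not report the expected device counts."""
    problems = {}
    for node in node_list.get("items", []):
        labels = node["metadata"].get("labels", {})
        if labels.get(GPU_LABEL[0]) != GPU_LABEL[1]:
            continue  # skip system-pool nodes, which have no GPUs
        allocatable = node["status"].get("allocatable", {})
        found = {res: int(allocatable.get(res, 0)) for res in expected}
        if found != expected:
            problems[node["metadata"]["name"]] = found
    return problems


# Hypothetical sample data: one healthy node, one with a missing IB device.
sample = {
    "items": [
        {
            "metadata": {"name": "gpu-node-0",
                         "labels": {"kubernetes.azure.com/accelerator": "nvidia"}},
            "status": {"allocatable": {"nvidia.com/gpu": "8",
                                       "nvidia.com/infiniband": "8"}},
        },
        {
            "metadata": {"name": "gpu-node-1",
                         "labels": {"kubernetes.azure.com/accelerator": "nvidia"}},
            "status": {"allocatable": {"nvidia.com/gpu": "8",
                                       "nvidia.com/infiniband": "7"}},
        },
        {
            "metadata": {"name": "system-node-0", "labels": {}},
            "status": {"allocatable": {"cpu": "2"}},
        },
    ]
}

print(nodes_missing_devices(sample))
```

An empty result means every labeled GPU node reports the expected 8 GPUs and 8 InfiniBand devices.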

Install Volcano Kubernetes scheduler to make it easier to submit HPC/AI tightly-coupled jobs.

kubectl apply -f https://raw.githubusercontent.com/volcano-sh/volcano/release-1.7/installer/volcano-development.yaml

Check that the Volcano kubernetes scheduler was installed correctly.

kubectl get all -n volcano-system

Create NCCL collective test container

Here is the Dockerfile that was used to create the NCCL collective test container. The NVIDIA NGC PyTorch (23.03) container was used as the base.

nccl-tests.sh script to build the NCCL collective tests.

#!/bin/bash

git clone https://github.com/NVIDIA/nccl-tests.git

cd nccl-tests

make MPI=1 MPI_HOME=/usr/local/mpi

Dockerfile

ARG FROM_IMAGE_NAME=nvcr.io/nvidia/pytorch:23.03-py3

FROM ${FROM_IMAGE_NAME}

RUN apt update

RUN apt-get -y install build-essential

RUN apt-get -y install infiniband-diags

RUN apt-get -y install openssh-server

RUN apt-get -y install kmod

COPY nccl-tests.sh .

RUN ./nccl-tests.sh

COPY ndv4-topo.xml .

Login to your Azure container registry, where your custom container will be stored.

az acr login -n <acr-name>

Build your container locally on an NDmv4 VM. First change to the directory containing your Dockerfile.

docker build -t <acr-name>.azurecr.io/<image-name>:<tag> .

Push your local container to your Azure container registry.

docker push <acr-name>.azurecr.io/<image-name>:<tag>

Run NCCL allreduce benchmark on NDmv4 AKS Cluster

The NVIDIA NCCL collective communication tests are ideal to verify that the NDv4 AKS cluster is set-up correctly for optimal performance. On 2 NDmv4 nodes (16 A100), NCCL allreduce should be ~186 GB/s.

We will use the docker container we created in the previous section and submit the NCCL allreduce benchmark using the Volcano scheduler.

Scale up the NDmv4 AKS cluster to 2 NDmv4 VMs (16 A100).

az aks nodepool scale --resource-group <resource-group> --cluster-name <cluster-name> --name <nodepool-name> --node-count 2

Here is the NCCL allreduce benchmark yaml script.

apiVersion: batch.volcano.sh/v1alpha1
kind: Job
metadata:
  name: nccl-allreduce-job1
spec:
  minAvailable: 3
  schedulerName: volcano
  plugins:
    ssh: []
    svc: []
  tasks:
    - replicas: 1
      name: mpimaster
      policies:
        - event: TaskCompleted
          action: CompleteJob
      template:
        spec:
          containers:
            - command:
                - /bin/bash
                - -c
                - |
                  MPI_HOST=$(cat /etc/volcano/mpiworker.host | tr "\n" ",")
                  mkdir -p /var/run/sshd; /usr/sbin/sshd
                  echo "HOSTS: $MPI_HOST"
                  mpirun --allow-run-as-root -np 16 -npernode 8 --bind-to numa --map-by ppr:8:node -hostfile /etc/volcano/mpiworker.host -x NCCL_DEBUG=info -x UCX_TLS=tcp -x NCCL_TOPO_FILE=/workspace/ndv4-topo.xml -x UCX_NET_DEVICES=eth0 -x CUDA_DEVICE_ORDER=PCI_BUS_ID -x NCCL_SOCKET_IFNAME=eth0 -mca coll_hcoll_enable 0 /workspace/nccl-tests/build/all_reduce_perf -b 8 -f 2 -g 1 -e 8G -c 1 | tee /home/re
              image: cgacr2.azurecr.io/pytorch_nccl_tests_2303:latest
              securityContext:
                capabilities:
                  add: ["IPC_LOCK"]
                privileged: true
              name: mpimaster
              ports:
                - containerPort: 22
                  name: mpijob-port
              workingDir: /workspace
              resources:
                requests:
                  cpu: 1
          restartPolicy: OnFailure
    - replicas: 2
      name: mpiworker
      template:
        spec:
          containers:
            - command:
                - /bin/bash
                - -c
                - |
                  mkdir -p /var/run/sshd; /usr/sbin/sshd -D;
              image: cgacr2.azurecr.io/pytorch_nccl_tests_2303:latest
              securityContext:
                capabilities:
                  add: ["IPC_LOCK"]
                privileged: true
              name: mpiworker
              ports:
                - containerPort: 22
                  name: mpijob-port
              workingDir: /workspace
              resources:
                requests:
                  nvidia.com/gpu: 8
                  nvidia.com/infiniband: 8
                limits:
                  nvidia.com/gpu: 8
                  nvidia.com/infiniband: 8
              volumeMounts:
                - mountPath: /dev/shm
                  name: shm
          restartPolicy: OnFailure
          terminationGracePeriodSeconds: 0
          volumes:
            - name: shm
              emptyDir:
                medium: Memory
                sizeLimit: 8Gi
---

Note: Modify the ACR (cgacr2) and the container name (pytorch_nccl_tests_2303:latest) in the above script.
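
If you run the benchmark on more than 2 NDmv4 nodes, several values in the job script have to change together. The sketch below is an illustration rather than part of the original article: it assumes one mpimaster pod plus one mpiworker pod per node and 8 GPUs per node, as in the 2-node job above, and derives the matching values. The function name `job_sizing` is hypothetical.

```python
# Sketch: derive consistent Volcano job and mpirun values for N worker nodes,
# assuming one mpiworker pod per NDmv4 node and 8 GPUs per node.

GPUS_PER_NODE = 8


def job_sizing(worker_nodes, gpus_per_node=GPUS_PER_NODE):
    """Return the values that must stay in sync in the job script."""
    if worker_nodes < 1:
        raise ValueError("at least one worker node is required")
    return {
        "mpiworker_replicas": worker_nodes,         # replicas of the mpiworker task
        "min_available": worker_nodes + 1,          # workers plus the mpimaster pod
        "mpirun_np": worker_nodes * gpus_per_node,  # total MPI ranks (-np)
        "mpirun_npernode": gpus_per_node,           # ranks per node (-npernode)
    }


print(job_sizing(2))
```

For 2 worker nodes this reproduces the values used above (`replicas: 2`, `minAvailable: 3`, `-np 16`, `-npernode 8`).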

Check the output

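kubectl logs <mpimaster-pod-name>

You should see ~186 GB/s bus bandwidth for large message sizes. In the nccl-tests output below, the columns are message size (bytes), element count, data type, reduction operation and root, followed by time (us), algorithm bandwidth (GB/s), bus bandwidth (GB/s) and error count, first for the out-of-place run and then for the in-place run.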

8 2 float sum -1 38.15 0.00 0.00 0 31.44 0.00 0.00 0

16 4 float sum -1 33.06 0.00 0.00 0 31.67 0.00 0.00 0

32 8 float sum -1 31.27 0.00 0.00 0 31.14 0.00 0.00 0

64 16 float sum -1 31.91 0.00 0.00 0 31.42 0.00 0.00 0

128 32 float sum -1 32.12 0.00 0.01 0 31.64 0.00 0.01 0

256 64 float sum -1 33.79 0.01 0.01 0 33.14 0.01 0.01 0

512 128 float sum -1 35.12 0.01 0.03 0 34.55 0.01 0.03 0

1024 256 float sum -1 35.38 0.03 0.05 0 34.99 0.03 0.05 0

2048 512 float sum -1 38.72 0.05 0.10 0 37.35 0.05 0.10 0

4096 1024 float sum -1 39.20 0.10 0.20 0 38.94 0.11 0.20 0

8192 2048 float sum -1 46.89 0.17 0.33 0 43.53 0.19 0.35 0

16384 4096 float sum -1 50.02 0.33 0.61 0 49.28 0.33 0.62 0

32768 8192 float sum -1 59.52 0.55 1.03 0 54.29 0.60 1.13 0

65536 16384 float sum -1 71.60 0.92 1.72 0 68.39 0.96 1.80 0

131072 32768 float sum -1 79.46 1.65 3.09 0 76.06 1.72 3.23 0

262144 65536 float sum -1 80.70 3.25 6.09 0 79.49 3.30 6.18 0

524288 131072 float sum -1 89.90 5.83 10.94 0 90.97 5.76 10.81 0

1048576 262144 float sum -1 104.8 10.00 18.75 0 105.6 9.93 18.62 0

2097152 524288 float sum -1 140.0 14.98 28.08 0 133.6 15.70 29.44 0

4194304 1048576 float sum -1 150.6 27.84 52.21 0 151.4 27.70 51.93 0

8388608 2097152 float sum -1 206.6 40.61 76.14 0 204.0 41.11 77.09 0

16777216 4194304 float sum -1 389.0 43.13 80.86 0 386.2 43.45 81.46 0

33554432 8388608 float sum -1 617.4 54.35 101.90 0 608.5 55.14 103.39 0

67108864 16777216 float sum -1 949.0 70.71 132.59 0 939.4 71.44 133.95 0

134217728 33554432 float sum -1 1687.9 79.52 149.09 0 1647.8 81.45 152.72 0

268435456 67108864 float sum -1 3019.6 88.90 166.68 0 3026.4 88.70 166.31 0

536870912 134217728 float sum -1 5701.8 94.16 176.55 0 5745.8 93.44 175.20 0

1073741824 268435456 float sum -1 11029 97.36 182.54 0 11006 97.56 182.92 0

2147483648 536870912 float sum -1 21588 99.48 186.52 0 21668 99.11 185.83 0

4294967296 1073741824 float sum -1 42935 100.03 187.56 0 42949 100.00 187.50 0

8589934592 2147483648 float sum -1 85442 100.54 188.50 0 85507 100.46 188.36 0

# Out of bounds values : 0 OK

# Avg bus bandwidth : 56.6365
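
To relate the columns to each other: nccl-tests reports algorithm bandwidth as message size divided by time, and for allreduce it scales that by 2(n-1)/n, where n is the number of ranks, to obtain bus bandwidth. The following sketch recomputes both values for the last row of the output above, assuming 16 ranks (2 nodes with 8 GPUs each). The function names are hypothetical, and small differences from the reported values come from rounding in the printed time.

```python
# Sketch: recompute algbw and busbw for one all_reduce_perf result row.

def allreduce_bandwidth(size_bytes, time_us, ranks):
    """Return (algbw, busbw) in GB/s for an allreduce of size_bytes
    that took time_us microseconds across `ranks` GPUs."""
    algbw = size_bytes / time_us / 1e3       # bytes per microsecond -> GB/s
    busbw = algbw * 2 * (ranks - 1) / ranks  # allreduce bus bandwidth factor
    return algbw, busbw


def parse_row(line):
    """Parse one result row into size, out-of-place time, and reported
    out-of-place algbw and busbw."""
    f = line.split()
    return {"size": int(f[0]), "time_us": float(f[5]),
            "algbw": float(f[6]), "busbw": float(f[7])}


row = parse_row("8589934592 2147483648 float sum -1 85442 100.54 188.50 0 "
                "85507 100.46 188.36 0")
algbw, busbw = allreduce_bandwidth(row["size"], row["time_us"], ranks=16)
print(f"algbw={algbw:.2f} GB/s (reported {row['algbw']})")
print(f"busbw={busbw:.2f} GB/s (reported {row['busbw']})")
```

Note that the "Avg bus bandwidth" line averages over all message sizes, including the small latency-bound ones, which is why it is far below the large-message figure.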

Conclusion

Correct deployment of NDmv4 Kubernetes pools using the Azure Kubernetes Service is critical to getting the expected performance. NCCL collective tests (e.g., allreduce) are excellent benchmarks for verifying that the cluster is set up correctly and achieves the expected high performance of NDmv4 VMs.

by Contributed | Jun 2, 2023 | Technology

MariaDB is the open-source relational database management system created by MySQL’s original developers. MariaDB is designed to be highly compatible with MySQL. It supports the same SQL syntax, data types, and connectors as MySQL, allowing for a seamless transition for applications or databases that previously used MySQL.

Recently we’ve had many customers asking for guidance on moving from MariaDB to MySQL. To address this request, this blog post focuses on moving from Azure Database for MariaDB version 10.3 to Azure Database for MySQL version 5.7 using the MySQL Shell (MySQLSh) client. However, the same process, with minor changes, can help migrate other compatible MariaDB-MySQL version pairs.

Preparation

Before beginning, it’s important to assess any application changes or workarounds that are required to ensure a smoother migration. In real-world scenarios there are often challenges, so testing an application with the target MySQL 5.7 becomes crucial before starting the migration. To understand the potential incompatibilities between MariaDB and MySQL, refer to the following documents.

Prerequisites

Before starting this process, ensure that the following prerequisites are in place:

- A source instance of Azure Database for MariaDB running version 10.3.

- A target instance of Azure Database for MySQL running version 5.7.

- Network connectivity established (either via private or public network) between the source and target so that they can communicate with each other.

Migration overview

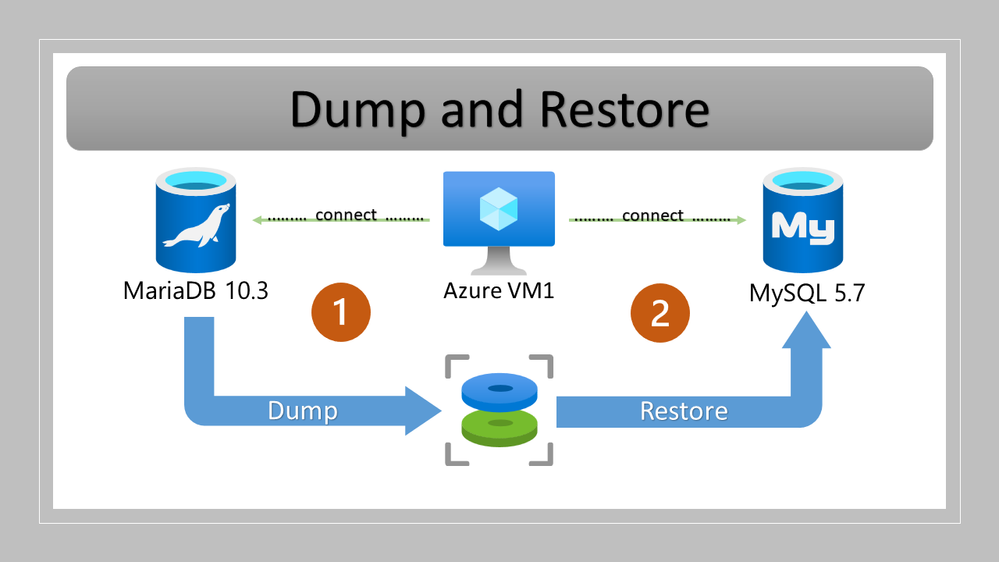

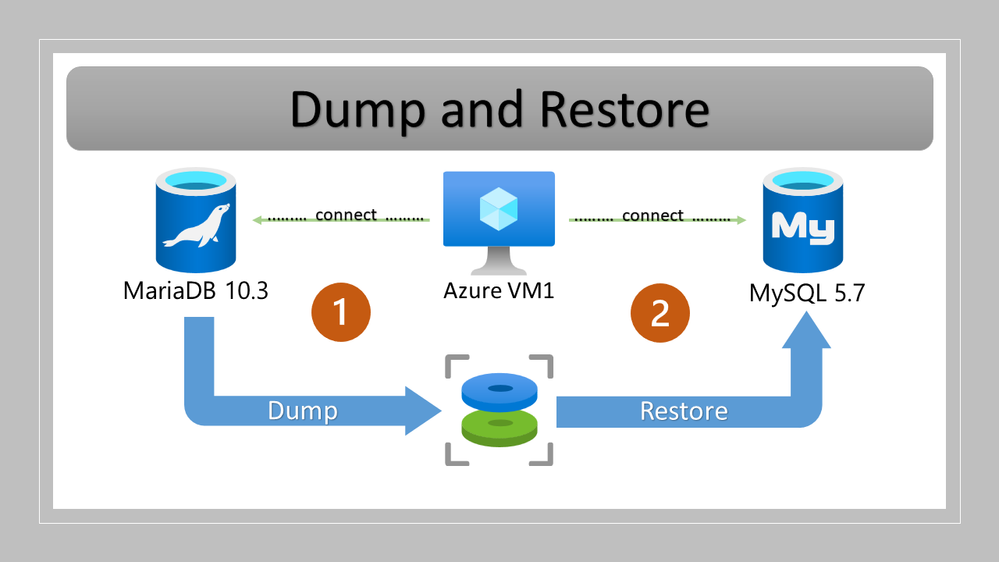

The process of migrating from Azure Database for MariaDB to Azure Database for MySQL involves:

- Backing up your Azure Database for MariaDB instance using MySQLSh.

- Restoring the backup to your Azure Database for MySQL instances using MySQLSh.

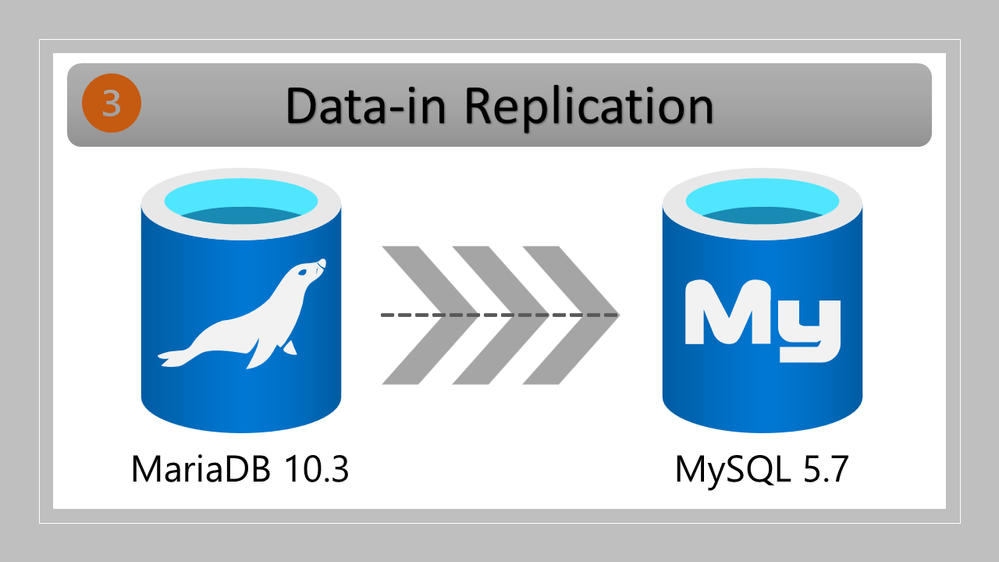

- Configuring and starting replication on your Azure Database for MariaDB instance, sending data to your Azure Database for MySQL instance.

- Performing cutover when the replication lag reaches zero.

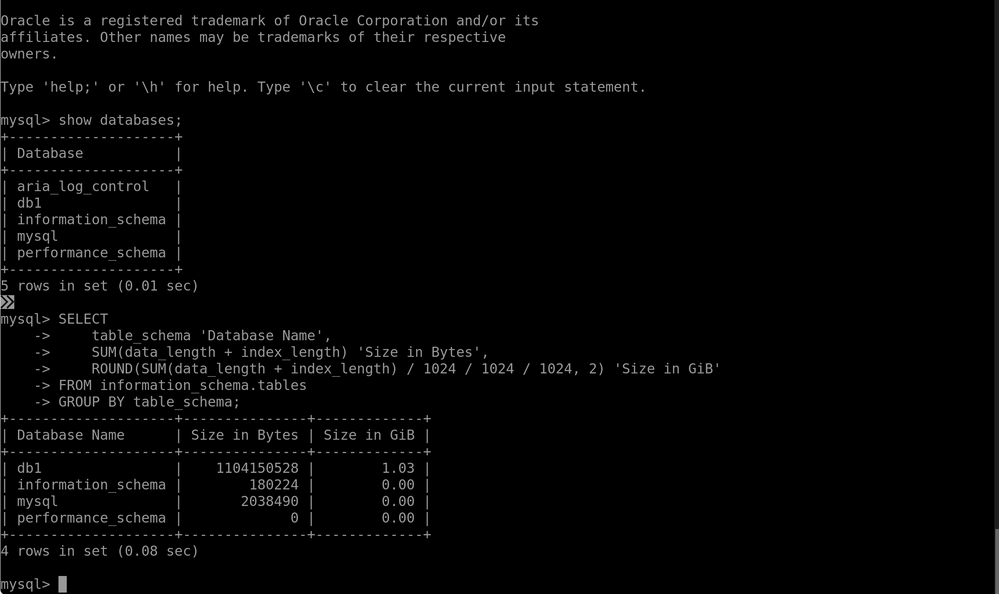

Note: For reference, the name and size of the initial database is shown in the following graphic.

Procedure

To back up your Azure Database for MariaDB instance using MySQLSh, perform the following steps.

- Connect to mysqlsh prompt by running the following command:

Syntax

mysqlsh --uri <user>%40<server-name>@<server-fqdn>:<port>

Example command

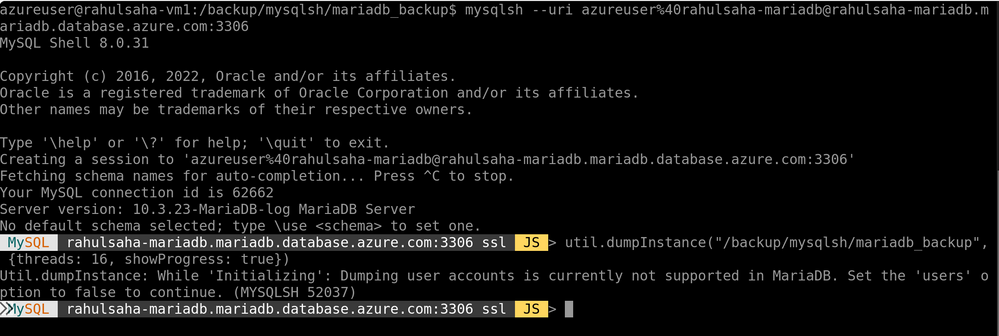

mysqlsh --uri azureuser%40rahulsaha-mariadb@rahulsaha-mariadb.mariadb.database.azure.com:3306
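
The `%40` in the URI is the percent-encoded `@` of the `user@servername` login: it has to be escaped so that the only literal `@` separates the login from the host. As a small illustration (not part of the original post), the sketch below builds the URI from its parts with Python's standard library. The function name `mysqlsh_uri` is hypothetical.

```python
# Sketch: build the mysqlsh --uri value, percent-encoding the '@' in the login.
from urllib.parse import quote


def mysqlsh_uri(user, server_name, host, port=3306):
    """Return '<user>%40<server_name>@<host>:<port>'."""
    login = quote(f"{user}@{server_name}", safe="")  # '@' becomes '%40'
    return f"{login}@{host}:{port}"


uri = mysqlsh_uri("azureuser", "rahulsaha-mariadb",
                  "rahulsaha-mariadb.mariadb.database.azure.com")
print(uri)
```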

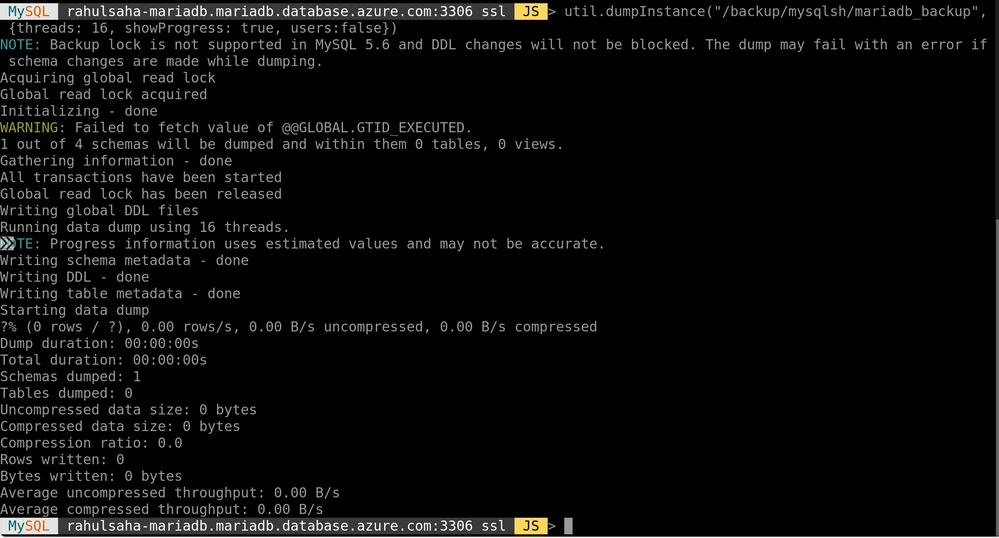

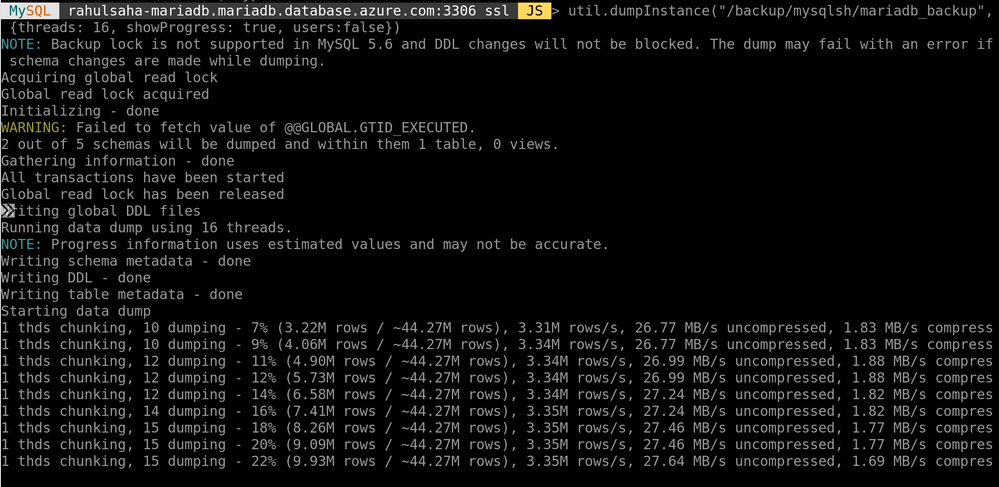

- To take a full backup (which will also include the master binlog file number and position) of the source server, at the mysqlsh prompt, run the following command:

Syntax

util.dumpInstance("<backup-directory>", {threads: 16, showProgress: true, users: false})

Example command

util.dumpInstance("/backup/mysqlsh/mariadb_backup", {threads: 16, showProgress: true, users: false})

Example output

- To restore the backup to Azure Database for MySQL using MySQLSh, run the following command.

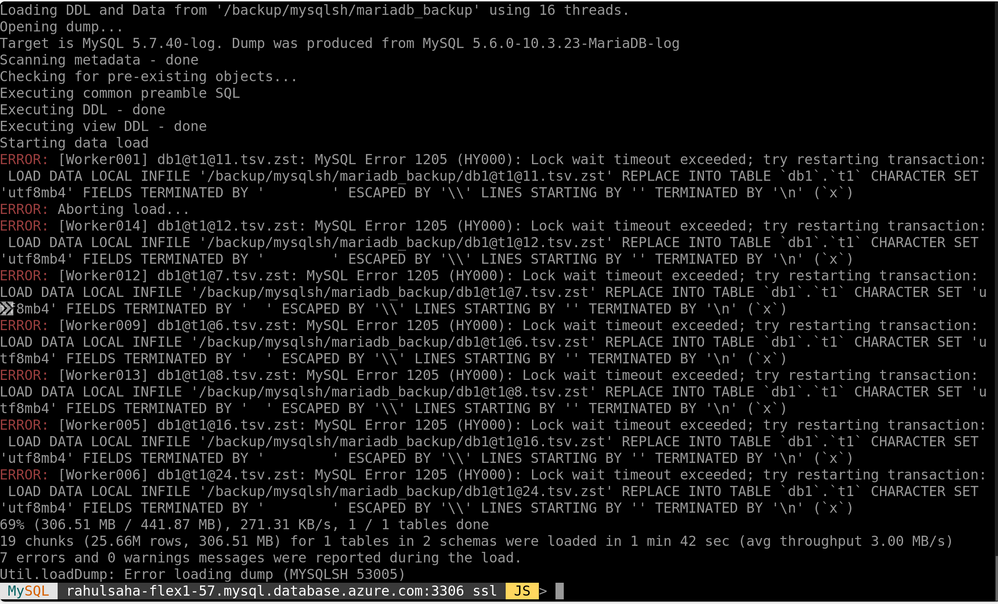

util.loadDump("/backup/mysqlsh/mariadb_backup", {threads: 16, showProgress: true})

Example output

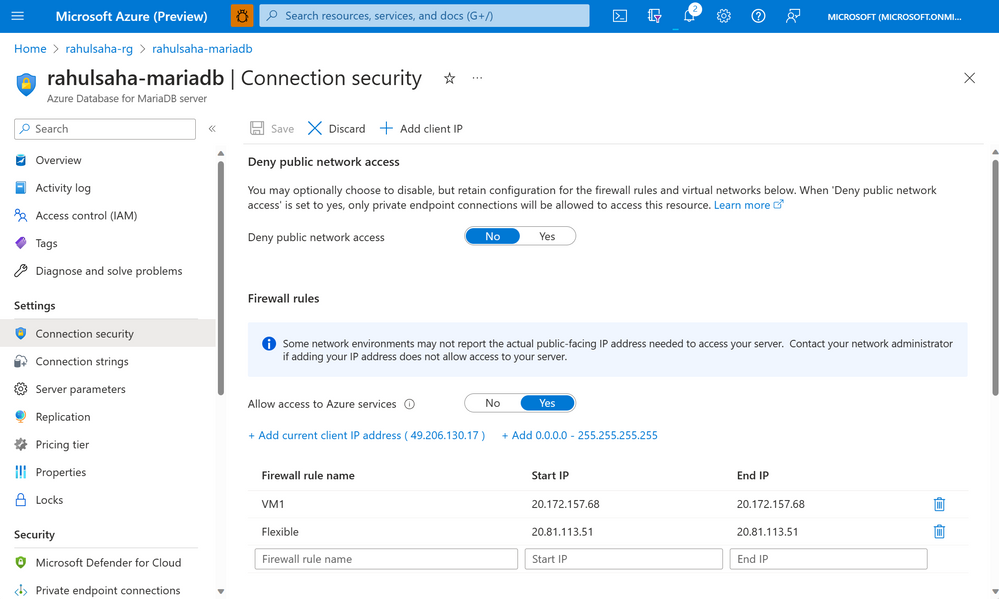

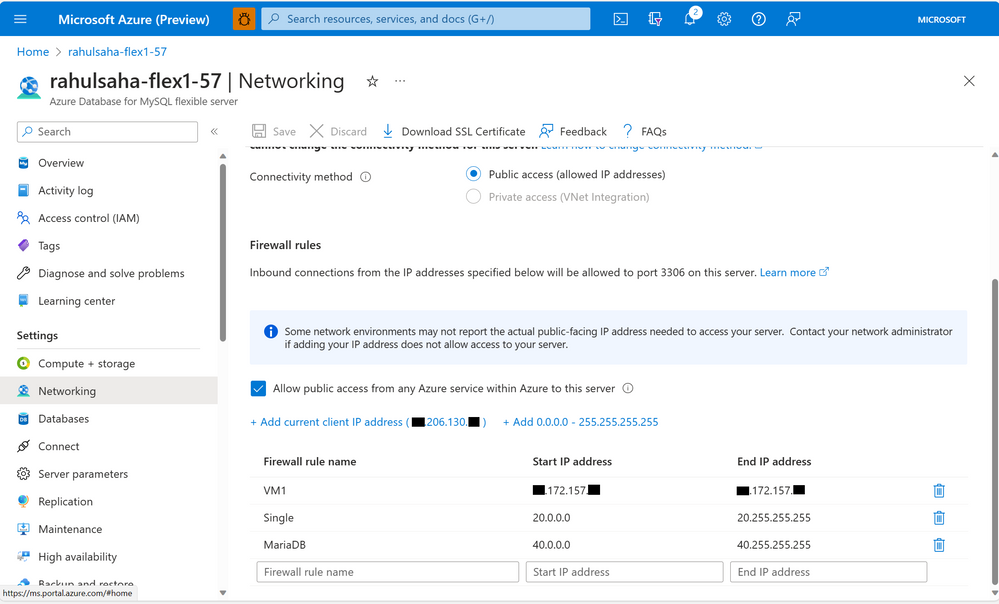

Next, I need to set up replication from Azure Database for MariaDB to Azure Database for MySQL. Before setting up replication, however, it's important to ensure that the firewalls of both the Azure Database for MariaDB and Azure Database for MySQL servers are configured to allow the servers to communicate with each other.

- To configure network settings on the source Azure Database for MariaDB server, ensure that the IP addresses of the VM used to perform the migration and the target Azure Database for MySQL server are whitelisted in the firewall on the source Azure Database for MariaDB server, as shown below.

- To configure network settings on the target Azure Database for MySQL server, ensure that the IP addresses of the VM used to perform the migration and the source Azure Database for MariaDB server are whitelisted in the firewall on the target Azure Database for MySQL server, as shown below.

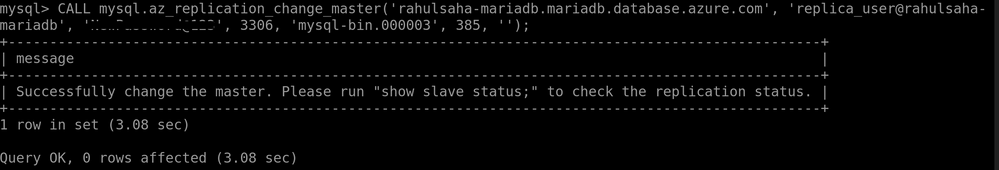

- To configure the replication, use the stored procedure mysql.az_replication_change_master, as shown below.

CALL mysql.az_replication_change_master('rahulsaha-mariadb.mariadb.database.azure.com', 'replica_user@rahulsaha-mariadb', 'StrongPass', 3306, 'mysql-bin.000003', 385, '');

Example output

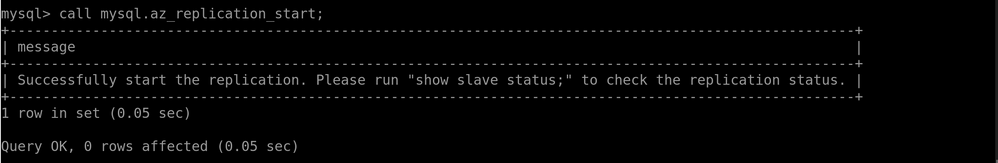

- To start the replication, use the stored procedure mysql.az_replication_start, as shown below.

call mysql.az_replication_start;

Example output

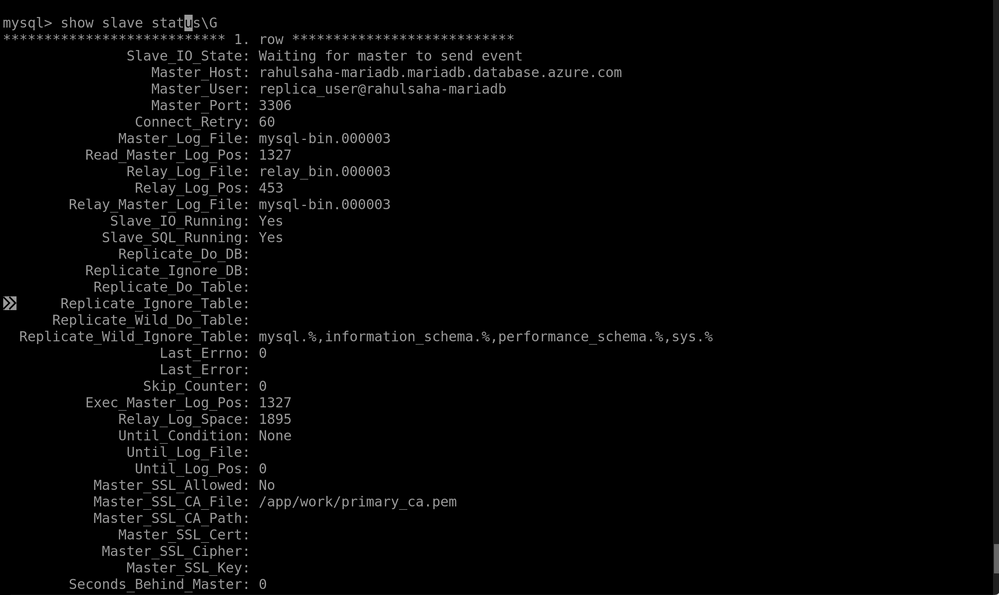

- Monitor the replication by running the command below until the Seconds_Behind_Master value displays as 0.

mysql> show slave status\G

Example output

- Finally, point the application to the target Azure Database for MySQL server by updating the connection string(s) as necessary.
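
The monitoring step above can also be scripted. The following is a minimal sketch under stated assumptions: it takes the text printed by `show slave status\G` (captured however you prefer) and extracts the Seconds_Behind_Master field. The function names and the sample status text are hypothetical and only illustrate the field layout.

```python
# Sketch: decide whether replication has caught up, from the text output
# of `show slave status\G`.

def seconds_behind_master(status_text):
    """Return the lag in seconds, or None if the server reports NULL
    (for example, when replication is not running)."""
    for line in status_text.splitlines():
        key, sep, value = line.strip().partition(":")
        if sep and key.strip() == "Seconds_Behind_Master":
            value = value.strip()
            return None if value.upper() == "NULL" else int(value)
    raise KeyError("Seconds_Behind_Master not found in status output")


def ready_for_cutover(status_text):
    """True only when the replica reports zero lag."""
    return seconds_behind_master(status_text) == 0


# Hypothetical, abbreviated sample of the \G output format.
sample_status = """
*************************** 1. row ***************************
               Slave_IO_State: Waiting for master to send event
             Slave_IO_Running: Yes
            Slave_SQL_Running: Yes
        Seconds_Behind_Master: 0
"""

print(ready_for_cutover(sample_status))
```

A NULL value is treated as not ready, since it usually means the replication threads are not running rather than that the replica has caught up.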

Limitations

- MySQLShell doesn’t support backing up users. While creating the backup, be sure not to include users by using the following command:

MySQL rahulsaha-mariadb.mariadb.database.azure.com:3306 ssl JS > util.dumpInstance("/backup/mysqlsh/mariadb_backup", {threads: 16, showProgress: true, users: false})

Example output

- Restore error – Lock wait timeout exceeded. Locking can happen between transactions when loading data to MySQL, which may result in throwing the following error:

MySQL Error 1205 (HY000): Lock wait timeout exceeded; try restarting transaction:…

Example output

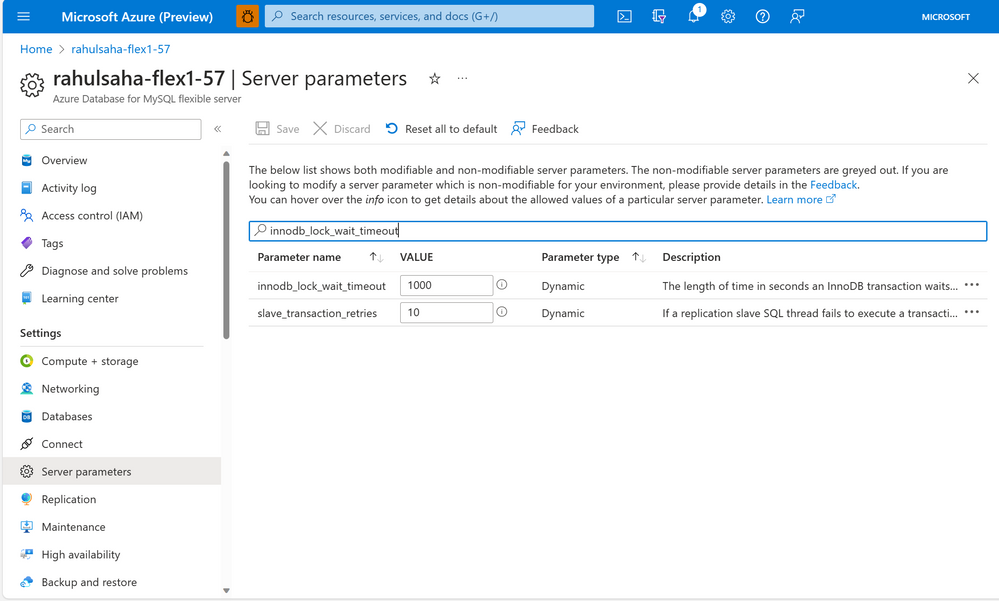

If you get this error, you can work around it by increasing the value of the “innodb_lock_wait_timeout” parameter in the Azure portal (as shown below), and then continuing the migration.

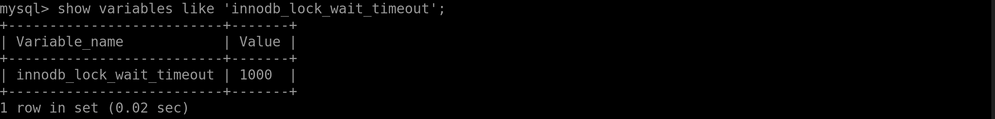

Then, verify the change from the MySQL client by running the following command:

SHOW VARIABLES LIKE 'innodb_lock_wait_timeout';

Example output

Conclusion

Migrating from Azure Database for MariaDB to Azure Database for MySQL using dump and restore tools, together with replication to minimize the downtime needed for cutover, is not a difficult process. However, be sure to test and benchmark thoroughly to reduce the chance of issues during and after migration, because every database and application architecture is different.

If you have any feedback or questions about the information supplied above, please leave a comment below or email us at AskAzureDBforMariaDB@service.microsoft.com. Thank you!

by Contributed | Jun 2, 2023 | Technology

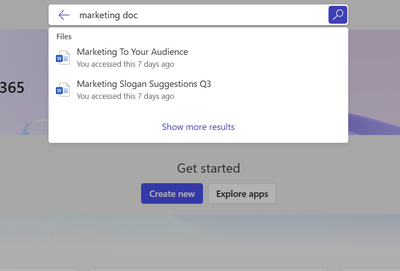

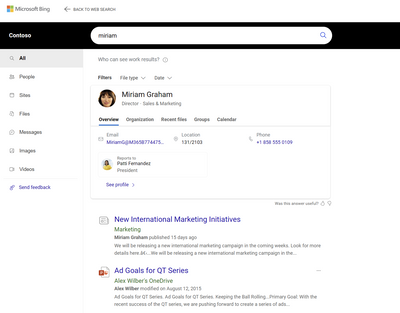

Across Microsoft solutions, it is possible to search for work/organizational content in many different entry points, such as Office.com, Sharepoint.com and Microsoft Bing – Work tab.

Office.com and Sharepoint.com are great places to start a search across all your personal content and the content accessible to you within your organization, including shared documents. They offer capabilities that help you navigate content in the way that suits you best.

This post will guide you through some of the most frequent work search tasks and demonstrate how you can achieve them with different methods. You will learn how to optimize your search experience and access the information you need efficiently.

It all starts with the Search box.

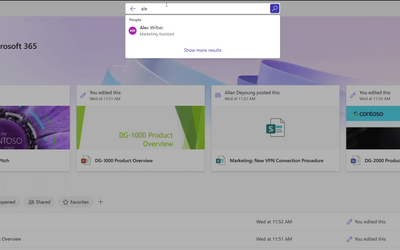

The Search box can help you find your recent content quickly and easily. It shows you suggestions of Files, People, and Apps that you interact with frequently.

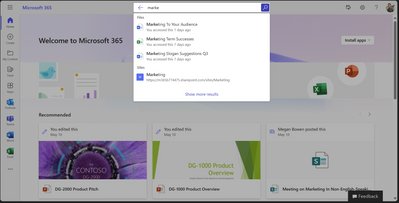

What starting your search in Office.com or Sharepoint.com looks like

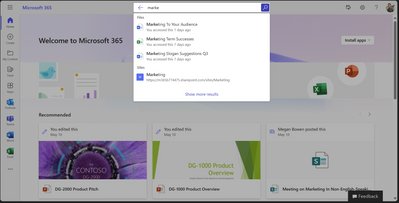

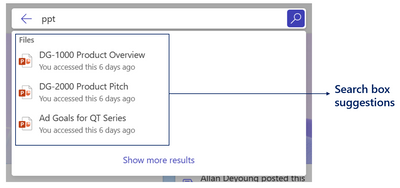

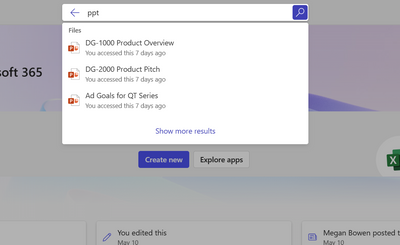

You can also use keywords like “ppt” or “doc” to refine the results you are seeing in the Search box suggestions.

Retrieve Word documents by using ‘doc’ keyword.

Retrieve PowerPoint documents by using ‘ppt’ keyword.

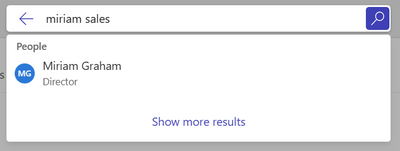

You can also get People results in Search box suggestions based on their phone number or department.

People search suggestions based on phone number.

People search suggestions based on department name.

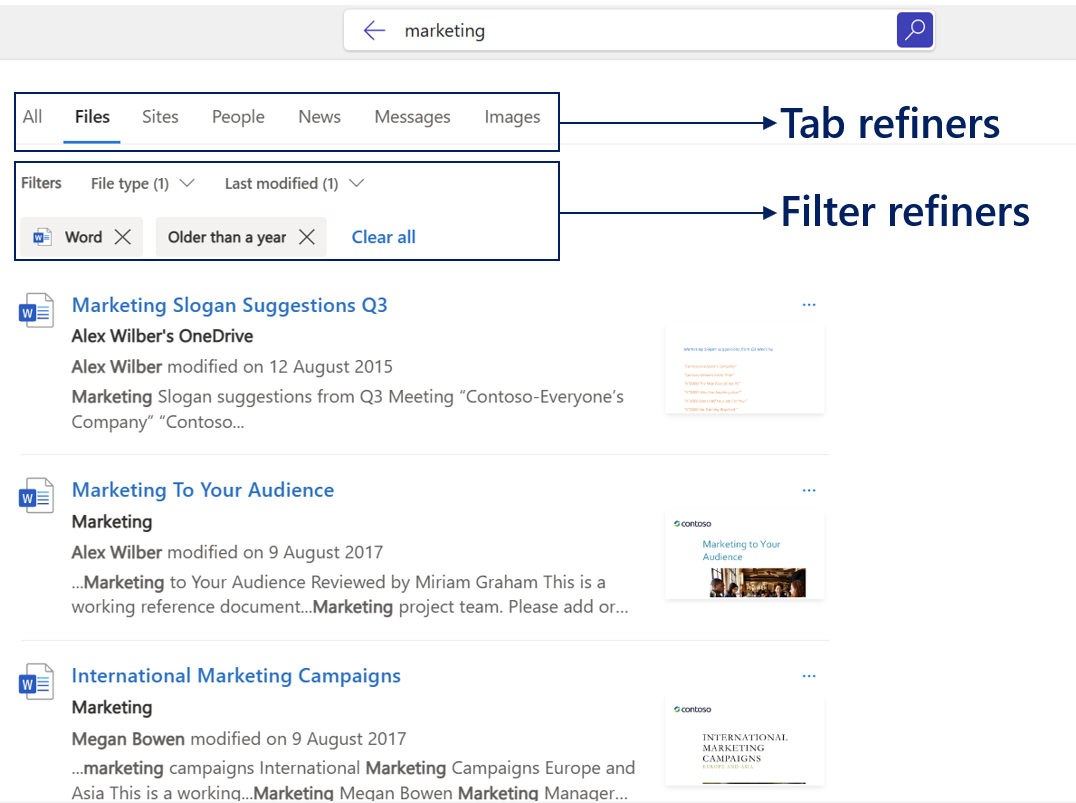

Navigating to Search Results Page

If you don’t see what you are looking for in Search box suggestions, press enter or click ‘Show more results’ to go to the results page to explore more content. You can use different tabs or filters to refine the results you are seeing.

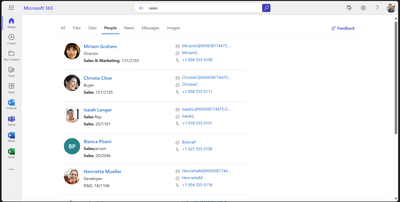

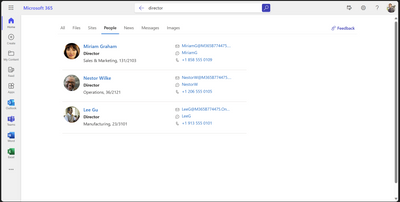

As an example, you can use People tab and different keywords to search for people based on their department or job title.

Search for People based on department name.

Search for People based on job title.

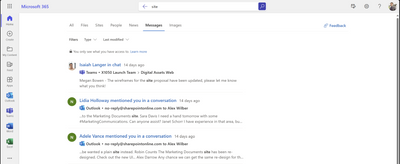

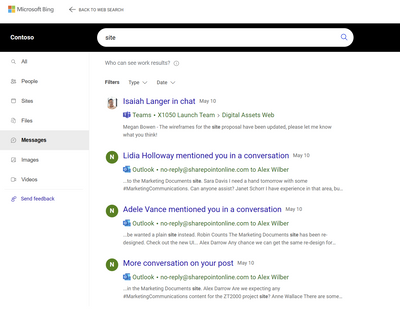

Or go to Messages tab to find an Email or a Teams message with specific keywords.

Email and Teams messages search.

Easily navigate through your own content

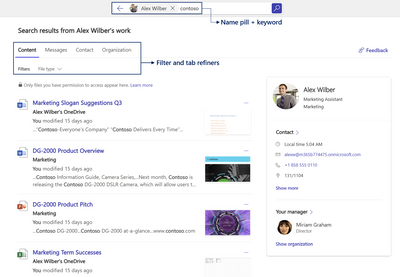

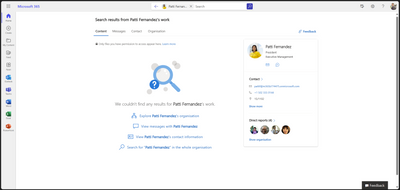

Navigating through your own content daily can be challenging. Sometimes you may want to search for a specific document you created or edited, or a message/email you sent. This can be easily done by typing your name in the Search box and clicking on the Search box suggestion. This opens up a Person Centric Search view shown below.

It is worth noting that the Messages tab is currently available in Office.com and is yet to come to Sharepoint.com.

Navigating to People Centric Search view by clicking on suggestions in the Search Box

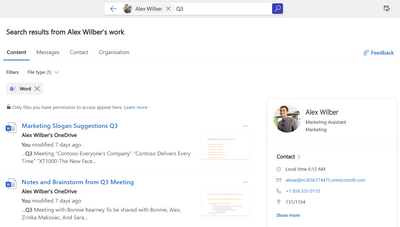

You can further narrow down your own content by typing additional keywords in the search box next to the name pill or using filter and tab refiners.

Using keyword + File Type filter to further refine your search

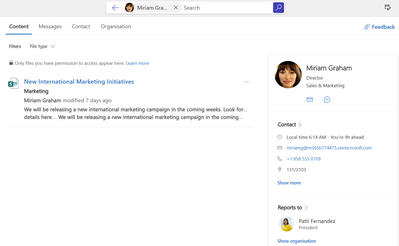

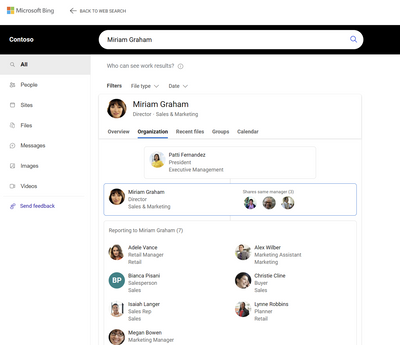

Search for people and content they shared.

You can search for your colleagues’ content or understand their position in the organizational chart by looking them up and clicking on their name from the search box suggestion.

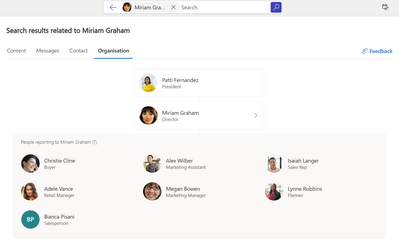

Browse through content your colleagues have shared with you.

Understand your company organization and who your colleagues report to.

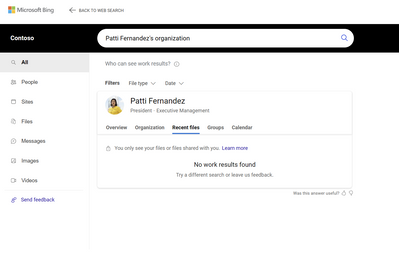

This feature only shows public content and content that has been explicitly shared with you by the person you are looking up. Hence in some cases, you may not get any results.

You can only browse through content explicitly shared with you.

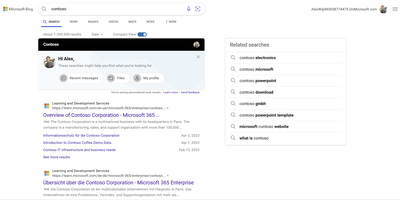

Getting to work search results in Microsoft Bing

We are currently working to bring the same set of search capabilities across Office.com, Sharepoint.com and Bing.com/work.

To access work search in Bing.com, you need to be signed into Bing with your organizational account. This will enable you to see top work search results in the All-Tab. You can further explore work content by clicking some of the work result suggestions or going straight to Work tab.

Work result suggestions in All-Tab

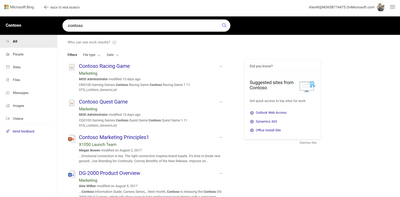

Work search results in Work-Tab

The images below show what some of the searches mentioned in this article may look like in Bing.com/work.

Email and Teams messages search

People search by name.

Understand your company organization and who your colleagues report to.

You can only browse through content explicitly shared with you.

by Contributed | Jun 1, 2023 | Technology

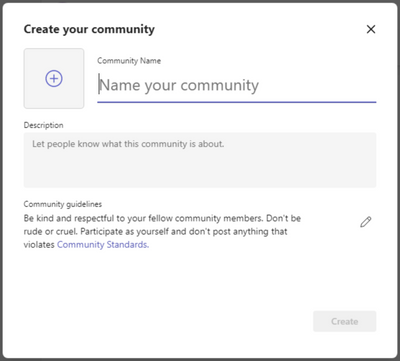

As you might have seen, many new features have become available in Communities (beta) in Teams free on Windows 11, bringing it close to parity with mobile. You can now create and join communities on Windows 11, and invite others to your communities, too.

There’s also a significant change in Teams free meetings. Previously, all meeting participants joined meetings through the lobby. We have now added an option for invited participants to go directly into the meeting, bypassing the lobby. This will be the default setting for all new Teams free meetings, making joining more seamless for invited participants while keeping your meetings safe by having everybody else still join through the lobby.

New features in Teams (free) on desktop and web

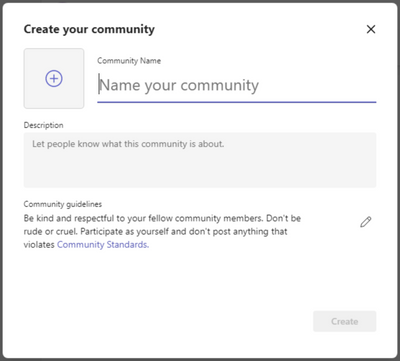

Create a community (Windows 11)

You can now conveniently create a new community from scratch in Teams free on Windows 11, much as you can on mobile. You just need to fill in the community details, such as name, description, and community picture, and adjust the community guidelines as needed. After the community is created, you can invite new members or change further community details using mobile.

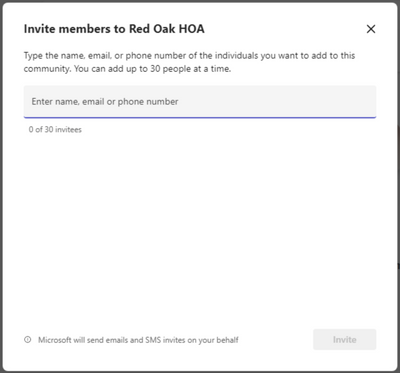

Invite members to your community (Windows 11)

It’s now easy to invite others to your communities in Teams free on Windows 11. You can invite contacts who already use Teams free, or use an email address or phone number to invite those who have not yet started using it. Learn how to invite and remove community members

Create community events and view community calendars (Windows 11)

Community events are a core feature in communities and now it’s possible to create those and view community calendars in Teams free on Windows 11, too. Communities created on Windows 11 are visible on mobile and vice versa making it easy to join the meeting when it’s time. Learn more about community calendars and events

Notify community members about new content via email (Windows 11)

When creating a new community post, you can now notify community members about the new post via email. This new option available when posting in a community helps you to reach your community members quickly even when they are not in Teams. These emails link back to the original post making it convenient to read the new content. Learn how to post to a community

Use Microsoft Designer for community announcements (Windows 11)

Community announcements stand out from other community content and when posting a community announcement on Windows 11, you can now use Microsoft Designer to quickly create a gorgeous banner image for the announcement. Learn how to use Designer for announcement banners

New features in Teams (free) on mobile

Notify community members about new content via email

When creating a new community post, you can now notify community members about the new post via email. This new option available when posting in a community helps you to reach your community members quickly even when they are not in Teams. These emails link back to the original post making it convenient to read the new content. Learn how to post to a community

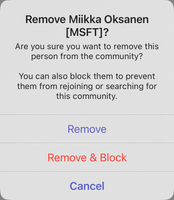

Block users from your community

In the event of a bad actor going rogue in your community, as the community owner you can now block them from the community. The same user will not be able to join the community again. We hope you won’t be needing this feature, but it’s now available should it be needed. You can remove a community member and block them from joining the community again by selecting them from the member list and selecting ‘Remove from community’. This will give you options to ‘Remove’ and ‘Remove & Block’. Learn how to remove and block users from your community

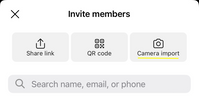

Bulk invite community members using mobile camera (iOS)

It’s now very easy to get your community growing quickly with the new bulk invite option on iOS. With this feature you can use your mobile camera on iOS to take a picture of a list of email addresses and phone numbers, which will then be validated and matched against the current member list of the community. You can update the list as needed and, when ready, send invites to up to 50 people in one go. Learn how to bulk invite community members on iOS

How to send feedback and suggestions?

We are always happy to receive feedback and suggestions to make our product even better. To participate in our feedback program and help us in our endeavor, please follow the steps below:

You can also sign up for a user panel, to get opportunities to connect directly with the product team, and help make Teams (free) better. Learn more

by Contributed | May 31, 2023 | Technology

Many organizations, such as schools during a significant weather event, need to rapidly communicate ad hoc updates via SMS or email. With Azure Communication Services and Azure OpenAI Services, we can simplify this experience by automatically generating templated messages for different communication channels and audiences. If this problem seems familiar, we have a pre-built solution that can help you. See our manual for step-by-step instructions.

The solution uses the example of a school administrator who needs to send a weather-related alert and focuses on three main parts:

- Provide a secure app for school administrators using Power Apps. School administrators can configure the message they want to send to their contacts.

- Support multiple channels (SMS, Email) using Power Automate and Azure Communication Services. They can cover a variety of channels to ensure the recipients see the message in a timely manner.

- Auto-generate templates for each medium based on the message description using Power Automate and Azure OpenAI Services. Craft professional messages for a variety of mediums easily. You will not need to re-write the message for each medium.

Architecture overview

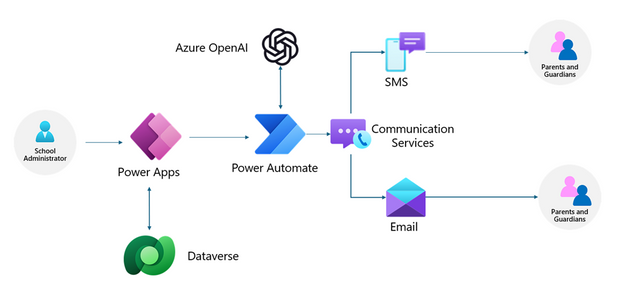

In our solution, to create and send messages to the contacts, we will leverage Power Apps and Power Automate to build the applications and flows. The Power App will enable the school administrator to configure the message they want to send out to contacts. It will be linked to a Dataverse table with contact information. The Power App will trigger a Power Automate flow which will use Azure OpenAI Services to create communication templates for each channel and Azure Communication Services to send SMS and Emails to the parents using contact information.

Check out our pre-built solution or the step-by-step instructions to get started building your own application for SMS and email messages today.

If you would like to see more samples like this or would like to learn more about the process of building solutions with Azure Communication Services and Azure OpenAI Services, drop us a comment below.
