by Contributed | Sep 28, 2023 | Technology

This article is contributed. See the original author and article here.

As a Microsoft Most Valuable Professional (MVP) and a Microsoft Certified Trainer (MCT), I can say from experience that if you want to improve your skills, expand your knowledge, and advance your career, Microsoft Learn can be an essential resource for you. This family of skill-building offerings brings together all Microsoft technical content, learning tools, and resources, providing practical learning materials both for professionals and beginners. Among the many features that Microsoft Learn offers, four of my favourites are collections, career path training, Practice Assessments, and exam prep videos.

1. Collections

Collections let you customise your own learning journey. Often you come across something on Microsoft Learn that’s interesting, and you want to save it for later. This is where collections come in handy. Collections let you organise and group content on Microsoft Learn—whether it’s a module about a particular topic, a learning path, or an article with technical documentation. You can even share your collections via a link with others.

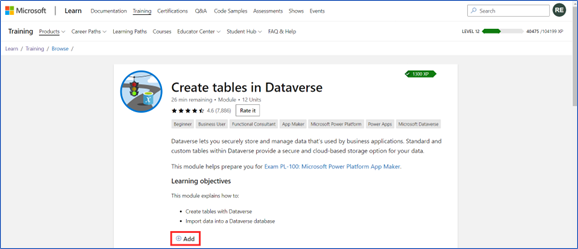

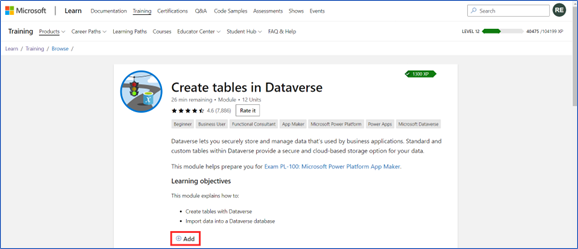

I frequently create collections to keep track of all the content that will be useful in preparing for a Microsoft Certification exam. This might include the official learning path, along with any extra documentation that could help during exam prep. To place a module or learning path into a collection, from the Training tab, on the content of interest, select Add. You can revisit collections from your Microsoft Learn profile.

The Add button on a Microsoft Learn training module.

2. Career path training

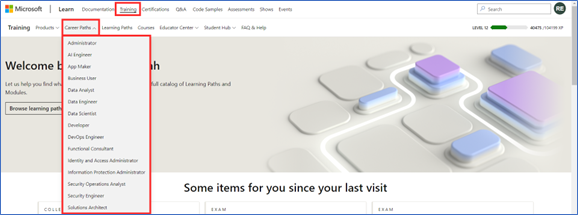

As you may have already discovered, one of the challenges to learning new technologies is finding the right resources for your skill-building needs. Perhaps you’re not sure where to begin your learning journey. I’ve found that a good starting point is to explore learning content based on your career path or on one that interests you. You can find this option on the Microsoft Learn Training tab, and it points you to a collection of modules, learning paths, and certifications that are relevant and tailored to your chosen job role. Whether you want to become a business user, a data scientist, a solutions architect, a security engineer, or a functional consultant, you can find the appropriate learning content for your role and level of expertise. Plus, with career path training, you can learn at your own pace, gain practical experience, and validate your skills with Microsoft Certifications.

Career path collection options on Microsoft Learn.

3. Practice Assessments

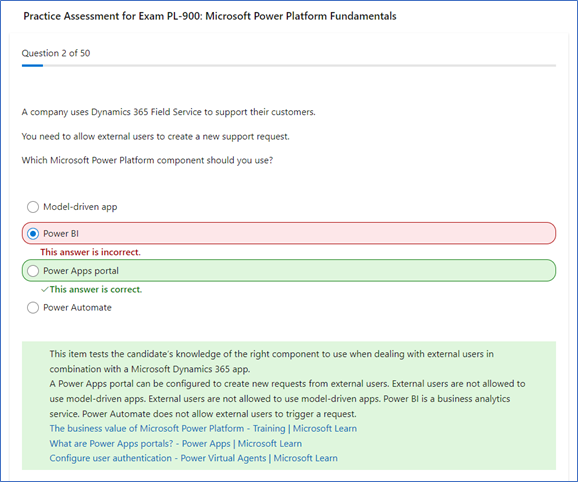

If you’re preparing to earn a Microsoft Certification, you can get an idea of what to expect before you take the associated exam by trying a Practice Assessment. This option is available for some certifications and is a great way to gauge the topics you’re strong in and the ones for which you could use more practice. Practice Assessments help you build confidence by giving you a feel for the types of questions, style of wording, and level of difficulty you might encounter during the actual exam.

Sample Practice Assessment questions.

If your certification exam has a Practice Assessment available, it’s listed on the Microsoft Learn exam page, under Schedule exam. Just select Take a free practice assessment.

4. Exam prep videos

Other valuable Microsoft Learn resources to help you get ready for earning a Microsoft Certification are exam prep videos, available for some certifications. These videos are designed to help you review the key concepts and skills that are covered on the exam and to provide tips and tricks on how to approach the questions. They offer an engaging way to absorb essential knowledge and skills, making it easier to grasp technical concepts and their practical applications. The videos, hosted by industry experts, provide a structured, guided approach to the exam topics.

These exam prep videos complement your other Microsoft Learn study materials. Even if you consider yourself an expert on a topic, the videos are a good way to refresh your memory before exam day. To browse through available exam prep videos, check out the Microsoft Learn Exam Readiness Zone and search for your topic of interest or exam number, or even filter by product.

Share your favourite Microsoft Learn features

Creating your own collections of content, exploring new career paths, or preparing to earn Microsoft Certifications by taking Practice Assessments or watching exam prep videos are just some of the ways that Microsoft Learn can help you achieve your skill-building and certification goals, and they’re some of my favourite features in Microsoft Learn. What are your favourites? Share your top picks with us, and help others on their learning journeys.

Meet Rishona Elijah, Microsoft Learn expert

Rishona Elijah is a Microsoft Most Valuable Professional (MVP) for Business Applications and a Microsoft Certified Trainer (MCT). She works as a Trainer & Evangelist at Microsoft Partner Barhead Solutions, based in Australia. She is also a LinkedIn Learning instructor for Microsoft Power Platform certifications. Rishona has trained thousands of individuals on Microsoft Power Platform and Dynamics 365, delivering impactful training sessions that empower them to use the no-code/low-code technology to build their own apps, chatbots, workflows, and dashboards. She enjoys sharing her knowledge and ideas on her blog, Rishona Elijah, in addition to speaking at community conferences and user groups.

“Power Platform with Rishona Elijah” is a Microsoft learning room that provides a supportive and encouraging environment for people starting their Microsoft Power Platform journey. The room offers assistance and guidance on Microsoft Power Platform topics, including certifications, Power Apps, Power Virtual Agents, Power Automate, and AI Builder. It’s also a great space to network with like-minded peers and to celebrate your success along the way. Sign up for the “Power Platform with Rishona Elijah” learning room.

Learn more about Rishona Elijah

by Contributed | Sep 27, 2023 | Technology

The Azure Functions team is thrilled to announce General Availability of version 4 of the Node.js programming model! This programming model is part of Azure Functions’ larger effort to provide a more flexible and intuitive experience for all supported languages. You may be aware that we announced General Availability of the new Python programming model for Azure Functions at MS Build this year. The new Node.js experience we ship today is a result of the valuable feedback we received from JavaScript and TypeScript developers through GitHub, surveys, user studies, as well as suggestions from internal Node.js experts working closely with customers.

This blog post aims to highlight the key features of the v4 model and also shed light on the improvements we’ve made since announcing public preview last spring.

What’s improved in the V4 model?

In this section, we highlight several key improvements made in the V4 programming model.

Flexible folder structure

The existing V3 model requires that each trigger be in its own directory, with its own function.json file. This strict structure can make an app hard to manage if it has many triggers. And if you’re a Durable Functions user, having your orchestration, activity, and client functions in different directories decreases code readability, because you must switch between directories to look at the components of one logical unit. The V4 model removes the strict directory structure and gives users the flexibility to organize triggers in ways that make sense for their Function app. For example, you can have multiple related triggers in one file or have triggers in separate files that are grouped in one directory.

Furthermore, you no longer need to keep a function.json file for each trigger you have in the V4 model as bindings are configured in code! See the HTTP example in the next section and the Durable Functions example in the “More Examples” section.

Define function in code

The V4 model uses an app object as the entry point for registering functions instead of function.json files. For example, to register an HTTP trigger responding to a GET request, you can call app.http() or app.get() which was modeled after other Node.js frameworks like Express.js that also support app.get(). The following shows what has changed when writing an HTTP trigger in the V4 model:

V3 (JavaScript):

    module.exports = async function (context, req) {
        context.log('HTTP function processed a request');

        const name = req.query.name
            || req.body
            || 'world';

        context.res = {
            body: `Hello, ${name}!`
        };
    };

V3 (JSON, function.json):

    {
        "bindings": [
            {
                "authLevel": "anonymous",
                "type": "httpTrigger",
                "direction": "in",
                "name": "req",
                "methods": [
                    "get",
                    "post"
                ]
            },
            {
                "type": "http",
                "direction": "out",
                "name": "res"
            }
        ]
    }

V4 (JavaScript):

    const { app } = require("@azure/functions");

    app.http('helloWorld1', {
        methods: ['GET', 'POST'],
        handler: async (request, context) => {
            context.log('Http function processed request');

            const name = request.query.get('name')
                || await request.text()
                || 'world';

            return { body: `Hello, ${name}!` };
        }
    });

V4 (JSON): Nothing

Trigger configuration like methods and authLevel that were specified in a function.json file before are moved to the code itself in V4. We also set several defaults for you, which is why you don’t see authLevel or an output binding in the V4 example.

New HTTP Types

In the V4 model, we’ve adjusted the HTTP request and response types to be a subset of the fetch standard instead of types unique to Azure Functions. We use Node.js’s undici package, which follows the fetch standard and is currently being integrated into Node.js core.

HttpRequest – body

V3:

    // returns a string, object, or Buffer
    const body = request.body;
    // returns a string
    const body = request.rawBody;
    // returns a Buffer
    const body = request.bufferBody;
    // returns an object representing a form
    const body = await request.parseFormBody();

V4:

    const body = await request.text();
    const body = await request.json();
    const body = await request.formData();
    const body = await request.arrayBuffer();
    const body = await request.blob();

HttpResponse – status

V3:

    context.res.status(200);

    context.res = { status: 200 };
    context.res = { statusCode: 200 };

    return { status: 200 };
    return { statusCode: 200 };

V4:

    return { status: 200 };

To see how other properties like header, query parameters, etc. have changed, see our developer guide.

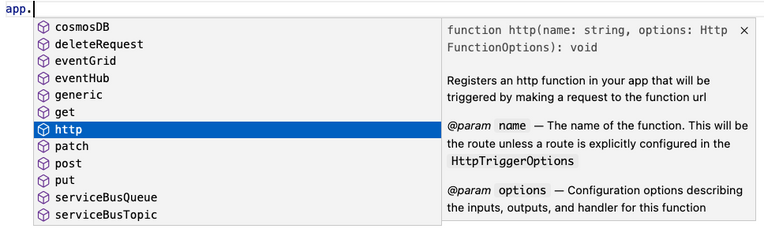

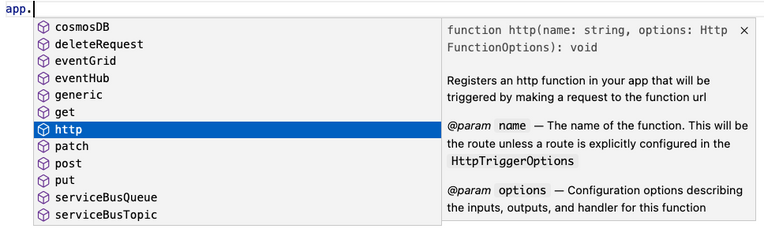

Better IntelliSense

If you’re not familiar with IntelliSense, it covers the features in your editor like autocomplete and documentation directly while you code. We’re big fans of IntelliSense and we hope you are too because it was a priority for us from the initial design stages. The V4 model supports IntelliSense for JavaScript for the first time, and improves on the IntelliSense for TypeScript that already existed in V3. Here are a few examples:

More Examples

NOTE: One of the priorities of the V4 programming model is to ensure parity between JavaScript and TypeScript support. You can use either language to write all the examples in this article, but we only show one language for the sake of article length.

Timer (TypeScript)

A timer trigger that runs every 5 minutes:

    import { app, InvocationContext, Timer } from '@azure/functions';

    export async function timerTrigger1(myTimer: Timer, context: InvocationContext): Promise<void> {
        context.log('Timer function processed request.');
    }

    app.timer('timerTrigger1', {
        schedule: '0 */5 * * * *',
        handler: timerTrigger1,
    });

Durable Functions (TypeScript)

Like in the V3 model, you need the durable-functions package in addition to @azure/functions to write Durable Functions in the V4 model. The example below shows one of the common patterns Durable Functions is useful for – function chaining. In this case, we’re executing a sequence of (simple) functions in a particular order.

    import { app, HttpHandler, HttpRequest, HttpResponse, InvocationContext } from '@azure/functions';
    import * as df from 'durable-functions';
    import { ActivityHandler, OrchestrationContext, OrchestrationHandler } from 'durable-functions';

    // Replace with the name of your Durable Functions Activity
    const activityName = 'hello';

    const orchestrator: OrchestrationHandler = function* (context: OrchestrationContext) {
        const outputs = [];
        outputs.push(yield context.df.callActivity(activityName, 'Tokyo'));
        outputs.push(yield context.df.callActivity(activityName, 'Seattle'));
        outputs.push(yield context.df.callActivity(activityName, 'Cairo'));

        return outputs;
    };
    df.app.orchestration('durableOrchestrator1', orchestrator);

    const helloActivity: ActivityHandler = (input: string): string => {
        return `Hello, ${input}`;
    };
    df.app.activity(activityName, { handler: helloActivity });

    const httpStart: HttpHandler = async (request: HttpRequest, context: InvocationContext): Promise<HttpResponse> => {
        const client = df.getClient(context);
        const body: unknown = await request.text();
        const instanceId: string = await client.startNew(request.params.orchestratorName, { input: body });

        context.log(`Started orchestration with ID = '${instanceId}'.`);

        return client.createCheckStatusResponse(request, instanceId);
    };

    app.http('durableOrchestrationStart1', {
        route: 'orchestrators/{orchestratorName}',
        extraInputs: [df.input.durableClient()],
        handler: httpStart,
    });

The example first sets up the orchestrator function and registers it with df.app.orchestration(). In the V4 model, instead of registering the orchestration trigger in function.json, you simply do it through the app object on the durable-functions module (here df). Similar logic applies to the activity (helloActivity, registered with df.app.activity()), client (httpStart, registered with app.http()), and Entity functions. This means you no longer have to manage multiple function.json files just to get a simple Durable Functions app working!

The httpStart handler and the app.http() call set up and register a client function to start the orchestration. To do that, we pass in an input object from the durable-functions module to the extraInputs array to register the function. Like in the V3 model, we obtain the Durable Client using df.getClient() to execute orchestration management operations like starting a new orchestration. We use an HTTP trigger in this example, but you could use any trigger supported by Azure Functions such as a timer trigger or Service Bus trigger.

Refer to this example to see how to write a Durable Entity with the V4 model.

What’s new for GA?

We made the following improvements to the v4 programming model since the announcement of Public Preview last spring. Most of these improvements were made to ensure full feature parity between the existing v3 and the new v4 programming model.

- AzureWebJobsFeatureFlags no longer needs to be set

During preview, you needed to set the application setting “AzureWebJobsFeatureFlags” to “EnableWorkerIndexing” to get a v4 model app working. We removed this requirement as part of the General Availability update. This also allows you to use Azure Static Web Apps with the v4 model. You must be on runtime v4.25+ in Azure or core tools v4.0.5382+ if running locally to benefit from this change.

- Model v4 is now the default

We’re confident v4 is ready for you to use everywhere, and it’s now the default version on npm, in documentation, and when creating new apps in Azure Functions Core Tools or VS Code.

- Entry point errors are now exposed via Application Insights

In the v3 model and in the preview version of the v4 model, errors in entry point files were ignored and weren’t logged in Application Insights. We changed the behavior to make entry point errors more obvious. It’s a breaking change for model v4 as some errors that were previously ignored will now block your app from running. You can use the app setting “FUNCTIONS_NODE_BLOCK_ON_ENTRY_POINT_ERROR” to configure this behavior. We highly recommend setting it to “true” for all v4 apps. For more information, see the App Setting reference documentation.

- Support for retry policy

We added support for configuring retry policy when registering a function in the v4 model. The retry policy tells the runtime to rerun a failed execution until either successful completion occurs or the maximum number of retries is reached. A retry policy is evaluated when a Timer, Kafka, CosmosDB or Event Hubs-triggered function raises an uncaught exception. As a best practice, you should catch all exceptions in your code and rethrow any errors that you want to result in a retry. Learn more about Azure Functions Retry policy.

- Support for Application Insights npm package

Add the Application Insights npm package (v2.8.0+) to your app to discover and rapidly diagnose performance and other issues. This package tracks the following out-of-the-box: incoming and outgoing HTTP requests, important system metrics such as CPU usage, unhandled exceptions, and events from many popular third-party libraries.

- Support for more binding types

We added support for SQL and Table input and output bindings. We also added Cosmos DB extension v4 types. A highlight of the latest Cosmos DB extension is that it allows you to use managed identities instead of secrets. Learn how to upgrade your Cosmos DB extension here and how to configure an app to use identities here.

- Support for hot reload

Hot reload ensures your app will automatically restart when a file in your app is changed. This was not working for model v4 when we announced preview, but has been fixed for GA.

How to get started?

Check out our Quickstarts to get started.

See our Developer Guide to learn more about the V4 model. We’ve also created an upgrade guide to help migrate existing V3 apps to V4.

Please give the V4 model a try and let us know your thoughts!

If you have questions and/or suggestions, please feel free to drop an issue in our GitHub repo. As this is an open-source project, we welcome any PR contributions from the community.

by Contributed | Sep 26, 2023 | Technology

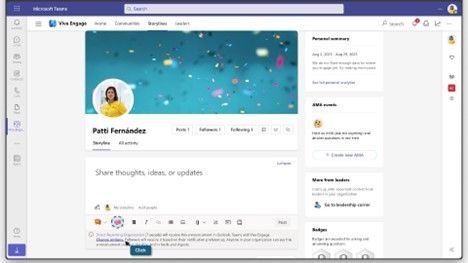

Announcements have been a core part of Viva Engage for years but have recently become a critical way to keep employees informed and engaged with leaders. The broad delivery of announcements across Viva Engage, Viva Connections, Outlook, and Microsoft Teams means that employees can use rich engagement features like reactions, replies, and sharing from within the apps that they use every day. Analytics help track the reach of announcements, and our customers have come to rely on announcements to help run their business and to measure the impact their communications are having in their network.

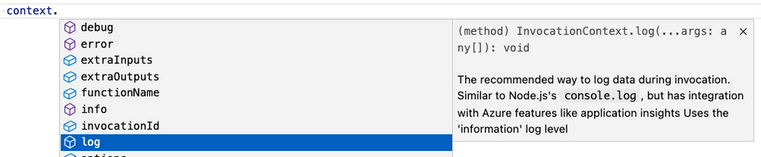

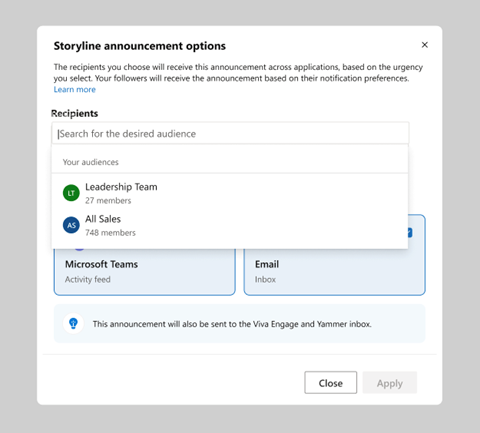

We’ve taken it one step further by enabling specific employees as leaders, because we know leaders want to share vision, updates, and perspectives to build culture and manage change. When leaders are selected in Viva Engage and their audiences have been set up, they can send storyline announcements. Leaders and their delegates can now configure multiple audiences and target storyline announcements to them, so leaders can reach and connect with employees across their organizations.

Leaders can now send targeted storyline announcements to different audiences

Leaders and their delegates can now target storyline announcements to preconfigured audiences, expanding the leader’s ability to reach people beyond their direct reporting organization. When a leader creates an announcement, the leader’s default audience is preselected. To add additional audiences, select the Change option. This brings up the Storyline announcements options window, in which the default audience can be swapped for any of the configured ones. Once an audience is selected, confirm the channels before you send your message.

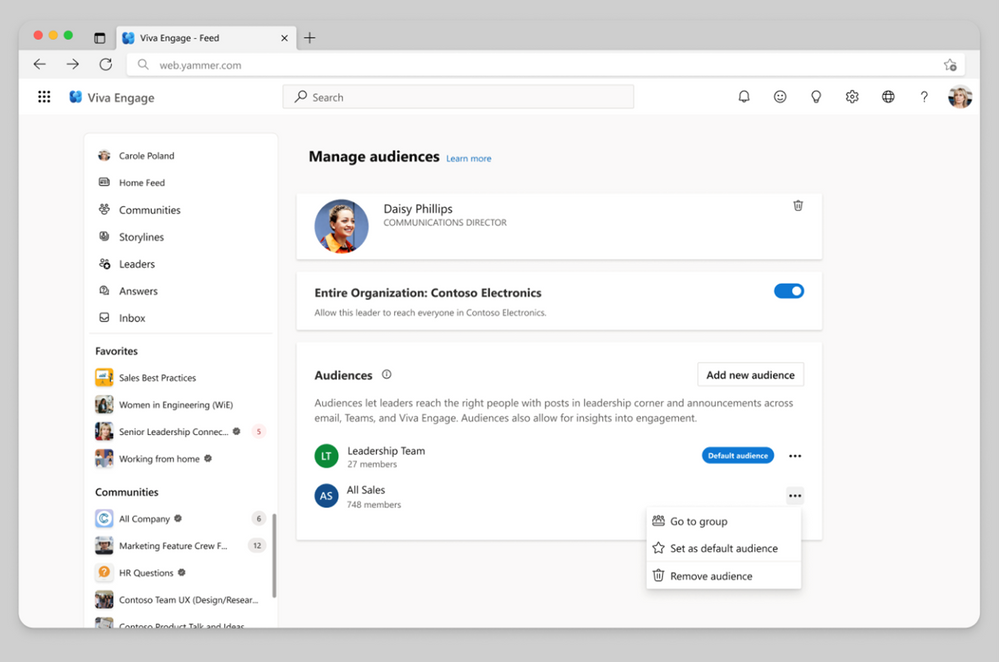

Set up multiple audiences

To view information about how to configure audiences, please visit Identify leaders and manage audiences in Viva Engage. To define a leader’s audience, you add individual users or groups, such as security, distribution, or Microsoft 365 groups. When you add a group, changes to the group’s membership, including nested members, automatically update the audience within 24 hours. This functionality makes it easy to apply existing groups that define a leader’s organization to define the leader’s audience in Viva Engage. Customers may have existing distribution lists that they use to communicate with an audience by email. You can add those lists to the leader’s audience in Viva Engage for continuous communication.

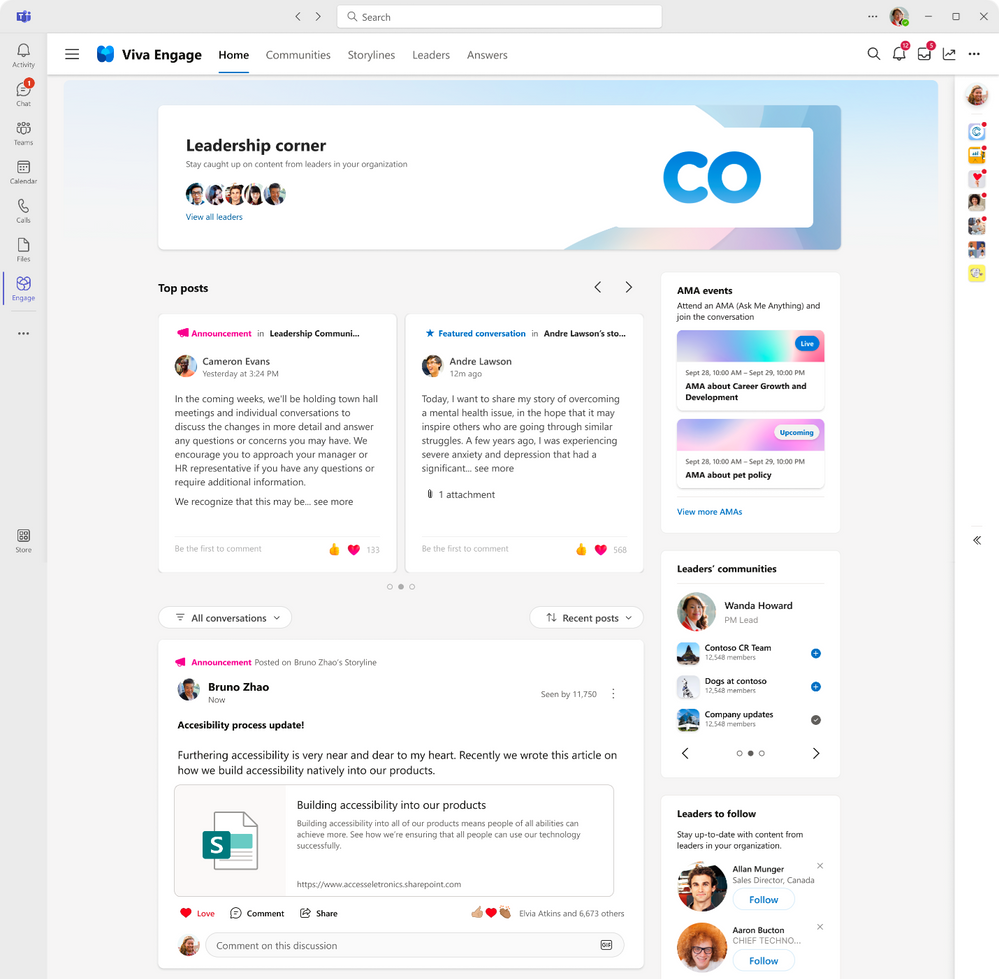

Send announcements to your audience across apps

Leaders can make an announcement, select an audience, and reach people across apps. Once the announcement is delivered, your audiences can react and reply regardless of the app in which they receive the announcement. To make your announcements more engaging, attach images or videos, ask a question, pose a poll to your community, or draw attention to specific actions by using rich media within your announcement. Announcements made by leaders will also be highlighted in leadership corner.

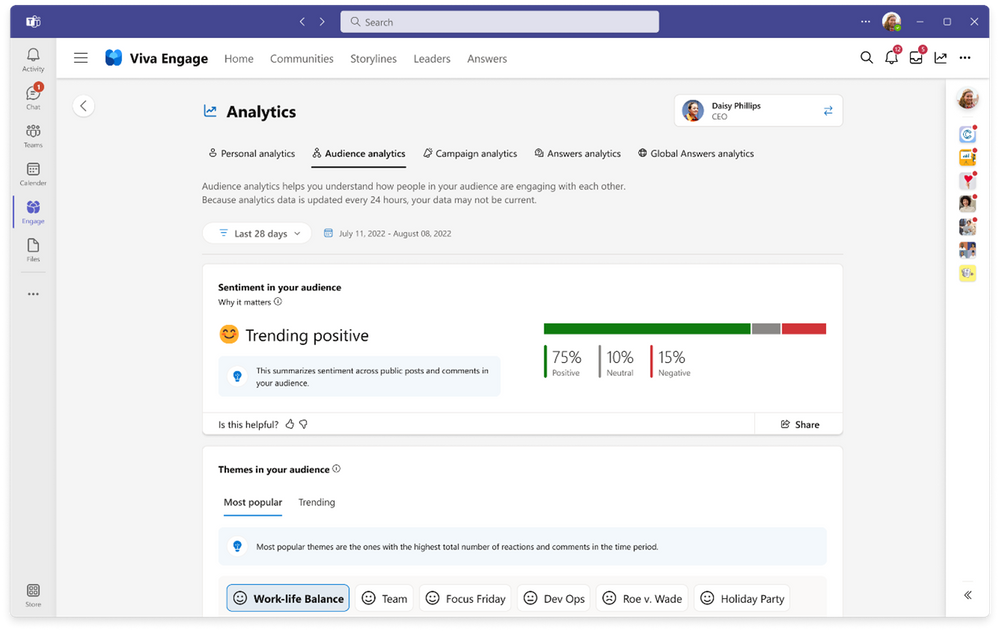

Analyze the impact of your communication

When you post an announcement in Viva Engage, you can expect the message to reach your audience through Microsoft Teams notifications, Outlook interactive messages, and Engage notifications. We also want to make sure that you understand the impact of your communications by tracking their reach and the sentiment of your audience. With conversation insights, you can view how well your announcement has performed. With personal analytics, you can track effectiveness across multiple posts and announcements. With audience analytics, leaders can track sentiment to help monitor the engagement, contributions, and themes across their audience, beyond what they have sent. This helps you understand what your audience thinks is important and identify what to post next.

What’s new and next for storyline

• Storyline can be made available for only specific employees

• Storyline announcements coming to multiple tenant organizations

Learn more about Viva Employees Communications and Communities and stay tuned to the M365 Roadmap for the latest updates about what’s coming for Viva Engage.

If you are looking to share important news and information with employees, try using announcements on your storyline posts. With the speed of delivery, ability to measure reach, and a way to spark two-way engagement, announcements are an essential way to keep your employees informed.

by Contributed | Sep 25, 2023 | Technology

In the fall of 2018, we announced the general availability (GA) of Azure Database for MariaDB. Since that release five years ago, we’ve invested time and resources in Azure Database for MariaDB to further extend our commitment to the open-source community by providing valuable, enterprise-ready features of Azure for use on open-source database instances.

In November 2021 we released Flexible Server, the next-generation deployment option for Azure Database for MySQL. As we continue to invest in Azure Database for MySQL and focus our efforts on Flexible Server to make it the best destination for your open-source MySQL workloads, we’ve decided to retire the Azure Database for MariaDB service in two years (September 2025). This will help us focus on Azure Database for MySQL – Flexible Server to ensure that we are providing the best user experience for our customers.

Azure Database for MySQL – Flexible Server has enhanced features, performance, an improved architecture, and more controls to manage costs across all service tiers when compared to Azure Database for MariaDB. As a result, we encourage you to migrate to Azure Database for MySQL – Flexible Server before the Azure MariaDB retirement to experience the new capabilities of the service, including:

- More ways to optimize costs, including support for burstable tier compute options.

- Improved performance for business-critical production workloads that require low latency, high concurrency, fast failover, and high scalability.

- Improved uptime with the ability to configure a hot standby on the same or a different zone, and a one-hour time window for planned server maintenance.

For more information about Flexible Server, see the article Azure Database for MySQL – Flexible Server.

Migrate from Azure Database for MariaDB to Azure Database for MySQL – Flexible Server

For information about how you can migrate your Azure Database for MariaDB server to Azure Database for MySQL – Flexible Server, see the blog post Migrating from Azure Database for MariaDB to Azure Database for MySQL.

Retirement announcement FAQs

We understand that you may have a lot of questions about what this announcement means for your Azure Database for MariaDB workloads. As a result, we’ve added several “frequently asked questions” in the article What’s happening to Azure Database for MariaDB?

For quick reference, we’ve included a few key questions and answers below.

Q. Why am I being asked to migrate to Azure Database for MySQL – Flexible Server?

A. There’s high application compatibility between Azure Database for MariaDB and Azure Database for MySQL, as MariaDB was forked from MySQL. Azure Database for MySQL – Flexible Server is the best platform for running all your MySQL workloads on Azure. It is economical and provides better performance across all service tiers, more ways to control your costs, and less costly, faster disaster recovery.

Q. After the Azure Database for MariaDB retirement announcement, can I still create new MariaDB servers to meet my business needs?

A. As part of this retirement, we’ll no longer support the ability to create new MariaDB instances by using Azure portal beginning on December 19, 2023. If you do still need to create MariaDB instances to meet business continuity needs, you can use the Azure CLI to do so until March 19, 2024.

Q. Can I choose to continue running Azure Database for MariaDB beyond the sunset date?

A. Unfortunately, we don’t plan to support Azure Database for MariaDB beyond the sunset date of September 19, 2025. As a result, we advise you to start planning your migration as soon as possible.

Q. I have additional questions about the retirement. How can I find out more?

A. If you have questions, get answers from community experts in Microsoft Q&A. If you have a support plan and you need technical help, create a support request that includes the following information:

- For Issue type, select Technical.

- For Subscription, select your subscription.

- For Service, select My services.

- For Service type, select Azure Database for MariaDB.

- For Resource, select your resource.

- For Problem type, select Migration.

- For Problem subtype, select Migrating from Azure for MariaDB to Azure for MySQL Flexible Server.

If you have questions about the information in this post, please don’t hesitate to reach out to us at AskAzureDBforMariaDB@service.microsoft.com. Thank you!

by Contributed | Sep 24, 2023 | Technology

This blog is a deep dive into the future of requirement gathering. It explores how Azure DevOps and Azure OpenAI are joining forces to transform the way we manage project requirements. From automated requirement generation to intelligent analysis, learn how these powerful tools are reshaping the landscape of project management. Stay tuned for an enlightening journey into the world of AI-powered requirement gathering!

Setting up environment

Prerequisites

– Visual Studio Code, with the following extensions installed:
  – Jupyter (Publisher: Microsoft)
  – Python (Publisher: Microsoft)
  – Pylance (Publisher: Microsoft)
  – Semantic Kernel Tools (Publisher: Microsoft)

– Python, with the following packages installed:
  – pip
  – semantic-kernel

Define the Semantic Function to generate feature description-

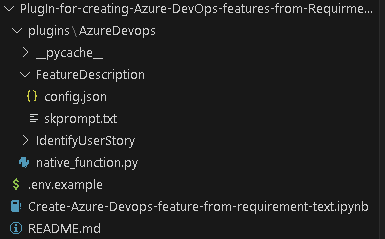

Now that you have below mentioned folder structure.

Create semantic function for generating Feature description.

The first step is to define a semantic function that can interpret the input string and map it to a specific action. In our case, the action is to generate feature description from title. The function could look something like this:

1. Create the folder structure

– Create a /plugins folder.

– Create a folder for the semantic plugin inside the plugins folder, in this case “AzureDevOps”. (For more details on plugins)

– Create a folder for the semantic function inside the plugin folder, i.e. ‘/plugins/AzureDevOps’, in this case “FeatureDescription”. (For more details on functions)

2. Define the semantic function

– Once the folder structure is in place, define the function by adding a ‘config.json’ file with the JSON content below. For more details on the content, refer here.

    {
        "schema": 1,
        "description": "get standard feature title and description",
        "type": "completion",
        "completion": {
            "max_tokens": 500,
            "temperature": 0.0,
            "top_p": 0.0,
            "presence_penalty": 0.0,
            "frequency_penalty": 0.0
        },
        "input": {
            "parameters": [
                {
                    "name": "input",
                    "description": "The feature name.",
                    "defaultValue": ""
                }
            ]
        }
    }

The file above defines a semantic function that accepts an ‘input’ parameter and performs the task given in its description, “get standard feature title and description”.

– Now put the single-shot prompt for the semantic function in ‘skprompt.txt’, where ‘{{$input}}’ marks the place where our input ask is substituted.

    Create feature title and description for {{$input}} in below format
    Feature Title:"[Provide a short title for the feature]"
    Description: "[Provide a more detailed description of the feature's purpose, the problem it addresses, and its significance to the product or project.]
    User Needs-
    [Outline the specific user needs or pain points that this feature aims to address.]
    Functional Requirements:-
    - [Requirement 1]
    - [Requirement 2]
    - [Requirement 3]
    - ...
    Non-Functional Requirements:-
    - [Requirement 1]
    - [Requirement 2]
    - [Requirement 3]
    - ...
    Feature Scope:
    [Indicates the minimum capabilities that feature should address. Agreed upon between Engineering Leads and Product Managers] "
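The folder layout and the two files described above can also be scaffolded with a short script. The sketch below is a minimal illustration in plain Python and is not part of the notebook: the helper names scaffold_function and render_prompt are hypothetical, the prompt is shortened to its first line, and the string replacement only imitates how {{$input}} is filled in. Semantic Kernel does the real rendering when the function runs.

```python
import json
import tempfile
from pathlib import Path

# Same settings as the config.json shown in the article.
CONFIG = {
    "schema": 1,
    "description": "get standard feature title and description",
    "type": "completion",
    "completion": {
        "max_tokens": 500,
        "temperature": 0.0,
        "top_p": 0.0,
        "presence_penalty": 0.0,
        "frequency_penalty": 0.0,
    },
    "input": {
        "parameters": [
            {"name": "input", "description": "The feature name.", "defaultValue": ""}
        ]
    },
}

# Shortened stand-in for the full prompt (assumption: first line only).
PROMPT = "Create feature title and description for {{$input}} in below format\n"


def scaffold_function(root: Path) -> Path:
    """Create plugins/AzureDevOps/FeatureDescription and write its two files."""
    function_dir = root / "plugins" / "AzureDevOps" / "FeatureDescription"
    function_dir.mkdir(parents=True, exist_ok=True)
    (function_dir / "config.json").write_text(json.dumps(CONFIG, indent=2), encoding="utf-8")
    (function_dir / "skprompt.txt").write_text(PROMPT, encoding="utf-8")
    return function_dir


def render_prompt(template: str, value: str) -> str:
    """Naive imitation of template rendering: swap {{$input}} for the value."""
    return template.replace("{{$input}}", value)


with tempfile.TemporaryDirectory() as tmp:
    function_dir = scaffold_function(Path(tmp))
    created = sorted(p.name for p in function_dir.iterdir())
    template = (function_dir / "skprompt.txt").read_text(encoding="utf-8")
    rendered = render_prompt(template, "Export resource group as IaC")

print(created)
print(rendered)
```

Running it in a temporary directory keeps the experiment away from your real plugins folder. Point scaffold_function at your project root once the layout looks right.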

Execute the semantic function

– Rename ‘.env.example’ to ‘.env’ and update the parameters with actual values.

– Open the notebook “Create-Azure-Devops-feature-from-requirement-text” in Visual Studio Code and follow the steps below to test.

– Step 1: Install all Python libraries.

    !python -m pip install semantic-kernel==0.3.10.dev0
    !python -m pip install azure-devops

– Step 2 Import Packages required to prepare a semantic kernel instance first.

import os
from dotenv import dotenv_values
import semantic_kernel as sk
from semantic_kernel import ContextVariables, Kernel # Context to store variables and Kernel to interact with the kernel
from semantic_kernel.connectors.ai.open_ai import AzureChatCompletion, OpenAIChatCompletion # AI services
from semantic_kernel.planning.sequential_planner import SequentialPlanner # Planner

kernel = sk.Kernel() # Create a kernel instance
kernel1 = sk.Kernel() # Create a second kernel instance so the semantic function is not registered in the same kernel
useAzureOpenAI = True

# Configure the AI service used by both kernels
if useAzureOpenAI:
    deployment, api_key, endpoint = sk.azure_openai_settings_from_dot_env()
    kernel.add_chat_service("chat_completion", AzureChatCompletion(deployment, endpoint, api_key))
    kernel1.add_chat_service("chat_completion", AzureChatCompletion(deployment, endpoint, api_key))
else:
    api_key, org_id = sk.openai_settings_from_dot_env()
    kernel.add_chat_service("chat-gpt", OpenAIChatCompletion("gpt-3.5-turbo", api_key, org_id))
    # kernel1 runs the semantic function, so it needs a chat service as well
    kernel1.add_chat_service("chat-gpt", OpenAIChatCompletion("gpt-3.5-turbo", api_key, org_id))
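Before running this cell, it can help to confirm that the ‘.env’ file is filled in. The sketch below uses only the standard library and is not part of the notebook; the key names in `ASSUMED_AZURE_KEYS` are an assumption, so compare them with your own ‘.env.example’.

```python
# A minimal sketch, assuming the .env file uses the key names below.
# The key names are an assumption; compare them with your own .env.example.
from pathlib import Path

ASSUMED_AZURE_KEYS = (
    "AZURE_OPENAI_DEPLOYMENT_NAME",
    "AZURE_OPENAI_ENDPOINT",
    "AZURE_OPENAI_API_KEY",
)


def parse_env(text: str) -> dict:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(values: dict, required=ASSUMED_AZURE_KEYS) -> list:
    """Return the required keys that are absent or empty."""
    return [key for key in required if not values.get(key)]


if __name__ == "__main__":
    env_path = Path(".env")
    text = env_path.read_text() if env_path.exists() else ""
    print("Missing settings:", missing_keys(parse_env(text)))
```

An empty list means every assumed key has a value; anything listed still needs to be filled in.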

– Step 3 Importing skills and function from folder

# note: using skills from the samples folder

plugins_directory = "./plugins"

# Import the semantic functions

DevFunctions=kernel1.import_semantic_skill_from_directory(plugins_directory, "AzureDevOps")

FDesFunction = DevFunctions["FeatureDescription"]
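If the import fails, a likely cause is a missing or misplaced file. The following sketch checks the folder layout this walkthrough relies on, plugins/AzureDevOps/FeatureDescription/. `missing_skill_files` is a hypothetical helper, not an SDK call, and the ‘config.json’ file name is an assumption about the configuration file created earlier.

```python
# A minimal sketch: check that a semantic function folder has the files the
# loader expects. missing_skill_files is a hypothetical helper, not an SDK call.
from pathlib import Path

# skprompt.txt comes from this walkthrough; config.json is an assumed name.
EXPECTED_FILES = ("skprompt.txt", "config.json")


def missing_skill_files(plugins_directory: str, skill: str, function: str) -> list:
    """Return the expected files absent from <plugins>/<skill>/<function>/."""
    function_dir = Path(plugins_directory) / skill / function
    return [name for name in EXPECTED_FILES if not (function_dir / name).is_file()]


if __name__ == "__main__":
    print(missing_skill_files("./plugins", "AzureDevOps", "FeatureDescription"))
```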

– Step 4 Call the semantic function with a feature title to generate a feature description based on the predefined template

resultFD = FDesFunction("Azure Resource Group Configuration Export and Infrastructure as Code (IAC) Generation")

print(resultFD)

Create native function to create features in Azure DevOps

– Create the file “native_function.py” under the “AzureDevOps” folder, or download the file from the repo.

– Copy the code below and update the Azure DevOps parameters. You could pass these in as context parameters, but for the simplicity of this exercise we kept them hardcoded. The code flow is as follows:

– Importing Python packages

– Defining the class ‘feature’ and the native function “create” under “@sk_function”.

– Call semantic function to generate feature description.

– Use this description to create Azure DevOps feature.

from semantic_kernel.skill_definition import (
    sk_function,
    sk_function_context_parameter,
)
from semantic_kernel.orchestration.sk_context import SKContext
from azure.devops.v7_1.py_pi_api import JsonPatchOperation
from azure.devops.connection import Connection
from msrest.authentication import BasicAuthentication
import base64
from semantic_kernel import ContextVariables, Kernel
import re

class feature:
    def __init__(self, kernel: Kernel):
        self._kernel = kernel

    @sk_function(
        description="create an Azure DevOps feature with description",
        name="create",
    )
    @sk_function_context_parameter(
        name="title",
        description="the title of the feature",
    )
    @sk_function_context_parameter(
        name="description",
        description="Description of the feature",
    )
    async def create_feature(self, context: SKContext) -> str:
        feature_title = context["title"]
        get_feature = self._kernel.skills.get_function("AzureDevOps", "FeatureDescription")
        fdetails = get_feature(feature_title)
        # Define a regular expression pattern to match the feature title
        pattern = r"Feature Title:\s+(.+)"
        # Search for the pattern in the generated text
        match = re.search(pattern, str(fdetails))
        # If a match was found, use the generated title
        if match:
            feature_title = match.group(1)
        # Build the description: split the text into lines and drop the first line (the title)
        lines = str(fdetails).split('\n')
        lines = [line for index, line in enumerate(lines) if index not in [0]]
        description = '\n'.join(lines)
        jsonPatchList = []
        #description=context["description"]
        # Azure DevOps parameters, hardcoded for this exercise
        targetOrganizationName= "XXX"
        targetProjectName= "test"
        targetOrganizationPAT = "XXXXXX"
        workItemCsvFile= "abc"
        teamName = "test Team"
        areaName = teamName
        iterationName ="Sprint 1"
        targetOrganizationUri='https://dev.azure.com/'+targetOrganizationName
        credentials = BasicAuthentication('', targetOrganizationPAT)
        connection = Connection(base_url=targetOrganizationUri, creds=credentials)
        userToken = "" + ":" + targetOrganizationPAT
        base64UserToken = base64.b64encode(userToken.encode()).decode()
        headers = {'Authorization': 'Basic ' + base64UserToken}
        core_client = connection.clients.get_core_client()
        targetProjectId = core_client.get_project(targetProjectName).id
        # One JSON Patch "add" operation per work item field
        workItemObjects = [
            {'op': 'add', 'path': '/fields/System.WorkItemType', 'value': "Feature"},
            {'op': 'add', 'path': '/fields/System.Title', 'value': feature_title},
            {'op': 'add', 'path': '/fields/System.State', 'value': "New"},
            {'op': 'add', 'path': '/fields/System.Description', 'value': description},
            {'op': 'add', 'path': '/fields/Microsoft.VSTS.Common.AcceptanceCriteria', 'value': "acceptance criteria"},
            # Iteration paths use a backslash between project and iteration
            {'op': 'add', 'path': '/fields/System.IterationPath', 'value': targetProjectName+"\\"+iterationName},
        ]
        # The list of patch dicts is held on the from_ attribute and passed on as the patch document
        jsonPatchList = JsonPatchOperation(workItemObjects)
        work_client = connection.clients.get_work_item_tracking_client()
        try:
            WorkItemCreation = work_client.create_work_item(jsonPatchList.from_, targetProjectName, "Feature")
        except Exception as e:
            return feature_title+" Feature created unsuccessfully"
        return feature_title+" Feature created successfully"
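The two text-handling steps of the native function can be tried offline, without Semantic Kernel or an Azure DevOps connection. The sketch below is a variation, not the code above: `split_feature_text` and `build_feature_patch` are hypothetical helper names, and the pattern uses `\s*` (an assumption on my part) so that a title with no space after the colon, as in the prompt template, also matches.

```python
# A minimal, offline sketch of two steps from the native function:
# (1) splitting generated text into title and description, and
# (2) building the JSON Patch operations for a Feature work item.
# split_feature_text and build_feature_patch are hypothetical helper names.
import re


def split_feature_text(text: str, fallback_title: str):
    """Return (title, description); keep fallback_title when no title line is found."""
    match = re.search(r"Feature Title:\s*(.+)", text)
    title = match.group(1).strip().strip('"') if match else fallback_title
    # Everything after the first line is treated as the description.
    description = "\n".join(text.split("\n")[1:])
    return title, description


def build_feature_patch(title: str, description: str, project: str, iteration: str) -> list:
    """Build JSON Patch 'add' operations as plain dicts."""
    fields = {
        "System.Title": title,
        "System.State": "New",
        "System.Description": description,
        "System.IterationPath": project + "\\" + iteration,
    }
    return [{"op": "add", "path": "/fields/" + name, "value": value}
            for name, value in fields.items()]


if __name__ == "__main__":
    sample = 'Feature Title: "Export settings"\nDescription: "Lets users export settings."'
    title, description = split_feature_text(sample, "untitled")
    for operation in build_feature_patch(title, description, "test", "Sprint 1"):
        print(operation)
```

Keeping these steps in small pure functions makes it possible to check the parsing against real model output before any work item is created.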

Let’s execute the native function

Let’s go back to the notebook.

– Step 5 Importing native function

from plugins.AzureDevops.native_function import feature

math_plugin = kernel.import_skill(feature(kernel1), skill_name="AzureDevOps")

variables = ContextVariables()

– Step 6 Execute the native function by putting a natural language query in the title field

variables["title"] = "creating a nice pipelines"
variables["description"] = "test"
result = await kernel.run_async(
    math_plugin["create"], input_vars=variables
)
print(result)
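The top-level `await` works here because a notebook already runs an event loop. In a plain Python script the same call would need to be wrapped, for example with `asyncio.run`. The sketch below shows the pattern; `fake_run_async` is a hypothetical stand-in for `kernel.run_async`, so it runs without Semantic Kernel or Azure DevOps access.

```python
# A minimal sketch: running an async call from a plain Python script.
# fake_run_async is a hypothetical stand-in for kernel.run_async(...), so the
# example runs without Semantic Kernel or Azure DevOps access.
import asyncio


async def fake_run_async(variables: dict) -> str:
    """Pretend to create a feature and return a status message."""
    await asyncio.sleep(0)  # yield to the event loop, as a real network call would
    return variables["title"] + " Feature created successfully"


async def main() -> str:
    variables = {"title": "creating a nice pipelines", "description": "test"}
    return await fake_run_async(variables)


if __name__ == "__main__":
    # Notebooks allow top-level await; scripts need an explicit event loop.
    print(asyncio.run(main()))
```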

Use the sequential planner to dynamically create N features

– Step 6 Initiate the sequential planner with the semantic kernel

from plugins.AzureDevops.native_function import feature
planner = SequentialPlanner(kernel)
# Import the native functions
AzDevplugin = kernel.import_skill(feature(kernel1), skill_name="AzureDevOps")
ask = "create two Azure DevOps features for one with title creating user and one with creating work items with standard feature title and description"
plan = await planner.create_plan_async(goal=ask)
for step in plan._steps:
    print(step.description, ":", step._state.__dict__)

This generates a plan to meet the goal, which in the above case is “create two Azure DevOps features for one with title creating user and one with creating work items with standard feature title and description”, using the functions available in the kernel.
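As a rough mental model, a sequential plan can be pictured as an ordered list of steps, each pairing a function with its input variables. The toy sketch below illustrates that idea only; it is not how Semantic Kernel's planner is implemented, and `PlanStep`, `run_plan`, and `create_feature_stub` are hypothetical names.

```python
# A toy illustration of "plan, then execute": NOT Semantic Kernel's planner.
# PlanStep, run_plan and create_feature_stub are hypothetical names.
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class PlanStep:
    description: str
    function: Callable[[Dict[str, str]], str]
    variables: Dict[str, str] = field(default_factory=dict)


def create_feature_stub(variables: Dict[str, str]) -> str:
    """Stand-in for the native 'create' function; performs no network call."""
    return variables["title"] + " Feature created successfully"


def run_plan(steps: List[PlanStep]) -> List[str]:
    """Execute each step in order and collect the results."""
    return [step.function(step.variables) for step in steps]


if __name__ == "__main__":
    plan = [
        PlanStep("create feature", create_feature_stub, {"title": "creating user"}),
        PlanStep("create feature", create_feature_stub, {"title": "creating work items"}),
    ]
    for line in run_plan(plan):
        print(line)
```

In the real planner, the model decides which registered functions to call and with which variables; the stub here fixes both by hand.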

– Step 7 Once the plan is created, we can execute it to create multiple features.

print("Plan results:")
result = await plan.invoke_async(ask)
for step in plan._steps:
    print(step.description, ":", step._state.__dict__)

This creates two features, one for users and one for work items. Using this building block, you can create a semantic function-based solution that interprets a natural language requirements document or the transcript of a requirements call and uses it to create features in Azure DevOps. You can increase the accuracy of this solution by adding multi-shot prompts and historical data using collections.
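One possible way to move from a single-shot to a multi-shot prompt is to place a few worked examples ahead of the ‘{{$input}}’ slot. The sketch below is an illustration under that assumption; `build_multishot_prompt` is a hypothetical helper, and the example pair is a placeholder to be replaced with real past requirements and features.

```python
# A minimal sketch of assembling a multi-shot prompt. The example pair is a
# placeholder; real examples would come from your own past features.
def build_multishot_prompt(instruction: str, examples: list) -> str:
    """Join the instruction, worked examples, and the {{$input}} slot."""
    parts = [instruction, ""]
    for request, answer in examples:
        parts += ["Requirement: " + request, answer, ""]
    parts.append("Requirement: {{$input}}")
    return "\n".join(parts)


if __name__ == "__main__":
    examples = [("<past requirement text>", 'Feature Title: "<past feature title>"')]
    print(build_multishot_prompt(
        "Create feature title and description in the format shown.", examples))
```

The resulting text could be saved as ‘skprompt.txt’ in place of the single-shot prompt.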
