![[Data Virtualization] May need to update Java (JRE7 only uses TLS 1.0)](https://www.drware.com/wp-content/uploads/2023/02/medium-52)

by Contributed | Feb 27, 2023 | Technology

This article is contributed. See the original author and article here.

At the end of October 2022, we saw an issue where a customer using a PolyBase external query against Azure Storage started seeing queries fail with the following error:

Msg 7320, Level 16, State 110, Line 2

Cannot execute the query “Remote Query” against OLE DB provider “SQLNCLI11” for linked server “(null)”. EXTERNAL TABLE access failed due to internal error: ‘Java exception raised on call to HdfsBridge_IsDirExist: Error [com.microsoft.azure.storage.StorageException: The server encountered an unknown failure: ]occurred while accessing external file.’

Prior to this, everything was working fine; the customer made no changes to SQL Server or Azure Storage.

“The server encountered an unknown failure” – not the most descriptive of errors. We checked the PolyBase logs for more information:

10/30/2022 1:12:23 PM [Thread:13000] [EngineInstrumentation:EngineQueryErrorEvent] (Error, High):

EXTERNAL TABLE access failed due to internal error: ‘Java exception raised on call to HdfsBridge_IsDirExist: Error [com.microsoft.azure.storage.StorageException: The server encountered an unknown failure: ] occurred while accessing external file.’

Microsoft.SqlServer.DataWarehouse.Common.ErrorHandling.MppSqlException: EXTERNAL TABLE access failed due to internal error: ‘Java exception raised on call to HdfsBridge_IsDirExist: Error [com.microsoft.azure.storage.StorageException: The server encountered an unknown failure: ] occurred while accessing external file.’ —> Microsoft.SqlServer.DataWarehouse.DataMovement.Common.ExternalAccess.HdfsAccessException: Java exception raised on call to HdfsBridge_IsDirExist: Error [com.microsoft.azure.storage.StorageException: The server encountered an unknown failure: ] occurred while accessing external file.

at Microsoft.SqlServer.DataWarehouse.DataMovement.Common.ExternalAccess.HdfsBridgeFileAccess.GetFileMetadata(String filePath)

at Microsoft.SqlServer.DataWarehouse.Sql.Statements.HadoopFile.ValidateFile(ExternalFileState fileState, Boolean createIfNotFound)

— End of inner exception stack trace —

That gave us a little more information: the PolyBase engine was checking file metadata, but the failure was still reported only as an “unknown failure”.

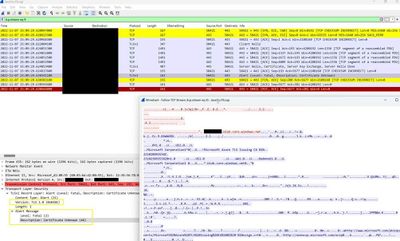

The engineer working on this case did a network trace and found out that the TLS version used for encrypting the packets sent to Azure Storage was TLS 1.0. The following screenshot demonstrates the analysis (note lower left corner where “Version: TLS 1.0” is clearly visible

He compared this with a successful PolyBase query against an Azure Storage account and found that it was using TLS 1.2.

Azure Storage accounts can be configured to require a minimum TLS version. Our intrepid engineer checked the storage account, but it was so old that it predated the time when this option became configurable. In an effort to resolve the customer’s issue, he researched further.

The customer was using Java Runtime Environment (JRE) version 7. Our engineer reproduced the error by downgrading his own JRE to version 7 and then querying a PolyBase external table that pointed to his Azure Storage account. Network tracing confirmed that JRE 7 used TLS 1.0. He tried changing the TLS version in the Java configuration, but that did not resolve the issue. He then switched back to JRE 8, and the issue was resolved in his environment. He asked the customer to upgrade to version 8, and that resolved the customer’s issue as well.

Further research showed that the Azure TLS certificate changes introduced new requirements for some Azure endpoints, and this old storage account was affected by them. TLS 1.0 was no longer sufficient; TLS 1.2 was now required. Switching to JRE 8 made PolyBase use TLS 1.2 when sending packets to the Azure Storage account, and the problem was resolved.
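As a rough way to see which TLS version a client negotiates with an endpoint without taking a packet capture, something like the following Python sketch may help. It is not part of the original investigation: the helper names `negotiated_tls_version` and `meets_minimum` are hypothetical, and the result reflects what Python's `ssl` module negotiates on the machine where it runs, not what the JRE used by PolyBase will offer.

```python
import socket
import ssl

# Protocol names as reported by ssl.SSLSocket.version(), oldest first.
_TLS_RANK = {"TLSv1": 0, "TLSv1.1": 1, "TLSv1.2": 2, "TLSv1.3": 3}


def meets_minimum(negotiated: str, minimum: str = "TLSv1.2") -> bool:
    """Return True if `negotiated` is at least as new as `minimum`.

    Names that are not in the table (for example "SSLv3") count as too old.
    """
    return _TLS_RANK.get(negotiated, -1) >= _TLS_RANK[minimum]


def negotiated_tls_version(host: str, port: int = 443, timeout: float = 10.0) -> str:
    """Open a TLS connection to host:port and return the negotiated protocol name."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as raw_sock:
        with context.wrap_socket(raw_sock, server_hostname=host) as tls_sock:
            # version() returns None if no secure connection was established.
            return tls_sock.version() or "unknown"
```

Calling `negotiated_tls_version` with the host name of a storage endpoint and passing the result to `meets_minimum` gives a quick yes/no answer on whether that client reached TLS 1.2. A network trace, as used in the case above, remains the way to confirm what the JRE itself sends.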

Nathan Schoenack

Sr. Escalation Engineer

by Contributed | Feb 26, 2023 | Technology


What’s wrong with 1:M relationships between ADX tables?

In this article, I want to talk about the behavior of 1:M relationships and the kinds of joins that are generated to support them.

Aren’t 1:M just the normal relationships in PBI?

Yes, they are, but not when both sides are ADX queries in DirectQuery mode.

In most cases, Power BI “thinks” that the relationship is M:M because of the way distinct count works in ADX.

To get 1:M, you have to change the relationship’s properties using Tabular Editor or another method.

Also, if the dimension table is small, the distinct count of the key will return the exact value and the relationship will be defined as 1:M.

So, if they are the default, what’s wrong with 1:M?

The problem is with the KQL joins which are generated based on 1:M relationships.

Let’s assume that we have a Product Category dimension and that you filter by one category.

The relationship between Product Category and SalesFact is 1:M.

Assuming you used IsDimension=true on the dimension, the generated KQL statement will be something like:

["Product_Category"]

| join kind=rightouter hint.strategy=broadcast SalesFact

| summarize A0=sum(Sales) by …

| where Category == "Cat1"

Because of the right outer join, the filter on Category is applied after the join is performed on the entire fact table.

The query results will be correct, but performance will be poor.

What can be done to make the query perform better?

We need to convince PBI to generate an inner join instead of the right outer join.

There are two ways to force an inner join:

- Define the relationship as M:M

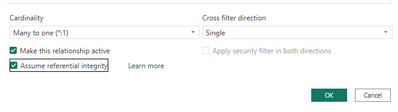

- Define the relationship as 1:M and select the Assume referential integrity option.

With an inner join, the filter(s) on the dimension that appear at the end of the query are pushed by the ADX engine to the early stages of execution, so the join is performed only on the products that belong to Cat1 in this example.

The result will be a much faster query.
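To make the difference concrete, here is a small Python sketch that mimics the two plans on in-memory rows. It is only an illustration under stated assumptions: the sample rows, the `ProductKey` join column, and the function names are hypothetical, and plain Python dictionaries stand in for the ADX engine.

```python
from collections import defaultdict

# Hypothetical sample data; ProductKey is an assumed join column.
product_category = [
    {"ProductKey": 1, "Category": "Cat1"},
    {"ProductKey": 2, "Category": "Cat2"},
    {"ProductKey": 3, "Category": "Cat1"},
]
sales_fact = [
    {"ProductKey": 1, "Sales": 10.0},
    {"ProductKey": 2, "Sales": 20.0},
    {"ProductKey": 3, "Sales": 5.0},
    {"ProductKey": 9, "Sales": 7.0},  # fact row with no matching dimension row
]


def right_outer_then_filter(dim, fact, category):
    """Mimic the right outer plan: join every fact row, summarize, then filter."""
    by_key = {d["ProductKey"]: d for d in dim}
    joined_rows = 0
    totals = defaultdict(float)
    for row in fact:
        joined_rows += 1  # a right outer join keeps every fact row
        match = by_key.get(row["ProductKey"])
        totals[match["Category"] if match else None] += row["Sales"]
    # The category filter is applied only after the join and the summarize.
    return {c: t for c, t in totals.items() if c == category}, joined_rows


def filter_then_inner(dim, fact, category):
    """Mimic the inner plan: filter the dimension first, then join and summarize."""
    by_key = {d["ProductKey"]: d for d in dim if d["Category"] == category}
    joined_rows = 0
    totals = defaultdict(float)
    for row in fact:
        match = by_key.get(row["ProductKey"])
        if match is None:
            continue  # an inner join drops fact rows without a match
        joined_rows += 1
        totals[match["Category"]] += row["Sales"]
    return dict(totals), joined_rows
```

Both functions return the same total for Cat1, but the second one carries only the fact rows that belong to Cat1 through the join, which is the effect the inner join allows the engine to achieve.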

Summary

If the queries generated by PBI contain any join other than an inner join, change your PBI model so that the joins become inner joins.

by Contributed | Feb 25, 2023 | Technology


Introduction

AI, without a doubt, is revolutionizing low code development. Bringing Artificial Intelligence into low code can change the way you work and enhance the applications and solutions you build.

You may be wondering what’s in it for you as a low code developer. AI has immense potential: automating repetitive tasks, adding intelligence to your applications, building chatbots, automating workflows, predictive analysis, and much more.

As a low code developer, you understand the power of technology to streamline the path from development to deployment. The recent advances in AI are a chance to take your skills to the next level. This is a rapidly growing field with massive impact, and as a low code developer you certainly do not need ten years of experience to develop AI models or add intelligence to your solutions. In this blog, we’ll explore the basics of AI for low code developers, the opportunities you have on this platform, and responsible AI.

What is Artificial Intelligence?

AI refers to the development of algorithms that can perform tasks that typically require human intelligence, such as recognition, decision-making, problem solving, and other cognitive tasks. This usually involves training a model to recognize patterns, make decisions, and solve problems based on data. The main goal of current AI development is to create systems that can learn, adapt, and improve over time.

The potential of AI is immense: it can revolutionize many industries and change the way we live and work. For a low code developer, this means you can automate tasks, improve accuracy and speed, and provide valuable insights that enhance the user experience.

Opportunity of AI for a low code developer.

As a low code developer, the opportunity to integrate AI into your development process is too good to ignore. Regardless of your level of experience as a low code developer, AI is a powerful tool that can help you add intelligence into your solution and get the most out of it. As AI continues to evolve, we can expect to see more innovative solutions and use cases of AI in our solutions. Some examples of the several ways you can use AI as a low code developer include:

- Creating chatbots – Power Virtual Agents helps you create conversational chatbots that give customers quicker service for tasks such as customer service, ticket processing, and general inquiries. Chatbots are also easy to integrate into your existing solutions. For instance, once you have built your chatbot, you can publish it to your website, mobile apps, and messaging platforms (Teams, Facebook). Get started here: Intelligent Virtual Agents and Bots | Microsoft Power Virtual Agents

Top tip: Remember to publish your chatbot again after every update so that your changes are reflected.

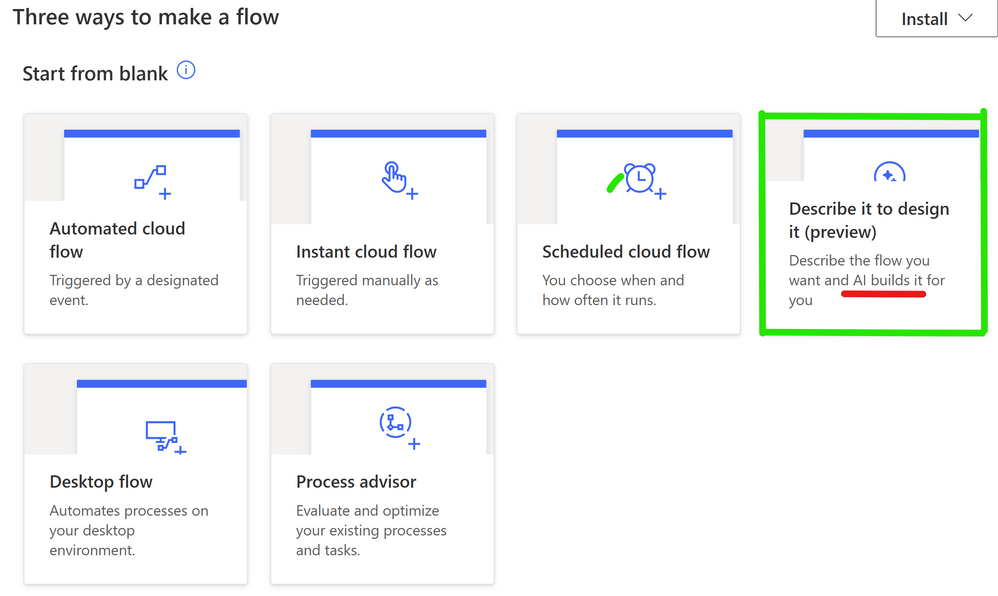

- Automated workflows – AI can be used to automate workflows in low code applications, reducing manual effort and improving efficiency. This also helps an organization run its business processes in a structured way.

- Decision making – Using AI, you can gain valuable insights from the data that you have. For instance, if you need to predict a future trend based on data from the past few years, you can achieve this as a low code developer using AI.

- Image and object detection – Object detection lets you use AI for tasks such as document scanning and extracting text from images. This will help you improve the quality of your applications and extract substantial amounts of data in a short time.

- Natural language processing – This can be used in low code applications to improve the accuracy and speed of text-based tasks such as sentiment analysis. If you need to detect the tone of a customer service review, you can use it to determine whether the sentiment is positive, neutral, or negative.

Here are some of the key benefits of using AI in low code development.

- Automation of repetitive tasks – With AI, you can automate repeated tasks such as data entry and form processing, which lets you focus on other high-priority business activities.

- Improved accuracy and speed – Tedious manual processes can be error-prone and time-consuming. Pre-built AI models can be integrated into your solutions to enhance your application’s accuracy and speed.

- Gaining valuable insights – AI can help low code developers extract valuable insights, such as trends, from data. This helps you make data-driven decisions for greater business success.

Getting started with AI as a Low code developer.

You can quickly bring AI into your solutions using Microsoft Power Platform, connecting to your business data wherever it is stored: OneDrive, SharePoint, Azure, or Dataverse.

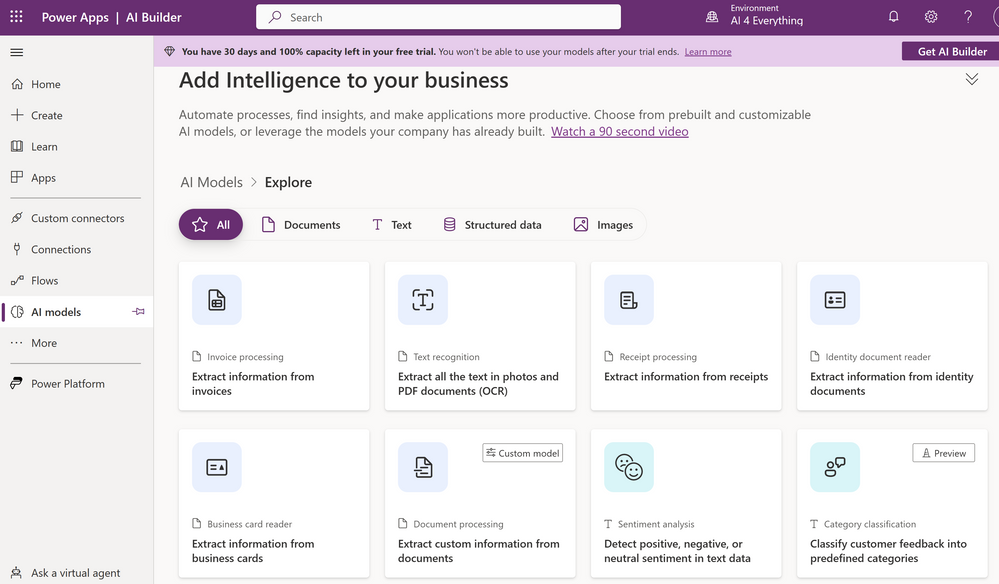

With a few simple steps, you can easily get started using AI Builder:

- Understand business requirements – Before you begin, it is important to understand the business process that needs to be integrated with AI.

- Choose a use case – Once you have decided which business process needs AI, choose the best use case to start with. Some common use cases are similar across businesses, including form processing, invoice processing, and sentiment analysis.

If the business problem is unique to your business, you can create a model from scratch to better suit your business.

- Using AI Builder – To use AI Builder, you need a Microsoft Power Apps or Power Automate license, which lets you access AI Builder from your Power App or flow. If you don’t have one, you can create a free developer account here.

- Choosing an AI Model – You can either use a pre-built template or create a custom model from scratch using your own data or pre-existing data. Using a prebuilt model means that you are utilizing already built AI scenario templates, like invoice processing.

- Test and deploy your model – Once you have selected the model that fits your business process, you can use AI Builder to test the model’s performance before deployment. This lets you validate the model’s accuracy and adjust it as needed. Once you are satisfied, you can deploy it to your Power Apps application or Power Automate flow.

- Monitoring and Improvement – After deploying your model, you can monitor its performance and adjust as per your need.

Responsible AI

As you get started with AI as a low code developer, it is important to ensure that the AI you build is developed and used for the right purpose and in the right environment. Microsoft highlights six key principles for responsible AI:

- Transparency

- Fairness

- Reliability and safety

- Privacy and security

- Inclusiveness

- Accountability.

To help you achieve this as a low code developer exploring AI, Microsoft provides resources that help developers, businesses, and individuals understand and apply responsible AI practices and ethics. These resources offer a set of principles to guide the responsible development of AI.

Learn more about responsible AI and how Microsoft is achieving this here: https://www.microsoft.com/ai/responsible-ai

Wondering how to get started? Explore these resources:

- Overview of AI Builder – AI Builder

- AI Builder—AI Templates for Apps

- AI Best Practice Architecture and Frameworks

- Watch this video for a demo on how to get started

by Contributed | Feb 24, 2023 | Technology


Preparing for the DP-900 Microsoft Azure Data Fundamentals exam and don’t know where to start? This article is a study guide for the DP-900 certification!

I have curated Microsoft articles for each objective of the DP-900 exam. In addition, I am sharing the content from #SprintDP900, a series of mentoring sessions from Microsoft Reactor.

At Microsoft Reactor, we offer a wide range of free training content on Microsoft technologies and organize study sprints for certifications. In #SprintDP900, we are running a series of three classes on the Azure Data Fundamentals certification on February 28, March 1, and March 2. Everyone who takes part in the Cloud Skills Challenge and attends the classes can take the knowledge assessment quiz and compete for a free voucher to take the exam.

#SprintDP900 schedule

February 28, 12:30 PM

#SprintDP900: Introduction to databases on Azure and types of services

In the first session, you will learn the basic concepts of databases in the cloud and get to know common workloads, roles, and services.

Click here to register

March 1, 12:30 PM

#SprintDP900: Non-relational databases on Azure

In the second #SprintDP900 session, we will go deeper into non-relational database concepts and explore the resources available on Azure.

Click here to register

March 2, 12:30 PM

#SprintDP900: Data analytics on Azure

In the third #SprintDP900 session, we will cover the data analytics services on Azure.

Click here to register

Recordings of the classes will be available on our channel; just follow the link for each session.

The Microsoft Cloud Skills Challenge is a platform integrated with Microsoft Learn, a global platform that is available 24 hours a day, 7 days a week. You can set your own study schedule, because the challenge will be available from 28/02/2023 to 10/02/2023. The weekly classes are held live, and if you cannot attend, you will be able to watch the recordings.

The Cloud Skills Challenge uses the learning path DP-900: Microsoft Azure Data Fundamentals in Portuguese (Brazil). The certification exam is also available in Portuguese.

Sign up for the #SprintDP900 Cloud Skills Challenge: https://aka.ms/SprintDP900/CSC

What do I need to do to earn a certification voucher?

You must register for the live classes and complete the learning path proposed in the Cloud Skills Challenge. In the class on March 2, we will provide a knowledge validation quiz to select the 100 people who will receive, by email, a free voucher to take the DP-900: Azure Data Fundamentals certification exam. The criteria for prioritizing vouchers are completion of the Cloud Skills Challenge, participation in the classes, and a score of 80% on the quiz.

Additional learning resources

If you are not very familiar with cloud computing, I recommend studying the Azure Fundamentals learning path:

The percentages shown for each exam topic refer to the weight, or volume of questions, that you may find on the exam.

Describe core data concepts (25-35%)

Describe ways to represent data

Identify options for data storage

Describe common data workloads

Identify roles and responsibilities for data workloads

Relational data on Azure (20-25%)

Describe relational database concepts

Describe relational Azure data services

Security and connectivity

Query tools

Hands-on practice

Describe non-relational data on Azure (15-20%)

Describe capabilities of Azure storage

Describe capabilities and features of Azure Cosmos DB

Security and connectivity

Query tools

Hands-on practice

Data analytics on Azure (25-30%)

Describe common elements of large-scale analytics

Describe real-time data analytics

Describe data visualization in Microsoft Power BI

by Contributed | Feb 23, 2023 | Technology


Microsoft Purview Data Catalog provides data scientists, engineers, and analysts with the data they need for BI, analytics, AI, and machine learning. It makes data easily discoverable by using familiar business and technical search terms and eliminates the need for Excel data dictionaries with an enterprise-grade business glossary. It enables customers to track the origin of their data with interactive data lineage visualization.

We continue to listen to your feedback. Over the last six months, we have been hard at work enabling features in Purview Data Catalog across areas such as data curation, browse and search, business glossaries, business workflows, and self-service data access.

Data Curation:

- Create, update, delete, and assign managed attributes to data assets. Learn more here.

- Rich text editor support for asset updates (description etc.). Learn more here.

Browse & Search:

- Keyword highlighting in search results. Learn more here.

- Managed attributes filter support in search results. Learn more here.

Business Glossary:

- Create multiple glossaries to manage business terms across different business units in your organization. Learn more here.

- Rich text editor support for business glossaries. Learn more here.

- Delete term templates without references. Learn more here.

- Add, update, and remove templates for existing terms. Learn more here.

Business Workflows:

- Approval workflow for data asset curation. Learn more here.

- Cancel a workflow run. Learn more here.

- Reassign approvals and tasks in workflows. Learn more here.

- Support for HTTP connector. Learn more here.

- Set reminders and expiry for approvals and task requests in workflows. Learn more here.

Self-Service Data Access:

- Request access on behalf of another user in Microsoft Purview Studio. Learn more here.

- Request access on behalf of another user in Microsoft Synapse Studio. Learn more here.

- Assign data asset owners as approvers for self-service data access. Learn more here.

Our goal is to continue adding features and improving the usability of Microsoft Purview governance capabilities. Get started quickly and easily with Microsoft Purview. If you have feature requests or want to provide feedback, please visit the Microsoft Purview forum.
