by Contributed | Mar 9, 2023 | Technology

This article is contributed. See the original author and article here.

Processing health insurance claims can be quite complex. The complexity comes from several factors: the messaging standards, the exchange protocol, workflow orchestration, and finally the ingestion of the claim information into standardized and scalable data stores. To enable operational, financial, and patient-centric data analytics, the claims data stores are often mapped to patient health records at the cohort, organization, or even population level.

What is X12 EDI?

Electronic Data Interchange (EDI) defines a messaging mechanism for unified communication across different organizations. X12 claims-based processing refers to a set of EDI standards used in the healthcare industry to exchange information related to health claims. The X12 standard defines a specific format for electronic transactions that allows healthcare providers, insurers (payers), and other stakeholders to exchange data in a consistent and efficient manner. This cross-industry standard is accredited by the American National Standards Institute (ANSI). For simplicity, we will refer to ‘X12 EDI’ as ‘X12’ throughout this article.
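To make the format concrete, the sketch below splits a raw X12 string into segments and then into elements. It is a minimal illustration, not a validating parser. It assumes `~` as the segment terminator and `*` as the element separator, which are common choices; a real interchange declares its own delimiters in the ISA header. The `ParseX12` helper and the sample CLP segment are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: split raw X12 text into segments, then each segment into elements.
// Assumes '~' terminates segments and '*' separates elements (real files declare these in ISA).
static List<string[]> ParseX12(string raw, char segmentTerminator = '~', char elementSeparator = '*')
{
    return raw
        .Split(segmentTerminator, StringSplitOptions.RemoveEmptyEntries)
        .Select(segment => segment.Trim())          // drop line breaks some senders add between segments
        .Where(segment => segment.Length > 0)
        .Select(segment => segment.Split(elementSeparator))
        .ToList();
}

// Illustrative (made-up) claim payment segment; CLP03 holds the total claim charge amount
var segments = ParseX12("CLP*CLAIM001*1*226*132**12~");
foreach (var elements in segments)
{
    // elements[0] is the segment ID; the remaining entries are positional data elements
    Console.WriteLine($"{elements[0]}: {string.Join(" | ", elements.Skip(1))}");
}
```

Empty positions are kept, as in `CLP05` above. X12 elements are identified by position, so dropping them would shift every later element.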

What is FHIR?

FHIR® (Fast Healthcare Interoperability Resources) is a standard for exchanging information in the healthcare industry through web-based APIs, with a broad range of resources to accommodate various healthcare use cases. These resources include patient demographics, clinical observations, medications, claims, and procedures, to name a few. FHIR aims to improve the quality and efficiency of healthcare by promoting interoperability between different systems.

Azure Health Data Services is a suite of purpose-built technologies for protected health information (PHI) in the cloud. The FHIR service in Azure Health Data Services enables rapid exchange of health data using the FHIR® standard. As a managed Platform-as-a-Service (PaaS) offering, the FHIR service makes it easy for anyone working with health data to securely store and exchange PHI in the cloud.

Why Convert X12 to FHIR?

FHIR is a modern, developer-friendly, born-in-the-cloud data standard, whereas X12 is an aging one. Converting from X12 to FHIR has several merits:

1. You can take advantage of FHIR interoperability and adoption to exchange claim information across various systems using modern and secure protocols.
2. You can unify patient health and claim datasets in a single FHIR service in the cloud.
3. You can draw on a larger community of developers and an evolving ecosystem on the global healthcare stage.

The Azure Solution

In essence, this article describes how to orchestrate the conversion of X12 claims to FHIR resources using the Azure FHIR Service (in Azure Health Data Services), Azure Integration Account, and Azure Logic Apps.

Azure Logic Apps is a service within the Azure platform that enables developers to create workflows and automate business processes using low-code/no-code visual designers and integration connectors. The service lets you create, schedule, and manage workflows that can be triggered by various events, such as receiving an HTTPS request or the arrival of a new file on an SFTP server.

The Azure Integration Account is part of the Logic Apps Enterprise Integration Pack (EIP). It is a secure, manageable, and scalable container for the integration artifacts that you create, and it provides the X12 XML schemas used in this solution.

The complete implementation of the X12 to FHIR conversion in Azure is available on GitHub.
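In this sample, the workflow is triggered by an HTTPS request. The snippet below is a rough sketch of the client side: it builds the POST request that carries raw X12 text to a Logic Apps HTTP trigger. The trigger URL, the `text/plain` content type, and the `BuildX12Request` name are assumptions for illustration. Use the callback URL and content type that your workflow actually expects.

```csharp
using System;
using System.Net.Http;
using System.Text;

// Hypothetical helper: wrap raw X12 text in an HTTPS POST aimed at a Logic Apps HTTP trigger.
static HttpRequestMessage BuildX12Request(Uri triggerUrl, string x12Content) =>
    new HttpRequestMessage(HttpMethod.Post, triggerUrl)
    {
        // The X12 interchange travels unchanged in the body; "text/plain" is an assumed content type
        Content = new StringContent(x12Content, Encoding.UTF8, "text/plain")
    };

// Placeholder URL: replace it with the trigger URL shown in your Logic Apps designer
var request = BuildX12Request(new Uri("https://example.com/x12-trigger"), "ISA*00~");
Console.WriteLine($"{request.Method} {request.RequestUri}");
```

To send the request, pass it to `HttpClient.SendAsync`. Keeping request construction separate from sending makes the payload easy to inspect before it reaches the workflow.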

Orchestration of X12 to FHIR Conversion

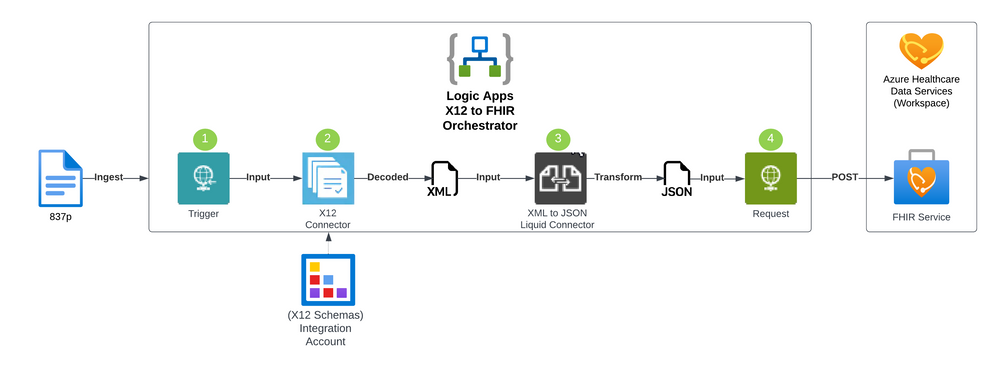

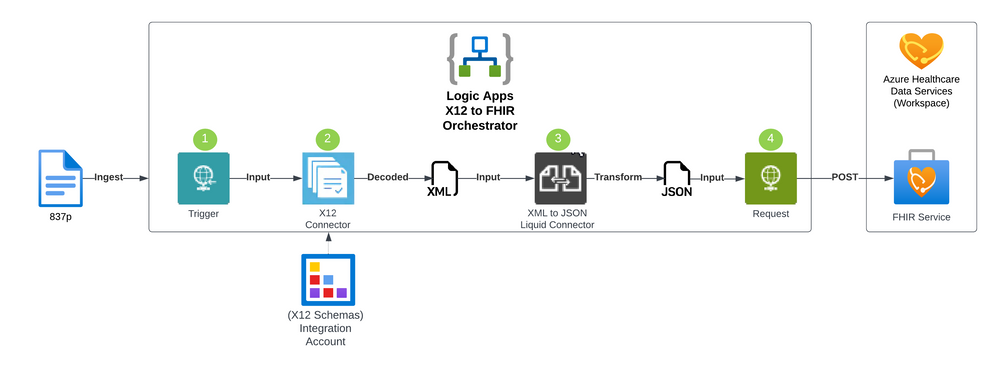

Azure Logic Apps orchestrates the conversion from X12 to FHIR resources, allows for additional data quality checks, and then ingests the FHIR resources into the Azure FHIR Service, as depicted in the following four steps:

X12 to FHIR


- First, we ingest the X12 file content into the Azure Logic Apps workflow. In this sample, we submit the X12 file content in the body of an HTTPS POST request to the HTTPS endpoint exposed by Azure Logic Apps.

- Next, the Azure Logic Apps X12 connector performs an initial data quality check and decodes the file, using the X12 XML schemas associated with the transaction sets. This step verifies that the sender is configured and enabled in the system, and it picks the correct agreement, which is configured with the X12 schema. That schema is then used to convert the X12 data to XML.

- Once the X12 file is validated and decoded into XML, the Azure Logic Apps XML to JSON Liquid connector converts the XML content to FHIR. It uses DotLiquid templates to map the XML content to the corresponding FHIR resources.

- Finally, the workflow stores the data in the Azure FHIR Service (in Azure Health Data Services) to support a unified view of the patient’s record. The FHIR service exposes an HTTP REST endpoint where individual resources can be managed, or where several resources can be sent together as an atomic transaction using a FHIR bundle.
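The last step can post each resource individually, or it can wrap the resources in a single transaction Bundle so they are committed atomically. The sketch below shows the Bundle shape using `System.Text.Json.Nodes`. The `BuildTransactionBundle` helper is hypothetical. A production bundle would usually also assign `fullUrl` values (for example `urn:uuid:` identifiers) so that the resources can reference each other. The resulting JSON is POSTed to the FHIR service base URL.

```csharp
using System;
using System.Text.Json;
using System.Text.Json.Nodes;

// Hypothetical helper: wrap already-converted FHIR resources in a transaction Bundle.
static JsonObject BuildTransactionBundle(params JsonObject[] resources)
{
    var entries = new JsonArray();
    foreach (var resource in resources)
    {
        var resourceType = resource["resourceType"]!.GetValue<string>();
        entries.Add(new JsonObject
        {
            ["resource"] = resource,
            // Each entry says how the server should apply it: here, create via POST [base]/[type]
            ["request"] = new JsonObject
            {
                ["method"] = "POST",
                ["url"] = resourceType
            }
        });
    }

    return new JsonObject
    {
        ["resourceType"] = "Bundle",
        ["type"] = "transaction",   // all entries succeed or fail together
        ["entry"] = entries
    };
}

var patient = new JsonObject { ["resourceType"] = "Patient" };
var claim = new JsonObject { ["resourceType"] = "Claim", ["status"] = "active", ["use"] = "claim" };
var bundle = BuildTransactionBundle(patient, claim);
Console.WriteLine(bundle.ToJsonString(new JsonSerializerOptions { WriteIndented = true }));
```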

FHIR Resources

Various FHIR resources can be generated from an X12 transaction set. Depending on business requirements and entities participating in the integration, these resources will vary.

| Entity | Resource | Description |
| --- | --- | --- |
| Patient | Patient | The person who the claim is for. |
| Sender | Organization or Practitioner | The organization or provider submitting the claim. |
| Recipient | Organization or Practitioner | The organization or provider receiving the claim for processing. |
| Claim | Claim | The details about the services, amounts, and codes associated with the claim. |
| Transaction Set / Message | Communication | Metadata about the X12 message, including the raw message. |

Liquid Template Sample

The sample Liquid template below shows how to extract data from the decoded X12 file. In the snippet, the elements under ‘content’ correspond to XML elements in the decoded X12 file. Here they are mapped to the ‘total’ element of the ‘Claim’ FHIR resource.

```liquid
{
    "resourceType": "Claim",
    "status": "active",
    "use": "claim",
    "total": {
        "value": {{content.FunctionalGroup.TransactionSet.X12_00501_835.TS835_2000_Loop.TS835_2100_Loop.CLP_ClaimPaymentInformation.CLP03_TotalClaimChargeAmount}}
    }
}
```
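For readers more comfortable in code than in Liquid, the snippet below performs the same mapping with `System.Xml.Linq`. It finds the `CLP03_TotalClaimChargeAmount` element in the decoded XML and places the amount in `Claim.total`. It only illustrates the mapping logic; it is not how the Liquid connector runs. The `MapClaimTotal` name and the trimmed-down sample XML are hypothetical. Matching on local names sidesteps whatever XML namespace the decoded document carries.

```csharp
using System;
using System.Globalization;
using System.Linq;
using System.Text.Json.Nodes;
using System.Xml.Linq;

// Hypothetical helper mirroring the Liquid template: decoded X12 XML in, minimal Claim JSON out.
static JsonObject MapClaimTotal(string decodedXml)
{
    var doc = XDocument.Parse(decodedXml);

    // Match by local name so the lookup ignores any namespace on the decoded document
    var charge = doc.Descendants()
        .First(element => element.Name.LocalName == "CLP03_TotalClaimChargeAmount");
    var amount = decimal.Parse(charge.Value, CultureInfo.InvariantCulture);

    return new JsonObject
    {
        ["resourceType"] = "Claim",
        ["status"] = "active",
        ["use"] = "claim",
        // Claim.total is a FHIR Money element, so the amount sits under "value"
        ["total"] = new JsonObject { ["value"] = amount }
    };
}

// Trimmed, hypothetical fragment of a decoded 835 document
var sampleXml =
    "<X12_00501_835><TS835_2000_Loop><TS835_2100_Loop><CLP_ClaimPaymentInformation>" +
    "<CLP03_TotalClaimChargeAmount>226</CLP03_TotalClaimChargeAmount>" +
    "</CLP_ClaimPaymentInformation></TS835_2100_Loop></TS835_2000_Loop></X12_00501_835>";
Console.WriteLine(MapClaimTotal(sampleXml).ToJsonString());
```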

Considerations

- Patient and provider identifiers in the transaction set may not correspond directly to the FHIR identifiers of the matching resources. A lookup approach, such as an EMPI (enterprise master patient index) and a provider registry, may be needed to map the data. These mappings can live in a separate data store or be captured using the FHIR Identifier data type on the corresponding FHIR resource.

- Various X12 EDI schemas may need to be managed across your provider base. Each version of the transaction set will have a corresponding Liquid template, which also needs to be versioned so that the correct XML is converted to FHIR. A modular template design will be crucial for striking the right balance of reusability.

- Depending on the scale of the provider base and the security requirements, the architecture can be adapted:

  - One instance of Azure Logic Apps can be created per provider, providing full compute isolation.

  - Azure Logic Apps also supports parallelization, allowing a batch of X12 files to be submitted and then processed in parallel.

  - An instance of Azure Logic Apps and the Azure FHIR Service can be tied to a specific geographic region, which may be needed if data sovereignty is required.

- Depending on the business scenario, the ingestion process can be triggered by an SFTP event. Health organizations and providers can be associated with an Azure Storage account enabled for SFTP, where they can securely connect and manage their X12 artifacts.
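For the identifier lookup described above, one option is a FHIR token search. It resolves an identifier from the claim (for example a member ID) to an existing resource before new resources are written. The sketch below builds such a search URL. The base URL, the identifier system, and the `BuildIdentifierSearch` helper are placeholders. The `identifier=[system]|[value]` form is standard FHIR token search syntax; here it is percent-encoded for the query string.

```csharp
using System;

// Hypothetical helper: build a FHIR token search such as Patient?identifier=system|value
static string BuildIdentifierSearch(string fhirBaseUrl, string resourceType, string identifierSystem, string identifierValue)
{
    // Escape the whole token so characters like ':' and '|' survive in the query string
    var token = Uri.EscapeDataString($"{identifierSystem}|{identifierValue}");
    return $"{fhirBaseUrl.TrimEnd('/')}/{resourceType}?identifier={token}";
}

// Placeholder service URL and identifier system; substitute what your EMPI or provider registry uses
Console.WriteLine(BuildIdentifierSearch(
    "https://example.fhir.azurehealthcareapis.com", "Patient", "urn:oid:1.2.3", "M123"));
```

A search that returns exactly one match gives the resource ID to reference from the new Claim. Zero or multiple matches is a signal to route the record to the EMPI for resolution rather than guess.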


by Contributed | Mar 8, 2023 | Technology


Start monitoring Delivery Optimization usage and performance across your organization today! Following the Windows Update for Business reports announcement back in November 2022, we are excited to announce the general availability of the Delivery Optimization Windows Update for Business report.

We genuinely appreciate those of you who participated in the public preview! You helped us verify the accuracy of the new data tables and revise the layout. Your responses helped us identify and address all critical issues, so it can now be offered to all Delivery Optimization users. In this article, find guidance to:

- Get started with Windows Update for Business reports

- Customize your Delivery Optimization report

Get started with Windows Update for Business reports

If you’re an existing Update Compliance user, you’re probably aware of the Update Compliance migration to Windows Update for Business reports. The new report experience provides templates for Windows reporting for monitoring organization and device level data with the added flexibility to customize a given report.

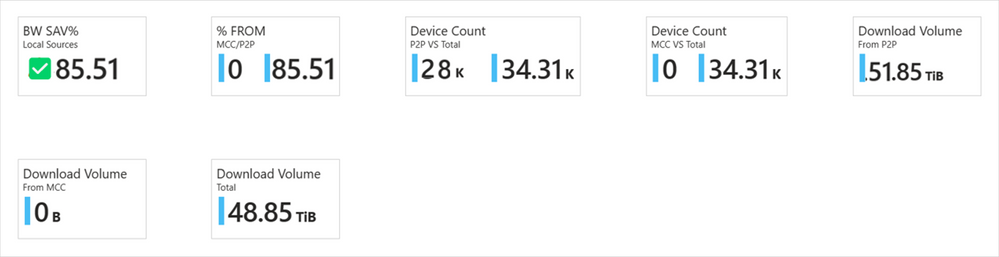

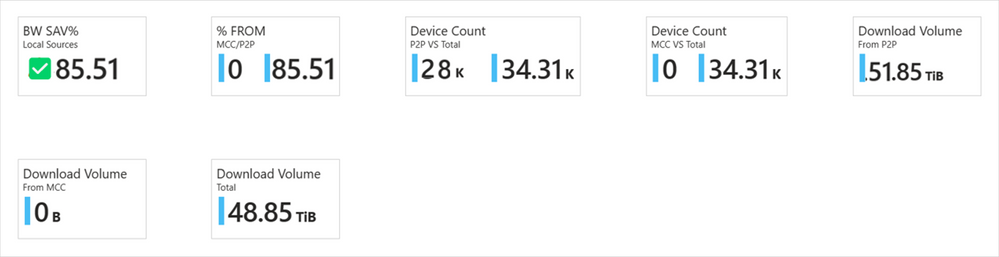

The Delivery Optimization Windows Update for Business report provides a familiar experience, surfacing important data points in a unified view of performance across your organization. It contains data for the last 28 days, and we’ve added the long-awaited Microsoft Connected Cache data. In addition, we’ve reorganized the information to make it easier to discover, using key cards that provide a quick view of Delivery Optimization usage and performance.

Key cards with main metrics in Windows Update for Business reports


You can quickly find the key pieces of data at the top of the report. We’ve bubbled up the metrics you care most about. You’ll find total bandwidth savings for both Delivery Optimization technologies, including peer-to-peer (P2P) and Connected Cache.

Further down the report, there are now three tabs: Device configuration, Content distribution, and Efficiency by group.

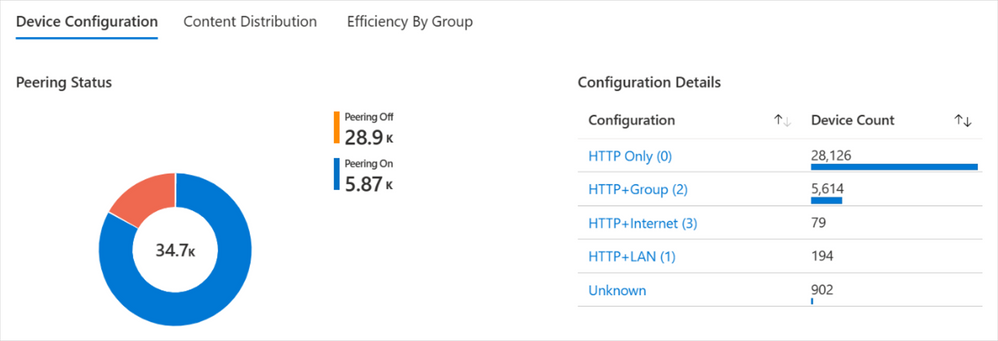

Within Device configuration, you’ll see the breakdown of Download Mode configuration for your devices. Each configuration number in parentheses references the Download Mode set on the device.

Device configuration details for peering status are shown as a pie chart while configuration names and device counts are shown as a bar graph in Windows Update for Business reports


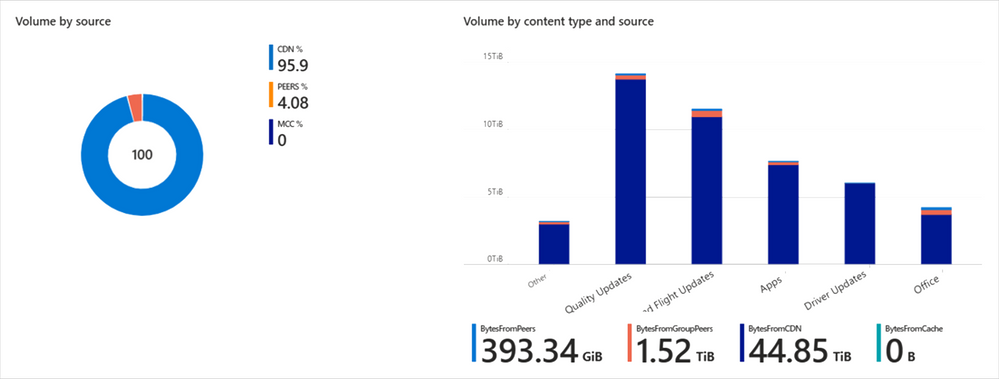

In Content distribution, you can explore how bytes are delivered: from the CDN/HTTP source, from peers, or from Connected Cache. You can also visualize the delivery method for different content types, and click a particular content type to drill down into richer details.

The example below shows an environment without a Connected Cache server, so Connected Cache accounts for 0% of bytes.

Content distribution is visualized as a pie chart broken down by source type and a bar graph of volume by content type and source


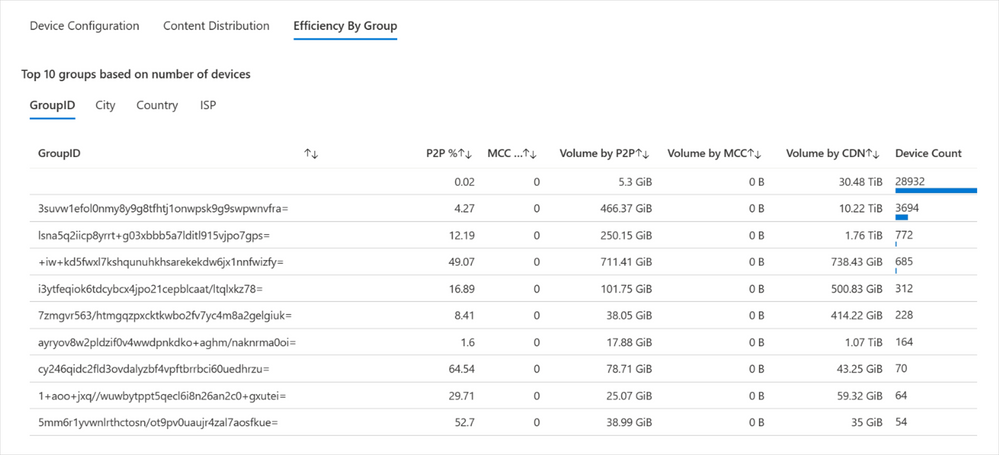

The last tab presents a new option. Most organizations use policies to create peering groups to manage devices, and in the Efficiency by group section you can toggle among four different options. After talking with many of you, we’ve seen that device groups for Delivery Optimization are generally managed in four common ways:

- By GroupID
- By City
- By Country
- By ISP

We’ve added each of these as a quick and easy tab, so you can view the top 10 groups by number of devices. For example, in the GroupID view, you’ll see the top 10 group IDs with the highest number of devices. Similarly, in the City or Country view, you’ll see the top 10 cities or countries with the greatest number of devices. If you’re more interested in monitoring the top ISPs delivering content, based on the number of devices within the organization, use the ISP option.
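The sketch below is a rough model of what the Efficiency by group view computes. It groups per-device records by a key such as GroupID, counts the devices in each group, and keeps the 10 largest groups along with the share of their bytes served by peers. The record shape, field names, and P2P percentage definition are hypothetical. The report itself is built from Log Analytics queries over the reporting tables, not from this code.

```csharp
using System;
using System.Linq;

// Hypothetical per-device records: (DeviceId, GroupId, BytesFromPeers, TotalBytes)
var devices = new[]
{
    (DeviceId: "dev-1", GroupId: "group-a", BytesFromPeers: 600L, TotalBytes: 1000L),
    (DeviceId: "dev-2", GroupId: "group-a", BytesFromPeers: 200L, TotalBytes: 1000L),
    (DeviceId: "dev-3", GroupId: "group-b", BytesFromPeers: 0L, TotalBytes: 500L),
};

// Group by GroupID, rank by device count, and keep the top 10 groups
var topGroups = devices
    .GroupBy(d => d.GroupId)
    .Select(g => new
    {
        Group = g.Key,
        DeviceCount = g.Select(d => d.DeviceId).Distinct().Count(),
        // Assumed definition: peer bytes as a percentage of all bytes for the group
        P2PPercent = 100.0 * g.Sum(d => d.BytesFromPeers) / Math.Max(1L, g.Sum(d => d.TotalBytes))
    })
    .OrderByDescending(g => g.DeviceCount)
    .Take(10)
    .ToList();

foreach (var g in topGroups)
    Console.WriteLine($"{g.Group}: {g.DeviceCount} devices, {g.P2PPercent:F1}% P2P");
```

Swapping `GroupId` for a city, country, or ISP field gives the other three views.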

The Efficiency By Group tab shows the top 10 groups based on Group ID, along with pertinent details such as P2P percentage, volume, and device count


As mentioned above, this is just the start of a new offering that enables you to tailor your report to meet the requirements of your organization. We will continue to incorporate richer features and enhanced experience in the Delivery Optimization report based on your feedback.

Customize your Delivery Optimization report

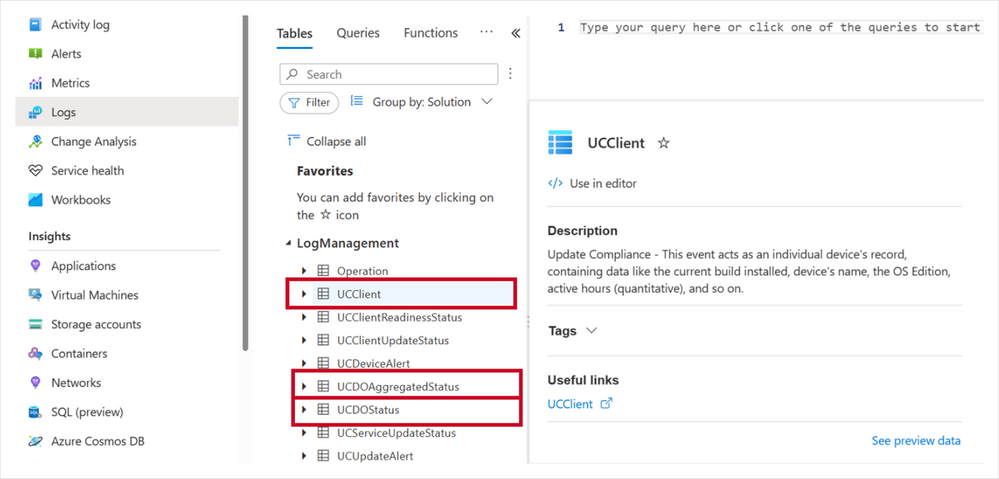

Much of the excitement about this new report format centers on flexibility. It’s easy to modify the report template to meet your needs, or to run queries that investigate your data further. To do this, use the Windows Update for Business reports data schema, where you can access the new Delivery Optimization-related schema tables:

- UCDOStatus
- UCDOAggregatedStatus

See what these tables look like within the LogManagement section.

The Microsoft Azure interface showing Logs options, focused on a list of tables, including UCClient, UCDOAggregatedStatus, and UCDOStatus

We recommend running a query on each table to learn which fields are available. Let’s take a brief look at each.

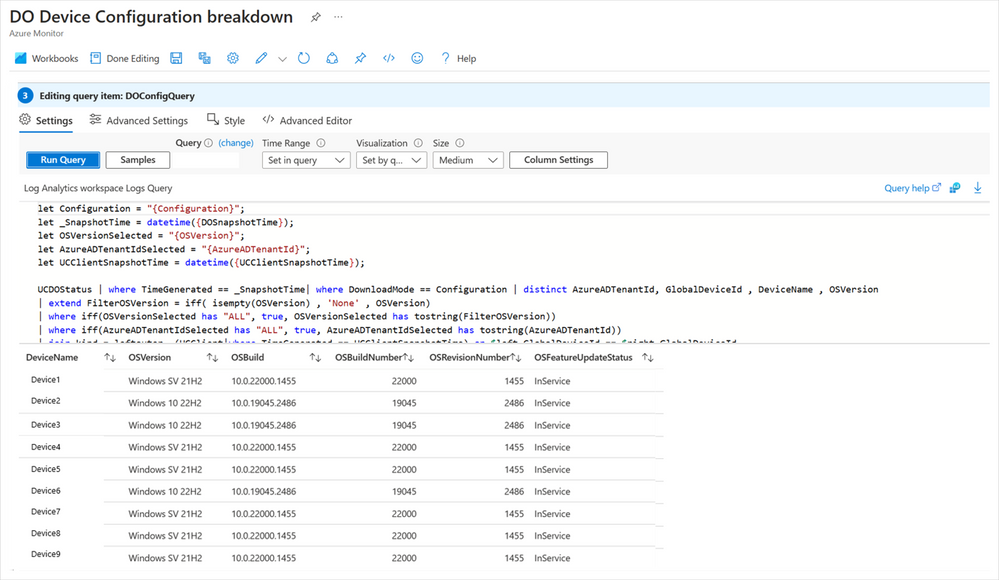

UCClient table

The UCClient table is used within the Delivery Optimization report to get OSBuild, OSBuildNumber, OSRevisionNumber, and OSFeatureStatus. The data from UCClient is joined with UCDOStatus to complete the Device configuration breakdown table. In Edit mode, you can easily modify the query that builds this visualization if any changes are needed.

The Azure Workbooks interface showing the DO Device Configuration breakdown in Edit mode


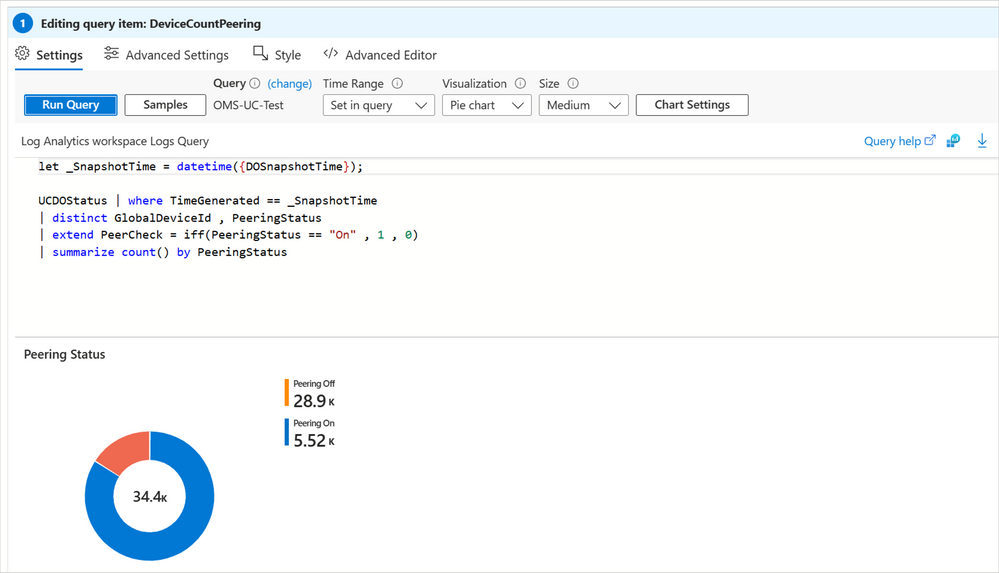

UCDOStatus table

One of the two tables that hold Delivery Optimization-only data is UCDOStatus. This table provides aggregated data on Delivery Optimization bandwidth utilization. Explore the data for a single device or by content type. For example, the ‘Peering Status’ pie chart is powered by the UCDOStatus table.

The Peering Status pie chart, powered by the UCDOStatus table, is shown when editing the query item for DeviceCountPeering


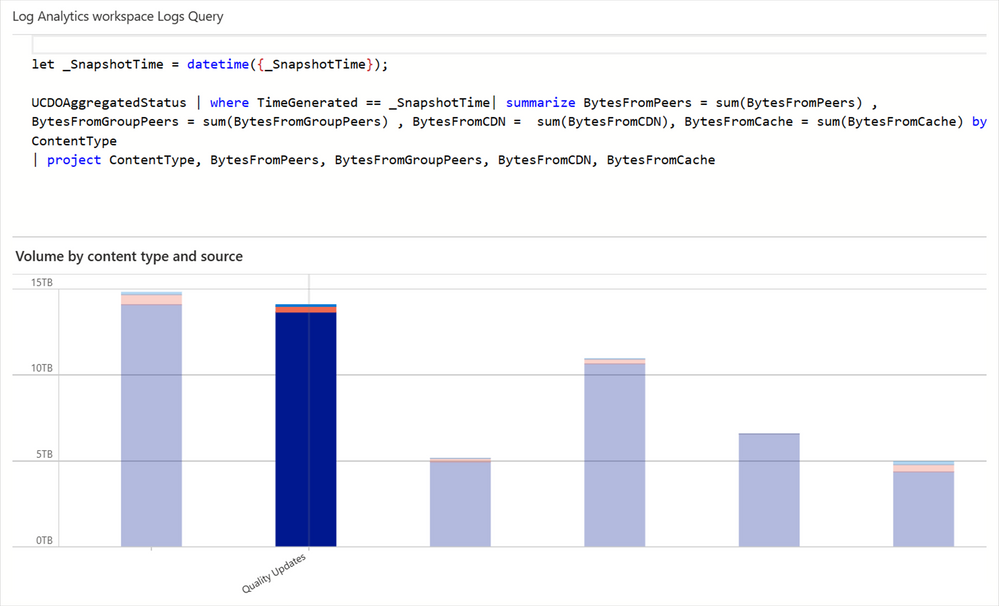

UCDOAggregatedStatus table

The second Delivery Optimization-only data table is UCDOAggregatedStatus. The role of this table is twofold:

- Provide aggregates of all individual UCDOStatus records across the tenant.

- Summarize bandwidth savings across all devices enrolled with Delivery Optimization.

One way this table is used is to build the Volume by content type chart. This view can also be changed easily, either through the query or through the built-in UX controls available in Log Analytics.
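The tenant-wide aggregation described above can be pictured as a sum over per-device rows. The sketch below rolls hypothetical per-device byte counts up by content type. It reports how many bytes came from the CDN, from peers, and from Connected Cache, plus an overall bandwidth-savings figure. The field names and the savings definition (bytes not fetched from the CDN, divided by total bytes) are assumptions, not the exact columns or formula behind UCDOAggregatedStatus.

```csharp
using System;
using System.Linq;

// Hypothetical per-device rows: content type plus bytes by delivery source
var rows = new[]
{
    (ContentType: "Quality Updates", FromCdn: 300L, FromPeers: 500L, FromCache: 200L),
    (ContentType: "Quality Updates", FromCdn: 700L, FromPeers: 300L, FromCache: 0L),
    (ContentType: "Driver Updates",  FromCdn: 100L, FromPeers: 0L,   FromCache: 0L),
};

// Roll the per-device rows up to one line per content type (the "volume by content type" view)
var volumeByType = rows
    .GroupBy(r => r.ContentType)
    .Select(g => (
        ContentType: g.Key,
        Cdn: g.Sum(r => r.FromCdn),
        Peers: g.Sum(r => r.FromPeers),
        Cache: g.Sum(r => r.FromCache)))
    .ToList();

foreach (var v in volumeByType)
    Console.WriteLine($"{v.ContentType}: CDN {v.Cdn}, peers {v.Peers}, cache {v.Cache}");

// Tenant-wide savings: share of bytes that did not have to come from the CDN (assumed definition)
long totalBytes = rows.Sum(r => r.FromCdn + r.FromPeers + r.FromCache);
double savingsPercent = 100.0 * (totalBytes - rows.Sum(r => r.FromCdn)) / Math.Max(1L, totalBytes);
Console.WriteLine($"Bandwidth savings: {savingsPercent:F1}%");
```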

A bar graph summary of volume by content types, such as quality updates


Keep in touch

We hope you are as excited as we are about these new changes for Delivery Optimization reporting. There are many more things we plan to add in the future. If you have any feedback or feature requests, we’d love to hear from you! Please submit it using the Feedback link within the Azure portal.

A feedback submission form inside the Microsoft Azure portal


Continue the conversation. Find best practices. Bookmark the Windows Tech Community and follow us @MSWindowsITPro on Twitter. Looking for support? Visit Windows on Microsoft Q&A.

by Contributed | Mar 7, 2023 | Technology


One of the most common pain points of scheduling online meetings, especially with people outside your organization, is the back-and-forth messaging. Microsoft Bookings now makes it simple to avoid that: with Power Automate, you can seamlessly integrate Webex with Bookings.

Together, Bookings and Webex provide a comprehensive solution for all your virtual meeting needs.

With Power Automate, the moment a booking is made on Microsoft Bookings, a Webex meeting is automatically scheduled with all the details of the booking, such as date, time, and attendees. No more back and forth emails or missed meetings due to double bookings.

An animated image demonstrating how to create a Webex meeting when a Microsoft Bookings appointment is created via a Power Automate template.


Don’t let scheduling virtual meetings stress you out anymore. With the integration of Microsoft Bookings, Webex, and Power Automate, you’ll be able to focus on what really matters – conducting successful and efficient virtual meetings. Upgrade your virtual meeting game today and experience seamless scheduling.

by Contributed | Mar 6, 2023 | Technology


Today we have an exciting announcement to share about the evolution of Microsoft Outlook for macOS. Don’t have Outlook on your Mac yet? Get Outlook for Mac in the App Store.

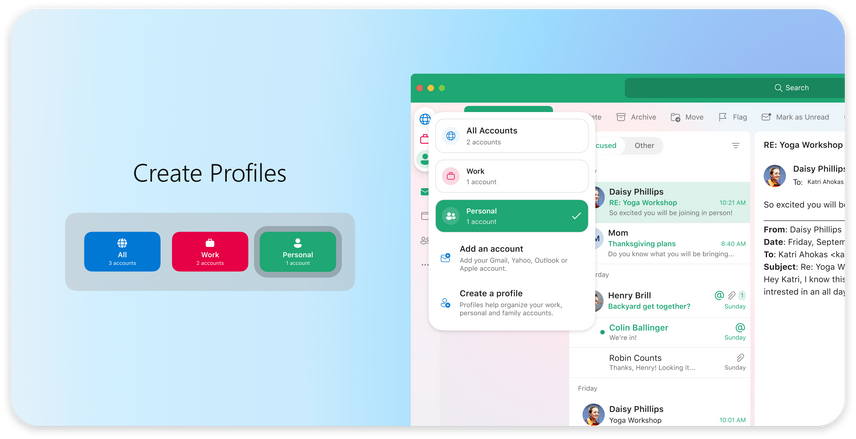

An image demonstrating the user interface of Outlook for Mac.


Outlook for Mac is now free to use

Now consumers can use Outlook for free on macOS, no Microsoft 365 subscription or license necessary.

Whether at home, work or school, Mac users everywhere can easily add Outlook.com, Gmail, iCloud, Yahoo! or IMAP accounts in Outlook and experience the best mail and calendar app on macOS. The Outlook for Mac app complements Outlook for iOS – giving people a consistent, reliable, and powerful experience that brings the best-in-class experience of Outlook into the Apple ecosystem that so many love.

Outlook makes it easy to manage your day, triage your email, read newsletters, accept invitations to coffee, and much more.

Bring your favorite email accounts

One of Outlook’s strengths is helping you manage multiple accounts in a single app. We support most personal email providers and provide powerful features, such as viewing all your inboxes at once and an all-mailbox search to make managing multiple email accounts a breeze.

An image demonstrating examples of personal email providers supported in Outlook.

When you log into Outlook with a personal email account, you get enterprise-grade security, with secure sign-on to authenticate and protect your identity, all while keeping your email, calendar, contacts, and files protected.

Outlook is built for Mac

With Outlook, you’ll get a modern and beautiful user interface that has been designed and optimized for macOS. And these enhancements go far beyond surface level. The new Outlook is optimized for Apple Silicon, with snappy performance and faster sync speeds than previous versions.

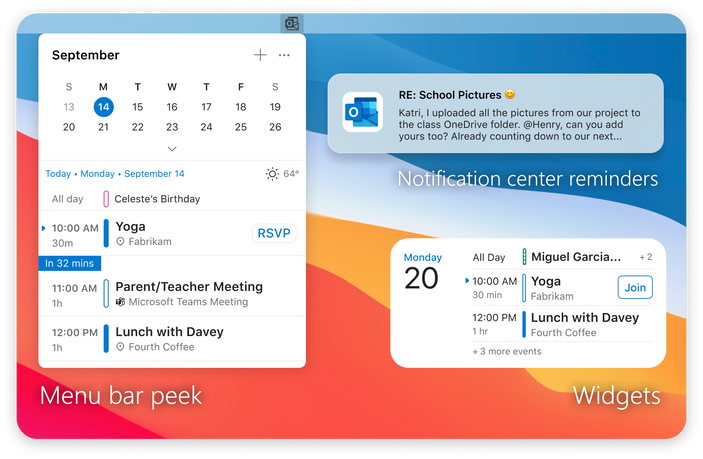

Outlook for Mac does more to integrate into the Apple platform so that you get the most from your macOS device. To help you stay on top of your email and calendar while using other apps, you can view your agenda using a widget and see reminders in the Notification Center. We are also creating a peek of upcoming calendar events in the Menu Bar (coming soon).

An image demonstrating previews of the Menu Bar (left), a Notification Center reminder (top right), and a Widget (bottom right) in Outlook for Mac.


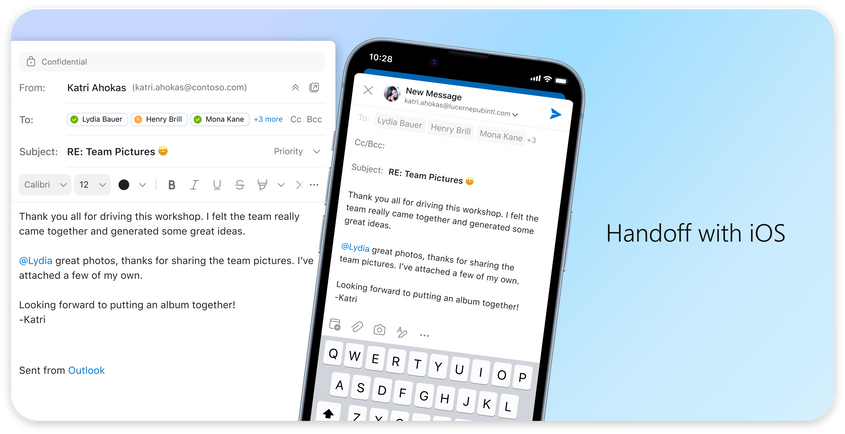

We know life is multifaceted and Outlook is leveraging the Apple platform to support you by making it easier to switch contexts. With our new Handoff feature, you can pick up tasks where you left off between iOS and Mac devices, so you can get up and go without missing a beat*.

An image demonstrating the Handoff feature in Outlook for Mac on a desktop and mobile device.


We understand it’s more important than ever to protect your time. The new Outlook Profiles (coming soon) allow you to connect your email accounts to Apple’s Focus experience. With Outlook Profiles, you won’t get unwanted notifications at the wrong time so you can stay focused on that important work email, with no distractions from your personal email.

An image demonstrating the Outlook Profiles feature in Outlook for Mac.


Your hub to help you focus

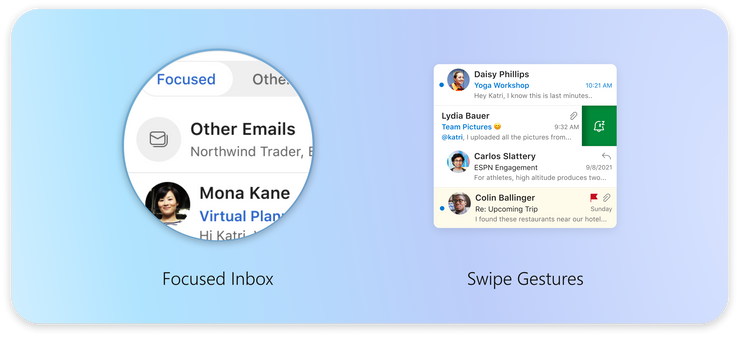

Outlook provides a variety of ways to help you stay focused and accomplish more by helping you decide what you want to see and when. For people with multiple accounts, the all-accounts view lets you manage all your inboxes at once, without having to switch back and forth. It’s a great way to see all new messages that come in so you can choose how to respond.

Outlook also has more options for keeping important emails front and center**. The Focused Inbox automatically separates important messages from the rest, with a toggle above the message list, and you can add frequently used folders to Favorites to find them easily. Outlook also gives you the power to prioritize messages your way: you can pin messages in your inbox to keep them at the top, or snooze non-urgent messages until you have time to reply. Categories and flagging are available as well. With so many options for organizing email, you can make Outlook work for you.

An image providing a preview of the Focused Inbox and Swipe Gestures features in Outlook for Mac.


With Outlook, you can stay on top of your daily schedule from anywhere in the Mac app. Open My Day in the task pane to view an integrated, interactive calendar right from your inbox. Not only can you see upcoming events at a glance, but you can view event details, RSVP, join virtual meetings, and create new events from this powerful view of your calendar.

What’s next

We are thrilled to invite all Mac users to try our redesigned Outlook app with the latest and greatest features for macOS. Download Outlook for free in the App Store.

There is more to do and many more features we are excited to bring to the Outlook Mac experience. We are rebuilding Outlook for Mac from the ground up to be faster, more reliable, and to be an Outlook for everyone.

*The Handoff feature requires downloading Outlook for Mac from the App Store and signing in to your iOS device and your Mac with the same Apple ID.

**Categories are only available with Outlook.com, MSN.com, Live.com, and Hotmail.com accounts. Focused Inbox, Snooze, and Pinning are not available with Direct Sync accounts.

by Contributed | Mar 4, 2023 | Technology


Hi, I am Samson Amaugo, a Microsoft MVP and a Microsoft Learn Student Ambassador. I love writing and talking about all things .NET. I am currently a student at the Federal University of Technology, Owerri. You can connect with me on LinkedIn.

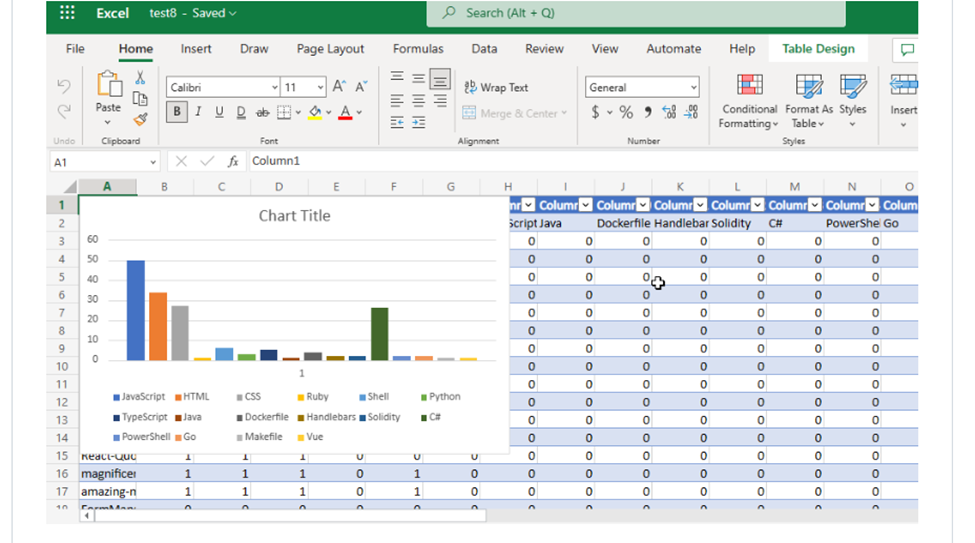

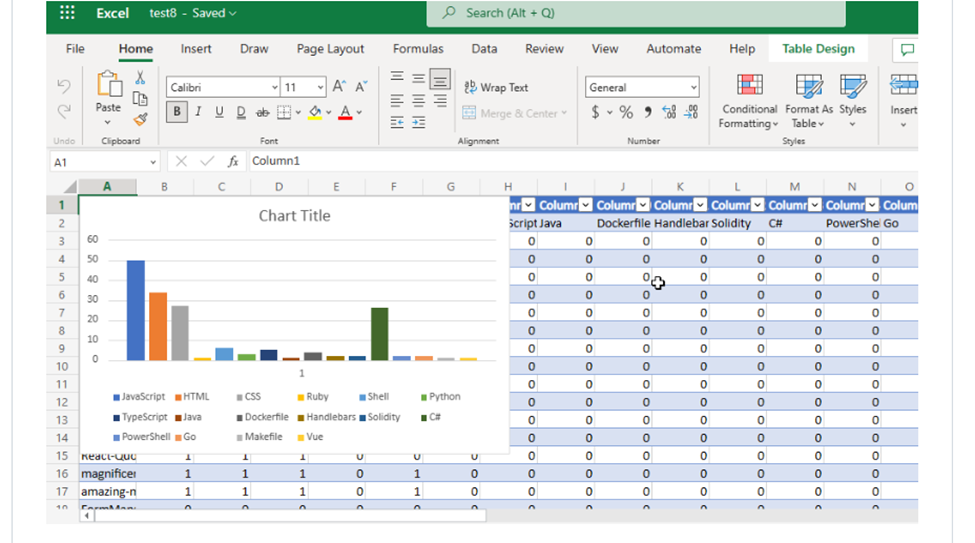

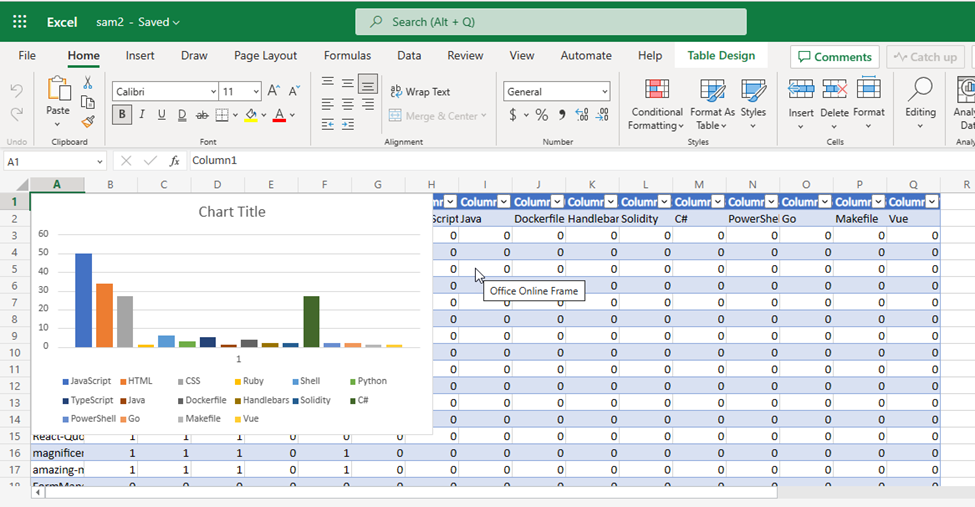

Have you ever thought about going through all your GitHub Repositories, taking note of the languages used, aggregating them, and visualizing it on Excel?

Well, that is what this post is all about, except that you don’t have to do it manually.

With the aid of the Octokit GraphQL library and the Microsoft Graph .NET SDK, you can code up a cool tool that automates this process.

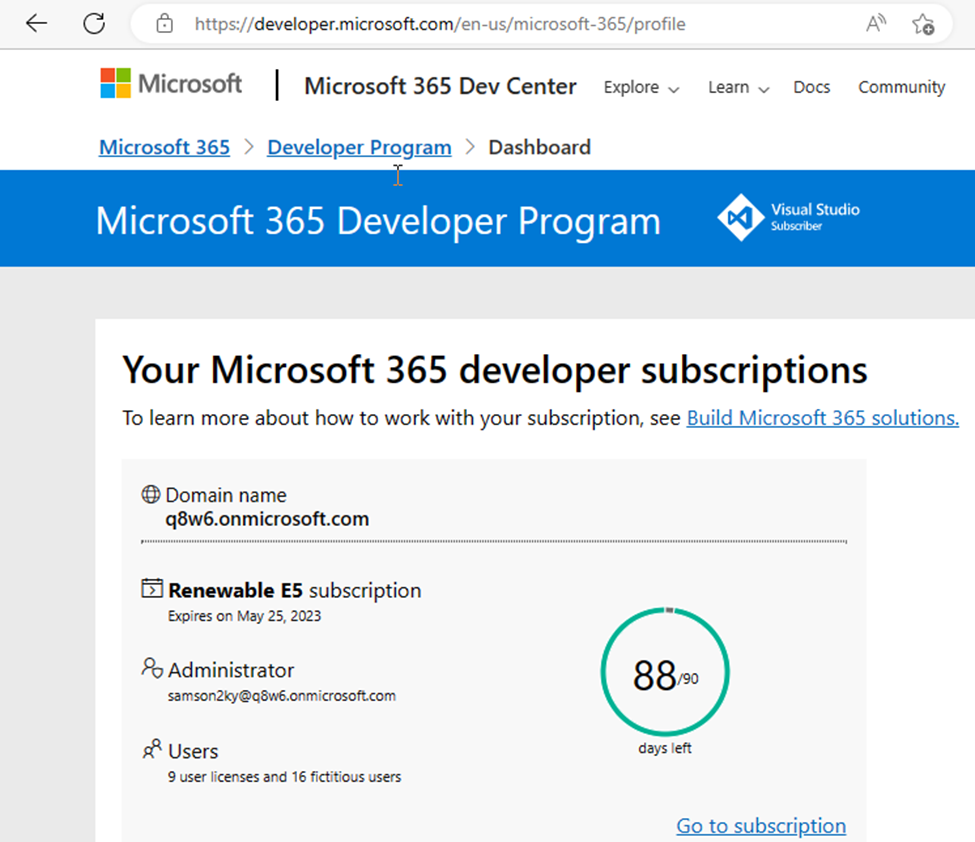

To build this project in a sandboxed environment with the appropriate permissions, I signed up on the Microsoft 365 Dev Center to create an account that I could use to interact with Microsoft 365 products.

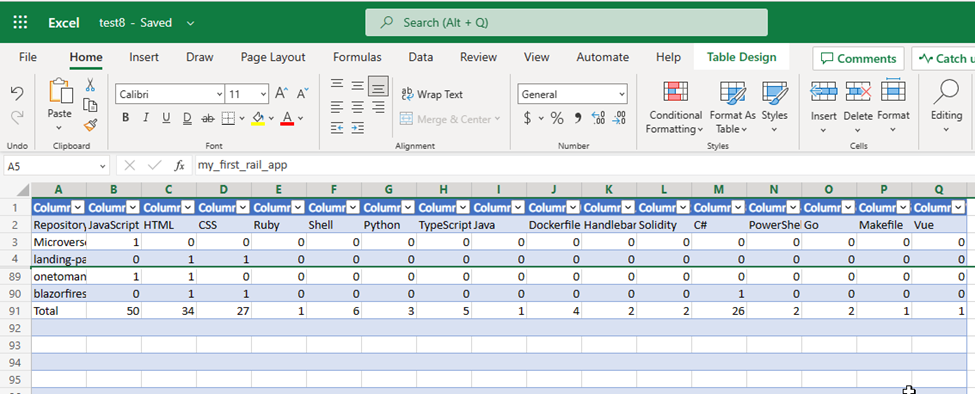

The outcome of the project could be seen below

Steps to Build

Generating an Access Token From GitHub

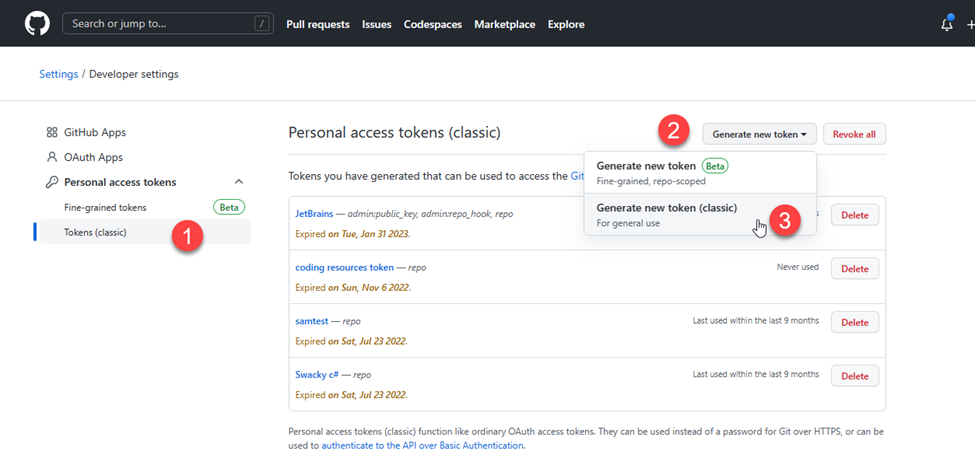

- I went to https://github.com/settings/apps and selected the Tokens (classic) option, because that is the token type the Octokit GraphQL library currently supports.

- I clicked on the Generate new token dropdown.

- I clicked on the Generate new token (classic) option.

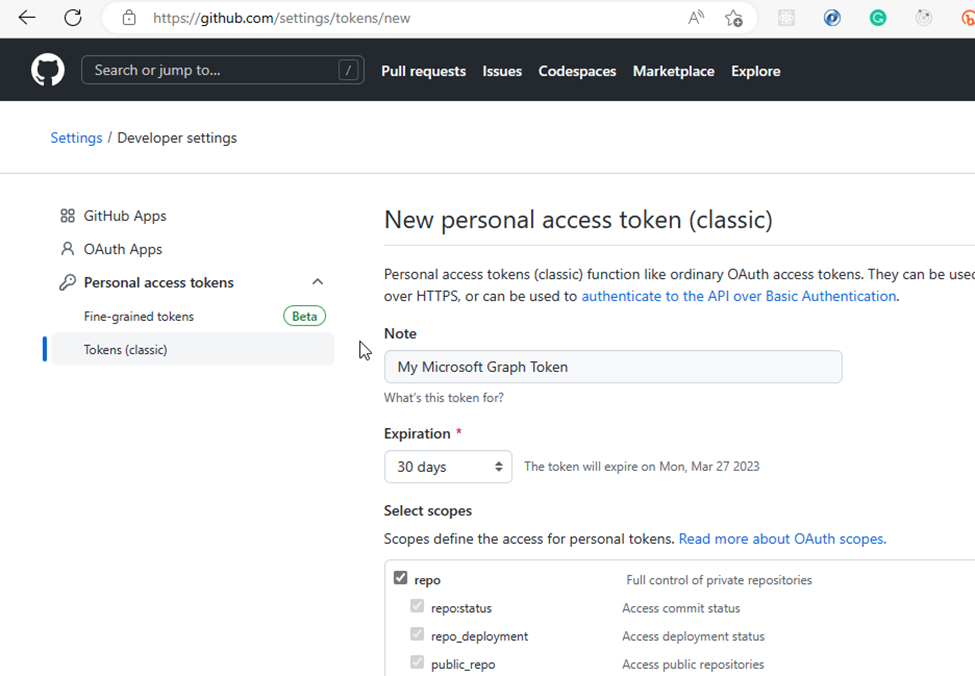

- I filled in the required Note field and selected the repo scope which would enable the token to have access to both my private and public repositories. After that, I scrolled down and selected the Generate token button.

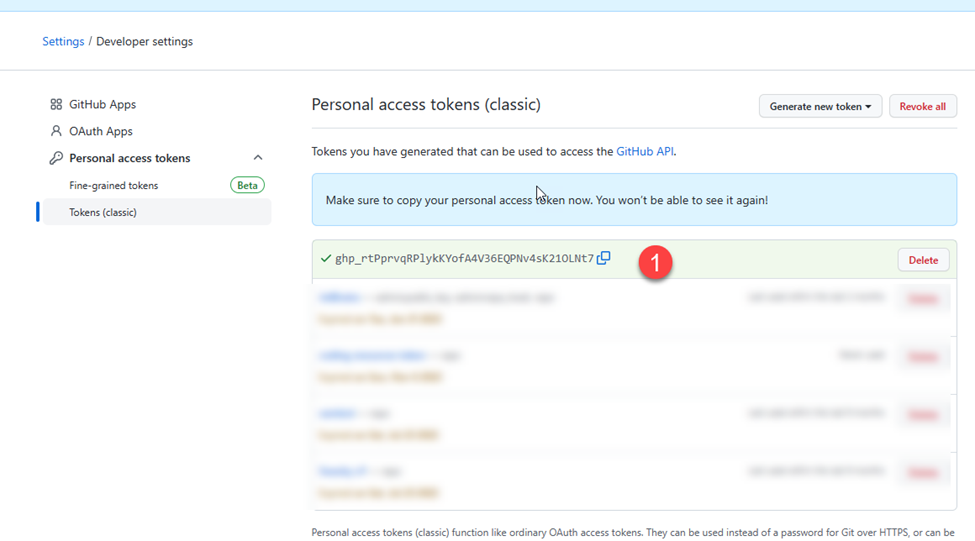

- Next, I copied the generated token to use during my code development.

Creating a Microsoft 365 Developer Account

Registering an Application on Azure

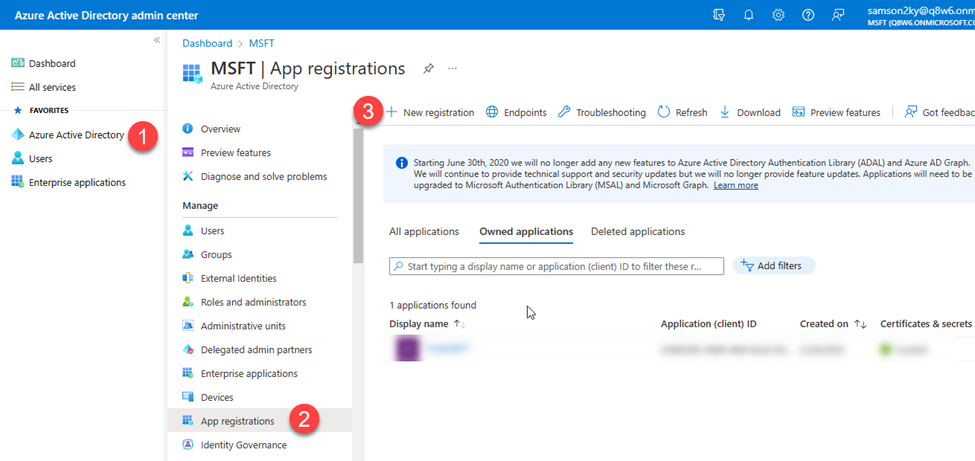

- To interact with Microsoft 365 applications via the graph API, I had to register an app on Azure Active Directory.

- I signed in to https://aad.portal.azure.com/ (Azure Active Directory admin center) using the developer email obtained from the Microsoft 365 developer subscription (i.e. samson2ky@q8w6.onmicrosoft.com).

- I clicked on the Azure Active Directory menu on the left menu pane.

- I clicked on the App registrations option.

- I clicked on the New registration option.

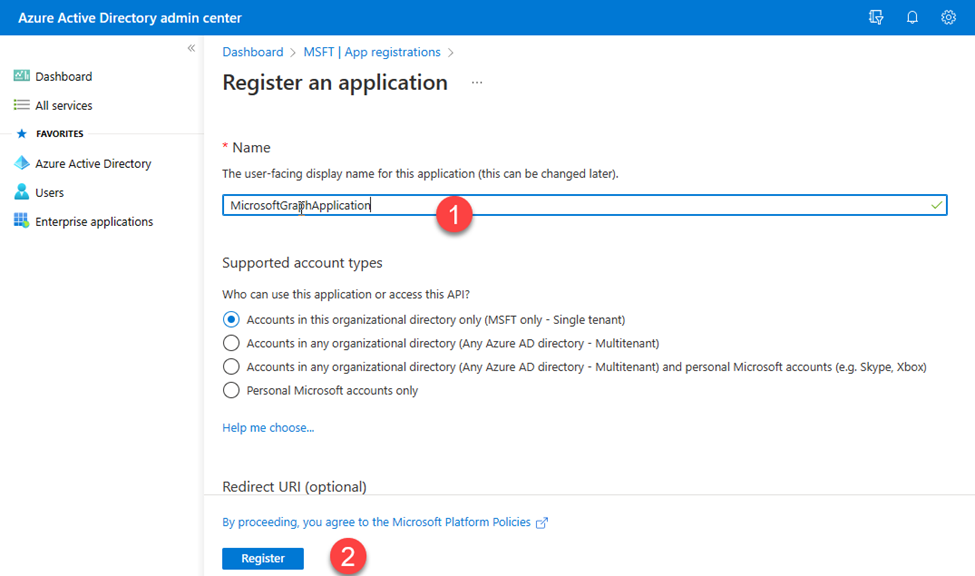

- I filled in the application name and clicked on the Register button.

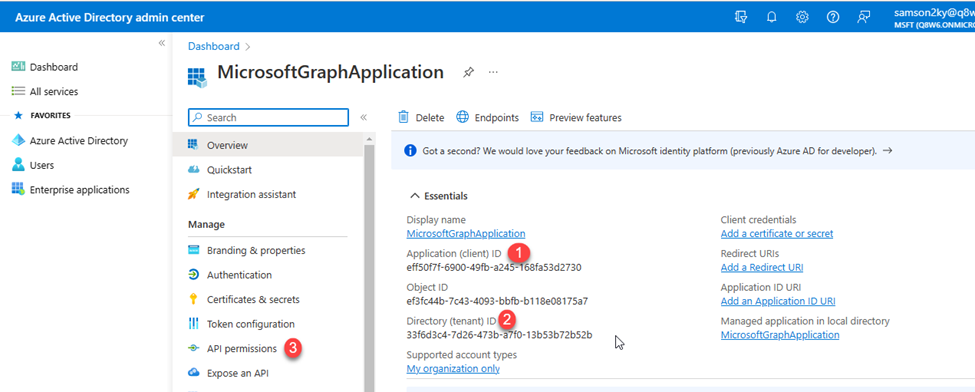

- I copied the Application (client) ID and the Directory (tenant) ID.

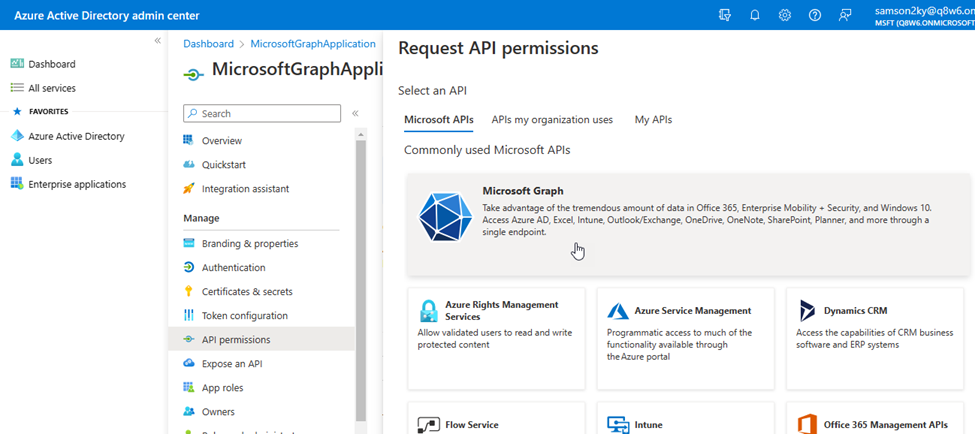

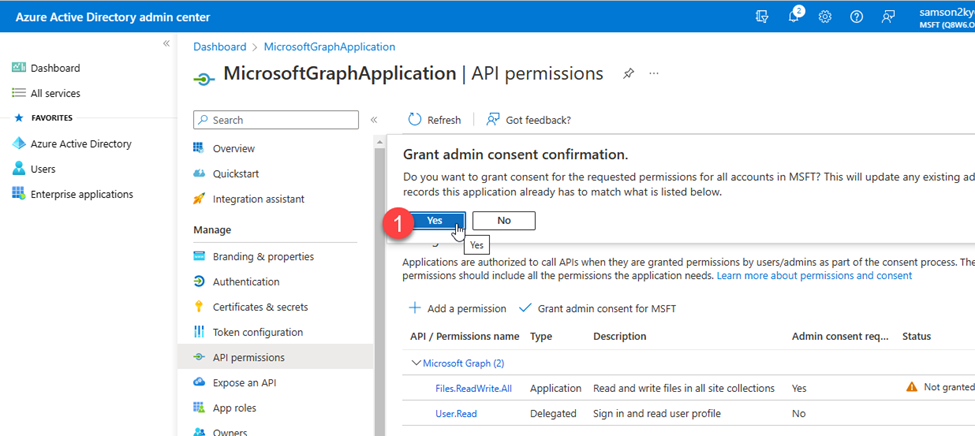

- I clicked on the API permissions menu on the left pane

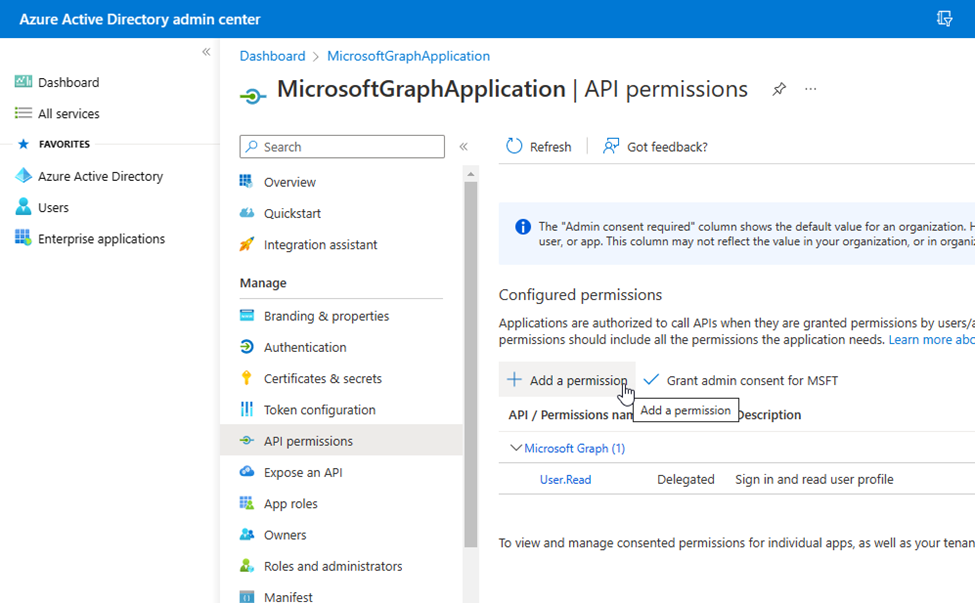

- To grant the registered application access to manipulate files, I had to grant it read and write access to my files.

- I clicked on the Add a permission option.

- I clicked on the Microsoft Graph API.

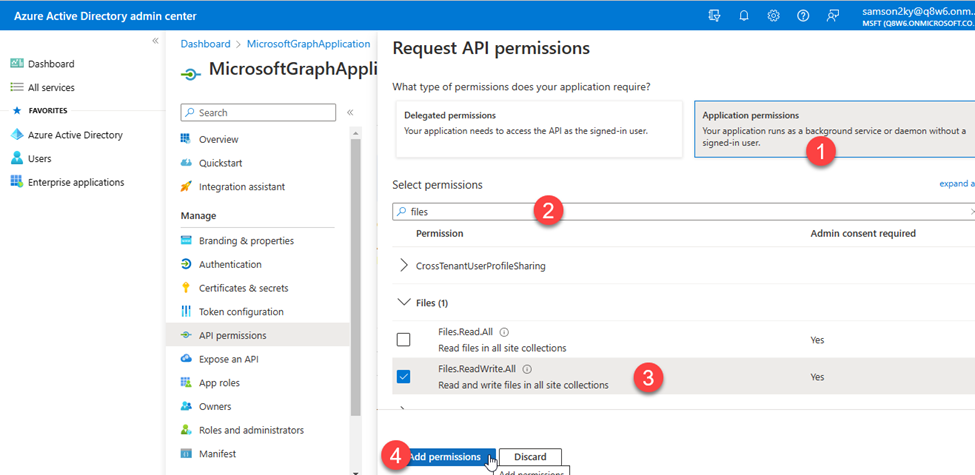

- Since I wanted the application to run in the background without signing in as a user, I selected the Application permissions option, typed files in the available field for easier selection, checked the Files.ReadWrite.All permission and clicked on the Add permissions button.

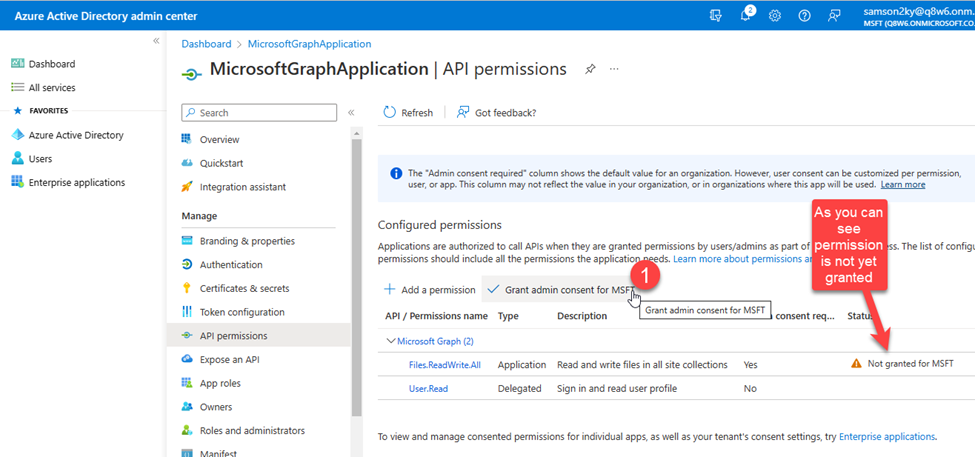

- At this point, I had to grant the application admin consent before it would be permitted to read and write my files.

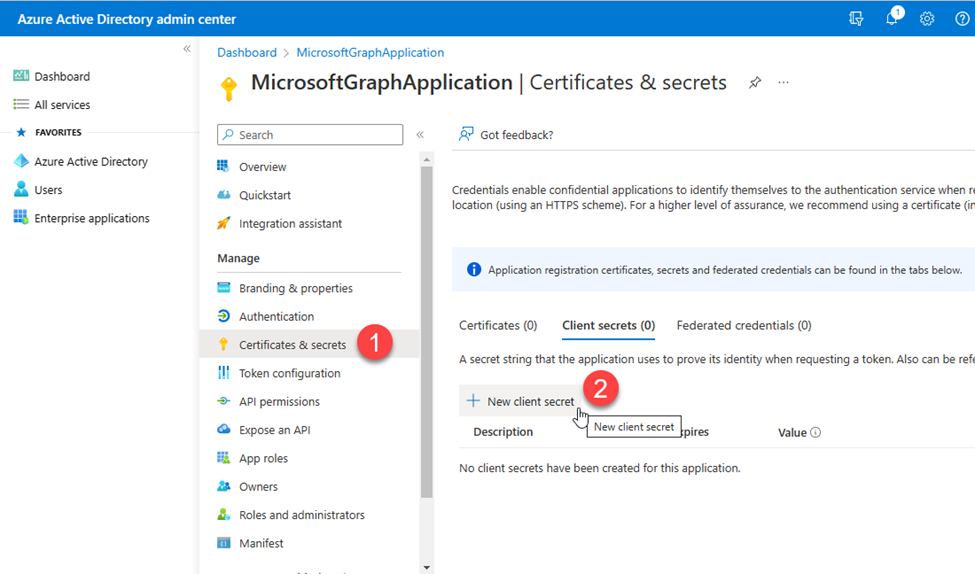

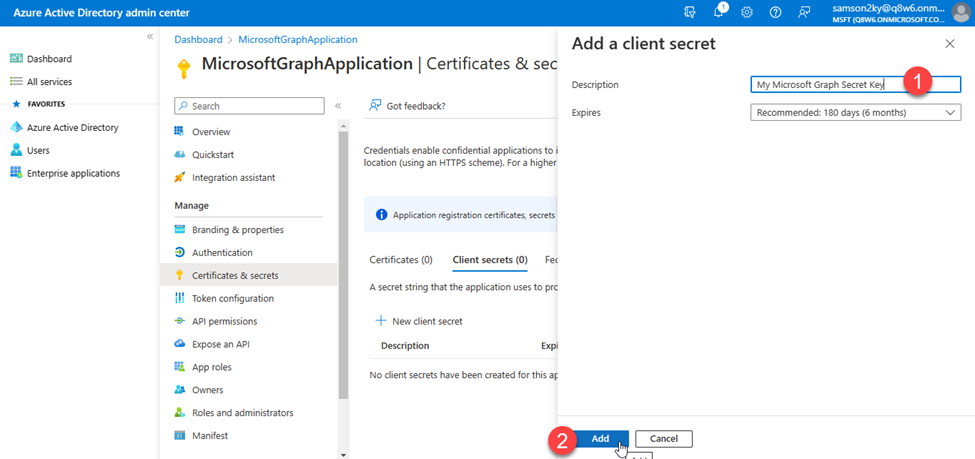

- Next, I generated a client secret by clicking on the Certificates & secrets menu on the left panel and then on the New client secret button.

- I filled in the client secret description and clicked on the Add button.

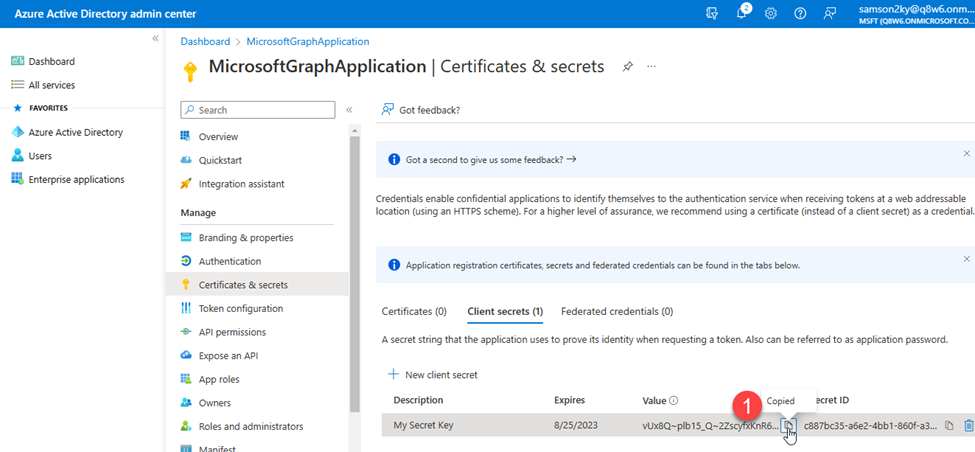

- Finally, I copied the Value of the generated secret key.

Writing the Code

- I created a folder, opened a terminal in the directory and executed the command below to bootstrap my console application.

```shell
dotnet new console
```

- I installed some packages, which you can easily install by adding the item group below to your .csproj file within the Project tag.

- Next, execute the command below to install them:

```shell
dotnet restore
```

- To have my necessary configuration in a single place, I created an appsettings.json file at the root of my project and added the JSON data below:

```json
{
    "AzureClientID": "<your-azure-client-id>",
    "AzureClientSecret": "<your-azure-client-secret>",
    "AzureTenantID": "<your-azure-tenant-id>",
    "GitHubClientSecret": "<your-github-personal-access-token>",
    "NameOfNewFile": "chartFile.xlsx"
}
```

You will need to replace the credentials above with your own.

- In the JSON above, I populated the values of the keys with the secrets and IDs I saved while generating the GitHub token and registering the Azure Active Directory application.

- To be able to bind the values in the JSON above to a C# class, I created a Config.cs file in the root of my project and added the code below:

```csharp
using System.IO;
using Microsoft.Extensions.Configuration;

namespace MicrosoftGraphDotNet
{
    internal class Config
    {
        // Define properties to hold configuration values
        public string? AzureClientId { get; set; }
        public string? AzureClientSecret { get; set; }
        public string? AzureTenantId { get; set; }
        public string? GitHubClientSecret { get; set; }
        public string? NameOfNewFile { get; set; }

        // Constructor to read configuration values from appsettings.json file
        public Config()
        {
            // Create a new configuration builder and add appsettings.json as a configuration source
            IConfiguration config = new ConfigurationBuilder()
                .SetBasePath(Directory.GetCurrentDirectory())
                .AddJsonFile("appsettings.json")
                .Build();

            // Bind configuration values to the properties of this class
            // (keys match case-insensitively, so "AzureClientID" binds to AzureClientId)
            config.Bind(this);
        }
    }
}
```

In the Program.cs file, I imported the namespaces I would need by adding the code below:

```csharp
// Import necessary packages
using System.Text.Json;
using Octokit.GraphQL;
using Octokit.GraphQL.Core;
using Octokit.GraphQL.Model;
using Azure.Identity;
using Microsoft.Graph;
using MicrosoftGraphDotNet;
```

- Next, I instantiated my Config class:

```csharp
// Retrieve the config
var config = new Config();
```

- I used the Octokit GraphQL API to query all my GitHub repositories for their names and the languages used in each one. Then I created a variable to hold the list of distinct languages across all my repositories. After that, I deserialized the response into an array of a custom class I created (the Repository class).

```csharp
// Define the user agent and connection for the GitHub GraphQL API
var userAgent = new ProductHeaderValue("YOUR_PRODUCT_NAME", "1.0.0");
var connection = new Connection(userAgent, config.GitHubClientSecret!);

// Define GraphQL query to fetch repository names and their associated programming languages
var query = new Query()
    .Viewer.Repositories(
        isFork: false,
        affiliations: new Arg<IEnumerable<RepositoryAffiliation?>>(
            new RepositoryAffiliation?[] { RepositoryAffiliation.Owner })
    ).AllPages().Select(repo => new
    {
        repo.Name,
        Languages = repo.Languages(null, null, null, null, null).AllPages().Select(language => language.Name).ToList()
    }).Compile();

// Execute the GraphQL query and deserialize the result into an array of repositories
var result = await connection.Run(query);
var languages = result.SelectMany(repo => repo.Languages).Distinct().ToList();
var repoNameAndLanguages = JsonSerializer.Deserialize<Repository[]>(JsonSerializer.Serialize(result));
```
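Because the GraphQL call needs a token and network access, here is a standard-library-only sketch of what happens to its result. It flattens every repository’s languages into a distinct list and counts how many repositories use each language, which is the figure the "Total" row computes later. The sample repositories are made up, and tuples stand in for the `Repository` class so the snippet runs on its own.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Made-up stand-in for the deserialized GraphQL result: (repository name, languages used)
var repos = new List<(string Name, List<string> Languages)>
{
    ("api-service", new List<string> { "C#", "Dockerfile" }),
    ("portfolio",   new List<string> { "HTML", "CSS", "JavaScript" }),
    ("tools",       new List<string> { "C#", "PowerShell" }),
};

// Distinct languages across all repositories (the column headers of the sheet)
var languages = repos.SelectMany(repo => repo.Languages).Distinct().ToList();

// For each language, count how many repositories use it (the values of the "Total" row)
var repoCountByLanguage = languages.ToDictionary(
    language => language,
    language => repos.Count(repo => repo.Languages.Contains(language)));

foreach (var (language, count) in repoCountByLanguage.OrderByDescending(pair => pair.Value))
    Console.WriteLine($"{language}: {count}");
```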

- Since I am using top-level statements, I made sure the custom class would be the last thing in the Program.cs file, because type declarations must come after top-level statements.

```csharp
// Define a class to hold repository data
class Repository
{
    public string? Name { get; set; }
    public List<string>? Languages { get; set; }
}
```

- Now that I’ve written the code to retrieve my repository data, the next step is to write the code to create an Excel File, Create a table, create rows and columns, populate the rows and columns with data and use that to plot a chart that visualizes the statistics of the top GitHub Programming languages used throughout my repositories.

- I initialized the Microsoft Graph .NET SDK using a ClientSecretCredential:

```csharp
// Define the credentials used to call the Microsoft Graph API
var tokenCred = new ClientSecretCredential(
    config.AzureTenantId!,
    config.AzureClientId!,
    config.AzureClientSecret!);

var graphClient = new GraphServiceClient(tokenCred);
```

- Next, I created an Excel file in OneDrive:

```csharp
// Define the file name and create a new Excel file in OneDrive
var driveItem = new DriveItem
{
    Name = config.NameOfNewFile!,
    File = new Microsoft.Graph.File()
};

var newFile = await graphClient.Drive.Root.Children
    .Request()
    .AddAsync(driveItem);
```

- I created a table that spans my data horizontally (a column for repository names plus one per language) and vertically:

```csharp
// Define the address of the Excel table and create a new table in the file
var address = "Sheet1!A1:" + (char)('A' + languages.Count) + repoNameAndLanguages?.Count();
var hasHeaders = true;

var table = await graphClient.Drive.Items[newFile.Id].Workbook.Tables
    .Add(hasHeaders, address)
    .Request()
    .PostAsync();
```
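The expression (char)('A' + languages.Count) works because column A holds the repository names and each language adds one column to the right. That arithmetic only yields a valid column letter for up to 25 languages; past Z, Excel names columns AA, AB, and so on. If your repositories use more languages than that, a helper like the hypothetical ToColumnName below could replace the cast. It's a sketch of the standard bijective base-26 conversion, and the counts in the usage lines are made up.

```csharp
using System;
using System.Text;

// Hypothetical helper: converts a 1-based column number to an Excel column name
// (1 -> "A", 26 -> "Z", 27 -> "AA"). Excel column names have no zero digit,
// so 1 is subtracted before each division (bijective base 26).
static string ToColumnName(int columnNumber)
{
    if (columnNumber < 1)
        throw new ArgumentOutOfRangeException(nameof(columnNumber), "Column numbers start at 1.");

    var name = new StringBuilder();
    while (columnNumber > 0)
    {
        int remainder = (columnNumber - 1) % 26;   // 0..25 maps to A..Z
        name.Insert(0, (char)('A' + remainder));
        columnNumber = (columnNumber - 1) / 26;
    }
    return name.ToString();
}

// Column A holds repository names, so the last column is number (languageCount + 1).
int languageCount = 30;   // hypothetical: more languages than single letters can cover
int repositoryCount = 12; // hypothetical
var tableAddress = $"Sheet1!A1:{ToColumnName(languageCount + 1)}{repositoryCount}";
Console.WriteLine(tableAddress);
```

Here the sketch prints Sheet1!A1:AE12. For 25 or fewer languages, ToColumnName(languages.Count + 1) returns the same letter as the original cast.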

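- I created a two-dimensional list to represent my data: a header row listing the languages, one row per repository with a 1 or 0 showing whether it uses each language, and a final Total row counting the repositories that use each language.

The code that builds this list is below: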

```csharp
// Define the first row of the Excel table with the column headers
var firstRow = new List<string> { "Repository Name" }.Concat(languages).ToList();

// Convert the repository data into a two-dimensional list
List<List<string>> totalRows = new List<List<string>> { firstRow };

foreach (var value in repoNameAndLanguages!)
{
    var row = new List<string> { value.Name! };
    foreach (var language in languages)
    {
        row.Add(value.Languages!.Contains(language) ? "1" : "0");
    }
    totalRows.Add(row);
}

// Build a row with the total number of repositories that use each language
var languageTotalRow = new List<string>();

// Add "Total" as the first item in the list
languageTotalRow.Add("Total");

// Loop through each programming language in the header row
for (var languageIndex = 1; languageIndex < totalRows[0].Count; languageIndex++)
{
    // Set the total count for this language to 0
    var languageTotal = 0;

    // Loop through each repository in the table
    for (var repoIndex = 1; repoIndex < totalRows.Count; repoIndex++)
    {
        // If the repository uses this language, increment the count
        if (totalRows[repoIndex][languageIndex] == "1")
        {
            languageTotal++;
        }
    }

    // Add the total count for this language to the languageTotalRow list
    languageTotalRow.Add(languageTotal.ToString());
}

// Add the languageTotalRow list to the bottom of the table
totalRows.Add(languageTotalRow);
```
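For comparison, the same matrix and Total row can be built more compactly with LINQ. This is an alternative sketch, not the code used in the project, and it runs on hypothetical sample data so you can inspect the result locally. Counting the cells equal to "1" in each language column (skipping the header row) gives the same totals as the nested loops.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical sample data: each tuple is a repository name and the languages it uses.
var repos = new List<(string Name, List<string> Languages)>
{
    ("api-server", new List<string> { "C#" }),
    ("website", new List<string> { "TypeScript", "CSS" }),
    ("tools", new List<string> { "C#", "TypeScript" }),
};
var languages = repos.SelectMany(r => r.Languages).Distinct().ToList();

// Header row, then one row per repository with a "1"/"0" flag per language
var rows = new List<List<string>> { new List<string> { "Repository Name" }.Concat(languages).ToList() };
rows.AddRange(repos.Select(r =>
    new List<string> { r.Name }
        .Concat(languages.Select(l => r.Languages.Contains(l) ? "1" : "0"))
        .ToList()));

// Total row: for each language column, count the repository rows flagged "1".
// Skip(1) skips the header row, like the loops above that start at index 1.
var totals = new List<string> { "Total" };
totals.AddRange(Enumerable.Range(1, languages.Count)
    .Select(col => rows.Skip(1).Count(row => row[col] == "1").ToString()));
rows.Add(totals);

foreach (var row in rows)
    Console.WriteLine(string.Join(" | ", row));
```

Running it prints the header row, one row of flags per repository, and a Total row; in this sample C# and TypeScript each appear in two repositories and CSS in one.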

- I added the rows of data to the table using the code below:

```csharp
// Create a new WorkbookTableRow object with the totalRows list serialized as a JSON document
var workbookTableRow = new WorkbookTableRow
{
    Values = JsonSerializer.SerializeToDocument(totalRows),
    Index = 0,
};

// Add the new rows to the workbook table
await graphClient.Drive.Items[newFile.Id].Workbook
    .Tables[table.Id].Rows
    .Request()
    .AddAsync(workbookTableRow);
```
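In the code above, Values is assigned a JsonDocument, and the Microsoft Graph reference for adding table rows describes values as a two-dimensional array. The sketch below, on hypothetical data and using only System.Text.Json, shows that SerializeToDocument turns a list of lists into exactly that shape: one inner JSON array per row.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// A tiny stand-in for totalRows (hypothetical values)
var sampleRows = new List<List<string>>
{
    new List<string> { "Repository Name", "Python" },
    new List<string> { "api-server", "1" },
    new List<string> { "Total", "1" },
};

// SerializeToDocument (.NET 6+) produces the same JSON as Serialize, but as a JsonDocument
using JsonDocument values = JsonSerializer.SerializeToDocument(sampleRows);

// Each inner list became one JSON array, i.e. one table row
Console.WriteLine(values.RootElement.GetRawText());
Console.WriteLine($"Rows: {values.RootElement.GetArrayLength()}");
```

It prints [["Repository Name","Python"],["api-server","1"],["Total","1"]] followed by Rows: 3.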

- Finally, I created a clustered column chart (ColumnClustered) from my data and printed the spreadsheet's URL to the console.

```csharp
// Add a new chart to the worksheet with the language totals as data
await graphClient.Drive.Items[newFile.Id].Workbook.Worksheets["Sheet1"].Charts
    .Add("ColumnClustered", "Auto", JsonSerializer.SerializeToDocument(
        $"Sheet1!B2:{(char)('A' + languages.Count)}2, Sheet1!B{repoNameAndLanguages.Count() + 3}:{(char)('A' + languages.Count)}{repoNameAndLanguages.Count() + 3}"))
    .Request()
    .PostAsync();

// Print the URL of the new file to the console
Console.WriteLine(newFile.WebUrl);
```
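The ranges passed to the chart are easier to follow once you see the sheet layout they imply: row 1 is the table's header row, row 2 holds the language names from firstRow (the rows were inserted at index 0, just below the header), rows 3 through n + 2 hold the n repositories, and row n + 3 holds the totals. The hypothetical ChartSource helper below rebuilds the same string for given counts so you can check which cells the chart reads; like the original expression, it assumes no more than 25 languages.

```csharp
using System;

// Hypothetical helper that rebuilds the chart's source-data string.
// Assumed layout: row 1 = table header, row 2 = language names,
// rows 3..(n + 2) = repositories, row n + 3 = totals.
static string ChartSource(int languageCount, int repositoryCount)
{
    char lastColumn = (char)('A' + languageCount); // single letters only: up to 25 languages
    int totalRow = repositoryCount + 3;
    return $"Sheet1!B2:{lastColumn}2, Sheet1!B{totalRow}:{lastColumn}{totalRow}";
}

Console.WriteLine(ChartSource(languageCount: 3, repositoryCount: 4));
```

For 3 languages and 4 repositories it returns Sheet1!B2:D2, Sheet1!B7:D7: the language names and their totals, which the chart plots as categories and values.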

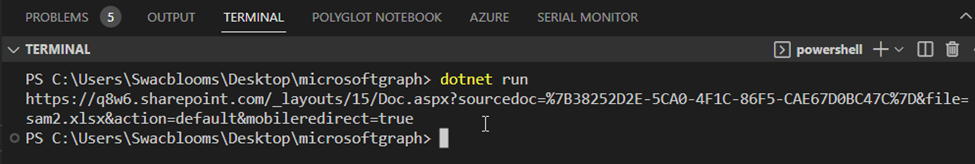

- After running dotnet run, I got the URL of the Excel file as output.

- When I clicked the link, I could see the chart visualizing the languages used across my GitHub repositories.

And that’s the end of this article. I hope you enjoyed it and saw how I used the Microsoft Graph .NET SDK to automate this process.

To learn more about the Microsoft Graph API and SDKs:

- Microsoft Graph: https://developer.microsoft.com/graph
- Develop apps with the Microsoft Graph Toolkit – Training
- Hack Together: Microsoft Graph and .NET

Hack Together: Microsoft Graph and .NET is a hackathon for beginners to get started building scenario-based apps using .NET and Microsoft Graph. In this hackathon, you will learn how to build apps with Microsoft Graph and develop apps based on the given Top Microsoft Graph Scenarios, for a chance to win exciting prizes while meeting Microsoft Graph Product Group Leaders, Cloud Advocates, MVPs and Student Ambassadors. The hackathon starts on March 1st and ends on March 15th. Participants are encouraged to follow the Hack Together Roadmap for a successful hackathon.

Demo/Sample Code

You can access the code for this project at https://github.com/sammychinedu2ky/MicrosoftGraphDotNet
