by Contributed | Apr 22, 2023 | Technology

This article is contributed. See the original author and article here.

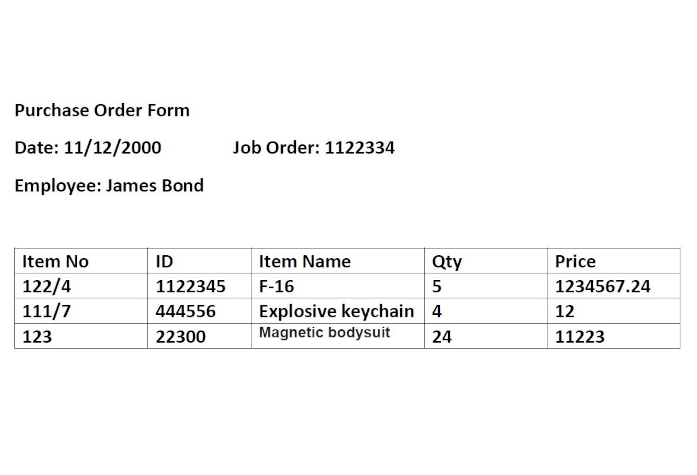

Documents can contain table data. For example, earnings reports, purchase order forms, and technical and operational manuals often carry critical data in tables. You may need to extract this table data into Excel for scenarios such as the following:

- Extract each table into a specific worksheet in Excel.

- Extract the data from all the similar tables and aggregate that data into a single table.

Here, we present two ways to generate Excel from a document’s table data:

- Azure Function (HTTP Trigger based): This function takes a document and generates an Excel file with the table data in the document.

- Apache Spark in Azure Synapse Analytics (in case you need to process large volumes of documents).

The Azure function extracts table data from the document using Form Recognizer’s “General Document” model and generates an Excel file with all the extracted tables. The following is the expected behavior:

- Each table on a page gets extracted and stored to a sheet in the Excel document. The sheet name corresponds to the page number in the document.

- Sometimes, there are key-value pairs on the page that need to be captured in the table. If you need that feature, leverage the add_key_value_pairs flag in the function.

- Form Recognizer extracts column and row spans, and we take advantage of this to present the data as it is represented in the actual table.
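The span handling described above can be sketched in a few lines. This is a minimal illustration, not the code from the repository: the `Cell` class and its field names are hypothetical stand-ins for whatever the extraction step returns, and the helper names are invented for this example. It places each cell's text at its top-left position and reports which ranges would need to be merged in the worksheet.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Cell:
    """Hypothetical stand-in for one extracted table cell (0-based indices)."""
    row: int
    col: int
    text: str
    row_span: int = 1
    col_span: int = 1


def build_grid(cells: List[Cell], n_rows: int, n_cols: int) -> List[List[str]]:
    """Write each cell's text at its top-left slot; slots covered by a span stay empty."""
    grid = [["" for _ in range(n_cols)] for _ in range(n_rows)]
    for cell in cells:
        grid[cell.row][cell.col] = cell.text
    return grid


def merged_ranges(cells: List[Cell]) -> List[Tuple[int, int, int, int]]:
    """Return (first_row, first_col, last_row, last_col) for every cell spanning more than one slot."""
    ranges = []
    for c in cells:
        if c.row_span > 1 or c.col_span > 1:
            ranges.append((c.row, c.col, c.row + c.row_span - 1, c.col + c.col_span - 1))
    return ranges


# Example: a header where "Region" spans two rows and "Sales" spans two columns.
cells = [
    Cell(0, 0, "Region", row_span=2),
    Cell(0, 1, "Sales", col_span=2),
    Cell(1, 1, "Q1"),
    Cell(1, 2, "Q2"),
]
grid = build_grid(cells, n_rows=2, n_cols=3)
ranges = merged_ranges(cells)
```

The ranges returned by `merged_ranges` could then be passed to whichever Excel library you use to merge the corresponding worksheet cells.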

Following are two sample extractions.

The top Excel file includes key-value pairs added to the table. The bottom one does not include the key-value pairs.

The Excel shown above is the extraction of table data from an earnings report. The earnings report file had multiple pages with tables, and the fourth page had two tables.

Solution

The Azure Function and the Synapse Spark notebook are available in this Git repository.

- Deployment Steps

- Sample Data: The repository has two sample documents to work with:

- PurchaseOrderForm.pdf. This document has key-value pairs for each table that make sense to capture as part of the table.

- earningreport.pdf. This document does not have key-value pairs that make sense to capture with each table.

- Note on the Excel output:

- If there is a page in the main document with no tables, no sheet will be created for that page.

- The code has been updated to remove the extracted text from check boxes (“:selected:”, “:unselected:”) in the table.

- If a cell does not have any alphanumeric text, it will be skipped. Please update the code to reflect different behavior.
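The two cell-level rules above (dropping check-box markers and skipping cells with no alphanumeric text) might look roughly like the following. This is a sketch under stated assumptions rather than the repository's code: the function name `clean_cell_text` is hypothetical, and "alphanumeric" is assumed here to mean ASCII letters and digits.

```python
import re
from typing import Optional

# Matches the check-box markers ":selected:" and ":unselected:".
_SELECTION_MARKS = re.compile(r":(?:un)?selected:")
# Assumption: "alphanumeric" means at least one ASCII letter or digit.
_HAS_ALNUM = re.compile(r"[A-Za-z0-9]")


def clean_cell_text(text: str) -> Optional[str]:
    """Strip check-box markers; return None when the cell should be skipped."""
    cleaned = _SELECTION_MARKS.sub("", text)
    cleaned = " ".join(cleaned.split())  # collapse leftover whitespace
    if not _HAS_ALNUM.search(cleaned):
        return None
    return cleaned
```

To keep cells that hold only punctuation or symbols, you would relax the `_HAS_ALNUM` check or return the cleaned string unconditionally.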

How to leverage this Solution

- Use this solution to generate an Excel file as mentioned above.

- Integrate this with Power Automate so that end-users can use this seamlessly from O365 (email, SharePoint, or Teams).

- Customize this to generate an aggregated table.
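As one possible starting point for the aggregation idea, the sketch below groups extracted tables by their header row and stacks the data rows of matching tables. It assumes each table is a list of rows whose first row is the header, and that two tables are "similar" when their headers match after trimming and lower-casing. Both assumptions, and the function name `aggregate_tables`, are illustrative and do not come from the repository.

```python
from typing import Dict, List, Tuple

Table = List[List[str]]


def aggregate_tables(tables: List[Table]) -> List[Table]:
    """Combine tables that share a header into one table per distinct header."""
    grouped: Dict[Tuple[str, ...], Table] = {}
    for table in tables:
        if not table:
            continue  # nothing to aggregate
        header, *rows = table
        # Normalize the header so "Item " and "item" count as the same column.
        key = tuple(h.strip().lower() for h in header)
        if key not in grouped:
            grouped[key] = [list(header)]  # keep the first header as written
        grouped[key].extend(list(r) for r in rows)
    return list(grouped.values())


t1 = [["Item", "Qty"], ["Pen", "2"]]
t2 = [["item ", "qty"], ["Ink", "1"]]
t3 = [["Name"], ["A"]]
combined = aggregate_tables([t1, t2, t3])
```

Real documents often need looser matching (for example, tolerating reordered or renamed columns), so treat the header comparison as the part most likely to need customization.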

Contributors: Ben Ufuk Tezcan, Vinod Kurpad, Matt Nelson, Nicolas Uthurriague, Sreedhar Mallangi

by Contributed | Apr 21, 2023 | Technology

Disclaimer

This document is not meant to replace any official documentation, including those found at docs.microsoft.com. Those documents are continually updated and maintained by Microsoft Corporation. If there is a discrepancy between this document and what you find in the Compliance User Interface (UI) or inside of a reference in docs.microsoft.com, you should always defer to that official documentation and contact your Microsoft Account team as needed. Links to the docs.microsoft.com data will be referenced both in the document steps as well as in the appendix.

All the following steps should be done with test data, and where possible, testing should be performed in a test environment. Testing should never be performed against production data.

Target Audience

Microsoft customers who want to better understand Microsoft Purview.

Document Scope

The purpose of this document (and series) is to provide insights into various use cases, announcements, customer-driven questions, etc.

Topics for this blog entry

Here are the topics covered in this issue of the blog:

- Sensitivity Labels relating to SharePoint Lists

- Sensitivity Label Encryption versus other types of Microsoft tenant encryption

- How Sensitivity Labels conflicts are resolved

- How to apply Sensitivity Labels to existing SharePoint Sites

- Where can I find information on how Sensitivity Labels are applied to data within a SharePoint site (i.e. File label inheritance from the Site label)

Out-of-Scope

This blog series and entry are meant only to provide information. For your specific use cases or needs, it is recommended that you contact your Microsoft Account Team to find other possible solutions.

Sensitivity labels and SharePoint Sites – Assorted topics

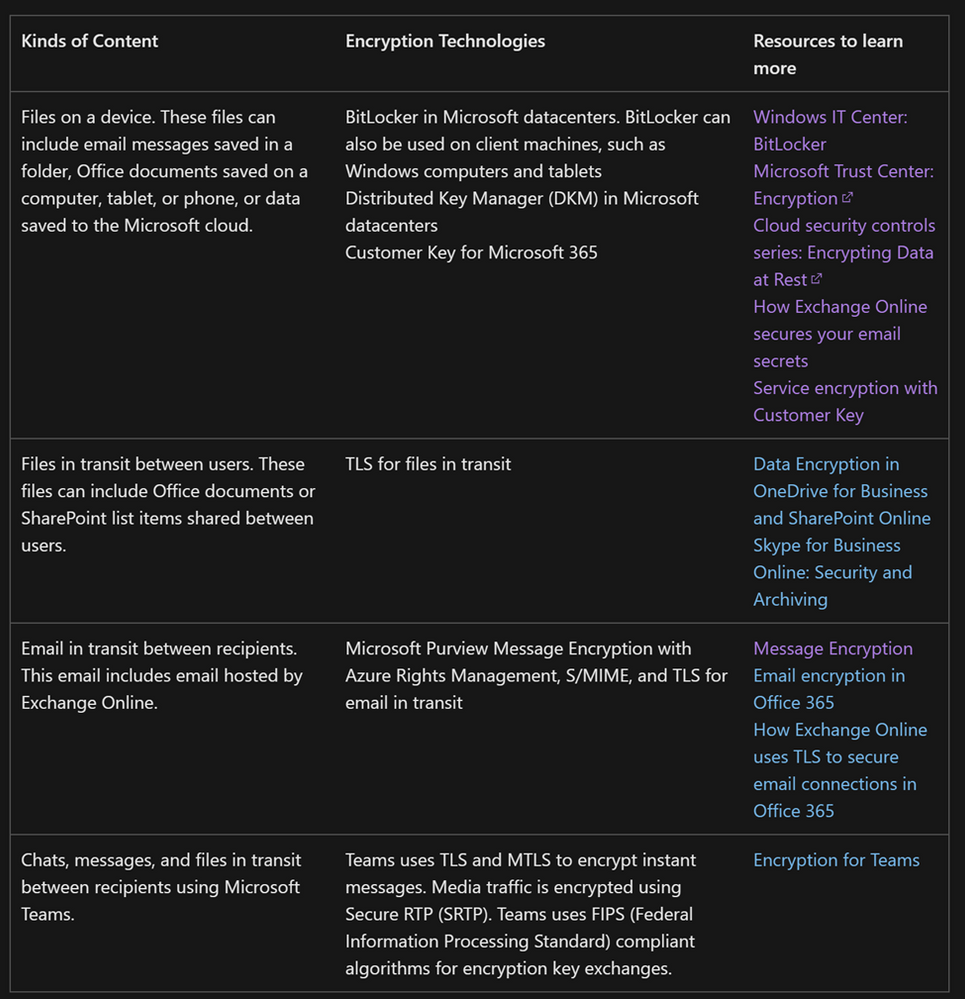

Sensitivity Label Encryption versus other types of Microsoft tenant encryption

Question #1

How does the encryption of Sensitivity Labels compare to the encryption used by BitLocker?

Answer #1

The following table breaks this down in detail and is taken from the following Microsoft Link.

Encryption in Microsoft 365 – Microsoft Purview (compliance) | Microsoft Learn

Sensitivity Labels relating to SharePoint Lists

Question #2

Can you apply Sensitivity Labels to SharePoint Lists?

Answer #2

The simple answer is NO while in the list, but YES once the list is exported to a file format.

Data in a SharePoint List is stored within a SQL table in SharePoint. At the time of writing, you cannot apply a Sensitivity Label to SharePoint Online tables, including SharePoint Lists.

SharePoint Lists allow the data in the list to be exported to a file format. An automatic sensitivity label policy can apply a label to those file formats. Here is an example of those export options.

How to apply Sensitivity Labels to existing SharePoint Sites

Question #3

Can you apply Sensitivity Labels to existing SharePoint sites? If so, can this be automated (e.g., with PowerShell)?

Answer #3

Yes. You can leverage PowerShell to apply sensitivity labels to multiple SharePoint sites. The link below explains how to accomplish this.

Look for these two sections in the link below for details:

- Use PowerShell to apply a sensitivity label to multiple sites

- View and manage sensitivity labels in the SharePoint admin center

Use sensitivity labels with Microsoft Teams, Microsoft 365 Groups, and SharePoint sites – Microsoft Purview (compliance) | Microsoft Learn

How Sensitivity Labels conflicts are resolved

Question #4

If an existing file already has a Sensitivity Label that is stricter than the Sensitivity Label being inherited from the SharePoint Site label, which Sensitivity Label is applied to the file?

Answer #4

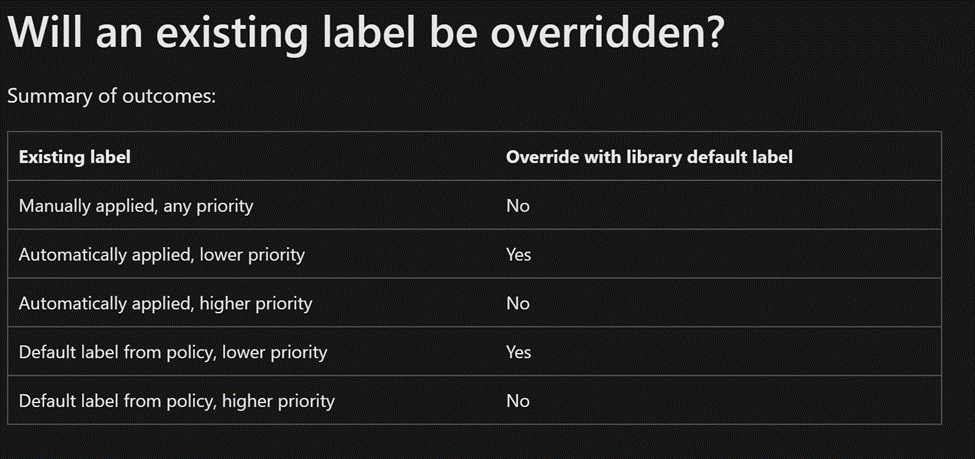

Please refer to the link and table below for how Sensitivity Label conflicts are handled. Notice that a higher-priority label or a user-applied label would not be overridden by a site label or an automatic labeling policy.

Configure a default sensitivity label for a SharePoint document library – Microsoft Purview (compliance) | Microsoft Learn

File label inheritance from the Site label

Question #5

Where can you find the documentation on SharePoint Site labels and how label inheritance applies to files in that SharePoint site?

Answer #5

Here are two links that can help you with Sensitivity Labels and how they relate to SharePoint sites:

When it comes to default Sensitivity Labels for SharePoint sites and libraries (what I have called “label inheritance” above), this link is of use.

“When SharePoint is enabled for sensitivity labels, you can configure a default label for document libraries. Then, any new files uploaded to that library, or existing files edited in the library will have that label applied if they don’t already have a sensitivity label, or they have a sensitivity label but with lower priority.

For example, you configure the Confidential label as the default sensitivity label for a document library. A user who has General as their policy default label saves a new file in that library. SharePoint will label this file as Confidential because of that label’s higher priority.”
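The rule quoted above, combined with the note under Answer #4 that a user-applied label is not overridden, can be expressed as a small decision function. This is a simplified model for reasoning about outcomes, not how SharePoint implements it: the `Label` class, the `resolve_library_default` name, and the convention that a larger number means higher priority are all assumptions made for this sketch. Always defer to the official documentation for the authoritative behavior.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Label:
    name: str
    priority: int  # assumption for this sketch: larger number = higher priority


def resolve_library_default(
    existing: Optional[Label],
    library_default: Label,
    user_applied: bool = False,
) -> Label:
    """Return the label a file ends up with under the simplified rule."""
    if existing is None:
        return library_default  # unlabeled files take the library default
    if user_applied:
        return existing  # a user-applied label is not overridden
    if existing.priority < library_default.priority:
        return library_default  # lower-priority label is replaced
    return existing  # equal or higher priority is kept


general = Label("General", 1)
confidential = Label("Confidential", 2)
highly_confidential = Label("Highly Confidential", 3)

# The example from the quoted text: a General file saved in a Confidential library.
result = resolve_library_default(general, confidential)
```

In this model the file from Question #4, which carries a stricter label than the library default, keeps its existing label.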

Appendix and Links

by Contributed | Apr 21, 2023 | Technology

Today, we worked on a service request in which our customer got the following error message: Managed Instance needs permissions to access Azure Active Directory. You need to be a ‘Company Administrator’ or a ‘Global Administrator’ to grant ‘Read’ permissions to the Managed Instance.

Azure SQL Managed Instance needs permissions to read Azure AD to successfully accomplish tasks such as authentication of users through security group membership or creation of new users. For this to work, we need to grant the Azure SQL Managed Instance permission to read Azure AD.

We can do this using the Azure portal or PowerShell. This operation can only be executed by a Global Administrator or a Privileged Role Administrator in Azure AD.

You can assign the Directory Readers role to a group in Azure AD. The group owners can then add the managed instance identity as a member of this group, which would allow you to provision an Azure AD admin for the SQL Managed Instance. That means you need to have Global Administrator or Privileged Role Administrator access to provide the read permission to the SQL MI.

Directory Reader role

In order to assign the Directory Readers role to an identity, a user with Global Administrator or Privileged Role Administrator permissions is needed. Users who often manage or deploy SQL Database, SQL Managed Instance, or Azure Synapse may not have access to these highly privileged roles. This can often cause complications for users that create unplanned Azure SQL resources, or need help from highly privileged role members that are often inaccessible in large organizations.

For SQL Managed Instance, the Directory Readers role must be assigned to the managed instance identity before you can set up an Azure AD admin for the managed instance.

Assigning the Directory Readers role to the server identity isn’t required for SQL Database or Azure Synapse when setting up an Azure AD admin for the logical server. However, to enable an Azure AD object creation in SQL Database or Azure Synapse on behalf of an Azure AD application, the Directory Readers role is required. If the role isn’t assigned to the SQL logical server identity, creating Azure AD users in Azure SQL will fail. For more information, see Azure Active Directory service principal with Azure SQL.

Supporting article: https://learn.microsoft.com/en-us/azure/azure-sql/database/authentication-aad-directory-readers-role?view=azuresql#assigning-the-directory-readers-role

by Contributed | Apr 20, 2023 | Technology

Many database administrators ask questions like “What rows have changed for a table?” and “How has that row changed in that table?”. Change Tracking is a lightweight solution built right into the SQL Database that gives you the ability to query for data that has changed over time. In this episode of Data Exposed, join Anna Hoffman and Brian Spendolini as we explore this powerful feature of the database. Learn how to enable Change Tracking in your database, what the best use cases are, and how it can save you massive amounts of time and effort over developing custom, one-off solutions.

Watch on Data Exposed

Resources:

View/share our latest episodes on Microsoft Learn and YouTube!

by Contributed | Apr 19, 2023 | Technology

As mentioned in previous posts on 11/11/2021 and 11/15/2022, Office 2013 reached the end of the Extended Support lifecycle on April 11, 2023. Continuing to use Office 2013 could increase your organization’s exposure to security risks, impact your ability to meet compliance obligations, and/or affect end user productivity.

Additionally, support for other Microsoft Office products is also coming to an end in the coming months. Please review the following list and act before the end of the product’s lifecycle:

- Office 2019 for Mac reaches end of support on October 10, 2023. This means Office 2019 for Mac will no longer receive security updates, bug fixes, technical support, or online technical content support.

- Connecting Office 2016 and Office 2019 to Microsoft 365 reaches end of support on October 10, 2023. After this date, we won’t block these Office versions from connecting to Microsoft 365 services if they are kept up to date. However, improvements to Microsoft 365 services will no longer be tested with these Office versions, so users could experience performance or reliability issues. Read more about this in our Microsoft Learn article.

If you’re running a version affected by any of the end of support dates, we recommend upgrading to Microsoft 365 E3, which comes with Microsoft 365 Apps – the apps you’re familiar with (e.g., Word, Excel, PowerPoint, Outlook, etc.). It falls under the Modern Lifecycle Policy, so it’s continuously supported.

Here are some resources to help plan the move:

Please visit our Office End of Support community for more information and resources about end of support for Office.

Thanks again for being a Microsoft customer!
