by Contributed | Jan 18, 2024 | Technology

This article is contributed. See the original author and article here.

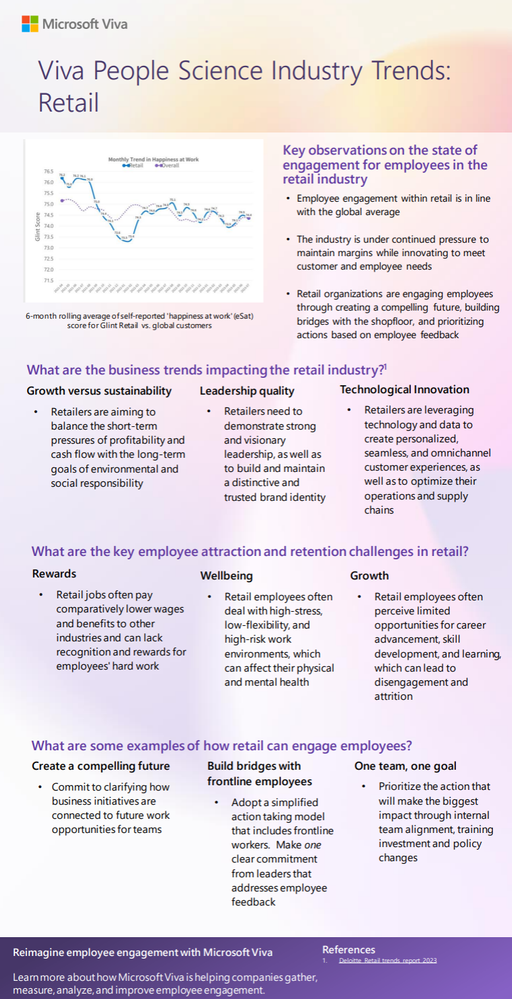

Welcome to the fourth edition of Microsoft Viva People Science industry trends, where the Viva People Science team share learnings from customers across a range of different industries. Drawing on data spanning over 150 countries, 10 million employees, and millions of survey comments, we uncover the unique employee experience challenges and best practices for each industry.

In this blog, @Jamie_Cunningham and I share our insights on the state of employee engagement in the retail industry. You can also access the recording from our recent live webinar, where we discussed this topic in depth.

Let’s first look at what’s impacting the retail industry today. In summary, we are hearing about market volatility, supply chain constraints, changing consumer behavior, technological advancements, labor pressures, and rising costs. According to the Deloitte Retail Trends 2023 report, the top-of-mind issues for retail leaders are:

- Growth versus sustainability: Retailers need to balance the short-term pressures of profitability and cash flow with the long-term goals of environmental and social responsibility.

- Consumer confidence and retail sales: Retailers need to cope with the uncertain and volatile consumer demand, which is influenced by factors such as inflation, health concerns, and government policies.

- Leadership quality and brand strength: Retailers need to demonstrate strong and visionary leadership, as well as to build and maintain a distinctive and trusted brand identity.

- Technological innovation: Retailers need to leverage technology and data to create personalized, seamless, and omnichannel customer experiences, as well as to optimize their operations and supply chains.

These issues require retailers to be agile, resilient, and innovative in their employee experience strategies and execution. The retail industry also faces some specific challenges in attracting and retaining talent, such as:

- Rewards: Retail jobs often pay lower wages and offer fewer benefits than jobs in other industries, and can lack recognition and rewards for employees’ hard work.

- Wellbeing: Retail employees often deal with high-stress, low-flexibility, and high-risk work environments, which can affect their physical and mental health.

- Growth: Retail employees often perceive limited opportunities for career advancement, skill development, and learning, which can lead to disengagement and attrition.

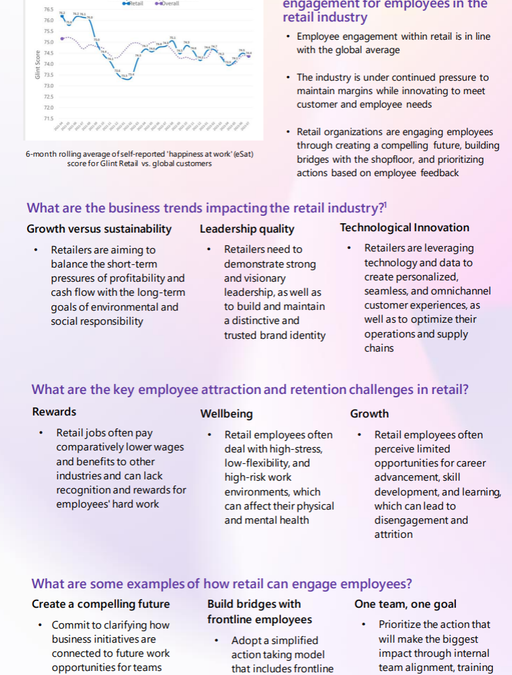

According to Glint benchmark data (2023), employee engagement in retail declined by two points between 2021 and 2022. It’s clear that retailers need to invest in improving the employee experience, especially for frontline workers, who are the face of the brand and the key to customer loyalty. So, how do they do this? Here are three examples of how retailers we’ve worked with have addressed the needs of their employees with the support of Microsoft Viva:

1. Create a compelling future

We worked with the leadership team of a retailer based in MENA (Middle East and North Africa) to recognize the connection between how effectively they communicated the organization’s future direction and the degree to which employees saw a future for themselves in the organization. The team committed to clarifying how the business initiatives they were rolling out connected to future work opportunities for their teams.

2. Build bridges with frontline employees

According to the Microsoft Work Trend Index special report (2022), sixty-three percent of all frontline workers say messages from leadership don’t make it to them. A global fashion brand recognised after several years of employee listening that the actions being taken by leadership were not being felt on the shop floor. We worked with them to adopt a simplified action-taking model with one clear commitment from leaders, which was efficient and effective in terms of communication and adoption. They also increased their investment in manager enablement to support better conversations within teams when results from Viva Glint were released. This simplified approach led to improved perceptions of the listening process, and greater clarity at all levels on where to focus for a positive employee experience.

3. One internal team, one goal

Through an Executive Consultation with leaders of a UK retailer, wellbeing was identified as a risk for the business that, unless addressed, would severely impact their priorities. With that in mind, the team created internal alignment to prioritise wellbeing through both training investment and policy changes, resulting in a thirteen-point improvement in the wellbeing score year over year.

Conclusions

To succeed in this dynamic and competitive market, retailers need to focus on their most valuable asset: their employees. By investing in the employee experience, especially for the frontline workers, retailers can boost their employee engagement, customer satisfaction, and business performance.

A downloadable one-page summary is also available with this blog for you to share with your colleagues and leaders.

Leave a comment below to let us know if this resonates with what you are seeing with your employees in this industry.

References:

Deloitte retail trends report (2023)

Microsoft Work Trend Index special report (2022)

by Contributed | Jan 17, 2024 | Technology

Practice mode is now available in Forms. It’s tailored for EDU users, particularly students, offering a new way to review, test, and reinforce their knowledge. Let’s look at practice mode in more detail. You can also try it from this template. (Note: Practice mode is only available for quizzes.)

Practice mode

Instant feedback after answering each question

In practice mode, questions will be shown one at a time. Students will receive immediate feedback after submitting each question, indicating whether their answer is right or wrong.

Instant feedback after answering each question

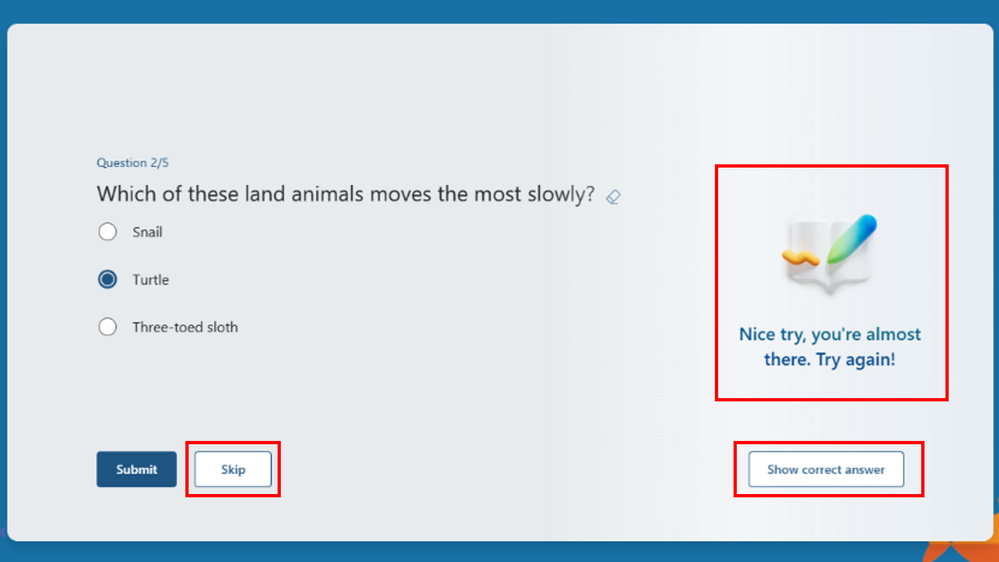

Try multiple times for the correct answer

Students can reconsider and retry a question multiple times if they answer it incorrectly, which supports immediate re-learning and strengthens their grasp of the material.

Try multiple times to get the correct answer

Encouragement and autonomy during practice

Students will receive an encouraging message after answering a question, whether their answer is correct or not, giving them a positive practice experience. They also have the freedom to learn at their own pace. If they answer a question incorrectly, they can choose to retry, view the correct answer, or skip the question.

Encouragement message and other options

Recap questions

After completing the practice, students can review all the questions along with the correct answers, offering a comprehensive overview to assess their overall performance.

Recap questions

Enter practice mode

Practice mode is only available for quizzes. You can turn it on from the “…” icon in the upper-right corner. Once you distribute the quiz, recipients will automatically enter practice mode. Try out practice mode from this template now!

Enter practice mode

by Contributed | Jan 16, 2024 | Technology

Frontline managers have gained greater control, at the team level, over the capabilities offered in Microsoft Shifts.

With the latest releases now available on the Shifts settings page, we have made updates to improve the end-user experience for frontline managers and workers. The updates are as follows:

Open shifts

Previously, when the Open Shifts setting was off, frontline managers could create but not publish open shifts. Also, they could view open and assigned shifts listed on their team’s schedule (including when workers are scheduled for time off).

Now, when the setting is turned off, frontline managers can’t create open shifts and can view only assigned shifts (including scheduled time off) on their team’s schedule.

See the differences between the previous and new experiences for frontline managers:

Time-off requests

Previously, when the time-off request setting was turned off, frontline managers couldn’t assign time off to their team members; moreover, frontline workers couldn’t request time off.

Now, when the setting is turned off, frontline managers can continue to assign time off to their team members. However, frontline workers will not have the ability to create time-off requests if this setting remains off.

This means your organization can use Shifts as the place where frontline workers view their working and non-working schedules, even if Shifts is not your leave management tool.

See the new experience for frontline managers:

Open shifts, swap shifts, offer shifts and time-off requests

Previously, when any request-related setting was switched from on to off, frontline managers couldn’t manage previous requests that were submitted while the setting was on.

Now, frontline managers can directly manage previous requests on the Requests page while frontline workers can view status and details of their individual requests.

Read more about

Shifts settings: Manage settings in Shifts – Microsoft Support

Latest Shifts enhancements: Discover the latest enhancements in Microsoft Shifts – Microsoft Community Hub

by Contributed | Jan 15, 2024 | AI, Business, Microsoft 365, Technology

We are updating our Microsoft Copilot product line-up with a new Copilot Pro subscription for individuals; expanding Copilot for Microsoft 365 availability to small and medium-sized businesses; and announcing no seat minimum for commercial plans.

The post Expanding Copilot for Microsoft 365 to businesses of all sizes appeared first on Microsoft 365 Blog.

by Contributed | Jan 14, 2024 | Technology

TL;DR: This post navigates the intricate world of AI model upgrades, with a spotlight on Azure OpenAI’s embedding models like text-embedding-ada-002. We emphasize the critical importance of consistent model versioning to ensure accuracy and validity in AI applications. The post also addresses the challenges and strategies essential for effectively managing model upgrades, focusing on compatibility and performance testing.

Introduction

What are Embeddings?

Embeddings in machine learning are more than just data transformations. They are the cornerstone of how AI interprets the nuances of language, context, and semantics. By converting text into numerical vectors, embeddings allow AI models to measure similarities and differences in meaning, paving the way for advanced applications in various fields.
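To make "measuring similarity" concrete, here is a minimal sketch using cosine similarity, a common way to compare embedding vectors. The three-dimensional vectors and their labels are made up for illustration; real embedding models return vectors with many more dimensions, and nothing here calls an actual embedding service.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)

# Hypothetical toy "embeddings" (assumed values, not real model output).
cat = [0.9, 0.1, 0.0]
kitten = [0.8, 0.2, 0.1]
invoice = [0.0, 0.1, 0.9]

sim_related = cosine_similarity(cat, kitten)     # close in meaning
sim_unrelated = cosine_similarity(cat, invoice)  # far apart in meaning

print(sim_related > sim_unrelated)
```

Texts with related meaning end up pointing in similar directions, so their score is closer to 1 than the score for unrelated texts.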

Importance of Embeddings

In the complex world of data science and machine learning, embeddings are crucial for handling intricate data types like natural language and images. They transform these data into structured, vectorized forms, making them more manageable for computational analysis. This transformation isn’t just about simplifying data; it’s about retaining and emphasizing the essential features and relationships in the original data, which are vital for precise analysis and decision-making.

Embeddings significantly enhance data processing efficiency. They allow algorithms to swiftly navigate through large datasets, identifying patterns and nuances that are difficult to detect in raw data. This is particularly transformative in natural language processing, where comprehending context, sentiment, and semantic meaning is complex. By streamlining these tasks, embeddings enable deeper, more sophisticated analysis, thus boosting the effectiveness of machine learning models.

Implications of Model Version Mismatches in Embeddings

Let’s discuss the potential impacts and challenges that arise when different versions of embedding models are used within the same domain, specifically focusing on Azure OpenAI embeddings. When embeddings generated by one version of a model are applied or compared with data processed by a different version, various issues can arise. These issues are not only technical but also have practical implications on the efficiency, accuracy, and overall performance of AI-driven applications.

Compatibility and Consistency Issues

- Vector Space Misalignment: Different versions of embedding models might organize their vector spaces differently. This misalignment can lead to inaccurate comparisons or analyses when embeddings from different model versions are used together.

- Semantic Drift: Over time, models might be trained on new data or with updated techniques, causing shifts in how they interpret and represent language (semantic drift). This drift can cause inconsistencies when integrating new embeddings with those generated by older versions.
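A toy illustration of vector space misalignment: the sketch below simulates a "new model version" as a simple 90-degree rotation of a two-dimensional space. This is an assumption made purely for demonstration; real model updates are not guaranteed to behave like a rotation, and the vectors are invented. The point is only that scores stay meaningful within one version and become misleading across versions.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def rotate_90(v):
    """Stand-in for a 'new model version': same geometry, different axes."""
    x, y = v
    return [-y, x]

# Hypothetical 2-D embeddings produced by "version 1".
query_v1 = [1.0, 0.0]
doc_v1 = [0.96, 0.28]

# The same two texts as the simulated "version 2" would embed them.
query_v2 = rotate_90(query_v1)
doc_v2 = rotate_90(doc_v1)

same_version_v1 = cosine_similarity(query_v1, doc_v1)  # about 0.96
same_version_v2 = cosine_similarity(query_v2, doc_v2)  # about 0.96
mixed_versions = cosine_similarity(query_v2, doc_v1)   # about 0.28

print(same_version_v1, same_version_v2, mixed_versions)
```

Each version agrees with itself that the query and document are close, yet comparing a version 2 query against a version 1 document produces a much lower score for exactly the same pair of texts.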

Impact on Performance

- Reduced Accuracy: Inaccuracies in semantic understanding or context interpretation can occur when different model versions process the same text, leading to reduced accuracy in tasks like search, recommendation, or sentiment analysis.

- Inefficiency in Data Processing: Mismatches in model versions can require additional computational resources to reconcile or adjust the differing embeddings, leading to inefficiencies in data processing and increased operational costs.

Best Practices for Upgrading Embedding Models

Upgrading Embedding – Overview

Now let’s move to the process of upgrading an embedding model, focusing on the steps you should take before making a change, important questions to consider, and key areas for testing.

Pre-Upgrade Considerations

Assessing the Need for Upgrade:

- Why is the upgrade necessary?

- What specific improvements or new features does the new model version offer?

- How will these changes impact the current system or process?

Understanding Model Changes:

- What are the major differences between the current and new model versions?

- How might these differences affect data processing and results?

Data Backup and Version Control:

- Ensure that current data and model versions are backed up.

- Implement version control to maintain a record of changes.

Questions to Ask Before Upgrading

Key Areas for Testing

Performance Testing:

- Test the new model version for performance improvements or regressions.

- Compare accuracy, speed, and resource usage against the current version.

Compatibility Testing:

- Ensure that the new model works seamlessly with existing data and systems.

- Test for any integration issues or data format mismatches.

Fallback Strategies:

- Develop and test fallback strategies in case the new model does not perform as expected.

- Ensure the ability to revert to the previous model version if necessary.
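One way to turn the testing and fallback ideas above into a concrete check is to compare search results from the current and candidate model versions on a fixed set of queries. The sketch below is a minimal example under stated assumptions: the vectors are invented, the document ids are hypothetical, and the `MIN_OVERLAP` threshold is an arbitrary illustrative value you would tune for your own workload.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def top_k(query, corpus, k):
    """Return the ids of the k corpus vectors most similar to the query."""
    ranked = sorted(
        corpus,
        key=lambda doc_id: cosine_similarity(query, corpus[doc_id]),
        reverse=True,
    )
    return ranked[:k]

def overlap_at_k(ids_a, ids_b):
    """Fraction of results shared by two top-k lists (1.0 = identical sets)."""
    return len(set(ids_a) & set(ids_b)) / len(ids_a)

# Hypothetical embeddings of the same documents and query under each version.
corpus_old = {"returns": [0.9, 0.1], "shipping": [0.7, 0.3], "careers": [0.1, 0.9]}
corpus_new = {"returns": [0.2, 0.8], "shipping": [0.6, 0.4], "careers": [0.3, 0.7]}
query_old = [1.0, 0.0]
query_new = [0.0, 1.0]

old_top = top_k(query_old, corpus_old, k=2)
new_top = top_k(query_new, corpus_new, k=2)
overlap = overlap_at_k(old_top, new_top)

MIN_OVERLAP = 0.8  # assumed acceptance threshold, not an official value
safe_to_switch = overlap >= MIN_OVERLAP

print(old_top, new_top, overlap, safe_to_switch)
```

If `safe_to_switch` is false, the fallback is to keep serving queries from the previous deployment and its stored embeddings while the differences are investigated.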

Post-Upgrade Best Practices

Upgrading Embedding – Conclusion

Upgrading an embedding model involves careful consideration, planning, and testing. By following these guidelines, customers can ensure a smooth transition to the new model version, minimizing potential risks and maximizing the benefits of the upgrade.

Use Cases in Azure OpenAI and Beyond

Embeddings can significantly enhance the performance of various AI applications by enabling more efficient data handling and processing. Here’s a list of use cases where embeddings can be effectively utilized:

Enhanced Document Retrieval and Analysis: By first generating embeddings for paragraphs or sections of documents, you can store these vector representations in a vector database. This allows for rapid retrieval of semantically similar sections, streamlining the process of analyzing large volumes of text. When integrated with models like GPT, this method can reduce the computational load and improve the efficiency of generating relevant responses or insights.

Semantic Search in Large Datasets: Embeddings can transform vast datasets into searchable vector spaces. In applications like eCommerce or content platforms, this can significantly improve search functionality, allowing users to find products or content based not just on keywords, but on the underlying semantic meaning of their queries.

Recommendation Systems: In recommendation engines, embeddings can be used to understand user preferences and content characteristics. By embedding user profiles and product or content descriptions, systems can more accurately match users with recommendations that are relevant to their interests and past behavior.

Sentiment Analysis and Customer Feedback Interpretation: Embeddings can process customer reviews or feedback by capturing the sentiment and nuanced meanings within the text. This provides businesses with deeper insights into customer sentiment, enabling them to tailor their services or products more effectively.

Language Translation and Localization: Embeddings can enhance machine translation services by understanding the context and nuances of different languages. This is particularly useful in translating idiomatic expressions or culturally specific references, thereby improving the accuracy and relevancy of translations.

Automated Content Moderation: By using embeddings to understand the context and nuance of user-generated content, AI models can more effectively identify and filter out inappropriate or harmful content, maintaining a safe and positive environment on digital platforms.

Personalized Chatbots and Virtual Assistants: Embeddings can be used to improve the understanding of user queries by virtual assistants or chatbots, leading to more accurate and contextually appropriate responses, thus enhancing user experience. With similar logic, they could help route natural language to specific APIs. See the CompactVectorSearch repository for an example.

Predictive Analytics in Healthcare: In healthcare data analysis, embeddings can help in interpreting patient data, medical notes, and research papers to predict trends, treatment outcomes, and patient needs more accurately.

In all these use cases, the key advantage of using embeddings is their ability to process and interpret large and complex datasets more efficiently. This not only improves the performance of AI applications but also reduces the computational resources required, especially for high-cost models like GPT. This approach can lead to significant improvements in both the effectiveness and efficiency of AI-driven systems.
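The document retrieval and semantic search use cases share the same core pattern: embed once, store the vectors, and look up the nearest neighbors at query time. The sketch below uses a small in-memory class as a stand-in for a vector database. The class name, document ids, and vectors are all hypothetical, and in a real system the vectors would come from an embedding model rather than being typed in by hand.

```python
import math

class InMemoryVectorStore:
    """Toy stand-in for a vector database: keeps (id, vector) pairs in a dict."""

    def __init__(self):
        self._vectors = {}

    def add(self, doc_id, vector):
        self._vectors[doc_id] = list(vector)

    def search(self, query, k=1):
        """Return up to k (doc_id, score) pairs, best match first."""
        def score(vec):
            dot = sum(q * v for q, v in zip(query, vec))
            return dot / (math.hypot(*query) * math.hypot(*vec))

        scored = [(doc_id, score(vec)) for doc_id, vec in self._vectors.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]

store = InMemoryVectorStore()
store.add("refund-policy", [0.9, 0.1, 0.0])
store.add("store-hours", [0.1, 0.9, 0.0])
store.add("job-openings", [0.0, 0.2, 0.9])

# Assumed embedding of a question such as "How do I return an item?"
query_vector = [0.8, 0.2, 0.1]
best_id, best_score = store.search(query_vector, k=1)[0]

print(best_id, round(best_score, 3))
```

Only the best-matching sections then need to be passed to a larger model, which is where the savings in computational load come from.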

Specific Considerations for Azure OpenAI

- Model Update Frequency: Understanding how frequently Azure OpenAI updates its models and the nature of these updates (e.g., major vs. minor changes) is crucial.

- Backward Compatibility: Assessing whether newer versions of Azure OpenAI’s embedding models maintain backward compatibility with previous versions is key to managing version mismatches.

- Version-Specific Features: Identifying features or improvements specific to certain versions of the model helps in understanding the potential impact of using mixed-version embeddings.

Strategies for Mitigation

- Version Control in Data Storage: Implementing strict version control for stored embeddings ensures that data remains consistent and compatible with the model version used for its generation.

- Compatibility Layers: Developing compatibility layers or conversion tools to adapt older embeddings to newer model formats can help mitigate the effects of version differences.

- Baseline Tests: Create a few simple baseline tests that would identify any drift in the embeddings.
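Two of these strategies, version control in storage and baseline tests, can be sketched in a few lines. The example below is one possible shape, not a prescribed design: the `StoredEmbedding` record, the version labels "1" and "2", the vectors, and the 0.999 drift threshold are all illustrative assumptions.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class StoredEmbedding:
    text: str
    vector: tuple
    model: str          # e.g. "text-embedding-ada-002"
    model_version: str  # version label recorded when the vector was generated

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def compare(a, b):
    """Refuse to compare embeddings produced by different model versions."""
    if (a.model, a.model_version) != (b.model, b.model_version):
        raise ValueError("embeddings come from different model versions")
    return cosine_similarity(a.vector, b.vector)

def has_drifted(baseline, fresh_vector, threshold=0.999):
    """Baseline test: a re-embedded fixed sentence should still match its stored vector."""
    return cosine_similarity(baseline.vector, fresh_vector) < threshold

# Hypothetical records; the vectors are invented for illustration.
baseline = StoredEmbedding("hello world", (0.6, 0.8), "text-embedding-ada-002", "1")
same_version = StoredEmbedding("hi there", (0.8, 0.6), "text-embedding-ada-002", "1")
other_version = StoredEmbedding("hello world", (0.8, 0.6), "text-embedding-ada-002", "2")

print(compare(baseline, same_version))        # allowed: same model and version
print(has_drifted(baseline, (0.6, 0.8)))      # unchanged vector, no drift
print(has_drifted(baseline, (0.8, 0.6)))      # changed vector, drift detected
```

Calling `compare(baseline, other_version)` raises a `ValueError`, which turns a silent accuracy problem into an explicit failure that can trigger re-embedding.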

Azure OpenAI Model Versioning: Understanding the Process

Azure OpenAI provides a systematic approach to model versioning, applicable to models like text-embedding-ada-002:

Regular Model Releases:

Version Update Policies:

- Options for auto-updating to new versions or deploying specific versions.

- Customizable update policies for flexibility.

- Details on update options.

Notifications and Version Maintenance:

Upgrade Preparation:

- Recommendations to read the latest documentation and test applications with new versions.

- Importance of updating code and configurations for new features.

- Preparing for version upgrades.

Conclusion

Model version mismatches in embeddings, particularly in the context of Azure OpenAI, pose significant challenges that can impact the effectiveness of AI applications. Understanding these challenges and implementing strategies to mitigate their effects is crucial for maintaining the integrity and efficiency of AI-driven systems.

References

- “Learn about Azure OpenAI Model Version Upgrades.” Microsoft Tech Community.

- “OpenAI Unveils New Embedding Model.” InfoQ.

- “Word2Vec Explained.” Guru99.

- “GloVe: Global Vectors for Word Representation.” Stanford NLP.
