by Contributed | Oct 25, 2024 | Technology

This article is contributed. See the original author and article here.

SharePoint Online

Teams

Microsoft 365

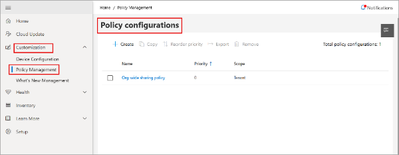

The Cloud Policy service allows Microsoft 365 administrators to configure policies for Microsoft 365 Apps for enterprise and assign these policies using Microsoft 365 groups or Entra ID groups. Once configured, these policies are automatically enforced as users sign in and use Microsoft 365 apps.

This article is the second of a series in the Microsoft Tech Community Public Sector Blog and touches on several key principles for compliance, including data residency versus data sovereignty.

If you work in smartcard federated authentication environments, here’s a much-anticipated security feature for you. Starting with the September 10, 2024 Windows security update, you can use strong name-based mapping on Windows Server 2019 and newer. This feature helps you with the hardening changes for certificate-based authentication on Windows domain controllers.

Azure OpenAI Service is FedRAMP High and Microsoft Copilot for Microsoft 365 GCC High and DOD targeting Summer 2025.

We’re thrilled to announce the upcoming Oct 2024 anticipated General Availability of Microsoft Copilot for Microsoft 365 GCC subject to US Government authorization. This powerful tool combines large language models (LLMs) with your organization’s data to enhance productivity and innovation in the public sector.

Customers in our Office 365 government clouds, GCC, GCCH, and DoD, are continuing to evolve how they do business in the hybrid workplace. As Microsoft Teams is the primary tool for communication and collaboration, customers are looking to improve productivity by integrating their business processes directly into Microsoft Teams via third-party party (3P) applications or line-of-business (LOB)/homegrown application integrations.

Watch past episodes of Jay Leask and other members of the Government Community on LinkedIn!

Empowering US public sector organizations to transition to Microsoft 365

References and Information Resources

|

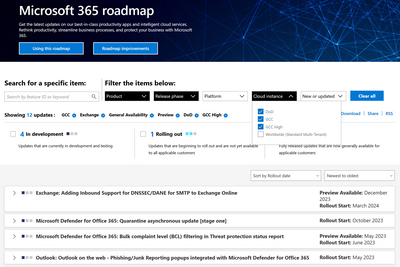

Microsoft 365 Public Roadmap

This link is filtered to show GCC, GCC High and DOD specific items. For more general information uncheck these boxes under “Cloud Instance”.

Stay on top of Microsoft 365 changes

Here are a few ways that you can stay on top of the Office 365 updates in your organization.

Microsoft Tech Community for Public Sector

Your community for discussion surrounding the public sector, local and state governments.

Microsoft 365 for US Government Service Descriptions

· Office 365 Platform (GCC, GCCH, DoD)

· Office 365 U.S. Government GCC High endpoints

· Office 365 U.S. Government DoD endpoints

· Microsoft Purview (GCC, GCCH, DoD)

· Enterprise Mobility & Security (GCC, GCCH, DoD)

· Microsoft Defender for Endpoint (GCC, GCCH, DoD)

· Microsoft Defender for Cloud Apps Security (GCC, GCCH, DoD)

· Microsoft Defender for Identity Security (GCC, GCCH, DoD)

· Azure Information Protection Premium

· Exchange Online (GCC, GCCH, DoD)

· SharePoint (GCC, GCCH, DoD)

· OneDrive (GCC, GCCH, DoD)

· Teams (GCC, GCCH, DoD)

· Office 365 Government (GCC, GCCH, DoD)

· Power Apps (GCC, GCCH, DoD)

· Power Automate US Government (GCC, GCCH, DoD)

· Power BI (GCC, GCCH, DoD)

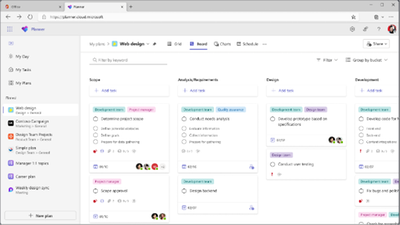

· Planner (GCC, GCCH, DoD)

· Outlook Mobile (GCC, GCCH, DoD)

· Viva Insights (GCC)

· Dynamics 365 US Government

Public Sector Center of Expertise

We bring together thought leadership and research relating to digital transformation and innovation in the public sector. We highlight the stories of public servants around the globe, while fostering a community of decision makers. Join us as we discover and share the learnings and achievements of public sector communities.

|

|

Microsoft Teams for US Government Adoption Guide

|

|

|

Message Center Highlights

|

Please note: This section is for informational purposes only. It is presented as is and as available with no warranty and no supportability given expressly or implied. Questions, comments, concerns and all other feedback must be presented in the comment section below the post, thank you!

SharePoint Online

MC898388 — Specifying client ID and secret when creating an Azure ACS principal via AppRegNew.aspx will be removed

When using the AppRegNew.aspx page to create Azure ACS (Access Control Service) principals today, you can generate a new client ID (default flow) and secret, but you can also specify the client ID or secret yourselves. Going forward, the option to specify the client ID and secret yourself will be removed. Each created Azure ACS principal will have a unique client ID (a GUID) and a system-generated secret. After the creation of the Azure ACS principal, you’ll be redirected to a page showing the created client ID and secret.

How this will affect your organization:

If you used to specify a specific client ID as a way to renew the associated service principal secret then please switch the recommended model for renewing secrets for Azure ACS service principals.

We will gradually roll out this change in early October 2024, and we expect to complete by early November 2024.

Reminder: Azure ACS in Microsoft 365 is being retired with a final end date of April 2, 2026. You can learn more via the retirement announcement or via MC693863.

What you need to do to prepare:

This change will happen automatically by the specified date. No admin action is required.

MC891238 — (Updated) Microsoft SharePoint and Microsoft Teams: Update on new Microsoft Lists rollout

Updated September 26, 2024: We have updated the content. Thank you for your patience.

Coming soon to Microsoft SharePoint and Lists in Microsoft Teams: An updated Microsoft Lists. This rollout introduces new features to users, including the ability to add ratings to any list, drag and/or paste images directly into a list, see who is collaborating with you in real time, switch views by clicking tabs, and more.

This is an update to MC600726: (Updated) Microsoft Lists User Experience Update (updated October 2023) and MC709979 (Updated) Microsoft SharePoint: Updated Lists in Team Sites, Lists progressive web app (PWA), and Teams (January 2024).

When this will happen:

General Availability (Worldwide, GCC, GCC High, DoD): We will begin rolling out late September 2024 and expect to complete by late October 2024.

How this will affect your organization:

Before this rollout, Lists do not have features like visible real time collaborators, easy access to views via tabs, and applied filters at the top of a list. Note: Some features described in the May 2023 blog Microsoft Lists: Easier, Better, Faster, Stronger – Microsoft Community Hub, such as new forms to collect information easily, have started to roll out or have fully rolled out.

After this rollout, users in active tenants will see the remaining Lists feature updates described in the May 2023 blog when browsing lists on SharePoint sites, the Lists progressive web app (PWA), the Lists app on the web, and the Lists app in Teams. All Lists in SharePoint sites will retain the SharePoint site’s theme and navigation.

Existing lists configured with these features will be updated after this rollout:

- SharePoint Framework (SPFx) command extensions

- Power Apps forms

- Approvals

Note: Extensions that depend directly on DOM (Document Object Model) structure or CSS (cascading style sheets) are not supported and may break. We recommend using Microsoft SharePoint Framework, which provides a rich API to customize the SharePoint experience.

These Lists components are not included in the new rollout:

- SharePoint Framework (SPFx) field customizers

- The Playlist template integrated with Microsoft Stream

All new and updated List features are on by default.

What you need to do to prepare:

No admin action is needed to prepare for this change. You may want to notify your users about this change and update any relevant documentation as appropriate.

Learn more

MC752513 — (Updated) SharePoint Online: New heading level options for web parts

Updated September 25, 2024: We have updated the rollout timeline below. Thank you for your patience.

Coming soon: When authoring pages in Microsoft SharePoint, authors will be able to choose the heading level for titles in web parts to help define the hierarchy of information on a page. As we’re introducing this new capability, we’re also using it as an opportunity to align to the default heading font size in the Text web part. This means that all headings for both Text web part and titles in web parts will be as follow. Note: with this change we’re not changing the default headings sizes for the Text web part:

- Heading 2 will be 28

- Heading 3 will be 24

- Heading 4 will be 20

MC670896 Accessibility Improvements to Page Authoring (August 2023) and with Roadmap ID 387500

When this will happen:

Targeted Release: We will begin rolling out early April 2024 and expect to complete by mid-August 2024.

General Availability (Worldwide, GCC, GCC High, DoD): We will begin rolling out mid-August 2024 and expect to complete by late October 2024 (previously early September).

How this will affect your organization:

Authors will now have the ability to set their own heading level. If not set, the default will continue to be Heading 2. New and existing pages and news posts will see the new default heading font sizes.

What you need to do to prepare:

This rollout will happen automatically by the specified date with no admin action required. You may want to notify your SharePoint authors about this change and update any relevant documentation as appropriate.

OneDrive for Business

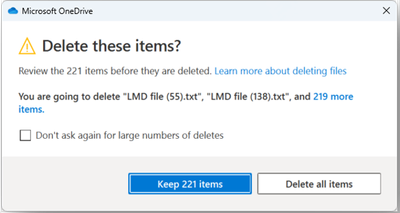

MC888872 — Microsoft OneDrive: New prompt to confirm deletion of shared items and updated prompt for local mass item deletions

Microsoft 365 Roadmap ID 415705

Coming soon for Microsoft OneDrive Sync: When users are about to delete shared items, a new prompt will ask users to confirm the deletion. When users are about to mass delete local items, an updated prompt will ask users to confirm the deletion. This message applies to OneDrive on Windows desktop.

When this will happen:

General Availability (Worldwide, GCC, GCC High, DoD): We will begin rolling out mid-September 2024 and expect to complete by early October 2024.

How this will affect your organization:

Before this rollout, users are able to delete shared items in File Explorer without being asked to confirm the deletion. Users are prompted to confirm deletion before they mass delete local items, which might have included shared items.

After this rollout, when users are running OneDrive and OneDrive is not paused, and users are about to delete shared items or mass delete local items (more than 200 items by default) in File Explorer, users will be asked to confirm the deletion of shared items in a new prompt or the deletion of mass items in an updated prompt in File Explorer:

Prompt to confirm the mass deletion of local items:

In the Microsoft admin center, admins can set a value for the Group policies (SharedContentDeleteConfirmation and LocalMassDeleteFileDeleteThreshold) to prevent users from opting out of seeing the confirmation prompts. Admins can also set the threshold for mass delete (the default is 200 items). If admins do not set a value for the Group policies, users will be able to opt out of the confirmation messages.

This feature is on by default.

What you need to do to prepare:

This rollout will be applicable in builds later than One Drive 24.091.0505.0001. No admin action required before the rollout. You may want to notify your users about this change and update any relevant documentation.

Admins who set a value for the Group policies should be aware of these features in case internal support is needed for users who are not able to opt out of the confirmation dialogs.

MC806523 — (Updated) Microsoft OneDrive: Change to shared folder experience

Microsoft 365 Roadmap ID 395378

Updated September 17, 2024: We have updated the images below. Thank you for your patience.

We’re making a change to the shared folder experience in Microsoft OneDrive. Currently, when a user opens a shared folder in OneDrive, they are taken to a view of that folder within the sharer’s OneDrive. In the new experience, opening a shared folder will take the user to the shared folder within the People view of their own OneDrive. This view, organized by people, shows and allows access to all the files and folders that have been shared with the user.

When this will happen:

General Availability (Worldwide, GCC): We will begin rolling out late July 2024 and expect to complete by mid-September 2024 (previously late August).

How this will affect your organization:

With this feature update, opening a shared folder will take the user to the shared folder within the People view of their own OneDrive, as follows.

What you need to do to prepare:

This rollout will happen automatically with no admin action required. You may want to notify your users about this change and update any relevant documentation as appropriate.

Microsoft Viva

MC894580 — (Updated) Microsoft Viva Engage: Subscribe to notifications for followed topics (GCC)

Microsoft 365 Roadmap ID 395940

Updated September 26, 2024: After further review we have decided not to proceed with this change at this time. We apologize for any inconvenience.

Coming soon to Microsoft Viva Engage: This feature will allow users to subscribe to receive notifications after following Viva Engage topics. Users will receive an Engage notification on the bell icon on the left side of Viva Engage when a new post is tagged with a topic they are subscribed to, or the topic is added to an existing post.

When this will happen:

General Availability (GCC): We will begin rolling out mid-September 2024 and expect to complete by late September 2024. – We will not be proceeding with this change at this time.

How this will affect your organization:

Before this rollout, users are not able to subscribe to a topic after following a topic in Engage. Instead, they can only follow a topic.

After this rollout, users will be able to subscribe to a topic after following the topic on the Viva Engage topic page. After users subscribe, they will receive notifications for those topics they are subscribed to.

This feature will be on by default.

User adds tags to a post and Viva prompts the user to subscribe to the topics:

Viva confirms that notifications are enabled:

The bell icon on the left side of the screen with an active notification:

What you need to do to prepare:

This rollout will happen automatically by the specified date with no admin action required before the rollout. You may want to notify your users about this change and update any relevant documentation.

Learn more: Follow or subscribe to topics – Microsoft Support (will be updated before rollout begins).

MC867656 — (Updated) Microsoft Viva Connections news notifications in GCC, GCC High, and DoD

Microsoft 365 Roadmap ID 410198

Updated September 13, 2024: We have updated the rollout timeline below. Thank you for your patience.

With this feature update, existing news notifications in Microsoft Viva Connections will now be available in GCC, GCC High, and DoD.

When this will happen:

General Availability (GCC): We will begin rolling out late September 2024 (late August) and expect to complete by early October 2024 (early September).

General Availability (GCC High, DoD): We will begin rolling out early October 2024 (early September) and expect to complete by mid-October 2024 (mid-September).

How this will affect your organization:

News notifications are delivered via Microsoft Teams in the desktop, mobile, and web environments in the following scenarios:

- News is published to a SharePoint team or communication site that a user follows or by someone that a user works closely with.

- News is boosted.

- Someone comments on a new News post.

- Someone “Likes” a News post.

- Someone is @mentioned in a comment on a news post.

End users can control which notifications they want to see in the following ways:

- On Teams desktop and web, specific notification types can be selectively enabled or disabled under Settings > Notifications and activity > Viva Connections. These settings are respected on Teams mobile as well.

- On Teams mobile, notifications can’t be selectively enabled or disabled, but users can toggle all push notifications (including Viva Connections) in the Teams mobile app under Settings > Notifications > General Activity > Apps on Teams. Notifications will still be visible under the Microsoft Teams activity feed.

- There is no tenant level setting to turn off Viva Connections News notifications.

For more information, see Viva Connections News notifications.

What you need to do to prepare:

This rollout will happen automatically with no admin action required. You may want to notify your users about this change and update any relevant documentation as appropriate.

Microsoft Teams

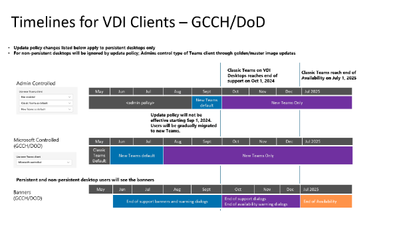

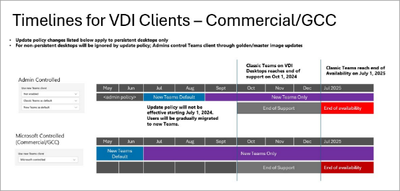

MC899937 — Microsoft Teams in VDI: Rollout schedule of “New Teams only” policy (GCCH, DOD)

As we approach October 1, 2024, when support ends for classic Teams in VDI (Virtualized Desktop Infrastructure), we are finalizing the rollout schedule of new Teams as previously communicated in MC804769 Microsoft Virtual Desktop Infrastructure: Classic Microsoft Teams retirement schedules (GCCH, DoD) (June 2024) and End of availability for classic Teams client – Microsoft Teams | Microsoft Learn:

When this will happen:

General Availability (GCCH, DoD): We will begin rolling out the new policy value early October 2024 and expect to complete by mid-October 2024.

How this will affect your organization:

After this rollout, the Teams admin center policy UseNewTeamsClient under TeamsUpdateManagement will be overwritten to New Teams only on the schedule in this message. This means that a new Teams client will be installed automatically (if not present already) and will become the default client. The toggle to return to classic Teams will not be available.

This policy value override will only apply to persistent VDI environments where classic Teams is version 1.7.00.7956 or newer.

For non-persistent environments, admins will continue to control the rollout of the new Teams client with golden/master image updates, and the value of the existing policy in the Teams admin center will be honored for users in non-persistent VDI environments.

Classic Teams installation details and definitions

- Persistent environments: Classic Teams was installed with the .exe installer (user installation in Appdata/local).

- Non-persistent environments: Classic Teams was installed with the .msi installer (machine-wide installation in C:Program Files (x86)).

In persistent environments only, Microsoft will attempt to uninstall Classic Teams between 1-2 weeks after new Teams is installed.

What you need to do to prepare:

Review the main VDI article and your current configuration to determine the impact for your organization. Please notify your users about this change and update relevant documentation as appropriate.

MC899179 — Microsoft Teams: Menu bar icon for Mac devices (GCC, GCCH, DoD)

Microsoft 365 Roadmap ID 416069

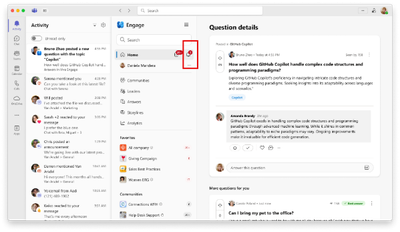

Coming soon: Microsoft Teams for Mac devices is adding an extension to the macOS Menu Bar, where users will find a Teams icon with a persistent indicator of their presence status. Users can change their presence status with the Menu Bar extension, which also contains additional actions for streamlining Teams workflows.

When this will happen:

Targeted Release: We will begin rolling out mid-October 2024 and expect to complete by late October 2024.

General Availability (GCC, GCC High, DoD): We will begin rolling out mid-November 2024 and expect to complete by mid-December 2024.

How this will affect your organization:

Before this rollout, Teams users on Mac devices do not have a persistent indicator of their Teams presence status.

After this rollout, Teams users on Mac devices will have the Menu Bar extension by default. If a user prefers not to have the icon present in the macOS Menu Bar, it can be disabled from the Teams Settings. More details on how to use or disable Teams Menu Bar extension will be available in the documentation. We will update this post with the documentation link before rollout begins.

The feature will be available for users after an update to the latest version of Teams. We will update this communication soon with the specific version number.

What you need to do to prepare:

The feature will be enabled automatically for users after Teams updates to the build containing this functionality. No admin action is required. You may want to notify your users about this change and update any relevant documentation.

MC898394 — Stay current with your OS to keep your Microsoft Teams desktop client supported

Microsoft Teams is governed by the Modern Lifecycle Policy, the Teams desktop client needs to be under a supported OS version. This is to ensure that users have the latest updates, capabilities, performance enhancements, security features, and service compatibility.

On new Teams, users on OS versions that are not supported will receive in-app notifications to encourage them to update their device to a supported OS version. A version that is too far out of date will become incompatible with the service and the Desktop client will be blocked until the OS is updated.

Windows OSes support dates and lifecycle policy are documented in Product and Service lifecycle page. For example, OSes such as Windows LTSC and OSes under Long Term Serving Channel (LTSC), are not supported as they follow the Fixed lifecycle policy.

MacOS typically receives support for three years following its release, with the end of support coinciding with the launch of the third subsequent major macOS version. For example, once macOS 15 is released, macOS 12 will no longer be supported.

When this will happen:

This will be shown on the new Teams client starting on October 15th, 2024. Note, users on Virtual Desktop Infrastructure (VDI) deployments are currently excluded.

How this will affect your organization:

New Teams- Windows 10 OS Versions less than 19041

- Beginning October 15th, 2024, the Teams desktop client may present a banner notifying users that they are on an unsupported OS version with a link to this page.

- Beginning January 15th, 2025. The desktop client may present a blocking page for users and require an update to a supported OS version to continue using the Desktop Client with a link to this page.

New Teams- Windows 10 or Windows 11 OS on LTSC update servicing (not on Modern Lifecycle)

- Beginning October 15th, 2024, the Teams desktop client may present a banner notifying users that they are on an unsupported OS version with a link to this page.

- Beginning August 15th, 2025. The desktop client may present a blocking page for users and require an update to a supported OS version to continue using the Desktop Client with a link to this page.

New Teams – macOS versions 11 (Big Sur) and older

- Beginning October 15th, 2024, the Teams desktop client may present a banner notifying users that they are on an unsupported OS version with a link to this page.

- Beginning November 15th, 2024, the Teams desktop client may present a blocking page for users and require an update to a supported OS version to continue using the Desktop Client with a link to this page.

New Teams – macOS 12 (Monterey)

- Beginning November 15th, 2024, the Teams desktop client may present a banner notifying users that they are on an unsupported OS version with a link to this page.

- Beginning March 15th, 2025, the Teams desktop client may present a blocking page for users and require an update to a supported OS version to continue using the Desktop Client with a link to this page.

Note: For the classic Teams client see End of availability for classic Teams client for classic Teams Timelines regarding end of support and end of availability.

What you can do to prepare:

To avoid disruption to end-users, admins should plan for a regular OS update cadence to remain on a supported OS version.

MC898391 — Microsoft Teams: Performance improvements for meetings in Edge web browser for guests

Microsoft 365 Roadmap ID 401117

Anonymous users (guests) who join Microsoft Teams meetings in the Microsoft Edge for Business web browser will soon experience performance improvements. They will be able to join meetings faster.

When this will happen:

Targeted Release: We will begin rolling out early November 2024 and expect to complete by mid-November 2024.

General Availability (Worldwide): We will begin rolling out mid-November 2024 and expect to complete by late-November 2024.

General Availability (GCC, GCC High, and DoD): We will begin rolling out early January 2025 and expect to complete by late January 2025.

How this will affect your organization:

Before this rollout, anonymous users (guests) may experience longer wait times when attempting to join a Teams meeting.

After this rollout, anonymous users (guests) will be able to join meetings faster, even on low-end devices.

What you need to do to prepare:

This rollout will happen automatically with no admin action required.

MC894581 — Microsoft Teams: Performance improvements for meetings in Chrome web browser for guests

Microsoft 365 Roadmap ID 401116

Anonymous users (guests) who join Microsoft Teams meetings in the Chrome web browser will soon experience performance improvements.

When this will happen:

Targeted Release: We will begin rolling out early November and expect to complete by mid-November 2024.

General Availability (Worldwide): We will begin rolling out mid-November 2024 and expect to complete by late-November 2024.

General Availability (GCC, GCC High and DoD): We will begin rolling out early January 2025 and expect to complete by late January 2025.

How this will affect your organization:

Before this rollout, anonymous users (guests) may experience longer wait times when attempting to join a Teams meeting.

After this rollout, anonymous users (guests) will be able to join meetings faster, even on low-end devices.

What you need to do to prepare:

You may want to update your internal documentation to inform your users about this improvement. However, because the improvement is for external users and does not change the look of Teams, you can skip this step./p>

MC893623 — Microsoft Teams Rooms on Windows: New Cloud IntelliFrame preview

Coming soon to Microsoft Teams Rooms for Windows: When Cloud IntelliFrame is activated for a room, the in-room preview video on the display will be the same view that online attendees see. Previously, the in-room preview video did not show IntelliFrame while online participants could see IntelliFrame, which resulted in a lot of confusion. This update now ensures everyone sees the same experience. Available for tenants with Teams Rooms Pro licenses.

When this will happen:

Targeted Release: We will begin rolling out mid-September 2024 and expect to complete by late September 2024.

General Availability (Worldwide): We will begin rolling out late September 2024 and expect to complete by early October 2024.

How this will affect your organization:

Before this rollout, participants in Teams Rooms on Windows using Cloud IntelliFrame would see themselves without IntelliFrame in the in-room preview video, while the online audience sees IntelliFrame. In-room participants do not know how they appear to the online audience.

After this rollout, participants in Teams Rooms on Windows using Cloud IntelliFrame will see how they appear to the audience with the in-room preview:

What you need to do to prepare:

All Rooms using Cloud IntelliFrame will receive this feature update automatically and it will be on by default. No admin action required before rollout.

You may want to notify your users about this change and update any relevant documentation.

Learn more: Cloud IntelliFrame – Microsoft Teams | Microsoft Learn (will be updated before rollout)

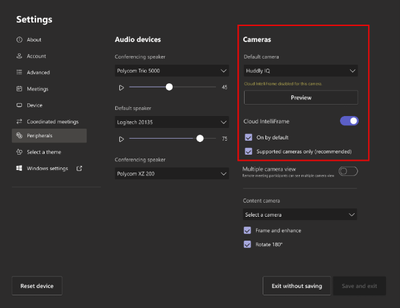

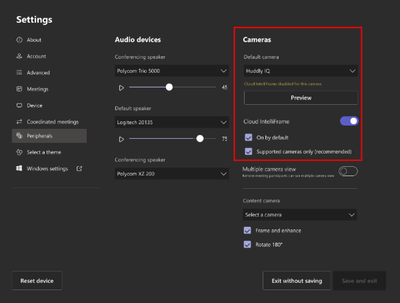

MC893620 — Microsoft Teams: Enhanced admin controls for Cloud IntelliFrame in Teams Rooms on Windows

Coming soon for Microsoft Teams Rooms for Windows: Admins will be able to override the default settings of Cloud IntelliFrame and use cameras even if not on the supported camera list. This feature is available for customers a Pro license for Teams Rooms for Windows

When this will happen:

Targeted Release: We will begin rolling out late September 2024 and expect to complete by mid-October 2024.

General Availability (Worldwide): We will begin rolling out early October 2024 and expect to complete by late October 2024.

How this will affect your organization:

Before this rollout: Cloud IntelliFrame is available for customers with a Pro license for Teams Rooms for Windows with supported cameras only. Admins can enable/disable Cloud IntelliFrame for these eligible rooms.

After this rollout: All customers a Pro license for Teams Rooms for Windows are eligible to use Cloud IntelliFrame. Rooms with supported cameras get Cloud IntelliFrame by default. Rooms with non-supported cameras can be configured by admins to use Cloud IntelliFrame. We recommend you disable all AI features using OEM firmware on unsupported cameras before enabling Cloud IntelliFrame.

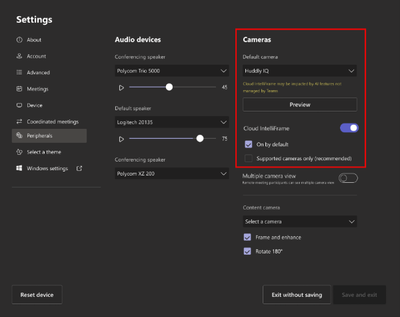

Default settings for a supported camera:

Default settings for an unsupported camera:

Settings required to use Cloud IntelliFrame on an unsupported camera:

This feature is on by default.

What you need to do to prepare:

This rollout will happen automatically by the specified date with no admin action required before the rollout. You may want to notify your admins about this change and update any relevant documentation.

Learn more: Cloud IntelliFrame – Microsoft Teams | Microsoft Learn (will be updated before rollout)

MC892646 — (Updated) Microsoft Teams: New policy for voice and face enrollment defaulting to ‘On’

Microsoft 365 Roadmap ID 413708

Updated September 20, 2024: We have updated the content below for clarity. Thank you for your feedback.

We’re introducing a new policy that gives IT admins enhanced flexibility with distinct settings for voice and face enrollment. This adjustment provides greater control over managing these features, allowing organizations to tailor to their needs. Voice and face biometric enrollment will be enabled by default, enabling voice isolation and speaker recognition in meeting rooms and enhancing intelligent meeting recaps and Microsoft Copilot for meetings.

When this will happen:

General Availability (Worldwide, GCC):

- We will begin rolling out the new policy in early October 2024 and expect complete by mid-October 2024.

- The policy will be enabled beginning in early January 2025 and expect to be complete by mid-January 2025.

How this will affect your organization:

These changes will provide your organization with increased flexibility in managing voice and face enrollments. By using separate settings, you can customize for your organization’s specific needs. The enrollment policy will be enabled by default. Once enabled, these enrollments will activate features like Voice Isolation and enhanced speaker recognition, leading to more efficient and productive meetings.

What you need to do to prepare:

To ensure you have enough time to prepare for this change, you have until mid-January 2025 to configure everything to best suit your needs. If your organization requires keeping face and voice enrollments off, be sure to review the new policy and adjust the settings during this time. This period provides an opportunity to optimize how you manage voice and face data, enhancing your team’s control over the process.

The new csTeamsAIPolicy will replace the current csTeamsMeetingPolicy, introducing two separate settings for face and voice enrollment: EnrollFace and EnrollVoice. Each can be set to Enabled or Disabled.

To help you get ready, review:

For more information about voice and face enrollment, visit Overview of voice and face enrollment – Microsoft Teams | Microsoft Learn

MC892055 — Microsoft Teams: Participant roster grouping in Teams BYOD meeting rooms

Microsoft 365 Roadmap ID 413711

Coming soon for Microsoft Teams: When users join a Teams meeting with their laptop in bring your own device (BYOD) meeting rooms, they will appear in the In-room participant section of the roster, which enables individual identification and meeting intelligence capabilities. As communicated in MC814577 (Updated) Microsoft Teams: Roster grouping for in-room participants through Proximity Join for Teams Rooms on Windows (July 2024), we will launch this same experience in September 2024 for joining a nearby meeting with a Microsoft Teams Room and a companion device.

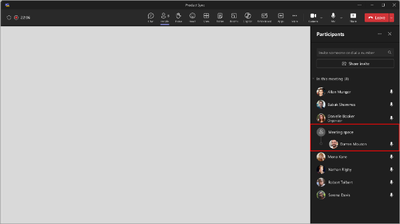

Unique to BYOD meeting rooms, the roster group will be displayed as Meeting space if the connected device is NOT identified in the Teams Pro Management Portal (PMP). If the device is identified in the PMP, the roster group will display the configured meeting room name.

This message applies to Teams for Windows and Mac desktops.

When this will happen:

Targeted Release: We will begin rolling out early October 2024 and expect to complete by mid-October 2024.

General Availability (Worldwide): We will begin rolling out mid-October 2024 and expect to complete by late October 2024.

General Availability (GCC, GCCH, DoD): We will begin rolling out mid-October 2024 and expect to complete by late November 2024.

How this will affect your organization:

Before this rollout: Users joining a Teams meeting from a BYOD meeting room will be displayed as an individual participant in the Participants roster. Room node treatments will only be applicable to users joining from a Microsoft Teams Room.

After this rollout, this update will be available by default for all users joining a Teams meeting from a BYOD meeting room. After joining, in-room participants will automatically be grouped under the room node on the Participant roster. The two scenarios in which in-room participants can be ungrouped from the room node are:

- Raised hand: We will continue to follow the sequential order of raised hands on the roster. In-room participants who use their companion device to raise their hand will appear on the roster in the order in which they raised their hand.

- Role assignment: For changes in roles (presenters and attendees), in-room participants can be broken up and placed in the appropriate category on the roster. (This is an existing feature before this rollout.)

In both scenarios, room information will be listed below participant name.

What you need to do to prepare:

This rollout will happen automatically by the specified date with no admin action required before the rollout. You may want to notify your users about this change and update any relevant documentation.

Before rollout, we will update this post with revised documentation.

MC892049 — (Updated) Microsoft Teams: Users will exclusively control their voice and face data exports

Microsoft 365 Roadmap ID 413709

Updated September 27, 2024: We have updated the rollout timeline below. Thank you for your patience.

We are excited to announce an important update designed to enhance the privacy and security of your organization. Before this rollout, admins had the capability to download voice and face data for their users who have opted in to enroll their voice and/or face on the Recognition tab in Microsoft Teams. With this rollout, to better protect user data, we are shifting this capability to users. This change will ensure that users have more control over their own voice and face data, aligning with our commitment to privacy and security. This change reduces the risk of unauthorized access or sharing, as users will handle their own data export requests and admins will not have access to this data. This message applies to Teams for Windows desktop and Teams for Mac desktop.

When this will happen:

Targeted Release: We will begin rolling out mid-November 2024 and expect to complete by late November 2024.

General Availability (Worldwide, GCC): We will begin rolling out early December 2024 (previously mid-October) and expect to complete by mid-December 2024 (previously late October).

How this will affect your organization:

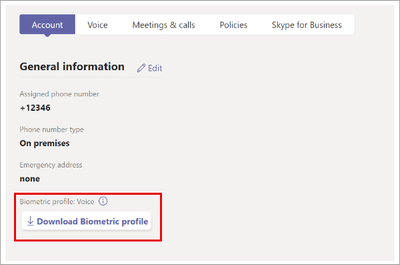

With this rollout, we will remove the download button from the Teams admin center (TAC) > User > Manage user > select any user > Account:

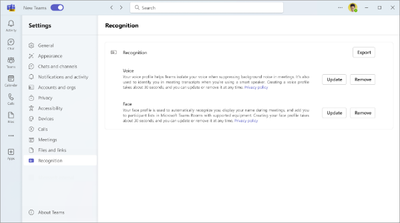

Users can go to Teams > Settings > Recognition and select the Export button to access their data and download it to their Download folder:

The new Export feature is on by default.

What you need to do to prepare:

This rollout will happen automatically by the specified date with no admin action required before the rollout. You may want to notify your admins and users about this change and update any relevant documentation.

Learn more: Overview of voice and face enrollment – Microsoft Teams | Microsoft Learn (will be updated before rollout)

MC891240 — Microsoft Teams: Voice isolation will be available for macOS desktops

Microsoft 365 Roadmap ID 412359

With voice isolation in Microsoft Teams, you can enjoy clear and uninterrupted calls or meetings, no matter where you are. Voice isolation is an AI-based advanced noise suppression feature that eliminates unwanted background noise, including other human voices. The technology recognizes your voice profile and ensures only your voice is transmitted. Voice isolation can be enabled for calls and meetings. With this rollout, voice isolation will be available on Teams for macOS desktops.

When this will happen:

Targeted Release: We will begin rolling out early October 2024 and expect to complete by mid-October 2024.

General Availability (Worldwide, GCC): We will begin rolling out mid-October 2024 and expect to complete by late October 2024.

How this will affect your organization:

Before this rollout, Teams users on macOS desktops are not able to access the Teams voice isolation feature.

After this rollout, to use this feature, admins must enable the enrollUserOverride policy and the Voice isolation feature policy in the Teams admin center. Users will need to enroll their voice profile in Teams Settings on the Recognition tab to enable the voice isolation feature to have better audio input quality.

To disable this feature for your organization, use Microsoft PowerShell: Set-CsTeamsMeetingPolicy

This feature is on by default and available for admins to enable it.

What you need to do to prepare:

Make sure to enable the enrollUserOverride policy for Voice and Face profile enrollment. Users can begin enrolling their Recognition profile now so they can use the voice isolation on macOS as soon as it is rolled out to your tenant.

These support articles and resources will help address any questions you or your users may have about the rollout:

This rollout will happen automatically by the specified date with no admin action required before the rollout. You may want to notify your users about this change and update training and documentation as appropriate.

MC889534 — (Updated) Microsoft Teams: Embed support for Teams town hall

Microsoft 365 Roadmap ID 401120

Updated September 26, 2024: We have updated the rollout timeline below. Thank you for your patience.

As a part of Team’s ongoing effort to enable our customers to create, manage, and run large scale events, end users can now embed Teams town hall in sites they manage. This capability allows town hall attendees to watch the event instance within a particular website.

Embed for town hall introduces embeddable support for town hall engagement features on release. Attendees will have the same engagement feature access within the embed player as they do in client such as Q&A, Captions, Reactions and more.

To implement embed for town hall, organizers can access their town hall events unique embed code from the town hall scheduling template upon publish. Organizers can then take that code and inject it to their SharePoint or Microsoft 365 site as desired. On event start, attendees will be able to view and engage with the embed stream on the published site.

When this will happen:

Targeted Release: We will begin rolling out early November 2024 (previously early October) and expect to complete by mid-November 2024 (previously mid-October).

General Availability (Worldwide, GCC): We will begin rolling out early December 2024 (previously late October) and expect to complete by mid-December 2024 (previously late November).

How this will affect your organization:

Users will be able to copy embed code directly from town hall scheduling form on event publish. After the embeddable code is placed in the website, the town hall player will be accessible to attendees who have access to the URL.

Note: Attendee access to the embedded video player is driven by the town hall event access.

What you need to do to prepare:

Organizers with SharePoint or Microsoft 365 site who want to utilize embed support for town hall can begin implementing the embeddable code upon release.

MC889529 — (Updated) Microsoft Teams: Volume ratio setting for meeting participants using language interpretation

Microsoft 365 Roadmap ID 411569

Updated September 19, 2024: We have updated the rollout timeline below. Thank you for your patience.

In Microsoft Teams, meeting participants who use language interpretation will be able to control the volume ratio between the original meeting audio and the interpretation audio after they select the language to listen to in the meeting. This message applies to Teams on Windows desktop and Teams on Mac desktop. NOTE: Meeting organizers must enable language interpretation when setting up the meeting.

When this will happen:

Targeted Release: We will begin rolling out early November 2024 (previously early October) and expect to complete by mid-November 2024 (previously early October).

General Availability (Worldwide): We will begin rolling out mid-November 2024 (previously mid-October) and expect to complete by late November 2024 (previously late October).

General Availability (GCC): We will begin rolling out late November 2024 and expect to complete by early December 2024 (previously late November).

General Availability (GCC High): We will begin rolling out early December 2024 and expect to complete by mid-December 2024 (previously early December).

General Availability (DoD): We will begin rolling out late December 2024 (previously late December) and expect to complete by early January 2025 (previously late December).

How this will affect your organization:

Before this rollout: Meeting participants using language interpretation are not able to control the volume ratio of the original meeting audio and the interpretation audio.

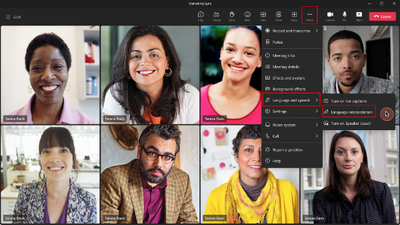

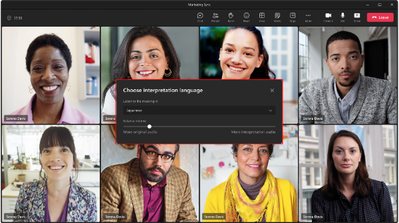

After this rollout: If a meeting organizer has enabled language interpretation when setting up the meeting, participants will be asked which language they want to listen when they first join the meeting, or they can go to the More menu > Language and speech > Language interpretation to choose a language:

After choosing a language, participants will see the control for the volume ratio of the original meeting audio and the interpretation audio:

What you need to do to prepare:

This rollout will happen automatically by the specified date with no admin action required before the rollout. You may want to notify your users about this change and update any relevant documentation.

Learn more: Use language interpretation in Microsoft Teams meetings – Microsoft Support (will be updated before rollout)

MC889524 — Microsoft Teams Panels: Reminder to update to avoid disruption

As communicated in Message center post MC798318 about retirement of CNS Service API support for older Android clients, this change impacts Teams Devices including Teams panels. To avoid disruption, an update to Teams panels version 1449/1.0.97.2024071105 or greater is required for Teams panels devices.

Microsoft Teams devices are governed by the Modern Lifecycle Policy and require users to stay on the most up-to-date version of the Teams panels application. Automatic updates ensure that users have the latest capabilities, performance and security enhancements, and service reliability.

Learn more about the Modern Lifecycle Policy under the Servicing agreement for Microsoft Teams.

How this will affect your organization:

The retirement of the CNS service API impacts automatic check-in through Teams Rooms devices. Team panels application version 1449/1.0.97.2024071105 and later versions are not impacted as they use the new and improved notification service APIs.

When this will happen:

On September 30, 2024, Microsoft will stop supporting the legacy services infrastructure for chat messaging, which impacts Teams panels devices on earlier app versions than 1449/1.0.97.2024071105.

What you need to do to prepare:

Update your Teams panels device to latest Teams panels application version or app version greater than 1449/1.0.97.2024071105 as soon as possible.

Manual update process:

In Teams Admin Center, select the device category under Teams Devices group. Select all respective devices from the list and select Update.

Under Manual Updates, select Teams application and press Update button to update to the latest application. If all your devices are eligible to receive Automatic Updates, use that option to get to the latest Teams app version.

Automatic update process:

To help bring the devices to the latest versions, automatic updates will continue at an accelerated pace to ensure adherence to the retirement timeline. Updates can include both firmware and Teams app updates, as applicable to bring the device to the latest versions. These updates will be scheduled outside of normal business hours in the local time of the device, to help minimize any impact to your organization. Since it is important that the devices get updated as soon as possible, the configured maintenance window for the devices will not be adhered to.

For organizations with users around the globe, we recognize that “outside of normal business hours” might affect you differently. We apologize for the impact this may have on your users.

MC888871 — Reminder Teams Phones impacted by retirement of CNS Service API support: Software Application Update Required

As communicated previously about retirement of CNS Service API support for older Android clients, this change impacts Teams Devices including Teams Phone devices. To avoid disruption, an update to Teams phone application version 1449/1.0.94.2024011003 or greater is required for Teams Phone devices.

These updates must be completed before September 30, 2024, to avoid disruption.

Microsoft Teams devices are governed by the Modern Lifecycle Policy and require users to stay on the most up-to-date version of the Teams phone devices application. Automatic updates ensure that users have the latest capabilities, performance and security enhancements, and service reliability.

When will this happen:

By the end of September 2024, Microsoft will stop supporting the legacy services infrastructure for chat messaging thus impacting Teams Phone devices on earlier app version than 1449/1.0.94.2024011003.

How this will affect your organization:

The retirement of the CNS service API incoming calls, voicemail and missed call notifications scenarios on Teams Phone devices. Teams phone devices app versions 1449/1.0.94.2024011003 and later are not impacted.

Update Teams Phone devices to latest Teams Phone app or an app version greater than 1449/1.0.94.2024011003.

Manual update process:

- In Teams Admin Center, select the device category under Teams Devices group. Select all respective devices from the list and select Update.

- Under Manual Updates, select Teams application and press Update button to update to the latest application. If all your devices are eligible to receive Automatic Updates, use that option to get to the latest Teams app version.

Automatic update process:

- To help bring the devices to the latest versions, automatic updates will continue at an accelerated pace to ensure adherence to the retirement timeline. Updates can include both firmware and Teams app updates, as applicable to bring the device to the latest versions. These updates will be scheduled outside of normal business hours in the local time of the device, to help minimize any impact to your organization. Since it is important that the devices get updated as soon as possible, the configured maintenance window for the devices will not be adhered to.

For organizations with users around the globe, we recognize that “outside of normal business hours” might affect you differently. We apologize for the impact this may have on your users.

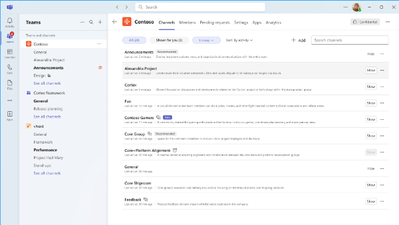

MC887377 — (Updated) Microsoft Teams: Refreshed view of all your teams and channels page

Microsoft 365 Roadmap ID 411779

Updated September 30, 2024: We have updated the last image below. Thank you for your patience.

Coming soon for Microsoft Teams:

- An updated view of all teams on one Your teams page.

- An updated Manage Team > Channels page, helping team owners and team members to filter, search and manage channels in the team from one page. Team members will be able to navigate to the list of channels from the Your teams page with a single click.

This message applies to Teams on Windows desktop, Mac desktop, and on the web.

When this will happen:

Targeted Release: We will begin rolling out early October 2024 and expect to complete by early October 2024.

General Availability (Worldwide): We will begin rolling out mid-October 2024 and expect to complete by late October 2024.

General Availability (GCC, GCCH, DoD): We will begin rolling out early December 2024 and expect to complete by early December 2024.

How this will affect your organization:

Before this rollout, Team owners and members can hover on the team name on the left side of Teams, select the three dots, and go to Manage team to individually manage the team and its channels. Team owners and members can see all the teams they belong to from the Your teams page. Selecting a team on the Manage teams page takes Team owners and members to the team’s General channel.

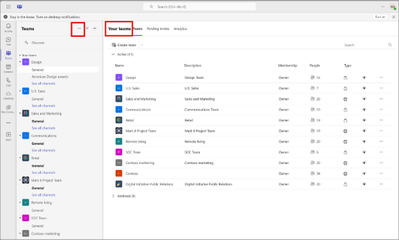

The Manage team page with the Channels tab open: Access this page from the three dots next to the team name in the left panel in Teams:

The Your teams page shows all teams you belong to. Access this page from the three dots in the upper right corner of the left panel in Teams:

After this rollout:

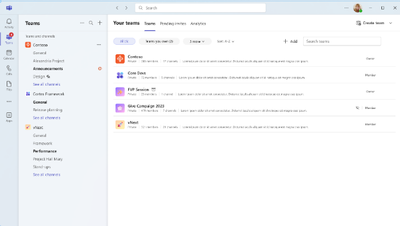

- Team owners and members will be able to view their teams from the Your teams page, and access channels in each team. To navigate to the Your teams page, select Your teams and channels from the three dots in the top right corner of the left panel:

- Team owners and members can select a team name to review the channels in the team and choose channels of interest to show in Teams:

- Team owners and members can search and sort on the Your Teams page or hide all channels. When all channels of a team are hidden, the team will not show in the Teams and channels list on the left side of Teams.

- Team owners and members can also view the channels in the team from the Manage Team > Channels page, and show channels of interest by filtering, sorting, and searching for channels in the team from one page.

These features will be on by default.

What you need to do to prepare:

This rollout will happen automatically by the specified date with no admin action required before the rollout. You may want to notify your Team owners and members about this change and update any relevant documentation.

MC886601 — Microsoft Teams displays impacted by retirement of CNS Service API support: Upgrade Required

In June 2024, we communicated in MC798318 Reminder | Microsoft Teams Mobile: Support is retiring for legacy services infrastructure for chat messaging that affected older Android clients. This retirement also affects Microsoft Teams devices, including Teams displays. To avoid disruption to your Teams displays, you will need to update to Teams displays version 1449/1.0.95.2024062804 before September 30, 2024.

Microsoft Teams devices are governed by the Microsoft Modern Lifecycle Policy and require users to stay on the most up-to-date version of the Teams displays application. Automatic updates ensure that users have the latest capabilities, performance and security enhancements, and service reliability. Learn more: Modern Lifecycle Policy – Microsoft Lifecycle | Microsoft Learn and Lifecycle FAQ – Modern Policy | Microsoft Learn

When this will happen:

Worldwide and GCC: On September 30, 2024, Microsoft will stop supporting the legacy services infrastructure for chat messaging, which affects Teams displays devices on earlier app versions than 1449/1.0.95.2024062804.

How this will affect your organization:

The retirement of the legacy services infrastructure for chat messaging affects notifications (chats, missed calls, incoming calls, and voicemails may not work). Team displays application version 1449/1.0.95.2024062804 and later versions are not affected because they use the new and improved notification service APIs.

What you need to do to prepare:

Update your Teams displays to 1449/1.0.95.2024062804 as soon as possible.

Manual update process

- In the Teams admin center > Teams devices, select the device category. Select all devices that need to be updated from the list and then select Update.

- Under Manual updates, select the Teams app and then select the Update button to update to the latest application. If all your devices are eligible to receive automatic updates, use that option to get to the latest Teams app version.

Automatic update process

To help upgrade devices to the latest versions, automatic updates will continue at an accelerated pace to ensure adherence to the retirement timeline. Updates can include both firmware and Teams app updates as applicable. These updates will be scheduled outside of normal business hours in the local time of the device, to help minimize any impact to your organization. Because it is important that the devices get updated as soon as possible, we will not adhere to the configured maintenance window for the devices.

For organizations with users around the globe, we recognize that “outside of normal business hours” might affect you differently. We apologize for the impact this may have on your users.

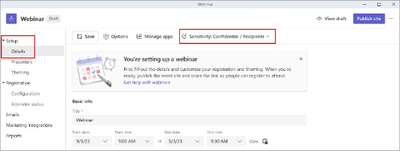

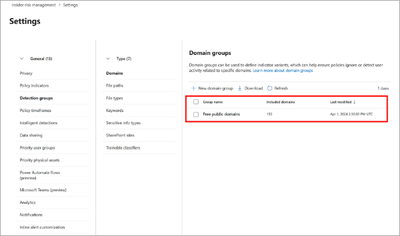

MC884018 — Microsoft Teams: Set sensitivity labels for town halls and webinar

Microsoft 365 Roadmap ID 409226

Coming soon for Microsoft Team Premium customers: Event organizers will be able to set sensitivity labels for town halls and webinars. Sensitivity labels allow organizations to protect and regulate access to sensitive content created through collaboration in Teams. Sensitivity labels are available only for Teams Premium users on Mac and Windows desktops.

When this will happen:

Targeted release: We will begin rolling out in early October 2024 and expect to complete by mid-October 2024.

General Availability (Worldwide, GCC): We will begin rolling out in mid-October 2024 and expect to complete by late October 2024.

How this will affect your organization:

Before this release: Sensitivity labels were not available for town halls and webinars.

After this rollout, events created or updated by an organizer with a Teams Premium license can apply a sensitivity label to their event. Organizers will be notified if their configured settings are not valid for the selected sensitivity. Specific options under Event access may be enabled or disabled based on the sensitivity label set for the event:

For upcoming events that have already been scheduled prior to rollout, the sensitivity of the event will be set to None. If an event organizer chooses to modify the sensitivity for a scheduled event after the rollout, this action may affect event settings that were previously configured.

What you need to do to prepare:

This rollout will happen automatically by the specified date with no admin action required before the rollout. You may want to notify your users about this change and update any relevant documentation.

Before rollout, we will update this post with revised documentation.

MC884009 — (Updated) Microsoft Teams: Start/stop recording on Teams Rooms on Windows

Microsoft 365 Roadmap ID 412072

Updated September 18, 2024: We have updated the rollout timeline below. Thank you for your patience.

Coming soon to Microsoft Teams Rooms on Windows: Users can start or stop recording meetings directly from a Teams Rooms on Windows device. This feature requires a Teams Rooms Pro license and Teams Rooms on Windows app version 5.2.

When this will happen:

General Availability (Worldwide, GCC): We will begin rolling out early December 2024 (previously mid-October) and expect to complete by mid-December 2024 (previously late October).

General Availability (GCC High): We will begin rolling out mid-December 2024 (previously mid-November) and expect to complete by mid-January 2024 (previously late November).

General Availability (DoD): We will begin rolling out early January 2024 (previously early December) and expect to complete by mid-January 2024 (previously mid-December).

How this will affect your organization:

Before the rollout: When attending a meeting using a Teams Rooms on Windows device, if users want to record the meeting, they need to also join the meeting on a companion device to initiate the meeting recording.

After the rollout

Start/stop recording is supported in any meeting where a participant belongs to the same tenant as the organizer, including scheduled meetings, ad-hoc meetings, channel meetings (only for channel members), and Teams Cast sessions. Users can select Start recording or Stop recording from the More menu, shown here at the bottom of the screen

When users start recording a Meet now or Whiteboard session initiated from a Teams Rooms on Windows device, users must ensure that they invite themselves to the meeting so they can access the meeting chat on their Teams desktop or mobile app where the recording file will be available after the meeting.

This feature will be on by default and available for admins to configure.

As an admin, you can allow or prevent users (including room accounts) from recording meetings with the Teams meeting recording policy:

NOTE: Both the meeting organizer and the recording initiator (room account) need to have recording permissions to record the meeting, or the start/stop recording button will not be available. Also, if the meeting organizer uses Meeting options to remove permissions from the room account to record the meeting, the start/stop recording button will not be available as well.

Start/stop recording is not supported in these scenarios:

- Teams calls including P2P, group, and PSTN calls

- External/cross-tenant Teams meetings and calls

- Third-party meetings and calls including Direct Guest Join and SIP

NOTE: Avoid assigning Microsoft OneDrive for Business licenses to your room accounts to ensure that IT-managed room accounts do not become owners of the recording files.

What you need to do to prepare:

After your Teams Rooms devices are updated to the 5.2 app, configure the desired meeting recording policy, notify your users about this change, and update your training and documentation as appropriate. This rollout will happen automatically by the specified date with no admin action required before the rollout.

MC883202 — (Updated) Microsoft Teams in VDI: Rollout schedule of “New Teams only” policy (Worldwide, GCC)

Updated September 26, 2024: We have updated the rollout timeline below. Thank you for your patience.

As we approach October 1, 2024, when support ends for classic Teams in VDI (Virtualized Desktop Infrastructure), we are finalizing the rollout schedule of new Teams as previously communicated in MC803007 (Updated) Teams in VDI: Expiring UseNewTeamsClient policy and updated new Teams rollout schedule (Worldwide, GCC) (published June 2024 and updated July 2024) and End of availability for classic Teams client – Microsoft Teams | Microsoft Learn:

When this will happen:

General Availability (Worldwide): We will begin rolling out on September 9, 2024, and expect to complete by September 24, 2024 (previously September 12).

General Availability (GCC): We will begin rolling out on September 16, 2024, and expect to complete by October 4, 2024 (previously September 19).

How this will affect your organization:

Starting September 9, 2024, the Teams admin center policy UseNewTeamsClient under TeamsUpdateManagement will be overwritten to New Teams only on the schedule in this message. This means that a new Teams client will be installed automatically (if not present already) and will become the default client. The toggle to return to classic Teams will not be available.

This policy value override will only apply to persistent VDI environments where classic Teams is version 1.7.00.7956 or newer.

For non-persistent environments, admins will continue to control the rollout of the new Teams client with golden/master image updates, and the value of the existing policy in the Teams admin center will be honored for users in non-persistent VDI environments.

Classic Teams installation details

- Persistent environments: Classic Teams was installed with the .exe installer (user installation in Appdata/local).

- Non-persistent environments: Classic Teams was installed with the .msi installer (machine-wide installation in C:Program Files (x86)).

Microsoft will not attempt to uninstall classic Teams. See MC806095 Classic Microsoft Teams: Uninstallation paused for Windows only (June 2024).

What you need to do to prepare:

Review the main VDI article and your current configuration to determine the impact for your organization. Please notify your users about this change and update relevant documentation as appropriate.

MC862236 — (Updated) Microsoft Teams: External presenters can join on mobile

Microsoft 365 Roadmap ID 409228

Updated September 26, 2024: We have updated the rollout timeline below. Thank you for your patience.

In Microsoft Teams, town halls and webinars currently support the ability for an external user outside the organization to present in an event only through the Teams desktop. With this update, external presenters will have the option to join the event through the Teams mobile app on their Android or iOS devices.

Note: It is currently not supported for external presenters to join a town hall or webinar through web platforms.

When this will happen:

General Availability (Worldwide, GCC): We will begin rolling out in late September 2024 (previously mid-September) and expect to complete by early October 2024 (previously late September).

General Availability (GCC High, DoD): We will begin rolling out in mid-October 2024 and expect to complete by early November 2024.

How this will affect your organization:

Presenters external to the organization will now be able to join events through their mobile devices.

For more information on external presenters, see Schedule a town hall in Microsoft Teams.

What you need to do to prepare:

This rollout will happen automatically with no admin action required. You may want to notify your users about this change and update any relevant documentation as appropriate.

MC856755 — (Updated) Microsoft Teams Rooms on Windows: New native Microsoft-defined camera controls in camera settings

Microsoft 365 Roadmap ID 409537

Updated September 26, 2024: We have updated the rollout timeline below. Thank you for your patience.

Coming soon in Microsoft Teams Rooms on Windows: Cameras that have implemented Microsoft-defined camera controls for Active Speaker Framing, Group Framing, and Edge Composed IntelliFrame will see controls for these features in the Camera settings pop-up menu.

When this will happen:

General Availability (Worldwide, GCC): We will begin rolling out late September 2024 (previously mid-September) and expect to complete by early October 2024 (previously late September).

General Availability (GCC High, DoD): We will begin rolling out early October 2024 and expect to complete by early November 2024.

How this will affect your organization:

Before this rollout: Controls for Active Speaker Framing, Group Framing, and Edge Composed IntelliFrame do not appear in the Camera settings pop-up menu for cameras that have implemented Microsoft-defined camera controls.

After this rollout: Users in a Teams Rooms on Windows with a camera connected that has implemented the controls as defined by Microsoft will see in-meeting controls for Active Speaker Framing, Group Framing, and Edge Composed IntelliFrame. Setting the default behavior for cameras with these controls will not be supported as part of this initial release, but we expect to support default setting controls at a later date. There are no related admin controls for this feature as part of this release.

What you need to do to prepare:

This rollout will happen automatically by the specified date with no admin action required before the rollout. You may want to notify your users about this new capability and update any supporting documentation for your users.

MC854652 — (Updated) Microsoft Teams: Meeting participants can choose a breakout room from a list

Microsoft 365 Roadmap ID 121269

Updated September 11, 2024: We have updated the rollout timeline below. Thank you for your patience.

Coming soon: Microsoft Teams meeting organizers can make the list of breakout rooms visible to all meeting participants and allow them to choose which room to join. Enabling this setting in breakout rooms allows participants to move freely from one room to another, simplifying breakout room coordination for meeting organizers and participants. This message applies to Teams for Windows, Mac, and the web.

When this will happen:

Targeted Release: We will begin rolling out early December 2024 (previously early October) and expect to complete by mid-December 2024 (previously mid-October).

General Availability (Worldwide, GCC): We will begin rolling out mid-December 2024 (previously mid-October) and expect to complete by early January 2025 (previously late October).

General Availability (GCC High, DoD): We will begin rolling out early January 2025 (previously early November) and expect to complete by late January 2025 (previously late November).

How this will affect your organization:

Before this rollout: Only the meeting organizer can assign meeting participants to breakout rooms. Participants cannot move between rooms.

After this rollout: Meeting participants can select their breakout rooms of choice if the meeting organizer selects this option during setup.

Organizers can look for the new feature under the breakout rooms panel when setting up breakout rooms.

This feature is on by default and accessible to all meeting participants on the affected platforms if enabled by meeting organizers.

What you need to do to prepare:

This rollout will happen automatically by the specified date with no admin action required before the rollout. You may want to notify your users about this change and update any relevant documentation.

MC818885 — (Updated) Microsoft Teams: Multiple camera view for Teams Rooms on Windows

Microsoft 365 Roadmap ID 402517

Updated September 26, 2024: We have updated the rollout timeline below. Thank you for your patience.

Microsoft Teams Rooms on Windows will be soon able to send up to four single-stream USB camera feeds to render on the receiver side, so remote meeting participants can view all cameras simultaneously. This opt-in feature requires admins to first enable the multiple camera view and map cameras to the desired order that will be displayed on the receiver side. With multiple camera view enabled, remote meeting participants will be able to follow all the action in the room and manually switch to the desired room. This message applies to Teams for Desktop (Windows, Mac).

When this will happen:

Targeted release: We will begin rolling out early November 2024 (previously mid-October) and expect to complete by mid-November 2024 (previously late October).

General Availability (Worldwide): We will begin rolling out mid-November 2024 (previously early November) and expect to complete by late November 2024 (previously mid-November).

General Availability (GCC): We will begin rolling out in mid-November 2024 (previously mid-October) and expect to complete by early December 2024 (previously late October).

How this will affect your organization:

Before the rollout

Multiple camera views are not available in Teams Rooms on Windows.

After the rollout

A multiple camera view can create more visibility and coverage for large and complex spaces, such as multipurpose rooms, classrooms, and executive boardrooms. Admins can set up multiple camera views in two ways:

- Admins can configure settings on a local device by turning on the Multiple camera view toggle and using the dropdown menu to map the cameras.

- Admins can configure multiple camera view using Remote Access in the Microsoft Teams Rooms Pro Management portal.

After the feature is enabled, in-room participants can disable multiple camera view with the Camera chevron button on the meeting console. This action will return the device to a single camera view, and all receiver-side participants will see a single camera view. This in-room setting will only affect that meeting and the device will revert to the admin settings for the next meeting.

Remote participants will see the multiple camera view by default. On the top right corner of the room’s video tile, a remote participant can use the arrows to switch to the desired camera view. This toggle will only affect the remote participant’s own view and will not affect other meeting participants.

What you need to do to prepare:

To prepare for the change, create a plan for the rooms that may require multiple cameras in consideration of the space, meeting scenario, and the desired experience for in-room and remote participants. Then, configure the multiple camera view on the local device or in the Teams Pro Management portal and notify your users about this new experience. You may want to update relevant training documentation.

Before rollout, we will update this post with revised documentation.

MC816179 — (Updated) Microsoft Teams: Bidirectional Support for Teams Live Interpretation

Microsoft 365 Roadmap ID 403103

Updated September 11, 2024: We have updated the rollout timeline below. Thank you for your patience.

With bidirectional support in Teams Live Interpretation, interpreters can now switch the translation direction between two languages by clicking on the button of the language they want to interpret into at the bottom of the screen. The highlighted language button will be the language the interpreter is translating into and attendees hear.

When this will happen:

Targeted Release: We will begin rolling out early September 2024 (previously early August) and expect to complete by mid-September 2024 (previously mid-August).

General Availability (Worldwide, GCC): We will begin rolling out mid-September 2024 (previously mid-August) and expect to complete by late September 2024 (previously late August).

General Availability (GCC High, DoD): We will begin rolling out late October 2024 (previously late September) and expect to complete by early November 2024 (previously early October).

How this will affect your organization:

The new bidirectional capability allows tenants to hire fewer interpreters to do live translation in Teams meetings, reducing the operation costs for tenants.

What you need to do to prepare:

This rollout will happen automatically with no admin action required. You may want to notify your users about this change and update any relevant documentation as appropriate.

MC814579 — (Updated) Microsoft Teams: The new Queues app for customer call management in Teams

Microsoft 365 Roadmap ID 379980

Updated September 19, 2024: We have updated the content. Thank you for your patience

Microsoft is pleased to announce the general availability of Queues app, a new Teams-native app designed to improve the management of customer calls. Integrated with Teams Phone, Queues app will allow team members to manage call queues more efficiently without leaving Teams. Queues app is available by default to all Teams Premium licensed users. This message applies to Teams for desktop.

When this will happen:

General Availability (Worldwide and GCC): We will begin rolling out early-October 2024 (previously mid-August) and expect to complete by mid-October 2024 (previously late August).

Existing Teams Premium customers are now eligible for Teams Premium Early Access for Queues app. Customers with Teams Premium licenses can now access the preview release of Queues app. For more information, please visit Manage Queues app for Microsoft Teams – Microsoft Teams | Microsoft Learn

How this will affect your organization:

Before the rollout: Teams users can receive and answer calls from Call queues directly from their Teams client, just like any other incoming call.

After the rollout: Teams Premium licensed users will be able to select View more apps in the left side of Teams and then select Queues app. Key features of Queues app includes:

- Real-time statistics: Provides an overview of metrics for Call queues, Auto attendants, and agents

- Historical reporting: Track past performance of Call queues, Auto attendants, and agents

- Agent opt-in and opt-out: Allows team members to opt-in and out of the queue based on availability. Authorized users can opt in or opt out on the behalf of agents.

- Collaborative call handling: Facilitates teamwork with call transfers and team communication through the People list.

- Outbound calls: Enables calls on behalf of call queues or auto attendants.

- Enhanced management: Authorized users now have delegated admin capabilities that allow for adding and removing queue members, changing call handing flows, configuring auto attendant greetings, and more.

What you need to do to prepare:

We recommend that you:

Learn more

MC814577 — (Updated) Microsoft Teams: Roster grouping for in-room participants through Proximity Join for Teams Rooms on Windows

Microsoft 365 Roadmap ID 400702

Updated September 25, 2024: We have updated the rollout timeline below. Thank you for your patience.

When joining a meeting in Teams Room on Windows with a companion device (laptop or mobile), in-room participants will be grouped under the room node. Participants must join through Proximity Join. This update includes additional UI elements to create visual clarity of the in-room participants who are together.

When this will happen:

General Availability (Worldwide): We will begin rolling out late September 2024 (previously mid-September) and expect to complete by mid-November 2024 (previously late September).

General Availability (GCC, GCC High, DoD): We will begin rolling out mid-November 2024 and expect to complete rollout by late November 2024 (previously late October).

How this will affect your organization:

This update is available by default if the companion device has enabled the Bluetooth setting and the Teams Room device has any one of the following XML configuration settings enabled:

On the roster, in-room participants will automatically be grouped under the room node. The two scenarios in which in-room participants can be ungrouped from the room node are:

- Raised hand: For raised hand, we continue to follow the sequential order of raised hands. Therefore, in-room participants who use their companion device to raise their hand will be broken up and ordered accordingly.

- Role assignment: For changes in roles (Presenters/Attendees), in-room participants can be broken up and placed in the appropriate category on the roster.

In both scenarios, the room information will be listed below the participant’s name.

What you need to do to prepare:

This rollout will happen automatically. You may want to notify your users about this change and update any relevant documentation as appropriate.

MC810407 — (Updated) Microsoft Teams Rooms on Windows: A Teams Room can be a Breakout Room participant

Microsoft 365 Roadmap ID 95680

Updated September 25, 2024: We have updated the rollout timeline below. Thank you for your patience.