by Contributed | Sep 19, 2023 | Technology

This article is contributed. See the original author and article here.

Microsoft Defender External Attack Surface Management (EASM) continuously discovers a large amount of up-to-the-minute attack surface data, helping organizations know where their internet-facing assets lie. Connecting this data to the mission-critical systems that keep our customers' organizations secure, and automating the flow, is essential to understanding the data holistically and gaining new insights, so organizations can make informed, data-driven decisions.

In June, we released the new Data Connections feature within Defender EASM, which enables seamless integration into Azure Log Analytics and Azure Data Explorer, helping users supplement existing workflows to gain new insights as the data flows from Defender EASM into the other tools. The new capability is currently available in public preview for Defender EASM customers.

Why use data connections?

The Log Analytics and Azure Data Explorer data connectors augment existing workflows by automating recurring exports of all asset inventory data, along with the potential security issues flagged as insights, to destinations you specify. This keeps other tools continually updated with the latest findings from Defender EASM. Benefits of this feature include:

- Users can build custom dashboards and queries to enhance security intelligence, making it easy to visualize attack surface data and then analyze it.

- Custom reporting lets users leverage tools such as Power BI. With Defender EASM data connections, users can create custom reports that highlight security focus areas and send them to CISOs.

- Data connections make it easy for users to assess their environment for policy compliance.

- Defender EASM’s data connectors significantly enrich existing data to be better utilized for threat hunting and incident handling.

- Data connectors for Log Analytics and Azure Data Explorer enable organizations to integrate Defender EASM workflows into the local systems for improved monitoring, alerting, and remediation.

In what situations could the data connections be used?

While there are many reasons to enable data connections, below are a few common use cases and scenarios you may find useful.

- The feature allows users to push asset data or insights to Log Analytics to create alerts based on custom asset or insight data queries. For example, a query that returns new High Severity vulnerability records detected on Approved inventory can be used to trigger an email alert, giving details and remediation steps to the appropriate stakeholders. The ingested logs and Alerts generated by Log Analytics can also be visualized within tools like Workbooks or Microsoft Sentinel.

- Users can push asset data or insights to Azure Data Explorer (Kusto) to generate custom reports or dashboards via Workbooks or Power BI. For example, a custom dashboard could show all of a customer's approved hosts with recently or currently expired SSL certificates, helping direct remediation to the appropriate stakeholders in your organization.

- Users can include asset data or insights in a data lake or other automated workflows, for example to track trends in new asset creation and attack surface composition, or to discover unknown cloud assets that return 200 response codes.
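The first two scenarios boil down to simple filters over the exported records. As a hedged illustration, the sketch below expresses that logic in Python; the record shapes and field names (`inventory_state`, `severity`, `first_seen`, `not_after`) are hypothetical stand-ins, not the actual Defender EASM export schema, which you would query with your destination tool's own query language.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class InsightRecord:
    """Hypothetical shape of an exported insight row (field names are assumptions)."""
    asset_name: str
    inventory_state: str  # e.g. "Approved", "Candidate"
    severity: str         # e.g. "High", "Medium", "Low"
    first_seen: datetime


@dataclass
class CertificateRecord:
    """Hypothetical shape of an exported SSL certificate row (field names are assumptions)."""
    host: str
    inventory_state: str
    not_after: datetime   # certificate expiration time


def new_high_severity_on_approved(records, since):
    """Alert candidates: High severity insights on Approved inventory first seen after `since`."""
    return [
        r for r in records
        if r.inventory_state == "Approved" and r.severity == "High" and r.first_seen > since
    ]


def expired_certs_on_approved(certs, now):
    """Dashboard rows: Approved hosts whose certificate had already expired at `now`."""
    return [c for c in certs if c.inventory_state == "Approved" and c.not_after <= now]


if __name__ == "__main__":
    utc = timezone.utc
    last_run = datetime(2023, 9, 1, tzinfo=utc)
    insights = [
        InsightRecord("a.example.com", "Approved", "High", datetime(2023, 9, 5, tzinfo=utc)),
        InsightRecord("b.example.com", "Candidate", "High", datetime(2023, 9, 5, tzinfo=utc)),
    ]
    for r in new_high_severity_on_approved(insights, last_run):
        print("ALERT:", r.asset_name)
```

In an alerting setup, `since` would be the time of the previous alert evaluation, so each finding triggers only once.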

How do I get started with Data Connections?

We invite all Microsoft Defender EASM users to try the data connections to Log Analytics and/or Azure Data Explorer and experience the added value they bring to your data and, in turn, your security insights.

Step 1: Ensure your organization meets the preview prerequisites

| Aspect | Details | Required/Preferred |
| --- | --- | --- |
| Environmental requirements | A Defender EASM resource must be created and contain an attack surface footprint. Log Analytics and/or Azure Data Explorer (Kusto) must be available. | Required |
| Required roles & permissions | You must have a tenant with Defender EASM created (or be willing to create one); this provisions the EASM API service principal. For Azure Data Explorer, the User and Ingestor roles must be assigned to the EASM API. | Required |

Step 2: Access Data Connections

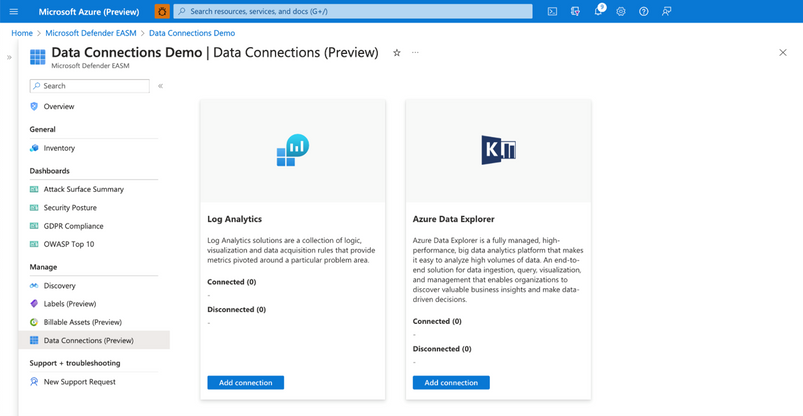

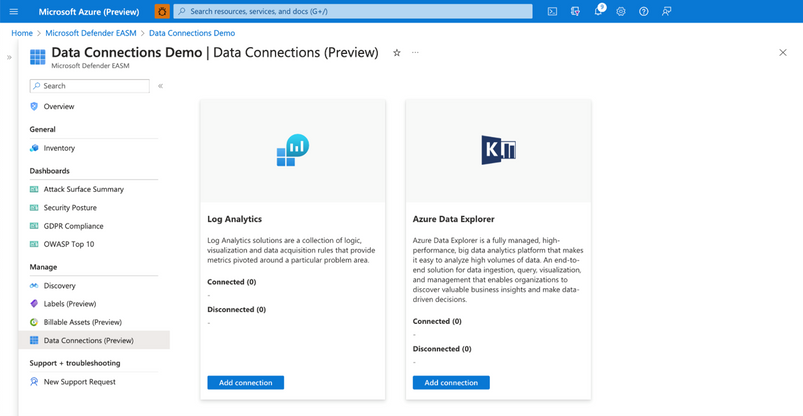

Users can access Data Connections from the Manage section of the left-hand navigation pane (shown in the screenshots above) within their Defender EASM resource blade. This page displays the data connectors for both Log Analytics and Azure Data Explorer, lists any current connections, and provides the option to add, edit, or remove connections.

Connection prerequisites: To successfully create a data connection, users must first ensure that they have completed the required steps to grant Defender EASM permission for the tool of their choice. This process enables the application to ingest our exported data and provides the authentication credentials needed to configure the connection.

Step 3: Configure Permissions for Log Analytics and/or Azure Data Explorer

Log Analytics:

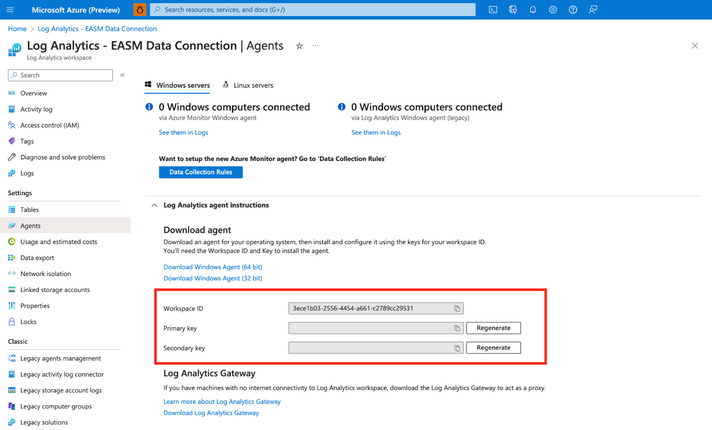

- Open the Log Analytics workspace that will ingest your Defender EASM data or create a new workspace.

- On the leftmost pane, under Settings, select Agents.

Azure Data Explorer:

- Expand the Log Analytics agent instructions section to view your workspace ID and primary key. These values are used to set up your data connection.

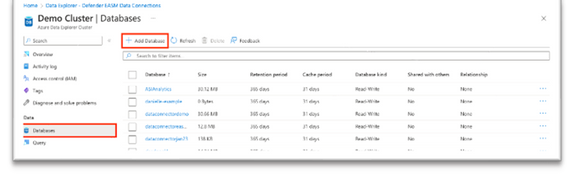

- Open the Azure Data Explorer cluster that will ingest your Defender EASM data or create a new cluster.

- Select Databases in the Data section of the left-hand navigation menu.

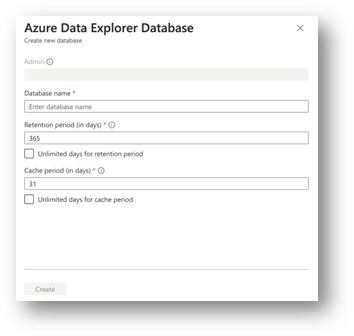

Select + Add Database to create a database to house your Defender EASM data.

4. Name your database, configure retention and cache periods, then select Create.

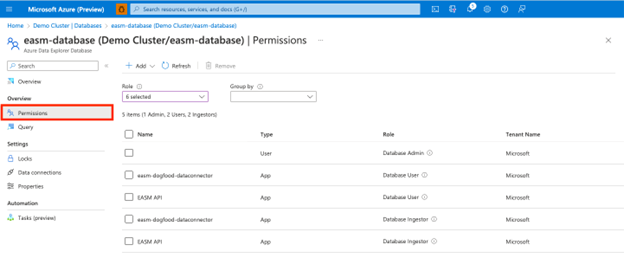

5. Once your Defender EASM database has been created, click on the database name to open the details page. Select Permissions from the Overview section of the left-hand navigation menu.

To successfully export Defender EASM data to Data Explorer, users must create two new permissions for the EASM API: user and ingestor.

6. First, select + Add and create a user. Search for “EASM API,” select the value, then click Select.

7. Select + Add to create an ingestor. Follow the same steps outlined above to add the EASM API as an ingestor.

8. Your database is now ready to connect to Defender EASM.
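If you prefer scripting over the portal, Azure Data Explorer also grants database roles through management commands of the form `.add database <Database> users (...)` and `.add database <Database> ingestors (...)`. The sketch below only builds those command strings; it assumes you look up the EASM API's application ID and your tenant ID yourself (the placeholders are hypothetical), and we have not verified that this path is equivalent to the portal flow Defender EASM expects.

```python
ALLOWED_ROLES = ("users", "ingestors")  # the two roles the EASM API needs


def add_principal_command(database: str, role: str, app_id: str, tenant: str,
                          note: str = "EASM API") -> str:
    """Build a Kusto management command granting an Azure AD application a database role."""
    if role not in ALLOWED_ROLES:
        raise ValueError(f"unexpected role: {role}")
    # Principal syntax for an application: 'aadapp=<ApplicationId>;<TenantId>'
    return f".add database {database} {role} ('aadapp={app_id};{tenant}') '{note}'"


if __name__ == "__main__":
    # Placeholder values: substitute your database, the EASM API application ID, and your tenant.
    for role in ALLOWED_ROLES:
        print(add_principal_command("EasmDb", role, "<easm-api-app-id>", "<tenant-id>"))
```

You would paste the printed commands into the cluster's query editor and run them one at a time.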

Step 4: Add data connections for Log Analytics and/or Azure Data Explorer

Log Analytics:

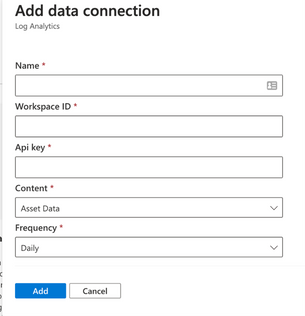

Users can connect their Defender EASM data to either Log Analytics or Azure Data Explorer. To do so, select "Add connection" from the Data Connections page for the appropriate tool. Adding a Log Analytics connection is covered below.

A configuration pane opens on the right-hand side of the Data Connections screen, as shown in the screenshot. The following fields are required:

- Name: enter a name for this data connection.

- Workspace ID: the ID of the Log Analytics workspace that will ingest the data (shown in the Agents settings in Step 3).

- API key: the API key associated with that workspace (the primary key shown alongside the workspace ID in Step 3).

- Content: users can select to integrate asset data, attack surface insights, or both datasets.

- Frequency: select the frequency that the Defender EASM connection sends updated data to the tool of your choice. Available options are daily, weekly, and monthly.
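Why does the form need both a workspace ID and a key? Log Analytics uses them to authenticate writes. How Defender EASM uses them internally isn't described here, so as a hedged illustration only, the sketch below shows the shared-key request signing of Azure Monitor's legacy HTTP Data Collector API (now superseded by the Logs Ingestion API). It builds the URL and headers without sending anything; the log type name in any call you make is your own choice.

```python
import base64
import hashlib
import hmac
from datetime import datetime, timezone


def build_signature(workspace_id: str, shared_key: str, date: str, content_length: int) -> str:
    """Return the SharedKey Authorization value used by the legacy HTTP Data Collector API."""
    string_to_hash = f"POST\n{content_length}\napplication/json\nx-ms-date:{date}\n/api/logs"
    decoded_key = base64.b64decode(shared_key)  # the primary key is base64-encoded
    digest = hmac.new(decoded_key, string_to_hash.encode("utf-8"), hashlib.sha256).digest()
    return f"SharedKey {workspace_id}:{base64.b64encode(digest).decode('utf-8')}"


def build_request(workspace_id: str, shared_key: str, log_type: str, body: bytes):
    """Return the (url, headers) pair for posting a JSON body to a custom log table."""
    date = datetime.now(timezone.utc).strftime("%a, %d %b %Y %H:%M:%S GMT")  # RFC 1123 date
    headers = {
        "Content-Type": "application/json",
        "Log-Type": log_type,  # records land in a custom table named <log_type>_CL
        "x-ms-date": date,
        "Authorization": build_signature(workspace_id, shared_key, date, len(body)),
    }
    url = f"https://{workspace_id}.ods.opinsights.azure.com/api/logs?api-version=2016-04-01"
    return url, headers
```

The key never travels with the request; only the HMAC-SHA256 signature over the method, length, content type, date, and resource does, which is why the key must be kept secret.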

Azure Data Explorer:

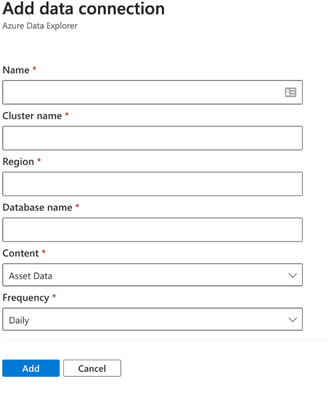

Adding an Azure Data Explorer connection is covered below.

A configuration pane opens on the right-hand side of the Data Connections screen, as shown in the screenshot. The following fields are required:

- Name: enter a name for this data connection.

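- Cluster name: the name of the Azure Data Explorer cluster that will ingest the data.

- Region: the region associated with the Azure Data Explorer cluster.

- Database: the Azure Data Explorer database you created for Defender EASM data.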

- Content: users can select to integrate asset data, attack surface insights, or both datasets.

- Frequency: select the frequency that the Defender EASM connection sends updated data to the tool of your choice. Available options are daily, weekly, and monthly.
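The Cluster name and Region fields together identify the cluster's endpoints. Public-cloud Azure Data Explorer clusters follow the pattern `https://<cluster>.<region>.kusto.windows.net`, with a separate `ingest-` endpoint for ingestion. The sketch below derives both, assuming the public Azure cloud (sovereign clouds use other suffixes); whether Defender EASM builds its URIs exactly this way is an assumption.

```python
def cluster_uris(cluster_name: str, region: str) -> dict:
    """Derive the query and ingestion endpoints from a cluster name and region (public cloud)."""
    # Region display names like "West Europe" map to short names like "westeurope".
    name = cluster_name.strip().lower()
    short_region = region.strip().lower().replace(" ", "")
    base = f"{name}.{short_region}.kusto.windows.net"
    return {"query": f"https://{base}", "ingest": f"https://ingest-{base}"}


if __name__ == "__main__":
    print(cluster_uris("MyEasmCluster", "West Europe"))
```

A quick way to confirm the values you enter in the form is to open the query URI in the Azure Data Explorer web UI.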

Step 5: View data and gain security insights

To view the ingested Defender EASM asset and attack surface insight data, use the query editor, which you open by selecting the "Logs" option from the left-hand menu of the Log Analytics workspace you created earlier. These tables are refreshed at the frequency set in the data connection configuration.

Extending Defender EASM asset and insight data into Azure ecosystem tools like Log Analytics and Data Explorer through these two new data connectors lets customers build contextualized data views that can be operationalized in existing workflows, and gives analysts the tools to investigate and develop new methods of applied attack surface management.


by Contributed | Sep 18, 2023 | Technology


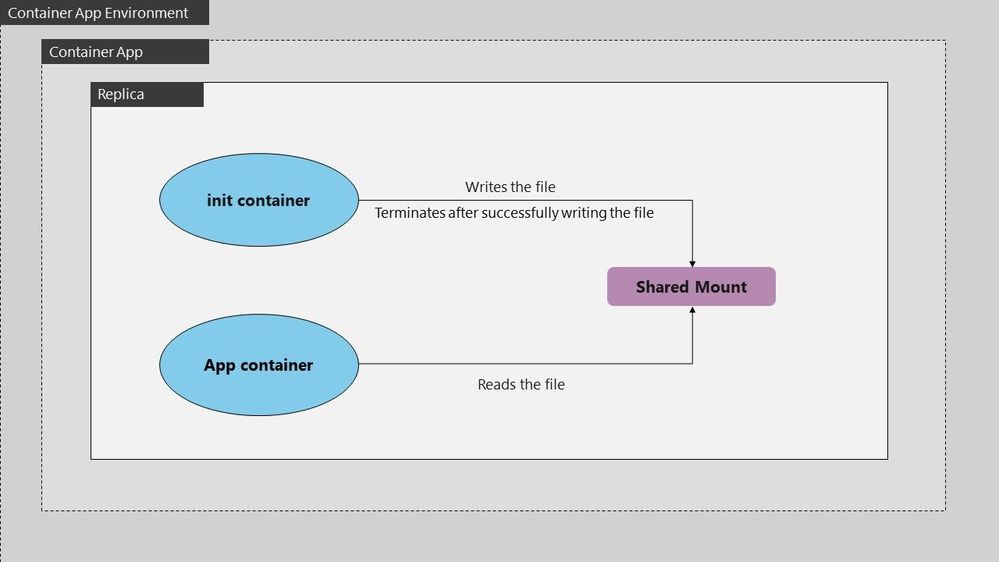

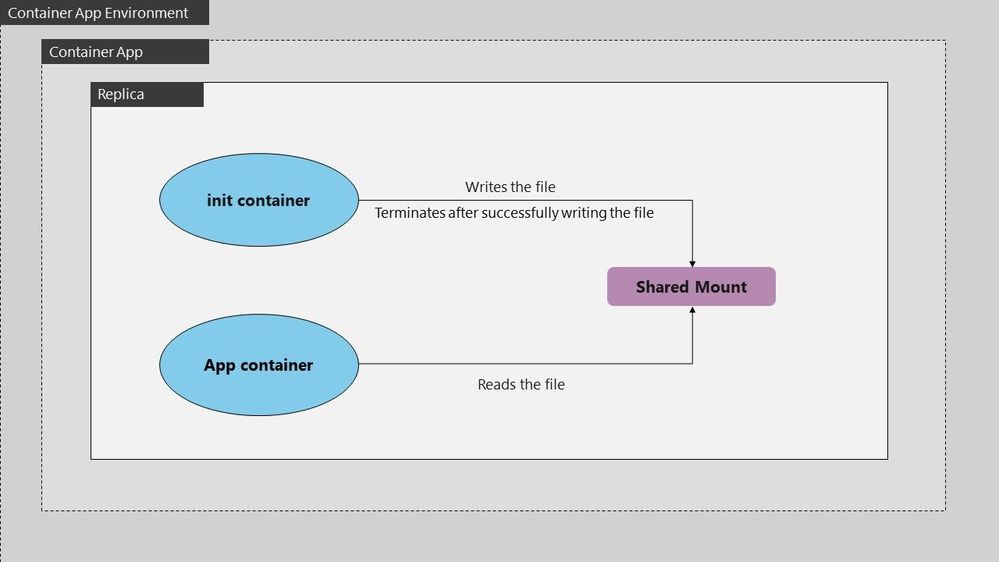

In some scenarios, you might need to preprocess files before your application uses them. For instance, suppose you're deploying a machine learning model that relies on precomputed data files. An init container can download, extract, or preprocess these files so they are ready for the main application container. This approach simplifies deployment and ensures that your application always has access to the required data.

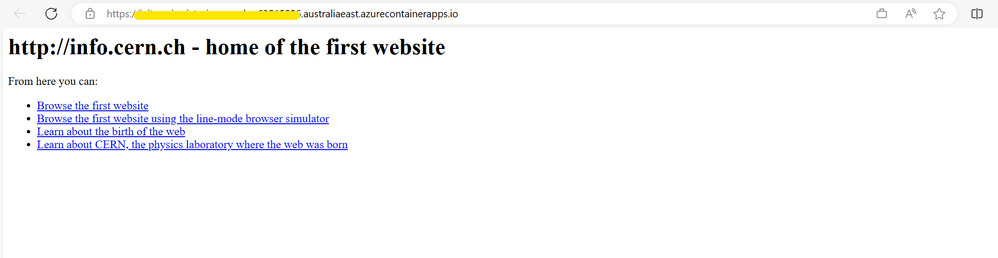

The example below defines a simple pod with an init container that downloads a file from a remote URL into a file share shared by the init container and the main app container. The main app container runs a php-apache image and serves the landing page from the downloaded index.php file.

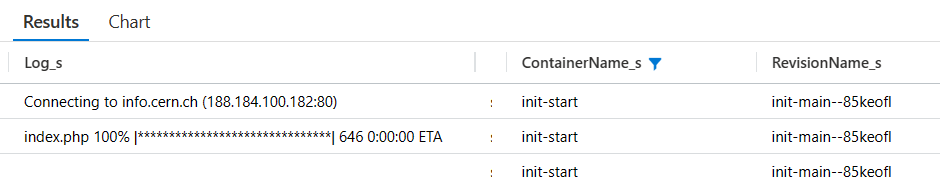

The init container mounts the shared volume at /mydir, and the main application container mounts the same volume at /var/www/html. The init container runs the following command to download the file and then terminates: wget -O /mydir/index.php http://info.cern.ch.
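The screenshots configure this setup through a portal. For readers on plain Kubernetes, here is a minimal sketch of an equivalent Pod manifest, built as a Python dict and printed as JSON (which `kubectl apply -f` accepts). Assumptions: the pod and container names are hypothetical, `php:8.2-apache` is an assumed tag of the official php image, and an `emptyDir` volume named init stands in for the file share.

```python
import json


def build_pod_manifest(shared_volume: str = "init") -> dict:
    """Pod whose init container fills a shared volume before the web container starts."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "init-demo"},  # hypothetical pod name
        "spec": {
            # Init containers run to completion, in order, before any app container starts.
            "initContainers": [{
                "name": "install",
                "image": "busybox:1.28",
                "command": ["wget", "-O", "/mydir/index.php", "http://info.cern.ch"],
                "volumeMounts": [{"name": shared_volume, "mountPath": "/mydir"}],
            }],
            "containers": [{
                "name": "web",
                "image": "php:8.2-apache",  # assumed tag for the php-apache image
                "ports": [{"containerPort": 80}],
                "volumeMounts": [{"name": shared_volume, "mountPath": "/var/www/html"}],
            }],
            # emptyDir stands in for the file share; it lives as long as the pod does.
            "volumes": [{"name": shared_volume, "emptyDir": {}}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_pod_manifest(), indent=2))
```

Usage: save the output with `python make_pod.py > pod.json`, then run `kubectl apply -f pod.json`. Because both containers mount the same volume, the file written to /mydir/index.php appears to Apache as /var/www/html/index.php.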

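Configuration and Dockerfile for the init container:

- Dockerfile for the init container, which downloads an index.php file into /mydir:

```dockerfile
# Minimal base image that ships with wget
FROM busybox:1.28

WORKDIR /

# Download the landing page into the shared volume mounted at /mydir, then exit
ENTRYPOINT ["wget", "-O", "/mydir/index.php", "http://info.cern.ch"]
```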

Configuration for main app container:

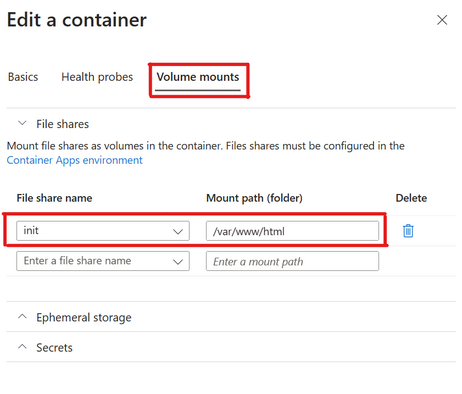

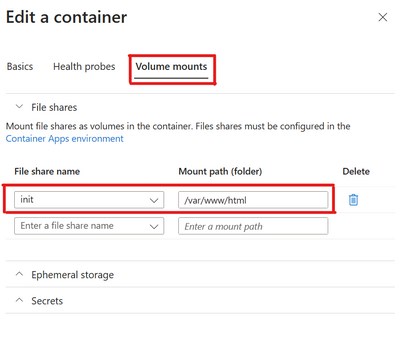

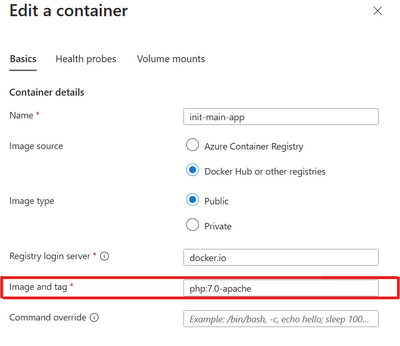

- Create the main app container, mounting the file share named init at path /var/www/html.

- Main app container configuration, which uses the php-apache image and serves the index.php file from DocumentRoot /var/www/html.


by Contributed | Sep 16, 2023 | Technology


Picture showing Intro to Copilots session banner


Power Platform AI Global Hack. Please visit here for more details: http://aka.ms/hacktogether/powerplatform-ai

Build, innovate, and HackTogether! It's time to get started building solutions with AI in the Power Platform! HackTogether is your playground for experimenting with the new Copilot and AI features in the Power Platform. With mentorship from Microsoft experts and access to the latest tech, you will learn how to build solutions in Power Platform by leveraging AI. The possibilities are endless for what you can create… plus you can submit your hack for a chance to win exciting prizes!

WHAT IS THE SESSION ABOUT?

This is the first full week of Hack Together: Power Platform AI Global Hack! Join the hacking: https://aka.ms/hacktogether/powerplatform-ai

In this session you’ll learn about:

- Power Apps Copilots for building and editing desktop and mobile applications

- Power Automate Copilot for creating and editing automations

- Power Virtual Agents Copilot and conversation booster for creating intelligent chatbots

- Power Pages Copilot for creating business websites

You’ll get a high level overview of what you can do with these Copilots and get live demos of them in action! Please visit here for more details: https://aka.ms/hacktogether/powerplatform-ai

WHO IS IT AIMED AT?

This session is for anyone who likes to get into the weeds building apps and automations and is interested in learning a skill that can accelerate their career. If you're interested in how AI can help you build solutions faster and more intelligently in the Power Platform, this session is for you!

WHY SHOULD MEMBERS ATTEND?

Build, innovate, and HackTogether! It’s time to get started building solutions with AI in the Power Platform! HackTogether is your playground for experimenting with the new Copilot and AI features in the Power Platform. With mentorship from Microsoft experts and access to the latest tech, you will learn how to build solutions in Power Platform by leveraging AI. The possibilities are endless for what you can create… plus you can submit your hack for a chance to win exciting prizes!

MORE LEARNING/PREREQUISITES:

To follow along, you need to access the onboarding resources here: https://aka.ms/hacktogether/powerplatform-ai

WATCH THIS VIDEO FOR PREREQUISITES

– You will need to create a free Microsoft 365 Developer Program account: https://aka.ms/M365Developers and a free Power Platform Developer Account: https://aka.ms/PowerAppsDevPlan

To view all the required environment setup, click here: https://aka.ms/hacktogether/powerplatform-ai and set up a Microsoft 365 Developer Program account and a Power Platform Developer account. This gives you access to all the services and licenses you need to follow along and build your own solution.

SPEAKERS:

April Dunnam – https://developer.microsoft.com/en-us/advocates/april-dunnam

by Contributed | Sep 15, 2023 | Technology


We are very happy to announce the private preview of Data Virtualization in Azure SQL Database. Data Virtualization in Azure SQL Database enables working with CSV, Parquet, and Delta files stored on Azure Storage Account v2 (Azure Blob Storage) and Azure Data Lake Storage Gen2. Azure SQL Database will now support: CREATE EXTERNAL TABLE (CET), CREATE EXTERNAL TABLE AS SELECT (CETAS) as well as enhanced OPENROWSET capabilities to work with the new file formats.

The capabilities available in private preview include:

Metadata functions:

Just like SQL Server 2022 (Data Virtualization with PolyBase for SQL Server 2022 – Microsoft SQL Server Blog) and Azure SQL Managed Instance (Data virtualization now generally available in Azure SQL Managed Instance – Microsoft Community Hub), Data Virtualization in Azure SQL Database supports updated metadata functions, a wildcard search mechanism, and procedures that let users query across different folders and leverage partition pruning, including:

- filename()

- filepath()

- sp_describe_first_result_set
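To make the wildcard semantics concrete: filepath(n) returns the part of a file's path matched by the nth `*` in the BULK pattern, and filename() returns the file name, which is what lets a WHERE clause on filepath(n) skip whole folders. The Python sketch below emulates that behavior; it is not the engine's implementation, it assumes `*` matches within a single path segment, the file names are hypothetical, and unlike the real filepath() it returns the relative path rather than the full URI when called without an argument.

```python
from __future__ import annotations

import re


def wildcard_regex(pattern: str) -> re.Pattern:
    """Turn a BULK path pattern into a regex with one capture group per '*' wildcard."""
    literal_parts = pattern.split("*")
    # Each '*' matches within a single folder or file name (no '/').
    return re.compile("^" + "([^/]*)".join(re.escape(p) for p in literal_parts) + "$")


def filepath(pattern: str, path: str, n: int | None = None):
    """Mimic filepath(): the whole path when n is None, else the text matched by the nth '*'."""
    match = wildcard_regex(pattern).match(path)
    if match is None:
        return None  # the file is not covered by the pattern
    return path if n is None else match.group(n)


def filename(path: str) -> str:
    """Mimic filename(): the last path segment."""
    return path.rsplit("/", 1)[-1]


def prune(pattern: str, paths: list[str], n: int, value: str) -> list[str]:
    """Keep only files whose nth wildcard equals value, like WHERE filepath(n) = value."""
    return [p for p in paths if filepath(pattern, p, n) == value]
```

For example, with the pattern 'yellow/puYear=*/puMonth=*/*.parquet', a hypothetical file 'yellow/puYear=2019/puMonth=3/part-00000.parquet' has filepath(1) = '2019' and filepath(2) = '3', so a filter on filepath(1) = '2020' never needs to open it.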

Benefits:

Major benefits of Data Virtualization in Azure SQL Database are:

- No data movement: Access real-time data where it is.

- T-SQL language: Ability to leverage all the benefits of the T-SQL language, its commands, enhancements, and familiarity.

- One source for all your data: Users and applications can use Azure SQL Database as a data hub, accessing all the required data in a single environment.

- Security: Leverage SQL security capabilities to simplify permissions, credential management, and control.

- Export: Easily export data as CSV or Parquet to any Azure Storage location, either to empower other applications or reduce cost.

Getting started:

For simplicity, we'll use the publicly available NYC Taxi dataset (NYC Taxi and Limousine yellow dataset – Azure Open Datasets | Microsoft Learn), which allows anonymous access.

```sql
-- Create data source for NYC public dataset:
CREATE EXTERNAL DATA SOURCE NYCTaxiExternalDataSource
WITH (LOCATION = 'abs://nyctlc@azureopendatastorage.blob.core.windows.net');

-- Query all files with .parquet extension in folders matching name pattern:
SELECT TOP 1000 *
FROM OPENROWSET(
    BULK 'yellow/puYear=*/puMonth=*/*.parquet',
    DATA_SOURCE = 'NYCTaxiExternalDataSource',
    FORMAT = 'parquet'
) AS filerows;

-- Schema discovery:
EXEC sp_describe_first_result_set N'
    SELECT vendorID, tpepPickupDateTime, passengerCount
    FROM OPENROWSET(
        BULK ''yellow/*/*/*.parquet'',
        DATA_SOURCE = ''NYCTaxiExternalDataSource'',
        FORMAT = ''parquet''
    ) AS nyc';

-- Query the top 100 rows and project folder, file name, and full path information for each row:
SELECT TOP 100
    filerows.filepath(1) AS [Year_Folder],
    filerows.filepath(2) AS [Month_Folder],
    filerows.filename() AS [File_name],
    filerows.filepath() AS [Full_Path]
FROM OPENROWSET(
    BULK 'yellow/puYear=*/puMonth=*/*.parquet',
    DATA_SOURCE = 'NYCTaxiExternalDataSource',
    FORMAT = 'parquet'
) AS filerows;

-- Create external file format for Parquet:
CREATE EXTERNAL FILE FORMAT DemoFileFormat
WITH (FORMAT_TYPE = PARQUET);

-- Create external table:
CREATE EXTERNAL TABLE tbl_TaxiRides (
    vendorID VARCHAR(100) COLLATE Latin1_General_BIN2,
    tpepPickupDateTime DATETIME2,
    tpepDropoffDateTime DATETIME2,
    passengerCount INT,
    tripDistance FLOAT,
    puLocationId VARCHAR(8000),
    doLocationId VARCHAR(8000),
    startLon FLOAT,
    startLat FLOAT,
    endLon FLOAT,
    endLat FLOAT,
    rateCodeId SMALLINT,
    storeAndFwdFlag VARCHAR(8000),
    paymentType VARCHAR(8000),
    fareAmount FLOAT,
    extra FLOAT,
    mtaTax FLOAT,
    improvementSurcharge VARCHAR(8000),
    tipAmount FLOAT,
    tollsAmount FLOAT,
    totalAmount FLOAT
)
WITH (
    LOCATION = 'yellow/puYear=*/puMonth=*/*.parquet',
    DATA_SOURCE = NYCTaxiExternalDataSource,
    FILE_FORMAT = DemoFileFormat
);

-- Query the external table:
SELECT TOP 1000 * FROM tbl_TaxiRides;
```

Private Preview Sign-up form:

Data Virtualization in Azure SQL Database is in active development. Private preview users will help shape the future of the feature through regular interactions with the Data Virtualization product team. If you want to be part of the private preview, a sign-up form is required and can be found here.

by Contributed | Sep 14, 2023 | Technology


Our Microsoft Learn community, along with the rest of the world, has experienced a time of great change over the last few years: the pandemic, a sudden shift to remote work, economic volatility, and huge leaps in the capabilities and implementation of AI, to name just a few. Times of change like these cause us all to reevaluate our priorities, how we operate, and what's most important across all areas of our lives. Careers are no small part of that equation. We must continuously adapt to these new realities, whether we're employees, employers, job seekers, educators, or leaders of organizations. Because of that impact, Microsoft Learn remains committed to leading the way with resources that equip our learners and customers with the technical skills not only to meet the challenges of this ever-changing landscape but to thrive through them.

What does ‘skills-first’ mean and why are we talking about it?

We’re always on the lookout for emerging trends so that we can bring you insights to help you succeed. The latest and most significant of these trends is a direct response to the massive global shifts we alluded to above, what the World Economic Forum refers to as “an accelerated shift towards a skills-based operating model for talent.” Simply put: whether you’re focused on your own career or on finding the right talent, a skills-centric mentality is becoming more essential.

How does this impact you? There are all sorts of reasons to engage with skilling content—you might have one or more of the following goals (featuring some great Microsoft Learn blogs on the subject!):

Whatever your objective, knowing how to find and feature the right skills is a game-changer, and we want to be part of your journey.

What to expect from Microsoft Learn through the end of October

Our ‘Skill-it-forward’ content throughout September and October will be focused on understanding the skills-first trend and why it’s important. We’ll also be highlighting the tools and resources you need to build your technical skills and expertise. You can expect the inside scoop about what’s new with Microsoft Learn (hint: we might have a few announcements to make…). We’ll offer resources across Microsoft Learn and beyond to help you not only navigate this skills-centric shift but use it to achieve your goals. And of course, we can’t leave out Tips & Tricks – we always have a few up our sleeve!

Make sure you’re following us on Twitter and LinkedIn, and are subscribed to “The Spark,” our recently enhanced LinkedIn newsletter so you don’t miss any of the exciting stuff we have planned!
