by Contributed | Oct 13, 2023 | Technology

This article is contributed. See the original author and article here.

The 23rd cumulative update release for SQL Server 2019 RTM is now available for download at the Microsoft Downloads site. Please note that registration is no longer required to download Cumulative updates.

To learn more about the release or servicing model, please visit:

Starting with SQL Server 2017, we adopted a new modern servicing model. Please refer to our blog for more details on the Modern Servicing Model for SQL Server.

by Contributed | Oct 12, 2023 | Technology


Bad actors can exploit a new security vulnerability to initiate a DDoS attack on a customer’s infrastructure. The attack targets servers implementing the HTTP/2 protocol; Windows, .NET Kestrel, and HTTP.sys (IIS) web servers are among those impacted. Azure Guest Patching Service keeps customers secure by ensuring the latest security and critical updates are applied using Safe Deployment Practices on their VMs and VM Scale Sets.

As the latest security fixes are released for Windows and Linux distributions, Azure will apply them for customers who have opted in to either Auto OS Image Upgrades or Auto Guest Patching. By opting in to the auto update mechanisms through Azure, customers can remain proactive against security issues rather than reacting to attackers. Customers not using the auto update capabilities through Azure Guest Patching Service should update their fleet with the latest security updates (KB5031364 for Windows and the fix for CVE-2023-44487 for open-source software distributions).

Without the latest security updates, organizations risk exposing their systems and data to potential security threats and web attacks. It is important for organizations to plan for this update to avoid any disruption to their business operations.

Microsoft recommends enabling Azure Web Application Firewall (WAF) on Azure Front Door or Azure Application Gateway to further improve security posture. WAF rate limiting rules are effective in providing additional protection against these attacks. See additional recommendations from Microsoft Security Response Center for this vulnerability.
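To make the idea of a rate limiting rule concrete, here is a minimal Python sketch of a sliding-window limiter. It is an illustration only, not how Azure WAF is implemented or configured: the class name, the `limit` and `window_seconds` parameters, and the use of a client identifier as the grouping key are all assumptions made for this example.

```python
import time
from collections import defaultdict, deque


class SlidingWindowRateLimiter:
    """Hypothetical limiter: at most `limit` requests per client per window."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the behavior can be tested
        self._hits = defaultdict(deque)  # client id -> request timestamps

    def allow(self, client_id):
        """Return True if the request is within the limit, else False."""
        now = self.clock()
        hits = self._hits[client_id]
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.limit:
            return False  # over the threshold: the request would be blocked
        hits.append(now)
        return True
```

A client that floods the server with requests quickly exhausts its allowance and is rejected until older requests age out of the window, while other clients are unaffected because each one is counted separately.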

Enabling Auto Update Features: Azure recommends the following features to ensure VM and VM Scale Sets are secured with the latest security and critical updates in a safe manner:

Auto OS Image Upgrades: Azure replaces the OS disk with the latest OS Image. Supports rollback and rolls the upgrade across scale sets throughout all the regions.

Auto Guest Patching: Azure applies the latest security and critical updates to an asset and rolls the update across the fleet throughout all the regions.

The recent announcement of a new security issue is an important reminder for organizations to stay current with their software solutions to avoid any security or performance issues. Azure continues to keep customers secure by rolling out the latest security updates through multiple mechanisms for VM and VM Scale Sets in a safe manner. Customers are recommended to leverage the auto update capabilities in Azure to ensure they remain proactive against bad actors.

by Contributed | Oct 11, 2023 | Technology


Welcome to the fall! This month’s Microsoft Syntex update is gearing up to be a great one in the world of content processing. We have updates on Syntex taxonomy tagging and image tagging; a set of Syntex capabilities coming to preview for pay-as-you-go users; Syntex optical character recognition (OCR) expanding to include PDF and TIFF support; and lastly, both Syntex OCR and Syntex structured document processing moving to general availability.

Syntex taxonomy tagging and Syntex image tagging in general availability

In our previous blog post, we shared that Syntex taxonomy tagging and image tagging were rolling into general availability. We’re happy to share that both services have now completed rollout and are generally available to all Syntex pay-as-you-go users.

As a refresher, Syntex taxonomy tagging uses AI to help you label and organize documents by automatically tagging them with descriptive keywords, based on your taxonomy defined in SharePoint. Once you apply a taxonomy column and enable taxonomy tagging, documents are automatically tagged with keywords from your term store to help with searching, sorting, filtering, and more. This reduces manual work and makes it faster and more efficient to categorize, find, and manage files in your document libraries. For details, see Overview of taxonomy tagging in Microsoft Syntex on Microsoft Learn.

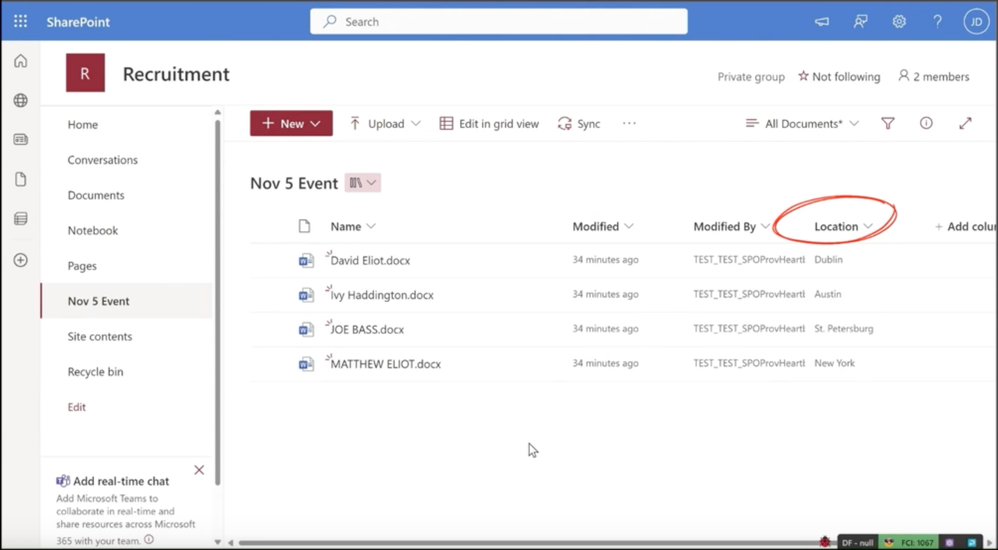

Taxonomy Tagging – the location column auto-populates based on your term store in this example

Syntex image tagging is now also generally available. Image tagging is an AI-powered service that helps you label and organize images by automatically tagging them with descriptive keywords. These tags are stored as metadata to optimize searching, sorting, filtering, and managing your images. With this Syntex service, it’s much faster to categorize and search for the specific images you need. For details, see Overview of enhanced image tagging in Microsoft Syntex on Microsoft Learn.

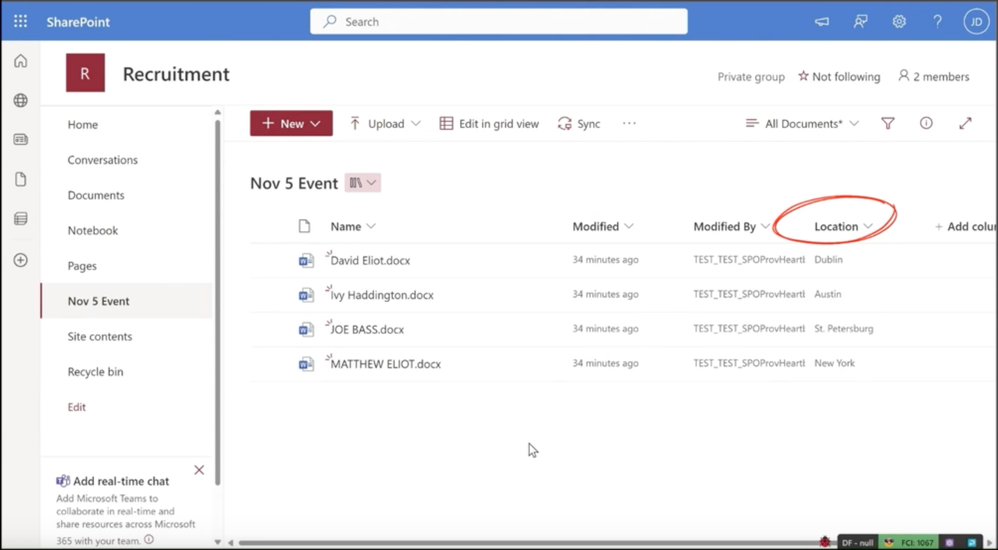

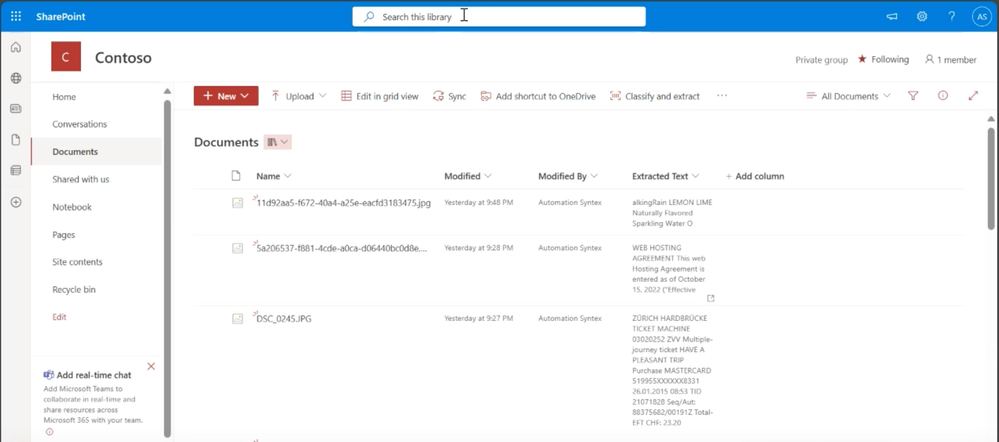

Image Tagging – images are auto-tagged with descriptive keywords

New features in preview for Syntex pay-as-you-go users

We’re excited to share that, for a limited time, Syntex pay-as-you-go users now get to use all the Syntex features previously only available to customers with the SharePoint Syntex seat license. If you’re not yet a Syntex customer, now is a particularly great time to give it a go. These services will be available as a preview through June 30, 2024.

1. Content query – an advanced, powerful search with custom metadata in a form-based interface

2. Universal annotation – add ink and highlights to additional file types like PDF & TIFF supported by our file viewer

3. Accelerators – preconfigured templates that leverage Syntex capabilities in an end-to-end solution for common scenarios like contract management and accounts payable

4. Taxonomy services – admin reporting on term set usage, easy import from SKOS-formatted taxonomies, and the ability to push a content type to a hub

5. Content processing rules – lightweight automation for common operations such as moving or copying a file, and setting a content type from the file name or path in SharePoint

6. PDF merge/extract – combine two or more PDF files into a new PDF file, or extract pages from one PDF into a new one

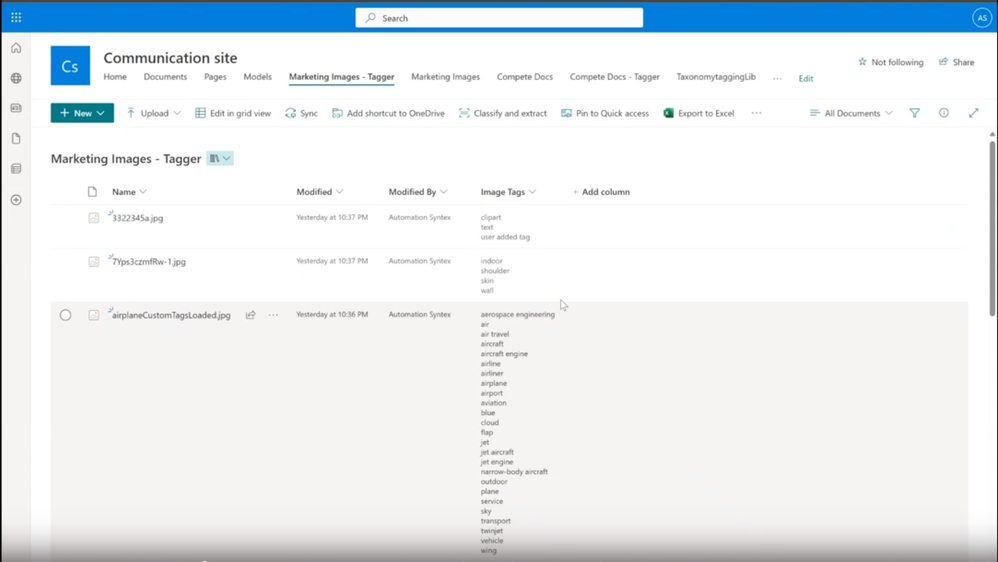

Site accelerator – preconfigured site templates for Accounts Payable

For Syntex pay-as-you-go customers, these capabilities are available without any action or setup on your part and at no additional charge. For details, see Microsoft Syntex features limited time license on Microsoft Learn.

Syntex OCR and structured document processing will be generally available this month

Lastly, Syntex optical character recognition (OCR), which was previously in public preview, will be generally available this month! In images containing text – such as screenshots, scanned documents, or photographs – Syntex OCR automatically extracts the printed or handwritten text and makes it discoverable, searchable, and indexable.

It can be used for image-only files, now including PDF and TIFF as mentioned in the introduction, in OneDrive, SharePoint, Exchange, Windows devices and Teams messages. Searching for images is improved thanks to OCR, and IT admins can better secure images across OneDrive, SharePoint, Exchange, Teams and Windows devices with data loss prevention (DLP) policies.

Optical Character Recognition (OCR) auto-extracts text from images

You will also be able to use both the Syntex structured and freeform document processing features later this month when they become generally available as a new pay-as-you-go meter called “Structured document processing”. Unlike in the past, you will be able to use these services with your Azure subscription – no per-user license required, no AI Builder credits needed (but if you want to use AI Builder credits, we will still support that as well). Microsoft 365 roadmap ID 167309. Get started here.

Stay connected

And there you have it, lots of updates on Syntex services that will help your organization manage your content and improve content discovery, with less redundancy and greater efficiency. To get the latest on Syntex, join our mailing list for updates, and register for the upcoming October 18th Syntex Community Call.

Be sure to also connect with us at Microsoft Ignite, November 14-17, 2023, in Seattle or virtually!

by Contributed | Oct 10, 2023 | Technology


Author: Reems Thomas Kottackal, Product Manager

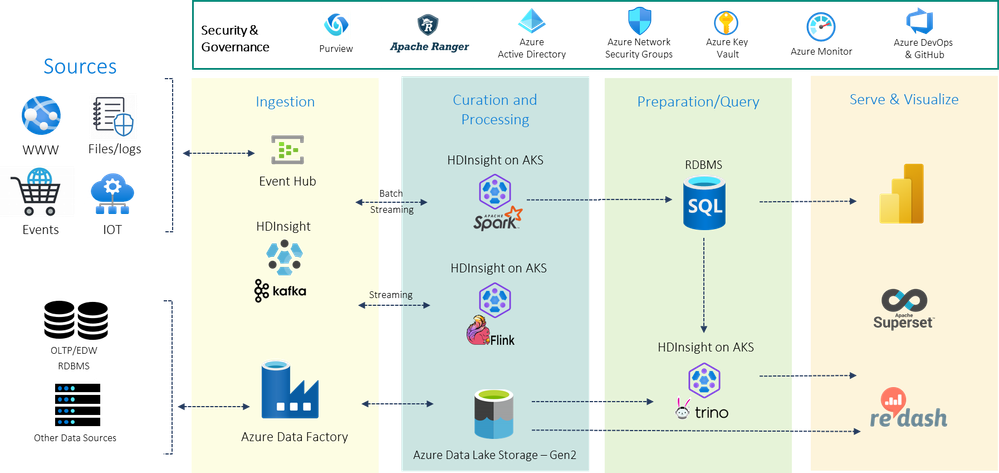

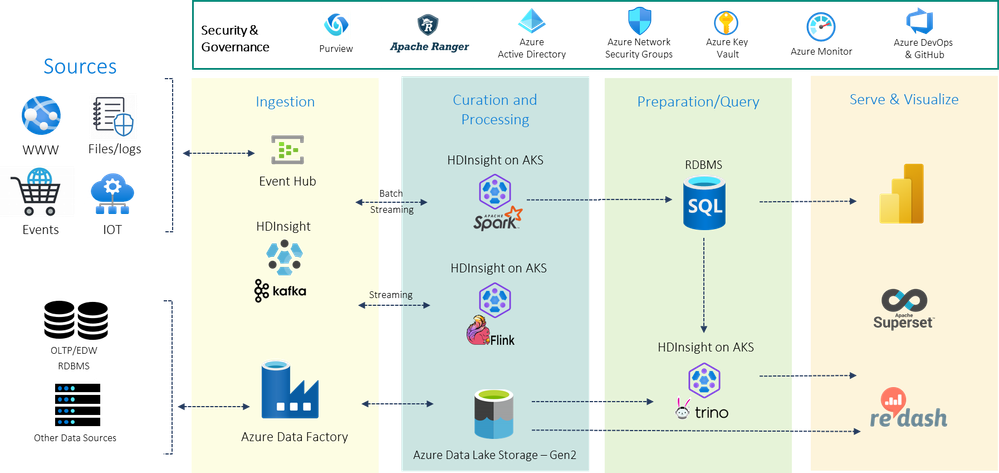

HDInsight on AKS is a modern, reliable, secure, and fully managed Platform as a Service (PaaS) that runs on Azure Kubernetes Service (AKS). HDInsight on AKS allows an enterprise to deploy popular open-source analytics workloads like Apache Spark, Apache Flink, and Trino without the overhead of managing and monitoring containers.

You can build end-to-end, petabyte-scale Big Data applications spanning event storage using HDInsight Kafka, streaming through Apache Flink, data engineering and machine learning using Apache Spark, and Trino’s powerful query engine, in combination with Azure analytics services like Azure Data Factory, Azure Event Hubs, Power BI, and Azure Data Lake Storage.

HDInsight on AKS can connect seamlessly with HDInsight. You can use the cluster types you need in a hybrid model and interoperate with HDInsight cluster types using the same storage and metastore across both offerings.

The following diagram depicts an example of an end-to-end analytics landscape realized through HDInsight workloads.

We are super excited to get you started. Let’s get to how.

by Contributed | Oct 10, 2023 | AI, Business, Microsoft 365, Microsoft Graph, Technology, Viva Insights, Viva Learning


We’re excited to announce a new AI-powered Skills in Viva service that will help organizations understand workforce skills and gaps, and deliver personalized skills-based experiences throughout Microsoft 365 and Viva applications for employees, business leaders, and HR.

The post Introducing Skills in Microsoft Viva, a new AI-powered service to grow and manage talent appeared first on Microsoft 365 Blog.

