by Contributed | Nov 15, 2023 | Technology

This article is contributed. See the original author and article here.

By Quentin Miller

We are excited to announce the public preview of Liveness Detection, an addition to the existing Azure AI Face API service. Facial recognition has long been used to verify a user’s identity for device and online account login because it is convenient and efficient. However, these systems face escalating challenges as malicious actors persist in trying to deceive them through various spoofing techniques. This issue is expected to intensify with the emergence of generative AI such as DALL-E and ChatGPT. Many online services, such as LinkedIn and Microsoft Entra, now support “Verified Identities” that attest that a real human is behind the identity.

Face Liveness Detection

Liveness detection aims to verify that the system engages with a physically present, living individual during the verification process. This is achieved by differentiating between a real (live) and fake (spoof) representation which may include photographs, videos, masks, or other means to mimic a real person.

Face Liveness Detection has been an integrated part of Windows Hello Face for nearly a decade. “Every day, people around the world get access to their device in a convenient, personal and secure way using Windows Hello face recognition,” said Katharine Holdsworth, Principal Group Program Manager, Windows Security. The new Face API liveness detection combines a mobile SDK with an Azure AI service. It conforms to the ISO/IEC 30107-3 PAD (Presentation Attack Detection) standard, as validated by iBeta Level 1 and Level 2 conformance testing. It successfully defends against a wide range of spoof types, from paper printouts and 2D/3D masks to spoof presentations on phones and laptops. Liveness detection is an active area of research, and improvements will continue to be rolled out to both the client and the service components as the overall solution is hardened against new and increasingly sophisticated attacks.

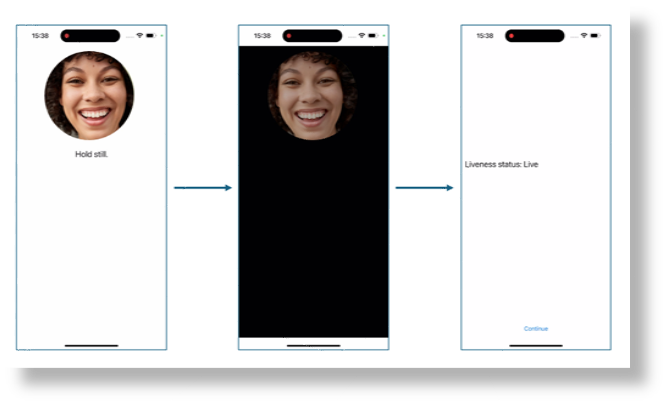

A simple illustration of the user experience from the SDK sample is shown below, where the screen background goes from white to black before the final liveness detection result is shown on the last page.

The following video demonstrates how the product works under different spoof conditions.

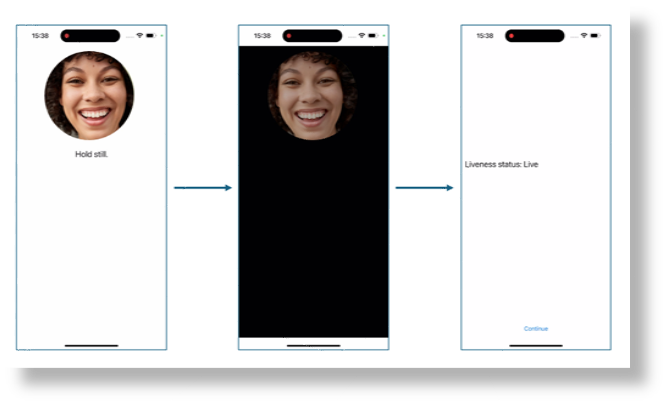

While blocking spoof attacks is the primary focus of the liveness solution, paramount importance is also given to allowing real users to successfully pass the liveness check with low friction. Additionally, the liveness solution complies with the comprehensive responsible AI and data privacy standards to ensure fair usage across demographics around the world through extensive fairness testing.

For more information, please visit: Empowering responsible AI practices | Microsoft AI.

Implementation

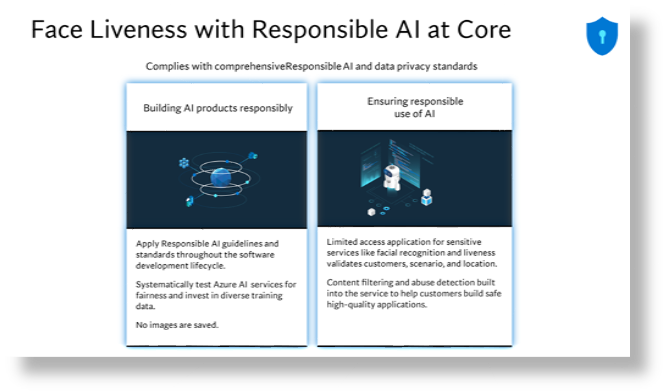

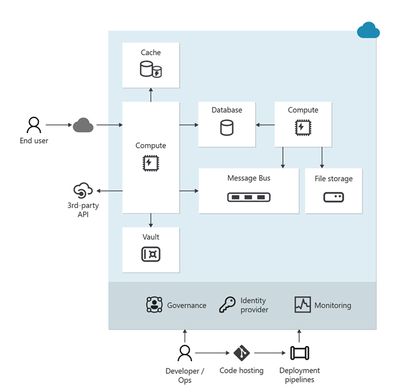

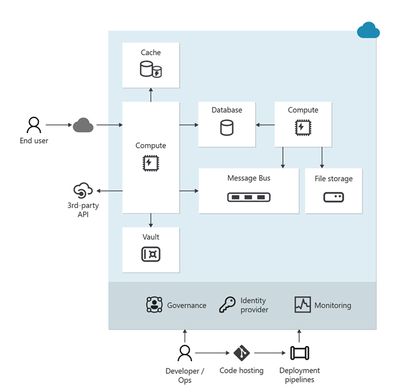

Integrating the overall liveness solution involves two components: a mobile application and an app server/orchestrator.

Prepare mobile application to perform liveness detection

The newly released Azure AI Vision Face SDK for mobile (iOS and Android) makes it easy to integrate the liveness solution into front-end applications. To get started, you need to apply for the Face API Limited Access Features to get access to the SDK. For more information, see the Face Limited Access page.

Once you have access to the SDK, follow the instructions and samples included in the github-azure-ai-vision-sdk to integrate the UI and the code needed into your native mobile application. The SDK supports both Java/Kotlin for Android and Swift for iOS mobile applications.

Once integrated into your application, the SDK starts the camera, guides the end user to adjust their position, composes the liveness payload, and then calls the Face API to process that payload.

Orchestrating the liveness solution

The high-level steps involved in the orchestration are illustrated below:

For more details, refer to the tutorial.
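As a rough sketch of how the two components might divide the work, the Python below simulates a session-based flow against an in-memory stand-in. It assumes the app server creates a liveness session, hands only a short-lived token to the mobile app, and later reads the result itself. The class `FakeFaceService`, its method names, and the `"realface"`/`"spoofface"` decision strings are all hypothetical placeholders for illustration, not the real Face API or SDK. The tutorial remains the authoritative source for the actual calls.

```python
import secrets
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class LivenessSession:
    session_id: str                 # kept on the app server
    auth_token: str                 # short-lived token handed to the mobile app
    decision: Optional[str] = None  # set once the client has submitted a payload


class FakeFaceService:
    """In-memory stand-in for the liveness service (hypothetical, not the real API)."""

    def __init__(self) -> None:
        self._sessions: Dict[str, LivenessSession] = {}

    def create_session(self) -> LivenessSession:
        session = LivenessSession(secrets.token_hex(8), secrets.token_hex(16))
        self._sessions[session.session_id] = session
        return session

    def submit_payload(self, auth_token: str, looks_live: bool) -> None:
        # In a real deployment the mobile SDK would send the captured payload;
        # here a boolean stands in for the outcome of the service's analysis.
        for session in self._sessions.values():
            if session.auth_token == auth_token:
                session.decision = "realface" if looks_live else "spoofface"
                return
        raise PermissionError("unknown session token")

    def get_result(self, session_id: str) -> Optional[str]:
        return self._sessions[session_id].decision


def run_liveness_flow(service: FakeFaceService, looks_live: bool) -> Optional[str]:
    # 1. The app server creates a session with the service.
    session = service.create_session()
    # 2. Only the session token is passed to the mobile app.
    token_for_device = session.auth_token
    # 3. The mobile app (via the SDK) performs the check and submits the payload.
    service.submit_payload(token_for_device, looks_live)
    # 4. The app server, not the device, reads the final decision.
    return service.get_result(session.session_id)
```

In this sketch the decision is read on the server side, so the application never has to trust a result reported by the device.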

Face Liveness Detection with Face Verification

Integrating face verification with liveness detection enables biometric verification of a specific person of interest while ensuring an additional layer of assurance that the individual is physically present.

For more details, refer to the tutorial.
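One way an app server might combine the two signals is sketched below. It assumes the service returns a liveness decision together with a verification outcome and a match confidence. The field names, the `"realface"` value, and the 0.8 threshold are illustrative assumptions rather than documented values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LivenessWithVerifyResult:
    liveness_decision: str   # assumed values: "realface" or "spoofface"
    is_identical: bool       # assumed: does the live face match the reference image?
    match_confidence: float  # assumed range: 0.0 to 1.0


def accept_user(result: LivenessWithVerifyResult, min_confidence: float = 0.8) -> bool:
    """Accept only a live person who also matches the reference identity."""
    # A spoofed presentation is rejected however well the face matches.
    if result.liveness_decision != "realface":
        return False
    return result.is_identical and result.match_confidence >= min_confidence
```

Checking liveness first means a high-quality photo of the right person still fails, which is the extra assurance that the combined check is meant to provide.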

by Contributed | Nov 15, 2023 | Business, Microsoft 365, Technology, Work Trend Index

At Microsoft Ignite 2023, we are announcing new innovations across Microsoft Copilot—one copilot experience that runs across all our surfaces, understanding your context on the web, on your PC, and at work to bring the right skills to you when you need them across work and life.

The post Introducing Microsoft Copilot Studio and new features in Copilot for Microsoft 365 appeared first on Microsoft 365 Blog.

Brought to you by Dr. Ware, Microsoft Office 365 Silver Partner, Charleston SC.

by Contributed | Nov 15, 2023 | AI, Azure, Business, Microsoft 365, Technology

At Microsoft Ignite 2023, we’re excited to announce Microsoft Copilot Studio, a low-code tool to customize Microsoft Copilot for Microsoft 365 and build standalone copilots.

The post Announcing Microsoft Copilot Studio: Customize Copilot for Microsoft 365 and build your own standalone copilots appeared first on Microsoft 365 Blog.

by Contributed | Nov 14, 2023 | Technology

I am excited to announce a comprehensive refresh of the Well-Architected Framework for designing and running optimized workloads on Azure. Customers will not only get great, consistent guidance for making architectural trade-offs for their workloads, but they’ll also have much more precise instructions on how to implement this guidance within the context of their organization.

Background

Cloud services have become an essential part of the success of most companies today. The scale and flexibility of the cloud offer organizations the ability to optimize and innovate in ways not previously possible. As organizations continue to expand cloud services as part of their IT strategies, it is important to establish standards that create a culture of excellence that enables teams to fully realize the benefits of the modern technologies available in the cloud.

At Microsoft, we put huge importance on helping customers be successful and publish guidance that teaches every step of the journey and how to establish those standards. For Azure, that collection of adoption and architecture guidance is referred to as Azure Patterns and Practices.

The Patterns and Practices guidance has three main elements:

Each element focuses on different parts of the overall adoption of Azure and speaks to specific audiences, such as WAF and workload teams.

What is a workload?

The term workload in the context of the Well-Architected Framework refers to a collection of application resources, data, and supporting infrastructure that function together towards a defined business goal.

Well-architected is a state that is achieved and maintained through design and continuous improvement. You optimize through a design process that results in an architecture that delivers what the business needs while minimizing risk and expense.

For us, the workload standard of excellence is defined in the Well-Architected Framework – a set of principles, considerations, and trade-offs that cover the core elements of workload architecture. As with all of the Well-Architected Framework content, this guidance is based on proven experience from Microsoft’s customer-facing technical experts. The Well-Architected Framework continues to receive updates from working with customers, partners, and our technical teams.

Today we have published updates across each of the core pillars of WAF, which represent a huge amount of experience and learning from across Microsoft.

The refreshed and expanded Well-Architected Framework brings together guidance to help workload teams design, build, and optimize great workloads in the cloud. It is intended to shape discussions and decisions within workload teams and help create standards that should be applied continuously to all workloads.

Details of the refreshed Well-Architected Framework

Over the past six months, Microsoft’s cloud solution architects refreshed the Well-Architected Framework by compiling the learnings and experience of over 10,000 engagements that had leveraged the WAF and its assessment.

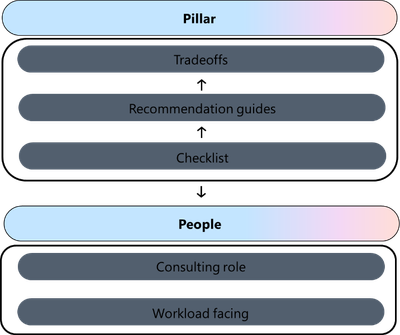

All five pillars of the Well-Architected Framework now follow a common structure that consists exclusively of design principles, design review checklists, trade-offs, recommendation guides, and cloud design patterns.

Design principles. Presents goal-oriented principles that build a foundation for the workload. Each principle includes a set of recommended approaches and the benefits of taking those approaches. The principles for each pillar have changed in terms of content and coverage.

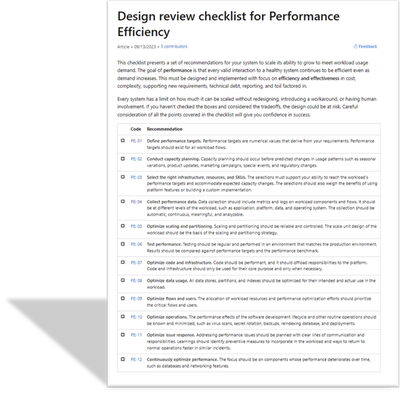

Design review checklists. Lists roughly codified recommendations that drive action. Use the checklists during the design phase of your new workload and to evaluate brownfield workloads.

Trade-offs. Describes trade-offs with other pillars. Many design decisions force a trade-off. It’s vital to understand how achieving the goals of one pillar might make achieving the goals of another pillar more challenging.

Recommendation guides. Every design review checklist recommendation is associated with one or more guides. They explain the key strategies to fulfill that recommendation. They also include how Azure can facilitate workload design to help achieve that recommendation. Some of these guides are new, and others are refreshed versions of guides that cover a similar concept.

The recommendation guides include trade-offs along with risks, each marked with its own icon.

Cloud design patterns. Build your design on proven, common architecture patterns. The Azure Architecture Center maintains the Cloud Design Patterns catalog. Each pillar includes descriptions of the cloud design patterns that are relevant to the goals of the pillar and how they support the pillar.

The Well-Architected Review assessment has also been refreshed. Specifically, the “Core Well-Architected Review” option now aligns to the new content structure in the Well-Architected Framework. Every question in every pillar maps to the design review checklist for that pillar. All choices for the questions correlate to the recommendation guides for the related checklist item.

Using the guidance

The Well-Architected Framework is intended to help workload teams throughout the process of designing and running workloads in the cloud.

Here are three key ways in which the guidance can help your team be successful:

- Use the Well-Architected Framework as the basis for your organization’s approach to designing and improving cloud workloads.

- Establish the concept of achieving and maintaining a well-architected state as a best practice for all workload teams.

- Regularly review each workload to find opportunities to optimize further. Use learnings from operations and new technology capabilities to refine elements such as running costs, or attributes aligned to performance, reliability, or security.

To learn more, see the new hub page for the Well-Architected Framework: aka.ms/waf

Dom Allen has also created a great, 6-minute video on the Azure Enablement Series.

by Contributed | Nov 13, 2023 | Technology

Service Delivery Manager Profile: Meiko Lopez

“Be steadfast in the truth – providing comfort and assurance to the customers that you will help them to a resolution.”

-Meiko Lopez, Sr. Product Manager

In the dynamic world of cybersecurity, heroes emerge to safeguard our customers’ digital realm. One such hero is Meiko Lopez, a senior product manager within Microsoft’s service delivery arm for the Defender Experts for XDR service. With almost 13 years of experience at Microsoft, Meiko brings a wealth of knowledge, skills, and a unique approach to the cybersecurity landscape.

Who is Meiko Lopez?

Meiko (pronounced mee-ko) Lopez introduces herself: “My name is Meiko Lopez, and I am a service delivery manager (SDM) for the Microsoft Defender Experts for XDR service.” Her journey at Microsoft has been nothing short of extraordinary.

What did you do before becoming an SDM?

Meiko’s journey at Microsoft is a testament to her dedication and versatility. She started as a technical account manager, now known as a customer success account manager. In this role, she served as a “Cyber Champ,” assisting enterprise customers in crafting and executing their IT strategies and aligning them with Microsoft’s solutions. Her early experiences also included roles as a project manager, where she helped customers recover and rebuild their infrastructure after cybersecurity breaches, enhancing their security posture for the long term.

Meiko’s transition to a cyber architect role further showcased her technical prowess and leadership. Through these diverse roles, she developed a strong network of partnerships and honed both her business acumen and technical skills. These experiences have enabled her to help customers reach new heights in their cybersecurity journey.

What is your typical “day in the life” of an SDM?

Meiko’s day as an SDM is filled with activities aimed at enhancing customer satisfaction and service performance. She conducts operational syncs with her customers, discussing service health and alignment with their business objectives. These discussions also serve as an avenue for collecting feedback and suggestions for operational, technical, and relationship improvements.

In addition to customer interactions, Meiko collaborates with other product managers to share customer feedback and prioritize needs. She engages with her team members on various initiatives that enhance visibility both inside and outside their organization, making the Defender Experts for XDR service even more powerful. Her day wraps up by inputting insights from various stakeholders to track backlog items that ultimately improve the service.

How do you customize your approach for each customer?

Meiko’s success lies in her ability to customize her partnership with each customer. She takes the time to understand their unique business and operational context. She reviews notes from past conversations, connects with internal stakeholders aligned to the customer, and reviews Defender Experts operational insights to identify areas that will demonstrate value during their discussions. Active engagement with the team of analysts also provides a deeper understanding of the customer’s environment and how they can improve technically.

How do you balance technical expertise with the human element?

In the world of cybersecurity, balancing technical expertise with the human element is paramount. Meiko emphasizes the importance of empathy in high-stakes situations. She believes that technical understanding, coupled with the ability to convey information effectively to the appropriate audience, is the key to success. Communication is the linchpin that ensures resolution and instills confidence in customers.

Meiko’s advice to those aspiring to enter the cybersecurity field is simple yet powerful: “Go for it. Gain the foundation and grow from there. There are so many facets of cybersecurity that the possibilities are endless. Network and connect with those in cybersecurity to get a first-hand account of what your future could entail. Find what works best for you.”

What are some of your unique strengths and qualities?

Beyond her technical skills, Meiko’s unique strengths and qualities benefit her customers in cybersecurity. She’s known for her personable nature, which fosters trust and credibility. In the cybersecurity space, trust is everything, and Meiko’s ability to connect with others has proven invaluable. Her calm demeanor in critical situations provides assurance to customers that they have a dedicated and dependable ally in their corner.

Meiko Lopez’s journey is a testament to the diverse opportunities and paths within the cybersecurity field, and her commitment to excellence serves as an inspiration for those entering this dynamic and vital field.
