by Contributed | Dec 5, 2023 | Technology

This article is contributed. See the original author and article here.

We’re excited to spotlight our Microsoft Security Experts Discussion Space—a dedicated community designed for cybersecurity practitioners to connect, share insights, and learn together. As we embark on this journey, we want to provide some tips on how you can kickstart and actively participate in discussions, fostering a vibrant and collaborative community of practice.

Getting Started: Tips for Users

- Explore Community Topics: Engage in discussions on a variety of topics, such as delving into Defender Experts features, crafting advanced hunting queries, or leveraging Graph API for automation. These are just starting points—feel free to suggest and explore your own areas of interest.

- Ask Questions: Don’t hesitate to ask questions, whether you’re a beginner seeking guidance or an expert looking for a fresh perspective. Our community is here to help.

- Share Your Expertise: If you have experience in a particular area, share your insights and tips. Your knowledge can be incredibly valuable to others.

- Engage in Conversations: Participate in ongoing discussions by providing feedback, sharing your experiences, or offering alternative viewpoints. Engaging with others is key to building a thriving community.

Let’s Get the Conversation Going!

To kick things off, we invite you to check out our most recent thread regarding Sentinel Automation based on Defender Experts Notifications (DENs). This community is a space for collaboration, and your input shapes its growth. Remember, a vibrant community is built on active participation. So, let’s ignite conversations, share knowledge, and make this tech community a hub of inspiration and expertise!

by Contributed | Dec 4, 2023 | Technology


In past roles, I spent hundreds of hours with colleagues developing our annual strategic plan. We created committees, subcommittees, and teams to identify priorities and initiatives grounded in the organization’s mission and vision. We would ambitiously set our goals for the year and even identify success metrics. While intentions were good, our strategic outcomes always fell short.

Why? This conventional approach took too long and lacked accountability, and its rigid planning cycle inhibited our ability to adapt to changing organizational needs and shifting priorities. We got stuck in the “set and forget” cycle of setting goals at the beginning of the year and never knowing if our everyday activities at the individual contributor and team levels even rolled up to those institutional goals.

At Microsoft, we recognize that a defined set of corporate goals not only instills a sense of purpose, accountability, and agility but also helps companies mitigate uncertainty.

How do we escape the organizational goal-setting rut?

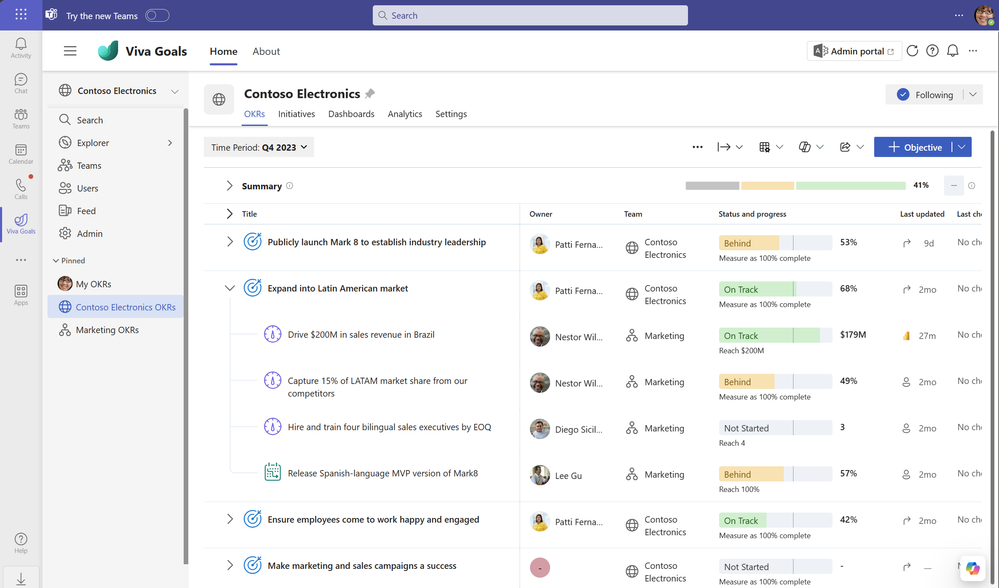

In today’s environment, where organizations are required to operate faster and more efficiently, adaptable goal planning and management processes are essential. Microsoft Viva Goals is a goal management solution that helps ease goal planning and management. Viva Goals helps companies escape the goal-setting rut with capabilities to communicate goals across the organization, measure progress, and realign priorities as needed.

CAI, a global consulting firm, shared how Microsoft Viva Goals helped boost its business agility and enable operational alignment by streamlining its goal planning and management processes. Historically, CAI relied on disparate Excel spreadsheets, making it difficult to align strategies across the company. Viva Goals provided one centrally managed solution that readily supported an adaptable goal setting strategy. With Viva Goals, CAI was able to move away from Excel spreadsheets and a conventional, linear approach.

“We don’t create a traditional business plan now. We use Viva Goals with our customer-facing project teams to manage progress, align strategy, and create accountability to high-level objectives.”

– Richard Tree: Chief Operating Officer, CAI

Today they are successfully setting business goals, objectives and key results (OKRs) and aligning teams to organizational priorities while at the same time delivering consistent and high-quality services to their customers. They are able to stay agile and responsive to their customers’ needs by modernizing their approach to goal setting.

Read more about CAI’s Viva Goals journey to learn how they were able to boost business agility and operational alignment by adopting an OKR framework.

Bridging the gap between goal setting strategy and execution

Another issue companies face in their goal setting journey is a disconnect between strategy and execution. Commonly, corporate goals are set at the leadership level and not communicated to business units and teams within a company. This makes it hard for individuals to know if their work is impacting the company’s bottom line.

To bridge this gap and help break the “set and forget” cycle, Viva Goals enables leaders to send regular updates across organization, team, and even individual views. With better transparency, internal teams are able to align priorities so they can achieve results together.

Microsoft customer O.C. Tanner discussed their journey from goal setting strategy to successful execution with Viva Goals. Regarded as a manufacturing leader and pioneer of the employee recognition industry, they realized that to maintain their leadership position, they needed clear and concise goals communicated across the organization. They chose Viva Goals to help them focus on the most critical priorities.

“Viva Goals helps us keep OKRs established and aligned for the appropriate planning periods and creates visibility across the entire team.”

– Jason Andersen: Vice President of Product, O.C. Tanner

Today, O.C. Tanner incorporates OKRs into all planning and collaborative processes. Viva Goals also plays a key role in their semiannual and quarterly planning processes, which have helped to align everyone on their tasks and ensure their work is laddering up to the big picture. In short, O.C. Tanner successfully bridged the gap between strategy and execution with Viva Goals.

Read more about how O.C. Tanner was able to create “unity in focus” with Viva Goals.

Adopting a continuous goal-management framework

Consistent with my own planning experience described in the introduction, many organizations find goal setting arduous. To better understand these goal setting challenges, Microsoft commissioned a study by Forrester Consulting, the 2023 State Of Goal Setting Report.

One of the findings Forrester reported was that although goal setting provides structure and a common vision, the lack of organization-wide visibility into corporate goals can hinder teams from meeting their goals. Forrester also reported that although company leaders regularly revisit and set goals to align with the rhythm of business, the same was not happening at other levels of the organization. This also leaves employees feeling disconnected from, and unmotivated by, the goal setting process.

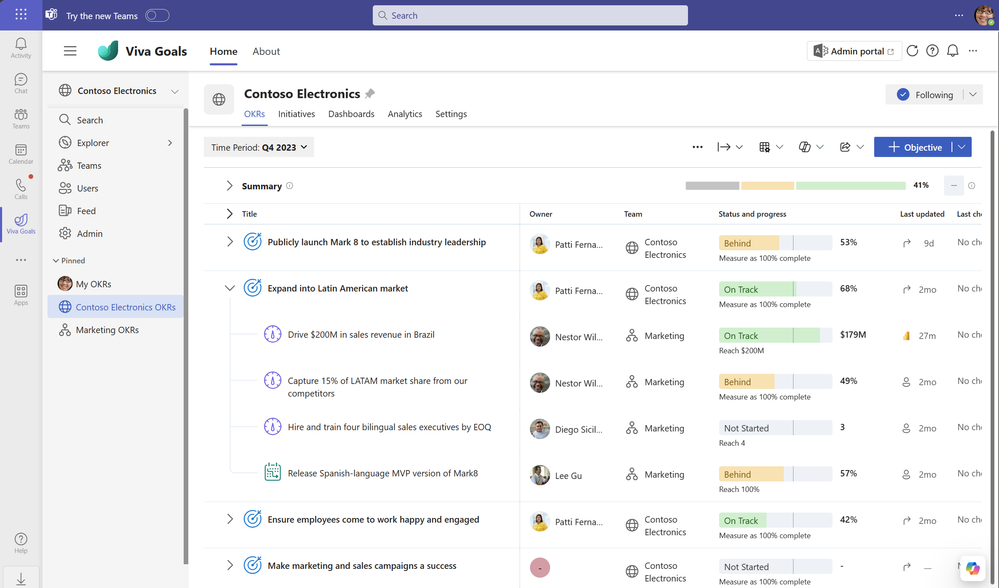

At Microsoft, we believe a continuous goal-setting planning lifecycle is an important component of success. The continuous planning cycle involves planning in shorter cycles, measuring and reporting progress, analyzing performance using scenario-based models, adjusting priorities as needed, and repeating for the next evaluation cycle. This approach allows companies to make necessary adjustments to meet their corporate goals and helps keep everyone focused on the right work.

Viva Goals provides companies with the tools to define agile processes and a framework for planning, measuring, analyzing, and adjusting priorities as needed. With the ability to connect to the project management and data tools employees use regularly, such as Azure DevOps, Microsoft Project, Microsoft Planner, and Power BI, Viva Goals can provide visibility into how progress on daily work is impacting goals.

Using next-generation AI from Copilot, Viva Goals helps organizations streamline the entire goal management process from start to finish. Whether planning cycles are quarterly or monthly, Viva Goals enables companies to readily measure, analyze, and adjust goals on a regular basis. For more information on how Viva Goals supports a continuous planning lifecycle, watch the Viva Goals video.

Aligning OKRs across the organization leads to stronger engagement and motivation

In July 2023 we hosted a Viva Goals webinar, “Where are we going?”: How to chart your Viva Goals journey, where we asked more than 100 participants what their biggest goal setting challenge was. Not surprisingly, alignment was the top answer.

Sample responses from participants in our July 2023 webinar

In our experience it’s not just getting alignment at the onset of the goal setting process, it’s also maintaining alignment across the organization. Microsoft customer, Svea Solar, relies on Viva Goals to help employees connect to the company’s founding purpose from the moment they are hired.

“We use Viva Goals to demystify what’s important for each function and get people up to speed faster.”

– Wilhelm Kugelberg: Strategy & Business Development Manager, Svea Solar

Sharing corporate goals helps new hires understand what they need to do and, more importantly, why their work is important. Svea Solar quickly noticed that aligning employees on OKRs from the moment they are hired led to stronger engagement and motivation to focus on the right things.

Consistent with Svea Solar’s experience, at Microsoft we also recommend a top-down alignment for stronger impact. Just as important is cross-functional alignment within and across groups to help reduce redundancies as well as leverage the power of meaningful collaboration to achieve operational excellence.

Read more about how Viva Goals helped Svea Solar achieve more and drive efficiency by defining distinct goals.

Viva Goals empowers teams

Effective goal setting not only leads to stronger alignment but also empowers teams to work together for greater impact. Goal setting requires commitment and top-down alignment, as well as communication and transparency so employees at all levels understand their contributions and impact. Viva Goals provides companies with the capabilities to be successful in their goal setting journey.

For successful adoption across your organization, here are some best practices we have learned in our own journey:

- Get executive buy-in to the process and commitment to provide clarity on vision and overall objectives.

- Identify OKR champions to scale the practice and coach individual teams to a consistent quality standard. At Microsoft, our OKR champions helped ensure a reasonable number of OKRs were selected for each team, which encouraged teams to focus on a few items for stronger impact.

- Develop and manage organizational OKRs as an outcome of continuous strategic planning cycles and not as a separate, distinct activity. At Microsoft, our OKR champions meet monthly to share best practices and ensure teams are creating clear OKRs on high-level objectives.

- Adjust OKRs and priorities on a regular basis with dynamic goal-management practices.

- Provide visibility at all levels so the leadership team can see progress towards objectives and individual contributors can see how their work contributes to those objectives.

Technology plays a key role in easing your goal setting journey. Viva Goals provides visibility into organization objectives, enables transparency for both leaders and individual contributors, offers a dashboard to readily measure progress, and integrates with Microsoft ecosystem tools such as Teams, Outlook, Power BI, Excel, and PowerPoint for easier communication and reporting.

If you have questions about Viva Goals, leave us a comment below. We are happy to answer them.

For more information on Viva Goals, check out these resources

by Contributed | Dec 2, 2023 | Technology


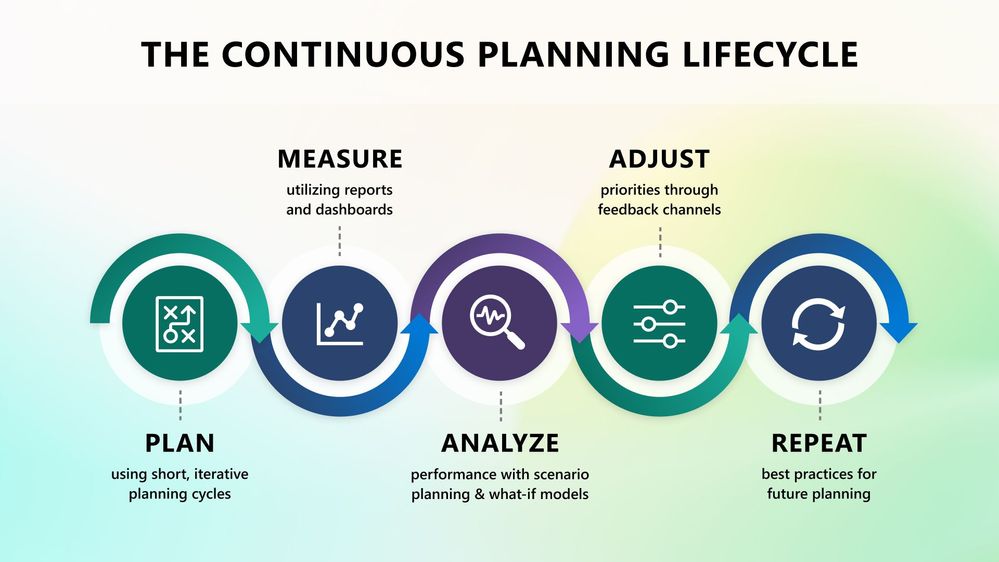

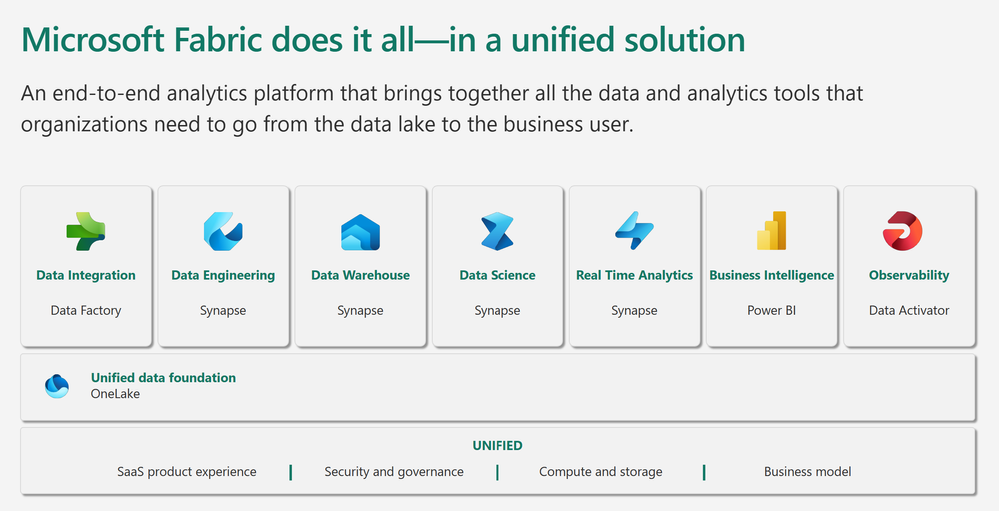

Microsoft Fabric is an all-in-one analytics solution for enterprises that covers everything from data movement to data science, Real-Time Analytics, and business intelligence. It offers a comprehensive suite of services, including data lake, data engineering, and data integration, all in one place. This makes it an ideal platform for technical students and entrepreneurial developers looking to streamline their data engineering and analytics workflows.

High-Level Overview of Microsoft Fabric

Microsoft Fabric brings together new and existing components from Power BI, Azure Synapse, and Azure Data Factory into a single integrated environment. These components are then presented in various customized user experiences. Fabric brings together experiences such as Data Engineering, Data Factory, Data Science, Data Warehouse, Real-Time Analytics, and Power BI onto a shared SaaS foundation.

This integration provides several advantages:

- An extensive range of deeply integrated analytics in the industry.

- Shared experiences across experiences that are familiar and easy to learn.

- Developers can easily access and reuse all assets.

- A unified data lake that allows you to retain the data where it is while using your preferred analytics tools.

- Centralized administration and governance across all experiences.

Benefits of Learning and Using Microsoft Fabric

Learning and using Microsoft Fabric can provide numerous benefits. Here are a few key ones:

Simplicity: With Fabric, you don’t need to piece together different services from multiple vendors. Instead, you can enjoy a highly integrated, end-to-end, and easy-to-use product that is designed to simplify your analytics needs.

Efficiency: Fabric allows creators to concentrate on producing their best work, freeing them from the need to integrate, manage, or understand the underlying infrastructure that supports the experience.

Scalability: Because Fabric runs on a shared SaaS foundation, you can scale analytics workloads without provisioning or managing the underlying infrastructure yourself, making it a strong choice for businesses aiming to stay agile and competitive in today’s digital landscape.

Microsoft Learn Resources for Microsoft Fabric

Microsoft Learn offers a variety of resources to help you get started with Microsoft Fabric. Here are a few key ones:

- Get started with Microsoft Fabric – Training: This learning path includes 11 modules that cover everything from an introduction to end-to-end analytics using Microsoft Fabric to administering Microsoft Fabric.

- Microsoft Fabric documentation: This comprehensive documentation provides an overview of Microsoft Fabric, its capabilities, and how to use it.

- Learn Live: Get started with Microsoft Fabric: Online webinars and an on-demand series showcasing Microsoft Fabric.

- Get started documentation: This page provides a variety of documentation to help you get started with Microsoft Fabric.

- Exam DP-600: Implementing Analytics Solutions Using Microsoft Fabric (beta): This exam will be available in January 2024. It measures your ability to accomplish the following technical tasks: plan, implement, and manage a solution for data analytics; prepare and serve data; implement and manage semantic models; and explore and analyze data.

In conclusion, Microsoft Fabric is a game-changing platform that brings together a variety of Azure tools and services under one unified umbrella. Its core features empower businesses and data professionals to make smarter, data-driven decisions.

So, whether you’re a technical student looking to expand your skillset or an entrepreneurial developer aiming to streamline your data workflows, Microsoft Fabric is definitely worth considering.

by Contributed | Dec 2, 2023 | Technology


Azure SQL Database users employing Data Sync may encounter the following error: “Database provisioning failed with the exception ‘Column is of a type that is invalid for use as a key column in an index.’” This article dissects this error, providing insights and practical solutions for database administrators and developers.

Understanding the Error:

This error signifies a mismatch between the data type of a column used in an index and what is permissible within Azure SQL Data Sync’s framework. Such mismatches can disrupt database provisioning, a critical step in synchronization processes.

Data Types and Index Restrictions in DataSync:

Azure SQL Data Sync imposes specific limitations on data types and index properties. Notably, it does not support indexes on nvarchar(max) columns, which is what our customer had. Additionally, primary keys cannot be of types such as sql_variant, binary, varbinary, image, or xml. For details, see What is SQL Data Sync for Azure? – Azure SQL Database | Microsoft Learn.

Practical Solutions:

- Modify Data Types: If feasible, alter the data type from nvarchar(max) to a smaller variant.

- Index Adjustments: Review your database schema and modify or remove indexes that include unsupported column types.

- Exclude Problematic Columns: Consider omitting columns with unsupported data types from your DataSync synchronization groups.
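Before applying any of the solutions above, it can help to list which columns are likely to trigger the error. The following is a minimal sketch in Python, not an official Data Sync tool. It assumes you have already exported your schema into a simple list of dictionaries. The keys `name`, `type`, `indexed`, and `primary_key`, the function names, and the sample schema are all hypothetical and chosen only for illustration. The rules it checks are limited to the two restrictions described in this article.

```python
import re

# Primary-key types this article lists as unsupported by Data Sync.
UNSUPPORTED_PK_TYPES = {"sql_variant", "binary", "varbinary", "image", "xml"}


def base_type(sql_type: str) -> str:
    """Strip any length suffix and lowercase, e.g. 'VARBINARY(16)' -> 'varbinary'."""
    return re.sub(r"\(.*\)", "", sql_type).strip().lower()


def is_nvarchar_max(sql_type: str) -> bool:
    """True only for nvarchar(max), ignoring case and whitespace."""
    return re.sub(r"\s+", "", sql_type).lower() == "nvarchar(max)"


def find_problem_columns(columns):
    """Return (column name, reason) pairs for columns that break the rules above.

    `columns` is a list of dicts with the hypothetical keys
    name, type, indexed, and primary_key.
    """
    problems = []
    for col in columns:
        if col.get("indexed") and is_nvarchar_max(col["type"]):
            problems.append((col["name"], "nvarchar(max) column used in an index"))
        if col.get("primary_key") and base_type(col["type"]) in UNSUPPORTED_PK_TYPES:
            problems.append((col["name"], "type not allowed in a primary key"))
    return problems


# Hypothetical schema used only to demonstrate the check.
schema = [
    {"name": "Id", "type": "int", "indexed": True, "primary_key": True},
    {"name": "Notes", "type": "nvarchar(max)", "indexed": True, "primary_key": False},
    {"name": "Payload", "type": "xml", "indexed": False, "primary_key": True},
]

for name, reason in find_problem_columns(schema):
    print(f"{name}: {reason}")
```

Each flagged column is a candidate for one of the three fixes: change its type, drop or rework the index, or leave the column out of the sync group.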

by Contributed | Dec 1, 2023 | Technology


We have published a new page on Azure to highlight Windows Container customer stories on AKS, featuring M365 (supporting products like Office and Teams), Forza (Xbox Game Studios), Relativity, and Duck Creek.

If you are looking for a way to modernize your Windows applications, streamline your development process, and scale your business with Azure, you might be interested in learning how other customers have achieved these goals by using Windows Containers on Azure Kubernetes Service (AKS).

AKS is a fully managed Kubernetes service, and with Windows Containers on AKS you can run your Windows applications alongside Linux applications in the same cluster, with seamless integration and minimal code modifications. Windows Containers on AKS offers a number of benefits, such as:

- Reduced infrastructure and operational costs

- Improved performance and reliability

- Faster and more frequent deployments

- Enhanced security and compliance

- Simplified management and orchestration
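To make the mixed Windows and Linux cluster described above more concrete, here is a minimal sketch of how a workload is typically steered onto Windows nodes. It builds a Kubernetes Deployment manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` also accepts. The function name, the deployment name `sample-iis`, and the image reference are illustrative assumptions, not taken from the customer stories. The `kubernetes.io/os` node label is the standard Kubernetes label for the node operating system.

```python
import json


def windows_deployment(name: str, image: str, replicas: int = 1) -> dict:
    """Build a Deployment manifest whose pods are scheduled on Windows nodes only."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Standard node label; keeps these pods off Linux nodes.
                    "nodeSelector": {"kubernetes.io/os": "windows"},
                    "containers": [{"name": name, "image": image}],
                },
            },
        },
    }


# Placeholder name and image; substitute your own application image and tag.
manifest = windows_deployment("sample-iis", "mcr.microsoft.com/windows/servercore/iis")
print(json.dumps(manifest, indent=2))
```

Linux workloads in the same cluster would use the same pattern with the label value `linux`, which is how both kinds of application can share one cluster without landing on the wrong nodes.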

Stay tuned for new stories that will be published soon, featuring customers from new industries and with new scenarios using Windows Containers.

In the meantime, we invite you to check out the Windows Container GitHub repository, where you can find useful resources, documentation, samples, and tools to help you get started. You can also share your feedback, questions, and suggestions with the Windows Container product team and the community of users and experts.
