Logic Apps Aviators Newsletter – February 2024


We are thrilled to announce that this newsletter edition officially marks one full year of Logic Apps’ Ace Aviators! From our initial newsletter and livestream in February of last year, it’s been an incredible journey witnessing this community take flight. Of course, none of this smooth flying would have been possible without YOU! So, to all our aviators, thank you for reading, watching, and participating this past year. Make sure to keep sharing and posting to #LogicAppsAviators so we can continue to navigate the skies of innovation together.

In this issue:

Ace Aviator of the Month

February’s Ace Aviator: Maheshkumar Tiwari

What is your role and title? What are your responsibilities associated with your position?

Although my title is Solution Architect, my role is that of Practice/Competency Lead: I lead the Microsoft Integration practice within Birlasoft Limited and am responsible for growing our expertise. My role is multifaceted, encompassing strategic leadership, team development, client engagement, and operational excellence.

Below is a breakdown of my responsibilities:

Strategic Leadership:

- Defining the vision and direction for the practice, aligning it with the overall organizational strategy.

- Identifying and pursuing growth opportunities, including new markets, services, and partnerships.

- Staying abreast of industry trends and innovations to ensure our practice remains competitive (I’m not at my best now, but I’m taking steps to improve).

Team Development:

- Building and nurturing a high-performing team of experts in our practice area.

- Providing mentorship and coaching to help team members develop their skills and expertise.

- Fostering a collaborative and innovative work environment.

Client Engagement:

- Building strong relationships with clients and understanding their needs and challenges.

- Developing and delivering high-quality solutions that meet client requirements.

- Managing client expectations and ensuring their satisfaction.

Operational Excellence:

- Establishing and maintaining efficient processes and workflows within the practice.

- Managing budgets and resources effectively.

- Measuring and monitoring key performance indicators (KPIs) and driving continuous improvement.

Overall, my goal is to lead the Microsoft Integration Practice to success by delivering exceptional value to our clients, developing our team, and contributing to the growth of the organization.

Can you provide some insights into your day-to-day activities and what a typical day in your role looks like?

Typical days begin with a coffee-fueled review of emails, calendar, and upcoming meetings, deadlines, and calls. A substantial portion of the day is then dedicated to collaborative meetings with project teams and clients, focusing on progress updates, challenge resolution, and recommendation presentations. Drafting proposals for new RFPs/RFIs or executing ongoing project plans occupies another significant segment of the workday. As the practice lead, I am also prepared to address any ad-hoc requests or situations that may arise within the practice.

The positive response to our proposals, built on strong customer focus and industry best practices, has ignited growth in the Birlasoft Integration Practice. To capitalize on this momentum, I’m busy interviewing and assembling a team of exceptional individuals. It’s an honor to be part of this thriving practice (and I can’t wait to see what we achieve together)!

So, my day involves development work, POCs and RFP/RFI responses, solution design, interviews, handling escalations, mentoring the team, and resource and project planning.

What motivates and inspires you to be an active member of the Aviators/Microsoft community?

I am a very strong believer in The Value of Giving Back and by nature I like helping people (as much as I can).

What Inspired: When I started learning BizTalk, I took a lot of help from community-written articles and Microsoft documentation. To be very honest, although the Microsoft documentation is very apt, the articles written by community members were easier to understand, offered a different perspective, and explained things in simpler ways.

And that’s how I started, with the intention of helping people like me by sharing whatever limited knowledge I have in a simplified manner (at least I try to) through various means, such as answering on forums and writing articles. I maintain a blog, Techfindings…by Maheshkumar Tiwari, through which I have shared my findings and learnings for over a decade. I also answer individuals’ questions on LinkedIn and Facebook, and sometimes in groups.

What Motivates: When you receive a mail, message, or thank-you note from someone you don’t know, saying that the content really helped them solve a problem, crack an interview, or clear up their understanding, it warms my heart more than any award. It’s the fuel that keeps me creating, knowing I’m truly touching lives.

Looking back, what advice do you wish you would have been told earlier on that you would give to individuals looking to become involved in STEM/technology?

While theoretical knowledge is important, prioritize developing practical skills like coding, data analysis, project management, and problem-solving. Don’t wait for the “perfect” moment or project to begin. Try mini-projects, tinker with code, and participate in online challenges. While doing this, embrace failures as learning opportunities and stepping stones to improvement.

No one knows everything, and reaching out for help is a sign of strength, not weakness. Seek guidance from seniors, peers, online communities, or any available resources.

Focus on the joy of learning, exploring, and problem-solving, not just achieving a specific degree or job title. Curiosity and a love for understanding how things work will fuel your passion and resilience through challenges.

What are some of the most important lessons you’ve learned throughout your career?

The only thing which is constant is Change – the sooner we accept it and develop/have a mindset to adapt, the better it is.

Survival of the fittest is applicable to every phase of personal/professional life. You really can’t blame others.

Maintaining a healthy balance between work and personal life (unfortunately I am failing in this), practicing self-care, and managing stress are crucial for long-term success.

Building a successful career takes time, dedication, and perseverance. Set realistic goals, celebrate milestones, and don’t get discouraged by setbacks.

Enjoy the process, keep learning, and adapt to the ever-changing field.

Imagine you had a magic wand that could create a feature in Logic Apps. What would this feature be and why?

Without a second thought, here is what I would create: a wizard that asks me questions about my workflow requirements and, once the questionnaire ends, presents the complete workflow.

Well, that’s from the magic wand perspective 😊, but it is very much doable.

For now, though, here are the things that could be added (a few points come from my team; I want to share as many ideas as possible to make Logic Apps more robust):

- Logic Apps should allow disabling actions from the designer. This would help developers unit test workflows efficiently. We can achieve this today by commenting out JSON in code view or by creating a backup workflow, but that is tedious.

- Versioning is missing in Azure Logic Apps Standard.

- A breakpoint option should be available to help with debugging.

- Retry from the failed step should be extended to loops and parallel branches as well.

- Out-of-the-box support for Liquid map debugging is needed; IntelliSense support would also be good to have.

- For now, only JSON schema is supported in the HTTP trigger; it would help if XML support were added.

- CRON expression support in the Logic Apps recurrence trigger.

- Reference documentation on which WS plan to choose based on the number of workflows, volume of messages processed, etc. (this would help justify the cost to clients).

- Exact error capture for actions within a loop, actions within a scope nested inside another scope, etc.

- Support for partial deployment of workflows in a single logic app (adding only the new workflows without overwriting all of them).

Customer Corner:

Datex debuts flexible supply chain software based on the Azure Stack and Azure Integration Services

Check out this customer success story about Datex leveraging Microsoft Azure Integration Services to transform its infrastructure into a more modern solution. Azure Integration Services played a crucial role in enabling flexible integrations, data visualization through Power BI, and efficient electronic data interchange (EDI) processes using Azure Logic Apps. Read more in this article about how AIS helped provide Datex with a low-code environment, faster time-to-market, cost savings, and enhanced capabilities.

News from our product group:

|

| Announcement – Target-Based Scaling Support in Azure Logic Apps Standard Read this exciting announcement about an update refining the underlying dynamic scaling mechanism, resulting in faster scale-out and scale-in times. |

| Logic Apps Standard Target-Based Scaling Performance Benchmark — Burst Workloads Take a deeper dive into the new target-based scaling for Azure Logic Apps Standard update and how it can help you manage your application’s performance with asynchronous burst loads. |

| Logic Apps Mission Critical Series: “We Speak: IBM i: COBOL and RPG Applications” Read more on how Azure Logic Apps can unlock scenarios where it’s required to integrate with IBM i applications in another Mission Critical Series episode. |

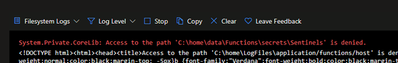

| Key Vault policies affecting your Logic App Standard functioning This article shows how Key Vault Policies may affect the functioning of a Logic App Standard, the troubleshooting steps, and how to fix it. |

Session support for Service Bus built-in connector (Logic Apps Standard) Need some help with using Azure Service Bus Sessions in Logic Apps Standard? Check out this article. | |

| Concurrency support for Service Bus built-in connector in Logic Apps Standard Learn more about how you can use concurrency control with Service Bus built-in connector in Logic Apps Standard. |

News from our community:

Azure Function | Application settings | User Secrets | Azure Key Vault | Options Pattern

Post by Sri Gunnala

Struggling to manage your application settings during development and testing, especially when switching between local and cloud environments? Watch Sri’s video to learn how to efficiently manage your app settings.

BizTalk Server to Azure Integration Services: Send zipped messages (or files)

Post by Sandro Pereira

Need to know how to send zipped messages (or files)? Read Sandro’s post about a solution for this in BizTalk and Azure Integration Services.

Friday Fact: Trigger Conditions Can Help You Optimize Workflows and Conserve Resources

Post by Luis Rigueira

Learn more in this post by Luis about improving your Logic App design with the ability to set trigger conditions.

Introduction to Azure Logic Apps

Post by Stephen W Thomas

If you’re new to Logic Apps, then Stephen’s newest video is perfect for you to get started.

Azure Integration Services – Faster Integration, Better Results to realize your AI Strategy

Post by Horton Cloud Solutions

Read more from Horton Cloud Solutions about how AIS not only improves developer productivity but is also key in executing an effective AI strategy.

Upgrade an Azure function from .NET 6 to .NET 8

Post by Mark Brimble

Need help upgrading an Azure function from .NET 6 to .NET 8? Mark has your back in this post.
