by Contributed | Mar 13, 2024 | Technology

This article is contributed. See the original author and article here.

The Microsoft Defender Threat Intelligence (MDTI) team is excited to announce that we are revealing previews for each of our 350+ intel profiles to all Defender XDR customers for the first time. This represents Microsoft’s broadest expansion of threat intelligence content to non-MDTI premium customers yet, adding nearly 340 intel profiles to Defender XDR customers’ view, including over 200 tracked threat actors, tools, and vulnerabilities that Microsoft has not named anywhere else.

We are also revealing the full content for an additional 31 profiles, building on our initial set of 17 profiles released to standard (free) users at Microsoft Ignite. Defender XDR customers now can access 27 full threat actor profiles, including new profiles like Periwinkle Tempest and Purple Typhoon; 13 full tool profiles, such as Qakbot and Cobalt Strike; and eight full vulnerability profiles, including CVE-2021-40444 and CVE-2023-45319.

Note: Profiles in the standard edition will not contain indicators of compromise (IOCs), which are reserved for MDTI premium customers.

Intel Profiles standard edition experience

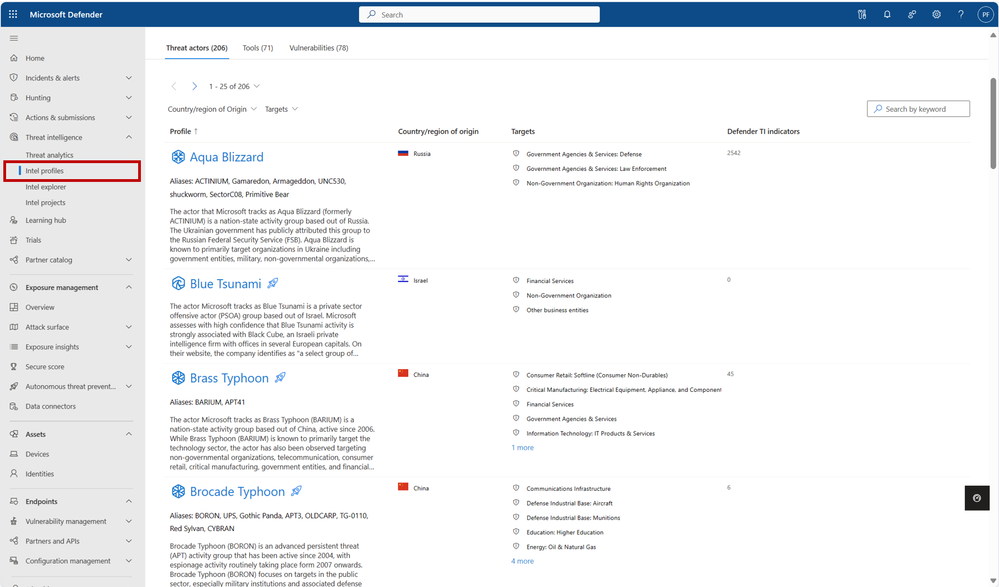

You can visit our more than 350 intel profiles on the “Intel profiles” tab under the “Threat intelligence” blade in the left navigation menu:

350+ intel profiles are now available to all Defender XDR customers via the “Intel profiles” tab under the threat intelligence blade, including information on over 200 threat actors, tools, and vulnerabilities that Microsoft has not mentioned publicly to date.


Currently, our corpus of shareable finished threat intelligence contains 205+ named threat actors, 70+ malicious tools, and 75+ vulnerabilities, with more to be released on a continual basis. To view our full catalog for each of the three profile types – Threat Actors, Tools, and Vulnerabilities – click their respective tab near the top of the page.

In the intel profiles page list view, profiles containing limited information are marked with an icon. However, don’t let this symbol stop you – each of these profiles contains the same detailed summary (“Snapshot”) written at the start of the content for premium customers. For threat actor profiles, this section often includes a valuable description of the actor’s origins, activities, techniques, and motivations. On tool and vulnerability profiles, these summaries describe the malicious tool or exploit and illustrate its significance, with details from real-world activity by threat actor groups when available. This information enables leaders of threat intelligence and security programs to take an intel-led approach, starting with the threat actors, tools, and vulnerabilities that matter most to their organization and building a robust strategy outward.


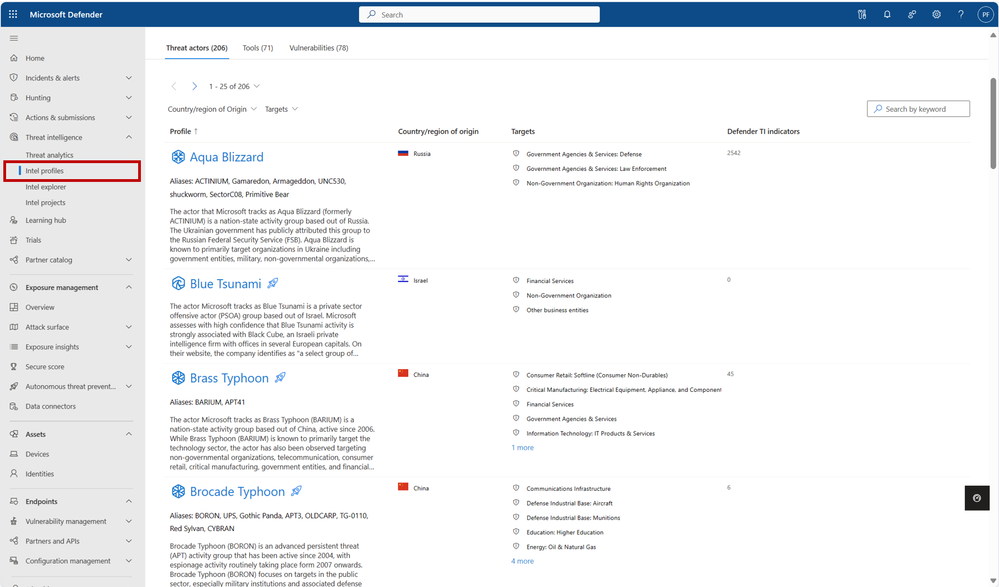

Our intel profiles containing full content can be distinguished from the limited profiles in the list view as they do not contain the icon. Full profiles can contain much additional detail beyond a Snapshot, including:

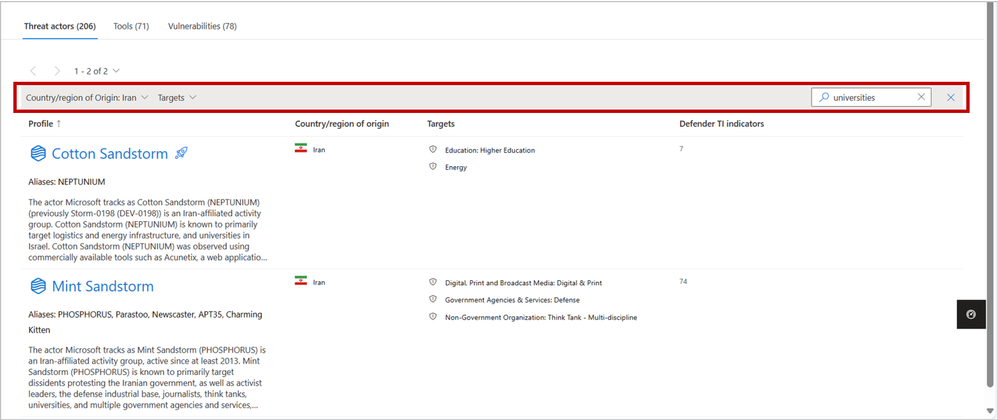

- Real details from past threat actor activity, tool usage, and vulnerability exploits, including phishing templates, malicious attachments, code excerpts and more from actual threat investigations

- Detailed TTPs (tactics, techniques, and procedures) and attack path analyses, based on both past and potential future exploitation attempts, and their corresponding MITRE ATT&CK (Adversarial Tactics, Techniques, and Common Knowledge) techniques

- Detections and Hunting Queries, which list alerts and detections that may indicate the presence of the above threats

- Advanced Hunting queries to identify adversary presence within a customer’s network

- Microsoft Analytic Rules, which result in alerts and incidents to signal detections associated with adversarial activity

- Recommendations to protect your organization against the threat

- And References for more information.

Full intel profiles contain extensive information on threat actors, tools, and vulnerabilities by leveraging details from actual threat investigations.


To see the full content and IOCs for all intel profiles, start a free trial or upgrade to premium.

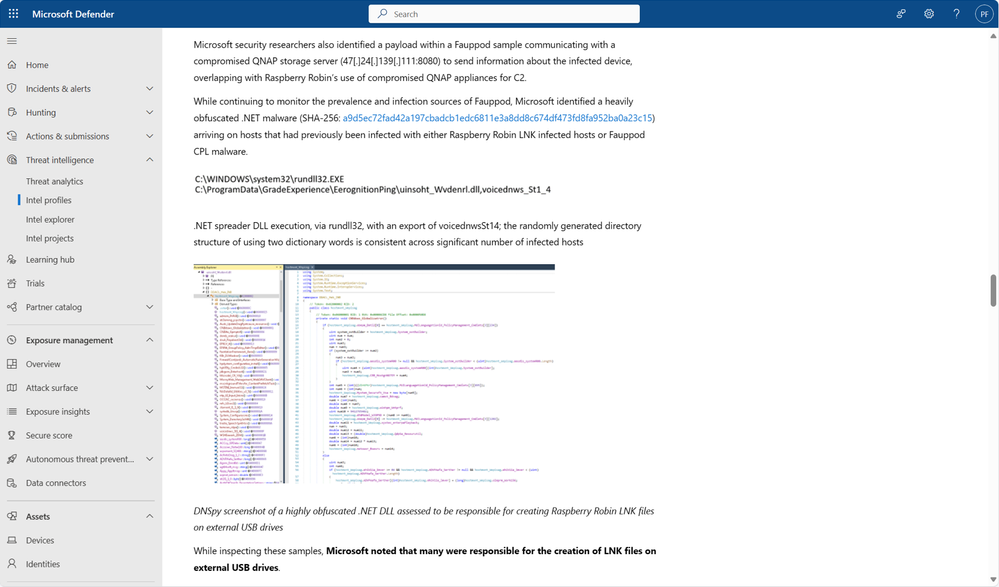

Discovering relevant profiles

On the intel profiles page, each of the tabs for the three profile types contains a local search box, enabling you to quickly discover profiles of interest by matching keywords. Additionally, the Threat actors tab enables you to filter for the Country/Region of Origin and Targets (representing Targeted Industries) of actor groups, helping to narrow the list down to the profiles that are most important to your organization:

Use the filter and search functions to narrow profile lists down to the content that is most relevant to your organization.


With the inclusion of MDTI results in Defender XDR’s global search bar, you also may use this top-level search to discover intel profiles from anywhere in the portal based on keywords. Refer to the linked blog for inspiration on what you can search for and what other MDTI results you can expect.

About intel profiles

Intel profiles are Microsoft’s definitive source of shareable knowledge on tracked threat actors, malicious tools, and vulnerabilities. Written and continuously updated by our dedicated security researchers and threat intelligence experts, intel profiles contain detailed analysis of the biggest threats facing organizations, along with recommendations on how to protect against these threats and IOCs to hunt for these threats within your environment.

As the defender of four of the world’s largest public clouds, Microsoft has unique visibility into the global threat landscape, including the tools, techniques, and vulnerabilities that threat actors are actively using and exploiting to inflict harm. Our team of more than 10,000 dedicated security researchers and engineers is responsible for making sense of more than 65 trillion security signals per day to protect our customers. We then build our findings into highly digestible intel profiles, so high-quality threat intelligence is available where you need it, when you need it, and how you need it.

Just one year after launching intel profiles at Microsoft Secure, Microsoft’s repository of shareable threat intelligence knowledge has expanded to over 205 named threat actors, 70 tools, and 75 vulnerabilities, with more added every month.

Next steps

Learn more about what you can do with the standard edition of MDTI in Defender XDR.

We want to hear from you!

Learn more about what else is rolling out at Microsoft Secure 2024, and be sure to join our fast-growing community of security pros and experts to provide product feedback and suggestions and start conversations about how MDTI is helping your team stay on top of threats. With an open dialogue, we can create a safer internet together. Learn more about MDTI and learn how to access the MDTI standard version at no cost.

by Contributed | Mar 12, 2024 | Technology

This article is contributed. See the original author and article here.

In this month’s Empowering.Cloud community update, we cover the latest briefings from MVPs, the Microsoft Teams Monthly Update, updates in the Operator Connect world and upcoming industry events. There’s lots to look forward to!

Troubleshooting your Meeting Room Experience

https://app.empowering.cloud/briefings/329/Troubleshooting-your-Meeting-Room-Experience

Jason Wynn, MVP and Presales Specialist at Carillion, shows us how to get the most out of our meeting room experience and troubleshoot some common issues, and explains how and why we’re trying to get all this information together.

- Use of Teams Admin Center, Microsoft Pro Portal and Power BI reports

- Configuration and live information from Microsoft

- Monitoring hardware health and connectivity

- Live environment analysis and troubleshooting

- Network performance and jitter rates

- Call performance analysis and optimisation

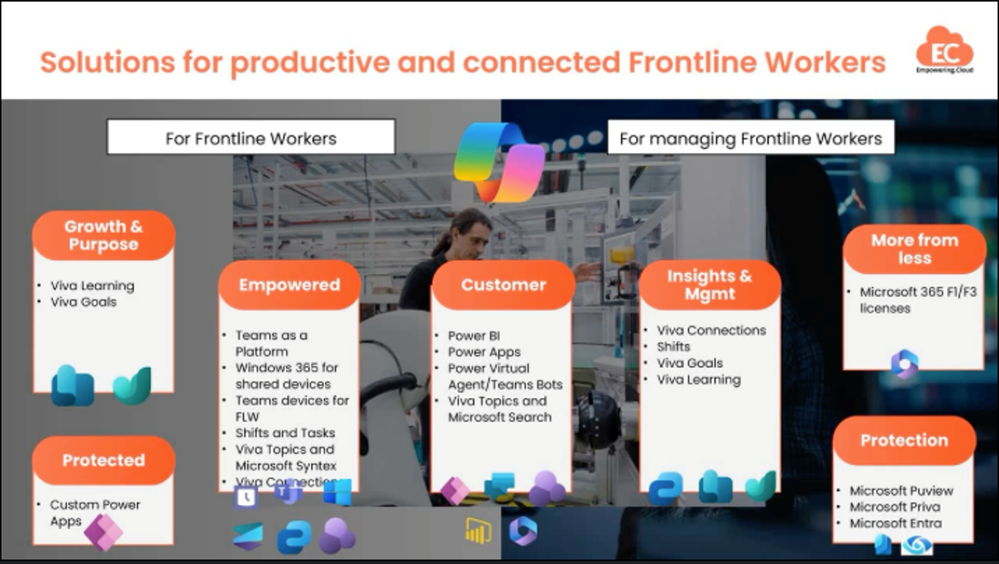

Introduction to Frontline Workers in M365

https://app.empowering.cloud/briefings/327/Take-Charge-of-The-Microsoft-Teams-Phone-Experience

MVP Kevin McDonnell introduces us to the topic of M365 for Frontline Workers, including the challenges faced by frontline workers and how Microsoft 365 can help provide a solution to some of these.

- Challenges faced by Frontline Workers

- Challenges faced by managers and organizers

- Solutions in Microsoft 365 for Frontline Workers include:

- M365 can help boost productivity, improve employee experience and provide personalized information and support for Frontline Workers

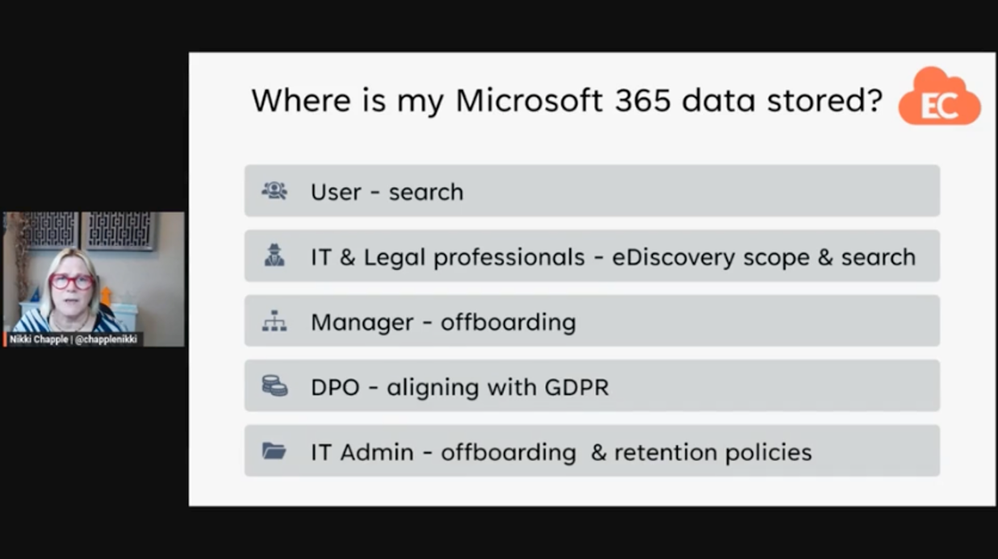

Where is My Microsoft 365 Data Stored?

https://app.empowering.cloud/briefings/350/Where-is-my-Microsoft365-Data-Stored

In another one of the latest community briefings, MVP Nikki Chapple tells us all about where our M365 data is stored.

- The importance of knowing where your Microsoft 365 data is stored

- Microsoft 365 data is stored in various locations including user mailboxes, group mailboxes, OneDrive, SharePoint sites and external locations

- The location of data storage may vary depending on user location and compliance requirements

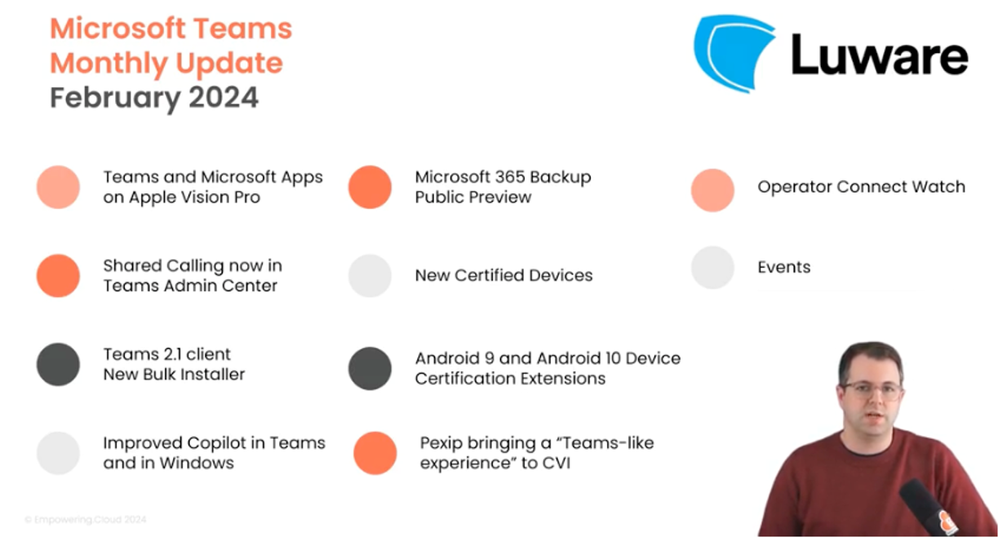

Microsoft Teams Monthly Update – February 2024

https://app.empowering.cloud/briefings/349/Microsoft-Teams-Monthly-Update-February-2024

In this month’s Microsoft Teams monthly update, MVP Tom Arbuthnot gives us the rundown on all the latest Microsoft Teams news, including new certified devices and Shared Calling in TAC.

- Teams and Microsoft Apps on Apple Vision Pro

- Shared Calling now in Teams Admin Center

- Teams 2.1 client cutover coming soon

- Improved Copilot in Teams and in Windows for prompting, chat history and a prompt library

- Microsoft 365 Backup Public Preview with fast restorability and native data format

- Android 9 and Android 10 Device Certificate Extensions

- Pexip bringing a ‘Teams-like experience’ to Cloud Video Interop (CVI)

Microsoft Teams Insider Podcast

Complex Voice Strategies for Global Organizations with Zach Bennett

https://www.teamsinsider.show/2111467/14508325

Zach Bennett, Principal Architect at LoopUp, came along to the Teams Insider Show to discuss Teams Phone options for complex and global organizations.

The Role of AI in Contact Centers and Regulatory Considerations with Philipp Beck

https://www.teamsinsider.show/2111467/14537425-the-role-of-ai-in-contact-centers-and-regulatory-considerations-with-philipp-beck

Philipp Beck, Former CEO and Founder of Luware, and MVP Tom Arbuthnot delve into key developments in the world of Microsoft Teams and Contact Center.

Microsoft Teams Operator Connect Updates

The numbers continue to rise in the Microsoft Teams Operator Connect world, with 89 operators and 86 countries now covered. Will we reach 100 providers or countries first?!

Check out our full Power BI report of all the Operators here:

https://app.empowering.cloud/research/microsoft-teams-operator-connect-providers-comparison

Upcoming Community Events

Teams Fireside Chat – 14th March, 16:00 GMT | Virtual

Hosts: MVP Tom Arbuthnot

Guest Speaker: MVPs and Microsoft speakers LIVE from MVP summit

This month’s Teams Fireside Chat is a special one as Tom Arbuthnot will be hosting live from the MVP Summit at the Microsoft campus in Redmond, where he’ll be joined by other MVPs for an expertise-filled session.

Registration Link: https://events.empowering.cloud/event-details?recordId=recJNyAGoTadbcfMN

Microsoft Teams Devices Ask Me Anything – 18/19th March | Virtual

Microsoft Teams Devices Ask Me Anything is a monthly community event which gives you an update on the important Microsoft Teams devices news, as well as the chance to ask questions and get them answered by the experts. We have 2 sessions to cover different time zones, so there’s really no excuse not to come along to at least one!

EMEA/NA – 18th March, 16:00 GMT | Virtual

Hosts: MVP Graham Walsh, Michael Tressler, Jimmy Vaughan

Registration Link: https://events.empowering.cloud/event-details?recordId=recnbltzoOt2pQ2wF

APAC – 19th March, 17:30 AEST | Virtual

Hosts: MVP Graham Walsh, Phil Clapham, Andrew Higgs, Justin O’Meara

Registration Link: https://events.empowering.cloud/event-details?recordId=recsMBe3O6J10xSC2

Everything You Need to Know as a Microsoft Teams Service Owner at Enterprise Connect – 25th March | In-Person

Training Session: led by MVP Tom Arbuthnot

Whether you’re in the network team, telecoms team or part of the Microsoft 365 team, MVP Tom Arbuthnot’s training session will help you avoid common pitfalls and boost your success as he takes you through everything you need to know as a Microsoft Teams Service Owner.

Registration Link: https://enterpriseconnect.com/training

Teams Fireside Chat – 11th April, 16:00 GMT | Virtual

Hosts: MVP Tom Arbuthnot

Guest Speaker: Vandana Thomas, Product Leader, Microsoft Teams Phone Mobile

Join other community members as we chat with Microsoft’s Product Leader for Teams Phone Mobile, Vandana Thomas, on April’s Teams Fireside Chat. As usual, we’ll open up the floor to discussion, so bring along your burning Microsoft Teams questions to get them answered by the experts.

Registration Link: https://events.empowering.cloud/event-details?recordId=recLQiD9PjZbQahog

Comms vNext – 23-24 April | In-Person | Denver, CO

Comms vNext is the only conference in North America dedicated to Microsoft Communications and Collaboration Technologies and aims to bring the community together for an event full of deep technical sessions from experts, an exhibition hall with 40 exhibitors and some great catering too!

Registration Link: https://www.commsvnext.com/

That’s all for this month, so look out for the next update in April with all the news from Enterprise Connect!

by Contributed | Mar 11, 2024 | Technology

This article is contributed. See the original author and article here.

Afua Bruce is a leading public interest technologist, professor, founder and CEO of ANB Advisory, and author of The Tech that Comes Next. Her work spans AI ethics, equitable technology, inclusive data strategies, and STEM opportunities. We were thrilled to welcome her insights and experiences to the keynote stage at the Global Nonprofit Leaders Summit.

In her keynote presentation, Afua explores:

- How nonprofit leaders can use AI to invent a better future, along with three pillars of leadership: leading people, leading processes, and leading technology.

- Examples of how nonprofits have used AI to improve their hiring, communication, and decision making, and how they have created guidelines and policies for using AI responsibly and ethically.

- Tips on how to get started with AI—such as identifying pain points, exploring available tools, and learning with nonprofit and tech communities.

Afua explored the theme of community-guided innovation in her earlier blog, How nonprofits can manage and lead transformation together, emphasizing that collaboration and connection are critical to how nonprofits meet the challenges and speed of AI transformation.

by Contributed | Mar 10, 2024 | Technology

This article is contributed. See the original author and article here.

Introduction:

JavaScript, the powerhouse behind many applications, sometimes faces limitations when it comes to memory in a Node.js environment. Today, let’s dive into a common challenge faced by many applications – the default memory limit in Node.js. In this blog post, we’ll explore how to break free from these limitations, boost your application’s performance, and optimize memory usage.

Understanding the Challenge:

Node.js applications are confined by a default memory limit set by the runtime environment. This can be a bottleneck for memory-intensive applications. Fortunately, Node.js provides a solution by allowing developers to increase this fixed memory limit, paving the way for improved performance.

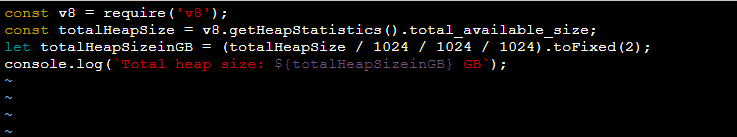

Checking Current Heap Size:

Before making any tweaks, it’s crucial to know how much heap memory your Node.js application currently has to work with. The code snippet below, saved in a file named `heapsize.js`, uses the built-in V8 module to retrieve the heap size currently available to the process:

const v8 = require('v8');

const totalHeapSize = v8.getHeapStatistics().total_available_size;

let totalHeapSizeinGB = (totalHeapSize / 1024 / 1024 / 1024).toFixed(2);

console.log(`Total heap size: ${totalHeapSizeinGB} GB`);

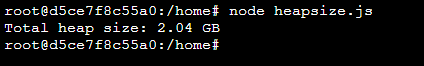

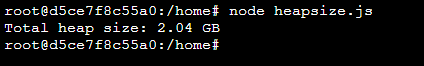

Running this script (`node heapsize.js`) will provide insights into the current heap size of your Node.js application.
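The single figure printed by `heapsize.js` is easier to interpret next to a few other fields returned by the same `v8.getHeapStatistics()` call. The following is a minimal sketch; the helper name `heapStatsInMb` is hypothetical and not part of the original script.

```javascript
const v8 = require('v8');

// Hypothetical helper (not from the original script): converts selected
// V8 heap statistics from bytes to megabytes for easier reading.
function heapStatsInMb() {
  const stats = v8.getHeapStatistics();
  const toMb = (bytes) => Number((bytes / 1024 / 1024).toFixed(2));
  return {
    // Upper bound the heap is allowed to grow to.
    heapSizeLimitMb: toMb(stats.heap_size_limit),
    // Memory currently occupied by live heap objects.
    usedHeapSizeMb: toMb(stats.used_heap_size),
    // The same field that heapsize.js reports (there in GB).
    totalAvailableSizeMb: toMb(stats.total_available_size),
  };
}

console.log(heapStatsInMb());
```

Comparing `usedHeapSizeMb` with `heapSizeLimitMb` gives a rough idea of how close the process is to its limit.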

How to run on Azure App Service?

- Navigate to the Advanced Tools Blade on the Azure portal and click on “Go” to access KUDU (SCM site).

- Click on SSH, leading you to the WebSSH session of your app service (`https://.scm.azurewebsites.net/webssh/host`).

Please consider the following when you attempt to open an SSH session:

- For Web App for Containers, you need to enable SSH from your Dockerfile. See the steps to enable SSH for custom containers.

- For blessed images (images developed by Microsoft), SSH is enabled by default; however, your app must be up and running without any issues.

- Under the Configuration blade -> General settings on Azure App Service, ensure that SSH is set to “On”.

After successfully loading your SSH session, navigate to /home and create a file named heapsize.js using the command – touch heapsize.js. Edit the file using the vi editor with the command – vi heapsize.js.

Copy the code snippet above into the file, then press Esc and save the file by entering `:wq` and pressing Enter.

Run the file using `node heapsize.js`, and you will receive the following output.

Note: `v8` ships with Node.js as a built-in module, so a module-not-found error is unusual. If you do encounter v8 module not found issues, you can attempt to resolve them by installing v8 from SSH using the command – `npm install v8`.

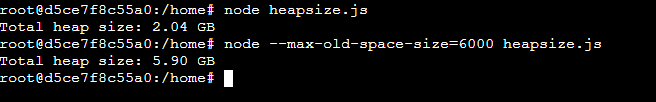

Adjusting Memory Limits:

To increase the memory limit for your Node.js application, use the `--max-old-space-size` flag when starting your script. The value following this flag denotes the maximum memory allocation in megabytes.

For instance, running the following command increases the heap size:

node --max-old-space-size=6000 heapsize.js

This modification results in an expanded total heap size, furnishing your application with more memory resources.
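To confirm from inside the application which limit was requested, the flag can be read back at startup. The sketch below is illustrative only: `findMaxOldSpaceSize` is a hypothetical helper, it only recognizes the `--max-old-space-size=<number>` form, and it assumes that flags passed on the command line should win over those supplied through the `NODE_OPTIONS` environment variable.

```javascript
// Hypothetical helper: returns the last --max-old-space-size value (in MB)
// found in a list of command-line style arguments, or null if absent.
function findMaxOldSpaceSize(args) {
  let value = null;
  for (const arg of args) {
    const match = /^--max-old-space-size=(\d+)$/.exec(arg);
    if (match) value = Number(match[1]);
  }
  return value;
}

// Flags can arrive via NODE_OPTIONS or on the command line (process.execArgv).
// Command-line flags are listed last so that they win (an assumption).
const fromEnv = (process.env.NODE_OPTIONS || '').split(/\s+/).filter(Boolean);
const configured = findMaxOldSpaceSize([...fromEnv, ...process.execArgv]);

console.log(
  configured === null
    ? 'No --max-old-space-size flag found; the runtime default applies.'
    : `--max-old-space-size is set to ${configured} MB`
);
```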

Testing on Azure app service:

In the section on how to run on Azure App Service, we learned how to check the current heap size. Now, for the same file, let’s increase the heap size and run a test.

Execute the command `node --max-old-space-size=6000 heapsize.js` in the WebSSH session. You can observe the difference with and without the argument `--max-old-space-size=6000`.

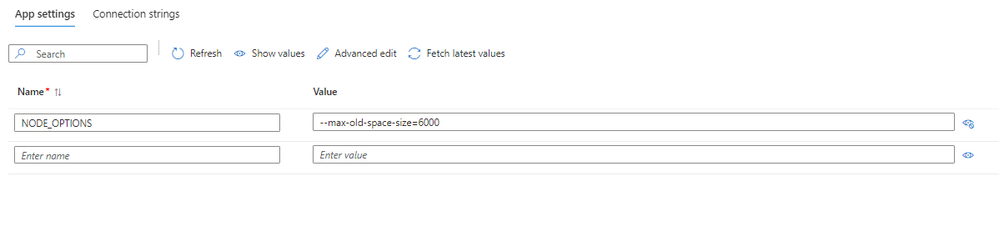

Automating Memory Adjustments with App Settings on Azure App Service:

For a more streamlined approach, consider adding the `--max-old-space-size` value directly as an app setting. This setting allows you to specify the maximum memory allocation for your Node.js application.

{

"appSettings": [

{

"name": "NODE_OPTIONS",

"value": "--max-old-space-size="

}

]

}

In the above snippet, append the desired maximum memory allocation for your application, in megabytes, after `--max-old-space-size=`.


How to choose the value to which you can increase the heap size?

Selecting an appropriate heap size value is contingent upon the constraints of the app service plan. Consider a scenario where you’re utilizing a 4-core CPU, and concurrently running three applications on the same app service plan. It’s crucial to recognize that the CPU resources are shared among these applications as well as certain system processes.

In such cases, a prudent approach involves carefully allocating the heap size to ensure optimal performance for each application while taking into account the shared CPU resources and potential competition from system processes. Balancing these factors is essential for achieving efficient utilization of the available resources within the specified app service plan limits.

For example:

Let’s consider an example where you have a 4-core CPU and a total of 32GB memory in your app service plan. We’ll allocate memory to each application while leaving some headroom for system processes.

- Total Memory Available: 32GB

- System Processes Overhead: 4GB

Reserve 4GB for the operating system and other system processes.

- Memory for Each Application: (32GB – 4GB) / 3 = 9.33GB per Application

Allocate approximately 9.33GB to each of the three applications running on the app service plan.

- Heap Size Calculation for Each Node.js Application:

Suppose you want to allocate 70% of the allocated memory to Node.js heap.

Heap Size per Application = 9.33GB * 0.7 ≈ 6.53GB

- Total Heap Size for All Applications: 3 * 6.53GB = 19.59GB

This is the combined heap size for all three Node.js applications running on the app service plan.

- Remaining Memory for Other Processes: (32GB – 19.59GB) = 12.41GB

The remaining memory can be utilized by other processes and for any additional requirements.

These calculations are approximate and can be adjusted based on the specific needs and performance characteristics of your applications. It’s essential to monitor the system’s resource usage and make adjustments accordingly.
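The arithmetic above can be sketched as a small function that returns a value suitable for `--max-old-space-size`, which expects megabytes. The function name `heapSizePerAppMb`, its parameter names, and the `app.js` entry point are hypothetical, and the 70% heap fraction is the example's assumption rather than a recommendation.

```javascript
// Hypothetical helper: estimates a per-application --max-old-space-size
// value (in MB) from the plan's memory, following the steps above.
function heapSizePerAppMb({ totalGb, systemReserveGb, appCount, heapFraction }) {
  const perAppGb = (totalGb - systemReserveGb) / appCount; // memory share per app
  const heapGb = perAppGb * heapFraction;                  // portion given to the heap
  return Math.floor(heapGb * 1024);                        // the flag expects megabytes
}

const heapMb = heapSizePerAppMb({
  totalGb: 32,         // total memory in the app service plan
  systemReserveGb: 4,  // headroom for the OS and system processes
  appCount: 3,         // applications sharing the plan
  heapFraction: 0.7,   // share of each app's memory given to the heap
});

// app.js is a placeholder entry point for illustration.
console.log(`node --max-old-space-size=${heapMb} app.js`);
```

With the example figures, this works out to roughly 6690 MB per application, which corresponds to the 6.53GB computed above.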

Validating on Azure app Service:

To validate, check the docker.log file in /home/Logfiles and look for the docker run command, as shown below. The NODE_OPTIONS app setting is appended to the docker run command.

docker run -d --expose=8080 --name xxxxxx_1_d6a4bcfd -e NODE_OPTIONS=--max-old-space-size=6000 appsvc/node:18-lts_20240207.3.tuxprod
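When the log is long, the relevant value can be picked out of the `docker run` line programmatically. This is a minimal sketch that works on a line of text rather than reading the log file. The helper `extractNodeOptions` is hypothetical, and it assumes the setting appears as `-e NODE_OPTIONS=<value>` with no spaces or quotes in the value, as in the line above.

```javascript
// Hypothetical helper: pulls the NODE_OPTIONS value out of a
// `docker run` line such as the one recorded in docker.log.
function extractNodeOptions(logLine) {
  const match = /-e\s+NODE_OPTIONS=(\S+)/.exec(logLine);
  return match ? match[1] : null; // null when the setting is not present
}

// Sample line copied from the docker run command shown above.
const sampleLine =
  'docker run -d --expose=8080 --name xxxxxx_1_d6a4bcfd ' +
  '-e NODE_OPTIONS=--max-old-space-size=6000 appsvc/node:18-lts_20240207.3.tuxprod';

console.log(extractNodeOptions(sampleLine));
```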

Conclusion:

Optimizing memory limits is pivotal for ensuring the seamless operation of Node.js applications, especially those handling significant workloads. By understanding and adjusting the memory limits, developers can enhance performance and responsiveness. Regularly monitor your application’s memory usage and adapt these settings to strike the right balance between resource utilization and performance. With these techniques, unleash the full potential of your JavaScript applications!

by Contributed | Mar 8, 2024 | Technology

This article is contributed. See the original author and article here.

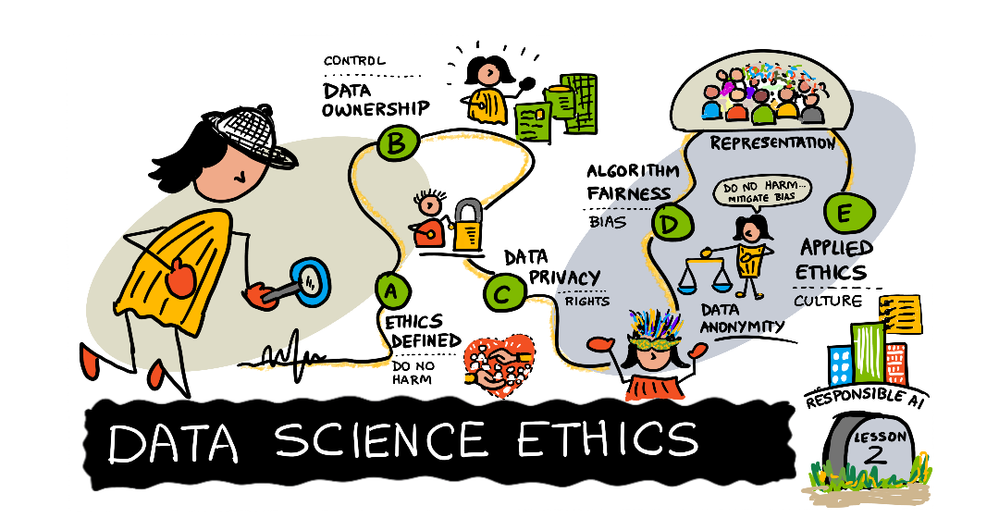

1 | What is #MarchResponsibly?

March is known for International Women’s Day – but did you know that women are one of the under-represented demographics when it comes to artificial intelligence prediction or data for machine learning? And did you know that Responsible AI is a key tool to ensure that the AI solutions of the future are built in a safe, trustworthy, and ethical manner that is representative of all demographics? As we celebrate Women’s History Month, we will take this opportunity to share technical resources, Cloud Skills Challenges, and learning opportunities to build AI systems that behave more responsibly. Let’s #MarchResponsibly together.

2 | What is Responsible AI?

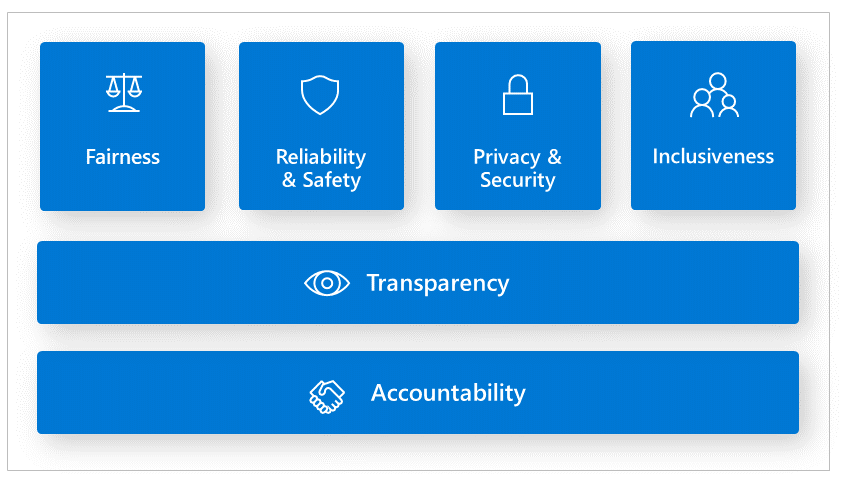

Responsible AI principles are essential principles that guide organizations and AI developers to build AI systems that are less harmful and more trustworthy.

Reference the Responsible AI Standards

Fairness issues occur when an AI system favors one group of people over another, even when they share similar characteristics. Inclusiveness is another area to examine: is the AI system intentionally or unintentionally excluding certain demographics? For Reliability and Safety, we must consider outliers and everything that could go wrong; otherwise, abnormal AI behavior can lead to negative consequences. Accountability is the notion that people who design and deploy AI systems must be accountable for how their systems operate. We recently saw an example in the news, when the U.S. Congress summoned social media tech leaders to a hearing on how their algorithms are influencing teenagers to self-harm or take their own lives. At the end of the day, who compensated the victims or their families for the loss or grief? Transparency is particularly important for AI developers who need to find out why AI models are making mistakes or not meeting regulatory requirements. Finally, security and privacy are evolving concerns. When an AI system exposes confidential information or accesses it without authorization, this is a privacy violation.

3 | Why is Responsible AI Important?

Artificial Intelligence is at the center of many conversations. Every day, we see more news headlines about the positive and negative impact of AI. As a result, governments face unprecedented pressure to regulate AI, and many are acting in response. The trend has moved from building traditional machine learning models to Large Language Models (LLMs). However, the AI issues remain the same. At the heart of everything is data. The underlying data collected is based on human behavior and the content we create, which often includes biases, stereotypes, or a lack of adequate information. In addition, data imbalance, where certain demographics are over- or under-represented, is often a blind spot that leads to bias favoring one group versus another. Lastly, there are other data risks that could have undesirable AI effects, such as using unauthorized or unreliable data. This can cause infringement and privacy lawsuits. Using data that is not credible will yield erroneous AI outcomes or bad decision-making based on AI predictions. As a business, not only is your AI system untrustworthy, but this can ruin your reputation. Other societal harms AI systems can inflict include physical or psychological injury and threats to human rights.

3 | Empowering Responsible AI Practices

Having practical responsible AI tools for organizations and AI practitioners is essential to reducing the negative impacts of AI systems. For instance, the metrics used for debugging and measuring AI performance are usually numeric values. Human-centric tools for analyzing AI models are beneficial in revealing which societal factors lead to erroneous outputs and predictions. To illustrate, the Responsible AI dashboard tools empower data scientists and AI developers to discover areas where there are issues.

Generative AI applications are another area where we often see undesirable AI outcomes. Understanding prompt engineering techniques and being able to detect offensive text or images, as well as adversarial attacks such as jailbreaks, is valuable for preventing harm.

Building and evaluating LLM applications quickly and efficiently is a challenge, and resources that help are much needed. We’ll be sharing services that organizations and AI engineers can adopt into their machine learning lifecycle to implement, evaluate, and deploy AI applications responsibly.

4 | How can we integrate Responsible AI into our processes?

Data scientists, AI developers, and organizations understand the importance of responsible AI. The challenge they face is finding the right tools to help them identify, debug, and mitigate erroneous behavior from AI models.

Researchers, organizations, the open-source community, and Microsoft have been instrumental in developing tools and services to empower AI developers. Traditional machine learning model performance metrics are based on aggregate calculations, which are not sufficient for pinpointing AI issues that are human-centric. In this #MarchResponsibly initiative you will gain knowledge on:

- Identifying and diagnosing where your AI model is producing errors.

- Exploring data distribution

- Conducting fairness assessments

- Understanding what influences or drives your model’s behavior.

- Preventing jailbreaks and data breaches

- Mitigating AI harms.

4 | How can you #MarchResponsibly?

- Join in the learning and communications – each week we will share our Responsible AI learnings!

- Share, Like or comment.

- Celebrate Women making an impact in responsible AI.

- Check out the Azure Responsible AI workshop.

- Check out the Responsible AI Study Guide to Microsoft’s FREE learning resources.

- Stay tuned to our #MarchResponsibly hashtag for more resources.

5 | Share the Message!
