by Contributed | Aug 27, 2021 | Technology

This article is contributed. See the original author and article here.

Integrated authentication provides a secure and easy way to connect to Azure SQL Database and SQL Managed Instance. It leverages hybrid identities that coexist both on traditional Active Directory on-premises and in Azure Active Directory.

At the time of writing, Azure SQL supports Azure Active Directory Integrated authentication with SQL Server Management Studio (SSMS) either by using credentials from a federated domain or via a managed domain that is configured for seamless single sign-on for pass-through and password hash authentication. For more information, see Configure Azure Active Directory authentication – Azure SQL Database & SQL Managed Instance & Azure Synapse Analytics | Microsoft Docs.

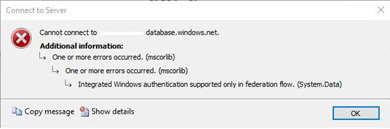

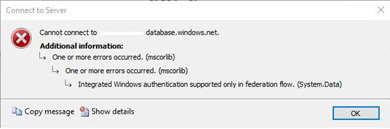

We recently worked on an interesting case where our customer was getting the error “Integrated Windows authentication supported only in federation flow” when trying to use AAD Integrated authentication with SSMS.

They had recently migrated from ADFS (Active Directory Federation Services) to SSSO for PTA (Seamless Single Sign-On for Pass-through Authentication). To troubleshoot the issue, we performed the following checks.

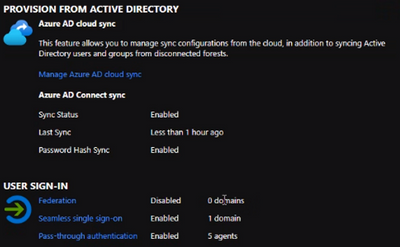

Validating setup for SSSO for PTA

- Ensure you are using the latest version of Azure AD Connect

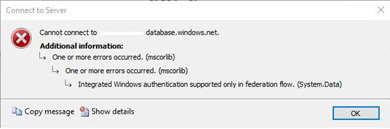

- Validate the Azure AD Connect status with the Azure portal https://aad.portal.azure.com

- Verify the below features are enabled

- Sync Status

- Seamless single sign-on

- Pass-through authentication

Testing Seamless single sign on works correctly using a web browser

Follow the steps here and navigate to https://myapps.microsoft.com. Be sure to either clear the browser cache or use a new private session in any of the supported browsers.

If you successfully signed in without providing the password, you have tested that SSSO with PTA is working correctly.

Now the question is: why is the sign-in failing with SSMS?

To find out, we captured a trace using Fiddler.

Collecting a Fiddler trace

The following article has instructions for setting up Fiddler Classic to collect a trace: Troubleshooting problems related to Azure AD authentication with Azure SQL DB and DW – Microsoft Tech Community.

- Once Fiddler is ready, I recommend that you pre-filter the capture by process so that you only capture traffic originating from SSMS. That prevents capturing traffic that is unrelated to our troubleshooting.

- Clear the current session if there are any frames that were captured before setting the filter

- Reproduce the issue

- Stop the capture and save the file

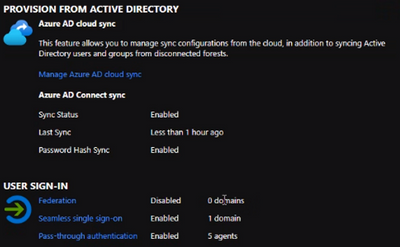

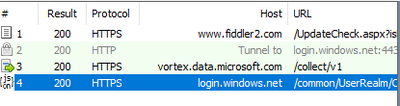

When we reviewed the trace, we saw a few interesting things:

We can only see a call to login.windows.net which is one of the endpoints that helps us use Azure Active Directory authentication.

For SSSO for PTA we would expect to see subsequent calls to https://autologon.microsoftazuread-sso.com which were not present in the trace.

This Azure AD URL should be present in the Intranet zone settings, and it is rolled out by a group policy object in the on-premises Active Directory.

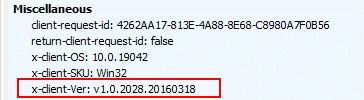

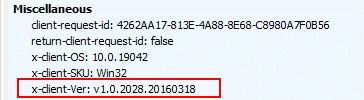

A key part of the investigation was finding that the client version is 1.0.x.x, as captured in the request headers. This indicates that the client is using the legacy Active Directory Authentication Library (ADAL).

Why is SSMS using a legacy component?

The SSMS version on the developer machine was the latest one, so we needed to understand how the application was loading this library. For that we turned to Process Monitor (thanks, Mark Russinovich).

We found that SSMS queries a key in the registry to find what DLL to use to support the Azure Active Directory Integrated authentication.

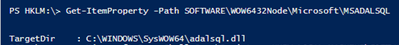

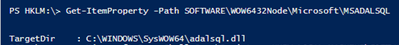

Using the PowerShell cmdlets below, we were able to find the location of the library on the filesystem:

Set-Location -Path HKLM:

Get-ItemProperty -Path SOFTWARE\WOW6432Node\Microsoft\MSADALSQL | Select-Object -Property TargetDir

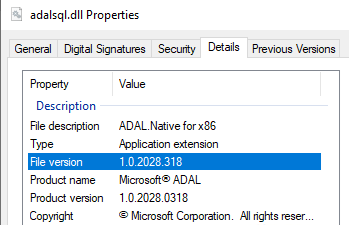

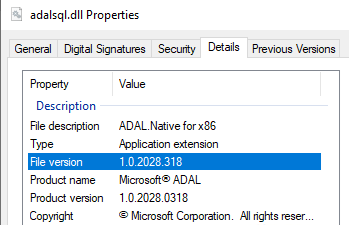

Checking the adalsql.dll details, we confirmed that this is the legacy library.

Because SSMS is a 32-bit application, it loads the DLL from the SysWOW64 location. If your application is 64-bit, check the registry key HKLM:\SOFTWARE\Microsoft\MSADALSQL instead.
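The same registry lookup can be sketched in Python as a rough cross-check of the PowerShell commands above. This is only an illustration: the function names are hypothetical, the lookup works only on Windows, and it assumes a 64-bit Python interpreter so that the two key paths are not redirected by the operating system.

```python
import sys

# Registry subkeys named in the article, both under HKEY_LOCAL_MACHINE.
KEY_32BIT_APPS = r"SOFTWARE\WOW6432Node\Microsoft\MSADALSQL"
KEY_64BIT_APPS = r"SOFTWARE\Microsoft\MSADALSQL"


def adal_registry_subkey(app_is_32bit: bool) -> str:
    """Return the subkey a 32-bit (e.g. SSMS) or 64-bit application consults."""
    return KEY_32BIT_APPS if app_is_32bit else KEY_64BIT_APPS


def read_target_dir(app_is_32bit: bool = True):
    """Read the TargetDir value, or return None if unavailable.

    Returns None on non-Windows systems or when the key/value is missing.
    """
    if sys.platform != "win32":
        return None
    import winreg  # Windows-only standard library module

    try:
        with winreg.OpenKey(
            winreg.HKEY_LOCAL_MACHINE, adal_registry_subkey(app_is_32bit)
        ) as key:
            value, _value_type = winreg.QueryValueEx(key, "TargetDir")
            return value
    except OSError:
        return None


if __name__ == "__main__":
    # A path ending in adalsql.dll would suggest the legacy library is in use.
    print(read_target_dir(app_is_32bit=True))
```

Comparing the returned path with the DLL that a clean SSMS install registers is a quick way to spot a machine where the key has been pointed back at the legacy client.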

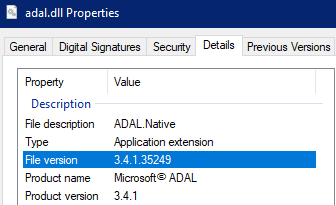

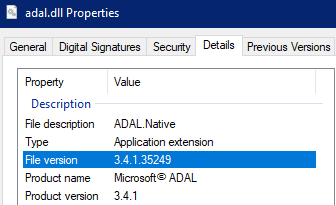

A clean install of the most recent version of SSMS installs a different DLL containing the most up-to-date library.

In this case, the registry location on the developer machine had been modified and was pointing to the legacy client (adalsql.dll). Because the newer DLL (adal.dll) was already installed on the system, the end user simply changed the registry value to point to adal.dll.

It is important to be aware of this situation. Installing older versions of software like SSMS, SSDT (SQL Server Data Tools), Visual Studio etc. may end up modifying the registry key and pointing to the legacy ADAL client.

Cheers!

by Contributed | Aug 26, 2021 | Technology


In May 2021, the Biden Administration signed Executive Order (EO) 14028, placing cloud security at the forefront of national security. Federal agencies can tap into Microsoft’s comprehensive cloud security strategy to navigate the EO requirements with ease. The integration between Azure Security Center and Azure Sentinel allows agencies to leverage an existing, cohesive architecture of security products rather than attempting to blend various offerings. Our security products, which operate at cloud-speed, provide the needed visibility into cloud security posture while also offering remediation from the same pane of glass. Built-in automation reduces the burden on security professionals and encourages consistent, real-time responses to alerts or incidents.

The Azure Security suite helps federal agencies and partners improve their cloud security posture and stay compliant with the recent EO. While there are many areas Azure Security can support, this blog will focus on how Azure Security Center and Azure Sentinel can empower federal agencies to address the following EO goals:

Microsoft applies its industry-leading practices to Azure Security products, generating meaningful insights about security posture that simplify the process of protecting federal agencies and result in cost and time savings.

Azure Security Center (ASC) is a unified infrastructure security management system that strengthens the security posture of your data centers. Azure Defender, part of Azure Security Center, provides advanced threat protection across your hybrid workloads in the cloud – whether they’re in Azure or not – as well as on-premises.

Azure Sentinel, our cloud-native security information and event management (SIEM) and security orchestration, automation, and response (SOAR) solution, is deeply integrated with Azure Security Center.

Note: For more information on products and features available in Azure Government, please refer to: Azure service cloud feature availability for US government customers | Microsoft Docs

Modernize and Implement Stronger Cybersecurity Standards in the Federal Government

Section three of the EO emphasizes the push toward cloud adoption and the need for proper cloud security. It highlights the necessity of a federal cloud security strategy, governance framework, and reference architecture to drive cloud adoption. There are significant security benefits when using the cloud over traditional on-premises data centers by centralizing data and providing continuous monitoring and analytics.

Azure Sentinel contains workbooks, visual representations of data, that help federal agencies gain insight into their security posture. Section three of the EO mandates Zero Trust planning as a requirement, which can be daunting to implement. The Zero Trust (TIC3.0) Workbook provides a visualization of Zero Trust principles mapped to the Trusted Internet Connections (TIC) framework. After aligning TIC 3.0 Security Capabilities to Zero Trust Principles and Pillars, this workbook shares easy-to-implement recommendations, log sources, automations, and more to empower federal agencies looking to build Zero Trust into cloud readiness. Read more about the Zero Trust (TIC3.0) Workbook.

For federal agencies beginning their digital transformations, ASC provides robust features out of the box to secure your environment and accelerate secure cloud adoption by leveraging existing best practices and guardrails.

ASC continuously scans your hybrid cloud environment and provides recommendations to help you harden your attack surface against threats. Azure Security Benchmark (ASB) is the baseline and driver for these recommendations. ASB is a Microsoft-authored, Azure-specific set of guidelines for security and compliance best practices based on common compliance frameworks. Azure Security Benchmark builds on the controls from the Center for Internet Security (CIS) and the National Institute of Standards and Technology (NIST) with a focus on cloud-centric security. ASB empowers teams to leverage the dynamic nature of the cloud and continuously deploy new resources by providing the needed visibility into the posture of these resources as well as easy-to-follow steps for remediation. With more than 150 built-in recommendations, ASB evaluates Azure resources across 11 controls, including network security, data protection, logging and threat detection, incident response, governance and strategy, and more.

Government agencies have complex compliance requirements that can be streamlined through Azure Security Benchmark. ASB provides federal agencies with a strong baseline to assess the health of their Azure resources. Teams can complement this visibility by including additional regulatory compliance standards or their own custom policy. Azure Security Center’s regulatory compliance dashboard provides insights into compliance posture against compliance requirements, including NIST SP 800-53, SWIFT CSP CSCF-v2020, Azure CIS 1.3.0, and more.

We recently released Regulatory Compliance in Workflow Automation, where changes in regulatory compliance standards can trigger real-time responses, such as notifying relevant stakeholders, launching a change management process, or applying specific remediation steps. Building in automation allows organizations to improve security posture by ensuring the proper steps are completed consistently and automatically, according to predefined requirements. Automation also reduces the burden on your security teams by streamlining repeatable tasks. Read more about how to build in automation for regulatory compliance.

With visibility and remediation all from the same dashboard, ASB and other out-of-the-box regulatory compliance initiatives empower security teams to get immediate, actionable insights into their security posture. Leveraging Microsoft best practices, built with Azure in mind, federal agencies can tap into the security of the cloud without committing resources to build new frameworks.

Using Azure Security Center’s regulatory compliance feature and workbooks in Azure Sentinel, federal agencies can tap into Microsoft best practices and existing frameworks, regardless of where they may be in their cloud journeys, to get and stay secure. These products not only provide heightened visibility into cloud security posture, but also provide steps for remediation to harden your attack surface and prevent attacks. These tools harness the power of automation, AI/ML, and more to reduce the burden on your security teams and allow them to focus on what matters.

Improve Detection of Cybersecurity Incidents on Federal Government Networks

The objective of section seven of the EO is to promote cross-government collaboration and information sharing by enabling a government-wide endpoint detection and response (EDR) system.

Integrating Azure Security Center and Azure Sentinel provides federal agencies with increased visibility to proactively identify threats and build in automated responses. Through Azure Sentinel, agencies can ensure they have the appropriate tools, whether that be automated responses or access to logs, to contain and remediate threats.

In addition to providing cloud security posture management, Azure Security Center has a cloud workload protection platform, commonly referred to as Azure Defender. Azure Defender provides advanced, intelligent protection for a variety of resource types, including servers, Kubernetes, container registries, SQL database servers, storage, and more. Read more about resource types covered by Azure Defender.

When Azure Defender detects an attempt to compromise your environment, it generates a security alert. Security alerts contain details of the affected resource, suggested remediation steps, and refer to recommendations to help harden your attack surface to protect against similar alerts in the future. In some scenarios, logic apps can also be triggered. Like automated responses to deviations in regulatory compliance standards, logic apps allow for consistent responses to Azure Security Center alerts.

Azure Defender not only has a breadth of coverage across many resource types, but also depth in coverage by resource type. Given the increase in frequency and complexity of attacks, organizations require dynamic threat detections. Azure Defender benefits from security research and data science teams at Microsoft who are continuously monitoring the threat landscape, leading to the constant tuning of detections as well as the inclusion of additional detections for greater coverage. Azure Defender incorporates integrated threat intelligence, behavioral analytics, and anomaly detection to identify threats across your environment.

Azure Sentinel is a central location to collect data at scale – across users, devices, applications, and infrastructure – and to conduct investigation and response.

There are two ways that Azure Sentinel can ingest data: data connectors and continuous export.

Azure Sentinel comes with built-in connectors for many Microsoft products, allowing for out-of-the-box, real-time integration. The Azure Defender connector facilitates the streaming of Azure Defender security alerts into Azure Sentinel, where you can view, analyze, and respond to alerts in a broader organizational threat context.

In addition to bringing Azure Defender alerts, organizations can stream alerts from other Microsoft products, including Microsoft 365 sources such as Office 365, Azure Active Directory, Microsoft Defender for Identity, or Microsoft Cloud App Security.

Continuous export in Azure Security Center allows for the streaming of not only Azure Defender alerts but also secure score and regulatory compliance insights.

After connecting data sources to Azure Sentinel, out-of-the-box, built-in templates guide the creation of threat detection rules. Our team of security experts created rule templates based on known threats, common attack vectors, and suspicious activity escalation chains. Creating rules based on these templates will continuously scan your environment for suspicious activity and create incidents when alerts are generated. You can couple built-in fusion technology, machine learning behavioral analytics, anomaly rules, or scheduled analytics rules with your own custom rules to ensure Azure Sentinel is scanning your environment for relevant threats.

Automation rules in Azure Sentinel help triage incidents. These rules can automatically assign incidents to the right team, close noisy incidents or known false positives, change alert severity, or add tags.

Automation rules are also used to run playbooks in response to incidents. Playbooks, which are based on workflows built in Azure Logic Apps, are a collection of processes that are run in response to an alert or incident. This feature allows for predefined, consistent, and automated responses to Azure Sentinel activity, reducing the burden on your security team and allowing for close to real-time responses to alerts or incidents.

Due to the integrated nature of our threat protection suite, completing investigation and remediation of an Azure Defender alert in Azure Sentinel will still update the alert's status in the Azure Security Center portal. For example, when an alert is closed in Azure Sentinel, that alert will display as closed in Azure Security Center as well (and vice versa)!

At Microsoft, we are excited about the opportunity to expand our partnerships with federal agencies as we work to improve cloud security, and in doing so, improve national security.

For more information, please visit our Cyber EO resource center.

by Contributed | Aug 24, 2021 | Technology


This is the first in a series of articles that explore how to integrate artificial intelligence into a video processing infrastructure using off-the-shelf cameras and the Intel OpenVINO Model Server running at the edge. In the sections below we will cover some background, the hardware and software prerequisites for implementation, and the steps to set up a production-ready, AI-enabled Network Video Recorder that has the best of both worlds – Microsoft and Intel.

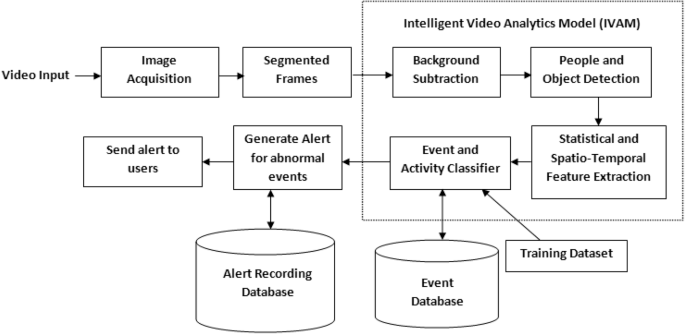

What is a video analytics platform

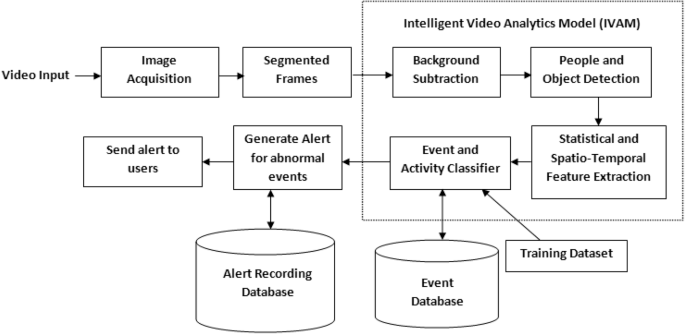

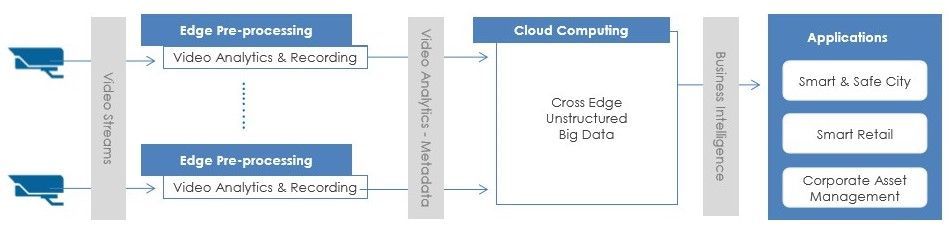

In the last few years, video analytics, also known as video content analysis or intelligent video analytics, has attracted increasing interest from both industry and the academic world. Video Analytics products add artificial intelligence to cameras by analyzing video content in real-time, extracting metadata, sending out alerts and providing actionable intelligence to security personnel or other systems. Video Analytics can be embedded at the edge (even in-camera), in servers on-premise, and/or on-cloud. They extract only temporal and spatial events in a scene, filtering out noise such as lighting changes, weather, trees and animal movements. Here is a logical flow of how it works.

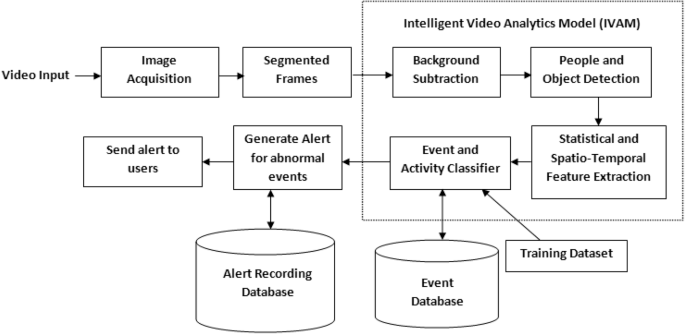

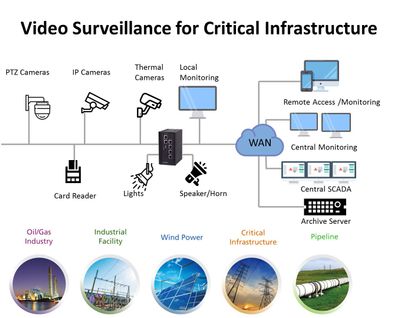

Let’s face it: on-premises and legacy video surveillance infrastructure are still in the dark ages. Physical servers often have limited virtualization integration and support, as well as racks upon racks of servers that clog up performance regardless of whether the data center is using NVR, direct-attached storage, storage area network or hyper-converged infrastructure. It’s been that way for the last 10, if not 20, years. Buying and housing an NVR for five or six cameras is expensive and time-consuming from a management and maintenance point of view. With great improvements in connectivity, compression and data transfer methods, a cloud-native solution becomes an excellent option. Here are some of the popular use cases in this field and a diagram of a sample deployment for critical infrastructure.

- Motion Detection

- Intrusion Detection

- Line Crossing

- Object Abandoned

- License Plate Recognition

- Vehicle Detection

- Asset Management

- Face Detection

- Baby Monitoring

- Object Counting


Common approaches for proposals to clients involve either a new installation (greenfield project) or a lift and shift scenario (brownfield project). Video intelligence is one industry where it becomes important to follow a bluefield approach, a combination of brownfield and greenfield in which some streams of information are already in motion and some will be new instances of technology. The reason is that existing hardware and software installations are very expensive, and although clients are open to new ideas, they want to keep what is already working. This article is about setting up the new technology so that it can accept pipelines for inference and event generation on live video for the above use cases in the future.

The rise of AI NVRs

Video surveillance, in the form of closed-circuit television (CCTV), was invented in 1942 by German engineer Walter Bruch so that he and others could observe the launch of V2 rockets on a private system. While its purpose has not drastically changed in the past 75 years, the system itself has undergone radical changes. Since its development, users’ expectations have evolved exponentially, necessitating the development of faster, better, and more cost-effective technology.

Initially, they could only watch through live streams as they happened— recordings would become available much later (VCR). Until the recent past these were analog devices using analog cameras and a Digital Video Recorder (DVR). Not unlike your everyday television box which used to run off these DVRs in every home! Recently, these have started getting replaced with Power Over Ethernet (PoE) enabled counterparts, running off Network Video Recorders (NVR). Here is a quick visual showing the difference between DVR and NVR.

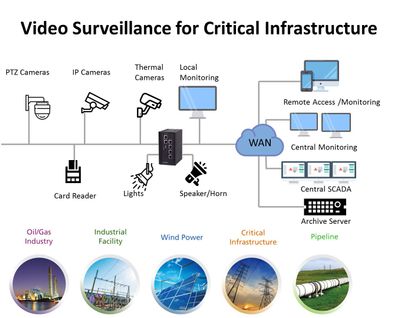

AI NVR Video Analytics System is a plug-and-play turnkey solution, including video search for object detection, high-accuracy intrusion detection, face search and face recognition, license plate and vehicle recognition, people/vehicle counting, and abnormal-activity detection. All functions support live stream and batch mode processing, real-time alerts and GDPR-friendly privacy protection when desired. AI NVR overcomes the challenges of many complex environments and is fully integrated with AI video analytics features for various markets, including perimeter protection of businesses, access controls for campuses and airports, traffic management by law enforcement, and business intelligence for shopping centers. Here is a logical flow of an AI NVR from video capture to data-driven applications.

In this article we are going to see how to create such an AI NVR at the edge using Azure Video Analyzer (AVA) and Intel products.

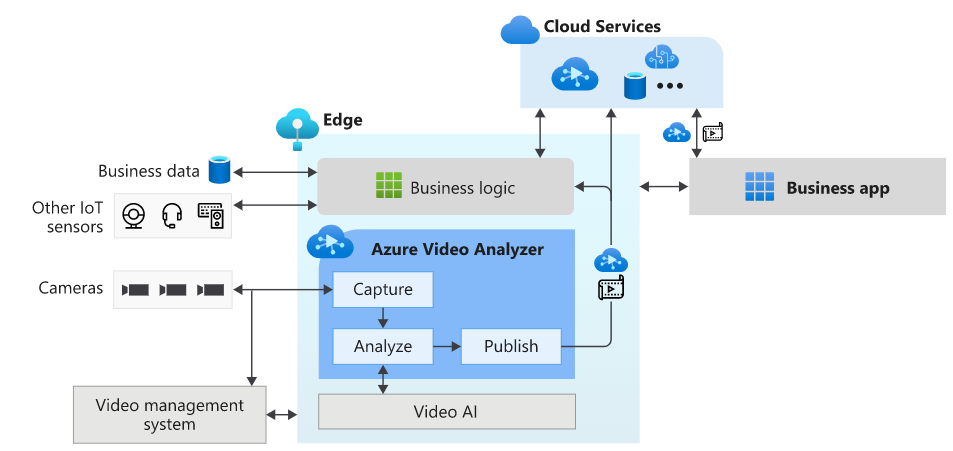

Azure Video Analyzer – a one-stop solution from Microsoft

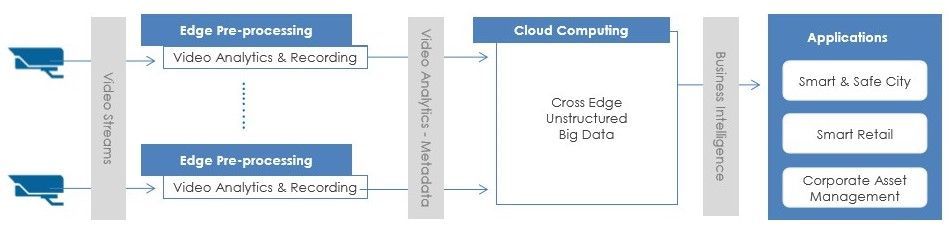

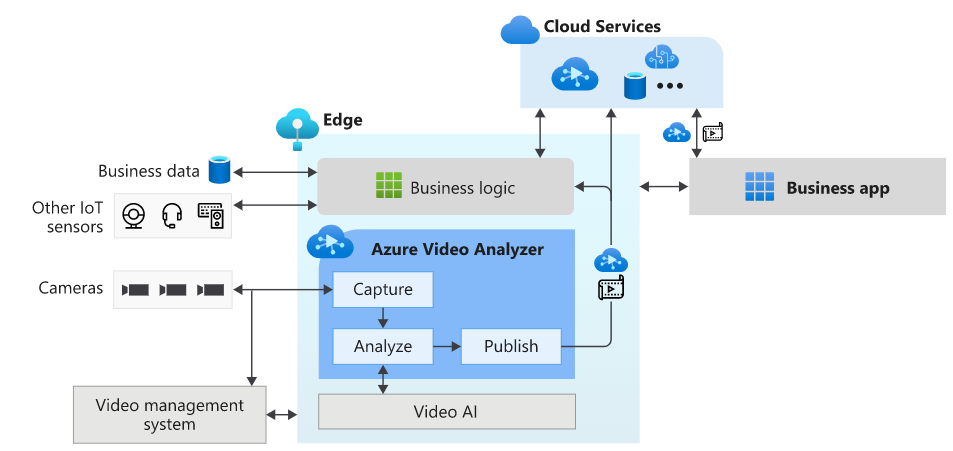

Azure Video Analyzer (AVA) is a brand new service to build intelligent video applications that span the edge and the cloud. It offers the capability to capture, record, and analyze live video along with publishing the results – video and/or video analytics. Video can be published to the edge or the Video Analyzer cloud service, while video analytics can be published to Azure services (in the cloud and/or the edge). With Video Analyzer, you can continue to use your existing video management systems (VMS) and build video analytics apps independently. AVA can be used in conjunction with computer vision SDKs and toolkits to build cutting edge IoT solutions. The diagram below illustrates this.

This is the most essential component of creating the AI NVR. As you may have guessed, in this article we are going to deploy an AVA module on IoT Edge to coordinate between the model server and the video feeds through an HTTP extension. You can ‘bring your own model’ and call it through either an HTTP or a gRPC endpoint.

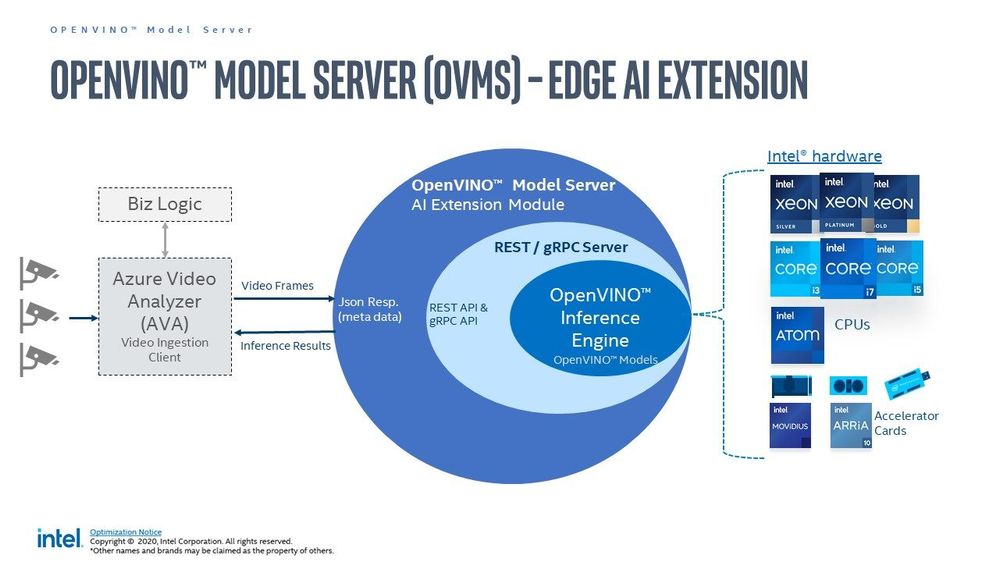

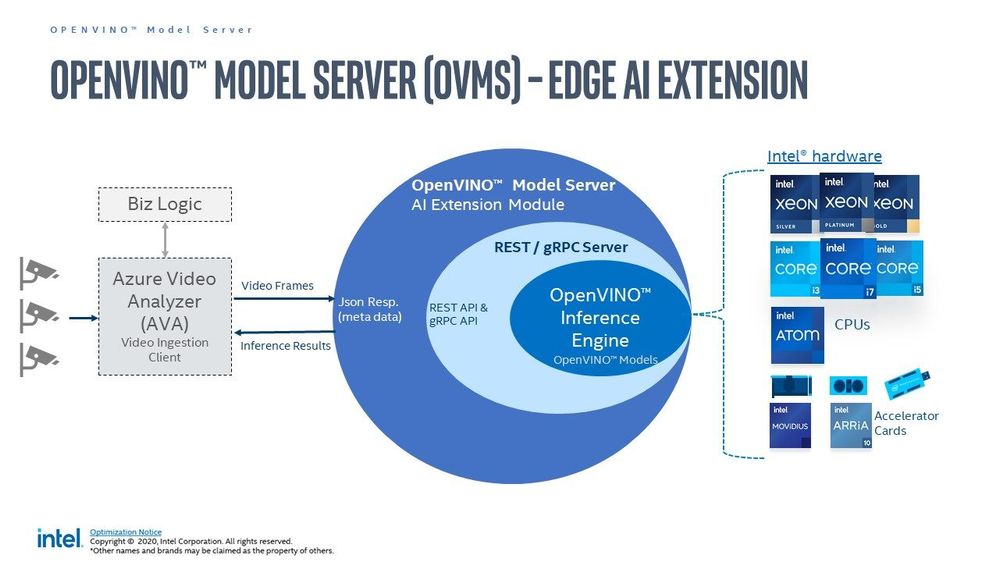

Intel OpenVINO toolkit

OpenVINO (Open Visual Inference and Neural network Optimization) is a toolkit provided by Intel to facilitate faster inference of deep learning models. It helps developers to create cost-effective and robust computer vision applications. It enables deep learning inference at the edge and supports heterogeneous execution across computer vision accelerators — CPU, GPU, Intel® Movidius™ Neural Compute Stick, and FPGA. It supports a large number of deep learning models out of the box.

OpenVINO uses its own Intermediate Representation (IR) format, similar to ONNX, and works with all your favourite deep learning tools such as TensorFlow and PyTorch. You can either convert your trained model to the OpenVINO format or use and optimize the pretrained models available in the Intel model zoo. In this article we specifically use the OpenVINO Model Server (OVMS), available through this Azure Marketplace module. Out of the many models in the catalogue, I am only using those that count faces, vehicles, and people. These are identified by their call signs: personDetection, faceDetection, and vehicleDetection.

Prerequisites

There are some hardware and software prerequisites for creating this platform.

- ONVIF PoE camera able to send encoded RTSP streams (link)(link)

- Intel edge device with Ubuntu 18/20 (link)

- Active Azure subscription

- Development machine with VSCode & IoT Extension

- Working knowledge of Computer Vision & Model Serving

ONVIF PoE camera

ONVIF (the Open Network Video Interface Forum) is a global and open industry forum with the goal of facilitating the development and use of a global open standard for the interface of physical IP-based security products. ONVIF creates a standard for how IP products within video surveillance and other physical security areas can communicate with each other. This is different from proprietary equipment, and you can use open source libraries with ONVIF devices. A decent quality camera like the Reolink 410 is enough. Technically you can use a wireless camera, but I would not recommend that in a professional setting.

Intel edge device with Ubuntu

This can be any device with one or more Intel CPUs. An Intel NUC makes a great low-cost IoT edge device, and even the cheap ones can handle around 10 cameras running at 30 fps. I am using a base model with a Celeron processor priced at around $130. The camera(s), device, and some cables are all you need to implement this. Optionally, like me, you may need a PoE switch or network extender to get connected. Check that the PoE supply provides at least 5 W, and that the bandwidth is at least 20 Mbps, per camera. You also need to install Ubuntu Linux.
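As a quick sizing aid, the per-camera minimums above (5 W of PoE power and 20 Mbps of bandwidth) can be multiplied out for a given number of cameras. The sketch below is illustrative only: the function names and the example switch figures are assumptions, not values from a specific product.

```python
# Per-camera minimums quoted in the text above.
MIN_POE_WATTS_PER_CAMERA = 5
MIN_MBPS_PER_CAMERA = 20


def camera_budget(camera_count: int) -> dict:
    """Return the minimum total PoE power and bandwidth for the cameras."""
    if camera_count < 0:
        raise ValueError("camera_count must be non-negative")
    return {
        "poe_watts": camera_count * MIN_POE_WATTS_PER_CAMERA,
        "bandwidth_mbps": camera_count * MIN_MBPS_PER_CAMERA,
    }


def switch_is_sufficient(camera_count: int, poe_budget_watts: float,
                         uplink_mbps: float) -> bool:
    """Check a (hypothetical) PoE switch against the minimum budget."""
    need = camera_budget(camera_count)
    return (poe_budget_watts >= need["poe_watts"]
            and uplink_mbps >= need["bandwidth_mbps"])


if __name__ == "__main__":
    # Ten cameras need at least 50 W of PoE power and 200 Mbps of bandwidth.
    print(camera_budget(10))
    # Example figures for an imaginary switch: 60 W PoE budget, 1 Gbps uplink.
    print(switch_is_sufficient(10, poe_budget_watts=60, uplink_mbps=1000))
```

These are lower bounds; real cameras may draw more power or stream at higher bitrates, so check the data sheets of your own hardware.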

Active Azure subscription

You will certainly need this one, but as we know Azure has an immense suite of products, and while ideally we would want to have everything, that may not be practically feasible. In practice you might have to ask for access to particular services, meaning you have to know ahead of time exactly which ones you want to use. We will need the following:

- Azure IoT Hub (link)

- Azure Container Registry (link)

- Azure Media Services (link)

- Azure Video Analyzer (link)

- Azure Stream Analytics (link)(future article)

- Power BI / React App (link)(future article)

- Azure Linux VM (link)(optional)

Computer Vision & Model Serving

Generally this prerequisite takes a lot of engineering and is expensive. Thankfully the OVMS extension from Intel is capable of serving high quality models from their zoo; without it you would have to build your own Flask or socket server, and it wouldn’t be half as good. Whatever models you need, you can mention their call signs and they will be served for you at the edge by the extension. We will see more about this in the next article once things are set up. Note: we are building the platform in such a way that you can use Azure Custom Vision or Azure Machine Learning models on this same setup in the future with minimal changes.

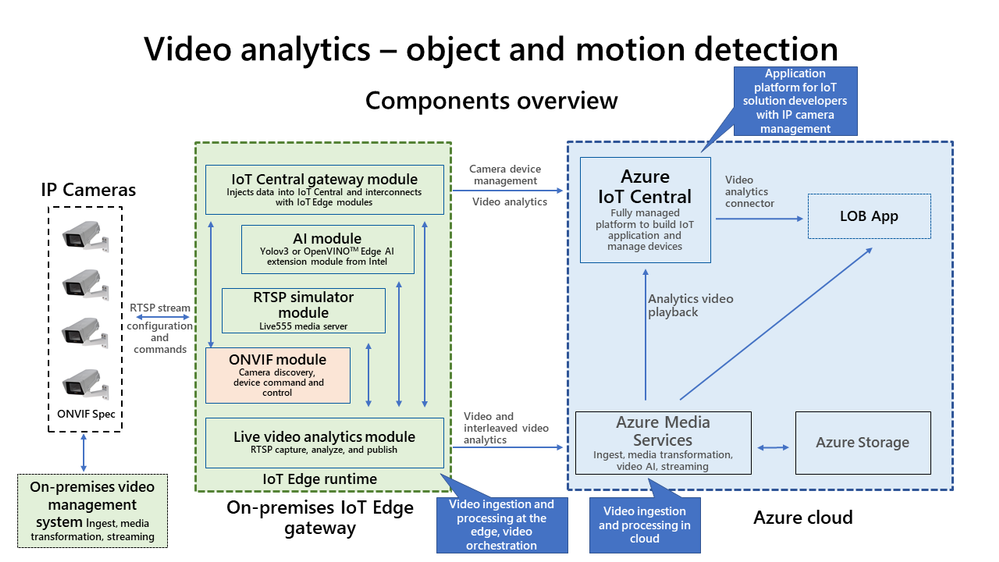

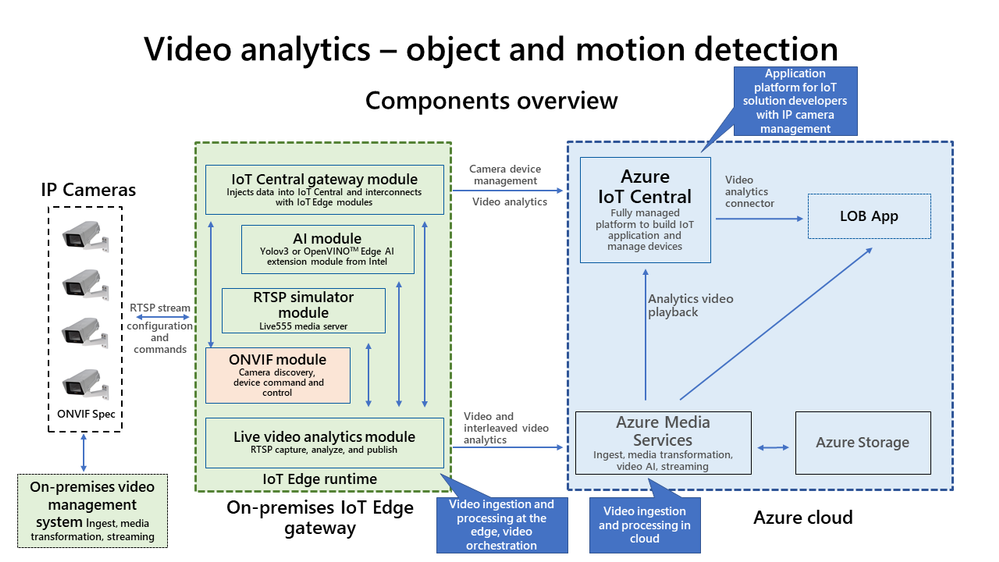

Reference Architecture

We are definitely living in interesting times when something as complex as video analytics is almost an OOTB feature! Below is a ready-to-deploy architecture recommended and maintained by Microsoft Azure for video analytics. Technically if you know what you are doing you can deploy this entire thing with the push of a few buttons. However, I found it to have a bit too much for a Minimum Viable Product (MVP) as it is ‘viable’ but not ‘minimum’ so to say.

Here I present an alternate architecture that we followed and implemented, and with which we got results comparable to the one above. This is a stripped-down version of the official architecture; it contains only the necessary components of an MVP for an AI NVR and is much easier to dissect.

Notice it looks somewhat similar to the logical flow of an AI NVR shown in one of the prior sections.

Inbound feed to the AI NVR

Before we go into the implementation I wanted to mention some aspects about the inputs and outputs of this system.

- Earlier we said the system needs RTSP input, even though there are other streaming protocols such as RTMP and HTTP. We chose RTSP mostly because it is optimized for viewing experience and scalability.

- For development purposes it is recommended to use the excellent RTSP Simulator provided by Microsoft.

- To display the video being processed use any of the following players.

- You can technically use a USB webcam and create your own RTSP stream. However, underneath it uses GStreamer, RTSPServer, and pipelines. From my experience you should be careful using this method, especially since you will need an understanding of hardware/software media encoding (e.g. H.264) and GStreamer dockerization.

- One very interesting option that I used as a video source was the RTSP Camera Server app. This will instantly turn your smartphone camera into an RTSP feed that your AI NVR can consume!

- Last but not least, make sure that your incoming feed has the resolution that your CV algorithms need. The trick is not to use cameras with too high a resolution: 4 to 5 MP is fine for maintaining pixel distribution parity with the available pretrained models.
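Whichever source you pick, you end up with an RTSP URL plus a username and password. A minimal sketch of assembling such a URL is shown below; the helper name, host, and stream path are hypothetical, and the exact path segment depends on your camera vendor. Port 554 is the standard RTSP port. Credentials are percent-encoded so that characters such as `@` or `/` in a password do not break the URL.

```python
from urllib.parse import quote


def build_rtsp_url(host: str, username: str, password: str,
                   path: str = "", port: int = 554) -> str:
    """Assemble an RTSP URL with percent-encoded credentials.

    The stream path is camera specific; consult the vendor documentation.
    """
    user = quote(username, safe="")
    secret = quote(password, safe="")
    return f"rtsp://{user}:{secret}@{host}:{port}/{path.lstrip('/')}"


if __name__ == "__main__":
    # Hypothetical camera on a local static IP with an example stream path.
    print(build_rtsp_url("192.168.1.50", "admin", "p@ss/word", "live/stream1"))
```

Avoid logging the assembled URL in production, since it contains the camera password.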

Outbound events from the AI NVR

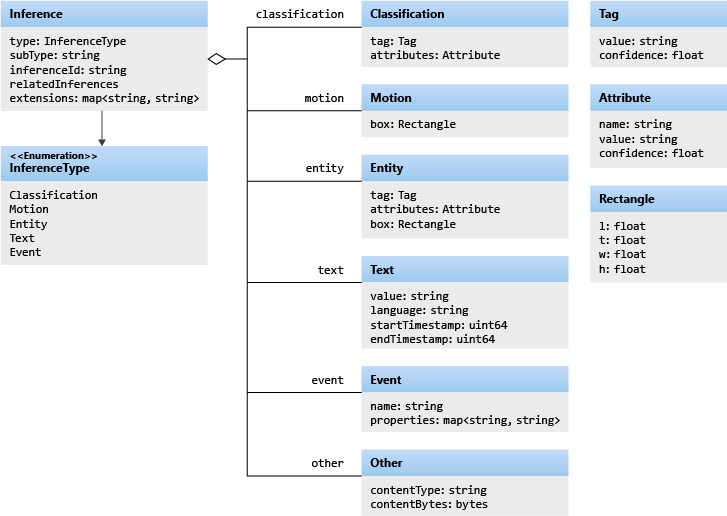

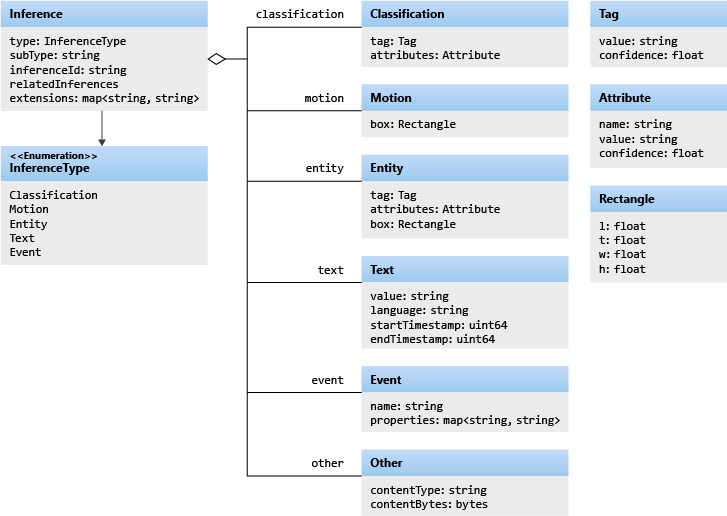

In Azure Video Analyzer, each inference object follows the object model described below, regardless of whether the HTTP-based or the gRPC-based contract is used.

The example below contains a single Inference event with vehicleDetection. We will see more of these in a future article.

    {
      "timestamp": 145819820073974,
      "inferences": [
        {
          "type": "entity",
          "subtype": "vehicleDetection",
          "entity": {
            "tag": {
              "value": "vehicle",
              "confidence": 0.9147264
            },
            "box": {
              "l": 0.6853116,
              "t": 0.5035262,
              "w": 0.04322505,
              "h": 0.03426218
            }
          }
        }
      ]
    }
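To make the object model more concrete, here is a minimal Python sketch that reads an inference event like the one above and converts each bounding box to pixel coordinates. It assumes that `l`, `t`, `w`, and `h` are fractions of the frame width and height (left, top, width, height); the function names, the confidence threshold, and the 1920x1080 frame size are illustrative assumptions.

```python
import json

SAMPLE_EVENT = json.loads("""
{
  "timestamp": 145819820073974,
  "inferences": [
    {
      "type": "entity",
      "subtype": "vehicleDetection",
      "entity": {
        "tag": {"value": "vehicle", "confidence": 0.9147264},
        "box": {"l": 0.6853116, "t": 0.5035262,
                "w": 0.04322505, "h": 0.03426218}
      }
    }
  ]
}
""")


def box_to_pixels(box: dict, frame_width: int, frame_height: int) -> tuple:
    """Convert a normalized box to (left, top, width, height) in pixels."""
    return (
        round(box["l"] * frame_width),
        round(box["t"] * frame_height),
        round(box["w"] * frame_width),
        round(box["h"] * frame_height),
    )


def confident_entities(event: dict, min_confidence: float = 0.5) -> list:
    """Return (subtype, label, confidence, box) for confident entity inferences."""
    results = []
    for inference in event.get("inferences", []):
        if inference.get("type") != "entity":
            continue  # skip other inference types
        entity = inference["entity"]
        tag = entity["tag"]
        if tag["confidence"] >= min_confidence:
            results.append((inference.get("subtype"), tag["value"],
                            tag["confidence"], entity["box"]))
    return results


if __name__ == "__main__":
    for subtype, label, confidence, box in confident_entities(SAMPLE_EVENT):
        print(subtype, label, confidence, box_to_pixels(box, 1920, 1080))
```

Counting the entries returned per frame is one simple way to get the vehicle, face, or person counts mentioned earlier.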

Apart from the inference events there are many other types of events, such as the MediaSessionEstablished event, which occurs when you are recording the media with either a File Sink or a Video Sink.

[IoTHubMonitor] [9:42:18 AM] Message received from [avasampleiot-edge-device/avaedge]:

    {
      "body": {
        "sdp": "SDP:\nv=0\r\no=- 1586450538111534 1 IN IP4 XXX.XX.XX.XX\r\ns=Matroska video+audio+(optional)subtitles, streamed by the LIVE555 Media Server\r\ni=media/camera-300s.mkv\r\nt=0 0\r\na=tool:LIVE555 Streaming Media v2020.03.06\r\na=type:broadcast\r\na=control:*\r\na=range:npt=0-300.000\r\na=x-qt-text-nam:Matroska video+audio+(optional)subtitles, streamed by the LIVE555 Media Server\r\na=x-qt-text-inf:media/camera-300s.mkv\r\nm=video 0 RTP/AVP 96\r\nc=IN IP4 0.0.0.0\r\nb=AS:500\r\na=rtpmap:96 H264/90000\r\na=fmtp:96 packetization-mode=1;profile-level-id=4D0029;sprop-parameter-sets=XXXXXXXXXXXXXXXXXXXXXX\r\na=control:track1\r\n"
      },
      "applicationProperties": {
        "dataVersion": "1.0",
        "topic": "/subscriptions/{subscriptionID}/resourceGroups/{name}/providers/microsoft.media/videoanalyzers/{ava-account-name}",
        "subject": "/edgeModules/avaedge/livePipelines/Sample-Pipeline-1/sources/rtspSource",
        "eventType": "Microsoft.VideoAnalyzers.Diagnostics.MediaSessionEstablished",
        "eventTime": "2021-04-09T09:42:18.1280000Z"
      }
    }
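Messages like this one carry their routing information in `applicationProperties`. The following sketch pulls out the short event name and the live pipeline name from such a message; the function name is hypothetical, and the parsing relies only on the field layout visible in the sample above, so treat it as an assumption rather than a documented contract.

```python
def summarize_event(message: dict) -> dict:
    """Extract the short event name, pipeline name, and time from a message."""
    props = message.get("applicationProperties", {})
    event_type = props.get("eventType", "")
    subject = props.get("subject", "")

    # The subject looks like:
    # /edgeModules/<module>/livePipelines/<pipeline>/sources/<node>
    parts = [part for part in subject.split("/") if part]
    pipeline = None
    if "livePipelines" in parts:
        index = parts.index("livePipelines")
        if index + 1 < len(parts):
            pipeline = parts[index + 1]

    return {
        "event": event_type.rsplit(".", 1)[-1],  # last dotted segment
        "pipeline": pipeline,
        "time": props.get("eventTime"),
    }


if __name__ == "__main__":
    sample = {
        "body": {"sdp": "..."},  # SDP payload omitted for brevity
        "applicationProperties": {
            "subject": "/edgeModules/avaedge/livePipelines/"
                       "Sample-Pipeline-1/sources/rtspSource",
            "eventType": "Microsoft.VideoAnalyzers.Diagnostics."
                         "MediaSessionEstablished",
            "eventTime": "2021-04-09T09:42:18.1280000Z",
        },
    }
    print(summarize_event(sample))
```

A summary like this makes it easy to separate diagnostic events from inference events before passing them on to downstream consumers.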

The above points are mentioned to show what some of the expected outputs look like. With that covered, let's see how exactly you can create a foundation for your AI NVR.

Implementation

In this section we will see how we can use these tools to our benefit. For the Azure resources I may not go through the entire creation or installation process as there are quite a few articles on the internet for doing those. I shall only mention the main things to look out for. Here is an outline of the steps involved in the implementation.

- Create a resource group in Azure (link)

- Create a IoT hub in Azure (link)

- Create a IoT Edge device in Azure (link)

- Create and name a new user-assigned managed identity (link)

- Create Azure Video Analyzer Account (link)

- Create AVA Edge provisioning token

- Install Ubuntu 18/20 on the edge device

- Prepare the device for AVA module (link)

- Use Dev machine to turn on ONVIF camera(s) RTSP (link)

- Set a local static IP for the camera(s) (link)

- Use any of the players to confirm input streaming video (link)

- Note down RTSP url(s), username(s), and password(s)

- Install docker on the edge device

- Install VSCode on development machine

- Install IoT Edge runtime on the edge device (link)

- Provision the device to Azure IoT using connection string (link)

- Check IoT edge Runtime is running good on the edge device and portal

- Create an IoT Edge solution in VSCode (link)

- Add env file to solution with AVA/ACR/Azure details

- Add Intel OVMS, AVA Edge, and RTSP Simulator modules to manifest

- Create deployment from template (link)

- Deploy the solution to the device

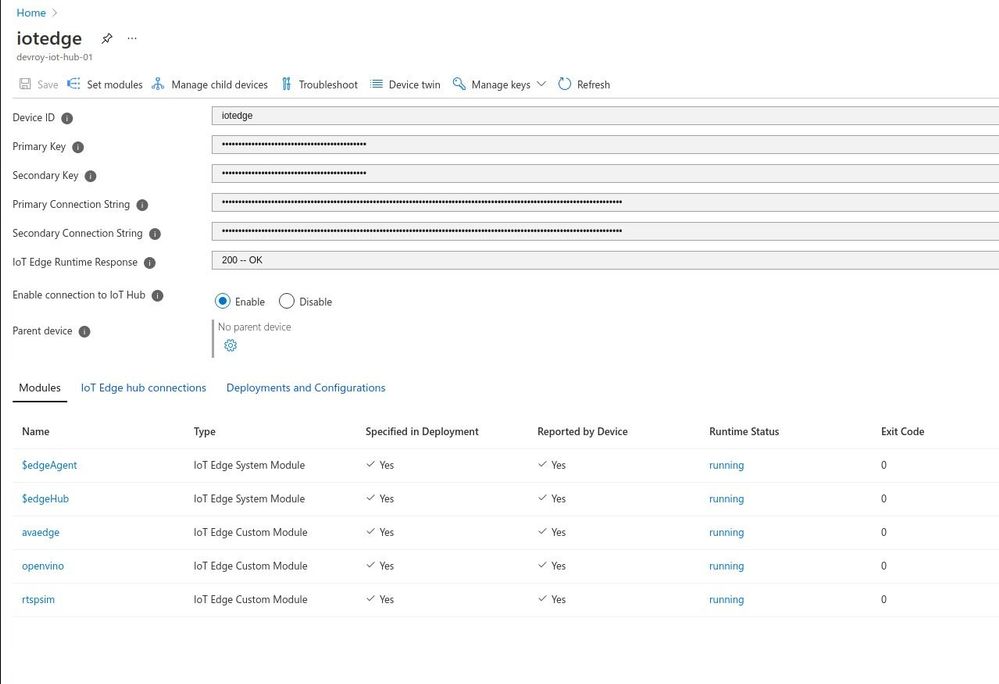

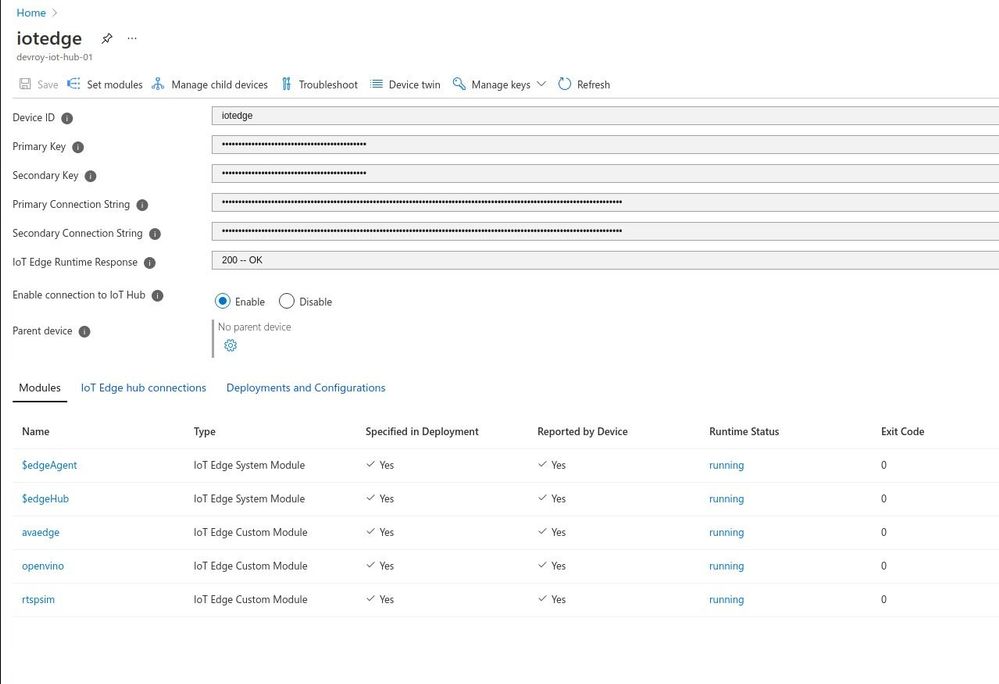

- Check Azure portal for deployed modules running

Let's go through some of the items in the list in detail.

Steps 1 and 2 are common to many use cases and can be done by following this. For step 3, make sure you are creating an ‘IoT Edge’ device and not a simple IoT device. Follow the link in step 4 to create a managed identity. For step 5, use the interface to create an AVA account. Enter a name for your Video Analyzer account. The name must be all lowercase letters or numbers with no spaces, and 3 to 24 characters in length. Fill in the proper subscription, resource group, storage account, and identity from the previous steps. You should now have a running AVA account. Use these steps to create the ‘Edge Provisioning Token’ for step 6. Remember, this token is just for AVA Edge and is not to be confused with provisioning through DPS. For step 7, Ubuntu Linux is a good choice; support for Windows is a work in progress. After you create the account, keep the following information on standby.

AVA_PROVISIONING_TOKEN="<Provisioning token>"
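
The naming rule for the Video Analyzer account in step 5 is easy to check before you open the portal. This is a minimal Python sketch of that rule only (lowercase letters or digits, 3 to 24 characters); the function name is hypothetical, and the portal may apply further checks such as name availability.

```python
import re

# Lowercase letters or digits only, 3 to 24 characters, as described in step 5.
_ACCOUNT_NAME = re.compile(r"[a-z0-9]{3,24}")


def is_valid_account_name(name):
    """Return True when the name satisfies the rule stated in the article."""
    return _ACCOUNT_NAME.fullmatch(name) is not None


for candidate in ["avasample01", "AvaSample", "my account", "av"]:
    print(candidate, is_valid_account_name(candidate))
```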

Step 8, although simple, is an important step in the process. All you need to do is run the command below.

bash -c "$(curl -sL https://aka.ms/ava-edge/prep_device)"

However, underneath this there is a lot going on in preparation for the NVR. The Azure Video Analyzer module should be configured to run on the IoT Edge device with a non-privileged local user account. The module needs certain local folders for storing application configuration data. The RTSP camera simulator module needs video files with which it can synthesize a live video feed. The prep-device script in the above command automates the tasks of creating input and configuration folders, downloading video input files, and creating user accounts with correct privileges.

Steps 9, 10, and 11 are for setting up your ONVIF camera(s). Note that you need to set a static class C IP address for each camera, and enable the HTTPS protocol along with difficult-to-guess passwords. Again, take extra caution if you are doing this with a wireless camera. I use VLC to confirm the live feed from each camera. You may think this is obvious or choose to automate it, but I have seen a lot of issues either way. I personally recommend that clients confirm the feed and frame rate from every camera manually using its URL. VLC is my player of choice, but there are many others.

Before you bring Azure into the picture, you must have all your RTSP URLs ready and tested in step 12. Here is an example RTSP URL of the main feed. Notice the port number ‘554’ and encoding ‘h264’.

rtsp://username:difficultpassword@192.168.0.35:554//h264Preview_01_main
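
Before pasting a URL like this into a pipeline, it can help to check its parts in code. The Python sketch below is a minimal example using only the standard library. It assumes the camera is addressed by IP rather than by hostname, and it uses `is_private` as a rough stand-in for "a static address on the local network"; the function name and returned keys are hypothetical.

```python
import ipaddress
from urllib.parse import urlsplit


def describe_rtsp_url(url):
    """Split an RTSP URL into the parts worth checking before deployment."""
    parts = urlsplit(url)
    if parts.scheme != "rtsp":
        raise ValueError("expected an rtsp:// URL, got scheme %r" % parts.scheme)
    # Raises ValueError if the host is a name rather than an IP address.
    host = ipaddress.ip_address(parts.hostname)
    return {
        "host": str(host),
        "port": parts.port or 554,  # 554 is the default RTSP port
        "has_credentials": bool(parts.username and parts.password),
        "is_private": host.is_private,
        "path": parts.path,
    }


info = describe_rtsp_url(
    "rtsp://username:difficultpassword@192.168.0.35:554//h264Preview_01_main"
)
print(info)
```

This only checks the shape of the URL. It does not replace opening the feed in a player, which is still the way to confirm the stream and frame rate.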

For steps 13 to 18, keep going by the book (links). For step 19, fill in your details in the following block and create the ‘env’ file.

SUBSCRIPTION_ID="<Subscription ID>"

RESOURCE_GROUP="<Resource Group>"

AVA_PROVISIONING_TOKEN="<Provisioning token>"

VIDEO_INPUT_FOLDER_ON_DEVICE="/home/localedgeuser/samples/input"

VIDEO_OUTPUT_FOLDER_ON_DEVICE="/var/media"

APPDATA_FOLDER_ON_DEVICE="/var/lib/videoAnalyzer"

CONTAINER_REGISTRY_USERNAME_myacr="<your container registry username>"

CONTAINER_REGISTRY_PASSWORD_myacr="<your container registry password>"
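
A common mistake is deploying with one of the `<...>` placeholders still in place. Here is a minimal Python sketch that parses text in the `KEY="value"` form shown above and lists the keys that still look unfilled. The parser is deliberately simple and is not the parser the IoT Edge tooling uses; the function names are hypothetical.

```python
def parse_env(text):
    """Parse KEY="value" lines into a dict, skipping blanks and comments."""
    values = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"')
    return values


def unfilled_keys(values):
    """Return keys whose value is empty or still an <angle bracket> placeholder."""
    return sorted(
        key
        for key, value in values.items()
        if not value or (value.startswith("<") and value.endswith(">"))
    )


example = '''
SUBSCRIPTION_ID="<Subscription ID>"
VIDEO_OUTPUT_FOLDER_ON_DEVICE="/var/media"
APPDATA_FOLDER_ON_DEVICE="/var/lib/videoAnalyzer"
'''
print(unfilled_keys(parse_env(example)))
```

To check a real file, read its contents with `open(path).read()` and pass the text to `parse_env`.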

For step 20, add the following module definitions to your deployment JSON. This covers AVA Edge, Intel OVMS, and the RTSP Simulator. Also follow this for more details.

"modules": {
  "avaedge": {
    "version": "1.1",
    "type": "docker",
    "status": "running",
    "restartPolicy": "always",
    "settings": {
      "image": "mcr.microsoft.com/media/video-analyzer:1",
      "createOptions": {
        "Env": [
          "LOCAL_USER_ID=1010",
          "LOCAL_GROUP_ID=1010"
        ],
        "HostConfig": {
          "Dns": [
            "1.1.1.1"
          ],
          "LogConfig": {
            "Type": "",
            "Config": {
              "max-size": "10m",
              "max-file": "10"
            }
          },
          "Binds": [
            "$VIDEO_OUTPUT_FOLDER_ON_DEVICE:/var/media/",
            "$APPDATA_FOLDER_ON_DEVICE:/var/lib/videoanalyzer"
          ]
        }
      }
    }
  },
  "openvino": {
    "version": "1.0",
    "type": "docker",
    "status": "running",
    "restartPolicy": "always",
    "settings": {
      "image": "marketplace.azurecr.io/intel_corporation/open_vino:latest",
      "createOptions": {
        "HostConfig": {
          "Dns": [
            "1.1.1.1"
          ]
        },
        "ExposedPorts": {
          "4000/tcp": {}
        },
        "Cmd": [
          "/ams_wrapper/start_ams.py",
          "--ams_port=4000",
          "--ovms_port=9000"
        ]
      }
    }
  },
  "rtspsim": {
    "version": "1.0",
    "type": "docker",
    "status": "running",
    "restartPolicy": "always",
    "settings": {
      "image": "mcr.microsoft.com/lva-utilities/rtspsim-live555:1.2",
      "createOptions": {
        "HostConfig": {
          "Dns": [
            "1.1.1.1"
          ],
          "LogConfig": {
            "Type": "",
            "Config": {
              "max-size": "10m",
              "max-file": "10"
            }
          },
          "Binds": [
            "$VIDEO_INPUT_FOLDER_ON_DEVICE:/live/mediaServer/media"
          ]
        }
      }
    }
  }
}
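
The `$VIDEO_OUTPUT_FOLDER_ON_DEVICE` style names in the `Binds` entries are placeholders that get their values from the env file when the deployment manifest is generated from the template. To illustrate what the expanded entries look like, here is a minimal Python sketch using `string.Template`. It is an illustration of the substitution only, not the mechanism the IoT Edge tooling uses, and the function name is hypothetical.

```python
import json
from string import Template


def expand_placeholders(template_text, env):
    """Replace $NAME placeholders with env values; unknown names are left as-is."""
    return Template(template_text).safe_substitute(env)


# Fragment of the avaedge module definition shown above.
fragment = json.dumps({
    "Binds": [
        "$VIDEO_OUTPUT_FOLDER_ON_DEVICE:/var/media/",
        "$APPDATA_FOLDER_ON_DEVICE:/var/lib/videoanalyzer",
    ]
})

# Values taken from the env file block earlier in the article.
env = {
    "VIDEO_OUTPUT_FOLDER_ON_DEVICE": "/var/media",
    "APPDATA_FOLDER_ON_DEVICE": "/var/lib/videoAnalyzer",
}

binds = json.loads(expand_placeholders(fragment, env))["Binds"]
for bind in binds:
    host_path, container_path = bind.split(":", 1)
    print(host_path, "->", container_path)
```

Each expanded entry maps a folder on the edge device (left) to a path inside the container (right), which is why the folders created in step 8 must exist before the modules start.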

Steps 21 to 23 are again the usual steps for all IoT solutions. Once you deploy the template, you should see the deployed modules running in the Azure portal.

There, we have created the foundation for our Azure IoT Edge device to perform as a powerful AI NVR. Here ‘avaedge’ is the Azure Video Analyzer service, ‘openvino’ provides the model server extension, and ‘rtspsim’ creates the simulated ‘live’ input video feed. In the next article we will see how to use this setup to detect objects such as faces or cars.

Future Work

I hope you enjoyed this article on setting up an AI-enabled NVR for video analytics applications. We love to share our experiences and get feedback from the community on how we are doing. Look out for upcoming articles and have a great time with Microsoft Azure.

