by Contributed | Aug 22, 2024 | Technology

This article is contributed. See the original author and article here.

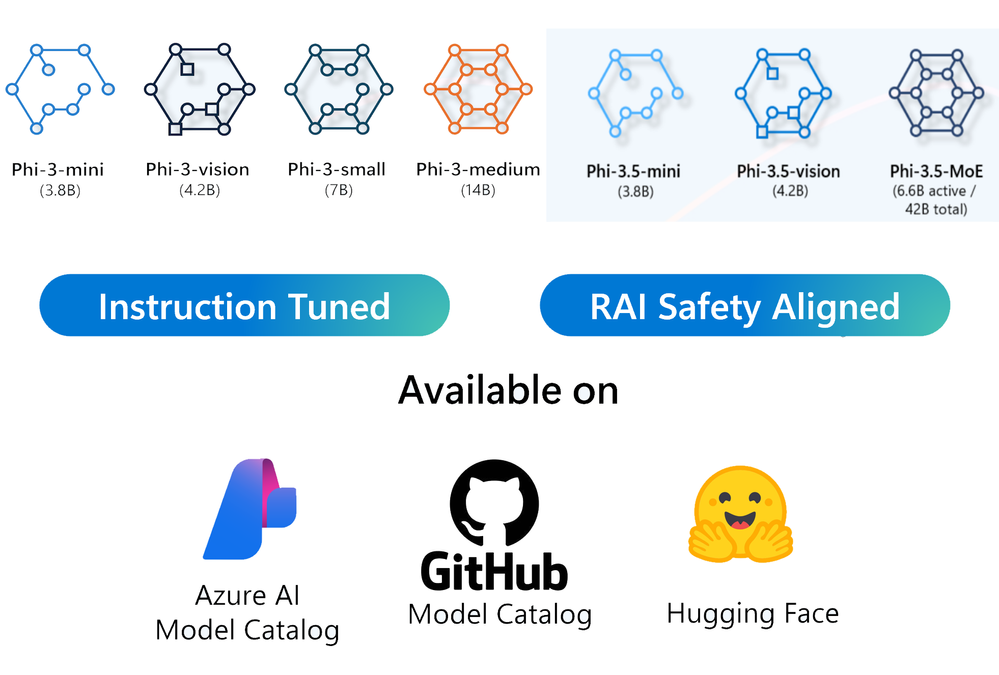

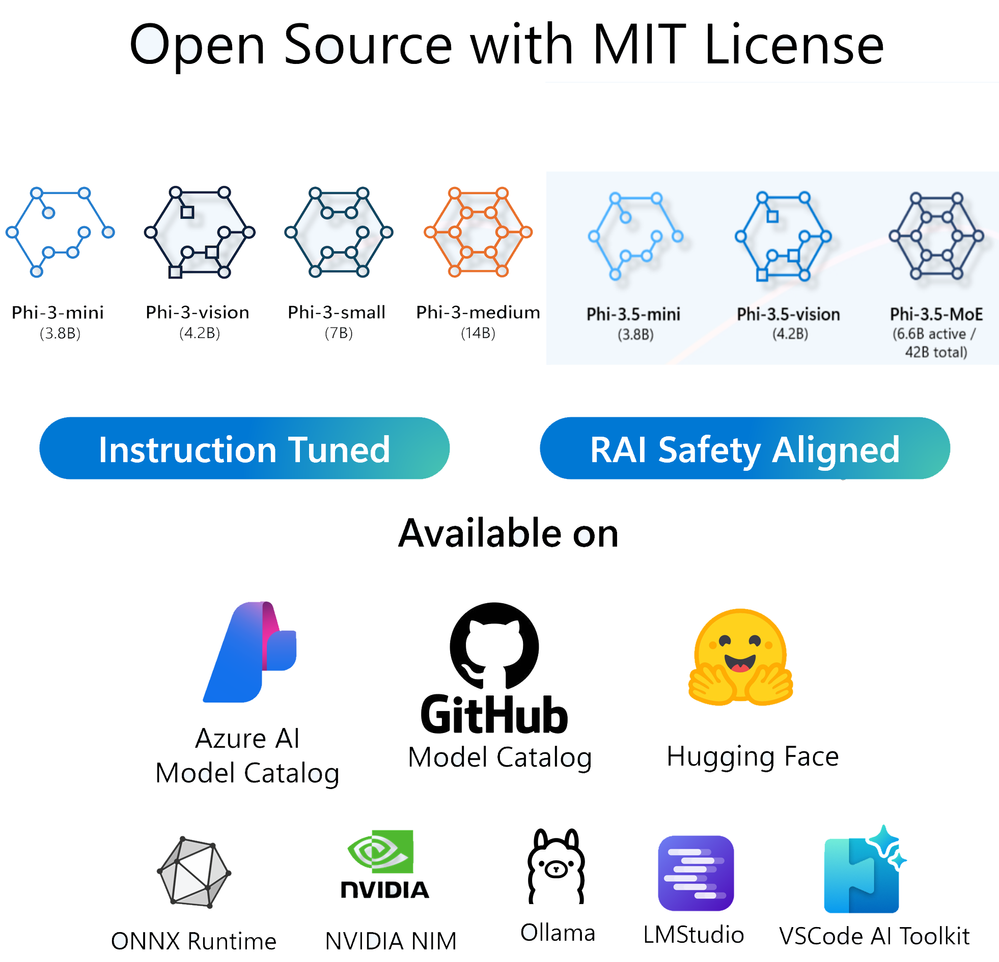

Since Phi-3 was released at Microsoft Build 2024, it has received a lot of attention, especially for running Phi-3-mini and Phi-3-vision on edge devices. In the June update, we improved benchmark results and System role support by training on adjusted high-quality data. In the August update, based on community and customer feedback, we released Phi-3.5-mini-128k-instruct with multi-language support, Phi-3.5-vision-128k with multi-frame image input, and the new Phi-3.5 MoE for AI agents. Let's take a look.

Multi-language support

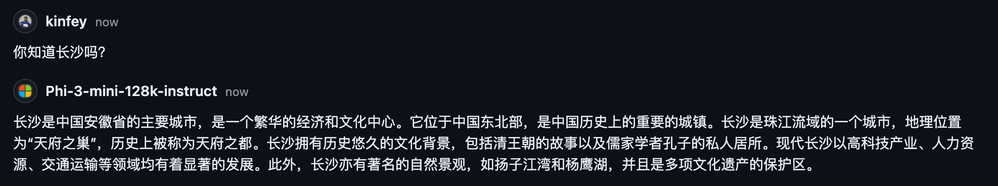

In previous versions, Phi-3-mini had good support for English but weak support for other languages. When we asked questions in Chinese, it often gave wrong answers, such as the following:

This is clearly a wrong answer.

In the new version, with improved Chinese corpus support, the model understands the question better and answers more accurately.

You can also try the improvements in other languages. Even without fine-tuning or RAG, it is a capable model.

Code Sample: https://github.com/microsoft/Phi-3CookBook/blob/main/code/09.UpdateSamples/Aug/phi3-instruct-demo.ipynb

Better vision

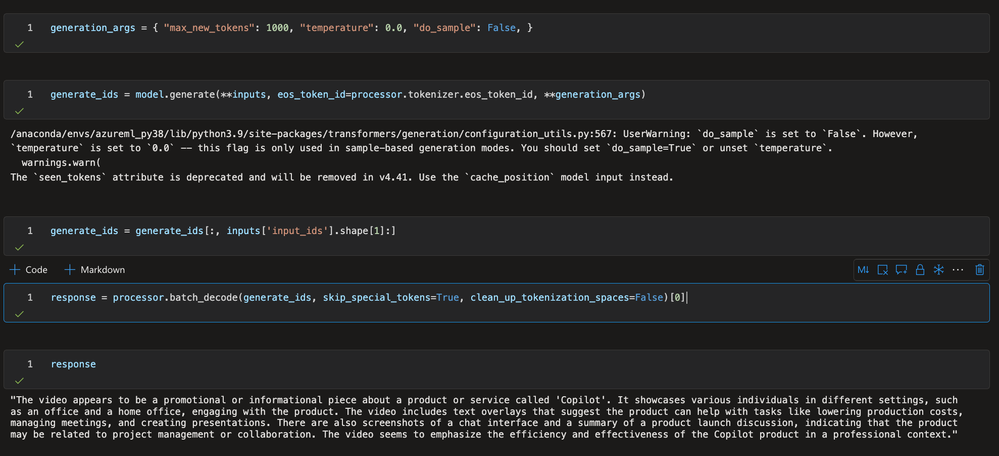

Phi-3.5-Vision gives Phi-3 visual capabilities (OCR, object recognition, image analysis, and so on) in addition to text understanding and dialogue. In real applications, however, we often need to analyze multiple images together to find the relationships between them, as with videos, slide decks (PPTs), and books. The new Phi-3.5-Vision supports multi-frame and multi-image input, so we can better summarize and analyze videos, slide decks, and books.

Take the following video as an example.

We can use OpenCV to extract key frames from the video. Here we extract 21 key frames and store them in a list.
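The post does not say how the 21 key frames are chosen. One common approach, sketched here purely as an assumption, keeps a frame whenever it differs enough from the last frame kept. The frames are assumed to be already decoded (for example with OpenCV's `cv2.VideoCapture`) and flattened to grayscale pixel lists; `select_key_frames`, `threshold`, and `max_frames` are hypothetical names, not part of the Phi-3 Cookbook sample.

```python
from typing import List, Sequence


def mean_abs_diff(a: Sequence[float], b: Sequence[float]) -> float:
    """Average absolute per-pixel difference between two equally sized frames."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def select_key_frames(frames: List[Sequence[float]], threshold: float, max_frames: int = 21) -> List[int]:
    """Return indices of frames that differ from the previously kept frame by more than threshold.

    The first frame is always kept; selection stops once max_frames indices are collected.
    """
    if not frames:
        return []
    keep = [0]
    for i in range(1, len(frames)):
        if len(keep) >= max_frames:
            break
        if mean_abs_diff(frames[i], frames[keep[-1]]) > threshold:
            keep.append(i)
    return keep


# Usage with tiny 4-pixel "frames": a near-duplicate frame is skipped, real scene changes are kept.
frames = [[0, 0, 0, 0], [1, 0, 0, 0], [100, 100, 100, 100], [101, 100, 100, 100], [0, 0, 0, 0]]
print(select_key_frames(frames, threshold=10))  # [0, 2, 4]
```

Comparing against the last kept frame, rather than the immediately preceding one, avoids missing slow scene changes that drift a little per frame.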

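```python
from PIL import Image

# Load the 21 extracted key frames and add one numbered image tag per frame,
# as expected by the Phi-3.5-Vision chat template
images = []
placeholder = ""
for i in range(1, 22):
    images.append(Image.open(f"../output/keyframe_{i}.jpg"))
    placeholder += f"<|image_{i}|>\n"
```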

Combined with Phi-3.5-Vision’s chat template, we can perform a comprehensive analysis of multiple frames.
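To show what the chat template produces for several frames, the hypothetical helper below assembles one user turn in the prompt format shown on the Phi-3.5-vision-instruct model card: `<|user|>`, one numbered `<|image_N|>` tag per image, the question, `<|end|>`, then `<|assistant|>`. In the Hugging Face examples this string is typically produced by the tokenizer's `apply_chat_template`; `build_multi_image_prompt` is only a sketch of the resulting layout, not part of any library.

```python
def build_multi_image_prompt(num_images: int, question: str) -> str:
    """Build a Phi-3.5-vision style user turn with one <|image_N|> tag per image (1-based)."""
    if num_images < 1:
        raise ValueError("at least one image is required")
    placeholder = "".join(f"<|image_{i}|>\n" for i in range(1, num_images + 1))
    return f"<|user|>\n{placeholder}{question}<|end|>\n<|assistant|>\n"


# Usage: two frames shown for brevity; the video example would pass 21.
print(build_multi_image_prompt(2, "Summarize the video."))
```

The image tags are numbered in the same order as the images list passed to the processor, so the model can refer to frames by position.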

This lets us handle dynamic, video-based vision tasks more efficiently, especially in edge scenarios.

Code Sample: https://github.com/microsoft/Phi-3CookBook/blob/main/code/09.UpdateSamples/Aug/phi3-vision-demo.ipynb

Intelligent MoEs

Besides computing power, model size is one of the key factors in model performance. Under a limited compute budget, training a larger model for fewer steps is often better than training a smaller model for more steps.

Mixture of Experts Models (MoEs) have the following characteristics:

- Faster pre-training than dense models

- Faster inference than dense models with the same number of parameters

- High GPU memory requirements, because all experts must be loaded into memory

- Fine-tuning poses many challenges, but recent research shows that instruction tuning of MoE models has great potential.
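To make the first three points concrete, here is a toy top-k mixture-of-experts layer in plain Python. A router scores every expert, only the k highest-scoring experts actually run, and their outputs are mixed with renormalized softmax weights. That is why inference touches fewer parameters than a dense model of the same total size, while every expert still has to be held in memory. The expert count, `k=2`, and the linear gate are illustrative assumptions; this is not Phi-3.5 MoE's implementation.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, gate_weights: List[Vector], experts: List[Expert], k: int = 2) -> Vector:
    """Route x to the top-k experts and return the weighted sum of their outputs."""
    # Router: one logit per expert (dot product of x with that expert's gate vector).
    logits = [sum(w * xi for w, xi in zip(gate, x)) for gate in gate_weights]
    probs = softmax(logits)
    # Only the k most probable experts are executed; the others are skipped entirely.
    top = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    out = [0.0] * len(x)
    for i in top:
        y = experts[i](x)
        weight = probs[i] / norm
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out


# Usage: four toy experts that scale the input; `calls` records which experts actually ran.
calls: List[int] = []


def make_expert(idx: int, scale: float) -> Expert:
    def expert(v: Vector) -> Vector:
        calls.append(idx)
        return [scale * vi for vi in v]
    return expert


experts = [make_expert(i, s) for i, s in enumerate([10.0, 20.0, 30.0, 40.0])]
gates = [[2.0, 0.0], [1.0, 0.0], [0.0, 0.0], [-1.0, 0.0]]
result = moe_forward([1.0, 0.0], gates, experts, k=2)
print(result, calls)  # only experts 0 and 1 run
```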

There are now many AI agent applications, and we can use MoEs to power them: in multi-task scenarios, responses are faster.

Consider a simple scenario: we want AI to write a tweet based on some content, translate it into Chinese, and publish it to social networks. Phi-3.5 MoE can handle this. We use the prompt to define and arrange the tasks: writing the blog content, translating it, and producing the final answer.

```python
sys_msg = """
You are a helpful AI assistant, you are an agent capable of using a variety of tools to answer a question. Here are a few of the tools available to you:

- Blog: This tool helps you describe a certain knowledge point and content, and finally write it into Twitter or Facebook style content
- Translate: This is a tool that helps you translate into any language, using plain language as required
- Final Answer: the final answer tool must be used to respond to the user. You must use this when you have decided on an answer.

To use these tools you must always respond in JSON format containing `"tool_name"` and `"input"` key-value pairs. For example, to answer the question, "Build Multi Agents with MOE models" you must use the Blog tool like so:

{
  "tool_name": "Blog",
  "input": "Build Multi Agents with MOE models"
}

Or to translate the question "can you introduce yourself in Chinese" you must respond:

{
  "tool_name": "Translate",
  "input": "can you introduce yourself in Chinese"
}

Remember just output the final result, output in JSON format containing `"agentid"`, `"tool_name"`, `"input"` and `"output"` key-value pairs:

[
  {
    "agentid": "step1",
    "tool_name": "Blog",
    "input": "Build Multi Agents with MOE models",
    "output": "..."
  },
  {
    "agentid": "step2",
    "tool_name": "Translate",
    "input": "can you introduce yourself in Chinese",
    "output": "..."
  },
  {
    "agentid": "final",
    "tool_name": "Result",
    "output": "..."
  }
]

The user's request is as follows.
"""
```

By telling the model which tools it has and how the tasks are arranged, we let Phi-3.5 MoE assign each part of the request to the right tool and complete the related work.

For example, given the user request "Write something about Generative AI with MOEs, translate it to Chinese", the result is:

```json
[
  {
    "agentid": "step1",
    "tool_name": "Blog",
    "input": "Generative AI with MOE",
    "output": "Generative AI with MOE (Mixture of Experts) is a powerful approach that combines the strengths of generative models and the flexibility of MOE architecture. This hybrid model can generate high-quality, diverse, and contextually relevant content, making it suitable for various applications such as content creation, data augmentation, and more."
  },
  {
    "agentid": "step2",
    "tool_name": "Translate",
    "input": "Generative AI with MOE is a powerful approach that combines the strengths of generative models and the flexibility of MOE architecture. This hybrid model can generate high-quality, diverse, and contextually relevant content, making it suitable for various applications such as content creation, data augmentation, and more.",
    "output": "基于生成AI的MOE(Mixture of Experts)是一种强大的方法,它结合了生成模型的优势和MOE架构的灵活性。这种混合模型可以生成高质量、多样化且上下文相关的内容,使其适用于各种应用,如内容创建、数据增强等。"
  },
  {
    "agentid": "final",
    "tool_name": "Result",
    "output": "基于生成AI的MOE(Mixture of Experts)是一种强大的方法,它结合了生成模型的优势和MOE架构的灵活性。这种混合模型可以生成高质量、多样化且上下文相关的内容,使其适用于各种应用,如内容创建、数据增强等。"
  }
]
```
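Because the prompt asks the model to reply with a JSON array of steps, downstream code can parse the reply and pick out the step tagged `final` before publishing it. Here is a minimal standard-library sketch; `extract_final_output` is a hypothetical helper. It does not assume the reply is well-formed, since a small model will not always produce valid JSON, so the error paths matter as much as the happy path.

```python
import json
from typing import Any, Dict, List

# Keys every step must carry; "input" is optional because the final step omits it.
REQUIRED_KEYS = {"agentid", "tool_name", "output"}


def extract_final_output(reply: str) -> str:
    """Parse the model's JSON list of steps and return the output of the step tagged 'final'."""
    try:
        steps: Any = json.loads(reply)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model reply is not valid JSON: {exc}") from exc
    if not isinstance(steps, list):
        raise ValueError("expected a JSON array of steps")
    for step in steps:
        if not isinstance(step, dict):
            raise ValueError("every step must be a JSON object")
        missing = REQUIRED_KEYS - set(step)
        if missing:
            raise ValueError(f"step {step.get('agentid', '?')} is missing {sorted(missing)}")
    finals: List[Dict[str, Any]] = [s for s in steps if s["agentid"] == "final"]
    if len(finals) != 1:
        raise ValueError("expected exactly one step with agentid 'final'")
    return finals[0]["output"]


# Usage with a shortened reply of the same shape as the result above.
reply = """[
  {"agentid": "step1", "tool_name": "Blog", "input": "Generative AI with MOE", "output": "draft post"},
  {"agentid": "final", "tool_name": "Result", "output": "translated post"}
]"""
print(extract_final_output(reply))  # translated post
```

Rejecting malformed replies with a `ValueError`, instead of publishing whatever came back, gives the caller a clear point to retry the request.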

Where conditions permit, the Phi-3.5 MoE model can also be integrated more smoothly into frameworks such as AutoGen, Semantic Kernel, and LangChain.

Code Sample: https://github.com/microsoft/Phi-3CookBook/blob/main/code/09.UpdateSamples/Aug/phi3_moe_demo.ipynb

SLMs do not replace LLMs; they extend GenAI to a broader range of scenarios. This Phi-3 update brings better text, chat, and vision support to more edge devices. In modern AI agent scenarios, we want tasks to execute more efficiently, and beyond computing power, MoEs are a key way to achieve that. Phi-3 is still evolving, and we hope you will keep following it and share your feedback.

by Contributed | Aug 21, 2024 | Technology

This article is contributed. See the original author and article here.

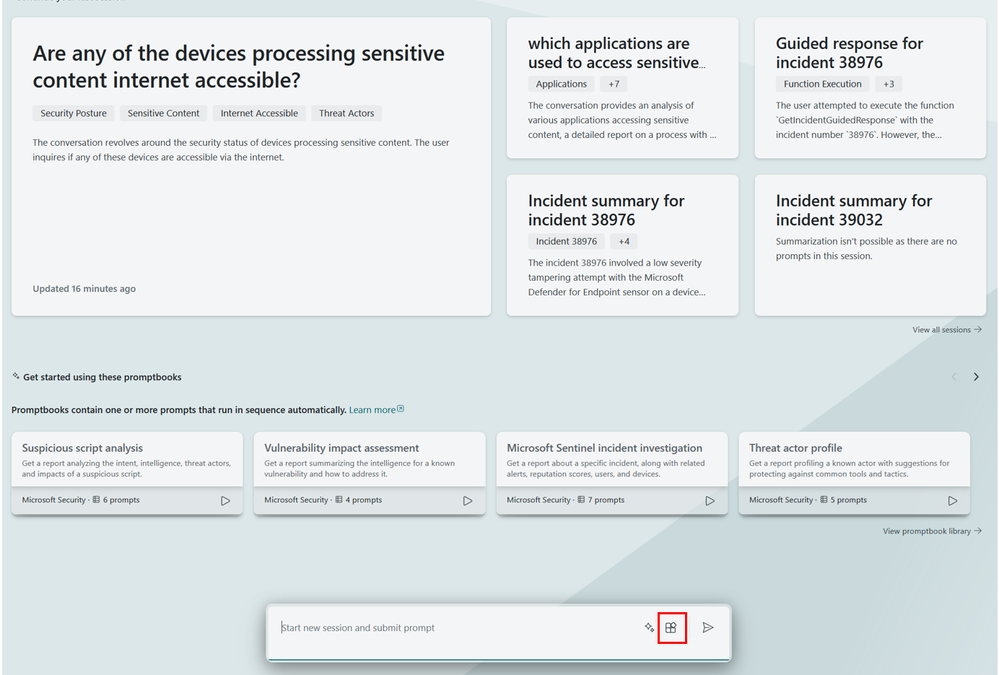

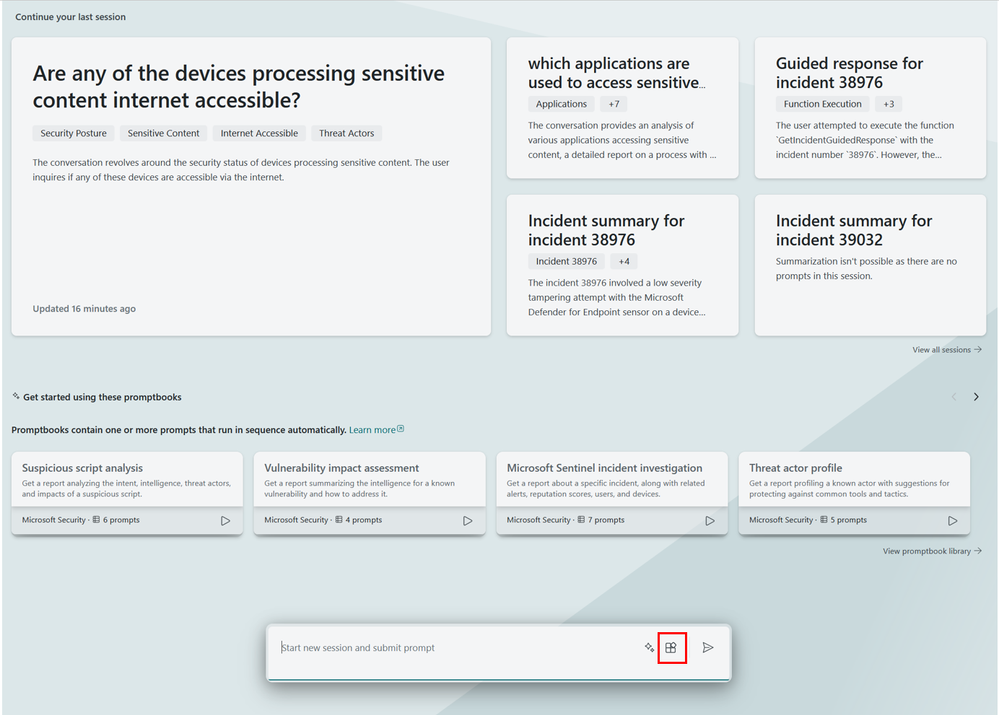

This is a step-by-step guided walkthrough of how to use the custom Copilot for Security pack for Microsoft Data Security, and how it can help your organization understand cyber security risks in a context that allows it to achieve more. The pack focuses on information and organizational context, so that the real impact and value of cyber investments and incidents are reflected. We are also working to add this to our native toolset and will post an update once it is ready.

Prerequisites

- License requirements for Microsoft Purview Information Protection depend on the scenarios and features you use. To understand your licensing requirements and options for Microsoft Purview Information Protection, see the Information Protection sections from Microsoft 365 guidance for security & compliance and the related PDF download for feature-level licensing requirements. You also need to be licensed for Microsoft Copilot for Security; more information here.

- Consider setting up Azure AI Search to ingest policy documents, so that they can be part of the process.

Step-by-step guided walkthrough

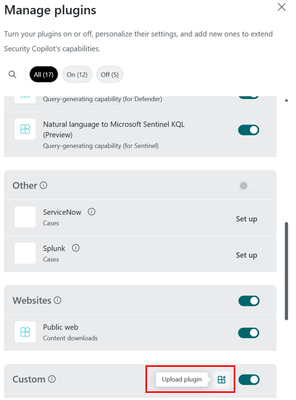

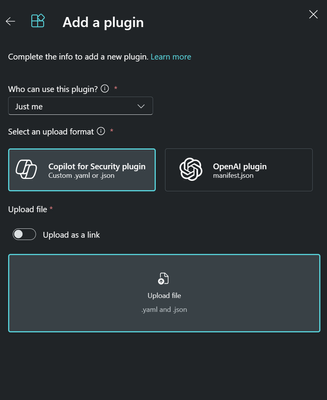

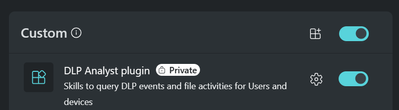

In this guide we will provide high-level steps to get started using the new tooling. We will start by adding the custom plugin.

- Go to securitycopilot.microsoft.com.

- Download the DataSecurityAnalyst.yml file from here.

- Select the plugins icon in the lower-left corner.

- Under Custom upload, select Upload plugin.

- Select the Copilot for Security plugin and upload the DataSecurityAnalyst.yml file.

- Select Add.

- Under Custom, you will now see the plugin.

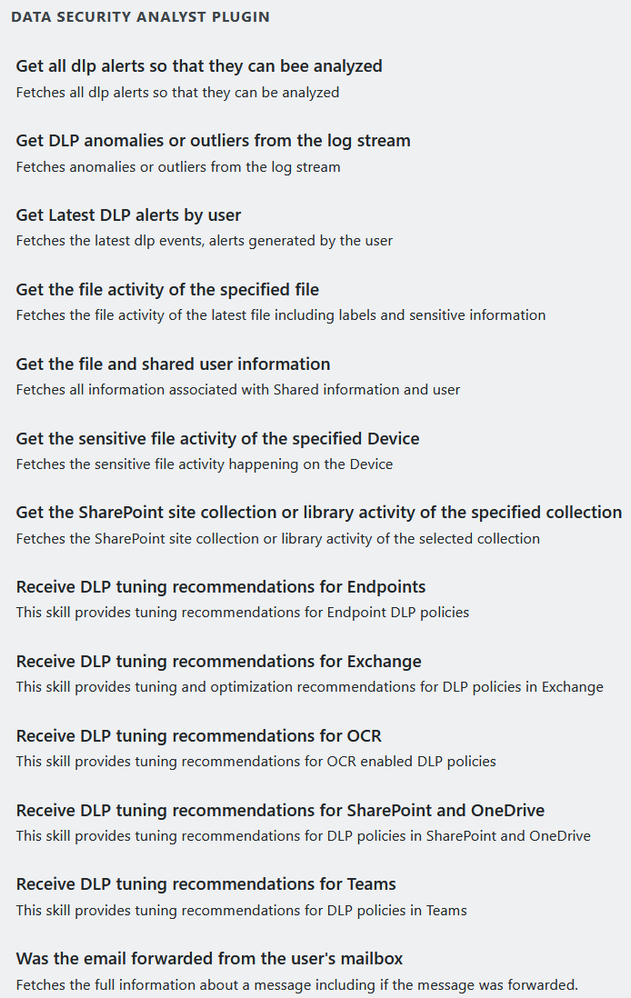

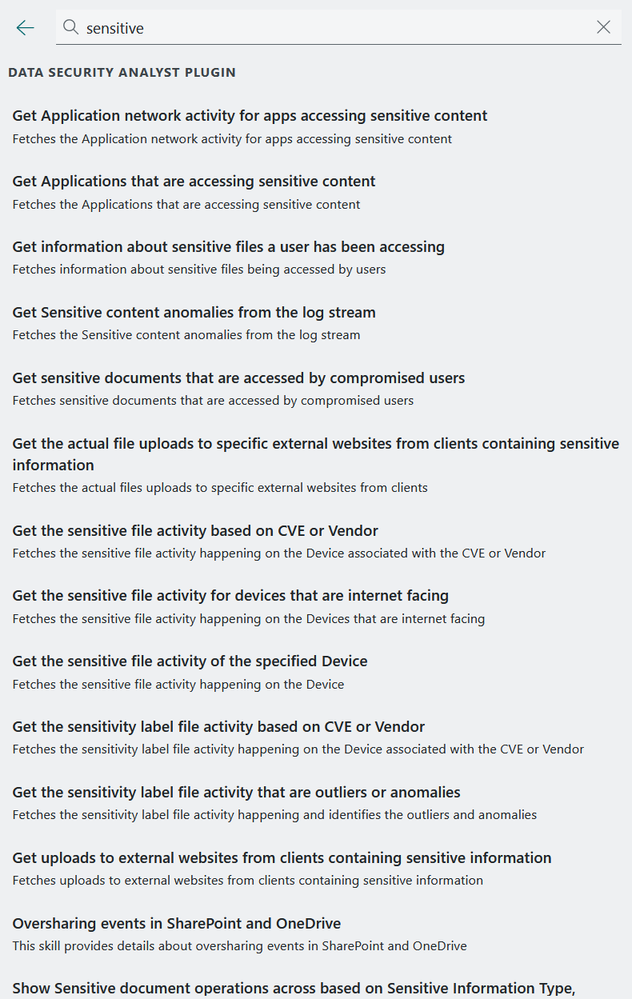

The custom package contains the following prompts:

Type /DLP to find the prompts under DLP.

Type sensitive to find the prompts under Sensitive.

Let's get started using these prompts together with the Copilot for Security capabilities.

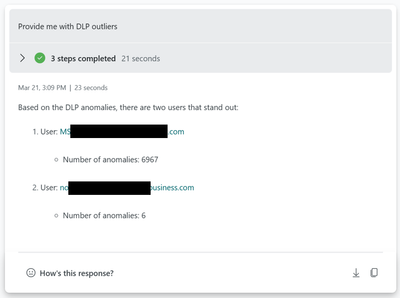

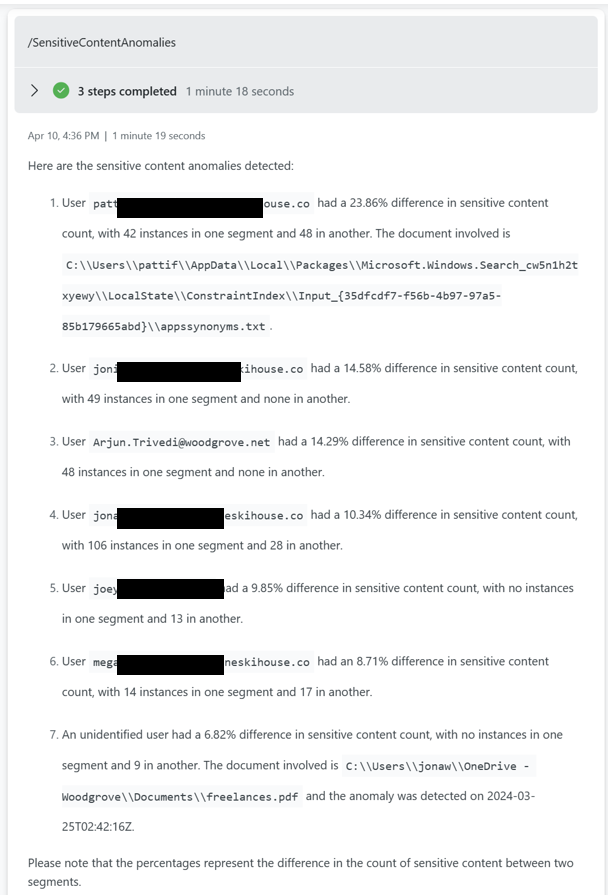

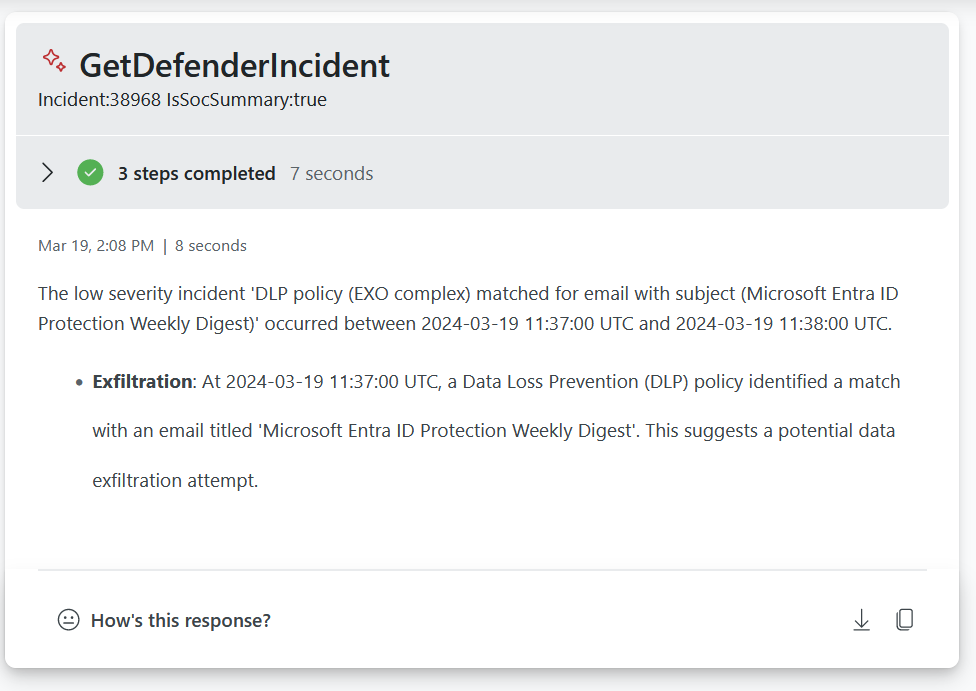

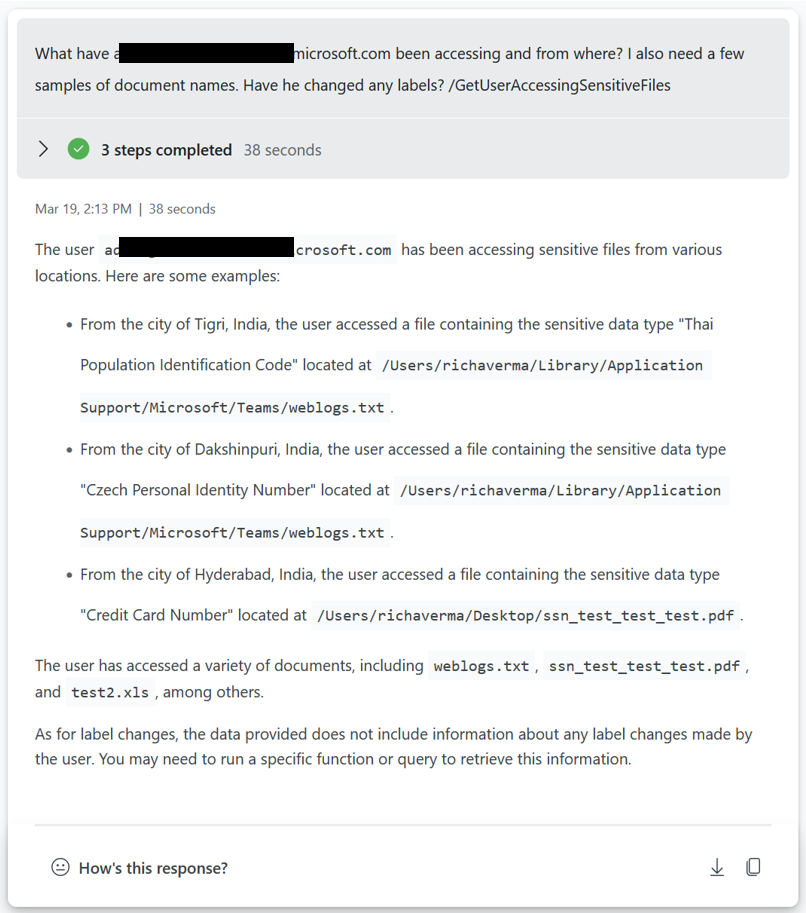

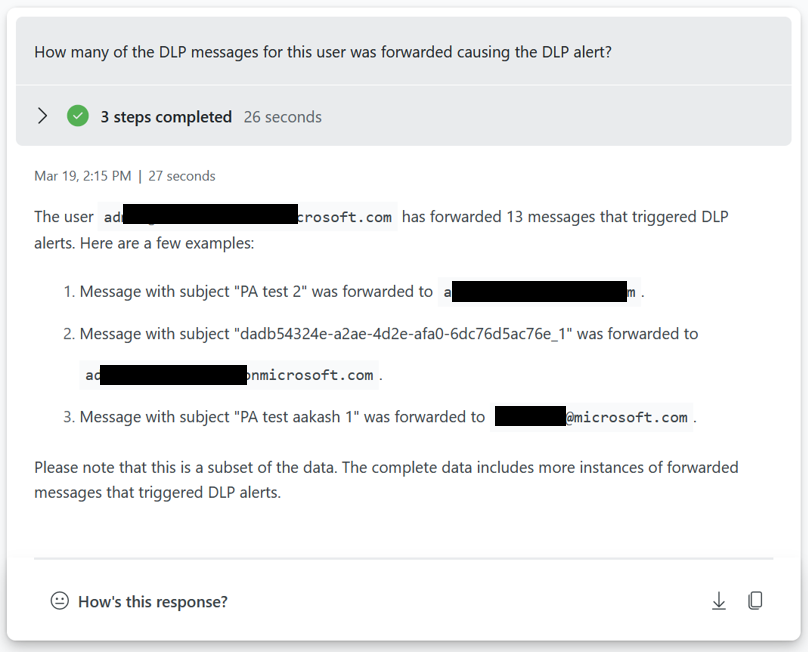

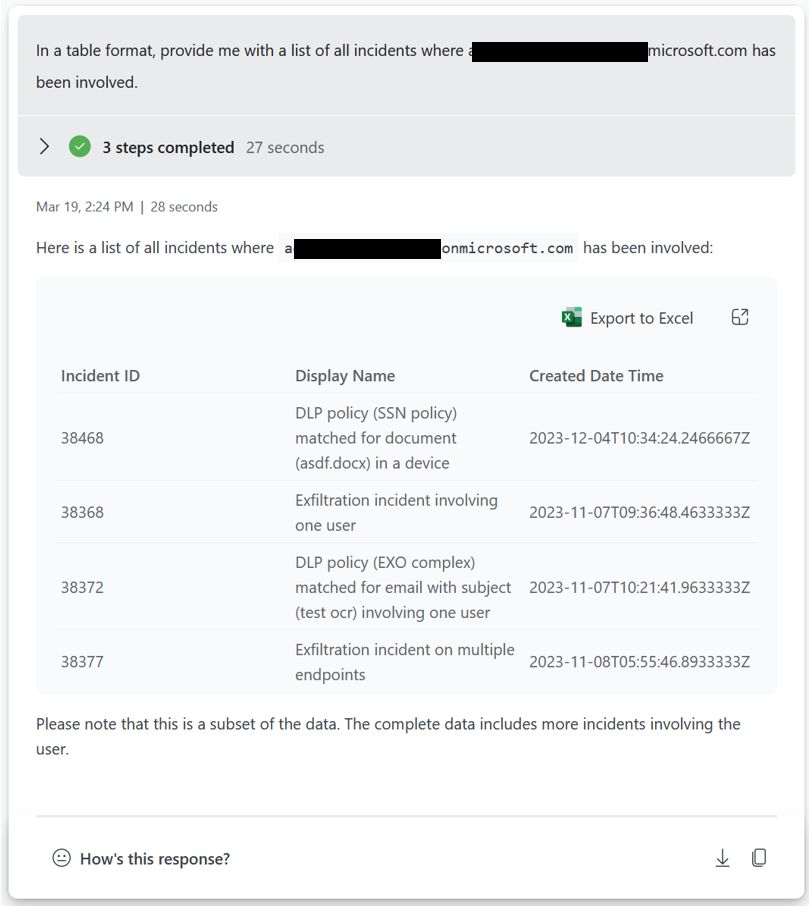

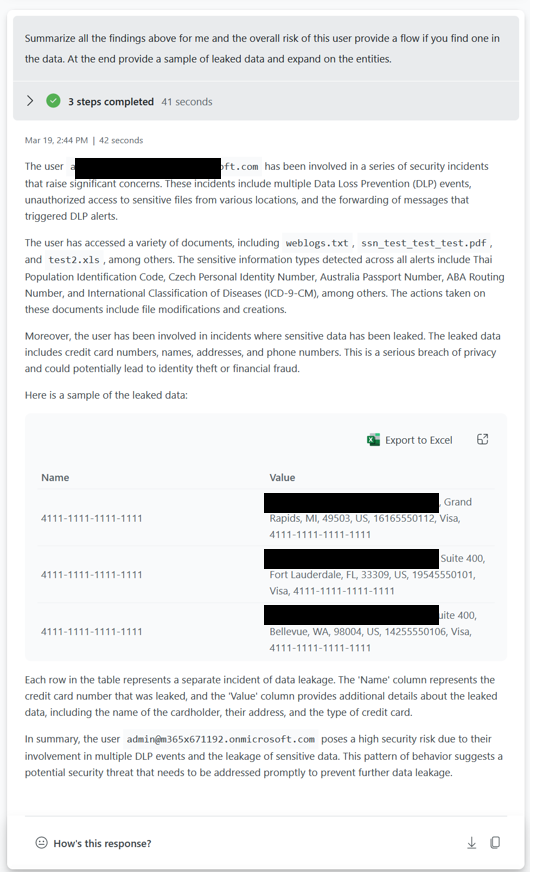

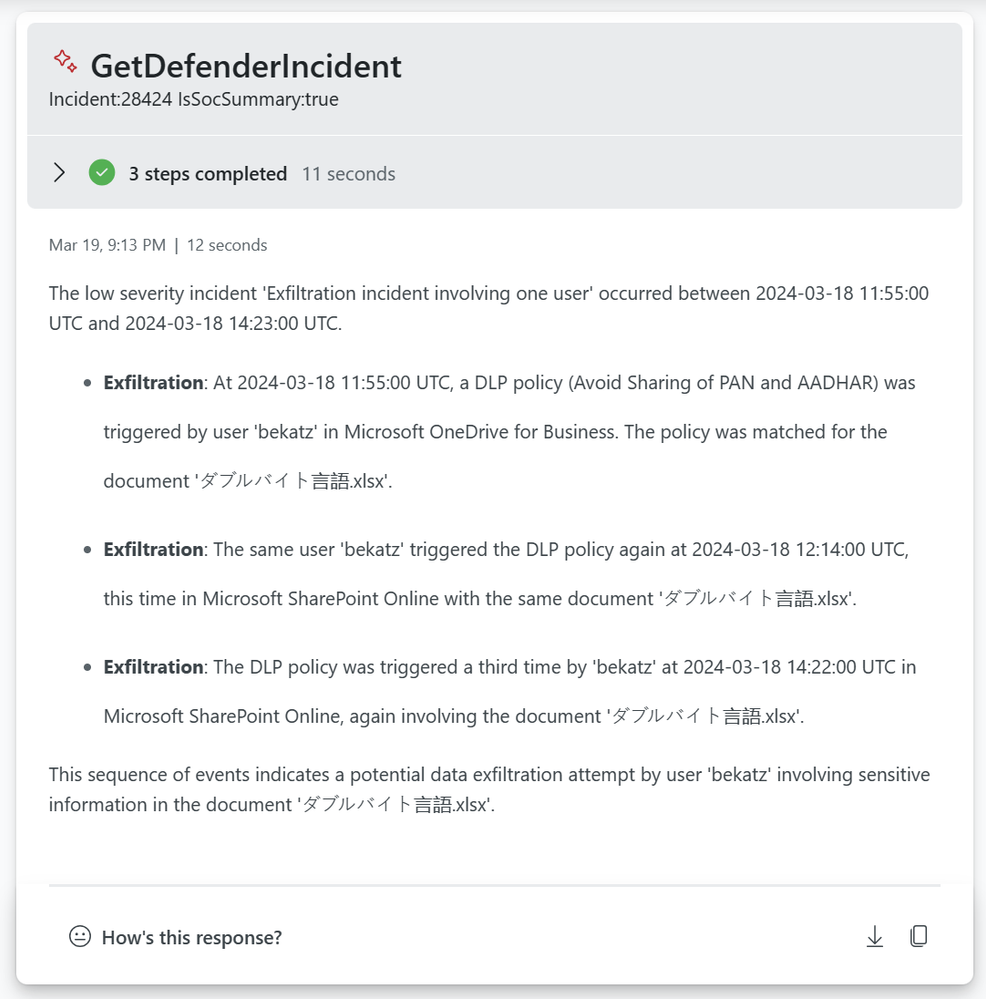

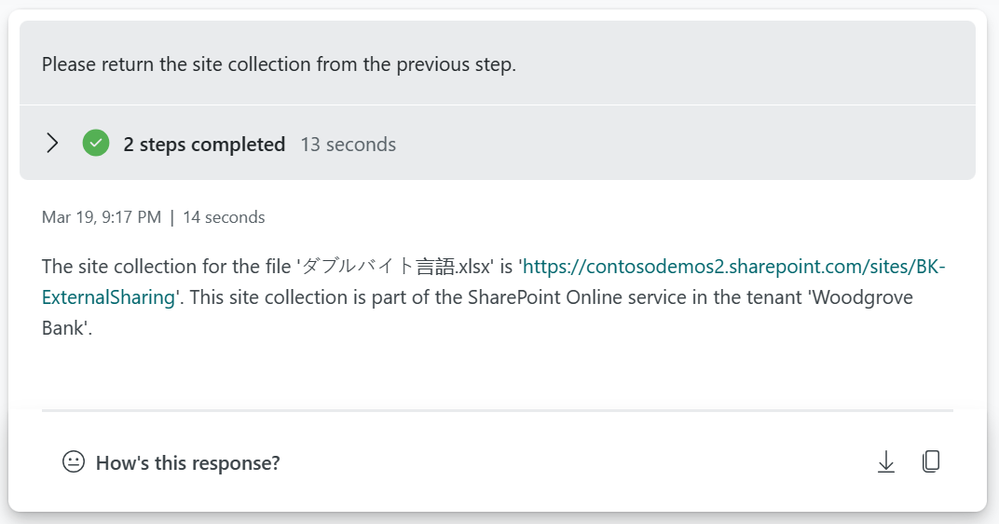

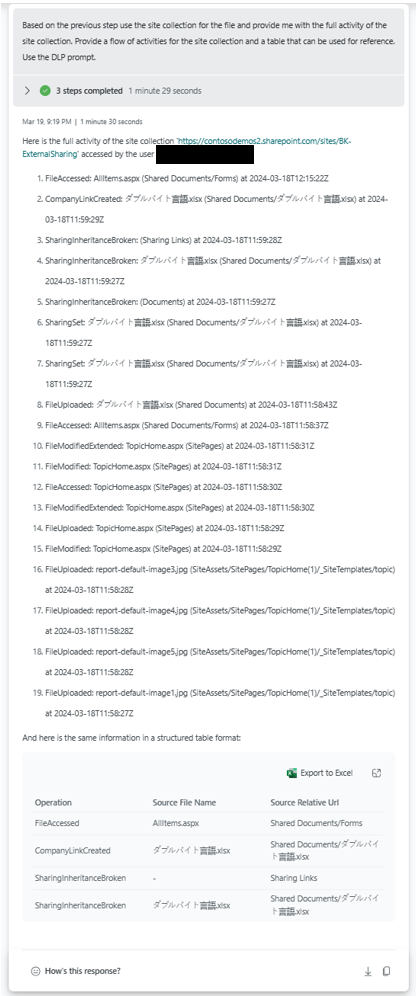

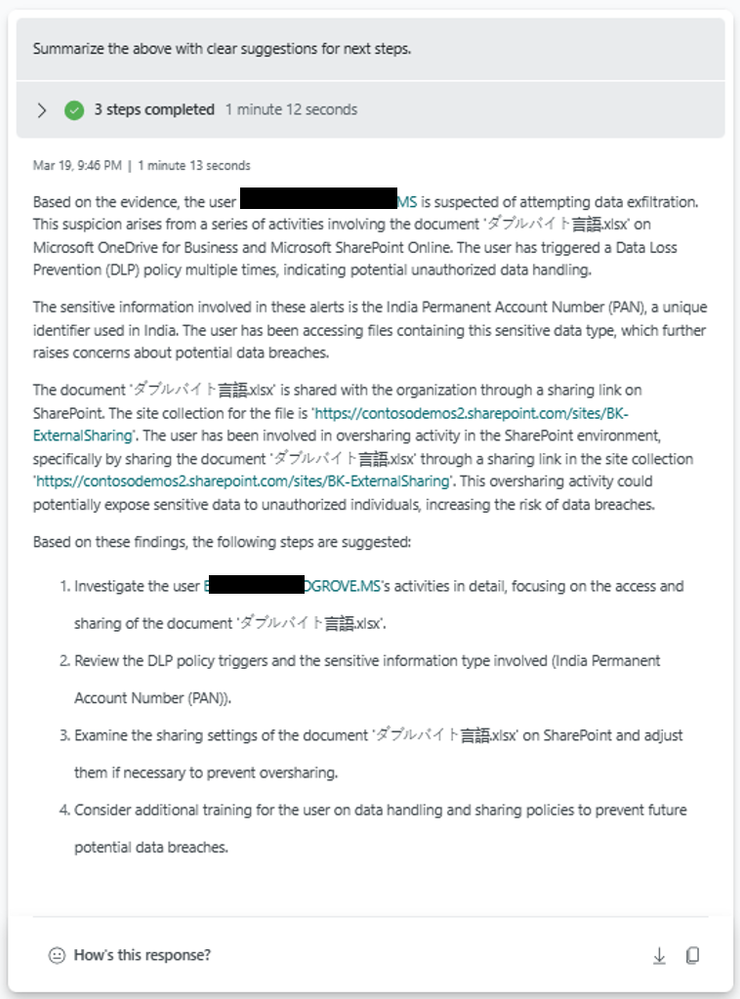

Anomaly detection sample

The DLP anomaly prompt checks data from the past 30 days, inspecting it at 30-minute intervals for possible anomalies using a time-series decomposition model.

The sensitive content anomaly prompt uses a slightly different model because of the amount of data. It is based on the diffpatterns function, which compares weeks 3 and 4 with weeks 1 and 2.
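The plugin runs these detections as KQL queries; KQL exposes time-series decomposition through functions such as `series_decompose_anomalies`. To illustrate the idea behind the decomposition approach, here is a simplified, hypothetical Python analogue, not the plugin's query: it estimates a seasonal baseline for each position in the period with a median, then flags residuals that fall outside Tukey's fences. It omits the trend component, and `decompose_anomalies` is an illustrative name.

```python
from statistics import median, quantiles
from typing import List, Sequence


def decompose_anomalies(series: Sequence[float], period: int, k: float = 1.5) -> List[int]:
    """Flag indices whose residual (value minus seasonal baseline) falls outside Tukey's fences.

    The seasonal baseline for each position in the period is the median of all values at that
    position, so a single spike does not inflate its own baseline.
    """
    if period < 1 or len(series) < 2 * period:
        raise ValueError("need at least two full periods of data")
    baseline = [median(series[p::period]) for p in range(period)]
    residuals = [v - baseline[i % period] for i, v in enumerate(series)]
    q1, _, q3 = quantiles(residuals, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [i for i, r in enumerate(residuals) if r < low or r > high]


# Usage: a toy series with a repeating 4-bin pattern and one injected spike.
# (A daily cycle of 30-minute bins would use period=48.)
counts = [10, 20, 30, 20] * 7
counts[13] = 200
print(decompose_anomalies(counts, period=4))  # [13]
```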

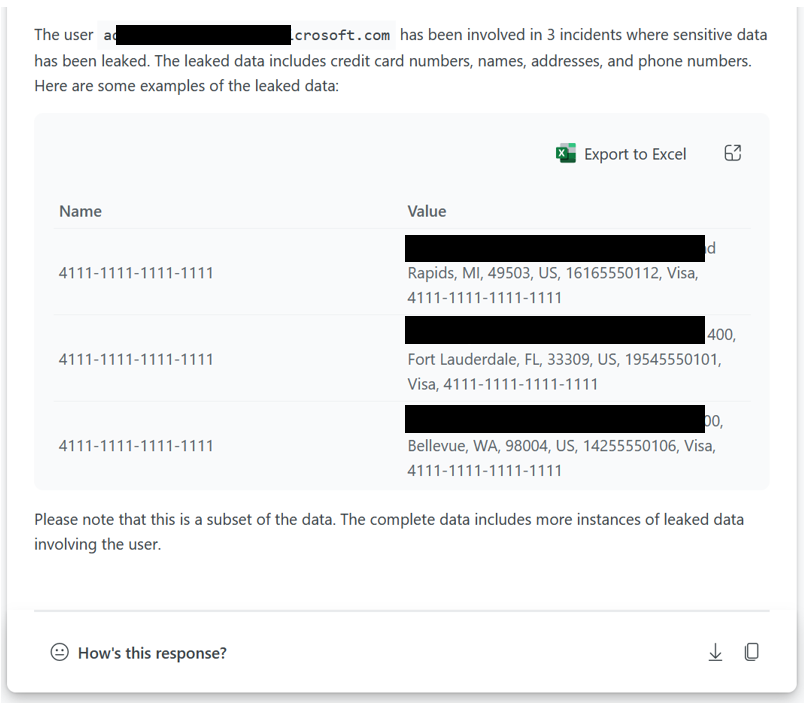

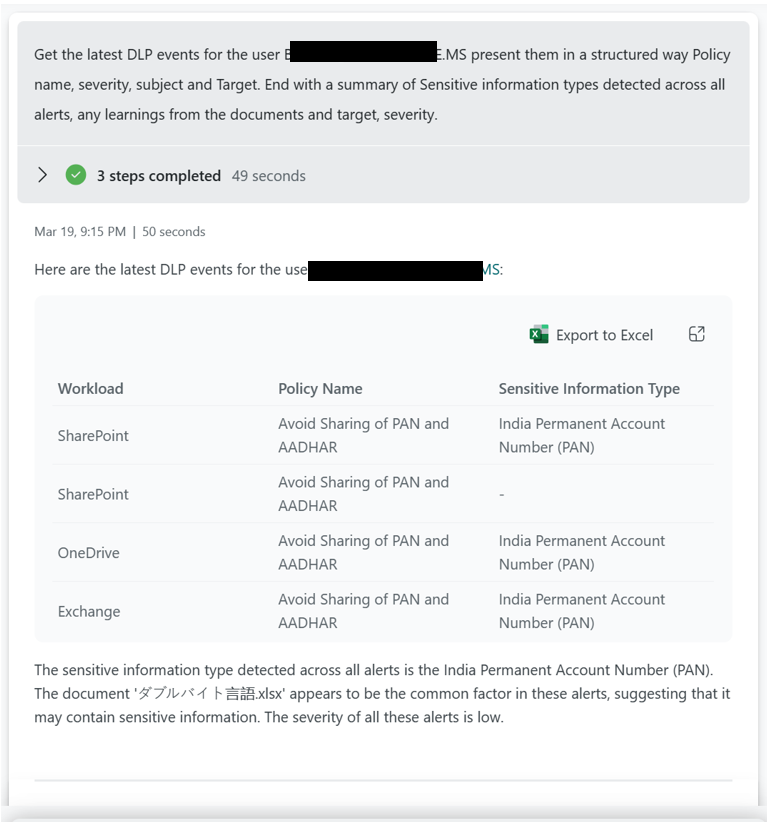

Access to sensitive information by compromised accounts

This example checks the alerts reported against users, together with the sensitive information they have accessed.

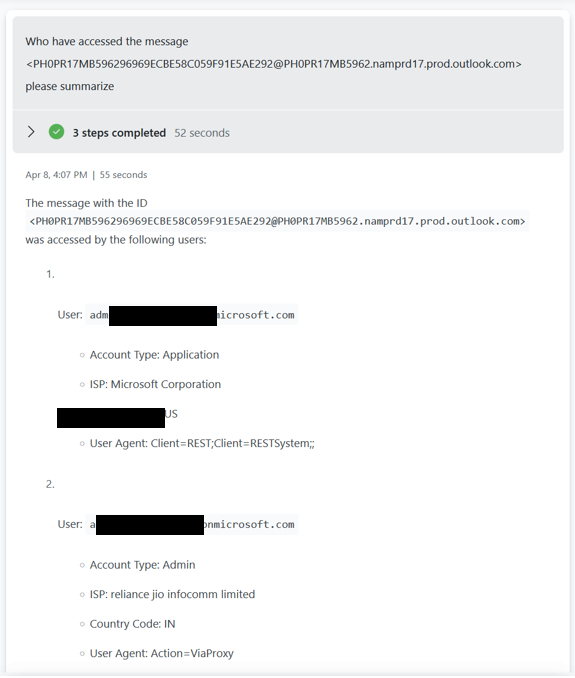

Who has accessed a Sensitive e-mail and from where?

Organizations can enter a message subject or message ID to identify who has opened a message. Note that this only works for internal recipients.

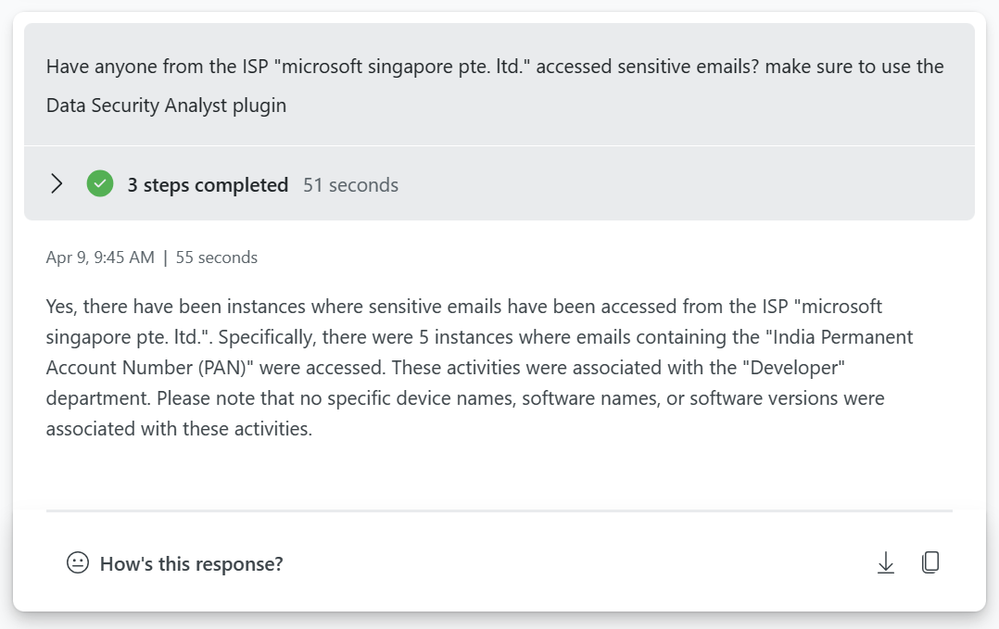

You can also ask the plugin to list any emails classified as Sensitive that were accessed from a specific network or affected by a specific CVE.

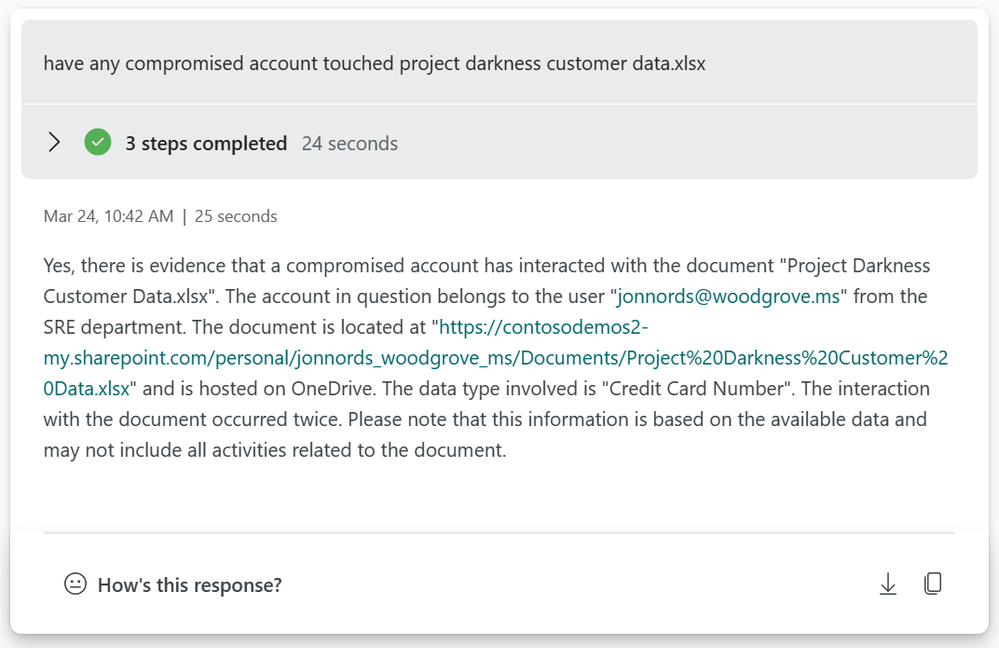

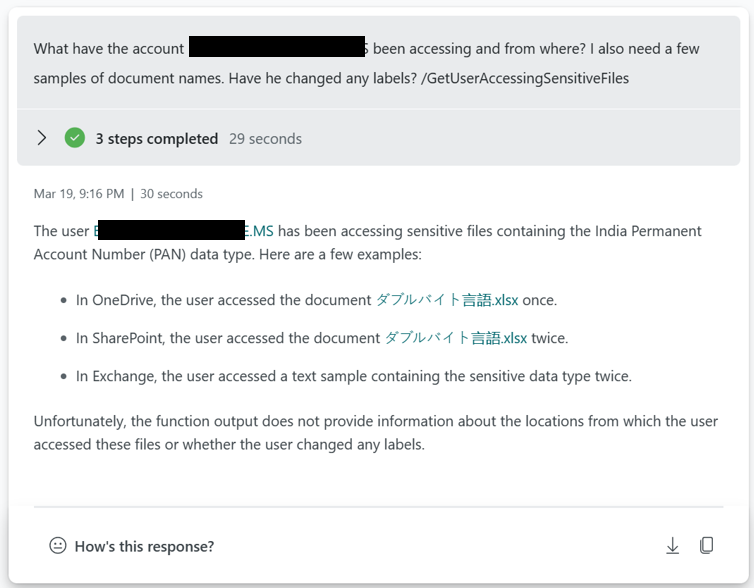

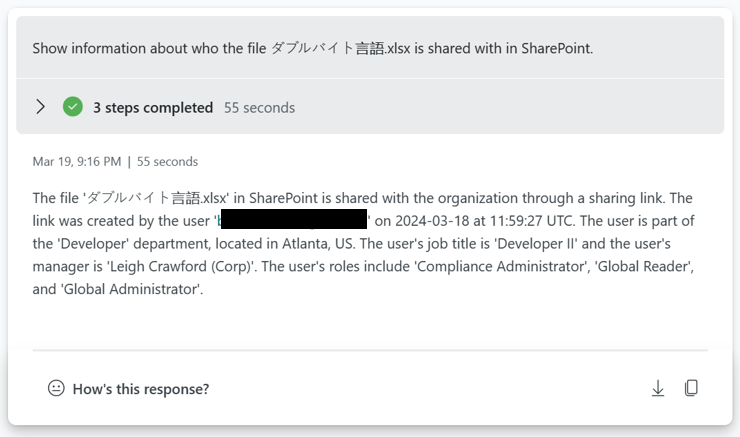

Documents accessed by possibly compromised accounts

You can use the plugin to check if compromised accounts have been accessing a specific document.

CVE or proximity to ISP/IPTags

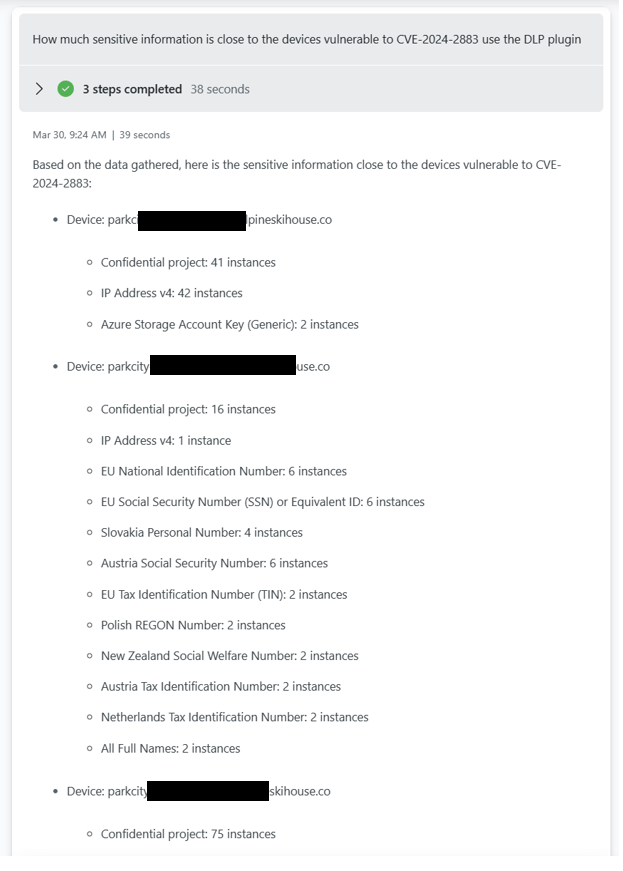

This sample checks how much sensitive information is exposed to a given CVE. You can also pivot this based on ISP.

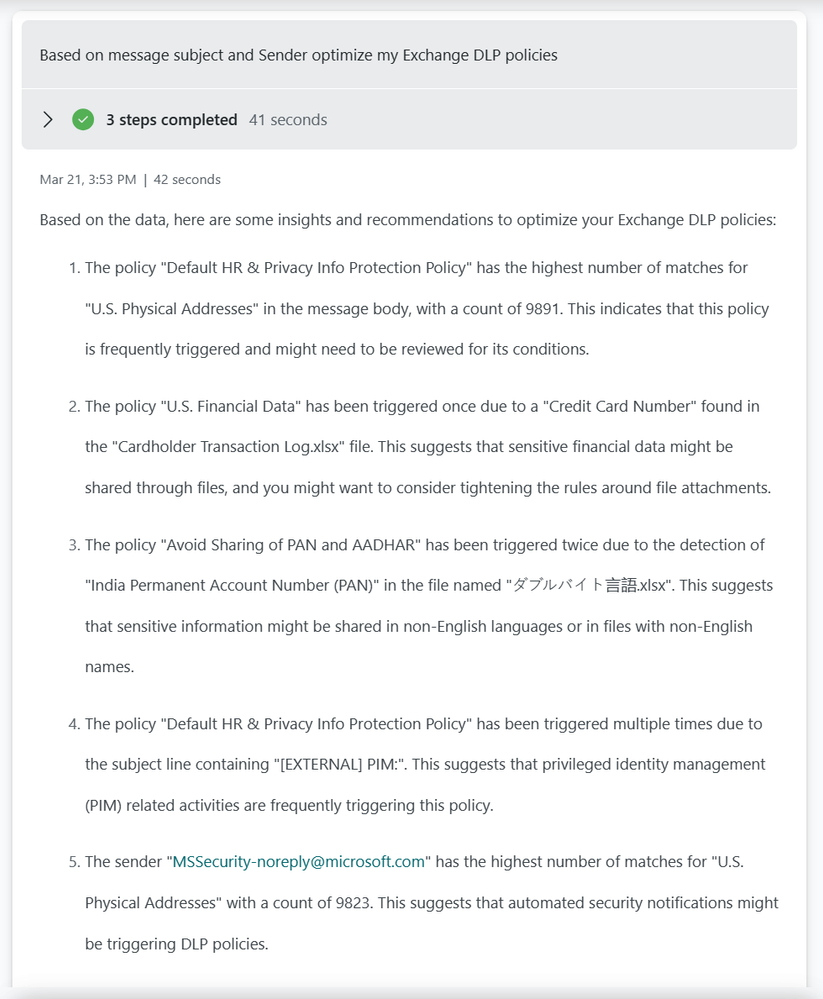

Tune Exchange DLP policies sample.

If you want to tune your Exchange, Teams, SharePoint, Endpoint, or OCR rules and policies, you can ask Copilot for Security for suggestions.

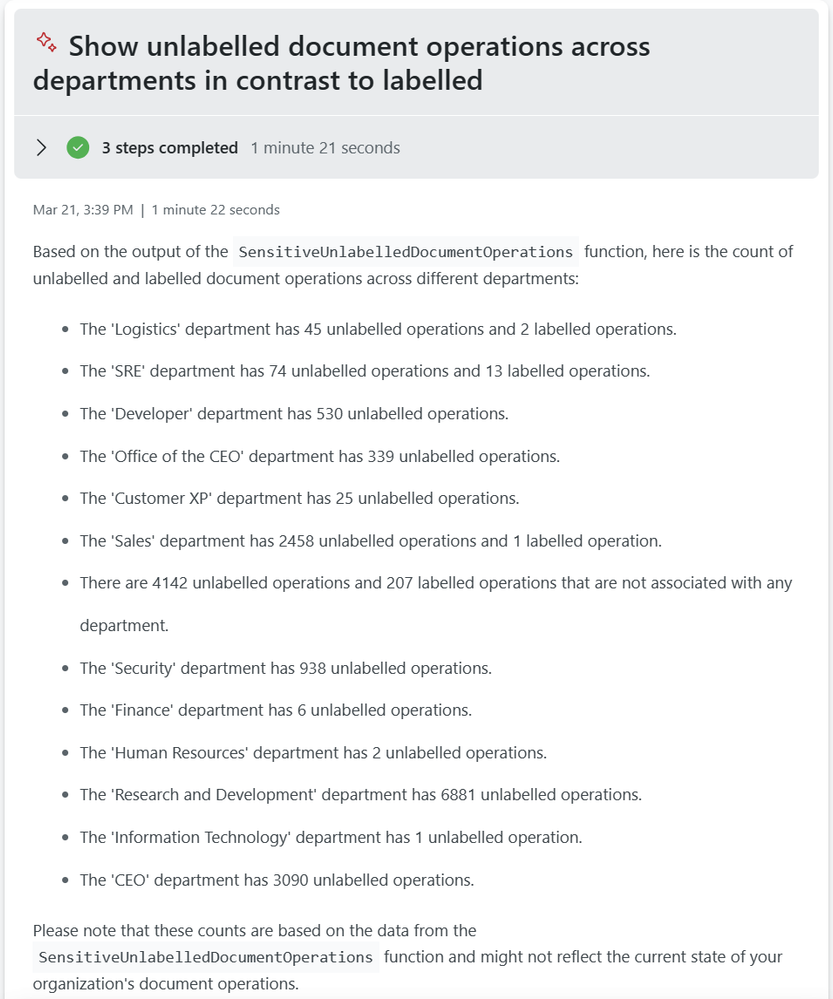

Purview unlabelled operations

How many of the operations in your different departments are unlabelled? Are any of the departments standing out?

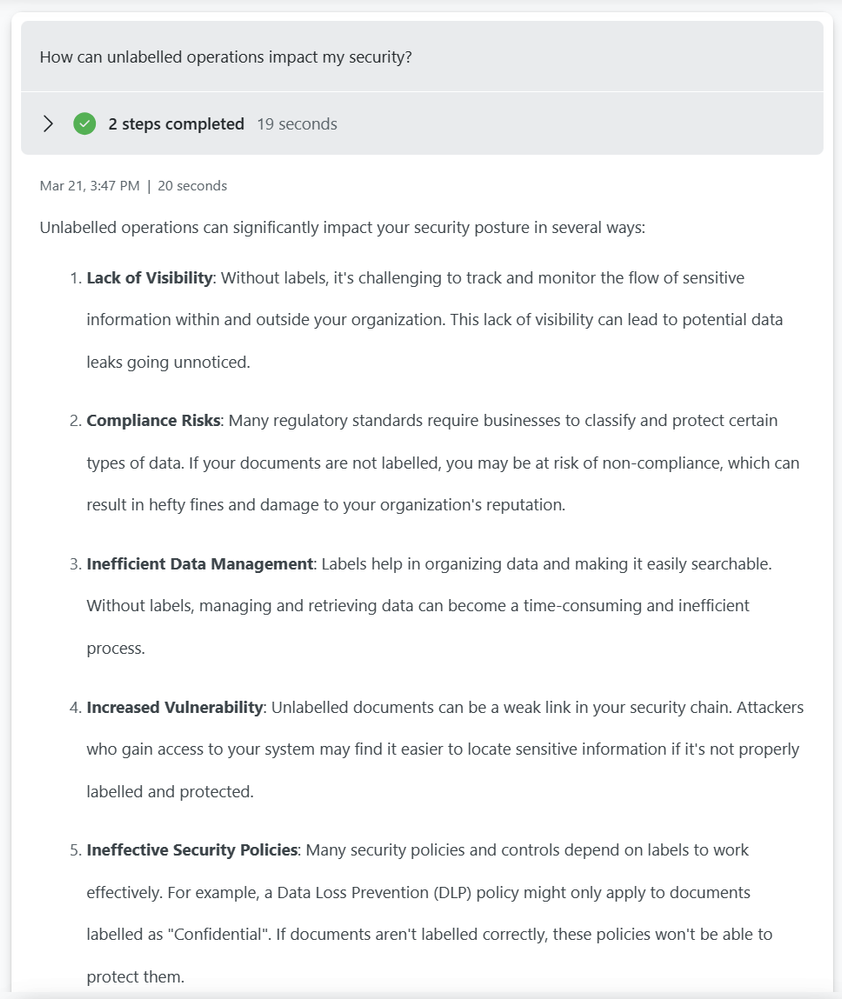

In this context, you can also use Copilot for Security to deliver recommendations and highlight the benefits that sensitivity labels bring.

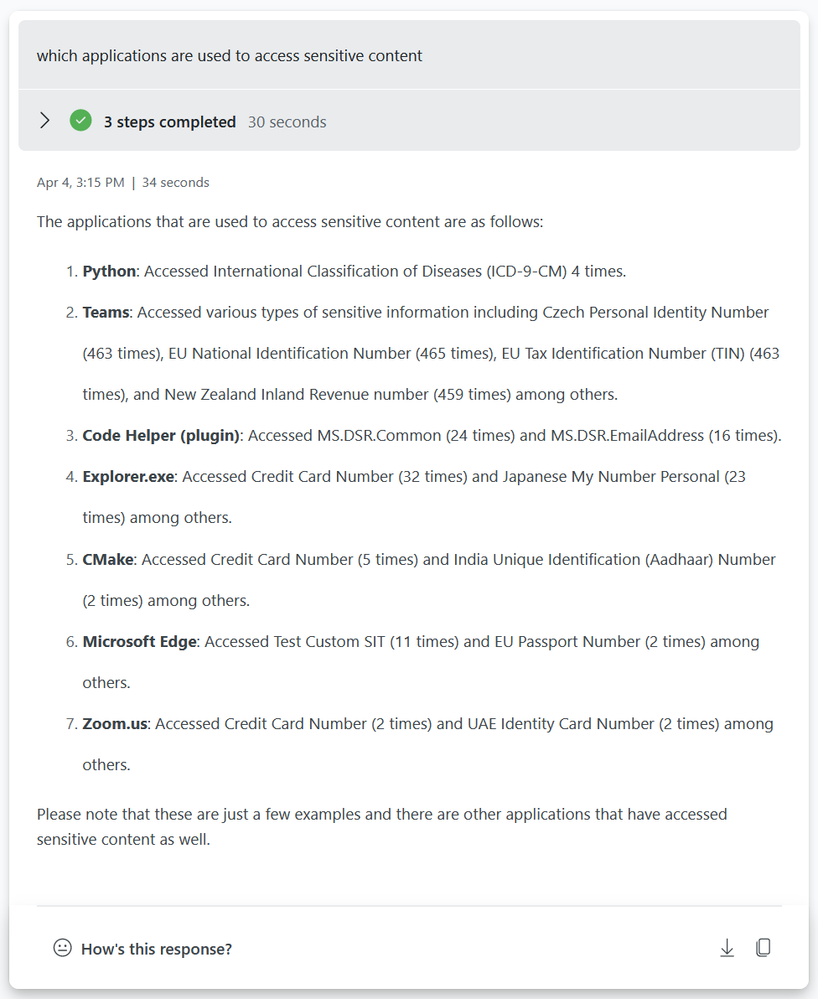

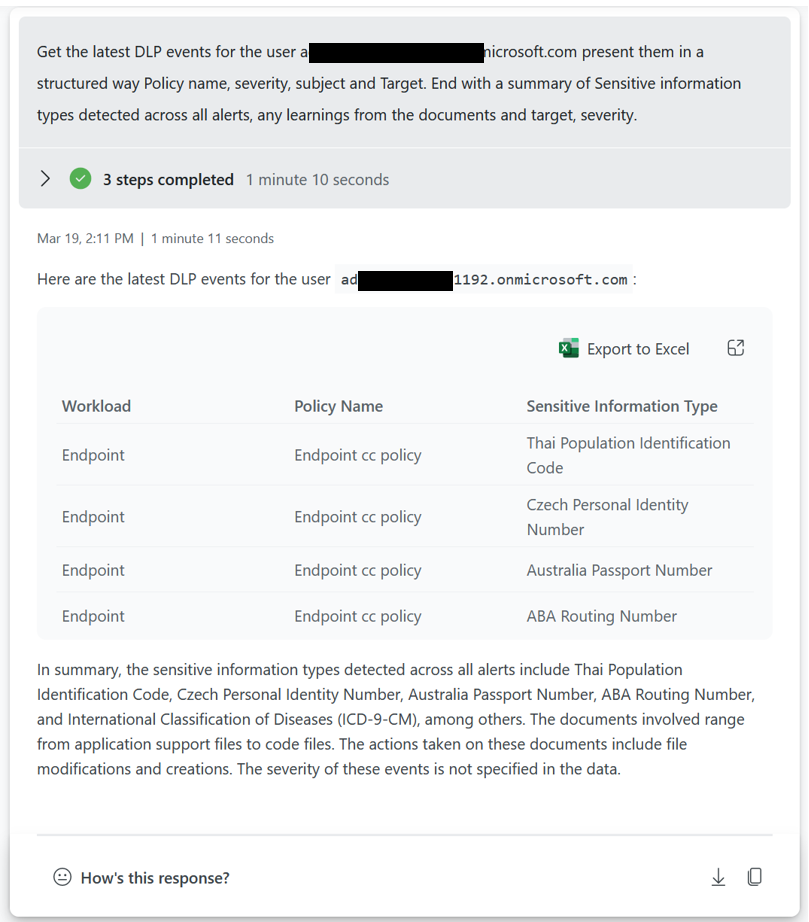

Applications accessing sensitive content.

What applications have been used to access sensitive content? The plugin supports asking for applications being used to access sensitive content. This can be a fairly long list of applications, you can add filters in the code to filter out common applications.

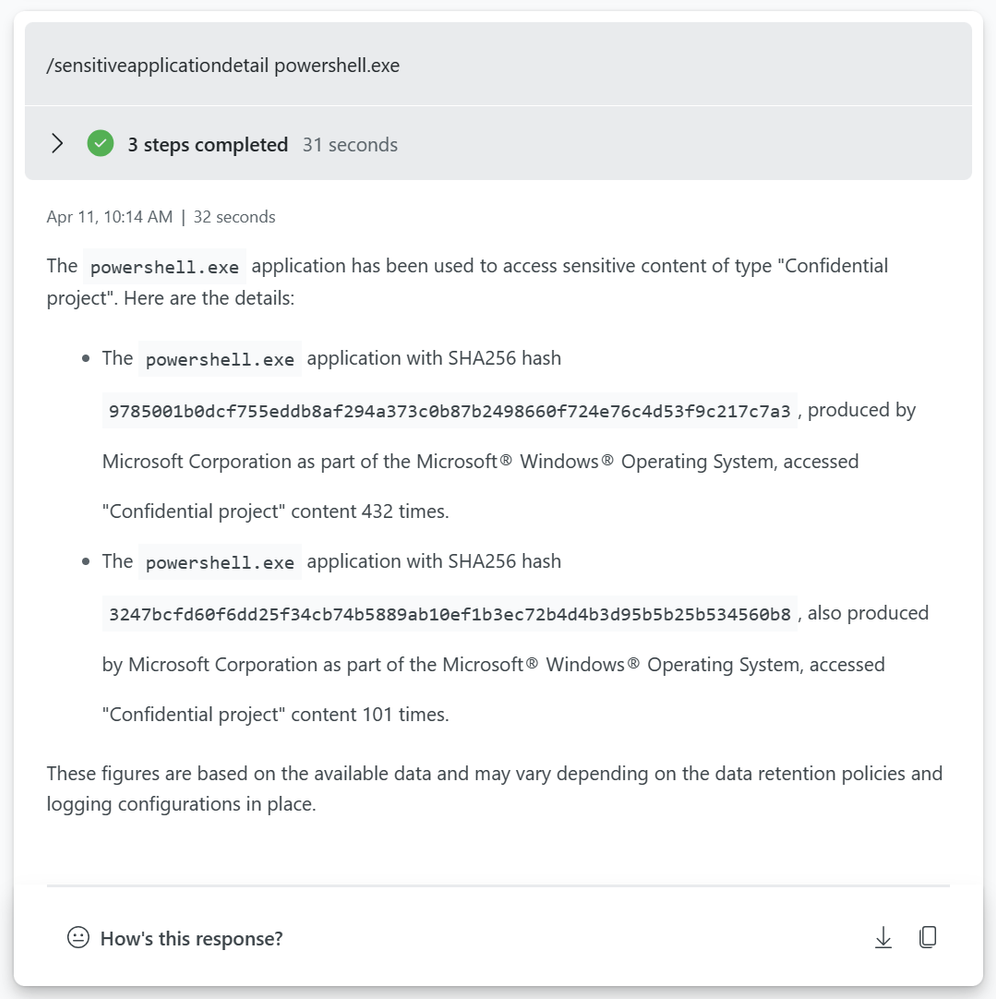

If you want to zoom into what type of content a specific application is accessing.

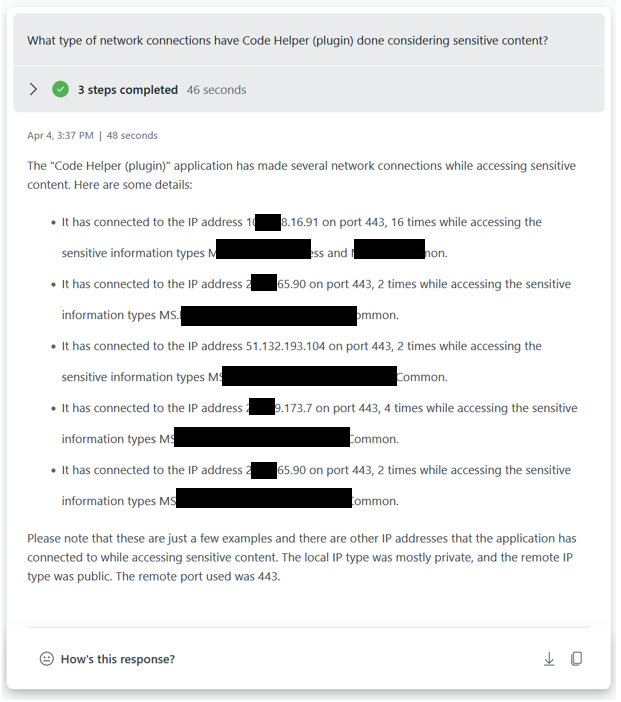

What type of network connectivity has been made from this application?

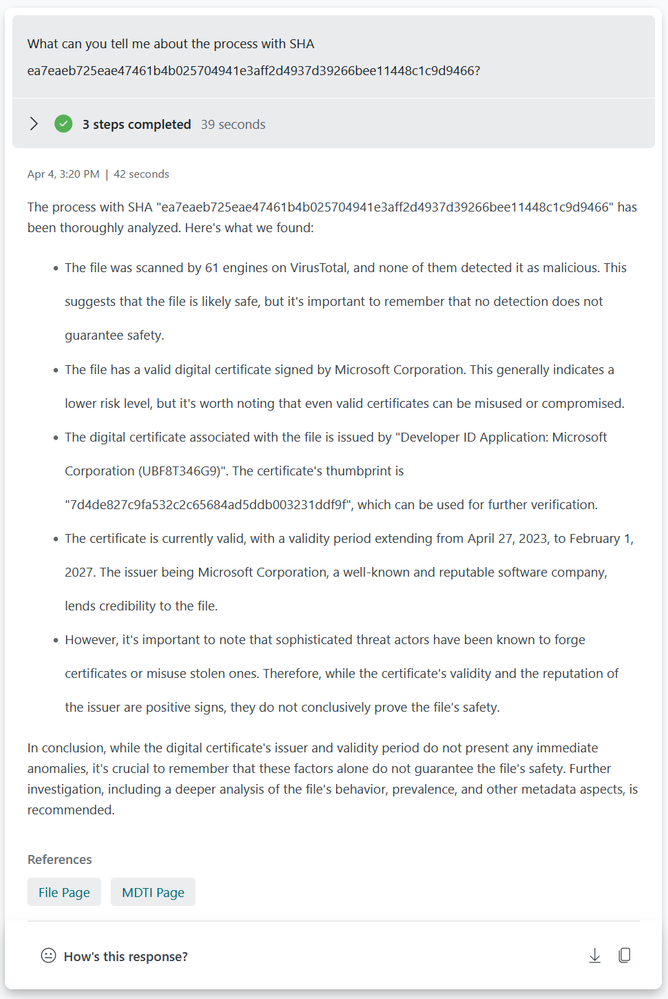

Or what if you are concerned about the process that was used and want to validate its SHA256 hash?

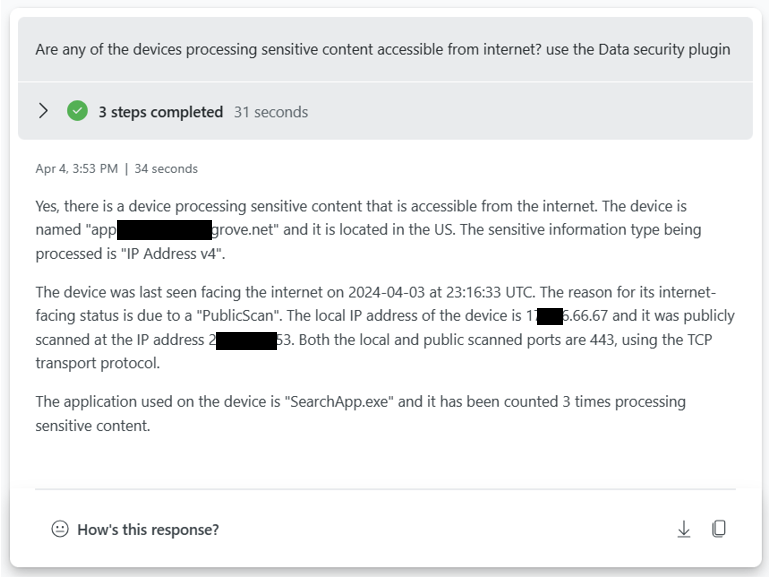
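If you want to check a hash reported by Copilot for Security against a binary you have on disk, you can compute the file's SHA256 locally. A minimal sketch using Python's `hashlib`; `sha256_of_file` and `matches_reported_hash` are hypothetical helper names, and the path and expected hash in the commented usage are placeholders you supply.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the lowercase hex SHA256 of a file, reading it in chunks to bound memory use."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_reported_hash(path: Path, reported: str) -> bool:
    """Compare a local file with a SHA256 value copied from a report (whitespace and case ignored)."""
    return sha256_of_file(path) == reported.strip().lower()


# Usage (placeholders): paste the SHA256 from the Copilot for Security response.
# print(matches_reported_hash(Path(r"C:\path\to\process.exe"), "<sha256 from the report>"))
```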

Internet-accessible hosts accessing sensitive content

Another threat vector is devices that are accessible from the internet and process sensitive content. Check these hosts for processing of secrets and other sensitive information.

Promptbooks

Promptbooks are a valuable resource for accomplishing specific security-related tasks. Consider them a way to put your standard operating procedure (SOP) for certain incidents into practice. By following the SOP, you can identify the various dimensions of an incident in a standardized way and summarize the outcome. For more information on promptbooks, please see this documentation.

Exchange incident sample promptbook

Note: This detail is currently available only with Sentinel; we are working on Defender integration.

SharePoint sample promptbook


by Contributed | Aug 20, 2024 | Technology

This article is contributed. See the original author and article here.

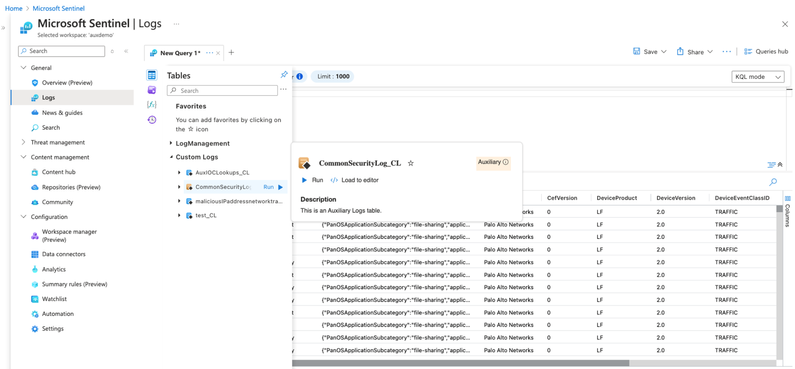

As digital environments grow across platforms and clouds, organizations are faced with the dual challenges of collecting relevant security data to improve protection and optimizing costs of that data to meet budget limitations. Management complexity is also an issue as security teams work with diverse datasets to run on-demand investigations, proactive threat hunting, ad hoc queries and support long-term storage for audit and compliance purposes. Each log type requires specific data management strategies to support those use cases. To address these business needs, customers need a flexible SIEM (Security Information and Event Management) with multiple data tiers.

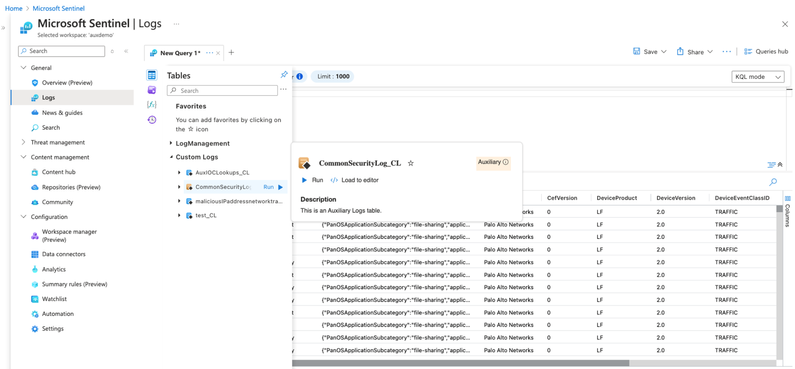

Microsoft is excited to announce the public preview of Auxiliary Logs, a new data tier, and Summary Rules in Microsoft Sentinel, which further increase security coverage for high-volume data at an affordable price.

Auxiliary Logs support high-volume data sources, including network, proxy, and firewall logs. Customers can get started with Auxiliary Logs in preview today at no cost; we will notify users in advance before billing begins at $0.15 per GB (US East). Initially, Auxiliary Logs allow long-term storage, but on-demand analysis is limited to the last 30 days, and queries are limited to a single table. Customers can continue to build custom solutions using Azure Data Explorer; however, the intention is that over time Auxiliary Logs will cover most of those use cases and, because they are built into Microsoft Sentinel, include management capabilities.
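To put the announced price in perspective once billing begins, ingestion cost scales linearly: GB per day × days × price per GB. A small sketch using the $0.15 per GB (US East) figure quoted above; the 500 GB/day volume is a hypothetical example, `monthly_ingestion_cost` is an illustrative name, and the calculation covers ingestion only, not long-term retention or any other charges.

```python
from decimal import Decimal

AUXILIARY_PRICE_PER_GB = Decimal("0.15")  # USD per GB, US East, as quoted in the announcement


def monthly_ingestion_cost(gb_per_day: Decimal, days: int = 30,
                           price_per_gb: Decimal = AUXILIARY_PRICE_PER_GB) -> Decimal:
    """Ingestion-only cost for a billing period; retention and other charges are not included."""
    return gb_per_day * days * price_per_gb


# Hypothetical firewall volume of 500 GB per day over a 30-day month.
print(monthly_ingestion_cost(Decimal("500")))  # 2250.00
```

`Decimal` is used instead of `float` so that currency amounts are exact.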

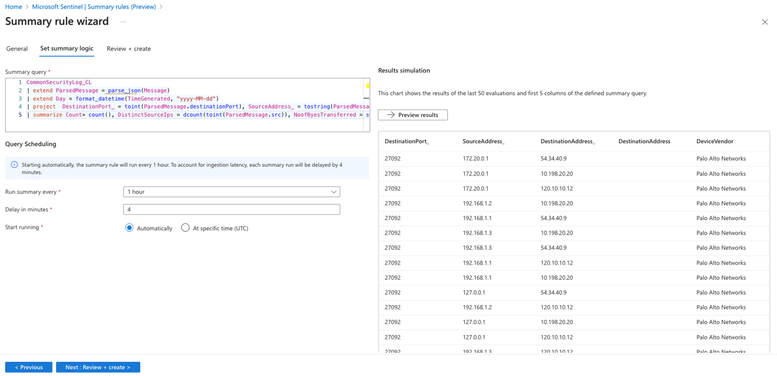

Summary Rules further enhance the value of Auxiliary Logs. Summary Rules enable customers to easily aggregate data from Auxiliary Logs into a summary that can be routed to Analytics Logs for access to the full Microsoft Sentinel query feature set. The combination of Auxiliary Logs and Summary Rules enables security functions such as Indicator of Compromise (IOC) lookups, anomaly detection, and monitoring of unusual traffic patterns. Together, Auxiliary Logs and Summary Rules offer customers greater data flexibility, cost-efficiency, and comprehensive coverage.

Some of the key benefits of Auxiliary Logs and Summary Rules include:

- Cost-effective coverage: Auxiliary Logs are ideal for ingesting large volumes of verbose logs at an affordable price-point. When there is a need for advanced security investigations or threat hunting, Summary Rules can aggregate and route Auxiliary Logs data to the Analytics Log tier delivering additional cost-savings and security value.

- On-demand analysis: Auxiliary Logs supports 30 days of interactive queries with limited KQL, facilitating access and analysis of crucial security data for threat investigations.

- Flexible retention and storage: Auxiliary Logs can be stored for up to 12 years in long-term retention. Access to these logs is available through running a search job.

Microsoft Sentinel’s multi-tier data ingestion and storage options

Microsoft is committed to providing customers with cost-effective, flexible options to manage their data at scale. Customers can choose from the different log plans in Microsoft Sentinel to meet their business needs. Data can be ingested as Analytics, Basic and Auxiliary Logs. Differentiating what data to ingest and where is crucial. We suggest categorizing security logs into primary and secondary data.

- Primary logs (Analytics Logs): Contain data of critical security value that is used for real-time monitoring, alerts, and analytics. Examples include Endpoint Detection and Response (EDR) logs, authentication logs, audit trails from cloud platforms, Data Loss Prevention (DLP) logs, and threat intelligence.

  - Primary logs are usually monitored proactively, with scheduled alerts and analytics, to enable effective security detections.

  - In Microsoft Sentinel, these logs would be directed to Analytics Logs tables to leverage the full Microsoft Sentinel value.

  - Analytics Logs are available for 90 days to 2 years, with a long-term retention option of up to 12 years.

- Secondary logs (Auxiliary Logs): Verbose logs with limited security value that can still help draw the full picture of a security incident or breach. They are rarely used for deep analytics or alerts and are often accessed on demand for ad hoc querying, investigations, and search.

  - These include NetFlow, firewall, and proxy logs, which should be routed to Basic Logs or Auxiliary Logs.

  - Auxiliary Logs are appropriate when you use Logstash, Cribl, or similar tools for data transformation.

  - In the absence of transformation tools, Basic Logs are recommended.

  - Both Basic and Auxiliary Logs are available for 30 days, with a long-term retention option of up to 12 years.

- Additionally, for extensive ML, complex hunting tasks, and frequent, extensive use of long-term data, customers can choose Azure Data Explorer (ADX), although this adds complexity and maintenance overhead.
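The tiering guidance above reduces to a small decision rule. The sketch below encodes it as stated in this post: primary logs go to Analytics Logs; secondary logs go to Auxiliary Logs when a transformation tool such as Logstash or Cribl sits in the pipeline, and to Basic Logs otherwise. `LogTier` and `choose_tier` are hypothetical names, and real decisions also weigh volume, cost, and query needs.

```python
from enum import Enum


class LogTier(Enum):
    ANALYTICS = "Analytics Logs"
    BASIC = "Basic Logs"
    AUXILIARY = "Auxiliary Logs"


def choose_tier(is_primary: bool, uses_transformation_tool: bool) -> LogTier:
    """Pick a Microsoft Sentinel log plan following the primary/secondary guidance in this post.

    is_primary: data of critical security value used for real-time monitoring, alerts, and
    analytics (for example EDR, authentication, cloud audit, DLP logs, threat intelligence).
    uses_transformation_tool: whether a tool such as Logstash or Cribl transforms the data
    before ingestion.
    """
    if is_primary:
        return LogTier.ANALYTICS
    return LogTier.AUXILIARY if uses_transformation_tool else LogTier.BASIC


# Usage: verbose firewall logs shipped through a transformation pipeline.
print(choose_tier(is_primary=False, uses_transformation_tool=True).value)  # Auxiliary Logs
```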

Microsoft Sentinel’s native data tiering offers customers the flexibility to ingest, store and analyze all security data to meet their growing business needs.

Use case example: Auxiliary Logs and Summary Rules Coverage for Firewall Logs

Firewall event logs are a critical network log source for threat hunting and investigations. These logs can reveal abnormally large file transfers, volume and frequency of communication by a host, and port scanning. Firewall logs are also useful as a data source for various unstructured hunting techniques, such as stacking ephemeral ports or grouping and clustering different communication patterns.

In this scenario, organizations can now easily send all firewall logs to Auxiliary Logs at an affordable price point. In addition, customers can run a Summary Rule that creates scheduled aggregations and routes them to the Analytics Logs tier. Analysts can use these aggregations for their day-to-day work, and if they need to drill down, they can easily query the relevant records from Auxiliary Logs. Together, Auxiliary Logs and Summary Rules help security teams use high-volume, verbose logs to meet their security requirements while minimizing costs.

Figure 1: Ingest high volume, verbose firewall logs into an Auxiliary Logs table.

Figure 2: Create aggregated datasets on the verbose logs in Auxiliary Logs plan.
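In Microsoft Sentinel, a Summary Rule is defined as a scheduled KQL query. To illustrate only the kind of aggregation such a rule might perform on firewall data, the Python sketch below buckets verbose records into hourly bins per source IP and keeps just the connection count and total bytes. The record fields (`timestamp`, `src_ip`, `bytes_sent`) are hypothetical and do not correspond to a Sentinel table schema; timestamps are assumed to be naive UTC.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class FirewallEvent:
    timestamp: datetime  # naive UTC
    src_ip: str
    bytes_sent: int


@dataclass(frozen=True)
class SummaryRow:
    bin_start: datetime
    src_ip: str
    connections: int
    total_bytes: int


def summarize(events: Iterable[FirewallEvent], bin_size: timedelta = timedelta(hours=1)) -> List[SummaryRow]:
    """Aggregate raw events into (time bin, source IP) rows with connection counts and byte totals."""
    groups: Dict[Tuple[datetime, str], List[int]] = defaultdict(lambda: [0, 0])
    for e in events:
        # Floor the timestamp to the start of its bin.
        offset = (e.timestamp - datetime.min) // bin_size
        bin_start = datetime.min + offset * bin_size
        totals = groups[(bin_start, e.src_ip)]
        totals[0] += 1
        totals[1] += e.bytes_sent
    return [SummaryRow(b, ip, c, t) for (b, ip), (c, t) in sorted(groups.items())]


# Usage: three verbose events collapse into two summary rows.
events = [
    FirewallEvent(datetime(2024, 8, 20, 10, 5), "10.0.0.4", 1200),
    FirewallEvent(datetime(2024, 8, 20, 10, 40), "10.0.0.4", 800),
    FirewallEvent(datetime(2024, 8, 20, 11, 2), "10.0.0.9", 50),
]
for row in summarize(events):
    print(row)
```

Only the compact summary rows would need the richer Analytics Logs tier; the raw events stay in the cheaper tier for drill-down.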

Customers are already finding value with Auxiliary Logs and Summary Rules as seen below:

“The BlueVoyant team enjoyed participating in the private preview for Auxiliary logs and are grateful Microsoft has created new ways to optimize log ingestion with Auxiliary logs. The new features enable us to transform data that is traditionally lower value into more insightful, searchable data.”

Mona Ghadiri

Senior Director of Product Management, BlueVoyant

“The Auxiliary Log is a perfect fusion of Basic Log and long-term retention, offering the best of both worlds. When combined with Summary Rules, it effectively addresses various use cases for ingesting large volumes of logs into Microsoft Sentinel.”

Debac Manikandan

Senior Cybersecurity Engineer, DEFEND

Looking forward

Microsoft is committed to expanding the scenarios covered by Auxiliary Logs over time, including data transformation and standard tables, improved query performance at scale, billing and more. We are working closely with our customers to collect feedback and will continue to add more functionality. As always, we’d love to hear your thoughts.


by Contributed | Aug 19, 2024 | Technology

This article is contributed. See the original author and article here.

Are you ready to connect with OneDrive product makers this month? We're gearing up for the next call. And a small FYI: we are approaching production a little differently. The call now broadcasts from the Microsoft Tech Community, within the OneDrive community hub. Same value. Same engagement. New and exciting home.

Join the OneDrive product team live each month on our monthly OneDrive Community Call (previously ‘Office Hours’) to hear what’s top of mind, get insights into roadmap updates, and dig into a special topic. Each call includes live Q&A where you’ll have a chance to ask the OneDrive product team any question about OneDrive – The home of your files.

Use this link to register and join live: https://aka.ms/OneDriveCommunityCall. Each call is recorded and made available on demand shortly after. Our next call is Wednesday, August 21st, 2024, 8:00am – 9:00am PDT. This month’s special topic: “Copilot in OneDrive” with @Arjun Tomar, Senior Product Manager on the OneDrive team at Microsoft. “Add to calendar” (.ics file) and share the event page with anyone far and wide.

OneDrive Community Call – August 21, 2024, 8am PDT. Special guest, Arjun Tomar, to share more about our special topic this month: “Copilot in OneDrive.”

Our goal is to simplify the way you create and access the files you need, get the information you are looking for, and manage your tasks efficiently. We can’t wait to share, listen, and engage – monthly! Anyone can join this one-hour webinar to ask us questions, share feedback, and learn more about the features we’re releasing soon and our roadmap.

Note: Our monthly public calls are not an official support tool. To open support tickets, see Get support for Microsoft 365; distinct support for educators and education customers is available, too.

Stay up to date on Microsoft OneDrive adoption on adoption.microsoft.com. Join our community to catch all news and insights from the OneDrive community blog. And follow us on Twitter: @OneDrive. Thank you for your interest in making your voice heard and taking your knowledge and depth of OneDrive to the next level.

You can ask questions and provide feedback in the event Comments below and we will do our best to address what we can during the call.

Register and join live: https://aka.ms/OneDriveCommunityCall

See you there, Irfan Shahdad (Principal Product Manager – OneDrive, Microsoft)

by Contributed | Aug 18, 2024 | Technology

This article is contributed. See the original author and article here.

Recently, we faced a requirement to upgrade a large number of Azure SQL databases from the General Purpose service tier to Business Critical.

This scale-up operation can be performed with PowerShell, the Azure CLI, or the Azure portal, following the guidance in Failover groups overview & best practices – Azure SQL Managed Instance | Microsoft Learn.

Given the need to perform this task across a large number of databases, individually running commands for each server is not practical. Hence, I have created a PowerShell script to facilitate such extensive migrations.

# Scenarios tested:

# 1) Jobs will be executed in parallel.

# 2) The script will upgrade secondary databases first, then the primary.

# 3) Upgrade the database based on the primary listed in the database info list.

# 4) Before the upgrade, the script checks whether the role of a database in the database info list has changed from primary to secondary (for example, after a failover).

# 5) Upgrade individual databases if no primary/secondary is found for a given database.

# 6) If the secondary has been upgraded but the primary has not, running the script again skips the secondary and upgrades the primary database.

# In other words, SLO mismatch will be handled based on the SKU defined in the database info list.

# 7) Track the database progress and display the progress in the console.
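The ordering rules in these scenarios can be summarized in a short sketch, written in Python for illustration only and not a port of the PowerShell script: skip databases already at the target service objective, then upgrade secondaries, then primaries, then databases without replication links. The `Database` fields, the single shared target (the script takes one per entry), and the `GP_Gen5_8`/`BC_Gen5_8` service objective names are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional

# Upgrade order: geo-replication secondaries first, then primaries, then standalone databases.
ROLE_ORDER = {"Secondary": 0, "Primary": 1}


@dataclass
class Database:
    name: str
    role: Optional[str]  # "Primary", "Secondary", or None when there is no replication link
    current_slo: str     # current service objective, e.g. "GP_Gen5_8"


def plan_upgrades(databases: List[Database], target_slo: str) -> List[str]:
    """Return the names of databases that still need upgrading, in the order to upgrade them."""
    pending = [db for db in databases if db.current_slo != target_slo]
    # sorted() is stable, so databases with the same role keep their input order.
    return [db.name for db in sorted(pending, key=lambda db: ROLE_ORDER.get(db.role, 2))]


dbs = [
    Database("sales-primary", "Primary", "GP_Gen5_8"),
    Database("sales-secondary", "Secondary", "GP_Gen5_8"),
    Database("reporting", None, "GP_Gen5_4"),
]
print(plan_upgrades(dbs, "BC_Gen5_8"))
# ['sales-secondary', 'sales-primary', 'reporting']

# Rerun after only the secondary was upgraded: the secondary is skipped.
dbs[1].current_slo = "BC_Gen5_8"
print(plan_upgrades(dbs, "BC_Gen5_8"))
# ['sales-primary', 'reporting']
```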

Important considerations:

# This script performs an upgrade of Azure SQL databases to a specified SKU.

# The script also handles geo-replicated databases by upgrading the secondary first, then the primary, and finally any other databases without replication links.

# The script logs the progress and outcome of each database upgrade to the console and a log file.

# Disclaimer: This script is provided as-is, without any warranty or support. Use it at your own risk.

# Before running this script, make sure to test it in a non-production environment and review the impact of the upgrade on your databases and applications.

# The script may take a long time to complete, depending on the number and size of the databases to be upgraded.

# The script may incur additional charges for the upgraded databases, depending on the target SKU and the duration of the upgrade process.

# The script requires the Az PowerShell module and the appropriate permissions to access and modify the Azure SQL databases.

# Define the list of database information

$DatabaseInfoList = @(

#@{ DatabaseName = '{DatabaseName}'; PartnerResourceGroupName = '{PartnerResourcegroupName}'; ServerName = '{ServerName}' ; ResourceGroupName = '{ResourceGroupName}'; RequestedServiceObjectiveName = '{SLODetails}'; subscriptionID = '{SubscriptionID}' }

)

# Define the script block that performs the update

$ScriptBlock = {

param (

$DatabaseInfo

)

Set-AzContext -subscriptionId $DatabaseInfo.subscriptionID

###store output in txt file

$OutputFilePath = "C:\temp\$($DatabaseInfo.DatabaseName)_$($env:USERNAME)_$($Job.Id)_Output.txt"

$OutputCapture = @()

$OutputCapture += "Database: $($DatabaseInfo.DatabaseName)"

$ReplicationLink = Get-AzSqlDatabaseReplicationLink -DatabaseName $DatabaseInfo.DatabaseName -PartnerResourceGroupName $DatabaseInfo.PartnerResourceGroupName -ServerName $DatabaseInfo.ServerName -ResourceGroupName $DatabaseInfo.ResourceGroupName

$PrimaryServerRole = $ReplicationLink.Role

$PrimaryResourceGroupName = $ReplicationLink.ResourceGroupName

$PrimaryServerName = $ReplicationLink.ServerName

$PrimaryDatabaseName = $ReplicationLink.DatabaseName

$PartnerRole = $ReplicationLink.PartnerRole

$PartnerServerName = $ReplicationLink.PartnerServerName

$PartnerDatabaseName = $ReplicationLink.PartnerDatabaseName

$PartnerResourceGroupName = $ReplicationLink.PartnerResourceGroupName

$UpdateSecondary = $false

$UpdatePrimary = $false

if ($PartnerRole -eq "Secondary" -and $PrimaryServerRole -eq "Primary") {

$UpdateSecondary = $true

$UpdatePrimary = $true

}

#For Failover Scenarios only

elseif ($PartnerRole -eq "Primary" -and $PrimaryServerRole -eq "Secondary") {

$UpdateSecondary = $true

$UpdatePrimary = $true

$PartnerRole = $ReplicationLink.Role

$PartnerServerName = $ReplicationLink.ServerName

$PartnerDatabaseName = $ReplicationLink.DatabaseName

$PartnerResourceGroupName = $ReplicationLink.ResourceGroupName

$PrimaryServerRole = $ReplicationLink.PartnerRole

$PrimaryResourceGroupName = $ReplicationLink.PartnerResourceGroupName

$PrimaryServerName = $ReplicationLink.PartnerServerName

$PrimaryDatabaseName = $ReplicationLink.PartnerDatabaseName

}

Try

{

if ($UpdateSecondary) {

$DatabaseProperties = Get-AzSqlDatabase -ResourceGroupName $PartnerResourceGroupName -ServerName $PartnerServerName -DatabaseName $PartnerDatabaseName

#$DatabaseEdition = $DatabaseProperties.Edition

$DatabaseSKU = $DatabaseProperties.RequestedServiceObjectiveName

if ($DatabaseSKU -ne $DatabaseInfo.RequestedServiceObjectiveName) {

Write-host "Secondary upgrade started at $(Get-Date) for DB $PartnerDatabaseName"

$OutputCapture += "Secondary upgrade started at $(Get-Date) for DB $PartnerDatabaseName"

Set-AzSqlDatabase -ResourceGroupName $PartnerResourceGroupName -DatabaseName $PartnerDatabaseName -ServerName $PartnerServerName -Edition "BusinessCritical" -RequestedServiceObjectiveName $DatabaseInfo.RequestedServiceObjectiveName

Write-host "Secondary end at $(Get-Date)"

$OutputCapture += "Secondary end at $(Get-Date)"

# Start Track Progress

$activities = Get-AzSqlDatabaseActivity -ResourceGroupName $PartnerResourceGroupName -ServerName $PartnerServerName -DatabaseName $PartnerDatabaseName |

Where-Object {$_.State -eq "InProgress" -or $_.State -eq "Succeeded" -or $_.State -eq "Failed"} | Sort-Object -Property StartTime -Descending | Select-Object -First 1

if ($activities.Count -gt 0) {

Write-Host "Operations in progress or completed for $($PartnerDatabaseName):"

$OutputCapture += "Operations in progress or completed for $($PartnerDatabaseName):"

foreach ($activity in $activities) {

Write-Host "Activity Start Time: $($activity.StartTime) , Activity Estimated Completed Time: $($activity.EstimatedCompletionTime) , Activity ID: $($activity.OperationId), Server Name: $($activity.ServerName), Database Name: $($activity.DatabaseName), Status: $($activity.State), Percent Complete: $($activity.PercentComplete)%, Description: $($activity.Description)"

$OutputCapture += "Activity Start Time: $($activity.StartTime) , Activity Estimated Completed Time: $($activity.EstimatedCompletionTime) , Activity ID: $($activity.OperationId), Server Name: $($activity.ServerName), Database Name: $($activity.DatabaseName), Status: $($activity.State), Percent Complete: $($activity.PercentComplete)%, Description: $($activity.Description)"

}

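if ($activities.State -eq "Succeeded") {

Write-Host "$PartnerDatabaseName Upgrade Successfully Completed!"

$OutputCapture += "$PartnerDatabaseName Upgrade Successfully Completed!"

} else {

Write-Host "$PartnerDatabaseName latest operation state: $($activities.State)"

$OutputCapture += "$PartnerDatabaseName latest operation state: $($activities.State)"

}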

} else {

Write-Host "No operations in progress or completed for $($PartnerDatabaseName)"

$OutputCapture += "No operations in progress or completed for $($PartnerDatabaseName)"

}

# End Track Progress

#

}

else {

Write-host "Database $PartnerDatabaseName is already upgraded."

$OutputCapture += "Database $PartnerDatabaseName is already upgraded."

}

}

if ($UpdatePrimary) {

$DatabaseProperties = Get-AzSqlDatabase -ResourceGroupName $PrimaryResourceGroupName -ServerName $PrimaryServerName -DatabaseName $PrimaryDatabaseName

# $DatabaseEdition = $DatabaseProperties.Edition

$DatabaseSKU = $DatabaseProperties.RequestedServiceObjectiveName

if ($DatabaseSKU -ne $DatabaseInfo.RequestedServiceObjectiveName){

Write-host "Primary upgrade started at $(Get-Date) for DB $PrimaryDatabaseName"

$OutputCapture += "Primary upgrade started at $(Get-Date) for DB $PrimaryDatabaseName"

Set-AzSqlDatabase -ResourceGroupName $PrimaryResourceGroupName -DatabaseName $PrimaryDatabaseName -ServerName $PrimaryServerName -Edition "BusinessCritical" -RequestedServiceObjectiveName $DatabaseInfo.RequestedServiceObjectiveName

Write-host "Primary ended at $(Get-Date)"

$OutputCapture += "Primary ended at $(Get-Date)"

# Start Track Progress

$activities = Get-AzSqlDatabaseActivity -ResourceGroupName $PrimaryResourceGroupName -ServerName $PrimaryServerName -DatabaseName $PrimaryDatabaseName |

Where-Object {$_.State -eq "InProgress" -or $_.State -eq "Succeeded" -or $_.State -eq "Failed"} | Sort-Object -Property StartTime -Descending | Select-Object -First 1

if ($activities.Count -gt 0) {

Write-Host "Operations in progress or completed for $($PrimaryDatabaseName):"

$OutputCapture += "Operations in progress or completed for $($PrimaryDatabaseName):"

foreach ($activity in $activities) {

$ActivityMessage = "Activity Start Time: $($activity.StartTime) , Activity Estimated Completed Time: $($activity.EstimatedCompletionTime) , Activity ID: $($activity.OperationId), Server Name: $($activity.ServerName), Database Name: $($activity.DatabaseName), Status: $($activity.State), Percent Complete: $($activity.PercentComplete)%, Description: $($activity.Description)"

Write-Host $ActivityMessage

$OutputCapture += $ActivityMessage

}

Write-Host "$PrimaryDatabaseName Upgrade Successfully Completed!"

$OutputCapture += "$PrimaryDatabaseName Upgrade Successfully Completed!"

} else {

Write-Host "No operations in progress or completed for $($PrimaryDatabaseName)"

$OutputCapture += "No operations in progress or completed for $($PrimaryDatabaseName)"

}

# End Track Progress

#

}

else {

Write-host "Database $PrimaryDatabaseName is already upgraded."

$OutputCapture += "Database $PrimaryDatabaseName is already upgraded."

}

}

if (!$UpdateSecondary -and !$UpdatePrimary) {

$DatabaseProperties = Get-AzSqlDatabase -ResourceGroupName $DatabaseInfo.ResourceGroupName -ServerName $DatabaseInfo.ServerName -DatabaseName $DatabaseInfo.DatabaseName

# $DatabaseEdition = $DatabaseProperties.Edition

$DatabaseSKU = $DatabaseProperties.RequestedServiceObjectiveName

If ($DatabaseSKU -ne $DatabaseInfo.RequestedServiceObjectiveName) {

Write-Host "No Replica Found."

$OutputCapture += "No Replica Found."

Write-host "Upgrade started at $(Get-Date)"

$OutputCapture += "Upgrade started at $(Get-Date)"

Set-AzSqlDatabase -ResourceGroupName $DatabaseInfo.ResourceGroupName -DatabaseName $DatabaseInfo.DatabaseName -ServerName $DatabaseInfo.ServerName -Edition "BusinessCritical" -RequestedServiceObjectiveName $DatabaseInfo.RequestedServiceObjectiveName

Write-host "Upgrade completed at $(Get-Date)"

$OutputCapture += "Upgrade completed at $(Get-Date)"

# Start Track Progress

$activities = Get-AzSqlDatabaseActivity -ResourceGroupName $DatabaseInfo.ResourceGroupName -ServerName $DatabaseInfo.ServerName -DatabaseName $DatabaseInfo.DatabaseName |

Where-Object {$_.State -eq "InProgress" -or $_.State -eq "Succeeded" -or $_.State -eq "Failed"} | Sort-Object -Property StartTime -Descending | Select-Object -First 1

if ($activities.Count -gt 0) {

Write-Host "Operations in progress or completed for $($DatabaseInfo.DatabaseName):"

$OutputCapture += "Operations in progress or completed for $($DatabaseInfo.DatabaseName):"

foreach ($activity in $activities) {

$ActivityMessage = "Activity Start Time: $($activity.StartTime) , Activity Estimated Completed Time: $($activity.EstimatedCompletionTime) , Activity ID: $($activity.OperationId), Server Name: $($activity.ServerName), Database Name: $($activity.DatabaseName), Status: $($activity.State), Percent Complete: $($activity.PercentComplete)%, Description: $($activity.Description)"

Write-Host $ActivityMessage

$OutputCapture += $ActivityMessage

}

Write-Host "$($DatabaseInfo.DatabaseName) Upgrade Successfully Completed!"

$OutputCapture += "$($DatabaseInfo.DatabaseName) Upgrade Successfully Completed!"

} else {

Write-Host "No operations in progress or completed for $($DatabaseInfo.DatabaseName)"

$OutputCapture += "No operations in progress or completed for $($DatabaseInfo.DatabaseName)"

}

# End Track Progress

# Write-Host " "$DatabaseInfo.DatabaseName" Upgrade Successfully Completed!"

}

else {

Write-host "Database $($DatabaseInfo.DatabaseName) is already upgraded."

$OutputCapture += "Database $($DatabaseInfo.DatabaseName) is already upgraded."

}


}

}

Catch

{

# Catch any error

Write-Output "Error occurred: $_"

$OutputCapture += "Error occurred: $_"

}

Finally

{

Write-Host "Upgrade processing finished."

$OutputCapture += "Upgrade processing finished."

# Output the captured messages to the file

$OutputCapture | Out-File -FilePath $OutputFilePath

}

}

# Loop through each database and start a background job

foreach ($DatabaseInfo in $DatabaseInfoList) {

Start-Job -ScriptBlock $ScriptBlock -ArgumentList $DatabaseInfo

}

# Wait for all background jobs to complete

Get-Job | Wait-Job

# Retrieve each job's results and save them to a file

#Get-Job | Receive-Job

Get-Job | ForEach-Object {

$Job = $_

$OutputFilePath = "C:\temp\$($Job.Id)_Output.txt"

Receive-Job -Job $Job | Out-File -FilePath $OutputFilePath # Write this job's output to its own text file (overwrites an existing file)

}

# Clean up background jobs

Get-Job | Remove-Job -Force

write-host "Execution Completed successfully."

$OutputCapture += "Execution Completed successfully."
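The job-handling loop above calls `Get-Job`, which returns every background job in the current PowerShell session, not only the ones this script started. A minimal sketch of an alternative keeps the job objects returned by `Start-Job` and waits on, collects, and removes only those. The two-item database list, the demo script block, and the temp-folder output location are hypothetical stand-ins for `$DatabaseInfoList`, `$ScriptBlock`, and `C:\temp`; the sketch runs locally without any Azure calls.

```powershell
# Hypothetical stand-ins for $DatabaseInfoList and $ScriptBlock (no Azure calls).
$DemoDatabaseInfoList = @(
    [pscustomobject]@{ DatabaseName = 'db1' },
    [pscustomobject]@{ DatabaseName = 'db2' }
)

$DemoScriptBlock = {
    param($DatabaseInfo)
    "Processed $($DatabaseInfo.DatabaseName)"
}

# Assumed output location; Join-Path avoids hand-typed path separators.
$OutputFolder = Join-Path ([System.IO.Path]::GetTempPath()) 'UpgradeJobOutput'
New-Item -ItemType Directory -Path $OutputFolder -Force | Out-Null

# Keep the job objects that Start-Job returns instead of querying Get-Job later.
$Jobs = foreach ($DatabaseInfo in $DemoDatabaseInfoList) {
    Start-Job -ScriptBlock $DemoScriptBlock -ArgumentList $DatabaseInfo
}

# Wait only for the jobs started above.
$Jobs | Wait-Job | Out-Null

# Write each job's output to its own file, named after the job ID.
foreach ($Job in $Jobs) {
    $OutputFilePath = Join-Path $OutputFolder "$($Job.Id)_Output.txt"
    Receive-Job -Job $Job | Out-File -FilePath $OutputFilePath
}

# Remove only the jobs this script created.
$Jobs | Remove-Job -Force
```

Tracking `$Jobs` explicitly also means the script can be run repeatedly in the same session without collecting or deleting output from earlier, unrelated jobs.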
