by Contributed | Oct 14, 2021 | Technology

This article is contributed. See the original author and article here.

We’re happy to announce the addition of the Azure Cosmos DB Developer Specialty certification to our portfolio, to be released in mid-November 2021.

This new certification is a key step for developers who are ready to prove their expertise supporting their organization’s business goals with modern cloud apps. IT environments where application infrastructure is dated, rigid, and specialized often cost developers valuable time and resources. Business-critical modern apps require scale, speed, and guaranteed availability, and they need to store ever-increasing volumes of data, all while delivering real-time customer access. Developers who work with Azure Cosmos DB find the tools and security they need to meet these requirements and today’s other business challenges.

Is the Azure Cosmos DB Developer Specialty certification right for you?

You’re a great candidate for the Azure Cosmos DB Developer Specialty certification if you have subject matter expertise designing, implementing, and monitoring cloud-native applications that store and manage data.

Typical responsibilities for developers in this role include designing and implementing data models and data distribution, loading data into a database created with Azure Cosmos DB, and optimizing and maintaining the solution. These professionals integrate the solution with other Azure services. They also design, implement, and monitor solutions that consider security, availability, resilience, and performance requirements.

Professionals in this role have experience developing apps for Azure and working with Azure Cosmos DB database technologies. They should be proficient at developing applications by using the Core (SQL) API and SDKs, writing efficient queries and creating appropriate index policies, provisioning and managing resources in Azure, and creating server-side objects with JavaScript. They should also be able to interpret JSON, read C# or Java code, and use PowerShell.

Developers interested in earning this new certification need to pass Exam DP-420: Designing and Implementing Cloud-Native Applications Using Microsoft Azure Cosmos DB, which will be available in mid-November 2021. If you’re a skilled Azure developer who has experience with Azure Cosmos DB, we encourage you to participate in the beta. Stay tuned for the announcement.

Start preparing now for the upcoming beta exam

Even though the exam won’t be released until November 2021, you can start preparing for it now:

Unlock opportunities with Azure Cosmos DB

Are you ready to take your skills to the next level with Azure Cosmos DB? Get ready for Exam DP-420 (beta) and earn your Azure Cosmos DB Developer Specialty certification. Validate that you have what it takes to unlock business opportunities with modern cloud apps—for you, your team, and your organization.

by Contributed | Oct 13, 2021 | Technology

We are excited to announce support for the Ddsv4 (General Purpose) and Edsv4 (Memory Optimized) VM series in Azure Database for PostgreSQL – Flexible Server (Preview).

As you may know, the Flexible Server option in Azure Database for PostgreSQL is a fully managed PostgreSQL service that handles your mission-critical workloads with predictable performance. Flexible Server offers a choice of compute tiers: a Burstable tier for dev/test use cases, a General Purpose tier for small and medium production workloads, and a Memory Optimized tier for large, mission-critical workloads. You can dynamically scale your compute across these tiers and compute sizes.

In addition to the existing Dsv3 and Esv3 compute series, you can now deploy Ddsv4 and Edsv4 (V4-series) compute sizes for your general purpose and memory optimized computing needs.

What are the Ddsv4 and Edsv4 VM series?

The Ddsv4 and Edsv4 VM series are based on the 2nd generation Intel Xeon Platinum 8272CL (Cascade Lake) processor. This custom processor runs at a base speed of 2.5 GHz and can reach an all-core turbo frequency of 3.4 GHz. Compared to the Dv3 and Ev3 compute sizes, these compute sizes offer 50 percent larger and faster local storage, as well as better local disk IOPS for both read and write caching.

- Ddsv4 compute sizes provide a good balance of memory to vCPU, with up to 64 vCPUs and 256 GiB of RAM, and include local SSD storage.

- Edsv4 compute sizes feature a high memory-to-vCPU ratio, with up to 64 vCPUs and 504 GiB of RAM, and include local SSD storage. Edsv4 also offers a 20-vCPU compute size with 160 GiB of memory.
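The memory-to-vCPU ratios behind these two descriptions follow directly from the maximum sizes quoted above. A quick arithmetic sketch; the size table only restates the figures in this post and is not a complete list of Ddsv4/Edsv4 sizes:

```python
# Sizes quoted in this post: (vCPUs, GiB of RAM).
# Not a complete list of Ddsv4/Edsv4 compute sizes.
SIZES = {
    "Ddsv4 (largest)": (64, 256),
    "Edsv4 (largest)": (64, 504),
    "Edsv4 (20 vCPU)": (20, 160),
}


def gib_per_vcpu(vcpus: int, ram_gib: int) -> float:
    """Return memory per vCPU in GiB."""
    return ram_gib / vcpus


for name, (vcpus, ram) in SIZES.items():
    print(f"{name}: {gib_per_vcpu(vcpus, ram):.3f} GiB per vCPU")
```

That works out to 4 GiB per vCPU for the largest Ddsv4 size and roughly 8 GiB per vCPU for Edsv4, which is why Edsv4 is the memory-optimized choice.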

OK. What are the benefits of running Flexible Server on the V4 series?

- The V4-series compute sizes provide faster processing power.

- The V4-series compute sizes also include high-speed local storage, which PostgreSQL Flexible Server automatically uses for read caching without requiring any user action.

So, depending on your workload and data size, you could see up to a 40% performance improvement with the V4 series compared to V3.

How is Flexible Server V4-series priced?

Please refer to the Azure Database for PostgreSQL – Flexible Server pricing page for detailed pricing. V4-series flexible servers can be deployed using pay-as-you-go (PAYG or on-demand) pricing as well as reserved instance (RI) pricing. RI pricing offers a discount of up to 58% over PAYG pricing, depending on the compute tier and the reservation period.
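To compare the two pricing models, apply the reservation discount to the pay-as-you-go rate. A minimal sketch: the 1.00-per-hour rate below is a hypothetical placeholder, not a published price, 58% is only the upper bound quoted above, and 730 hours per month is an assumed approximation for monthly estimates.

```python
HOURS_PER_MONTH = 730  # assumed approximation for monthly estimates


def reserved_rate(payg_hourly: float, discount_pct: float) -> float:
    """Effective hourly rate after a reservation discount given in percent."""
    if not 0 <= discount_pct <= 100:
        raise ValueError("discount_pct must be between 0 and 100")
    return payg_hourly * (100 - discount_pct) / 100


def monthly_savings(payg_hourly: float, discount_pct: float) -> float:
    """Approximate monthly savings of RI pricing over PAYG pricing."""
    return (payg_hourly - reserved_rate(payg_hourly, discount_pct)) * HOURS_PER_MONTH


# Hypothetical PAYG rate of 1.00 per hour with the maximum 58% discount.
print(reserved_rate(1.00, 58))
print(monthly_savings(1.00, 58))
```

Your actual discount depends on the tier and reservation period, so plug in the figures from the pricing page.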

I am currently running my Postgres server on the V3 series. How can I migrate to the V4 series?

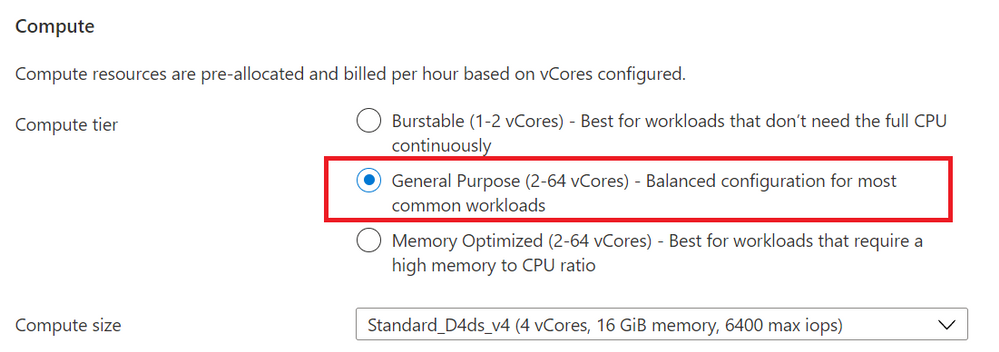

You can scale your compute to any V4 compute size with a couple of clicks. From the Compute + Storage blade (as illustrated in Figures 1, 2, and 3), change your compute size to the desired V4 compute size. Scaling (compute migration) is an offline operation that needs a couple of minutes of downtime in most cases, so we recommend performing scale operations during your server’s non-peak periods. During the scale operation, your storage is detached from your existing server and attached to the new, scaled server. Flexible Server gives you complete flexibility to scale your compute across all compute tiers and compute sizes at any time, either up or down.

V4-series compute sizes are available only in the General Purpose and Memory Optimized compute tiers. If you choose the General Purpose tier, you can select the new Ddsv4 compute sizes; if you choose the Memory Optimized tier, you can select the new Edsv4 compute sizes.
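If you prefer scripting the scale operation to using the portal, the Azure CLI command `az postgres flexible-server update` accepts `--tier` and `--sku-name` options. The sketch below only builds the command and checks that the size matches the tier described above; it does not run anything. The SKU naming pattern (`Standard_D<n>ds_v4`, `Standard_E<n>ds_v4`) and the resource group and server names are assumptions, so confirm the exact size names offered for your region before relying on it. The same downtime caveat applies as for the portal.

```python
import re
import shlex

# Assumption: V4 size names look like Standard_D4ds_v4 (General Purpose)
# or Standard_E4ds_v4 (Memory Optimized).
_V4_SKU = re.compile(r"^Standard_([DE])(\d+)ds_v4$")
_TIER_FOR_FAMILY = {"D": "GeneralPurpose", "E": "MemoryOptimized"}


def v4_tier_for(sku_name: str) -> str:
    """Return the compute tier a V4 size belongs to, or raise ValueError."""
    match = _V4_SKU.match(sku_name)
    if match is None:
        raise ValueError(f"{sku_name!r} is not a Ddsv4/Edsv4 compute size")
    return _TIER_FOR_FAMILY[match.group(1)]


def scale_command(resource_group: str, server: str, sku_name: str) -> list[str]:
    """Build (but do not run) an Azure CLI command that scales to a V4 size."""
    tier = v4_tier_for(sku_name)
    return [
        "az", "postgres", "flexible-server", "update",
        "--resource-group", resource_group,
        "--name", server,
        "--tier", tier,
        "--sku-name", sku_name,
    ]


# Hypothetical resource group and server names.
print(shlex.join(scale_command("my-rg", "my-pg-server", "Standard_D4ds_v4")))
```

Deriving the tier from the size name keeps the two options consistent, since a Ddsv4 size requested under the Memory Optimized tier (or the reverse) is not a valid combination.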

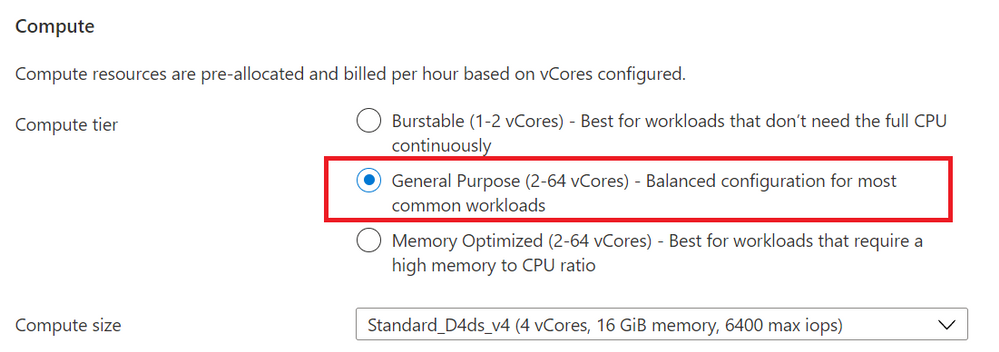

For example, to scale to a General Purpose V4 compute size, choose the General Purpose tier in the “Compute + Storage” blade.

Figure 1: Screenshot from the Azure Portal, of the provisioning workflow for the Flexible Server option in Azure Database for PostgreSQL Compute + Storage blade. This is where you can select the compute tier (General purpose / Memory Optimized)

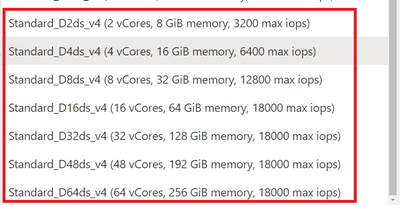

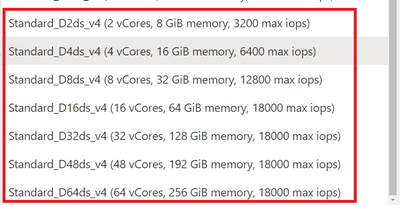

Then choose a General Purpose Ddsv4 compute size that suits your application needs.

Figure 2: Screenshot of the pulldown menu for the “General Purpose” compute size choice in the Compute + Storage blade for Flexible Server in Azure Database for PostgreSQL. You can see new Ddsv4 choices on the list.

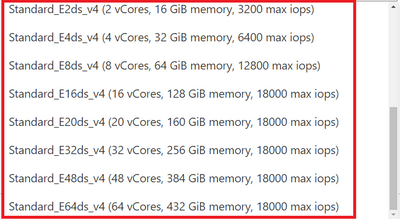

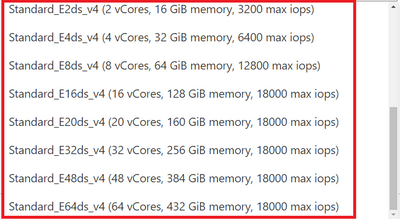

Similarly, when you choose the Memory Optimized compute tier shown in Figure 1, you can choose an Edsv4 compute size.

Figure 3: Screenshot of the pulldown menu for the “Memory Optimized” compute size choice in the Compute + Storage blade for Flexible Server in Azure Database for PostgreSQL. You can see new Edsv4 choices on the list.

How do I transfer my existing reservations from V3 to V4?

If you are already using reserved instances with V3, you can exchange those reservations for the desired V4 compute. Depending on the compute tier, you may only need to pay the difference in price.

Are these V4 compute tiers available in all regions?

Please see this list of regions for V3/V4 VM series availability.

All sounds good. What are the limitations?

- Currently, local disk caching is enabled for provisioned storage of up to 2 TiB, with plans to support caching for larger provisioned storage sizes in the future.

- Compute scaling is an offline operation, so expect a couple of minutes of downtime. We recommend performing scale operations during non-peak periods.
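When sizing storage, the caching limit above reduces to a single comparison. A minimal sketch, assuming "up to 2 TiB" is inclusive and that the limit is still the one stated in this October 2021 post:

```python
GIB_PER_TIB = 1024
# Limit quoted in this post (October 2021); assumed inclusive and subject to change.
LOCAL_CACHE_MAX_STORAGE_GIB = 2 * GIB_PER_TIB


def local_read_cache_enabled(provisioned_storage_gib: int) -> bool:
    """True when provisioned storage is within the size that gets local disk caching."""
    return provisioned_storage_gib <= LOCAL_CACHE_MAX_STORAGE_GIB


for size_gib in (512, 2048, 4096):
    status = "cached" if local_read_cache_enabled(size_gib) else "not cached"
    print(f"{size_gib} GiB -> {status}")
```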

Where can I learn more about Flexible Server?

by Contributed | Oct 12, 2021 | Technology

Because the retirement of Azure AD Graph has been announced, all applications using the service need to switch to Microsoft Graph, which provides all the functionality of Azure AD Graph along with new functionality. This also applies to the Azure command-line tools (Azure CLI, Azure PowerShell, and Terraform). We are currently updating our tools to use Microsoft Graph and will make them available to you as early as possible, to give you enough time to update your code.

Impact on existing scripts

Our principle is to minimize the disruption to existing scripts. Therefore, whenever possible, we will keep the same command signature so that a version upgrade of your tool will be sufficient with no additional effort.

In a few cases, behavioral differences between the Microsoft Graph API and the Azure AD Graph API will introduce breaking changes. For example, when creating an Azure AD application, you can no longer set the associated password at creation time. If you want to specify this secret, you must add it afterward. Along with the preview versions of the tools, we will publish a full list of these breaking changes and instructions on how to update your commands.
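At the Microsoft Graph REST level, creating an application with a secret therefore takes two calls: `POST /applications`, then `POST /applications/{id}/addPassword` on the application that now exists. The sketch below only describes those two requests as (method, URL, body) tuples and sends nothing. Authentication is omitted, and the display names and the `<object-id>` placeholder are hypothetical. This is an illustration of the Graph API, not of how the Azure CLI or Azure PowerShell implement their commands.

```python
import json

GRAPH_BASE = "https://graph.microsoft.com/v1.0"


def create_application_request(display_name: str) -> tuple[str, str, dict]:
    """Step 1: create the application. No password is supplied at this point."""
    return ("POST", f"{GRAPH_BASE}/applications", {"displayName": display_name})


def add_password_request(app_object_id: str, secret_display_name: str) -> tuple[str, str, dict]:
    """Step 2: add a client secret to the existing application.

    app_object_id is the object "id" returned by step 1 (not the appId).
    Graph generates the secret and returns it in the response's "secretText" field.
    """
    body = {"passwordCredential": {"displayName": secret_display_name}}
    return ("POST", f"{GRAPH_BASE}/applications/{app_object_id}/addPassword", body)


# Hypothetical values; "<object-id>" stands for the id returned by step 1.
for method, url, body in (
    create_application_request("my-app"),
    add_password_request("<object-id>", "automation-secret"),
):
    print(method, url, json.dumps(body))
```

Note that you do not choose the secret value yourself in this flow. Store `secretText` when it is returned, because it cannot be read back later.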

Azure vs Microsoft Graph command-line tools

Azure AD capabilities in the Azure command-line tools are provided to simplify the getting-started experience for script developers, which is why those commands cover a limited set of scenarios.

While we plan to keep supporting a subset of Azure AD resources in upcoming releases of our tools, we will implement new Graph capabilities as they pertain to fundamentals such as authentication. For resources not supported by the Azure command-line tools, we recommend using the Microsoft Graph tools: either the Microsoft Graph PowerShell SDK modules or the Microsoft Graph CLI.

Availability and next steps

To help you plan your migration work before the deadline, we are sharing our current timeline:

- October 2021:

  - Public preview of Azure CLI using MSAL (prerequisite for migrating to Microsoft Graph)

  - Public preview of Azure PowerShell using the Microsoft Graph API

  - Each tool’s documentation will include guidance on how to install and test the previews.

- December 2021:

  - General availability of Azure PowerShell using Microsoft Graph

  - Update of Azure services documentation and scripts that use outdated commands

- January 2022:

  - Preview of Azure CLI using Microsoft Graph

- Q1 2022:

  - GA of Azure CLI using Microsoft Graph

For Terraform, HashiCorp has already completed the migration to Microsoft Graph with the AzureAD provider v2. Additional information is available here: https://registry.terraform.io/providers/hashicorp/azuread/latest/docs/guides/microsoft-graph

Please comment on this article or reach out to the respective teams if you have any questions for Azure CLI (@azurecli) or Azure PowerShell (@azureposh).

Additional resources

While we update the official documentation for Azure tools, you can use the following resources for additional guidance on migrating to Microsoft Graph.

Further information regarding the migration to MSAL and its importance in the migration to MS Graph:

The content provided for Terraform is very useful for understanding the API changes:

If you run into any problems, open issues in the respective repositories:

Let us know what you think in the comment section below.

Damien

on behalf of the Azure CLIs tools team

by Contributed | Oct 10, 2021 | Technology

Active geo-replication is an Azure SQL Database feature that allows you to create readable secondary databases of individual databases on a server in the same or different data center (region).

We have received a few cases where customers wanted this setup across subscriptions with private endpoints. This article describes how to set up geo-replication between two Azure SQL servers in different subscriptions using private endpoints, with public access disallowed.

Before you start, make sure the following are available in your environment:

- Two subscriptions, one for the primary environment and one for the secondary environment:

  - Primary environment: Azure SQL server, Azure SQL database, and virtual network.

  - Secondary environment: Azure SQL server, Azure SQL database, and virtual network.

  Note: Use a paired region for this setup. For more information about paired regions, see this link.

- Public access should be enabled while you configure geo-replication.

- The subnets of the two virtual networks must not have overlapping IP address ranges. You can refer to this blog for more information.

For this article, the primary and secondary environments are as follows:

Primary Environment

Subscription ID: Primary-Subscription

Server Name: primaryservertest.database.windows.net

Database Name: DBprim

Region: West Europe

Virtual Network: VnetPrimary

Subnet: PrimarySubnet – 10.0.0.0/24

Secondary Environment

Subscription ID: Secondary-Subscription

Server Name: secservertest1.database.windows.net

Region: North Europe

Virtual Network: VnetSec

Subnet: SecondarySubnet – 10.2.0.0/24
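Before you set up peering, you can check that the ranges don't overlap with Python’s standard `ipaddress` module. This sketch uses the example subnets above. VNet peering requires the full address spaces of both virtual networks to be non-overlapping, so in practice compare those address spaces, not just the subnets.

```python
import ipaddress


def ranges_overlap(a: str, b: str) -> bool:
    """True when two CIDR ranges share at least one address."""
    return ipaddress.ip_network(a).overlaps(ipaddress.ip_network(b))


# Example subnets from the primary and secondary environments above.
print(ranges_overlap("10.0.0.0/24", "10.2.0.0/24"))  # False: safe to peer
```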

Limitations

- Creating a geo-replica on a logical server in a different Azure tenant is not supported

- Cross-subscription geo-replication operations including setup and failover are only supported through T-SQL commands.

- Creating a geo-replica on a logical server in a different Azure tenant is not supported when Azure Active Directory only authentication for Azure SQL is active (enabled) on either primary or secondary logical server.

Geo-Replication Configuration

Follow these steps to configure geo-replication (make sure public access is enabled while you run them):

1) Create a privileged login and user on both the primary and secondary servers for this setup:

a. Connect to your primary Azure SQL server and create a login and a user in the master database using the following script:

```sql
-- Primary master database
-- Replace the sample password with a strong password of your own.
create login GeoReplicationUser with password = 'P@$$word123';
create user GeoReplicationUser for login GeoReplicationUser;
alter role dbmanager add member GeoReplicationUser;
```

Get the SID of the new login and save it:

```sql
select sid from sys.sql_logins where name = 'GeoReplicationUser';
```

b. In the primary user database, create the required user:

```sql
-- Primary user database
create user GeoReplicationUser for login GeoReplicationUser;
alter role db_owner add member GeoReplicationUser;
```

c. Connect to your secondary server and create the same login and user, using the SID you got in step a:

```sql
-- Secondary master database
-- Replace the SID below with the value returned by the query in step a.
create login GeoReplicationUser with password = 'P@$$word123', sid = 0x010600000000006400000000000000001C98F52B95D9C84BBBA8578FACE37C3E;
create user GeoReplicationUser for login GeoReplicationUser;
alter role dbmanager add member GeoReplicationUser;
```
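If you script steps a and c, the SID read on the primary must be written into the secondary’s `create login` statement as a `0x...` hex literal. A minimal sketch of that conversion, assuming your SQL driver returns the `varbinary` SID column as Python `bytes` (drivers such as pyodbc typically do). The helper name is hypothetical, and connecting and running the statements are left to you:

```python
def secondary_login_sql(login: str, password: str, sid: bytes) -> str:
    """Build the create login statement for the secondary master database,
    reusing the SID read from sys.sql_logins on the primary."""
    sid_literal = "0x" + sid.hex().upper()
    escaped_password = password.replace("'", "''")  # T-SQL string-literal escaping
    escaped_login = login.replace("]", "]]")         # T-SQL bracketed-identifier escaping
    return (
        f"create login [{escaped_login}] "
        f"with password = '{escaped_password}', sid = {sid_literal};"
    )


# The SID from the example above, as the bytes a driver would return.
example_sid = bytes.fromhex("010600000000006400000000000000001C98F52B95D9C84BBBA8578FACE37C3E")
print(secondary_login_sql("GeoReplicationUser", "P@$$word123", example_sid))
```

Reusing the SID matters because the database user created in step b is mapped to the login by SID. After failover, the same login can then access the replicated database without being remapped.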

2) Make sure the firewall rules on both the primary and secondary Azure SQL servers allow your connection (for example, from the IP address of the host running SQL Server Management Studio).

3) Log in to your primary Azure SQL server as the new user, and add the secondary server to configure geo-replication by running the following script in the primary master database:

```sql
-- Primary master database
alter database DBprim add secondary on server [secservertest1];
```

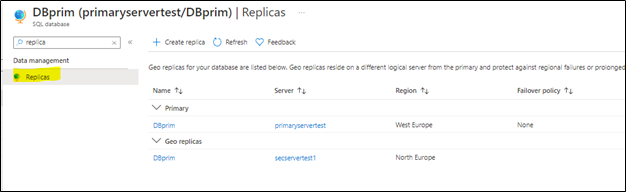

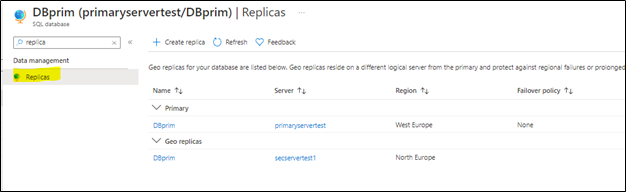

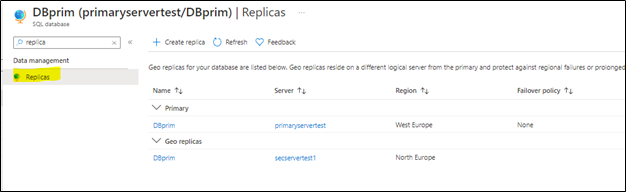

4) To verify the setup, open the Azure portal, go to your primary Azure SQL database, and open the Replicas blade:

You will notice that the secondary database has been added and configured.

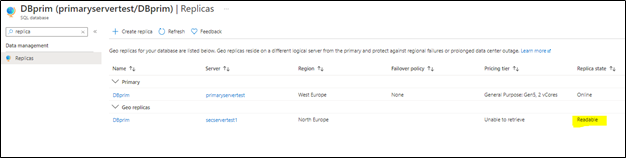

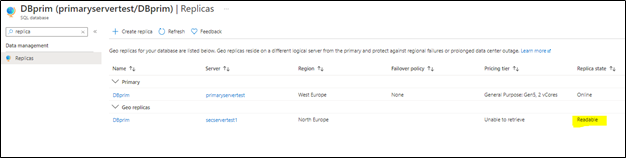

Note: Before moving to the next step, make sure your replica has finished seeding and is marked as “Readable” under replica status (as highlighted below):
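You can also check seeding progress from T-SQL instead of the portal. Azure SQL Database exposes `sys.dm_geo_replication_link_status` in the primary user database, and its `replication_state_desc` column moves from `PENDING` to `SEEDING` to `CATCH_UP`. The sketch below holds that query and interprets rows you have already fetched with your own driver. Two points are assumptions: that `CATCH_UP` corresponds to the portal’s “Readable” status, and that `partner_server` holds the short server name. The sample rows are hypothetical.

```python
# Run in the primary user database (DBprim in this example).
LINK_STATUS_QUERY = """
SELECT partner_server, partner_database, replication_state_desc, replication_lag_sec
FROM sys.dm_geo_replication_link_status;
"""


def seeding_complete(rows: list[dict], partner_server: str) -> bool:
    """True when every link to partner_server has left seeding (state CATCH_UP)."""
    states = [r["replication_state_desc"] for r in rows
              if r["partner_server"] == partner_server]
    return bool(states) and all(state == "CATCH_UP" for state in states)


# Hypothetical rows, shaped like the query result while seeding is still running.
sample = [{"partner_server": "secservertest1", "partner_database": "DBprim",
           "replication_state_desc": "SEEDING", "replication_lag_sec": None}]
print(seeding_complete(sample, "secservertest1"))
```

Returning False when no row matches is deliberate. A missing link should block the next step just as an unfinished one does.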

Configuring private endpoints for both servers

Next, set up private endpoints for both the primary and secondary servers.

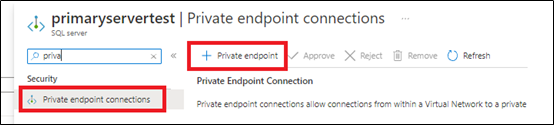

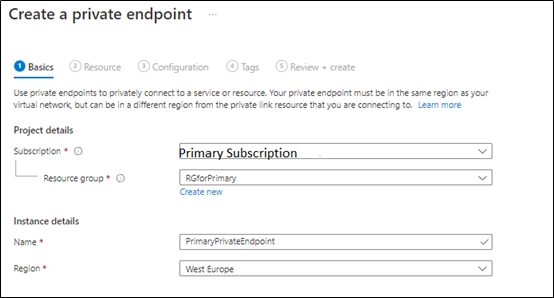

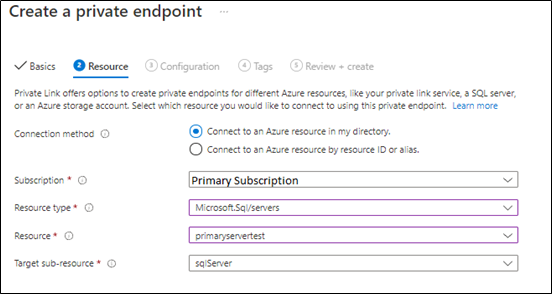

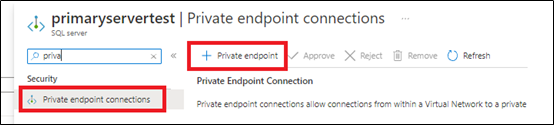

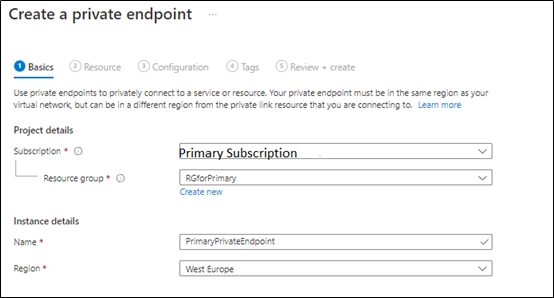

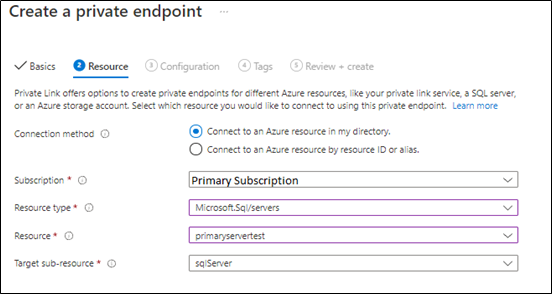

1) In the Azure portal, go to your primary server > Private endpoint connections blade > add a new private endpoint:

Select the primary subscription to host the primary server’s private endpoint.

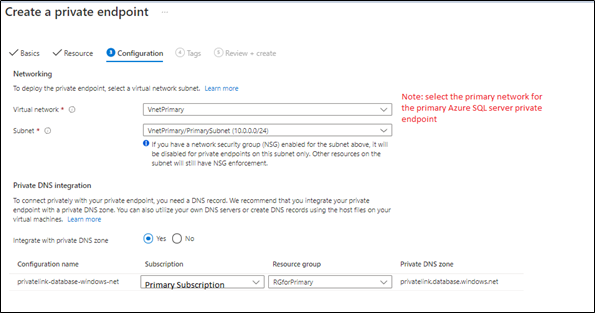

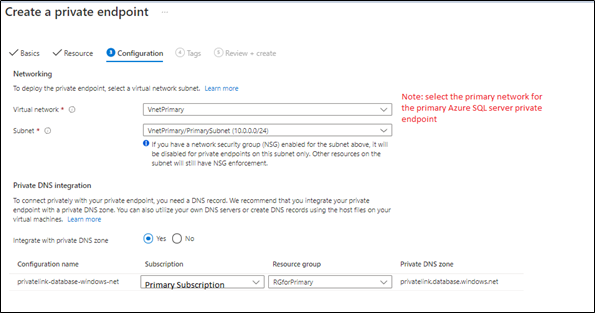

Next, link the primary private endpoint to the primary virtual network, and make sure the private DNS zone is associated with the primary subscription:

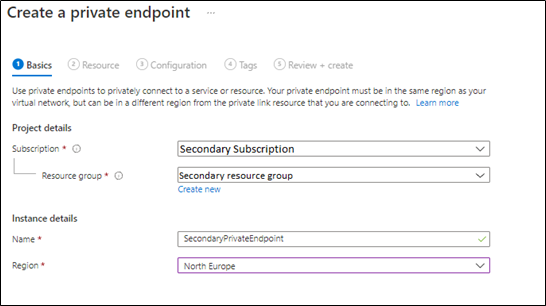

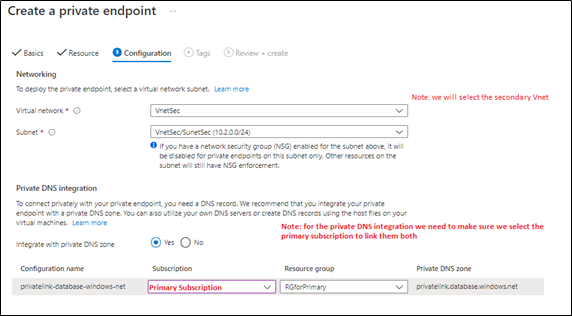

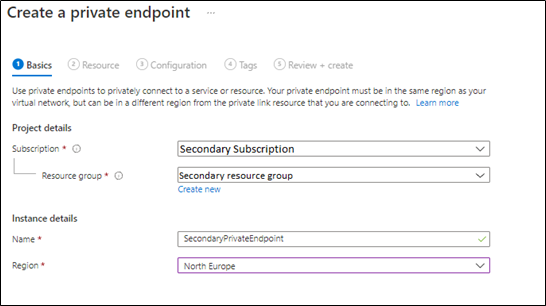

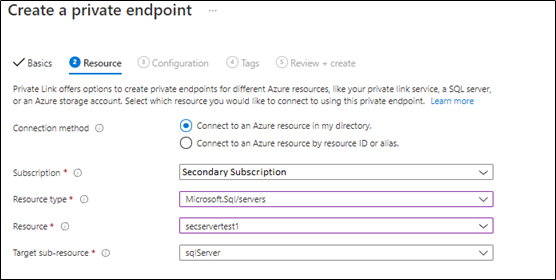

2) Create the secondary server’s private endpoint: in the Azure portal, go to your secondary server > Private endpoint connections blade > add a new private endpoint:

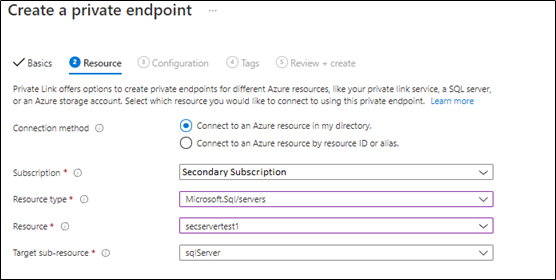

In the following steps, select the secondary server’s virtual network and subscription.

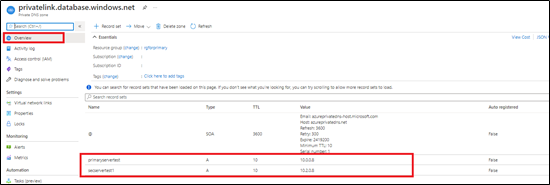

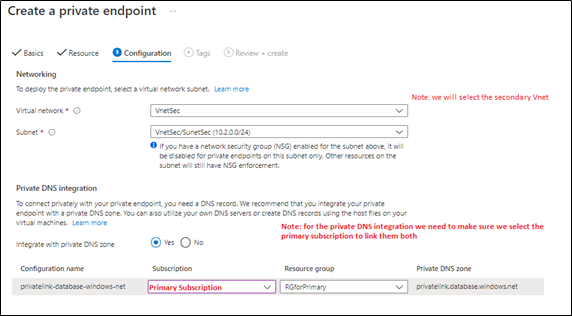

In the next step, link the secondary server’s private endpoint to the primary private DNS zone, because both the primary and secondary private endpoints must be linked to the same private DNS zone:

3) Once both private endpoints are created, make sure they are approved, as described in this document.

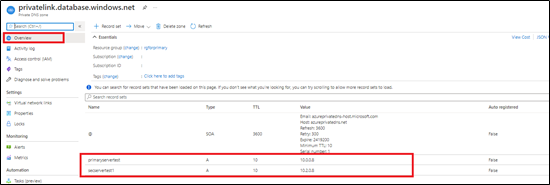

4) Verify that both private endpoints are linked to the same private DNS zone: in the Azure portal, go to Private DNS zones, select your primary subscription, and check the zone:

Note: this step has been discussed in detail in this blog article.
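You can also check from inside the network that both server names now resolve to private endpoint addresses rather than public ones. A minimal sketch: `check_endpoints` resolves each name with the machine’s own DNS, so run it from a VM in one of the peered virtual networks. The expected subnets are assumptions taken from the example environment, so adjust them if your private endpoints live in other subnets.

```python
import ipaddress
import socket

# Assumed mapping of server FQDN to the subnet(s) that host its private endpoint.
EXPECTED = {
    "primaryservertest.database.windows.net": ["10.0.0.0/24"],
    "secservertest1.database.windows.net": ["10.2.0.0/24"],
}


def in_private_subnets(ip: str, subnets: list[str]) -> bool:
    """True when ip falls inside one of the given CIDR ranges."""
    addr = ipaddress.ip_address(ip)
    return any(addr in ipaddress.ip_network(cidr) for cidr in subnets)


def check_endpoints(expected: dict[str, list[str]]) -> dict[str, bool]:
    """Resolve each FQDN and report whether it maps to a private endpoint address."""
    results = {}
    for host, subnets in expected.items():
        ip = socket.gethostbyname(host)  # address at the end of any CNAME chain
        results[host] = in_private_subnets(ip, subnets)
    return results
```

Calling `check_endpoints(EXPECTED)` from the VM should report True for both servers. False means that name still resolves to a public address, which usually points to a DNS zone link problem like the one described under Troubleshooting.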

Virtual Network setup

Make sure the primary and secondary virtual networks are connected with VNet peering, so they can still communicate once public access is disabled. For more information, see this document.

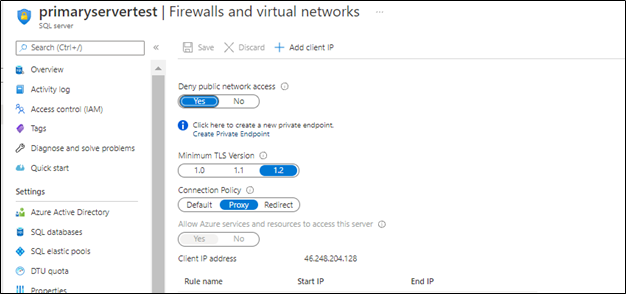

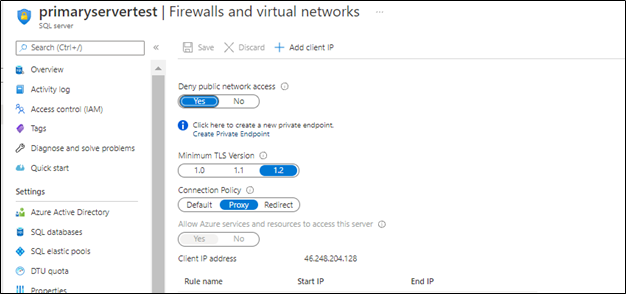

Disabling public access

Once the setup is ready, you can deny public access on your Azure SQL servers.

With public access disabled, geo-replication between your Azure SQL servers across subscriptions runs over the private endpoints.

Troubleshooting

1) You may encounter the following error when adding the secondary using T-SQL:

```sql
alter database DBprim add secondary on server [secservertest1]
```

```
Msg 42019, Level 16, State 1, Line 1
ALTER DATABASE SECONDARY/FAILOVER operation failed. Operation timed out.
```

Possible solution: Set “deny public access” to off while you set up geo-replication with the T-SQL commands. Public access only needs to be on during setup. Once geo-replication is configured, you can turn “deny public access” back on, and the secondary will still be able to sync data from the primary.

2) You may also encounter the following error when adding the secondary using T-SQL:

```sql
alter database DBprim add secondary on server [secservertest1]
```

```
Msg 40647, Level 16, State 1, Line 1
Subscription ‘xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxx’ does not have the server ‘secservertest1’.
```

Possible solution: Make sure that both private endpoints use the same private DNS zone that was used for the primary. Refer to the blog for more information.

References

Active geo-replication – Azure SQL Database | Microsoft Docs

Using Failover Groups with Private Link for Azure SQL Database – Microsoft Tech Community

Disclaimer

Please note that the products and options presented in this article are subject to change. This article reflects the option for geo-replication across different subscriptions with private endpoints that was available for Azure SQL Database in October 2021.

Closing remarks

I hope this article was helpful. Please like it on this page and share it through social media, and feel free to share your feedback in the comments section below.
