by Contributed | Mar 7, 2022 | Technology

This article is contributed. See the original author and article here.

Protecting the backend with Azure API Management

Azure API Management (APIM) is an excellent choice for projects that work with APIs. It helps you deliver centralization, monitoring, management and documentation.

However, we often forget that our backends also need to be protected from external access. With that in mind, we will show a very simple way to protect your backend using private endpoints, VNets and subnets. This blocks public calls from the internet to your backend while still allowing APIM to reach it simply and transparently.

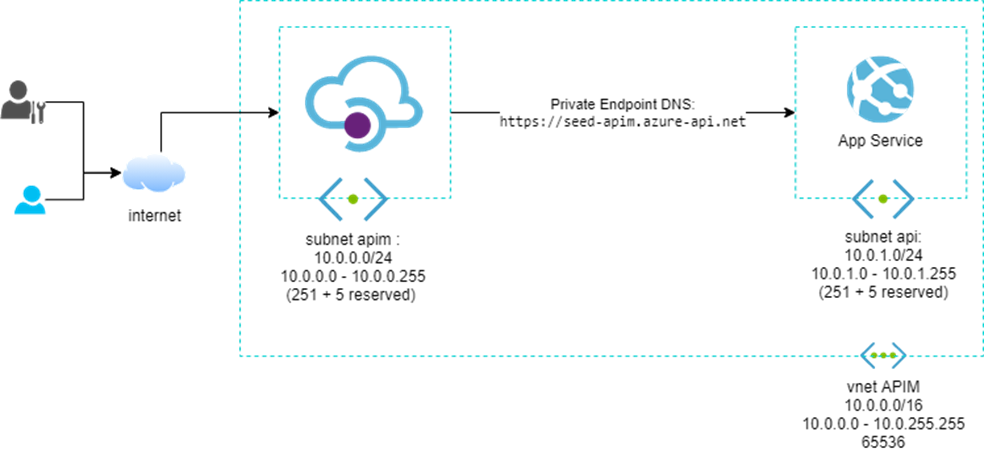

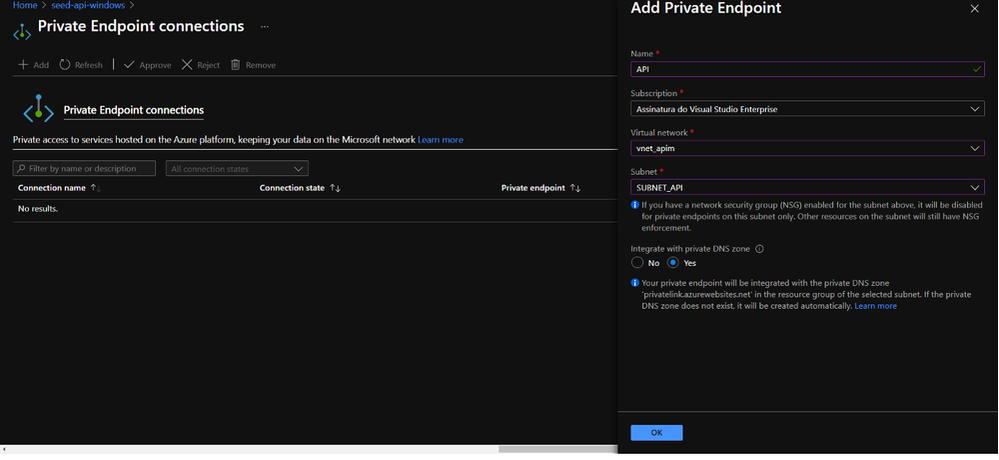

The first step is to understand the VNet, the virtual representation of a computer network. It lets Azure resources communicate securely with each other, with the internet and with on-premises networks. Like any network, it can be segmented into smaller parts called subnets. This segmentation lets us define how many addresses are available in each subnet, avoiding conflicts between the networks and reducing the traffic on each of them. See the diagram below:

Diagram of a VNet with CIDR 10.0.0.0/16 and two subnets with CIDRs 10.0.0.0/24 and 10.0.1.0/24.

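The following minimal Python sketch checks the address plan in the diagram. It confirms that both subnets fit inside the VNet without overlapping and counts the usable addresses in each one. It assumes Azure's rule of reserving five addresses per subnet (network address, default gateway, two DNS addresses and broadcast).

```python
import ipaddress

# Azure reserves 5 addresses in every subnet: .0, .1, .2, .3 and the last one.
AZURE_RESERVED_PER_SUBNET = 5

def check_vnet_layout(vnet_cidr, subnet_cidrs):
    """Validate that subnets fit in the VNet without overlapping and
    return the number of usable addresses in each subnet."""
    vnet = ipaddress.ip_network(vnet_cidr)
    subnets = [ipaddress.ip_network(c) for c in subnet_cidrs]
    for s in subnets:
        if not s.subnet_of(vnet):
            raise ValueError(f"{s} is outside the VNet {vnet}")
    for i, a in enumerate(subnets):
        for b in subnets[i + 1:]:
            if a.overlaps(b):
                raise ValueError(f"{a} overlaps {b}")
    return {str(s): s.num_addresses - AZURE_RESERVED_PER_SUBNET for s in subnets}

print(check_vnet_layout("10.0.0.0/16", ["10.0.0.0/24", "10.0.1.0/24"]))
# {'10.0.0.0/24': 251, '10.0.1.0/24': 251}
```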

It is important to understand which VNet connection options (modes) APIM offers:

- Off: the default, with no virtual network; the endpoints are open to the internet.

- External: the developer portal and the gateway can be accessed from the public internet, and the gateway can reach resources both inside the virtual network and on the internet.

- Internal: the developer portal and the gateway can only be accessed from the internal network, and the gateway can reach resources both inside the virtual network and on the internet.
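To make the trade-off concrete, this small Python sketch encodes the three modes as a truth table. The enum and helper names are illustrative only and are not part of any Azure SDK. A backend behind a private endpoint can only be reached from inside the VNet, so Off is never suitable. The remaining choice is whether the gateway itself must be publicly reachable.

```python
from enum import Enum

class ApimVnetMode(Enum):
    # value = (portal/gateway reachable from the internet, gateway reaches VNet resources)
    OFF = (True, False)
    EXTERNAL = (True, True)
    INTERNAL = (False, True)

    @property
    def public_endpoints(self):
        return self.value[0]

    @property
    def reaches_vnet(self):
        return self.value[1]

def mode_for_private_backend(public_gateway: bool) -> ApimVnetMode:
    """Pick a mode that can reach a VNet-only backend."""
    return ApimVnetMode.EXTERNAL if public_gateway else ApimVnetMode.INTERNAL
```

This post uses External mode, which corresponds to `mode_for_private_backend(True)`.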

In a production environment, this architecture would include an edge firewall such as Application Gateway or Front Door. These services strengthen the security of the request path by offering automatic protection against common attacks such as SQL injection and cross-site scripting (XSS). To keep things simple, we will go without that protection here.

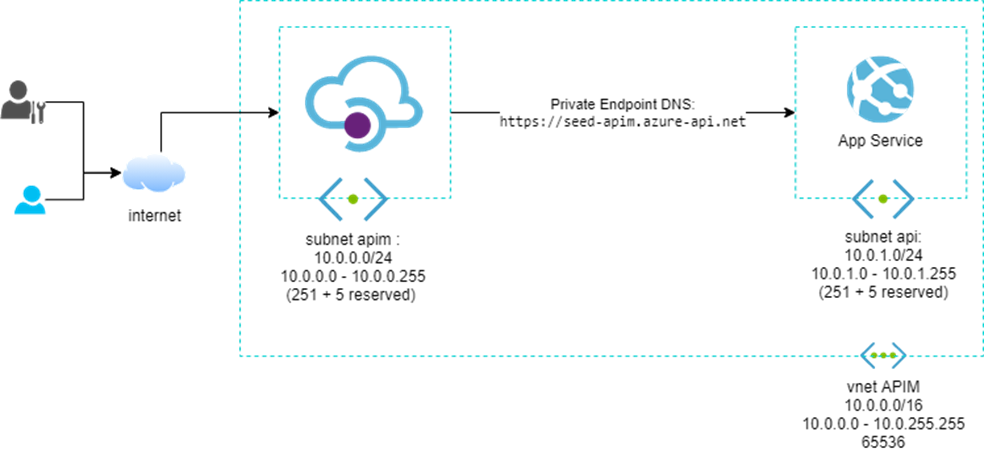

The APIM network configuration is done under Virtual Networking in the side menu of the Azure management portal.

APIM in External mode on the VNet vnet_apim and subnet SUBNET_APIM


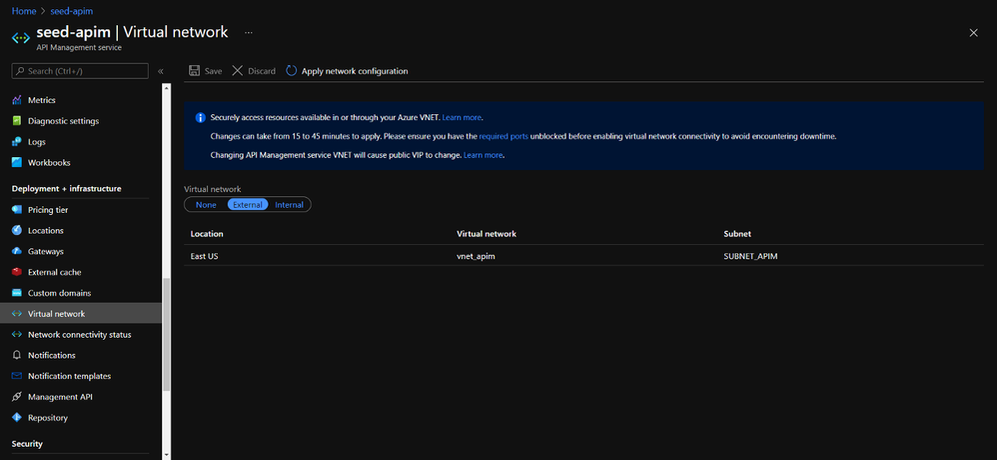

After configuring APIM, we configure the App Service. In its Networking menu we set up a private endpoint; this is the resource that associates an App Service with a VNet and a subnet. During this setup it is important to select the option to integrate with a private DNS zone, so that names resolve correctly inside the private network.

Azure portal, App Service private endpoint configuration


We can now confirm that a private DNS zone was created for the privatelink.azurewebsites.net domain, pointing to the private IP of the App Service. This lets APIM reach the App Service transparently, just as it did before the VNet was introduced.
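One way to verify the private DNS zone is to run a check from a machine inside the VNet and confirm that the App Service name resolves only to private addresses. The function below is a minimal sketch built on the standard library resolver. The resolver is injectable so the function can be tested without network access. `<your-app>` is a placeholder for your own App Service name.

```python
import ipaddress
import socket

def resolves_privately(hostname, resolve=socket.gethostbyname_ex):
    """Return True if the hostname resolves and every returned IPv4 address is private."""
    try:
        _, _, addresses = resolve(hostname)  # (canonical name, aliases, IP list)
    except socket.gaierror:
        return False  # the name does not resolve at all
    return bool(addresses) and all(ipaddress.ip_address(a).is_private for a in addresses)

# From a VM in the same VNet (placeholder host name):
# resolves_privately("<your-app>.azurewebsites.net")
```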

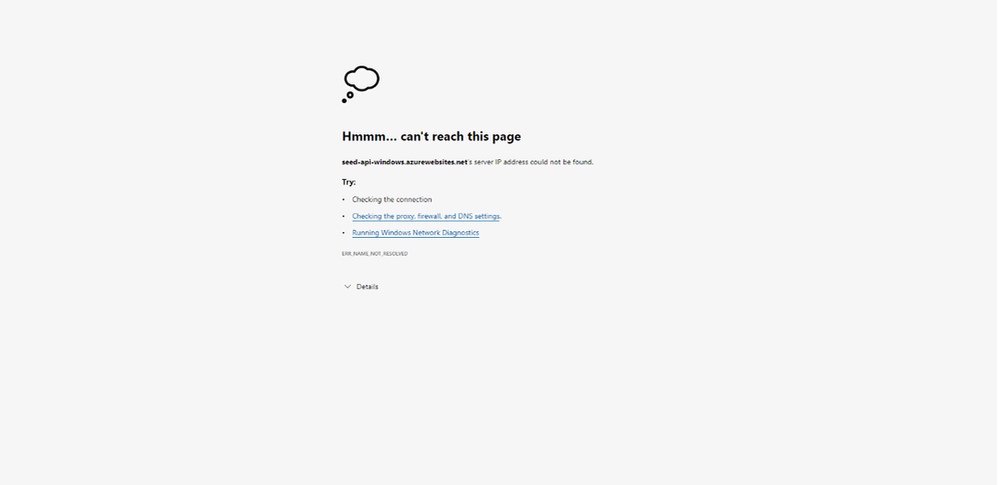

To make sure the App Service is really protected, we can try to open its address in a browser. The result should look like the image below: the name cannot be resolved.

Opening the App Service URL in a browser and getting a DNS resolution error.


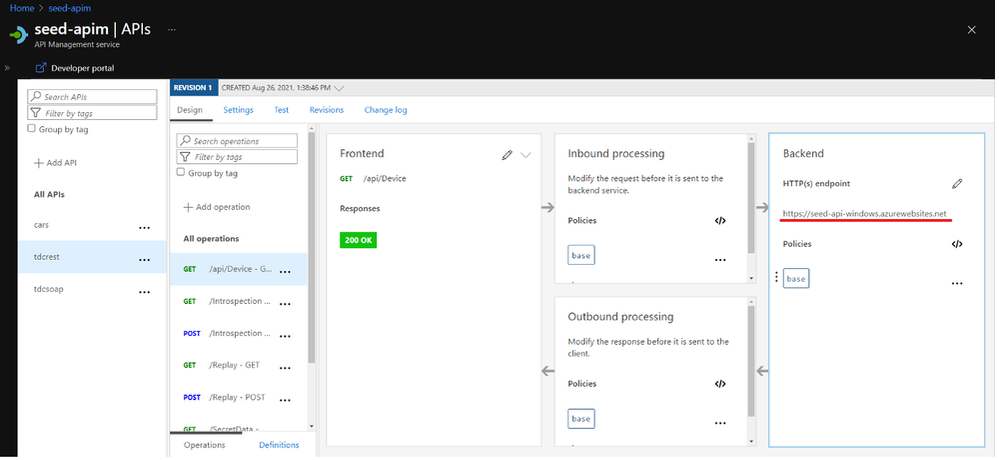

However, the APIs in APIM keep working with the same address, because APIM is in the same VNet as the App Service and the private DNS zone resolves the names to addresses in that VNet.

APIM screen using the same backend that cannot be reached from the browser.


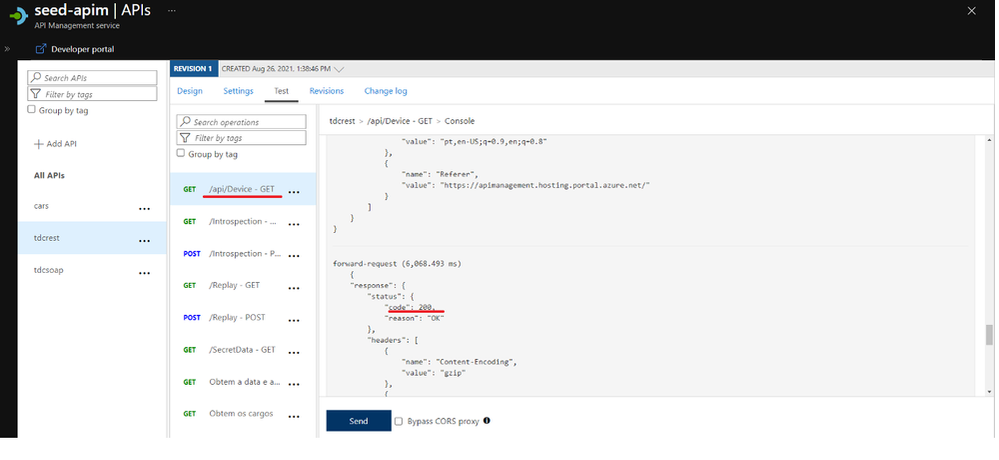

Using the APIM Test tool, we can confirm that APIM can reach the backend.

Exploring the APIM Test option.

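Outside the portal's Test tab, a client calls the same API through the public gateway. The gateway then forwards the call to the private backend. The sketch below builds such a request with the standard library. It assumes the API requires a subscription key, which APIM reads from the `Ocp-Apim-Subscription-Key` header. The gateway URL and API path are hypothetical placeholders.

```python
import urllib.request

def build_apim_request(gateway_url, api_path, subscription_key):
    """Build (without sending) a GET request to an API published through the APIM gateway."""
    url = gateway_url.rstrip("/") + "/" + api_path.lstrip("/")
    return urllib.request.Request(
        url,
        headers={"Ocp-Apim-Subscription-Key": subscription_key},
        method="GET",
    )

# Hypothetical gateway and API path; urllib.request.urlopen(req) would send it.
# req = build_apim_request("https://<your-apim>.azure-api.net", "/orders", "<key>")
```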

Conclusion

Deploying into an Azure Virtual Network provides enhanced security and isolation, and lets you place your API Management service in a network protected from the internet. All access, such as ports and services, can be controlled by the VNet's network security groups (NSGs), so you can ensure that your APIs are only accessed through the APIM gateway endpoint.

References

- https://docs.microsoft.com/pt-br/azure/api-management/api-management-using-with-vnet

- https://docs.microsoft.com/en-us/azure/api-management/api-management-using-with-internal-vnet

- https://docs.microsoft.com/en-us/azure/virtual-network/virtual-networks-overview

by Contributed | Mar 6, 2022 | Technology


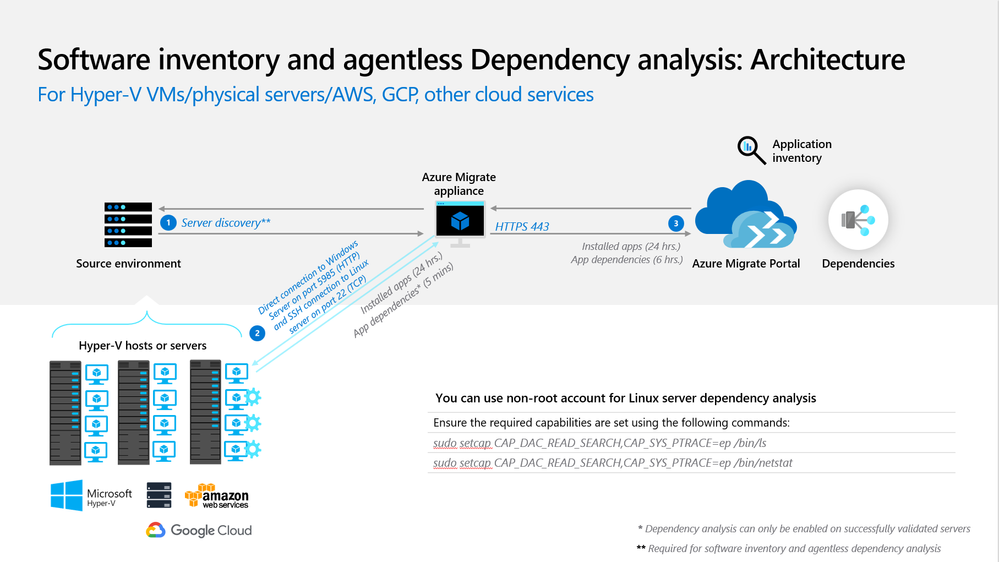

Build high confidence migration plans using Azure Migrate’s software inventory and agentless dependency analysis

Authored by Vikram Bansal, Senior PM, Azure Migrate

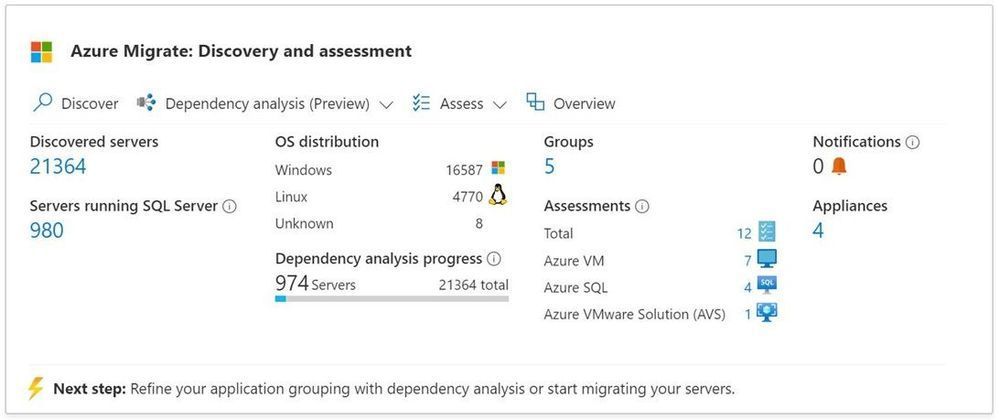

Migrating a large and complex IT environment from on-premises to the cloud can be quite daunting. As customers start planning their migration to the cloud, they often face the problem of the unknown: they may not have complete visibility of the applications running on their servers or of the dependencies between them. This not only risks leaving dependent servers behind, which breaks applications, but also adds to the migration cost that customers want to reduce. Azure Migrate aims to help customers build a high-confidence migration plan with features like software inventory and agentless dependency analysis.

Software inventory provides the list of applications, roles and features running on Windows and Linux servers discovered using Azure Migrate. Agentless dependency analysis helps you analyze the dependencies between the discovered servers. These dependencies can be easily visualized with a map view in the Azure Migrate project and used to group related servers for migration to Azure.

Today, we are announcing the public preview of at-scale software inventory and agentless dependency analysis for Hyper-V virtual machines and bare-metal servers.

How to get started?

- To get started, create a new Azure Migrate project or use an existing one.

- Deploy and configure the Azure Migrate appliance for Hyper-V or for bare-metal servers.

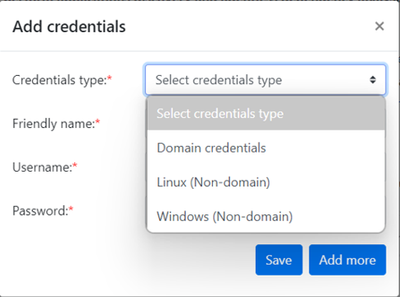

- Enable software inventory by providing server credentials on the appliance and start discovery. For Hyper-V virtual machines, the appliance lets you enter multiple credentials and automatically maps each server to the appropriate credential.

The credentials provided on the appliance are encrypted and stored on the appliance server locally and are never sent to Microsoft.

- As servers start getting discovered, you can view them in the Azure Portal.

Software inventory

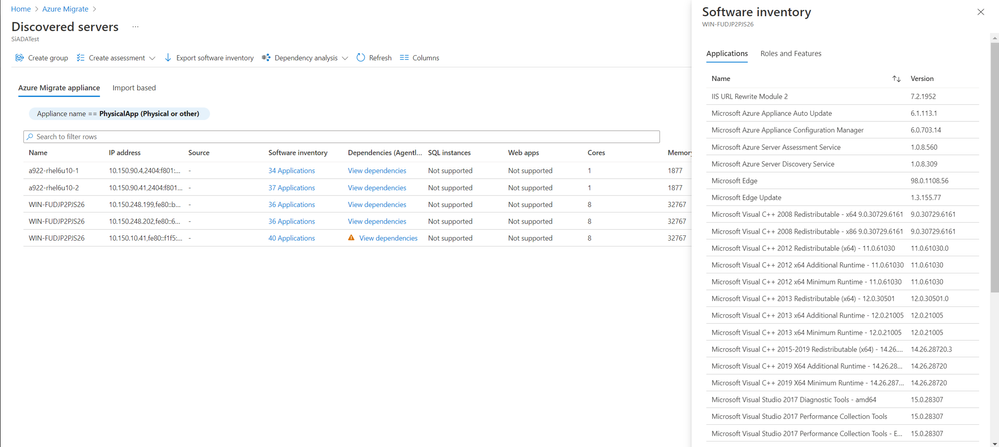

- Using the credentials provided, the appliance gathers information on the installed applications, enabled roles and features on the on-premises Windows and Linux servers.

- Software inventory is completely agentless and does not require installing any agents on the servers.

- Software inventory is performed by directly connecting to the servers using the server credentials added on the appliance. The appliance gathers the information about the software inventory from Windows servers using PS remoting and from Linux servers using SSH connectivity.

- Azure Migrate directly connects to the servers to execute a list of queries and pull the required data once every 12 hours.

- A single Azure Migrate appliance can discover up to 5000 Hyper-V VMs or 1000 physical servers and perform software inventory across all of them.
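The per-appliance limits above translate into a simple sizing calculation. The Python sketch below assumes that Hyper-V VMs and physical servers are discovered by separate appliances, each type with its own limit. The function name is illustrative.

```python
import math

# Per-appliance discovery limits quoted above.
HYPERV_VMS_PER_APPLIANCE = 5000
PHYSICAL_SERVERS_PER_APPLIANCE = 1000

def appliances_needed(hyperv_vms, physical_servers):
    """Estimate appliances, assuming Hyper-V and physical servers use separate appliances."""
    return (math.ceil(hyperv_vms / HYPERV_VMS_PER_APPLIANCE)
            + math.ceil(physical_servers / PHYSICAL_SERVERS_PER_APPLIANCE))

print(appliances_needed(12000, 2500))  # 6 (3 for Hyper-V + 3 for physical)
```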

Agentless dependency analysis

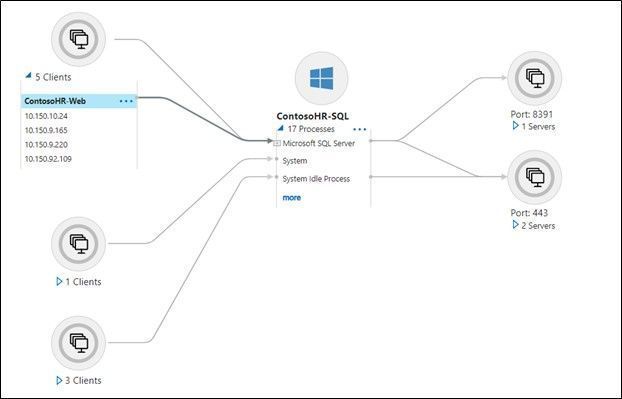

- The agentless dependency analysis feature helps visualize the dependencies between your servers and can be used to determine which servers should be migrated together.

- The dependency analysis is completely agentless and does not require installing any agents on the servers.

- You can enable dependency analysis on those servers where the prerequisite validation checks succeed during software inventory.

- Agentless dependency analysis is performed by directly connecting to the servers using the server credentials added on the appliance. The appliance gathers the dependency information from Windows servers using PS remoting and from Linux servers using SSH connection.

- Azure Migrate directly connects to the servers to execute a list of ‘ls’ and ‘netstat’ queries and pulls the required data every 5 minutes. The appliance aggregates the 5-minute data points and sends them to Azure every 6 hours.

- Using the built-in dependency map view, you can easily visualize dependencies between servers. You can also download the dependency data including process, application, and port information in a CSV format for offline analysis.

- Dependency analysis can be performed concurrently on up to 1000 servers discovered from one appliance in a project. To analyze dependencies on more than 1000 servers from the same appliance, you can sequence the analysis in multiple batches of 1000.
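The figures above can be turned into two small calculations: the number of 5-minute samples in each 6-hour upload, and how to split a large estate into sequential batches of 1000 servers. This is a minimal Python sketch of that arithmetic, not Azure Migrate code. The server names are made up.

```python
# Figures quoted above: polled every 5 minutes, uploaded every 6 hours,
# and at most 1000 servers analyzed concurrently per appliance.
POLL_MINUTES = 5
UPLOAD_HOURS = 6
MAX_CONCURRENT = 1000

samples_per_upload = UPLOAD_HOURS * 60 // POLL_MINUTES  # 72 data points per server per upload

def dependency_batches(servers, batch_size=MAX_CONCURRENT):
    """Split a server list into sequential batches of at most batch_size."""
    return [servers[i:i + batch_size] for i in range(0, len(servers), batch_size)]

batches = dependency_batches([f"srv{n}" for n in range(2500)])
print(len(batches), [len(b) for b in batches])  # 3 [1000, 1000, 500]
```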

Workflow and architecture

The architecture diagram below shows how software inventory and agentless dependency analysis work. The appliance:

- discovers the Windows and Linux servers using the source details provided on configuration manager

- collects software inventory (installed applications, roles and features) information from discovered servers

- performs a validation of all prerequisites required to enable dependency analysis on a server. The validation is done when the appliance performs software inventory. Users can enable dependency analysis only on servers where the validation succeeds, so that they are less likely to hit errors after enabling it.

- collects the dependency data from servers where dependency analysis was enabled from the portal.

- periodically sends collected information to the Azure Migrate project via HTTPS port 443 over a secure encrypted connection.
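The gating step in this workflow can be modeled in a few lines. In the hypothetical Python data model below (not an Azure Migrate API), dependency analysis is enabled only on servers whose prerequisite validation succeeded during software inventory. Servers that fail validation are reported back. Collection then uses PowerShell remoting for Windows and SSH for Linux.

```python
from dataclasses import dataclass

@dataclass
class DiscoveredServer:
    name: str
    os: str                      # "windows" or "linux"
    prereq_validation_ok: bool   # result of the checks run during software inventory
    dependency_enabled: bool = False

def collection_channel(server):
    # Windows servers are queried over PowerShell remoting, Linux servers over SSH.
    return "PS remoting" if server.os == "windows" else "SSH"

def enable_dependency_analysis(servers, requested_names):
    """Enable dependency analysis only where validation succeeded; return the skipped names."""
    skipped = []
    for s in servers:
        if s.name not in requested_names:
            continue
        if s.prereq_validation_ok:
            s.dependency_enabled = True
        else:
            skipped.append(s.name)
    return skipped
```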

Resources to get started

- Tutorial on how to perform software inventory using Azure Migrate: Discovery and assessment.

- Tutorial on how to perform agentless dependency analysis using Azure Migrate: Discovery and assessment.

by Contributed | Mar 5, 2022 | Technology


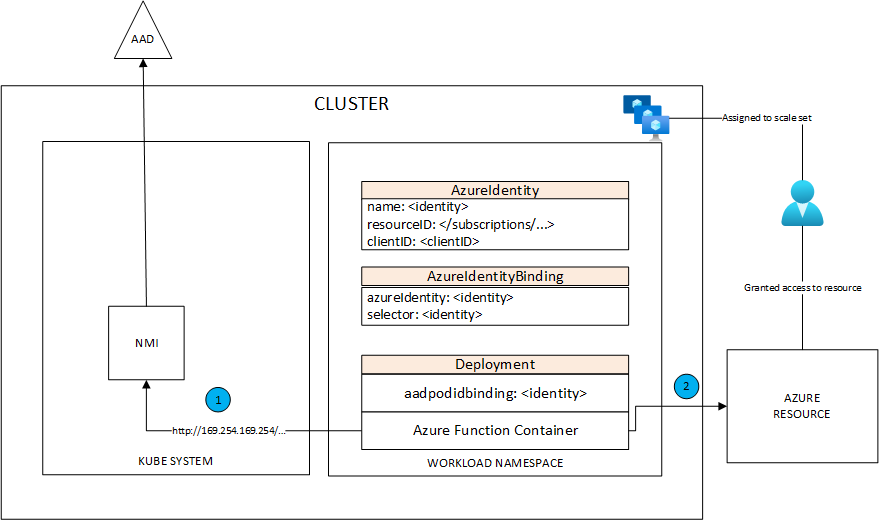

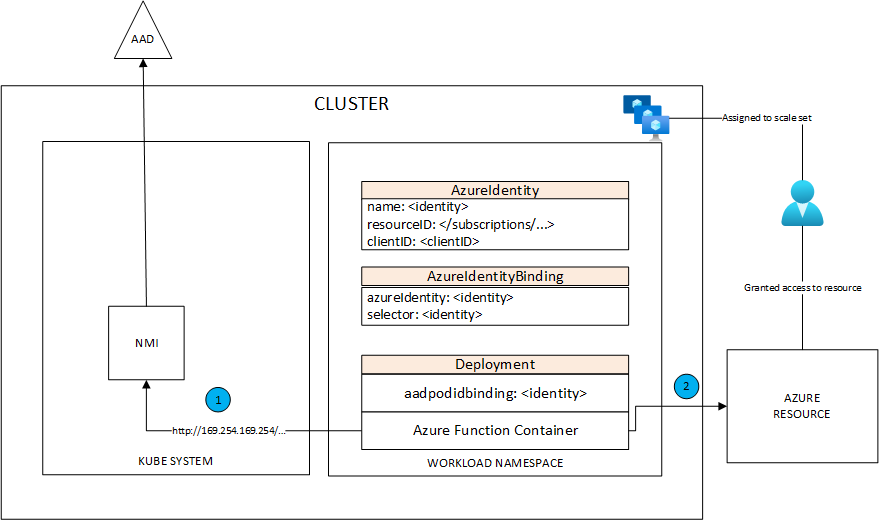

I recently implemented a change in KEDA (currently under evaluation as a potential pull request) that leverages managed identities in a more granular way, in order to adhere to the principle of least privilege. While testing my changes, I wanted to use managed identities not only for KEDA itself but also for the Azure Functions I was using in my tests. I found that although there are quite a few docs on the topic, none of them targets AKS:

https://docs.microsoft.com/en-us/azure/azure-functions/functions-bindings-storage-queue-trigger?tabs=csharp#identity-based-connections

https://docs.microsoft.com/en-us/azure/azure-functions/functions-reference?tabs=blob#connecting-to-host-storage-with-an-identity-preview

You can find many articles showing how to grab a token from an HTTP triggered function, or using identity-based triggers, but in the context of a function hosted in Azure itself. It’s not rocket science to make this work in AKS but I thought it was a good idea to recap it here as I couldn’t find anything on that.

Quick intro to managed identities

Here is a quick reminder for those who don't know MI yet. The value proposition of MI is: no password in code (or config). MI are considered a best practice because the credentials used by the identities are entirely managed by Azure itself. Workloads can refer to identities without storing credentials anywhere. On top of this, unlike shared access signatures and other alternative authorization methods, you can manage authorization with Azure AD (a single pane of glass).

AKS & MI

For MI to work in AKS, you need to enable them. You can find a comprehensive explanation on how to do this here. In a nutshell, MI works the following way in AKS:

An AzureIdentity and an AzureIdentityBinding resource must be defined. They target a user-assigned identity, which is attached to the cluster's VM scale set. Deployments refer to the identity through the aadpodidbinding label. The function (or any other) container calls the MI system endpoint http://169…, and the call is intercepted by the NMI pod, which in turn calls Azure Active Directory to get an access token for the calling container. The calling container can then present the returned token to the Azure resource to gain access.

Using the right packages for the function

The packages you have to use depend on the Azure resource you interact with. In my example, I used storage account queues as well as service bus queues. To leverage MI from within the function, you must:

- use the Microsoft.Azure.WebJobs.Extensions.Storage >= 5.0.0

- use the Microsoft.Azure.WebJobs.Extensions.ServiceBus >= 5.0.0

- use the Microsoft.NET.Sdk.Functions >= 4.1.0

Note that the storage package is not really optional, because Azure Functions need an Azure Storage account in most cases.

Passing the right settings to the function

Azure Functions take their configuration from the local settings and from their host's configuration. When Azure Functions are hosted on Azure, we can simply use the function app settings. In AKS it is slightly different, as we have to pass the settings through a ConfigMap or a Secret. To target both the Azure Storage account and the Service Bus, you'll have to define a secret like the following:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: <secret name>
data:
  AzureWebJobsStorage__accountName: <base64 value of storage account name>
  ServiceBusConnection__fullyQualifiedNamespace: <base64 value of the service bus FQDN>
  FUNCTIONS_WORKER_RUNTIME: <base64 value of the function language>
```

In the above example, the storage account behind my storage queue trigger is the same one that Functions require in order to work. If I were using a different storage account for the queue trigger, I'd declare an extra setting with that account name. The Service Bus queue-triggered function relies on the __fullyQualifiedNamespace setting to start listening to the Service Bus. Paradoxically, although I create a K8s secret, there is no secret information in it, thanks to MI.
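The secret's `data` values must be base64-encoded. The double underscore in the keys is the .NET configuration convention for a nested section (`__` is read as `:`) in environment variable names. The Python sketch below shows both. The storage account and Service Bus namespace names are hypothetical. `dotnet` is the worker runtime value for in-process .NET functions.

```python
import base64

def secret_data(settings):
    """Base64-encode plain setting values for the `data` section of a Kubernetes Secret."""
    return {k: base64.b64encode(v.encode("utf-8")).decode("ascii") for k, v in settings.items()}

def config_path(env_key):
    """Show how .NET configuration reads '__' in an environment variable name as ':'."""
    return env_key.replace("__", ":")

data = secret_data({
    "AzureWebJobsStorage__accountName": "mystorageaccount",  # hypothetical account name
    "ServiceBusConnection__fullyQualifiedNamespace": "mybus.servicebus.windows.net",  # hypothetical
    "FUNCTIONS_WORKER_RUNTIME": "dotnet",
})
print(data["FUNCTIONS_WORKER_RUNTIME"])  # ZG90bmV0
print(config_path("ServiceBusConnection__fullyQualifiedNamespace"))
# ServiceBusConnection:fullyQualifiedNamespace
```

Alternatively, Kubernetes Secrets accept a `stringData` field with plain values, which the API server encodes for you.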

For your reference, I’m pasting the entire YAML here:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: misecret
data:
  AzureWebJobsStorage__accountName: <base64 value of the storage account name>
  ServiceBusConnection__fullyQualifiedNamespace: <base64 value of the service bus FQDN>
  FUNCTIONS_WORKER_RUNTIME: <base64 value of the function language>
---
apiVersion: aadpodidentity.k8s.io/v1
kind: AzureIdentity
metadata:
  name: storageandbushandler
  annotations:
    aadpodidentity.k8s.io/Behavior: namespaced
spec:
  type: 0  # 0 = user-assigned managed identity
  resourceID: /subscriptions/.../resourceGroups/.../providers/Microsoft.ManagedIdentity/userAssignedIdentities/storageandbushandler
  clientID: <client ID of the user-assigned identity>
---
apiVersion: aadpodidentity.k8s.io/v1
kind: AzureIdentityBinding
metadata:
  name: storageandbushandler-binding
spec:
  azureIdentity: storageandbushandler
  selector: storageandbushandler  # matched against the pods' aadpodidbinding label
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: busandstoragemessagehandlers
  labels:
    app: busandstoragemessagehandlers
spec:
  selector:
    matchLabels:
      app: busandstoragemessagehandlers
  template:
    metadata:
      labels:
        app: busandstoragemessagehandlers
        aadpodidbinding: storageandbushandler
    spec:
      containers:
      - name: secretlessfunc
        image: stephaneey/secretlessfunc:dev
        imagePullPolicy: Always
        envFrom:
        - secretRef:
            name: misecret
```

You can see that the secret is passed to the function through the envFrom attribute. If you want to give it a test, you can use the docker image I pushed to Docker Hub.

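And here is the code of both functions, embedded in the Docker image above (nothing special):

```csharp
using Microsoft.Azure.WebJobs;
using Microsoft.Extensions.Logging;

public class MessageHandlers // class name is illustrative; the original snippet showed only the methods
{
    // Connection "AzureWebJobsStorage" resolves to AzureWebJobsStorage__accountName from the secret.
    [FunctionName("StorageQueue")]
    public void StorageQueue([QueueTrigger("myqueue-items", Connection = "AzureWebJobsStorage")] string myQueueItem, ILogger log)
    {
        log.LogInformation($"C# Queue trigger function processed: {myQueueItem}");
    }

    // Connection "ServiceBusConnection" resolves to ServiceBusConnection__fullyQualifiedNamespace.
    [FunctionName("ServiceBusQueue")]
    public void ServiceBusQueue([ServiceBusTrigger("myqueue-items", Connection = "ServiceBusConnection")] string myQueueItem, ILogger log)
    {
        log.LogInformation($"C# Queue trigger function processed: {myQueueItem}");
    }
}
```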

You just need to make sure the connection string names you mention in the triggers correspond to the settings you specify in the K8s secret.
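A quick way to catch name mismatches before deploying is to compare the trigger `Connection` names with the secret's keys. The Python sketch below is a hypothetical helper, not part of the Functions tooling. It treats a connection as configured if the secret has either a plain key with that name (a connection string) or identity-based keys with the `<name>__` prefix.

```python
def missing_connections(trigger_connections, secret_keys):
    """Return trigger Connection names that have no matching setting in the secret."""
    missing = []
    for name in trigger_connections:
        if name in secret_keys:
            continue  # plain connection-string setting
        if any(k.startswith(name + "__") for k in secret_keys):
            continue  # identity-based setting such as <name>__accountName
        missing.append(name)
    return missing

keys = ["AzureWebJobsStorage__accountName",
        "ServiceBusConnection__fullyQualifiedNamespace",
        "FUNCTIONS_WORKER_RUNTIME"]
print(missing_connections(["AzureWebJobsStorage", "ServiceBusConnection"], keys))  # []
```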

by Contributed | Mar 4, 2022 | Technology


Microsoft Defender for Cloud provides advanced threat detection capabilities across your cloud workloads. This includes comprehensive coverage plans for compute, PaaS and data resources in your environment. Before enabling Defender for Cloud across subscriptions, customers often want a cost estimate to make sure the cost aligns with the team's budget. We previously released the Microsoft Defender for Storage Price Estimation Workbook, which was widely and positively received by customers. Based on customer feedback, we have extended this offering into one comprehensive workbook that covers most Microsoft Defender for Cloud plans: Defender for Key Vault, Containers, App Service, Servers, Storage and Databases. After reading this blog, you can deploy the workbook from our GitHub community; be sure to leave your feedback so it can be considered for future enhancements. Please remember these numbers are only estimates based on retail prices and do not reflect actual billing data. For reference on how these prices are calculated, visit the Microsoft Defender for Cloud pricing page on Microsoft Azure.

Overview

When first opening the workbook, an overview page is shown that displays your overall Microsoft Defender for Cloud coverage across all selected subscriptions. The coverage is represented through the green and gray “on/off” tabs. If the plan is enabled on that subscription, the tab shows green. If the plan is not enabled, the tab shows gray. When clicking on “on/off” in this table, you will be redirected to a subscription’s Defender for Cloud plans page from where you can directly enable additional plans.

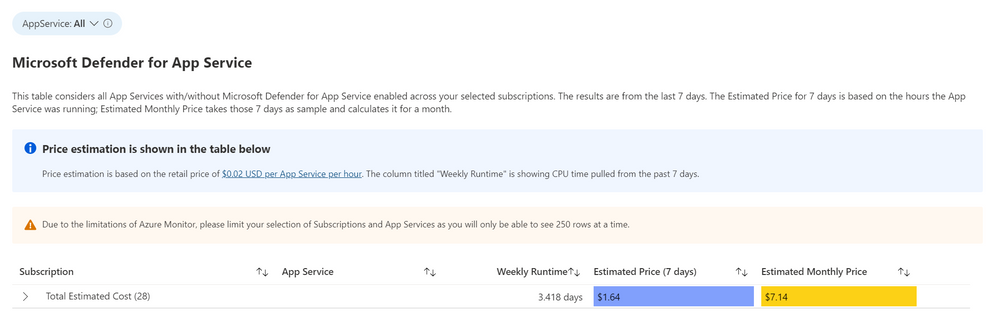

Defender for App Service

This workbook considers all App Services, with and without Microsoft Defender for App Service enabled, across your selected subscriptions. It is based on the retail price of $0.02 USD per App Service per hour. The column “Weekly Runtime” shows the CPU time from the past 7 days. In the column “Estimated Price (7 days)”, the CPU time is multiplied by 0.02 to give an estimated weekly price. The “Estimated Monthly Price” column uses the results of “Estimated Price (7 days)” to give the estimated price for one month.

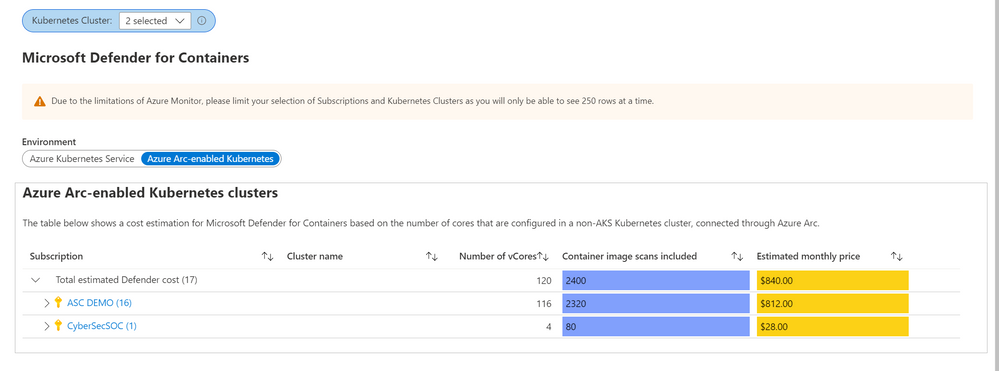
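The App Service arithmetic can be sketched in Python as follows. The text does not state how the weekly figure becomes a monthly one. This sketch assumes the same 4.35 weeks-per-month factor that the workbook uses for Key Vault and Servers.

```python
APP_SERVICE_USD_PER_HOUR = 0.02  # retail figure quoted above
WEEKS_PER_MONTH = 4.35           # assumption: same factor as the Key Vault and Servers tabs

def app_service_estimate(weekly_runtime_hours):
    """Return (estimated 7-day price, estimated monthly price) in USD."""
    weekly = weekly_runtime_hours * APP_SERVICE_USD_PER_HOUR
    return weekly, weekly * WEEKS_PER_MONTH

weekly, monthly = app_service_estimate(168)  # an App Service running all week
print(f"{weekly:.2f} {monthly:.2f}")  # 3.36 14.62
```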

Defender for Containers

The Defender for Containers blade shows price estimations for two different environments: Azure Kubernetes Service (AKS) clusters and Azure Arc-enabled Kubernetes clusters. For AKS, the price estimation is based on the average number of worker nodes in the cluster during the past 30 days. Defender for Containers pricing is based on the average number of vCores used in a cluster, so from the average number of nodes and the VM size we can calculate a valid price estimation. If the workbook cannot access telemetry for the average node count, the table shows a price estimation based on the current number of vCores used in the AKS cluster.


For Azure Arc-enabled Kubernetes clusters, the price estimation is based on the number of vCores configured in the cluster. Both tables also show the number of container images that can be scanned at no additional cost, based on the number of vCores used in the AKS and Azure Arc-enabled Kubernetes clusters.

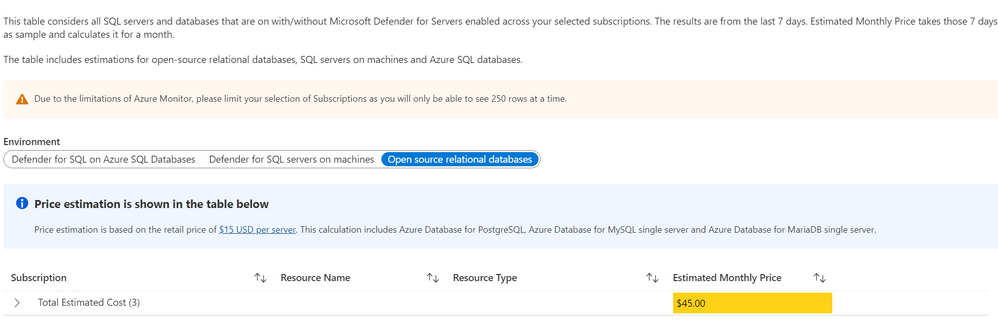
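The AKS logic described above can be sketched in a few lines of Python. The billable vCore count is the 30-day average node count multiplied by the vCores of the node VM size, and it falls back to the current node count when there is no telemetry. The post gives no per-vCore price, so the sketch assumes a price quoted per vCore per month and takes it as a required parameter. It is not hard-coded.

```python
def aks_billable_vcores(vcores_per_node, current_nodes, avg_nodes_30d=None):
    """Average vCores = node count x vCores of the node VM size."""
    # Prefer the 30-day average; fall back to the current count without telemetry.
    nodes = avg_nodes_30d if avg_nodes_30d is not None else current_nodes
    return nodes * vcores_per_node

def containers_monthly_estimate(vcores, usd_per_vcore_month):
    # usd_per_vcore_month must come from the Defender for Cloud pricing page.
    return vcores * usd_per_vcore_month

print(aks_billable_vcores(4, current_nodes=5, avg_nodes_30d=3.5))  # 14.0
print(aks_billable_vcores(4, current_nodes=5))                     # 20
```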

Defender for Databases

The Defender for Databases dashboard covers three key environments: Defender for SQL on Azure SQL Databases, Defender for SQL servers on machines and Open-source relational databases.

All estimations are based on the retail price of $15 USD per resource per month. “Defender for SQL on Azure SQL databases” includes Azure SQL Database single databases and elastic pools, Azure SQL Managed Instances and Azure Synapse (formerly SQL DW). “Defender for SQL servers on machines” includes all SQL Servers on Azure Virtual Machines and Azure Arc-enabled SQL Server. Lastly, “Open-source relational databases” covers Azure Database for PostgreSQL, Azure Database for MySQL single server and Azure Database for MariaDB single server. The logic and calculation are the same for all three environments. On the backend, the workbook runs a query to find all SQL or database resources in the selected environment and multiplies their number by 15 to get the estimated monthly cost.

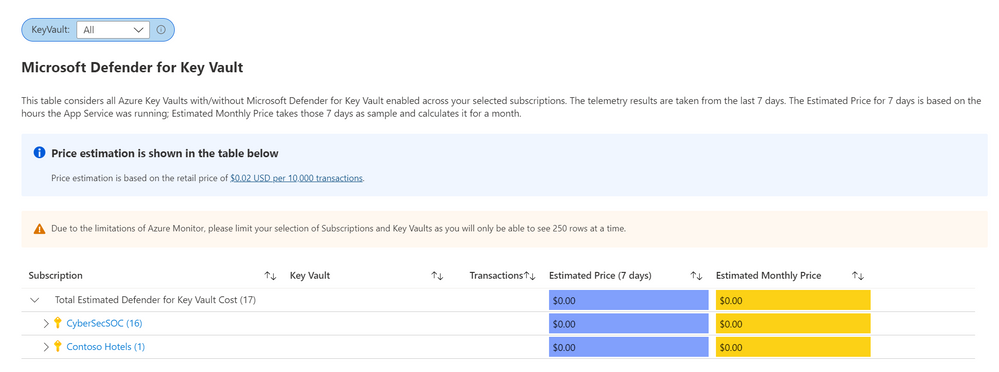
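Because every database resource carries the same flat monthly price, the estimate is a count multiplied by $15 per environment. Here is a minimal Python sketch, with made-up environment labels standing in for the workbook's query results:

```python
from collections import Counter

DATABASES_USD_PER_RESOURCE_MONTH = 15  # retail figure quoted above

def databases_monthly_estimate(resource_environments):
    """Count resources per environment and multiply each count by $15."""
    counts = Counter(resource_environments)
    return {env: n * DATABASES_USD_PER_RESOURCE_MONTH for env, n in counts.items()}

est = databases_monthly_estimate(["Azure SQL", "Azure SQL", "SQL on machines", "Open-source"])
print(est, sum(est.values()))
# {'Azure SQL': 30, 'SQL on machines': 15, 'Open-source': 15} 60
```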

Defender for Key Vault

The Defender for Key Vault dashboard considers all Key Vaults, with or without Defender for Key Vault enabled, on the selected subscriptions. The calculations are based on the retail price of $0.02 USD per 10K transactions. The “Estimated Cost (7 days)” column takes the total Key Vault transactions of the last 7 days, divides them by 10K and multiplies the result by 0.02. In “Estimated Monthly Price”, the result of “Estimated Cost (7 days)” is multiplied by 4.35 to get the monthly estimate.

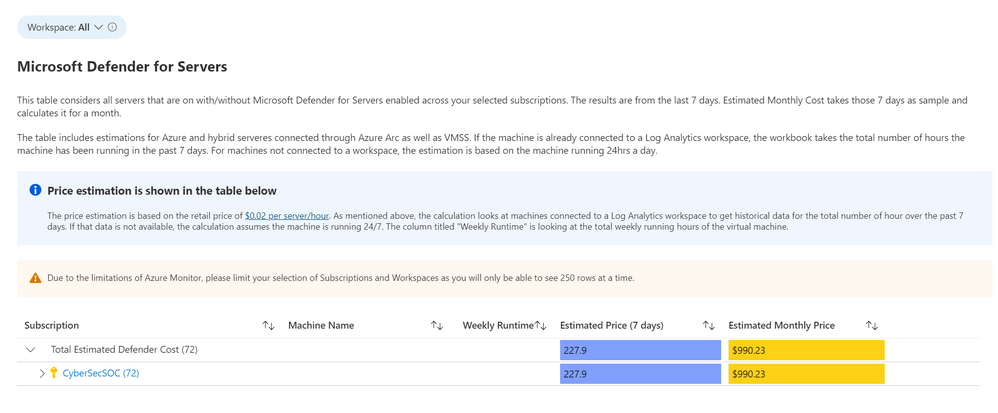
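The Key Vault formula can be written out in Python using only the figures stated above ($0.02 per 10K transactions, 4.35 weeks per month). The transaction count is a made-up example.

```python
KEY_VAULT_USD_PER_10K = 0.02
WEEKS_PER_MONTH = 4.35

def key_vault_estimate(transactions_last_7_days):
    """Return (estimated 7-day cost, estimated monthly cost) in USD."""
    weekly = transactions_last_7_days / 10_000 * KEY_VAULT_USD_PER_10K
    return weekly, weekly * WEEKS_PER_MONTH

weekly, monthly = key_vault_estimate(2_500_000)
print(f"{weekly:.2f} {monthly:.2f}")  # 5.00 21.75
```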

Defender for Servers

The Defender for Servers dashboard considers all servers on your subscriptions, with or without Defender for Servers enabled. This dashboard includes estimations for Azure servers and for hybrid servers connected through Azure Arc. The estimation is based on the retail price of $0.02 USD per server per hour. This dashboard includes the option to select a Log Analytics workspace. By selecting a workspace, the workbook can retrieve historical data on how many hours the machine has been running in the past seven days. If there is no historical data for the machine, the workbook assumes the machine has been running for 24hrs in the past 7 days. The column “Weekly Runtime” presents the total number of running hours from the past 7 days using the aforementioned strategies. The column “Estimated Cost (7 days)” takes the weekly hours and multiplies them by 0.02. Finally, in “Estimated Monthly Cost”, the result from “Estimated Cost (7 days)” is multiplied by 4.35 to give the estimated monthly cost.

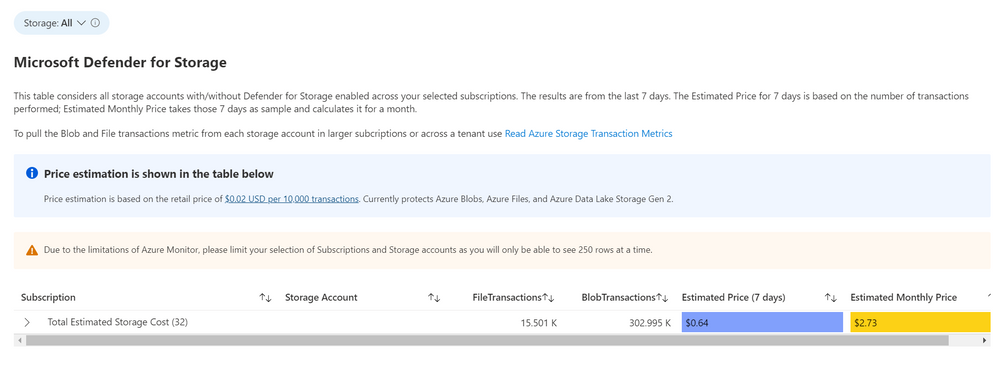
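Below is a Python sketch of the Servers calculation. Servers without Log Analytics history get a fallback runtime. The text describes this as "24hrs in the past 7 days", and the sketch reads it as always-on (24 hours a day for 7 days, so 168 hours). That reading is an assumption, so the value is exposed as a parameter you can change.

```python
SERVERS_USD_PER_HOUR = 0.02
WEEKS_PER_MONTH = 4.35

def servers_estimate(weekly_hours_by_server, fallback_hours=24 * 7):
    """Map server -> (weekly hours, 7-day cost, monthly cost).

    weekly_hours_by_server values are hours from Log Analytics, or None when
    there is no history (fallback_hours is an assumed always-on default)."""
    result = {}
    for name, hours in weekly_hours_by_server.items():
        hours = fallback_hours if hours is None else hours
        weekly = hours * SERVERS_USD_PER_HOUR
        result[name] = (hours, weekly, weekly * WEEKS_PER_MONTH)
    return result

print(servers_estimate({"vm1": 40, "arc1": None}))
```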

Defender for Storage

The Defender for Storage workbook looks at historical file and blob transaction data on supported storage types such as Blob Storage, Azure Files and Azure Data Lake Storage Gen 2. To learn more about the storage workbook, visit Microsoft Defender for Storage – Price Estimation Dashboard – Microsoft Tech Community.

Known Issues

Azure Monitor Metrics data backends have limits, so requests to fetch data might time out. To solve this, narrow your scope by reducing the number of selected subscriptions or resource types.

Acknowledgements

Special thanks to Fernanda Vela, Helder Pinto, Lili Davoudian, Sarah Kriwet and Tom Janetscheck for contributing their code to this consolidated workbook.


by Contributed | Mar 3, 2022 | Technology


Over the past several SQL Server releases, Microsoft has improved the concurrency and scalability of the tempdb database. Starting in SQL Server 2016, several improvements build best practices into the setup process; for example, when there are multiple tempdb data files, all files autogrow together and by the same amount.

Additionally, starting in SQL Server 2019, we added the memory-optimized tempdb metadata capability and eliminated most PFS contention with concurrent PFS updates.

In SQL Server 2022 we are now addressing another common area of contention by introducing concurrent GAM and SGAM updates.

In previous releases, we may see GAM contention when different threads want to allocate or deallocate extents represented on the same GAM page. Because of this contention, throughput decreases and workloads that require many updates to the GAM page take longer to complete. This is due to the workload volume and to repetitive create-and-drop operations, table variables, worktables associated with cursors, ORDER BY and GROUP BY clauses, and work files associated with hash plans.
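The following Python sketch shows why such allocations collide. It uses the approximations from SQL Server's page-architecture documentation: an extent is 8 pages, and each GAM page covers about 64,000 extents (roughly 4 GB) of one data file. Two concurrent allocations that land in the same (file, GAM interval) both need to update that one GAM page. This is an illustrative model, not SQL Server code.

```python
from collections import Counter

# Approximations: 8 pages per extent, ~64,000 extents tracked per GAM page.
PAGES_PER_EXTENT = 8
EXTENTS_PER_GAM = 64_000

def gam_page_key(file_id, page_id):
    """Identify the (file, GAM interval) whose GAM page tracks this page."""
    return file_id, page_id // (PAGES_PER_EXTENT * EXTENTS_PER_GAM)

def contended_gam_pages(allocations):
    """Given concurrent (file_id, page_id) allocations, return GAM pages hit more than once."""
    counts = Counter(gam_page_key(f, p) for f, p in allocations)
    return {k: n for k, n in counts.items() if n > 1}

print(contended_gam_pages([(1, 16), (1, 4096), (2, 16)]))  # {(1, 0): 2}
```

Spreading allocations over several data files lowers the chance of two threads hitting the same GAM page. The concurrent GAM updates described next target the collisions that remain.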

The Concurrent GAM Updates feature in SQL Server 2022 adds the concurrent GAM and SGAM updates capability to avoid tempdb contention.

With GAM and SGAM contention being addressed, customer workloads will be much more scalable and will provide even better throughput.

SQL Server has improved tempdb in every single release and SQL Server 2022 is no exception.

Resources:

tempdb database

Recommendations to reduce allocation contention in SQL Server tempdb database

Learn more about SQL Server 2022

Register to apply for the SQL Server 2022 Early Adoption Program and stay informed

Watch technical deep-dives on SQL Server 2022

SQL Server 2022 Playlist
