by Contributed | Jul 14, 2022 | Technology

This article is contributed. See the original author and article here.

Introduction:

The purpose of this article is to provide specific guidelines on how to perform a Proof of Concept (PoC) for Microsoft Defender for Cloud’s native Amazon Web Services (AWS) support. This article is part of the Microsoft Defender for Cloud PoC Series, in which each article provides specific guidelines on how to perform a PoC for a specific Microsoft Defender for Cloud plan. For a more holistic approach, where you need to validate Microsoft Defender for Cloud’s Cloud Security Posture Management (CSPM) and Cloud Workload Protection (CWP) capabilities together, see the How to Effectively Perform a Microsoft Defender for Cloud PoC article.

Planning:

This section highlights important considerations and availability information that you should be aware of when planning for the PoC. Let’s start with outlining how to go about planning for a PoC of Microsoft Defender for Cloud native AWS support.

NOTE: At the time of writing this article, Microsoft Defender for Cloud native AWS support isn’t available for national clouds (such as Azure Government and Azure China 21Vianet). For the most current information, see Feature support in government and national clouds.

The first step begins with a clear understanding of the benefits that enabling the native AWS support in Microsoft Defender for Cloud brings to your organization. Microsoft Defender for Cloud’s native AWS support provides:

- Agentless CSPM for AWS resources

- CWP support for Amazon EKS clusters

- CWP support for AWS EC2 instances

- CWP support for SQL servers running on AWS EC2, RDS Custom for SQL Server

The CSPM for AWS resources is completely agentless and, at the time of writing this article, supports the AWS data types listed in the product documentation. It provides you with recommendations on how to best harden your AWS resources and remediate misconfigurations.

Keep in mind that the CSPM plan for AWS resources is available for free. Refer to this page for more information.

The CWP support for Amazon EKS clusters offers a wide set of capabilities including discovery of unprotected clusters, advanced threat detection for the control plane and workload level, Kubernetes data plane recommendations (through the Azure Policy extension) and more.

The CWP support for AWS EC2 instances offers a wide set of capabilities, including automatic provisioning of pre-requisites on existing and new machines, vulnerability assessment, integrated license for Microsoft Defender for Endpoint (MDE), file integrity monitoring and more.

The CWP support for SQL servers running on AWS EC2 and AWS RDS Custom for SQL Server offers a wide set of capabilities, including advanced threat protection, vulnerability assessment scanning, and more.

Now that we’ve touched briefly on the benefits that Microsoft Defender for Cloud’s native AWS support provides, let’s move on to the next step: identifying which use cases the PoC should cover. Common use cases include protecting the management ports of EC2 instances with just-in-time network access control, or blocking public access on S3 buckets.

Preparation and Implementation:

This section highlights the requirements that you should be aware of before starting the PoC. For the complete list of prerequisites, see the Prerequisites section.

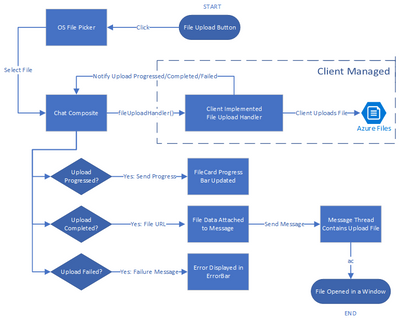

There are three main steps when preparing to enable Microsoft Defender for Cloud’s native AWS support.

- Determining which capabilities are in the scope of the PoC

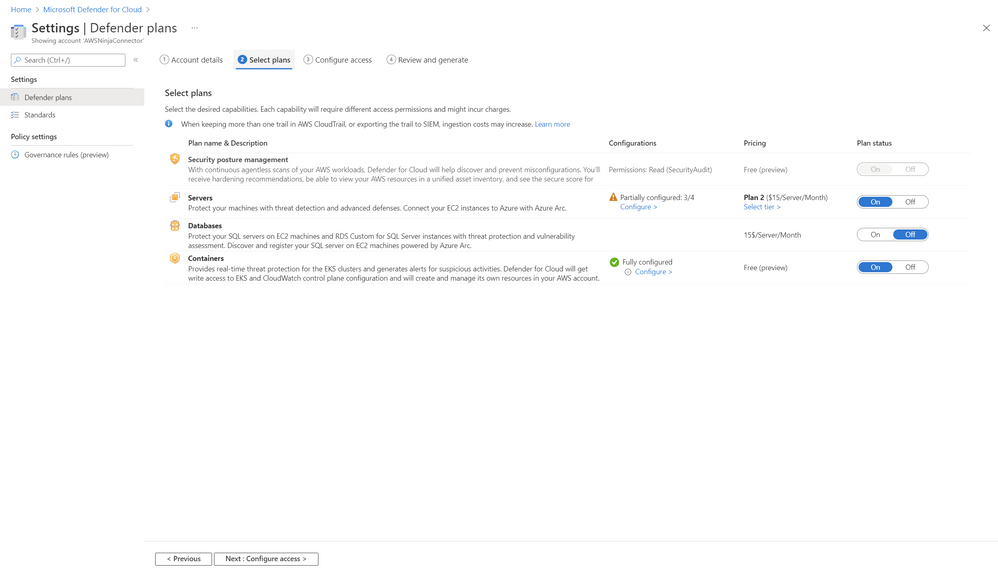

At the time of writing this article, Defender for Cloud supports the following AWS capabilities (see Figure 1):

- Agentless CSPM for AWS resources

- CWP support for Amazon EKS clusters

- CWP support for AWS EC2 instances

- CWP support for SQL servers running on AWS EC2, RDS Custom for SQL Server

Figure 1: Native CSPM and CWP capabilities for AWS in Microsoft Defender for Cloud

- Selecting the AWS accounts on which you’d like to perform the PoC

For the purposes of this PoC, it’s important that you identify which AWS account(s) are going to be used to perform the PoC of Defender for Cloud’s native AWS support. You can choose a single AWS account or, optionally, a management account, which will include each member account discovered under that management account.

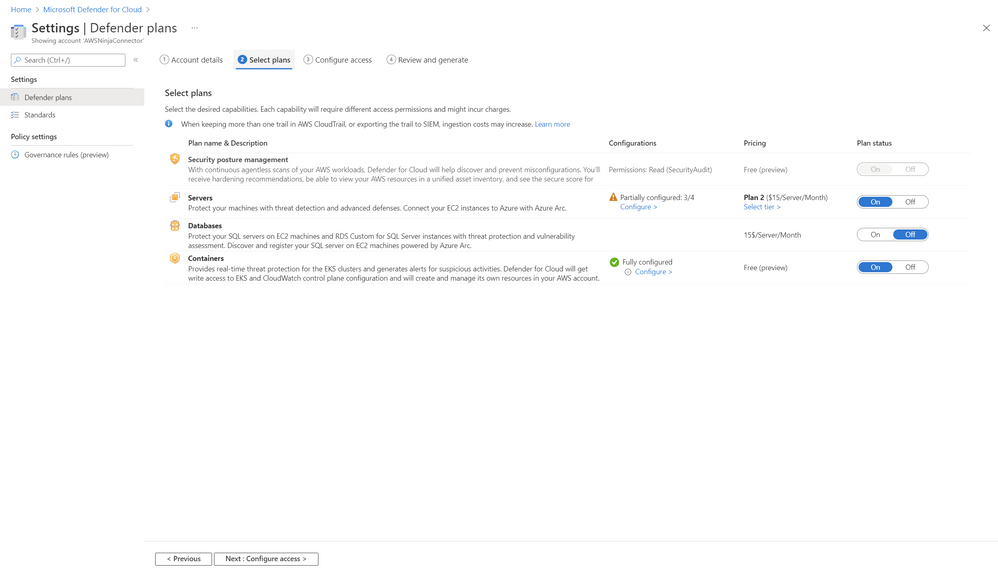

- Connecting AWS accounts to Microsoft Defender for Cloud

You can connect AWS accounts to Microsoft Defender for Cloud with a few clicks in Azure and AWS. For detailed technical guidance, see Microsoft Docs. For step-by-step guidance on what this process looks like end-to-end in Azure and AWS, see this short video.

Figure 2: Connecting AWS accounts to Microsoft Defender for Cloud

NOTE: Our service performs API calls to discover resources and refresh their state. If you’ve enabled a trail for read events in CloudTrail and are exporting data out of AWS (for example, to an external SIEM), the increased volume of calls might also increase ingestion costs. We recommend filtering out the read-only calls from Defender for Cloud (as stated here, under “Important”).

Validation:

Once you’ve created the connector, you can validate it by analyzing the data relevant to the use cases that your PoC covers.

When validating recommendations for AWS resources, you can consult the reference list of AWS recommendations.

When validating alerts for EC2 instances, you can consult the reference list of alerts for machines.

When validating alerts for EKS clusters, you can consult the reference list of alerts for containers – Kubernetes clusters.

When validating alerts for SQL servers running on AWS EC2 and AWS RDS Custom for SQL Server, you can consult the reference list of alerts.

Closing Considerations:

By the end of this PoC, you should be able to determine the value of the native AWS support in Microsoft Defender for Cloud and the importance of having it enabled for your AWS resources. Stay tuned for more articles in the Microsoft Defender for Cloud PoC Series.

P.S. To stay up to date on helpful tips and new releases, subscribe to our Microsoft Defender for Cloud Newsletter and join our Tech Community, where you can be one of the first to hear the latest Defender for Cloud news and announcements and get your questions answered by Azure Security experts.

Reviewers:

Or Serok Jeppa, Senior Program Manager

by Contributed | Jul 11, 2022 | Technology

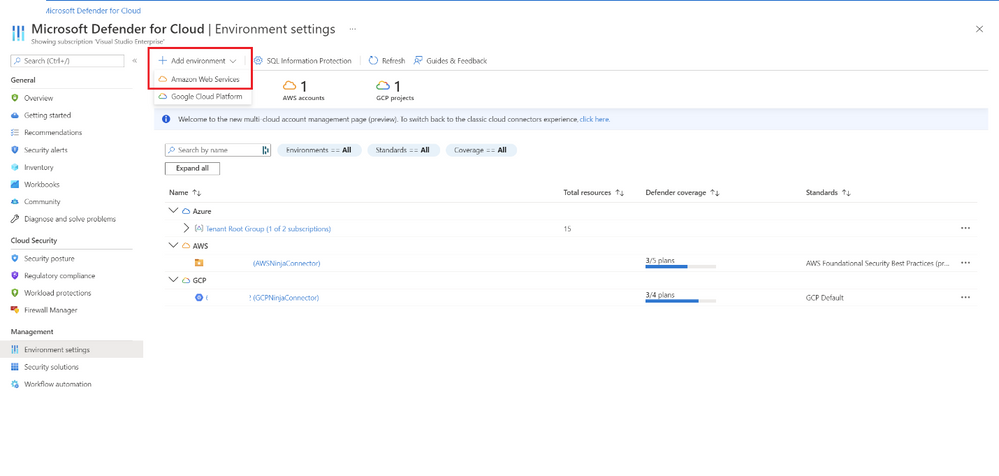

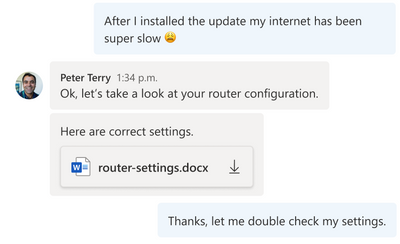

Azure Communication Services allows you to add communications to your applications to help you connect with your customers and across your teams. Available capabilities include voice, video, chat, SMS and more. Frequently you need to share media, such as a Word document, an image, or a video as part of your communication experience. During a meeting, users want to share, open, or download the media directly. This content can be referenced throughout the conversation for visibility and feedback – whether it is a doctor sending a patient a note in a PDF, a retailer sending detailed images of their product, or a customer sharing a scanned financial document with their advisor.

As part of the Azure family, Azure Communication Services works together with Azure Blob Storage to share media between communication participants. Azure Blob Storage provides you with globally redundant, scalable, encrypted storage for your content, and Azure Communication Services allows you to deliver that content.

Using the Azure Communication Services chat SDK and the UI Library, you can easily incorporate chat communications and media sharing into your existing applications. Check out the recently published tutorial and reference implementation. You can find the completed sample on GitHub.

This tutorial covers how to upload media to Azure Blob Storage and link it to your Azure Communication Services chat messages. Going one step further, the guide shows you how to use the Azure Communication Services UI Library to create a beautiful chat user experience which includes these file sharing capabilities. You can even stylize the UI components using the UI library’s simple interfaces to match your existing app.

The tutorial yields a sample of how file sharing can be enabled. You should ensure that the file system used and the process of uploading and downloading files are compliant with your privacy and security requirements.

We hope you check out the tutorial to learn how you can bring interactive communication and media sharing experiences to your application using Azure Communication Services.

by Contributed | Jul 10, 2022 | Technology

Introduction

Hi folks! My name is Felipe Binotto, Cloud Solution Architect, based in Australia.

The purpose of this article is to compare how you can do something with Bicep with how you can do the same thing with Terraform. My intention here is not to provide an extensive comparison or dive deep into what each language can do, but to compare the basics.

I worked with Terraform for a long time before I started working with Bicep, and I can say to Terraform engineers that Bicep should be easy to learn if you already have good experience with Terraform.

Before we get to the differences when writing the code, let me provide you with a quick overview of why someone would choose one over the other.

The main differentiator of Terraform is being multi-cloud and the nice UI it provides if you leverage Terraform Cloud to store the state. I like the way you can visualize plans and deployments.

Bicep, on the other hand, is for Azure only, but it provides deep integration which unlocks what some call ‘day zero support’ for all resource types and API versions. This means that as soon as some new feature or resource is released, even preview features, they are immediately available to be used with Bicep. If you have been using Terraform for a while, you know that it can take a long time until a new Azure release is also available in Terraform.

Terraform stores a state of your deployment, which is a map of the relationship between your deployed resources and the configuration in your code. Based on my field experience, this Terraform state causes more problems than it provides benefits. Bicep doesn’t rely on a state file but on incremental deployments.

Code

Both Terraform and Bicep are declarative languages. Terraform files have the .tf extension and Bicep files have the .bicep extension.

The main difference is that with Terraform you can have as many .tf files as you want in the same folder, and they will be interpreted as a single configuration, which is not true for Bicep.

Throughout this article, you will also notice that Bicep uses single quotes while Terraform uses double quotes.

Variables, Parameters & Outputs

Variables

In Bicep, variables can be used to simplify complex expressions. They are the equivalent of Terraform “local variables”.

The example below depicts how you can concatenate parameter values in a variable to make up a resource name.

param env string

param location string

param name string

var resourceName = '${location}-${env}-${name}'

The same can be achieved in Terraform as follows.

variable "env" {}

variable "name" {}

variable "location" {}

locals {

resourceName = "${var.location}-${var.env}-${var.name}"

}

Parameters

In Bicep, parameters can be used to pass inputs to your code and make it reusable. They are the equivalent of “input variables” in Terraform.

Parameters in Bicep are made of the keyword “param”, followed by the parameter name and the parameter type (in the example below, a string).

param env string

A default value can also be provided.

param env string = 'prd'

Parameters in Bicep can also use decorators, which are a way to provide constraints or metadata. For example, we can constrain the parameter “env” to be exactly three characters long.

@minLength(3)

@maxLength(3)

param env string = 'prd'
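Other decorators follow the same pattern. The sketch below is illustrative only: “@description”, “@allowed”, “@secure”, “@minValue” and “@maxValue” are built-in Bicep decorators, while the parameters “adminPassword” and “instanceCount” and the allowed environment codes are hypothetical names and values chosen for this example.

```bicep
// Metadata plus a fixed set of accepted values (values are assumptions).
@description('Three-letter environment code used in resource names.')
@allowed([
  'dev'
  'tst'
  'prd'
])
param env string = 'prd'

// Hypothetical parameter: @secure() keeps the value out of deployment logs and history.
@secure()
param adminPassword string

// Hypothetical parameter: numeric constraints use minValue and maxValue.
@minValue(1)
@maxValue(10)
param instanceCount int = 2
```

Using “@allowed” instead of the length constraints trades flexibility for stricter validation, which may or may not suit your naming convention.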

Parameter values can be provided from the command line or passed in a JSON file.

In Terraform, input variables can be declared as simply as the following.

variable "env" {}

A default value can also be provided.

variable "env" {

default = "prd"

}

In Terraform, a validation block is the equivalent to the Bicep parameter decorators.

variable "env" {

default = "prd"

validation {

condition = length(var.env) == 3

error_message = "The length must be 3."

}

}

Variable values can be provided from the command line or passed in a .tfvars file.

Outputs

Outputs are used when a value needs to be returned from the deployed resources.

In Bicep, an output is represented by the keyword “output” followed by the output name, the output type and the value to be returned.

In the example below, the hostname is returned, which is the FQDN property of a public IP address object.

output hostname string = publicIP.properties.dnsSettings.fqdn

In Terraform, the same can be done as follows.

output "hostname" {

value = azurerm_public_ip.vm.fqdn

}
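The Bicep output shown earlier assumes that a public IP address resource with the symbolic name “publicIP” is declared in the same file. A minimal sketch of what that could look like follows; the resource name “myPublicIP”, the “dnsLabel” parameter and the allocation method are assumptions made for illustration and are not taken from the original example.

```bicep
param location string = resourceGroup().location

// Hypothetical parameter: DNS label that becomes the first part of the FQDN.
param dnsLabel string

// Assumed public IP declaration; the fqdn property is populated
// only when a domainNameLabel is set under dnsSettings.
resource publicIP 'Microsoft.Network/publicIPAddresses@2020-06-01' = {
  name: 'myPublicIP'
  location: location
  properties: {
    publicIPAllocationMethod: 'Dynamic'
    dnsSettings: {
      domainNameLabel: dnsLabel
    }
  }
}

// The output references the resource through its symbolic name.
output hostname string = publicIP.properties.dnsSettings.fqdn
```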

Resources

Resources are the most important element in both Bicep and Terraform. They represent the resources which will be deployed to the target infrastructure.

In Bicep, resources are represented by the keyword “resource” followed by a symbolic name, followed by the resource type and API version.

The following represents a compressed version of an Azure VM.

resource vm 'Microsoft.Compute/virtualMachines@2020-06-01' = {

name: vmName

location: location

…

}

The following is how you can reference an existing Azure VM.

resource vm 'Microsoft.Compute/virtualMachines@2020-06-01' existing = {

name: vmName

}
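Once an existing resource is declared this way, its properties can be read through the symbolic name like those of any other resource. The following is a small sketch under that assumption; the output names “vmId” and “vmSize” are hypothetical.

```bicep
param vmName string

// Reference a VM that is already deployed in the target resource group.
resource vm 'Microsoft.Compute/virtualMachines@2020-06-01' existing = {
  name: vmName
}

// Read values from the existing resource without redeploying it.
output vmId string = vm.id
output vmSize string = vm.properties.hardwareProfile.vmSize
```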

The same resource can be represented in Terraform as follows.

resource "azurerm_windows_virtual_machine" "vm" {

name = var.vmName

location = azurerm_resource_group.resourceGroup.location

…

}

However, to reference an existing resource in Terraform, you must use a data block.

data "azurerm_virtual_machine" "vm" {

name = var.vmName

resource_group_name = var.rgName

}

The main differences in the examples above are the following:

- Resource Type

  - For Bicep, the resource type version is provided in the resource definition.

  - For Terraform, the version will depend on the plugin versions downloaded during “terraform init”, which depends on what has been defined in the “required_providers” block. We will talk about providers in a later section.

- Scope

  - For Bicep, the default scope is the resource group unless another scope is specified, so resources do not have a resource group property that needs to be set.

  - For Terraform, the resource group has to be specified as part of the resource definition.

- Referencing existing resources

  - For Bicep, you can use the same construct with the “existing” keyword.

  - For Terraform, you must use a data block.

Modules

Modules have the same purpose in both Bicep and Terraform. Modules can be packaged and reused in other deployments. They also improve the readability of your files.

Modules in Bicep are made of the keyword “module”, followed by a symbolic name and the module path, which can be a local file path or a reference to a remote registry.

The code below provides a real-world example of a very simple Bicep module reference.

module vmModule '../virtualMachine.bicep' = {

name: 'vmDeploy'

params: {

name: 'myVM'

}

}

One important distinction of Bicep modules is the ability to provide a scope. As an example, you could have your main deployment file using subscription as the default scope and a resource group as the module scope as depicted below.

module vmModule '../virtualMachine.bicep' = {

name: 'vmDeploy'

scope: resourceGroup(otherRG)

params: {

name: 'myVM'

}

}
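To make the scoping explicit, the sketch below shows what the main deployment file could look like when it targets the subscription. The “targetScope” declaration and the “resourceGroup” function are standard Bicep; declaring “otherRG” as a parameter is an assumption, since the example above does not show where that value comes from.

```bicep
// The main file is deployed at subscription scope.
targetScope = 'subscription'

// Assumed parameter: name of an existing resource group in this subscription.
param otherRG string

// The module is deployed into the resource group, not the subscription.
module vmModule '../virtualMachine.bicep' = {
  name: 'vmDeploy'
  scope: resourceGroup(otherRG)
  params: {
    name: 'myVM'
  }
}
```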

The equivalent module reference in Terraform looks as follows.

module "vmModule" {

source = "../virtualMachine"

name = "myVM"

}

Providers & Scopes

Terraform uses providers to interact with cloud providers. You must declare at least one azurerm provider block in your Terraform configuration to be able to interact with Azure as displayed below.

provider "azurerm" {

features {}

}

To reference multiple subscriptions, you can use an alias for the providers. In the example below we reference two distinct subscriptions.

provider "azurerm" {

alias = "dev"

subscription_id = "DEV_SUB_ID"

tenant_id = "TENANT_ID"

client_id = "CLIENT_ID"

client_secret = "CLIENT_SECRET"

features {}

}

provider "azurerm" {

alias = "prd"

subscription_id = "PRD_SUB_ID"

tenant_id = "TENANT_ID"

client_id = "CLIENT_ID"

client_secret = "CLIENT_SECRET"

features {}

}

Bicep uses scopes to target different resource groups, subscriptions, management groups or tenants.

For example, to deploy resources to a different resource group, you can add the “scope” property to the module and use the “resourceGroup” function.

module vmModule '../virtualMachine.bicep' = {

name: 'vmDeploy'

scope: resourceGroup(otherRG)

params: {

name: 'myVM'

}

}

To deploy the resource to a resource group in a different subscription, you can also include the subscription id as per the example below.

module vmModule '../virtualMachine.bicep' = {

name: 'vmDeploy'

scope: resourceGroup(otherSubscriptionID, otherRG)

params: {

name: 'myVM'

}

}

Deployment

There are many Bicep and Terraform commands and variations which can be used for deployment or to get to a point where a deployment can be performed, but in this section, I will just compare “terraform plan” and “terraform apply” with Bicep’s equivalent commands.

“terraform plan” is the command used to preview the changes before they actually happen. Running it from the command line will output the resources which would be added, modified, or deleted in plain text. Running the plan from Terraform Cloud, you can see the same information but in a nice visual way. Parameters can be passed as variables or variables files as per below.

terraform plan -var 'vmName=myVM'

terraform plan -var-file prd.tfvars

“terraform apply” deploys the resources according to what was previewed in the plan.

For Bicep, the equivalent of “terraform plan” is the Azure CLI command “az deployment group what-if” or the PowerShell command “New-AzResourceGroupDeployment -WhatIf”.

Running it from the command line will also output the resources which would be added, modified, or deleted in plain text. However, Bicep still doesn’t provide a user interface for the what-if visualization.

The equivalent of “terraform apply” is the Azure CLI command “az deployment group create” or the PowerShell command “New-AzResourceGroupDeployment -Confirm”.

Note that these Bicep commands are for resource group deployments. There are similar commands for subscription, management group and tenant deployments.

Conclusion

Terraform still has its place in companies which are multi-cloud or use it for on-premises deployments. I’m Terraform certified and have always loved Terraform. However, I must say that when considering Azure by itself, Bicep has the upper hand. Even for multi-cloud companies, if you wish to enjoy deep integration and be able to use all new features as soon as they are released, Bicep is the way to go.

I hope this was informative to you and thanks for reading! Add your experiences or questions in the comments section.

Disclaimer

The sample scripts are not supported under any Microsoft standard support program or service. The sample scripts are provided AS IS without warranty of any kind. Microsoft further disclaims all implied warranties including, without limitation, any implied warranties of merchantability or of fitness for a particular purpose. The entire risk arising out of the use or performance of the sample scripts and documentation remains with you. In no event shall Microsoft, its authors, or anyone else involved in the creation, production, or delivery of the scripts be liable for any damages whatsoever (including, without limitation, damages for loss of business profits, business interruption, loss of business information, or other pecuniary loss) arising out of the use of or inability to use the sample scripts or documentation, even if Microsoft has been advised of the possibility of such damages.
