by Contributed | Oct 21, 2022 | Technology

This article is contributed. See the original author and article here.

Microsoft.Data.SqlClient 5.1 Preview 1 has been released. This release contains improvements and updates to the Microsoft.Data.SqlClient data provider for SQL Server.

Our plan is to provide GA releases twice a year with two or three preview releases in between. This cadence should provide time for feedback and allow us to deliver features and fixes in a timely manner. This first 5.1 preview includes fixes and changes over the previous release.

Fixed

- Fixed ReadAsync() behavior to register Cancellation token action before streaming results. #1781

- Fixed NullReferenceException when assigning null to SqlConnectionStringBuilder.Encrypt. #1778

- Fixed missing HostNameInCertificate property in .NET Framework Reference Project. #1776

- Fixed async deadlock issue when sending attention fails due to network failure. #1766

- Fixed failed connection requests in ConnectionPool in case of PoolBlock. #1768

- Fixed hang on infinite timeout and managed SNI. #1742

- Fixed Default UTF8 collation conflict. #1739

Changed

- Updated Microsoft.Data.SqlClient.SNI (.NET Framework dependency) and Microsoft.Data.SqlClient.SNI.runtime (.NET Core/Standard dependency) to version 5.1.0-preview1.22278.1, which includes TLS 1.3 support and a fix for the AppDomain crash reported in issue #1418. #1787

- Changed the SqlConnectionEncryptOption string parser to public. #1771

- Converted ExecuteNonQueryAsync to use async context object. #1692

- Code health improvements #1604 #1598 #1595 #1443

Known issues

- When using Encrypt=Strict with TLS v1.3, the TLS handshake occurs twice on the initial connection on .NET Framework: the first handshake times out and a retry helper re-establishes the connection. On .NET Core, the connection instead throws System.ComponentModel.Win32Exception (258): The wait operation timed out. This is being investigated. If you're using Microsoft.Data.SqlClient with .NET Core on Windows 11, you have two options. To use TLS v1.3, enable managed SNI on Windows with the context switch AppContext.SetSwitch("Switch.Microsoft.Data.SqlClient.UseManagedNetworkingOnWindows", true);. Alternatively, disable TLS 1.3 by setting the registry value HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\SecurityProviders\SCHANNEL\Protocols\TLS 1.3\Client\Enabled to 0, in which case the connection uses TLS v1.2. This will be fixed in a future release.

For the full list of changes in Microsoft.Data.SqlClient 5.1 Preview 1, please see the Release Notes.

To try out the new package, add a NuGet reference to Microsoft.Data.SqlClient in your application and pick the 5.1 preview 1 version.

We appreciate the time and effort you spend checking out our previews. It makes the final product that much better. If you encounter any issues or have any feedback, head over to the SqlClient GitHub repository and submit an issue.

David Engel

by Contributed | Oct 20, 2022 | Technology


Table of Contents

Abstract

Introduction

Scenario

Azure NetApp Files backup preview enablement

Managing Resource Providers in Terraform

Terraform Configuration

Terraform AzAPI and AzureRM Providers

Declaring the Azure NetApp Files infrastructure

Azure NetApp Files backup policy creation

Assigning a backup policy to an Azure NetApp Files volume

AzAPI to AzureRM migration

Summary

Additional Information

Abstract

This article demonstrates how to enable the use of preview features in Azure NetApp Files in combination with Terraform Cloud and the AzAPI provider. In this example we enhance data protection with Azure NetApp Files backup (preview) by enabling and creating backup policies using the AzAPI Terraform provider and leveraging Terraform Cloud for the deployment.

Co-authors: John Alfaro (NetApp)

Introduction

As Azure NetApp Files development progresses, new features are continuously being brought to market. Some of those features first arrive in typical Azure ‘preview’ fashion. These features are normally not included in Terraform before general availability (GA). A recent example of such a preview feature at the time of writing is Azure NetApp Files backup.

In addition to snapshots and cross-region replication, Azure NetApp Files data protection has been extended to include backup vaulting of snapshots. Using Azure NetApp Files backup, you can create backups of your volumes based on volume snapshots for longer-term retention. At the time of writing, Azure NetApp Files backup is a preview feature and has not yet been included in the Terraform AzureRM provider. For that reason, we decided to use the Terraform AzAPI provider to enable and manage this feature.

Azure NetApp Files backup provides a fully managed backup solution for long-term recovery, archive, and compliance.

- Backups created by the service are stored in an Azure storage account independent of volume snapshots. The Azure storage account will be zone-redundant storage (ZRS) where availability zones are available or locally redundant storage (LRS) in regions without support for availability zones.

- Backups taken by the service can be restored to an Azure NetApp Files volume within the region.

- Azure NetApp Files backup supports both policy-based (scheduled) backups and manual (on-demand) backups. In this article, we will be focusing on policy-based backups.

For more information about this capability, see the Azure NetApp Files backup documentation.

Scenario

In the following scenario, we will demonstrate how Azure NetApp Files backup can be enabled and managed using the Terraform AzAPI provider. To provide additional redundancy for our backups, we will back up our volumes in the Australia East region, taking advantage of zone-redundant storage (ZRS).

Azure NetApp Files backup preview enablement

To use Azure NetApp Files backup, you first need to enable the preview feature. In this case, the feature must be requested via the Public Preview request form. Once the feature is enabled, it will appear as ‘Registered’.

    Get-AzProviderFeature -ProviderNamespace "Microsoft.NetApp" -FeatureName ANFBackupPreview

    FeatureName      ProviderName     RegistrationState
    -----------      ------------     -----------------
    ANFBackupPreview Microsoft.NetApp Registered

Note: A ‘Pending’ status means that the feature needs to be enabled by Microsoft before it can be used.

Managing Resource Providers in Terraform

If you manage resource providers and their features using Terraform, you will find that registering the preview feature with a configuration like the one below fails. This is expected, as it is a forms-based opt-in feature.

    resource "azurerm_resource_provider_registration" "anfa" {
      name = "Microsoft.NetApp"

      feature {
        name       = "ANFSDNAppliance"
        registered = true
      }

      feature {
        name       = "ANFChownMode"
        registered = true
      }

      feature {
        name       = "ANFUnixPermissions"
        registered = true
      }

      # Forms-based opt-in preview feature: registering it here is expected to fail.
      feature {
        name       = "ANFBackupPreview"
        registered = true
      }
    }

Terraform Configuration

We are deploying Azure NetApp Files using a module with the Terraform AzureRM provider and configuring the backup preview feature using the AzAPI provider.

Microsoft has recently released the Terraform AzAPI provider, which helps to break the barrier in the infrastructure as code (IaC) development process by enabling us to deploy features that are not yet released in the AzureRM provider. The definition, taken from the provider's GitHub page, is quite clear:

The AzAPI provider is a very thin layer on top of the Azure ARM REST APIs. This new provider can be used to authenticate to and manage Azure resources and functionality using the Azure Resource Manager APIs directly.

The code structure we have used looks like the sample below. If you are using Terraform Cloud, you can instead consume modules from the private registry. For this article, we are using local modules.

    ANF Repo
    |_Modules
        |_ANF_Pool
        |   |_ main.tf
        |   |_ variables.tf
        |   |_ outputs.tf
        |   |_ ANF_Volume
        |       |_ main.tf
        |       |_ variables.tf
        |       |_ outputs.tf
    |_ main.tf
    |_ providers.tf
    |_ variables.tf
    |_ outputs.tf

Terraform AzAPI and AzureRM Providers

We declared the Terraform provider configuration as follows.

    provider "azurerm" {
      skip_provider_registration = true
      features {}
    }

    provider "azapi" {
    }

    terraform {
      required_providers {
        azurerm = {
          source  = "hashicorp/azurerm"
          version = "~> 3.00"
        }
        azapi = {
          source = "azure/azapi"
        }
      }
    }

Declaring the Azure NetApp Files infrastructure

To create the Azure NetApp Files infrastructure, we will be declaring and deploying the following resources:

- NetApp account

- capacity pool

- volume

- export policy which contains one or more export rules that provide client access rules

    resource "azurerm_netapp_account" "analytics" {
      name                = "cero-netappaccount"
      location            = data.azurerm_resource_group.one.location
      resource_group_name = data.azurerm_resource_group.one.name
    }

    module "analytics_pools" {
      source              = "./modules/anf_pool"
      for_each            = local.pools
      account_name        = azurerm_netapp_account.analytics.name
      resource_group_name = azurerm_netapp_account.analytics.resource_group_name
      location            = azurerm_netapp_account.analytics.location
      volumes             = each.value
      tags                = var.tags
    }

To configure Azure NetApp Files policy-based backups for a volume there are some requirements. For more info about these requirements, please check requirements and considerations for Azure NetApp Files backup.

- A snapshot policy must be configured and enabled.

- The volume must be in a region where Azure NetApp Files backup is supported. In this example we are using the Australia East region.

After deployment, you will be able to see the backup icon as part of the NetApp account as below.

Azure NetApp Files backup policy creation

The creation of the backup policy is similar to a snapshot policy and has its own Terraform resource. The backup policy is a child element of the NetApp account. You’ll need to use the ‘azapi_resource’ resource type with the latest API version.

Note: It is helpful to install the Terraform AzAPI provider extension in VSCode, as it will make development easier with the IntelliSense completion.

The code looks like this:

    resource "azapi_resource" "backup_policy" {
      type      = "Microsoft.NetApp/netAppAccounts/backupPolicies@2022-01-01"
      parent_id = azurerm_netapp_account.analytics.id
      name      = "test"
      location  = "australiaeast"
      body = jsonencode({
        properties = {
          enabled              = true
          dailyBackupsToKeep   = 1
          weeklyBackupsToKeep  = 0
          monthlyBackupsToKeep = 0
        }
      })
    }

Note: The ‘parent_id’ is the resource ID of the NetApp account.
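Because the AzAPI provider is a thin layer over the ARM REST APIs, the backup policy declaration above corresponds roughly to a single PUT request against the NetApp account. The Python sketch below only builds that request (it does not send it) to make the mapping from `type`, `parent_id`, `name`, and `body` visible. The function name and the subscription and resource group values are hypothetical placeholders, and the exact request the provider issues may differ in detail.

```python
import json

ARM_ENDPOINT = "https://management.azure.com"


def backup_policy_request(account_id, name, location, api_version="2022-01-01"):
    """Build (method, url, body) for creating a backup policy under a NetApp account.

    account_id is the ARM resource ID of the NetApp account (the 'parent_id').
    The child collection name 'backupPolicies' comes from the resource type.
    """
    url = f"{ARM_ENDPOINT}{account_id}/backupPolicies/{name}?api-version={api_version}"
    body = {
        "location": location,
        "properties": {
            "enabled": True,
            "dailyBackupsToKeep": 1,
            "weeklyBackupsToKeep": 0,
            "monthlyBackupsToKeep": 0,
        },
    }
    return "PUT", url, json.dumps(body)


# Placeholder IDs for illustration only.
account = (
    "/subscriptions/00000000-0000-0000-0000-000000000000"
    "/resourceGroups/example-rg/providers/Microsoft.NetApp"
    "/netAppAccounts/cero-netappaccount"
)
method, url, body = backup_policy_request(account, "test", "australiaeast")
print(method, url)
print(body)
```

Seeing the request in this form can help when checking a Terraform `body` against the REST API reference for the chosen API version.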

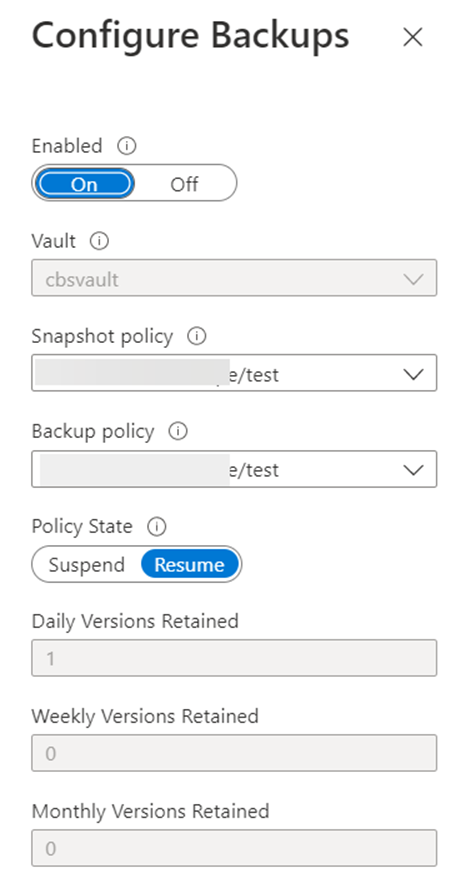

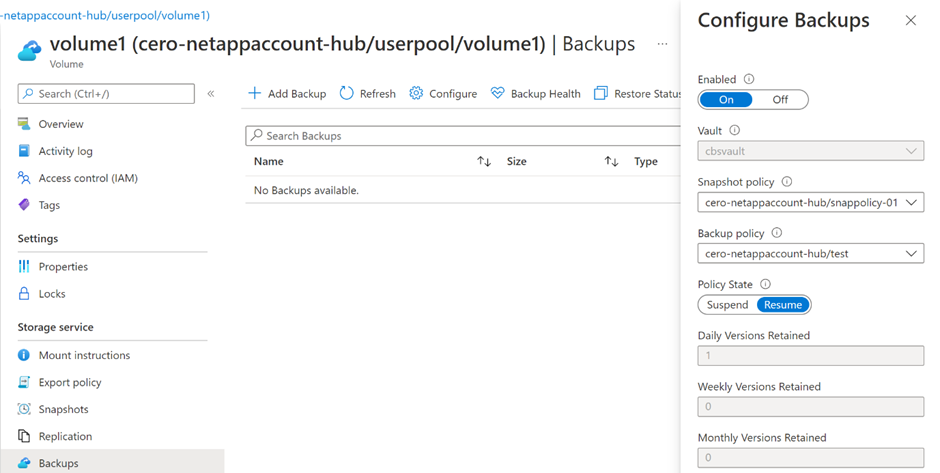

Because we are deploying this in the Australia East region, which has support for availability zones, the Azure storage account used will be configured with zone-redundant storage (ZRS), as documented under Requirements and considerations for Azure NetApp Files backup. In the Azure Portal, within the volume context, it will look like the following:

Note: Currently, Azure NetApp Files backup supports backing up the daily, weekly, and monthly local snapshots created by the associated snapshot policy to the Azure storage account.

The first snapshot created when the backup feature is enabled is called a baseline snapshot, and its name includes the prefix ‘snapmirror’.

Assigning a backup policy to an Azure NetApp Files volume

The next step in the process is to assign the backup policy to an Azure NetApp Files volume. Once again, as this is not yet supported by the AzureRM provider, we will use `azapi_update_resource`, which allows us to manage the properties we need on the existing volume. It also uses the same authentication methods as the AzureRM provider. The configuration looks like the following, where the data protection block is added to the volume configuration.

    resource "azapi_update_resource" "vol_backup" {
      type        = "Microsoft.NetApp/netAppAccounts/capacityPools/volumes@2021-10-01"
      resource_id = module.analytics_pools["pool1"].volumes.volume1.volume.id
      body = jsonencode({
        properties = {
          dataProtection = {
            backup = {
              backupEnabled  = true
              backupPolicyId = azapi_resource.backup_policy.id
              policyEnforced = true
            }
          }
          unixPermissions = "0740",
          exportPolicy = {
            rules = [{
              ruleIndex = 1,
              chownMode = "unRestricted"
            }]
          }
        }
      })
    }
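Conceptually, `azapi_update_resource` manages a subset of an existing resource's properties: the properties given in `body` are overlaid on what the resource already has, and everything else is left alone. The Python sketch below illustrates that overlay idea with a recursive merge. It is an illustration under assumptions, not the provider's implementation: the `merge_properties` helper, the sample volume values, and the choice to replace lists wholesale are all hypothetical.

```python
import copy


def merge_properties(existing, patch):
    """Recursively overlay `patch` on a copy of `existing`.

    Nested dicts are merged key by key. Any other value in `patch`
    (including lists) replaces the existing value; this list handling
    is a simplifying assumption of the sketch.
    """
    merged = copy.deepcopy(existing)
    for key, value in patch.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_properties(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Hypothetical current state of the volume (illustrative values only).
existing_volume = {
    "properties": {
        "serviceLevel": "Standard",
        "dataProtection": {
            "snapshot": {"snapshotPolicyId": "<snapshot-policy-id>"},
        },
    }
}

# The part of the volume we want to manage, mirroring the Terraform body.
backup_patch = {
    "properties": {
        "dataProtection": {
            "backup": {
                "backupEnabled": True,
                "backupPolicyId": "<backup-policy-id>",
                "policyEnforced": True,
            }
        }
    }
}

updated = merge_properties(existing_volume, backup_patch)
print(updated["properties"]["dataProtection"])
```

In this sketch the snapshot settings survive the update while the backup block is added next to them, which is the behavior the data protection block in the configuration relies on.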

The data protection policy will look like the screenshot below indicating the specified volume is fully protected within the region.

AzAPI to AzureRM migration

At some point, the resources created using the AzAPI provider will become available in the AzureRM provider, which is the recommended way to provision infrastructure as code in Azure. To make code migration a bit easier, Microsoft has provided the AzAPI2AzureRM migration tool.

Summary

The Terraform AzAPI provider is a tool to deploy Azure features that have not yet been integrated into the AzureRM Terraform provider. As we see more adoption of preview features in Azure NetApp Files, this new functionality will give us deployment support to manage zero-day and preview features, such as Azure NetApp Files backup and more.

Additional Information

- https://learn.microsoft.com/azure/azure-netapp-files

- https://learn.microsoft.com/azure/azure-netapp-files/backup-introduction

- https://learn.microsoft.com/azure/azure-netapp-files/backup-requirements-considerations

- https://learn.microsoft.com/azure/developer/terraform/overview-azapi-provider#azapi2azurerm-migration-tool

- https://registry.terraform.io/providers/hashicorp/azurerm

- https://registry.terraform.io/providers/Azure/azapi

- https://github.com/Azure/terraform-provider-azapi

by Contributed | Oct 20, 2022 | Azure, Business, Dynamics 365, Microsoft 365, Technology


Commercial and public sector organizations continue to look for new ways to advance their goals, improve efficiencies, and create positive employee experiences. The rise of the digital workforce and the current economic environment compels organizations to utilize public cloud applications to benefit from efficiency and cost reduction.

The post Microsoft 365 expands data residency commitments and capabilities appeared first on Microsoft 365 Blog.


by Contributed | Oct 19, 2022 | Technology


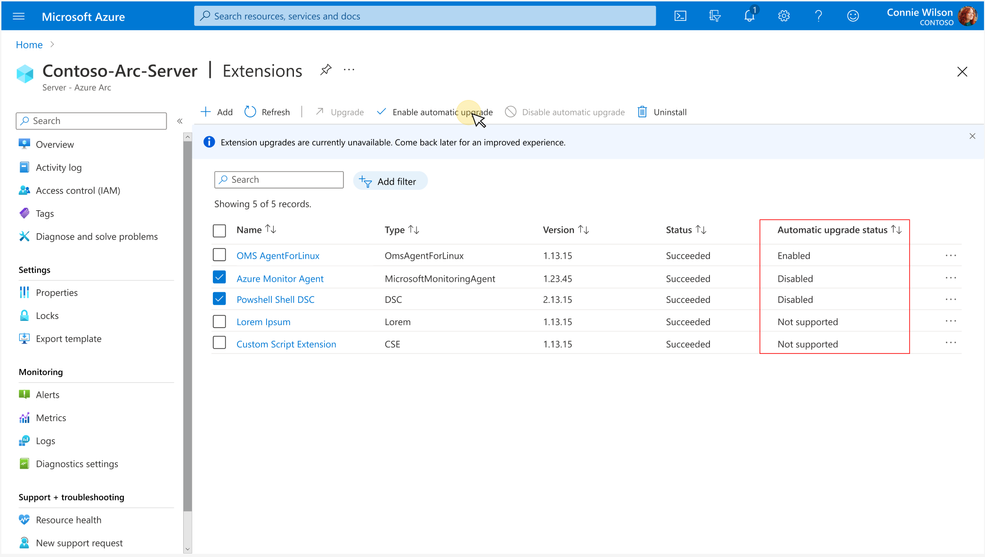

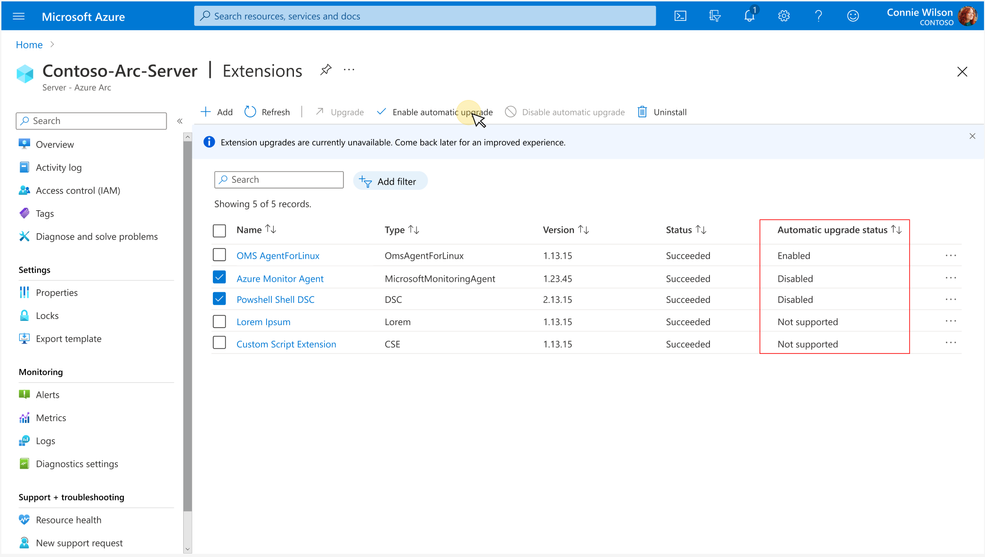

The Azure Arc team is excited to announce the general availability of Automatic VM extension upgrades for Azure Arc-enabled servers. VM extensions allow customers to easily include additional capabilities on their Azure Arc-enabled servers. Extension capabilities range from collecting log data with Azure Monitor to extending your security posture with Azure Defender to deploying a hybrid runbook worker on Azure Automation. Over time, these VM extensions get updated with security enhancements and new functionality. Maintaining high availability of these services during these upgrades can be a challenging, manual task. The complexity only grows as the scale of your service increases.

With Automatic VM extension upgrades, extensions are automatically upgraded by Azure Arc whenever a new version of an extension is published. Auto extension upgrade is designed to minimize service disruption of workloads during upgrades even at high scale and to automatically protect customers against zero-day & critical vulnerabilities.

How does this work?

Gone are the days of manually checking for and scheduling updates to the VM Extensions used by your Azure Arc-enabled servers. When a new version of an extension is published, Azure will automatically check to see if the extension is installed on any of your Azure Arc-enabled servers. If the extension is installed, and you’ve opted into automatic upgrades, your extension will be queued for an upgrade.

The upgrades across all eligible servers are rolled out in multiple iterations, where each iteration contains a subset of servers (about 20% of all eligible servers). Each iteration has a randomly selected set of servers and can contain servers from one or more Azure regions. During the upgrade, the latest version of the extension is downloaded to each server, the current version is removed, and finally the latest version is installed. Once all the extensions in the current iteration are upgraded, the next iteration begins. If the upgrade fails on any server, a rollback to the previous stable extension version is triggered immediately. This removes the extension and installs the last stable version of the extension. The rolled-back server is then included in the next iteration to retry the upgrade. You’ll see an event in the Azure Activity Log when an extension upgrade is initiated.
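The rollout described above can be sketched in a few lines of Python. This is only a toy model meant to make the batching and retry logic concrete: the function names, the `upgrade` callback, and the server names are hypothetical, and the real service's selection and scheduling are not documented here.

```python
import math
import random


def plan_iterations(servers, fraction=0.2, seed=None):
    """Shuffle the servers and split them into batches of about `fraction` each."""
    rng = random.Random(seed)
    shuffled = list(servers)
    rng.shuffle(shuffled)
    size = max(1, math.ceil(len(shuffled) * fraction))
    return [shuffled[i:i + size] for i in range(0, len(shuffled), size)]


def roll_out(servers, upgrade, fraction=0.2, seed=None):
    """Upgrade servers batch by batch.

    `upgrade(server)` returns True on success. A failed server is treated
    as rolled back and is retried together with the next batch.
    Returns (upgraded, still_pending).
    """
    upgraded = []
    retry = []
    for batch in plan_iterations(servers, fraction, seed):
        current = retry + batch
        retry = []
        for server in current:
            if upgrade(server):
                upgraded.append(server)
            else:
                retry.append(server)  # rolled back, retried in the next iteration
    return upgraded, retry


servers = [f"server-{i:02d}" for i in range(10)]
done, pending = roll_out(servers, upgrade=lambda s: True, seed=42)
print(len(done), "upgraded;", len(pending), "pending")
```

In this model a server that fails in the final batch is returned as still pending, since there is no later iteration in which to retry it.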

How do I get started?

No user action is required to enable automatic extension upgrade. When you deploy an extension to your server, automatic extension upgrades will be enabled by default. All your existing ARM templates, Azure Policies, and deployment scripts will honor the default selection. You can, however, opt out during or at any time after extension installation on the server.

After an extension installation, you can verify whether the extension is enabled for automatic upgrade by checking the “Automatic upgrade status” column in the Azure Portal. The Azure Portal can also be used to opt in to or out of auto upgrades by first selecting the extensions using the checkboxes and then clicking the “Enable Automatic Upgrade” or “Disable Automatic Upgrade” button, respectively.

You can also use Azure CLI and Azure PowerShell to view the auto extension upgrade status and to opt in or opt out. You can learn more about this in our Azure documentation.

What extensions & regions are supported?

A limited set of extensions is currently supported for auto extension upgrade. Extensions not yet supported for auto upgrade will show a status of “Not supported” under the “Automatic upgrade status” column. You can also refer to the Azure documentation for the complete list of supported extensions.

All public Azure regions are currently supported. Azure Arc-enabled servers connected to any public Azure region are eligible for automatic upgrades.

Upcoming enhancements

We will gradually add support for many more of the extensions available on Azure Arc-enabled servers.

by Contributed | Oct 18, 2022 | Technology


The Challenge

Five years ago, employee satisfaction with finding information within the company was very low. It was the lowest-rated IT service among all those we surveyed. Related surveys done by other teams supported this; for instance, our software engineers rated “finding information” as one of the most wasteful and frustrating activities in their job, costing the company thousands of person-years of productivity.

A project team was formed to improve this. In the years since we have pursued:

- Improving search result relevance

- Improving search content completeness

- Addressing content quality issues

The Microsoft Search Environment

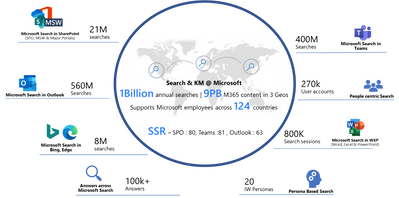

Microsoft has more than 300,000 employees working around the globe, and collectively, our employees use or access many petabytes of content as they move through their workday. Within our employee base, there are many different personas who have widely varying search interests and use hundreds of content sources. Those content sources can be file shares, Microsoft SharePoint sites, documents and other files, and internal websites. Our employees also frequently access external websites, such as HR partners’ websites.

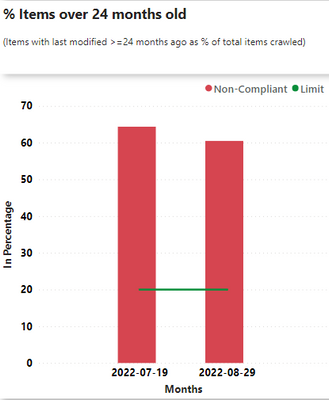

We began with a user satisfaction survey net score of 87 (on a scale of 1-200, with 200 being perfect). We have since reached a score of 117. Our goal is 130+.

What We’ve Done

Core to our progress has been:

- Understanding the needs of the different personas around the company. At Microsoft, personas are commonly clustered based on three factors: their organization within the company, their profession, and their geographic location. For example, a Microsoft seller working in Latin America has different search interests than an engineer working in China.

  - This has resulted in targeting bookmarks to certain security groups.

  - This has led to outreach to certain groups and connecting with efforts they had underway to build a custom search portal or improve content discoverability.

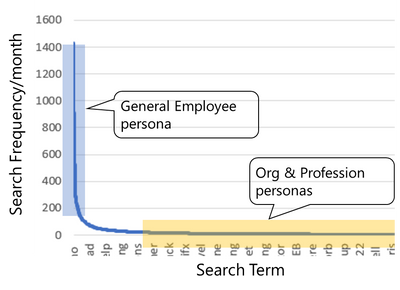

- Understanding typical search behavior. For instance, the diagram below shows that a relatively small number of search terms account for a large portion of the search activity.

  - We ensure bookmarks exist for most of the high frequency searches.

  - We look for commonalities in low frequency searches for potential content to connect in.

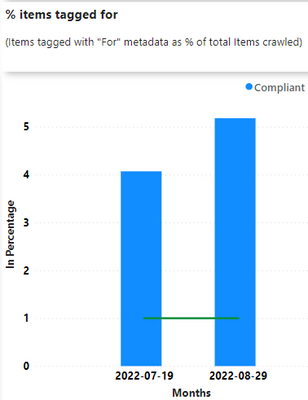

- Improving content quality. This has ranged from deleting old content to educating content owners on the most effective ways to add metadata to their content so it ranks better in search results. As part of our partnership with this community, we provide reporting on measurable aspects of content quality. We are in the early stages of pursuing quality improvement, with much to do in building a community across the company, measuring, and enabling metadata.

  - For those site owners actively using this reporting, we have seen a decrease of up to 70% in content with no recent updates.

- Utilizing improvements delivered in product, from improved relevance ranking to UX options like custom filters.

  - We have seen steady improvement in result ranking.

  - We also take advantage of custom filters and custom result KQL.

  - We use Viva Topics. Topics now receive the most clicks after Bookmarks.

- Making our search coverage more complete. Whether it’s via bookmarks or connectors, there are many ways of making the search experience feel like it covers the entire company.

  - We currently have 7 connections, one of which is custom built and brings in 10 different content sources. This content is clicked on in 5% of searches on our corporate SharePoint portal.

  - About half of our bookmarks (~600) point to URLs outside of the corporate boundary, such as third-party hosted services.

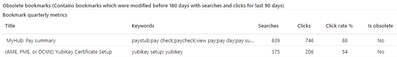

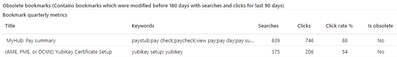

- Analytics. Using SharePoint extensions, we capture all search terms and click actions on our corporate portal’s search page. We’ve used these extensively in deciding what actions to take. The sample below is a report on bookmarks and their usage. This chart alone enabled us to remove 30% of our bookmarks due to lack of use.
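The observation in the list above that a small number of search terms account for a large portion of search activity is easy to check against a captured search log. The Python sketch below is one way to find the smallest set of top terms covering a target share of searches, which is a natural candidate list for bookmarks. The function name and the sample log are hypothetical, not Microsoft's data or tooling.

```python
from collections import Counter


def terms_covering(search_log, share=0.5):
    """Return the most frequent terms that together cover `share` of all searches."""
    counts = Counter(search_log)
    total = sum(counts.values())
    covered = 0
    head = []
    for term, n in counts.most_common():
        if covered >= share * total:
            break
        head.append(term)
        covered += n
    return head


# Made-up log: a few popular terms and a long tail of one-off searches.
log = (
    ["payroll"] * 50
    + ["benefits"] * 30
    + ["vpn"] * 10
    + [f"one-off query {i}" for i in range(10)]
)
print(terms_covering(log, share=0.8))
```

The terms left outside the returned list form the long tail, which is where the list above suggests looking for commonalities rather than adding individual bookmarks.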

In analyzing the click activity on our corporate portal, the most impactful elements are:

| Element | Impact |
| --- | --- |
| Bookmarks | Are clicked on in 45% of all searches and significantly shorten the duration of a search session. We currently have ~1200 bookmarks, making for quick discovery of the most commonly searched-for content and tools around the company. |
| Topics | Are clicked on in 5-7% of all searches. |
| Connectors | Are clicked on in 4-5% of all searches. |
| Metadata | Good metadata typically moves an item from the bottom of the first page to the top half, and from page 2 or later onto the bottom of page 1. |

Additional details will be published in later blog posts. If you are interested, details of exactly what the Microsoft search admin team does in its regular administrative activities are described here.

Business Impact of Search

As shown in the preceding table, roughly half of all enterprise-level searches benefit from one of the search admin capabilities. Employees who receive such benefits average a one-minute faster search completion time than those whose searches don’t use those capabilities. Across 1.2 million monthly enterprise-level searches at Microsoft, that time savings amounts to more than 8,000 hours a month of direct employee-productivity benefit.
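The productivity estimate above is simple arithmetic, and a short Python sketch makes the inputs explicit. The function name is hypothetical, and the 50% share is a round reading of "roughly half"; the paragraph's more conservative figure of more than 8,000 hours suggests a somewhat lower effective share or saving.

```python
def monthly_hours_saved(monthly_searches, benefiting_share, minutes_saved):
    """Hours saved per month = searches x share that benefit x minutes saved / 60."""
    return monthly_searches * benefiting_share * minutes_saved / 60


# Inputs from the paragraph above: 1.2 million searches, roughly half
# benefiting, one minute saved per benefiting search.
hours = monthly_hours_saved(1_200_000, 0.5, 1.0)
print(f"{hours:,.0f} hours saved per month")
```

With these round inputs the estimate comes to 10,000 hours a month, which is consistent with the "more than 8,000 hours" cited above.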

We achieve these results with an admin team of part-time individuals, investing a total of <300 hours per month doing direct search administration, responding to user requests to help find individual items, and maintaining a self-help site which advises employees on where and how to search best. We also have a larger improvement program striving to improve information discoverability across the company.

So 5 years into our improvement efforts, we have significantly improved user satisfaction, can now measure the productivity impact search is having, and have built numerous partnerships across the company that are expected to continue yielding improvements in the years to come.

The lesson from this work is that actively improving search has significant payback. The first step is to actively administer search, doing whatever helps the most popular searches to deliver the right results.
