by Contributed | Oct 31, 2022 | Technology

This article is contributed. See the original author and article here.

Microsoft partners like Rubrik and Standss deliver transact-capable offers, which allow you to purchase directly from Azure Marketplace. Learn about these offers below:


Rubrik for Microsoft 365: Safeguard your enterprise data from insider threats or ransomware with Rubrik’s air-gapped, immutable, access-controlled backups, while continuously monitoring and remediating data risks. Rubrik Zero Trust Data Security for Microsoft 365 offers unprecedented simplicity and performance for search and restore operations for Microsoft Exchange Online, OneDrive, SharePoint, and Teams.


SendGuard for Outlook (Microsoft 365): Prevent accidental data disclosure and improve your compliance posture with SendGuard for Outlook. The software scans for potentially sensitive, confidential, or non-compliant emails, then warns about or blocks them to prevent them from reaching unintended recipients. It prompts Microsoft Outlook users to review and confirm both attachments and recipients before an email with confidential information can be sent.


by Contributed | Oct 29, 2022 | Technology


When you start a point-in-time restore (PITR) on your Managed Instance, it is very useful to be able to track its progress. The Azure portal does not show many details about it, but we can use the Managed Instance logs to track it.

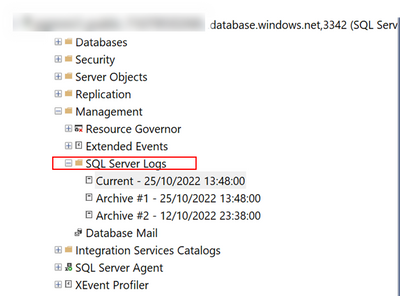

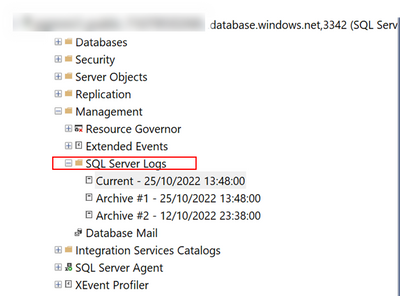

The way to do it is quite simple. You just need to connect to your MI using SSMS (SQL Server Management Studio) and open “SQL Server Logs”, which you will find under “Management”.

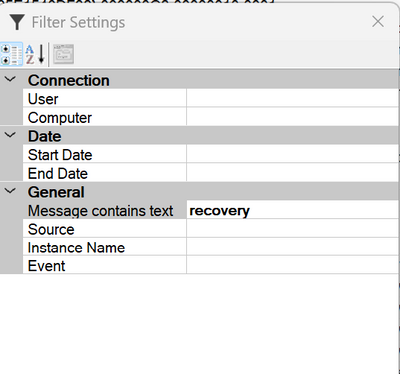

Once the logs are open, it is time to filter them to focus on the recovery process.

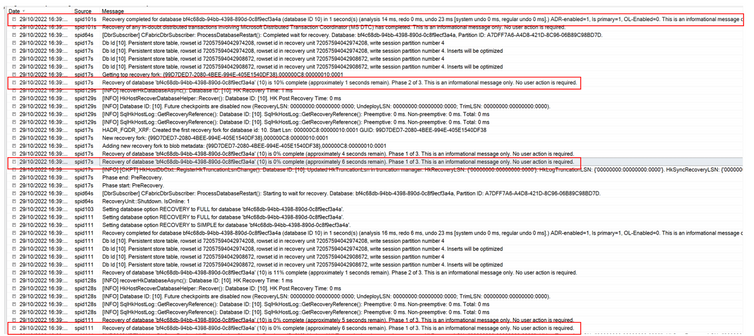

After applying the filter, you will be able to track restore progression.

Simple and effective.

Enjoy!

Paloma

by Contributed | Oct 29, 2022 | Technology


Hi,

AKS is taking up more and more space in the Azure landscape, and there are a few best practices you can follow to harden the environment and make it as secure as possible. As a preamble, remember that containers on the same host all share the kernel, which they reach through system calls, so the level of isolation in the container world is not as strong as with virtual machines, let alone physical hosts. Mistakes can quickly lead to security issues.

1. Hardening the application itself

This might sound obvious, but one of the best ways to defend against malicious attacks is to use bulletproof code. There is no way you’ll be 100% bulletproof, but a few steps can be taken to maximize robustness:

- Try to use up-to-date libraries in your code (NuGet, npm, etc.), because as you know, most of your code is actually not yours.

- Make sure that all input is validated and, if you are not using a framework with managed memory, that every memory allocation is kept under control. Many vulnerabilities are memory-related (buffer overflow, use-after-free, etc.).

- Rely on well-known security standards and do not invent your own stuff.

- Use static application security testing (SAST) tools, such as Snyk or Fortify, to perform static code analysis.

- Try to integrate security-related tests into your integration tests.

2. Hardening container images

I’ve seen countless environments where the Docker image itself is not hardened properly. I wrote a full blog post about this, so feel free to read it: https://techcommunity.microsoft.com/t5/azure-developer-community-blog/hardening-an-asp-net-container-running-on-kubernetes/ba-p/2542224. I took an ASP.NET code base as an example, but this is applicable to other languages. Here is a summary, in a nutshell:

- Do not expose ports below 1024, because binding to them requires extra capabilities (NET_BIND_SERVICE)

- Specify a user other than root

- Change ownership of the container’s file system
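As a rough illustration of the first two points, here is a minimal Python sketch that scans a Dockerfile's text for EXPOSE ports below 1024 and for a missing or root USER. It is a hypothetical helper (dockerfile_findings is not part of any tool); it assumes a stage without a USER instruction runs as root, even though a base image may set its own user, it only handles single-port EXPOSE entries (not ranges), and it does not check file ownership.

```python
# Hypothetical helper illustrating the image-hardening rules above; not part of any tool.
def dockerfile_findings(dockerfile: str) -> list[str]:
    findings = []
    user = None  # USER in effect for the current build stage
    for raw in dockerfile.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        instruction, _, args = line.partition(" ")
        instruction = instruction.upper()
        if instruction == "FROM":
            user = None  # each stage starts over (assumes the base image runs as root)
        elif instruction == "USER":
            user = args.strip()
        elif instruction == "EXPOSE":
            for spec in args.split():
                port = int(spec.split("/")[0])  # "8080/tcp" -> 8080; ranges not handled
                if port < 1024:
                    findings.append(f"privileged port exposed: {port}")
    # Only the final stage's USER matters for the resulting image.
    if user is None or user.split(":")[0] in ("root", "0"):
        findings.append("container runs as root")
    return findings


if __name__ == "__main__":
    sample = "FROM mcr.microsoft.com/dotnet/aspnet:6.0\nEXPOSE 80\n"
    print(dockerfile_findings(sample))
```

A real linter would also parse multi-line instructions and port ranges; the point here is only that both rules are easy to check automatically before an image is built.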

3. Scanning container images

Most of the time, we use base images to build our own images, and most of the time, these base images have vulnerabilities. Use specialized software such as Snyk, Falco, Microsoft Defender for Containers, etc. to identify them. Once they are identified, you should:

- Try to stick to the most up-to-date images as they often include security patches

- Try to use a different base image. Lightweight images, such as Alpine-based ones, are usually a good start because they embed fewer tools and libraries, so they are less likely to have vulnerabilities.

- Make a risk assessment against the remaining vulnerabilities and see if that’s really applicable to your use case. A vulnerability does not automatically mean that you are at risk. You might have some other mitigations in place that would prevent an exploit.

To push the shift-left principle to the maximum, you can use Snyk’s docker scan operation right from the developer’s machine to identify vulnerabilities early. Although Snyk is a paid product, you can scan a few images for free.

4. Hardening K8s deployments

In the same post as before (https://techcommunity.microsoft.com/t5/azure-developer-community-blog/hardening-an-asp-net-container-running-on-kubernetes/ba-p/2542224), I also explain how to harden the K8s deployment itself. In a nutshell:

- Make sure to drop all capabilities and only add the needed ones if any

- Do not use privileged containers or allow privilege escalation (set these values explicitly)

- Try to stick to a read-only file system whenever possible

- Specify a user/group other than root
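Expressed as a container securityContext, the four rules above might look like the following Python sketch, which builds the manifest fragment as a dict and checks an existing one. The function names, the default UID/GID of 10001, and the exact checks are illustrative assumptions, not an official validator; the field names themselves are the standard Kubernetes securityContext fields.

```python
import json

def hardened_security_context(uid: int = 10001, gid: int = 10001, add_caps=()) -> dict:
    """Container-level securityContext following the rules above (UID/GID are arbitrary non-root values)."""
    return {
        "privileged": False,
        "allowPrivilegeEscalation": False,  # explicit, rather than relying on defaults
        "readOnlyRootFilesystem": True,
        "runAsNonRoot": True,
        "runAsUser": uid,
        "runAsGroup": gid,
        "capabilities": {"drop": ["ALL"], "add": list(add_caps)},  # re-add only what is needed
    }

def security_context_violations(ctx: dict) -> list[str]:
    """List the rules that a securityContext does not satisfy explicitly."""
    problems = []
    if ctx.get("privileged") is not False:
        problems.append("privileged is not explicitly false")
    if ctx.get("allowPrivilegeEscalation") is not False:
        problems.append("allowPrivilegeEscalation is not explicitly false")
    if ctx.get("readOnlyRootFilesystem") is not True:
        problems.append("root file system is writable")
    if ctx.get("runAsNonRoot") is not True or ctx.get("runAsUser") == 0:
        problems.append("container may run as root")
    if "ALL" not in ctx.get("capabilities", {}).get("drop", []):
        problems.append("capabilities are not all dropped")
    return problems

if __name__ == "__main__":
    print(json.dumps({"securityContext": hardened_security_context()}, indent=2))
```

With a read-only root file system, applications that write temporary files usually need an emptyDir volume mounted at the paths they write to.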

5. Requests and limits declaration

Although this might not be seen as a potential security issue, not specifying memory requests and limits can lead to arbitrary eviction of other pods. Malicious users can take advantage of this to spread chaos within your cluster. So, you must always declare memory requests and limits. You can optionally declare CPU requests/limits, but this is not as important as memory.
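One way to enforce this in a CI pipeline is to check every container in a pod spec before deploying it. The Python sketch below is hypothetical (the function name and the decision to include init containers are assumptions) and works on a pod spec that has already been loaded into a dict.

```python
def containers_missing_memory_settings(pod_spec: dict) -> list[str]:
    """Names of containers that lack a memory request or a memory limit."""
    missing = []
    for container in pod_spec.get("initContainers", []) + pod_spec.get("containers", []):
        resources = container.get("resources", {})
        has_request = "memory" in resources.get("requests", {})
        has_limit = "memory" in resources.get("limits", {})
        if not (has_request and has_limit):
            missing.append(container["name"])
    return missing

if __name__ == "__main__":
    spec = {
        "containers": [
            {"name": "api", "resources": {"requests": {"memory": "256Mi"}, "limits": {"memory": "512Mi"}}},
            {"name": "sidecar"},  # no resources block at all
        ]
    }
    print(containers_missing_memory_settings(spec))
```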

6. Namespace-level logical isolation

K8s is a world where logical isolation takes precedence over physical isolation. So, whatever you do, you should make sure to adhere to the principle of least privilege through proper RBAC configuration, and use network policies to control traffic within the cluster and, potentially, traffic leaving it (egress). Remember that by default K8s is fully open, so every pod can talk to any other pod, whatever namespace it is located in. If you can’t live with internal logical isolation only, you can also segregate workloads into different node pools and leverage Azure networking features such as NSGs to control network traffic at another level. I wrote an entire blog post on this: AKS, the elephant in the hub & spoke room, deep dive.

6.1 RBAC

Role-based access control can be configured for both humans and systems, thanks to Azure AD and K8s RBAC. There are multiple flavors available for AKS. Whichever one you use, you should make sure to:

- Define groups and grant them permissions using K8s roles

- Define service accounts and let your applications leverage them

- Prefer namespace-scoped permissions rather than cluster-scope ones
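To make the first and third points concrete, here is a Python sketch that emits a namespace-scoped Role and a RoleBinding granting read access on pods to a group, wrapped in a Kubernetes List so that the JSON output can be piped to kubectl apply -f -. The role name, the verbs, and the group identifier are placeholders; with Azure AD integration, AKS group subjects are typically Azure AD group object IDs. Binding a service account works the same way, with a subject of kind ServiceAccount.

```python
import json

RBAC_API = "rbac.authorization.k8s.io"

def namespaced_read_access(namespace: str, group_id: str, role_name: str = "pod-reader") -> dict:
    role = {
        "apiVersion": f"{RBAC_API}/v1",
        "kind": "Role",  # Role (not ClusterRole) keeps the permission inside one namespace
        "metadata": {"name": role_name, "namespace": namespace},
        "rules": [{"apiGroups": [""], "resources": ["pods", "pods/log"], "verbs": ["get", "list", "watch"]}],
    }
    binding = {
        "apiVersion": f"{RBAC_API}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role_name}-binding", "namespace": namespace},
        "subjects": [{"kind": "Group", "name": group_id, "apiGroup": RBAC_API}],
        "roleRef": {"kind": "Role", "name": role_name, "apiGroup": RBAC_API},
    }
    return {"apiVersion": "v1", "kind": "List", "items": [role, binding]}

if __name__ == "__main__":
    # Placeholder group ID; replace it with your own.
    print(json.dumps(namespaced_read_access("team-a", "00000000-0000-0000-0000-000000000000"), indent=2))
```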

6.2 Namespace-scoped & global network policies

Traffic can be controlled using plain K8s network policies or tools such as Calico. Network policies can be used to control pod-level ingress/egress traffic.
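A common starting point is a default-deny policy per namespace, which reverses the open-by-default behavior: it selects every pod and declares both policy types without any allow rules. The Python sketch below builds that manifest as a dict and prints it as JSON, which kubectl accepts as well as YAML; the policy name is an arbitrary choice. Note that NetworkPolicy objects are only enforced when the cluster runs a network policy engine, such as Azure Network Policy Manager or Calico on AKS.

```python
import json

def default_deny_all(namespace: str) -> dict:
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-all", "namespace": namespace},
        "spec": {
            "podSelector": {},  # empty selector: applies to every pod in the namespace
            # Both types declared with no ingress/egress rules, so all traffic is denied
            "policyTypes": ["Ingress", "Egress"],
        },
    }

if __name__ == "__main__":
    print(json.dumps(default_deny_all("team-a"), indent=2))
```

Once this is applied, every allowed flow has to be opened explicitly with additional policies; denying egress also blocks DNS lookups until you allow them.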

7. Layer 7 protection

Because defense-in-depth relies on multiple ways of validating whether an ongoing operation is legitimate, you should also use layer-7 protection, such as a service mesh or Dapr, which has some features that overlap with service meshes. The main difference between Dapr and a true service mesh is that applications using Dapr must be Dapr-aware, while they don’t need to know anything about a service mesh. The purpose of layer-7 protection is to enable mTLS and fine-grained authorization, in order to specify who can talk to whom (on top of network policies). When dealing with APIs, most solutions today allow fine-grained authorization down to the operation level. Dapr and service meshes come with many more juicy features that show what a truly cloud-native environment looks like.
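Taking Istio as one example of a service mesh (Dapr and other meshes use their own configuration), the Python sketch below builds two Istio resources: a PeerAuthentication that requires mTLS across a namespace, and an AuthorizationPolicy that only lets one service account call GET on one path. The workload label, service account, and path are hypothetical placeholders, and the sketch assumes the default cluster.local trust domain and a caller running in the same namespace.

```python
import json

ISTIO_SECURITY_API = "security.istio.io/v1beta1"

def strict_mtls(namespace: str) -> dict:
    return {
        "apiVersion": ISTIO_SECURITY_API,
        "kind": "PeerAuthentication",
        "metadata": {"name": "default", "namespace": namespace},
        "spec": {"mtls": {"mode": "STRICT"}},  # reject plain-text traffic to sidecar-injected pods
    }

def allow_get_from(namespace: str, app: str, caller_sa: str, path: str) -> dict:
    # Istio principals look like <trust domain>/ns/<namespace>/sa/<service account>;
    # this assumes the caller lives in the same namespace as the target workload.
    principal = f"cluster.local/ns/{namespace}/sa/{caller_sa}"
    return {
        "apiVersion": ISTIO_SECURITY_API,
        "kind": "AuthorizationPolicy",
        "metadata": {"name": f"{app}-allow-{caller_sa}", "namespace": namespace},
        "spec": {
            "selector": {"matchLabels": {"app": app}},
            "action": "ALLOW",
            "rules": [{
                "from": [{"source": {"principals": [principal]}}],
                "to": [{"operation": {"methods": ["GET"], "paths": [path]}}],
            }],
        },
    }

if __name__ == "__main__":
    docs = [strict_mtls("team-a"), allow_get_from("team-a", "orders", "frontend", "/api/orders/*")]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": docs}, indent=2))
```

Once an ALLOW policy selects a workload, Istio denies requests that match none of its rules, which is what provides the operation-level scoping.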

8. Azure Policy

Azure Policy is the cornerstone of tangible governance in Azure in general, and AKS is no exception. With Azure Policy, you get a continuous assessment of your cluster’s configuration as well as a way to control what can be deployed to the cluster. Azure Policy leverages Gatekeeper to deny non-compliant deployments. You can start smoothly in non-production by setting everything to Audit mode, then switch to Deny in production. Azure Policy also lets you allow only known registries, so that images cannot be pulled from just anywhere.
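The registry restriction boils down to a simple rule: extract the registry host from each image reference and compare it with an allowed list. The Python sketch below illustrates that logic locally, for example as a pre-deployment check; it is not how Azure Policy or Gatekeeper implement it, and the registry names are placeholders. It follows Docker’s convention that the first path component is a registry only if it contains a dot or a colon or is localhost; otherwise Docker Hub is implied.

```python
def registry_of(image: str) -> str:
    first, sep, _ = image.partition("/")
    # Docker convention: "nginx:1.25" or "library/nginx" refer to Docker Hub
    if not sep or not ("." in first or ":" in first or first == "localhost"):
        return "docker.io"
    return first

def image_allowed(image: str, allowed_registries: set[str]) -> bool:
    # Exact host comparison: a prefix test would wrongly accept "myacr.azurecr.io.evil.example/app"
    return registry_of(image) in allowed_registries

if __name__ == "__main__":
    allowed = {"myacr.azurecr.io"}
    for image in ["myacr.azurecr.io/web/api:1.2.0", "nginx:1.25", "myacr.azurecr.io.evil.example/app"]:
        print(image, image_allowed(image, allowed))
```

Comparing the full host, rather than checking whether the image string starts with an allowed name, is the design choice that matters here.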

9. Microsoft Defender for Containers

Microsoft recently merged Defender for Registries and Defender for Kubernetes into Defender for Containers. There is a little overlap with Azure Policy, but Defender also deploys DaemonSets that check for real-time threats. All incidents are categorized using the popular MITRE ATT&CK framework. One of the selling points is that Defender can handle any cluster, whether hosted on Azure or not, so it is a multi-cloud solution. On top of assessing configuration and threats, Defender also ships with a built-in image scanning process that leverages Qualys behind the scenes. Images are scanned upon push operations as well as continuously, to detect newer vulnerabilities discovered after the push. Of course, there are other third-party tools available, such as Prisma Cloud, which you might be tempted to use, especially if you already run Palo Alto NVAs.

10. Private API server

This one is easy. Make sure to isolate the API server from the internet. You can easily do that using Azure Private Link (a private cluster). If you can’t do it for some reason, at least restrict access to authorized IP address ranges.
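AKS enforces authorized IP ranges on the API server side, so there is nothing to implement yourself; still, when you maintain the CIDR list, it can help to check which addresses it actually admits. The Python sketch below performs that membership test with the standard ipaddress module; the ranges shown are documentation addresses, not recommendations.

```python
import ipaddress

def is_authorized(client_ip: str, authorized_ranges: list[str]) -> bool:
    """True if client_ip falls inside any of the authorized CIDR ranges."""
    address = ipaddress.ip_address(client_ip)
    # ip_network raises ValueError for malformed CIDRs or ones with host bits set
    return any(address in ipaddress.ip_network(cidr) for cidr in authorized_ranges)

if __name__ == "__main__":
    ranges = ["203.0.113.0/24", "198.51.100.10/32"]  # RFC 5737 documentation ranges
    for ip in ["203.0.113.42", "198.51.100.10", "192.0.2.1"]:
        print(ip, is_authorized(ip, ranges))
```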

11. Cluster boundaries

Of course, an AKS cluster is by design inside an Azure Virtual Network. The cluster can expose some workloads outside through the use of an ingress controller, and anything can potentially go outside of the cluster, through an egress controller and/or an appliance controlling the network traffic.

11.1 Ingress

Ingress can come from either internet-facing callers or internal callers. A best practice is to isolate the AKS ingress controller (NGINX, Traefik, AGIC, etc.) from the internet by linking it to an internal load balancer. Traffic that must be exposed to the internet should go through Application Gateway, Front Door (using Private Link Service), or another well-known non-Azure solution such as Barracuda or F5. You should also distinguish pure UI traffic from API traffic: API traffic should additionally be filtered by an API gateway such as Azure APIM, Kong, or Ambassador. For “basic” scenarios, you might also offload JWT validation to a service mesh, but it will not offer comparable features, so you should definitely consider a real API gateway for internet-facing APIs.
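On AKS, a Service of type LoadBalancer gets a private front-end IP instead of a public one when it carries the service.beta.kubernetes.io/azure-load-balancer-internal annotation. The Python sketch below builds such a Service for an ingress controller; the name, selector, and ports are placeholders, and in practice ingress controllers installed with Helm usually let you set this annotation through chart values instead.

```python
import json

def internal_ingress_service(namespace: str, selector: dict) -> dict:
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {
            "name": "ingress-internal",
            "namespace": namespace,
            "annotations": {
                # Ask the Azure cloud provider for an internal load balancer (private IP in the VNet)
                "service.beta.kubernetes.io/azure-load-balancer-internal": "true",
            },
        },
        "spec": {
            "type": "LoadBalancer",
            "selector": selector,
            # The container listens on 8443, keeping it off privileged ports (see section 2)
            "ports": [{"name": "https", "port": 443, "targetPort": 8443, "protocol": "TCP"}],
        },
    }

if __name__ == "__main__":
    print(json.dumps(internal_ingress_service("ingress", {"app": "ingress-nginx"}), indent=2))
```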

11.2 Egress

Pod-level egress traffic can be controlled by network policies or Calico, but also by most service meshes. Istio even has a dedicated egress gateway, which can act as a proxy. On top of handling egress from within the cluster itself, it is a best practice to have a next-gen firewall waiting outside, such as Azure Firewall or third-party Network Virtual Appliances (NVAs).
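At the network-policy level, a typical pattern is to allow only the egress a namespace really needs. The Python sketch below builds a policy that lets every pod in a namespace reach DNS in kube-system and nothing else, assuming a default-deny egress policy is also in place and that the cluster sets the kubernetes.io/metadata.name namespace label (recent Kubernetes versions add it automatically). Traffic leaving the cluster would still go through the firewall or NVA mentioned above.

```python
import json

def allow_dns_egress(namespace: str) -> dict:
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-dns-egress", "namespace": namespace},
        "spec": {
            "podSelector": {},          # every pod in the namespace
            "policyTypes": ["Egress"],  # this policy only constrains outbound traffic
            "egress": [{
                # Only pods in kube-system (where cluster DNS runs) are reachable...
                "to": [{"namespaceSelector": {"matchLabels": {"kubernetes.io/metadata.name": "kube-system"}}}],
                # ...and only on the DNS ports
                "ports": [{"protocol": "UDP", "port": 53}, {"protocol": "TCP", "port": 53}],
            }],
        },
    }

if __name__ == "__main__":
    print(json.dumps(allow_dns_egress("team-a"), indent=2))
```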

12. Keep consistency across clusters and across data centers

You start with one cluster, then two, then a hundred. To keep cluster configurations consistent, you can leverage Azure Policy. If your clusters run on-premises or in another cloud, you can also use Azure Arc. Microsoft recently launched Azure Kubernetes Fleet Manager, which I haven’t tried yet but is surely something to keep an eye on.

Conclusion

The above tips are by no means exhaustive, but if you start with them, you should be in a better position when it comes to handling container security. There is a myriad of tools on the market to better handle container security. Azure has some built-in capabilities, and it is up to you to decide whether you prefer best of breed or best of suite. Note that more and more Azure-native tools span beyond Azure itself, so your single pane of glass could be Azure.

by Contributed | Oct 28, 2022 | Technology


Adoption and usage of data governance tools are critical, and a lack of user engagement can be a serious blocker for the whole organization in its data governance journey. When it comes to solution adoption, Microsoft Purview fortunately comes with the built-in ability to analyze it.

This functionality is very useful to answer the following questions:

- Are users actively using Microsoft Purview?

- How is usage changing over time?

- What types of activity are users performing, e.g., data curation or searching for data?

- Which assets are the most viewed ones in an organization?

- What are we missing in the catalog?

How to track the adoption?

Adoption tracking is part of the Data estate insights functionality in Microsoft Purview. To use it, a user needs to have the appropriate permissions assigned. There is a dedicated Insights Reader role that the Data Curator of the root collection can assign to any Data Map user. More information about the required permissions can be found in Permissions for Data Estate Insights in Microsoft Purview – Microsoft Purview | Microsoft Docs.

Let’s start with some basics

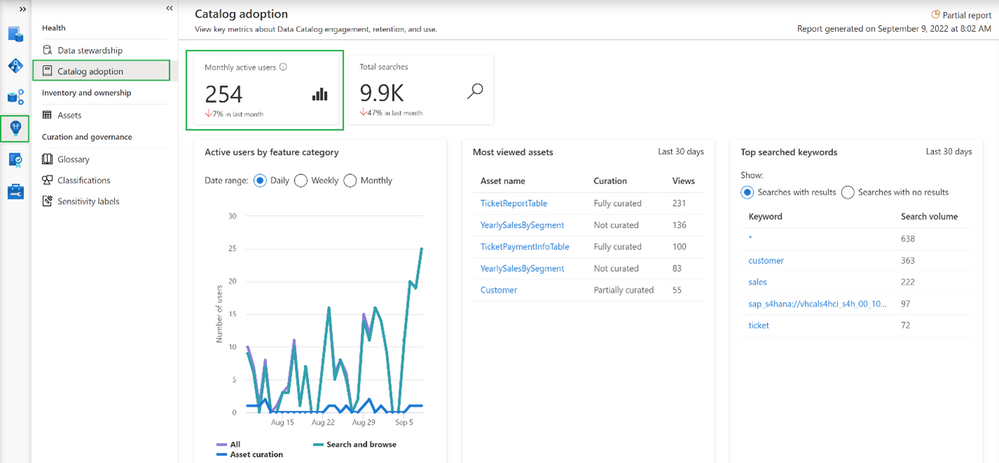

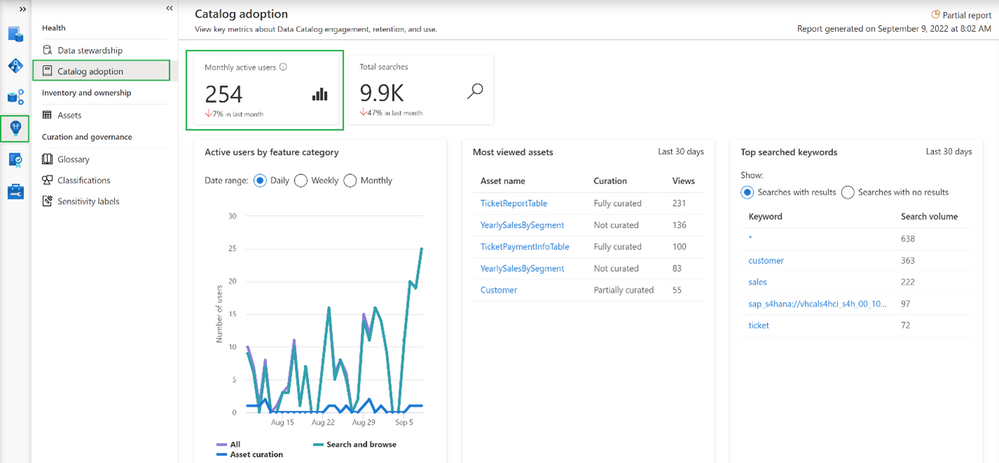

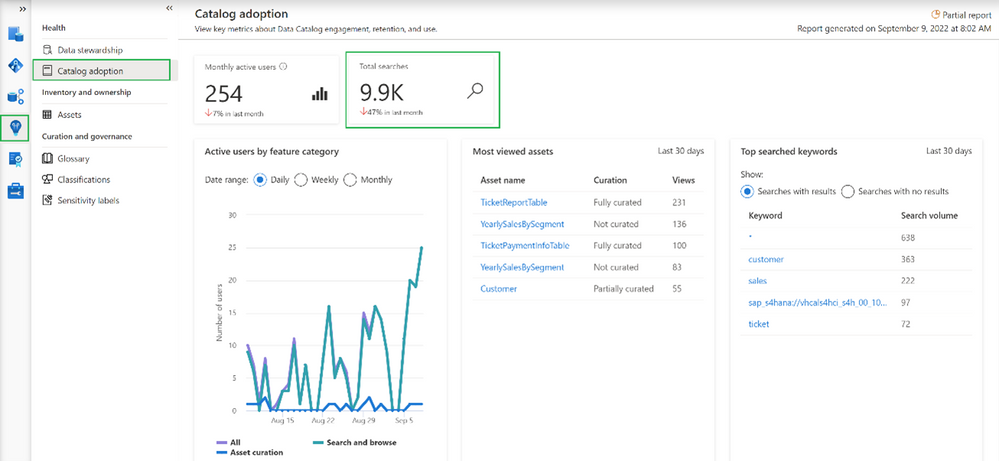

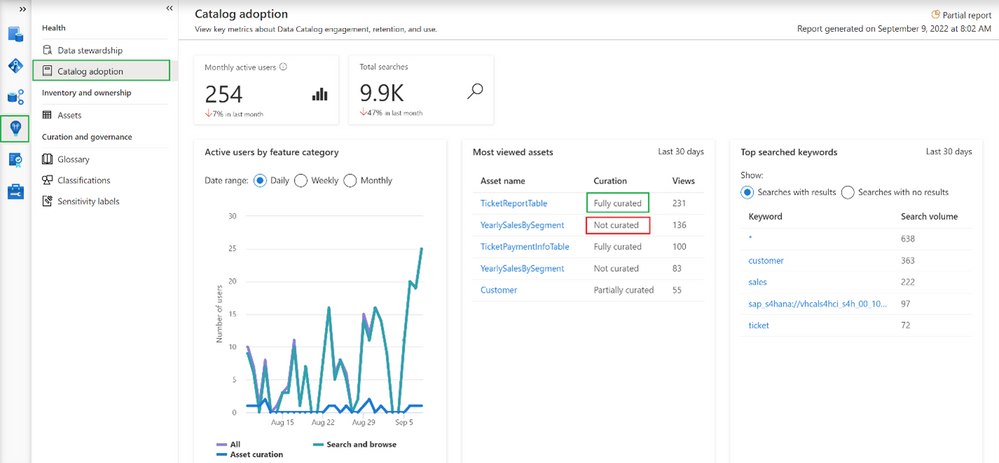

Going into the Insights area and choosing Catalog adoption, we can find information about monthly active users.

In our case, we can see that we currently have 254 distinct users and that the number dropped 7% in the last month. Microsoft Purview counts as active any user who took at least one intentional action across all feature categories in the Data Catalog within a 28-day period. It’s also possible to determine how active our users are overall, as Microsoft Purview aggregates the total number of searches performed by users.
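The active-user definition above translates directly into code. The Python sketch below counts distinct users with at least one action in a 28-day window and computes a month-over-month change; the event format of (user, date) pairs and the choice to include the as-of date in the window are assumptions, since Purview computes these figures for you.

```python
from datetime import date, timedelta

def active_users(events, as_of: date, window_days: int = 28) -> int:
    """Distinct users with at least one action in the window_days ending on as_of (inclusive)."""
    start = as_of - timedelta(days=window_days - 1)
    return len({user for user, day in events if start <= day <= as_of})

def percent_change(previous: int, current: int) -> float:
    # Multiplying before dividing avoids float noise in cases like a 100 -> 93 drop (exactly -7.0)
    return (current - previous) * 100 / previous

if __name__ == "__main__":
    events = [("ann", date(2022, 10, 1)), ("bob", date(2022, 10, 20)),
              ("ann", date(2022, 10, 25)), ("cy", date(2022, 9, 1))]
    print(active_users(events, as_of=date(2022, 10, 28)))
```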

Note

Data estate insights functionality in Microsoft Purview shows information based on user permissions, which means the data seen in Insights is limited to the collections the user has permission to access. In this case, the user viewing the insights has access to all collections, so the information visible in catalog adoption reflects the overall number of users in the organization.

Even more information about catalog users

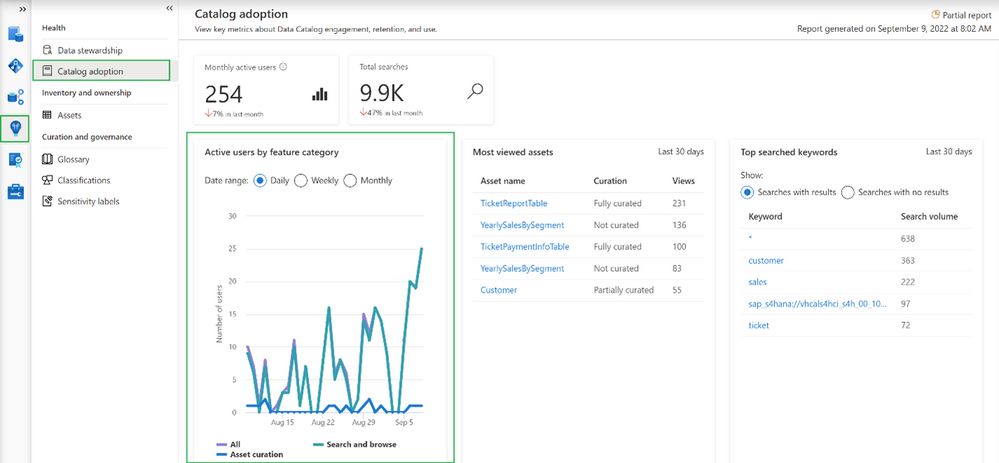

More adoption data means more insights into how the catalog is used.

This option shows the breakdown of active users by feature category. Feature categories are divided into:

- All (which covers all kinds of users)

- Search and browse (users who are reading data from the catalog by searching for it or by directly browsing catalog assets)

- Asset curation (activities related to data curation like assigning data owner, description, applying classification, etc.)

Information on the chart can be shown in Daily/Weekly/Monthly time range.
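The breakdown can be reproduced with a simple grouping: bucket each action by day, ISO week, or month, then count distinct users per bucket and category, also counting every user under All. The Python sketch below assumes hypothetical (user, category, date) tuples; it illustrates the aggregation and is not Purview’s implementation.

```python
from collections import defaultdict
from datetime import date

def bucket(day: date, period: str) -> str:
    if period == "daily":
        return day.isoformat()
    if period == "weekly":
        year, week, _ = day.isocalendar()
        return f"{year}-W{week:02d}"
    if period == "monthly":
        return f"{day.year}-{day.month:02d}"
    raise ValueError(f"unknown period: {period}")

def active_users_by_category(events, period: str = "weekly") -> dict:
    """events: (user, category, day) tuples -> {(bucket, category): distinct user count}."""
    users = defaultdict(set)
    for user, category, day in events:
        key = bucket(day, period)
        users[(key, category)].add(user)
        users[(key, "All")].add(user)  # "All" covers every category
    return {key: len(members) for key, members in users.items()}
```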

Increase catalog adoption by giving users more precise information…

Among the information that you get as part of adoption reports is which assets are the most viewed in the organization. If you are wondering why this is important, have a look at the following summary:

The most viewed asset (231 views), “TicketReportTable”, is fully curated (more about curation in the second part of the article), which means the asset has an assigned owner, a description, and at least one classification. On the other hand, the second most viewed asset (136 views in the last 30 days), “YearlySalesBySegment”, is not curated at all. This can lead to situations where users access the catalog and get poor-quality information. As a result, users may step back from using the data catalog, and adoption will drop. Based on such insights, you can focus your curation efforts and make sure users get only high-quality information about the data in your organization.
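Using the article’s definition of a fully curated asset (an owner, a description, and at least one classification), a curation backlog can be ordered by views so that stewards fix the most visible gaps first. In the Python sketch below, the asset records and their owner, description, and classification values are hypothetical; only the names and view counts come from the example above.

```python
def is_fully_curated(asset: dict) -> bool:
    return (bool(asset.get("owner"))
            and bool(asset.get("description"))
            and len(asset.get("classifications", [])) >= 1)

def curation_backlog(assets: list[dict]) -> list[str]:
    """Names of assets that are not fully curated, most viewed first."""
    pending = [a for a in assets if not is_fully_curated(a)]
    return [a["name"] for a in sorted(pending, key=lambda a: a["views"], reverse=True)]

if __name__ == "__main__":
    assets = [
        {"name": "TicketReportTable", "views": 231, "owner": "jane@contoso.com",  # hypothetical values
         "description": "Support tickets", "classifications": ["Email Address"]},
        {"name": "YearlySalesBySegment", "views": 136},  # not curated at all
    ]
    print(curation_backlog(assets))
```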

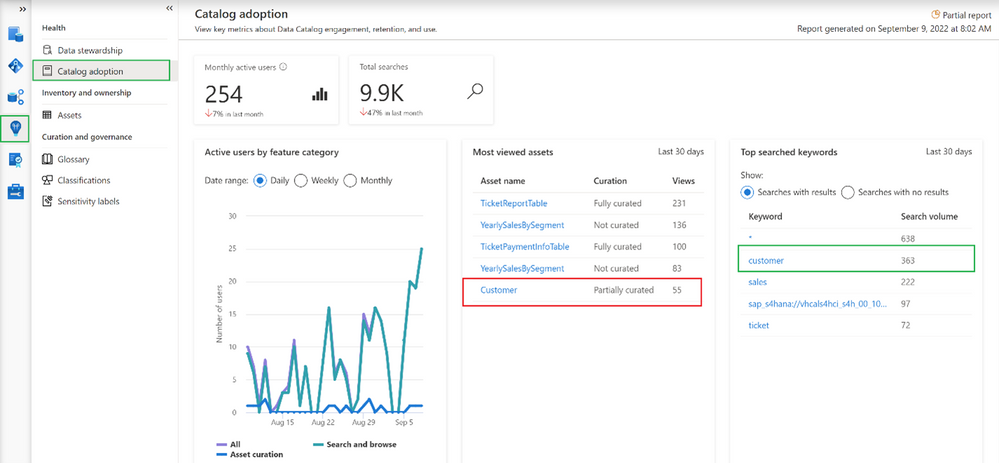

Adoption insights available in Microsoft Purview also give the ability to identify the most searched keywords.

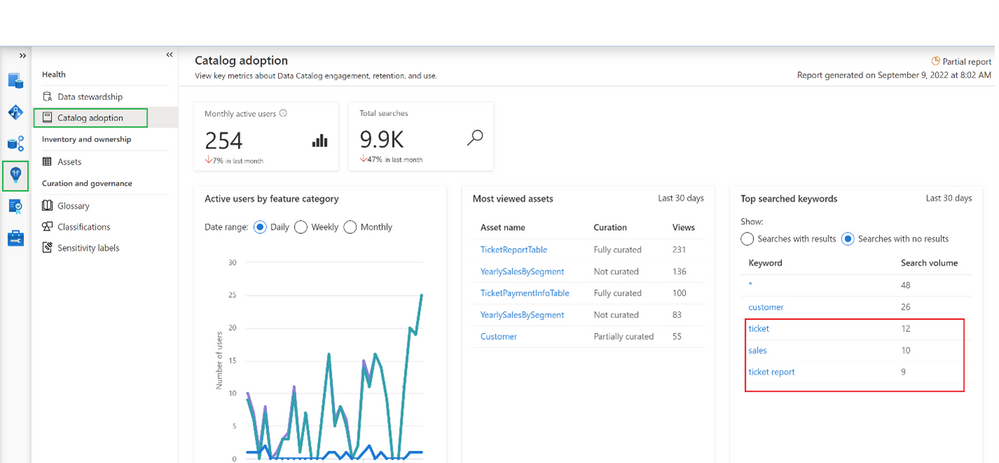

It is interesting that one of the most searched assets is only partially curated. Based on this information it is possible to help data stewards and owners set priorities and identify the most important areas in an organization. On the other hand, it’s also possible to get information about keywords that were searched by users but yielded no results.

In this example, it looks like users are looking for information related to “sales” and couldn’t find it. This is an important tip for a data governance team and shows the next possible areas to investigate.
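If you want to track the same signal over time outside the Purview portal, counting zero-result searches per normalized keyword is enough. The Python sketch below assumes you already have searches as hypothetical (keyword, result count) pairs; normalizing case and whitespace keeps variants such as “Sales ” and “sales” together.

```python
from collections import Counter

def top_zero_result_keywords(searches, n: int = 5):
    """searches: (keyword, result_count) pairs -> most frequent keywords that returned nothing."""
    misses = Counter(keyword.strip().lower() for keyword, results in searches if results == 0)
    return misses.most_common(n)

if __name__ == "__main__":
    searches = [("sales", 0), ("Sales ", 0), ("tickets", 12), ("budget", 0)]
    print(top_zero_result_keywords(searches))
```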

Summary

Now you should have a better understanding of how to identify the progress of Microsoft Purview adoption. You should also have learned how to improve it by converting the provided insights into actions, such as a better data curation process or adding to your catalog the new assets that users are searching for.

by Contributed | Oct 27, 2022 | Technology


Key takeaways:

- As some companies pulled back in the early days of the COVID-19 pandemic, Crayon doubled down broadly on training—including resources from Microsoft Learn—and is now in position to better serve its IT customers as organizations resume business at higher levels.

- Access to Microsoft Learn resources and the expectation of earning certifications help the company maintain minimal employee turnover.

- Crayon management says access to training and certification helps fulfill its commitment to addressing social concerns, including gender, culture, neurodiversity, equity, and inclusion.

Norway’s Crayon confronted the challenge of the COVID-19 era by doubling down on training and certification for its employees. Like many organizations, the global IT consultancy had to find ways for its teams to be productive while working remotely. Unlike others, though, Crayon saw distributed working as an opportunity to position the company for a return to normalcy. The company’s leadership projected that its corporate IT customers would have greater needs as the transition to the cloud accelerated. Crayon anticipated those needs by preparing employees with the Microsoft training and certifications required to support their customers’ ambitions.

Microsoft Learn resources naturally aligned with Crayon’s commitment to training, which is broad and long-standing. “We started out with having focus on certification and training from day one,” recalls Crayon Chief Operating Officer (COO) Bente Liberg, who joined the 3,300-person company 20 years ago as its sixth employee. She cites the strategic importance of training—internally and externally. “Our strategy has always been to help customers implement. We train them so that they can use the things they buy from us, and our commitment to training starts with how we educate our own people.”

Because Crayon both provides services and creates solutions that it sells to customers, the company has a need for its employees to step out of the revenue stream and invest in learning. Bente notes, “It starts with our GMs—actually, all of our country managers have a development KPI for the company. And for them to be able to deliver on that KPI, they need to develop skill sets in the company.”

This is true at the line level, too, and for recruitment. “That was actually something positive for hiring and also for retention,” Bente continues. “We heard from candidates: ‘Oh, can I [do] training?’ Yes, not only you can do training, you have to do training. ‘Can I take [a] certification?’ Yes. You have to take certifications.”

Crayon Chief Executive Officer (CEO) Melissa Mulholland made training and Microsoft Certification available broadly across the company—and not just for consultants. In the company’s India team, for example, “We actually had everybody, including finance—everybody—go and pass [Exam] AZ-900, the [Azure] fundamentals exam, because if they have a better understanding, that will make them better at their job.” Beyond fundamentals, more than one-third of the company’s 8,000 certifications cover in-depth topics, she reports.

From the perspective of a potential recruit or a new employee, this focus on training and certification is a professional opportunity. Senior Power BI Developer Allen Deniega recalls what drew him to the company earlier this year, noting that he has already completed two certifications since he joined Crayon. “The whole culture of helping others and promoting professional development—those two really made me come to Crayon,” he recalls. He started investigating training opportunities on his second day and made particular use of the Microsoft Official Practice Tests, often taking the same one multiple times. “Apart from giving you an idea of the structure and the format of the exam and the actual feel of the exam, it allows you to identify your gaps every time.”

Melissa believes that the learning culture not only makes Crayon more competitive and better able to differentiate its depth of knowledge to customers, but it also helps reduce turnover as employees see their career paths clearly. “It directly corresponds with talent retention, and we have very high retention in our organization. Globally speaking, from an annual standpoint, [turnover is] less than 10 percent, and I really believe that’s driven by this culture of learning and development.”

She also believes that training and certification are key to helping the company fulfill its social commitments. In 2021, Crayon created its first environmental, social, and governance (ESG) report.[1] For Crayon, Melissa explains, “Certifications [are] an excellent way to bring in more diverse skill sets and, for example, giving women who want to be in technical roles the ability to.” She says certifications provide a pathway for individuals who may not have had access to professional opportunities because of gender, culture, color, or neurodiversity. Through the training program, in partnership with Microsoft, she says, “If you have the passion and will, and you have the demonstrated certifications behind that, I’m willing to give people chances to prove themselves in roles, and I think that’s an important mindset that we have in the company that very much aligns to our ESG focus.”

Microsoft and Microsoft Learn have been steady partners for Crayon in these achievements, Melissa points out. “I am so grateful for Microsoft, I think really having our back, at being able to guide us,” she says. “You experience growth when you push yourself to learn and adapt, and it’ll open up not only career opportunities, but it’ll also give you more information to be able to do your job better. Never get in the ‘comfort zone.’”

[1] An ESG report focuses on an organization’s environmental, social, and governance impacts and priorities. The United Nations has published a comprehensive set of these sorts of priorities, called Sustainable Development Goals (SDGs), which many organizations use to guide their own ESG goals and reporting.
