by Contributed | Mar 1, 2023 | Technology

This article is contributed. See the original author and article here.

In this guest blog post, Omri Eytan, CTO of odix, discusses how businesses relying on Microsoft 365 can protect themselves from file-based attacks with FileWall, available via Microsoft Azure Marketplace.

Much has been written about the impact of the recent pandemic on our working habits and preferences, and about how companies had to adopt new processes to keep business alive.

Numerous reports describe the work-from-home trend becoming the new reality of a hybrid working environment. This had a huge impact on IT departments, which had to enable a secure yet transparent working environment for all employees, wherever they work. A McAfee report showed a 50 percent increase in cloud use across enterprises in all industries during COVID, while the number of threat actors targeting cloud collaboration services increased 630 percent over the same period.

Furthermore, the reports highlighted that the increase in threats mainly targeted collaboration services such as Microsoft 365.

Microsoft 365 security approaches: attack channel vs. attack vector protection

To begin with, businesses must take responsibility for their cloud SaaS deployments when it comes to security, data backup, and privacy. Microsoft 365 business applications (Exchange Online, OneDrive, SharePoint, and Teams) are no different.

Security solutions offering protection for Microsoft 365 users can be divided into two main methodologies:

- Protecting attack channel: dedicated security solutions designed to protect users from various attack vectors within a specific channel such as email. The email channel has many third-party solutions working to protect users against various attack vectors such as phishing, spam, and malicious attachments.

- Protecting attack vector: advanced security solutions aiming to protect users from a specific attack vector across multiple channels such as email, OneDrive, and SharePoint.

These approaches remind us of an old debate when purchasing IT products: Should the company compromise a bit on innovation and quality and purchase a one-stop-shop type of solution, or is it better to choose multiple best-of-breed solutions and be equipped with the best technology available?

Security solutions for Microsoft 365 are no different.

Protecting Microsoft 365 users against file-based attacks

This article focuses on one of the top attack vectors hackers use: the file-based attack vector. Research shows that the file types most often used to embed malware in channels like email are the everyday ones, such as Word, Excel, and PDF. Hackers use these file types because people tend to click and open them without a second thought. When malicious code is embedded well (e.g., in nested files), the file bypasses common anti-malware solutions such as antivirus and sandbox methods, which scan files for threats and block them only if malware is detected.

Deep file analysis (DFA) technology, introduced by odix, was designed to handle all commonly used files and offers a detectionless approach. With DFA, all malware, including zero-day exploits, is prevented and the user gets a safe copy of the original file.

What are DFA and CDR?

DFA or CDR (content disarm and reconstruction) describes the process of creating a safe copy of an original file by including only the safe elements from the original file. CDR focuses on verifying the validity of the file structure on the binary level and disarms both known and unknown threats. The detectionless approach ensures all files that complete the sanitization process successfully are malware-free copies and can be used safely.
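To make the "include only the safe elements" idea concrete, here is a minimal sketch for one file family, ZIP-based Office documents. It is not odix's proprietary DFA algorithm: the `UNSAFE_PREFIXES` and `UNSAFE_NAMES` rules and the `disarm_ooxml` function are illustrative assumptions. A real CDR engine would also validate the binary structure of every part and repair references to removed parts, which this sketch omits.

```python
import io
import zipfile

# Hypothetical rules for parts that can carry active content (macros,
# embedded OLE objects). A production engine would use a strict allowlist.
UNSAFE_PREFIXES = ("word/embeddings/", "xl/embeddings/", "ppt/embeddings/")
UNSAFE_NAMES = ("vbaProject.bin",)


def is_safe_part(name: str) -> bool:
    """Return True if a ZIP member looks like passive document content."""
    if name.startswith(UNSAFE_PREFIXES):
        return False
    return not name.endswith(UNSAFE_NAMES)


def disarm_ooxml(original: bytes) -> bytes:
    """Rebuild the archive from scratch, copying only parts judged safe."""
    out = io.BytesIO()
    with zipfile.ZipFile(io.BytesIO(original)) as src, \
            zipfile.ZipFile(out, "w", zipfile.ZIP_DEFLATED) as dst:
        for info in src.infolist():
            if is_safe_part(info.filename):
                dst.writestr(info.filename, src.read(info.filename))
    return out.getvalue()
```

The key design point is that the output is a new file built from known-good pieces, rather than the original file with detected threats removed, so nothing needs to be recognized as malicious for it to be left out.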

odix, an Israel-based cybersecurity company driving innovation in content disarm and reconstruction technology, developed the FileWall solution to complement and strengthen existing Microsoft security systems. FileWall, available in Microsoft AppSource and Azure Marketplace, helps business users easily strengthen Microsoft 365 security within a few clicks.

How FileWall works with Microsoft security technology

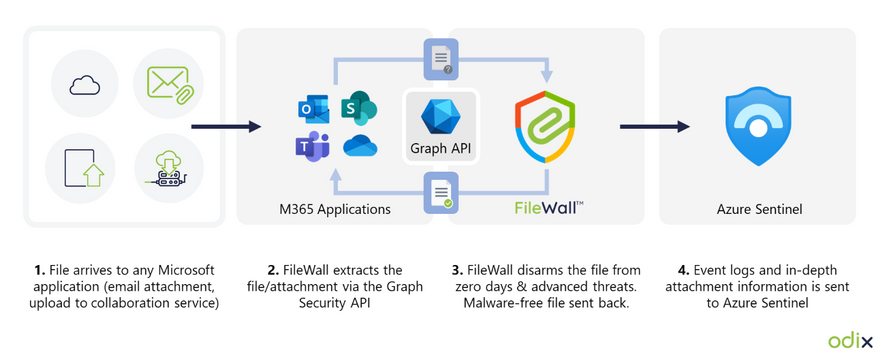

FileWall integrates with the Microsoft Graph security API and Microsoft Azure Sentinel, bringing malware protection capabilities with an essential added layer of deep file analysis technology containing CDR proprietary algorithms.

FileWall blocks malicious elements embedded in files across Microsoft 365 applications including Exchange Online, SharePoint, OneDrive, and Teams. The unique DFA process is also extremely effective in complex file scenarios such as nested files and password-protected attachments, where traditional sandbox methods could miss threats or cause lengthy delays and disruption of business processes.

Empowering Microsoft 365 security: granular filtering per channel

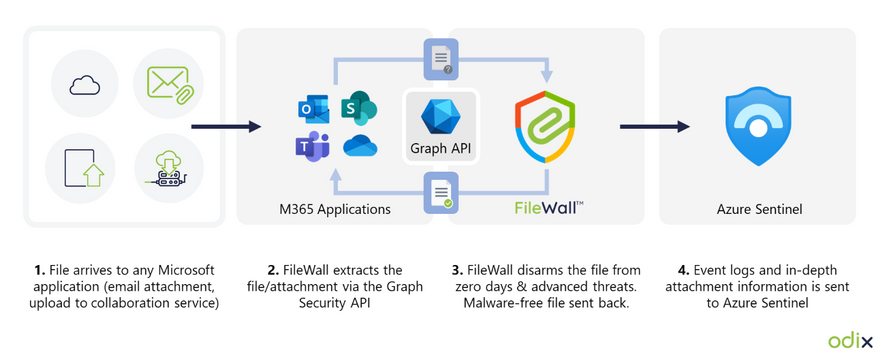

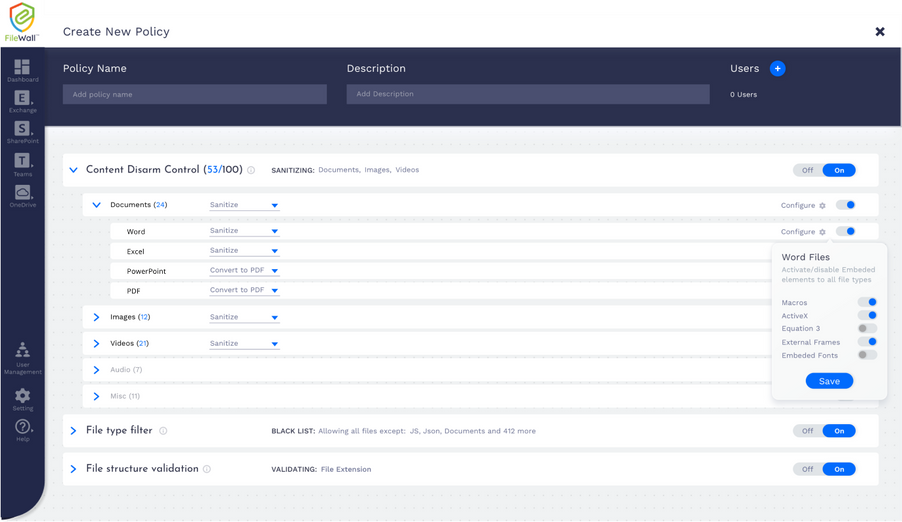

FileWall includes a modern admin console so the Microsoft 365 administrator can set security policies and gain overall control of all files and attachments across Exchange Online, SharePoint, OneDrive, and Teams. The FileWall file type filter lets the admin define which file types are permitted in the organization and which should be blocked. This minimizes the attack surface the organization is exposing via email and collaboration services by eliminating the threat vectors available in certain file types.

The type filter has three main controls:

- On/off: enabling or disabling the filter functionality on all file types

- Work mode: the ability to create preset lists of permitted and non-permitted file types for specific users within the organization

- Default settings: a default policy suggested by FileWall, which includes 204 file types categorized as dangerous, including executable files (*.exe), Windows batch files (*.bat), Windows links (*.lnk), and others

How FileWall complements Defender’s safe attachments

As a native security solution within the Microsoft 365 deployment, FileWall doesn’t harm productivity, and all of FileWall’s settings have been configured to complement Microsoft 365 Defender. Combining the two products provides high levels of security with multi-antivirus, sandbox, and CDR capabilities. While the sandbox can manage executables and active content, FileWall handles all commonly used files such as Microsoft Office, PDF, and images. As most organizational traffic consists of non-executable documents, this method can reduce sandbox load by 90 to 95 percent, lowering total costs and improving average latency.

FileWall enhances the existing Microsoft type filter and allows additional granular controls over the types of files that are allowed to enter the organization while enforcing these restrictions on nested and embedded files as well.
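As a rough illustration of a type filter that is also enforced on nested files, the sketch below checks a file's extension against a blocklist and then looks inside ZIP containers recursively. It is not FileWall's implementation: the three-entry `BLOCKED_EXTENSIONS` set stands in for the 204-type default policy, the `blocked_entries` function and its `max_depth` parameter are hypothetical, and only ZIP containers are inspected.

```python
import io
import zipfile
from pathlib import PurePosixPath

# Tiny stand-in for a default policy; the real default list is far longer.
BLOCKED_EXTENSIONS = {".exe", ".bat", ".lnk"}


def blocked_entries(filename: str, data: bytes,
                    depth: int = 0, max_depth: int = 5) -> list[str]:
    """Return paths of blocked files, descending into nested ZIP archives."""
    if PurePosixPath(filename).suffix.lower() in BLOCKED_EXTENSIONS:
        return [filename]
    found: list[str] = []
    buf = io.BytesIO(data)
    # Archives nested deeper than max_depth are not inspected here; a real
    # policy would more likely reject such files outright.
    if depth < max_depth and zipfile.is_zipfile(buf):
        with zipfile.ZipFile(buf) as archive:
            for info in archive.infolist():
                if info.is_dir():
                    continue
                inner = f"{filename}/{info.filename}"
                found += blocked_entries(inner, archive.read(info),
                                         depth + 1, max_depth)
    return found
```

An attachment would be allowed only when the returned list is empty. Matching on extensions alone is easy to evade by renaming, so a real filter would also inspect file signatures.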

Call for Certified Microsoft CSPs who wish to increase revenues

FileWall for Microsoft 365 is available for direct purchase through Microsoft AppSource, Azure Marketplace, or via the FileWall-certified partner program for Microsoft CSPs.

Microsoft CSPs can bundle FileWall via Microsoft Partner Center for their customers. odix offers generous margins to Microsoft CSPs that join the FileWall partner program.

FileWall-certified partners are eligible for a free NFR license according to odix terms of use.

by Contributed | Mar 1, 2023 | Business, Microsoft 365, Technology

Today marks a significant shift in endpoint management and security. We are launching the Microsoft Intune Suite, which unifies mission-critical advanced endpoint management and security solutions into one simple bundle.

The post The Microsoft Intune Suite fuels cyber safety and IT efficiency appeared first on Microsoft 365 Blog.

Brought to you by Dr. Ware, Microsoft Office 365 Silver Partner, Charleston SC.

by Contributed | Feb 28, 2023 | Technology

Join Microsoft at GTC, a global technology conference running March 20 – 23, 2023, to learn how organizations of any size can power AI innovation with purpose-built cloud infrastructure from Microsoft.

Microsoft’s Azure AI supercomputing infrastructure is uniquely designed for AI workloads and helps build and train some of the industry’s most advanced AI solutions. From data preparation to model and infrastructure performance management, Azure’s comprehensive portfolio of powerful and massively scalable GPU-accelerated virtual machines (VMs) and seamless integration with services like Azure Batch and open-source solutions helps streamline management and automation of large AI models and infrastructure.

Attend GTC to discover how Azure AI infrastructure optimized for AI performance can deliver speed and scale in the cloud and help you reduce the complexity of building, training, and bringing AI models into production. Register today! GTC Developer Conference is a free online event.

Microsoft sessions at NVIDIA GTC

Add the Microsoft sessions below to your GTC conference schedule to learn about the latest Azure AI infrastructure and dive deep into a variety of use cases and technologies.

Featured sessions

Accelerate AI Innovation with Unmatched Cloud Scale and Performance

Thursday, Mar 23 | 7:00 AM – 7:50 AM MST

Nidhi Chappell, General Manager, Azure HPC, AI, SAP and Confidential Computing

Kathleen Mitford, Corporate Vice President, Azure Marketing, Microsoft

Manuvir Das, Head of Enterprise Computing, NVIDIA

Azure’s purpose-built AI infrastructure is enabling leading organizations in AI to build a new era of innovative applications and services. The convergence of cloud flexibility and economics, with advances in cloud performance, is paving the way to accelerate AI initiatives across simulations, science, and industry. Whether you need to scale to 80,000 cores for MPI workloads, or you’re looking for AI supercomputing capabilities, Azure can support your needs. Learn more about Azure’s AI platform and our latest updates, and hear about customer experiences.

Azure’s Purpose-Built AI Infrastructure Using the Latest NVIDIA GPU Accelerators

On-demand

Matt Vegas, Principal Product Manager, Microsoft

Microsoft offers some of the most powerful and massively scalable virtual machines, optimized for AI workloads. Join us for an in-depth look at the latest updates for Azure’s ND series based on NVIDIA GPUs, engineered to deliver a combination of high-performance, interconnected GPUs working in parallel that can help you reduce complexity, minimize operational bottlenecks, and deliver reliability at scale.

Talks and panel sessions

| Session ID | Session Title | Speakers | Primary Topic |
| --- | --- | --- | --- |
| S51226 | Accelerating Large Language Models via Low-Bit Quantization | Young Jin Kim, Principal Researcher, Microsoft; Rawn Henry, Senior AI Developer Technology Engineer, NVIDIA | Deep Learning – Inference |
| S51204 | Transforming Clouds to Cloud-Native Supercomputing: Best Practices with Microsoft Azure | Jithin Jose, Principal Software Engineer, Microsoft Azure; Gilad Shainer, SVP Networking, NVIDIA | HPC – Supercomputing |
| S51756 | Accelerating AI in Federal Cloud Environments | Bill Chappel, Vice President of Mission Systems in Strategic Missions and Technology, Microsoft; Steven H. Walker, Chief Technology Officer, Lockheed Martin; Matthew Benigni, Chief Data Officer, Army Futures Command; Christi DeCuir, Director, Cloud Go-to-Market, NVIDIA | Data Center / Cloud – Business Strategy |
| S51703 | Accelerating Disentangled Attention Mechanism in Language Models | Pengcheng He, Principal Researcher, Microsoft; Haohang Huang, Senior AI Engineer, NVIDIA | Conversational AI / NLP |
| S51422 | SwinTransformer and its Training Acceleration | Han Hu, Principal Research Manager, Microsoft Research Asia; Li Tao, Tech Software Engineer, NVIDIA | Deep Learning – Training+ |
| S51260 | Multimodal Deep Learning for Protein Engineering | Kevin Yang, Senior Researcher, Microsoft Research New England | Healthcare – Drug Discovery |
| S51945 | Improving Dense Text Retrieval Accuracy with Approximate Nearest Neighbor Search | Menghao Li, Software Engineer, Microsoft; Akira Naruse, Senior Developer Technology Engineer, NVIDIA | Data Science |
| S51709 | Hopper Confidential Computing: How it Works under the Hood | Antoine Delignat-Lavaud, Principal Researcher Microsoft Research, Microsoft; Phil Rogers, VP of System Software, NVIDIA | Data Center / Cloud Infrastructure – Technical |
| S51447 | Data-Driven Approaches to Language Diversity | Kalika Bali, Principal Researcher, Microsoft Research India; Caroline Gottlieb, Product Manager, Data Strategy, NVIDIA; Damian Blasi, Harvard Data Science Fellow, Department of Human Evolutionary Biology, Harvard University; Bonaventure Dossou, Ph.D. Student, McGill University and Mila Quebec AI Institute; EM Lewis-Jong, Common Voice – Product Lead, Mozilla Foundation | Conversational AI / NLP |
| S51756a | Accelerating AI in Federal Cloud Environments, with Q&A from EMEA Region | Bill Chappel, Vice President of Mission Systems in Strategic Missions and Technology, Microsoft; Steven H. Walker, Chief Technology Officer, Lockheed Martin; Larry Brown, SA Manager, NVIDIA; Christi DeCuir, Director, Cloud Go-to-Market, NVIDIA | Data Center / Cloud – Business Strategy |
| S51589 | Accelerating Wind Energy Forecasts with AceCast | Amirreza Rastegari, Senior Program Manager, Azure Specialized Compute, Microsoft; Gene Pache, TempoQuest | HPC – Climate / Weather / Ocean Modeling |
| S51278 | Next-Generation AI for Improving Building Security and Safety | Adina Trufinescu, Senior Program Manager, Azure Specialized Compute, Microsoft | Computer Vision – AI Video Analytics |

Deep Learning Institute Workshops and Labs

We are proud to host NVIDIA’s Deep Learning Institute (DLI) training at NVIDIA GTC. Attend full-day, hands-on, instructor-led workshops or two-hour free training labs to get up to speed on the latest technology and breakthroughs. Hosted on Microsoft Azure, these sessions enable and empower you to leverage NVIDIA GPUs on the Azure platform to solve the world’s most interesting and relevant problems.

Register for a Deep Learning Institute workshop or lab today!

Learn more about Azure AI infrastructure

Whether your project is big or small, local or global, Microsoft Azure is empowering companies worldwide to push the boundaries of AI innovation. Learn how you can make AI your reality.

Azure AI Infrastructure

Azure AI Platform

Accelerating AI and HPC in the Cloud

AI-first Infrastructure and Toolchain at Any Scale

The case for AI in the Azure Cloud

AI Infrastructure for Smart Manufacturing

AI Infrastructure for Smart Retail

by Contributed | Feb 28, 2023 | Business, Microsoft 365, Technology

This month, we’re bringing new AI-powered capabilities to Microsoft Teams Premium, helping keep everyone aligned with Microsoft Viva Engage, and sharing new Loop components in Whiteboard to help your team collaborate in sync.

The post From AI in Teams Premium to updates for Viva—here’s what’s new in Microsoft 365 appeared first on Microsoft 365 Blog.

![[Data Virtualization] May need to update Java (JRE7 only uses TLS 1.0)](https://www.drware.com/wp-content/uploads/2023/02/medium-52)

by Contributed | Feb 27, 2023 | Technology

At the end of October 2022, we saw an issue where a customer using a PolyBase external query to Azure Storage started seeing queries fail with the following error:

Msg 7320, Level 16, State 110, Line 2

Cannot execute the query “Remote Query” against OLE DB provider “SQLNCLI11” for linked server “(null)”. EXTERNAL TABLE access failed due to internal error: ‘Java exception raised on call to HdfsBridge_IsDirExist: Error [com.microsoft.azure.storage.StorageException: The server encountered an unknown failure: ]occurred while accessing external file.’

Prior to this, everything was working fine; the customer made no changes to SQL Server or Azure Storage.

“The server encountered an unknown failure” – not the most descriptive of errors. We checked the PolyBase logs for more information:

10/30/2022 1:12:23 PM [Thread:13000] [EngineInstrumentation:EngineQueryErrorEvent] (Error, High):

EXTERNAL TABLE access failed due to internal error: ‘Java exception raised on call to HdfsBridge_IsDirExist: Error [com.microsoft.azure.storage.StorageException: The server encountered an unknown failure: ] occurred while accessing external file.’

Microsoft.SqlServer.DataWarehouse.Common.ErrorHandling.MppSqlException: EXTERNAL TABLE access failed due to internal error: ‘Java exception raised on call to HdfsBridge_IsDirExist: Error [com.microsoft.azure.storage.StorageException: The server encountered an unknown failure: ] occurred while accessing external file.’ ---> Microsoft.SqlServer.DataWarehouse.DataMovement.Common.ExternalAccess.HdfsAccessException: Java exception raised on call to HdfsBridge_IsDirExist: Error [com.microsoft.azure.storage.StorageException: The server encountered an unknown failure: ] occurred while accessing external file.

at Microsoft.SqlServer.DataWarehouse.DataMovement.Common.ExternalAccess.HdfsBridgeFileAccess.GetFileMetadata(String filePath)

at Microsoft.SqlServer.DataWarehouse.Sql.Statements.HadoopFile.ValidateFile(ExternalFileState fileState, Boolean createIfNotFound)

--- End of inner exception stack trace ---

This gave us a little more information: the PolyBase engine was checking for file metadata but still failing with “unknown failure”.

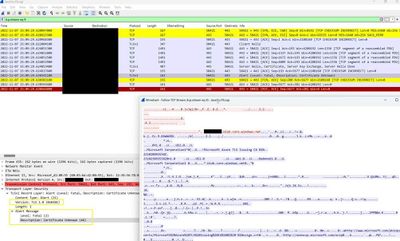

The engineer working on this case did a network trace and found out that the TLS version used for encrypting the packets sent to Azure Storage was TLS 1.0. The following screenshot demonstrates the analysis (note lower left corner where “Version: TLS 1.0” is clearly visible

He compared this to a successful PolyBase query to Azure Storage account and found it was using TLS 1.2.
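The version a trace displays comes from a few fixed bytes at the start of the TLS handshake. As a rough illustration of what the trace tool decodes, the sketch below reads the record-layer version and the ClientHello `client_version` from the first bytes of a captured record. The function name and the sample bytes are illustrative assumptions, and the sketch does not parse extensions, so it cannot tell whether a client also offers TLS 1.3.

```python
import struct

TLS_VERSIONS = {
    0x0300: "SSL 3.0",
    0x0301: "TLS 1.0",
    0x0302: "TLS 1.1",
    0x0303: "TLS 1.2",
}


def version_name(value: int) -> str:
    """Map a two-byte protocol version to a readable name."""
    return TLS_VERSIONS.get(value, f"unknown (0x{value:04x})")


def client_hello_versions(record: bytes) -> tuple[str, str]:
    """Return (record-layer version, ClientHello client_version)."""
    if len(record) < 11:
        raise ValueError("too short to contain a ClientHello header")
    # Record header: content type (1 byte), version (2), length (2).
    content_type, record_version = struct.unpack_from("!BH", record, 0)
    # Handshake header: message type (1 byte), length (3), then the
    # two-byte client_version at offset 9.
    handshake_type = record[5]
    if content_type != 22 or handshake_type != 1:
        raise ValueError("not a handshake record carrying a ClientHello")
    (client_version,) = struct.unpack_from("!H", record, 9)
    return version_name(record_version), version_name(client_version)
```

The two values can differ: many clients that support TLS 1.2 still write TLS 1.0 in the record header of the first message for compatibility, so the `client_version` field is the more telling of the two.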

Azure Storage accounts can be configured to require a minimum TLS version. Our intrepid engineer checked the storage account, but it was so old that it predated the time when this option became configurable. In an effort to resolve the customer’s issue, he researched further.

The customer was using Java Runtime Environment (JRE) Version 7. Our engineer reproduced the error by downgrading his own JRE to Version 7 and then querying a PolyBase external table pointing to his Azure Storage account. Network tracing confirmed that JRE 7 used TLS 1.0. He tried changing the TLS version in the Java configuration, but that did not resolve the issue. He then switched back to JRE 8, and the issue was resolved in his environment. He asked the customer to upgrade to Version 8, and that resolved the issue for the customer as well.

Further research showed that the Azure TLS Certificate Changes imposed new requirements on some Azure endpoints, and this old storage account was affected by them. TLS 1.0 was no longer sufficient; TLS 1.2 was now required. Switching to JRE Version 8 made PolyBase use TLS 1.2 when sending packets to the Azure Storage account, and the problem was resolved.
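One way to check from the client side whether an endpoint still accepts an older TLS version is to attempt a handshake pinned to that version. The sketch below uses Python's standard `ssl` module rather than Java, so it says nothing about how a particular JRE or PolyBase behaves; the helper names are illustrative, and the host you pass in (for example, your own storage account endpoint) is an assumption you supply.

```python
import socket
import ssl


def make_context(min_version: ssl.TLSVersion,
                 max_version: ssl.TLSVersion) -> ssl.SSLContext:
    """Create a client context restricted to a TLS version range."""
    ctx = ssl.create_default_context()
    ctx.minimum_version = min_version
    ctx.maximum_version = max_version
    return ctx


def negotiated_version(host: str, ctx: ssl.SSLContext,
                       port: int = 443, timeout: float = 5.0):
    """Return the negotiated protocol name, or None if the handshake fails."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as raw:
            with ctx.wrap_socket(raw, server_hostname=host) as tls:
                return tls.version()
    except OSError:  # ssl.SSLError is a subclass of OSError
        return None
```

Calling `negotiated_version(host, make_context(ssl.TLSVersion.TLSv1_2, ssl.TLSVersion.TLSv1_2))` and then repeating the call with `ssl.TLSVersion.TLSv1` for both bounds shows whether the endpoint accepts each version. Note that a `None` result for TLS 1.0 can also mean the local OpenSSL build refuses that version, so it is worth confirming with a trace.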

Nathan Schoenack

Sr. Escalation Engineer
