by Contributed | Mar 6, 2023 | Technology

This article is contributed. See the original author and article here.

Today we have an exciting announcement to share about the evolution of Microsoft Outlook for macOS. Don’t have Outlook on your Mac yet? Get Outlook for Mac in the App Store.

An image demonstrating the user interface of Outlook for Mac.

Outlook for Mac is now free to use

Now consumers can use Outlook for free on macOS, no Microsoft 365 subscription or license necessary.

Whether at home, work or school, Mac users everywhere can easily add Outlook.com, Gmail, iCloud, Yahoo! or IMAP accounts in Outlook and experience the best mail and calendar app on macOS. The Outlook for Mac app complements Outlook for iOS – giving people a consistent, reliable, and powerful experience that brings the best-in-class experience of Outlook into the Apple ecosystem that so many love.

Outlook makes it easy to manage your day, triage your email, read newsletters, accept invitations to coffee, and much more.

Bring your favorite email accounts

One of Outlook’s strengths is helping you manage multiple accounts in a single app. We support most personal email providers and provide powerful features, such as viewing all your inboxes at once and an all-mailbox search to make managing multiple email accounts a breeze.

An image demonstrating examples of personal email providers supported in Outlook.

When you log into Outlook with a personal email account, you get enterprise-grade security, with secure sign-on to authenticate and protect your identity, all while keeping your email, calendar, contacts, and files protected.

Outlook is built for Mac

With Outlook, you’ll get a modern and beautiful user interface that has been designed and optimized for macOS. And these enhancements go far beyond surface level. The new Outlook is optimized for Apple Silicon, with snappy performance and faster sync speeds than previous versions.

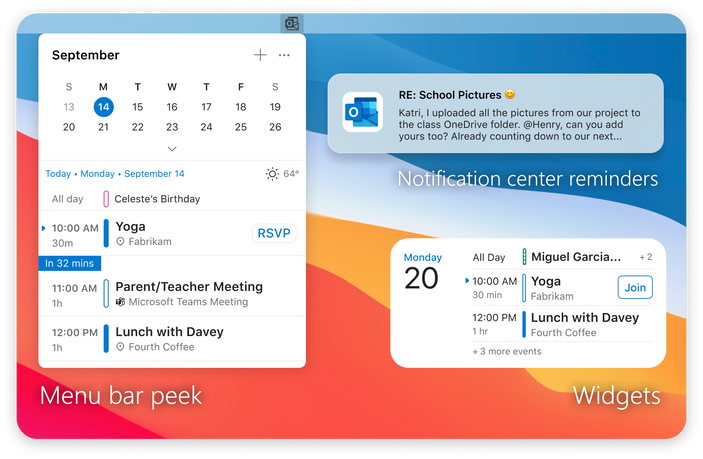

Outlook for Mac does more to integrate into the Apple platform so that you get the most from your macOS device. To help you stay on top of your email and calendar while using other apps, you can view your agenda using a widget and see reminders in the Notification Center. We are also creating a peek of upcoming calendar events in the Menu Bar (coming soon).

An image demonstrating previews of the Menu Bar (left), a Notification Center reminder (top right), and a Widget (bottom right) in Outlook for Mac.

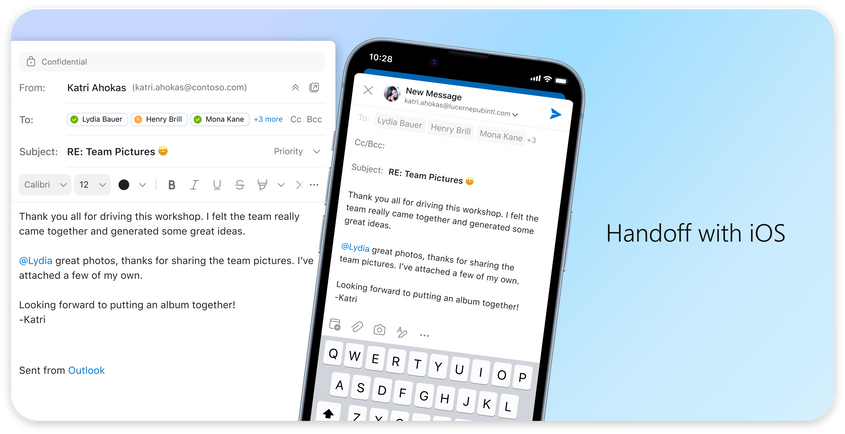

We know life is multifaceted and Outlook is leveraging the Apple platform to support you by making it easier to switch contexts. With our new Handoff feature, you can pick up tasks where you left off between iOS and Mac devices, so you can get up and go without missing a beat*.

An image demonstrating the Handoff feature in Outlook for Mac on a desktop and mobile device.

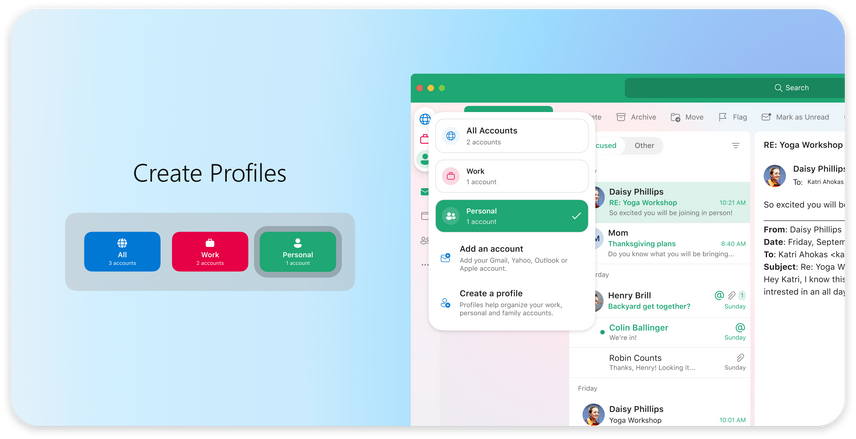

We understand it’s more important than ever to protect your time. The new Outlook Profiles (coming soon) allow you to connect your email accounts to Apple’s Focus experience. With Outlook Profiles, you won’t get unwanted notifications at the wrong time so you can stay focused on that important work email, with no distractions from your personal email.

An image demonstrating the Outlook Profiles feature in Outlook for Mac.

Your hub to help you focus

Outlook provides a variety of ways to help you stay focused and accomplish more by helping you decide what you want to see and when. For people with multiple accounts, the all-accounts view lets you manage all your inboxes at once, without having to switch back and forth. It’s a great way to see all new messages that come in so you can choose how to respond.

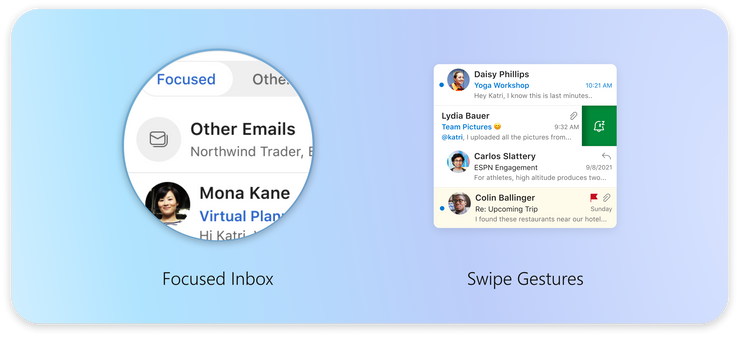

Outlook also has more options for keeping important emails front and center**. Focused Inbox automatically separates important messages from the rest, and a toggle above the message list lets you switch between the two. You can also add frequently used folders to Favorites so they are easy to find. Outlook also gives you the power to prioritize messages your way. You can pin messages in your inbox to keep them at the top or snooze non-urgent messages until you have time to reply. Categories and flagging are available as well. With so many options for organizing email, you can make Outlook work for you.

An image providing a preview of the Focused Inbox and Swipe Gestures features in Outlook for Mac.

With Outlook, you can stay on top of your daily schedule from anywhere in the Mac app. Open My Day in the task pane to view an integrated, interactive calendar right from your inbox. Not only can you see upcoming events at a glance, but you can view event details, RSVP, join virtual meetings, and create new events from this powerful view of your calendar.

What’s next

We are thrilled to invite all Mac users to try our redesigned Outlook app with the latest and greatest features for macOS. Download Outlook for free in the App Store.

There is more to do and many more features we are excited to bring to the Outlook Mac experience. We are rebuilding Outlook for Mac from the ground up to be faster, more reliable, and to be an Outlook for everyone.

*The Handoff feature requires downloading Outlook for Mac from the App Store and signing in to your iOS device and your Mac with the same Apple ID.

**Categories are only available with Outlook.com, MSN.com, Live.com, and Hotmail.com accounts. Focused Inbox, Snooze, and Pinning are not available with Direct Sync accounts. Click here for more information on Direct Sync accounts.

by Contributed | Mar 4, 2023 | Technology

Hi, I am Samson Amaugo, a Microsoft MVP and a Microsoft Learn Student Ambassador. I love writing and talking about all things .NET. I am currently a student at the Federal University of Technology, Owerri. You can connect with me on LinkedIn.

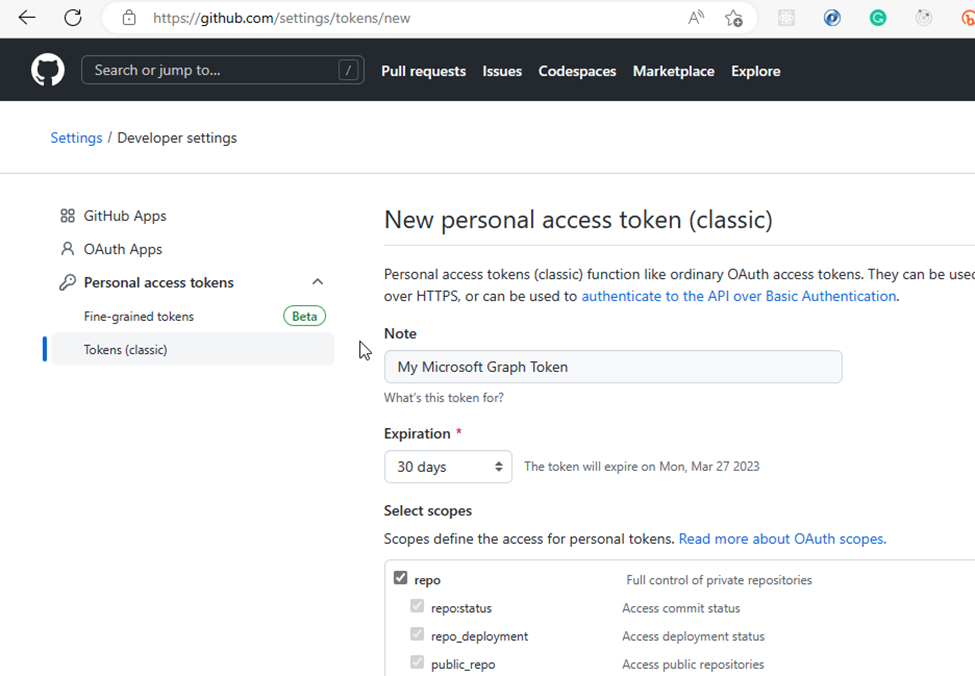

Have you ever thought about going through all your GitHub repositories, taking note of the languages used, aggregating them, and visualizing the result in Excel?

Well, that is what this post is all about, except you don’t have to do it manually.

With the aid of the Octokit GraphQL library and the Microsoft Graph .NET SDK, you can code up a cool tool that automates this process.

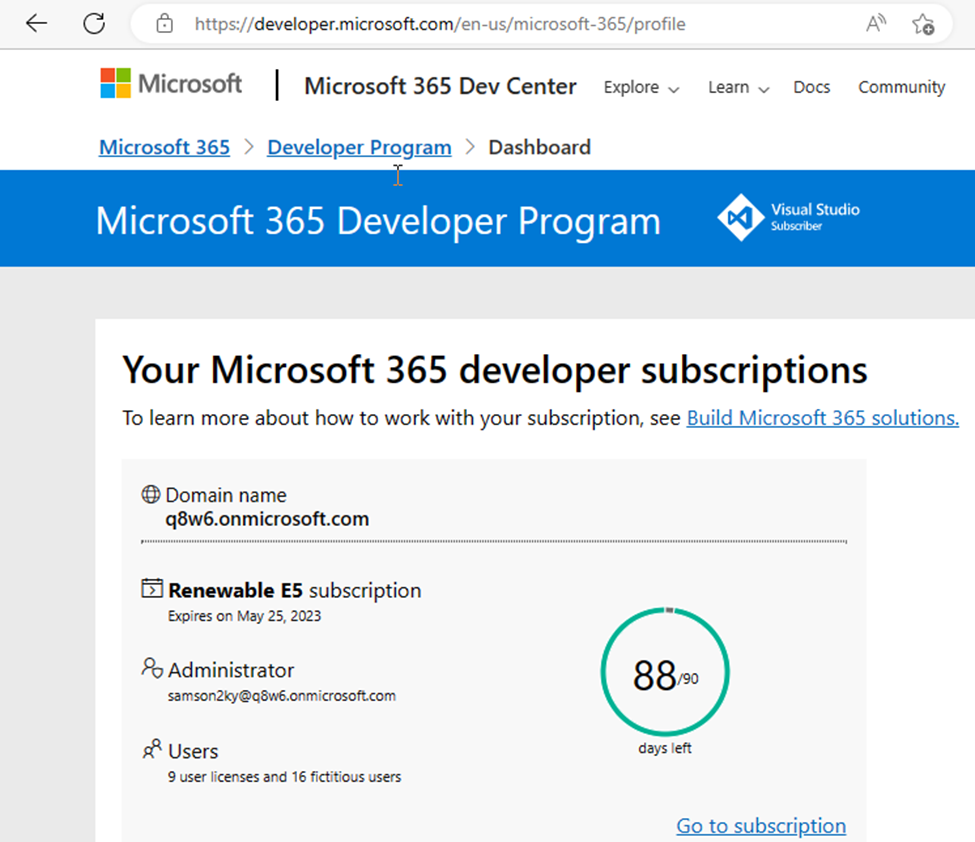

To build this project in a sandboxed environment with the appropriate permissions, I had to sign up on the Microsoft 365 Dev Center to create an account that I could use to interact with Microsoft 365 products.

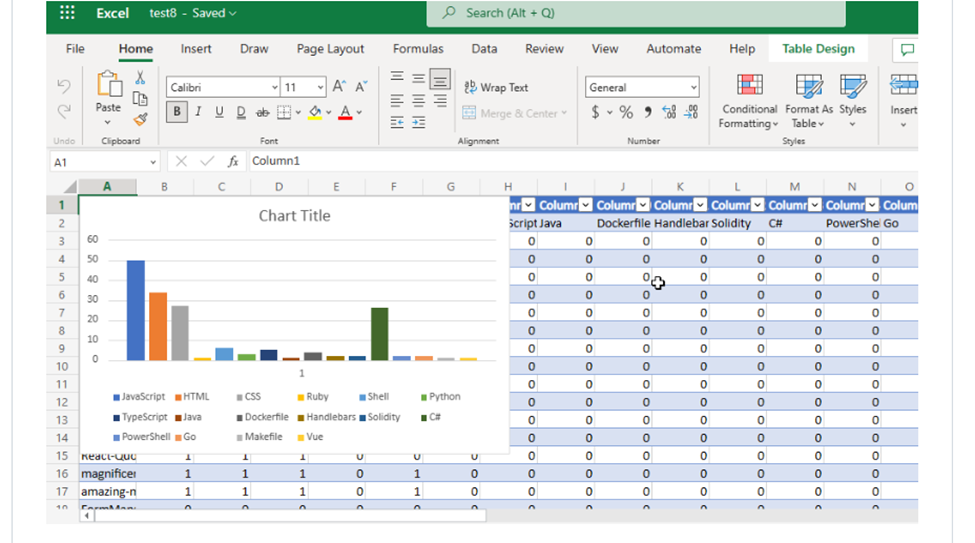

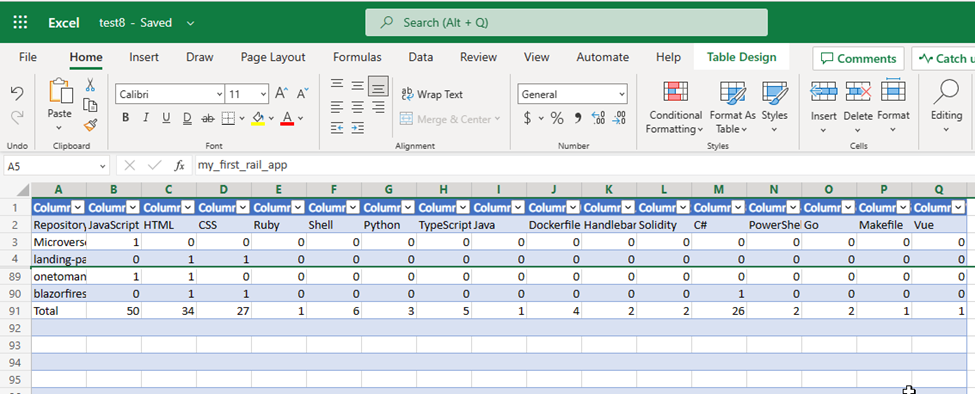

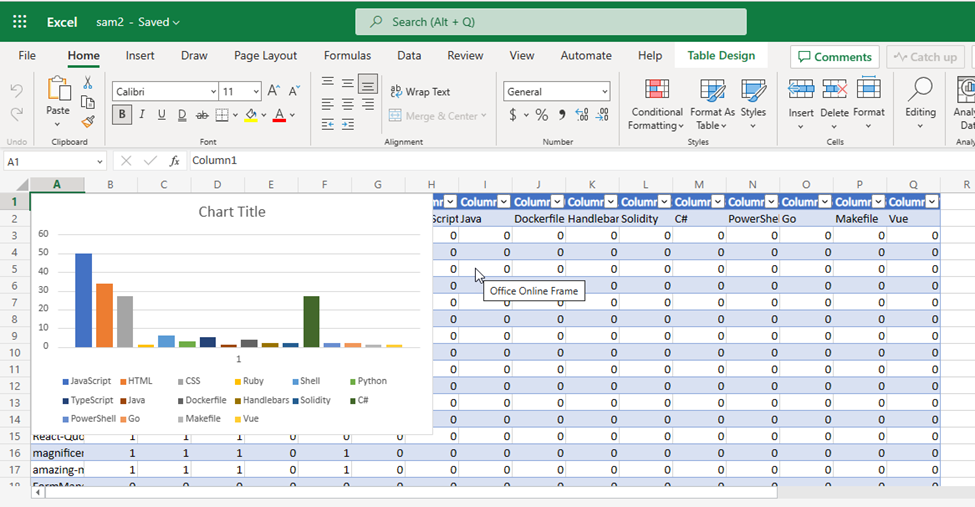

The outcome of the project can be seen below.

Steps to Build

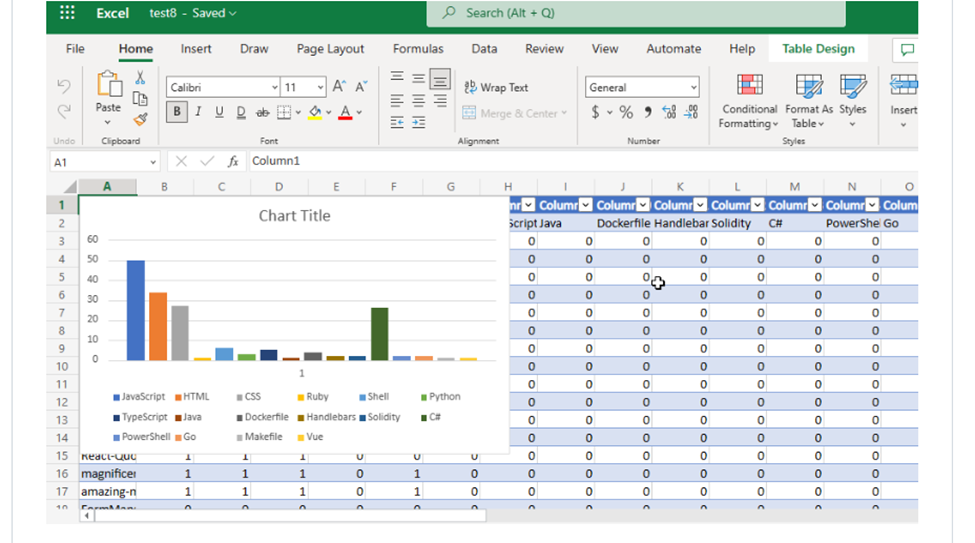

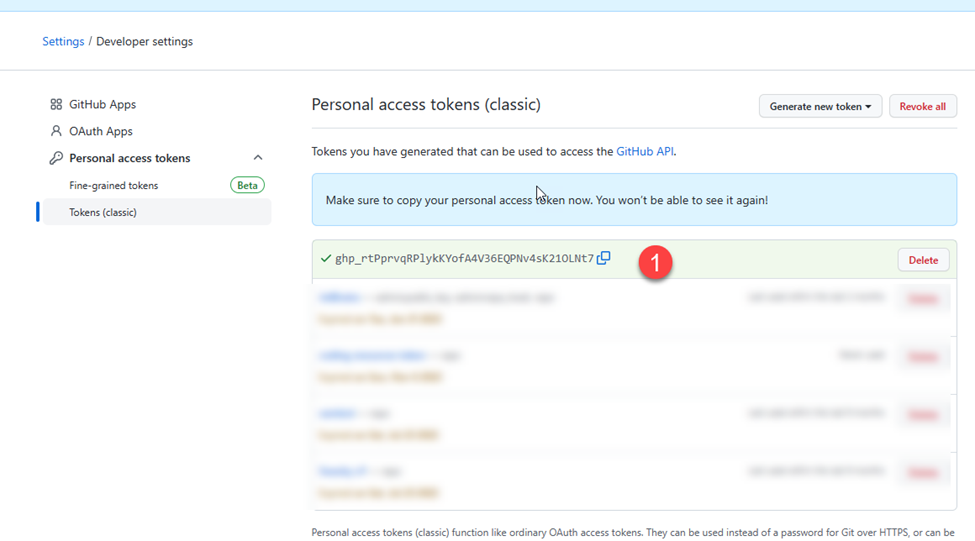

Generating an Access Token From GitHub

- I went to https://github.com/settings/apps and selected the Tokens (classic) option. This is because that is what the Octokit GraphQL library currently supports.

- I clicked on the Generate new token dropdown.

- I clicked on the Generate new token (classic) option.

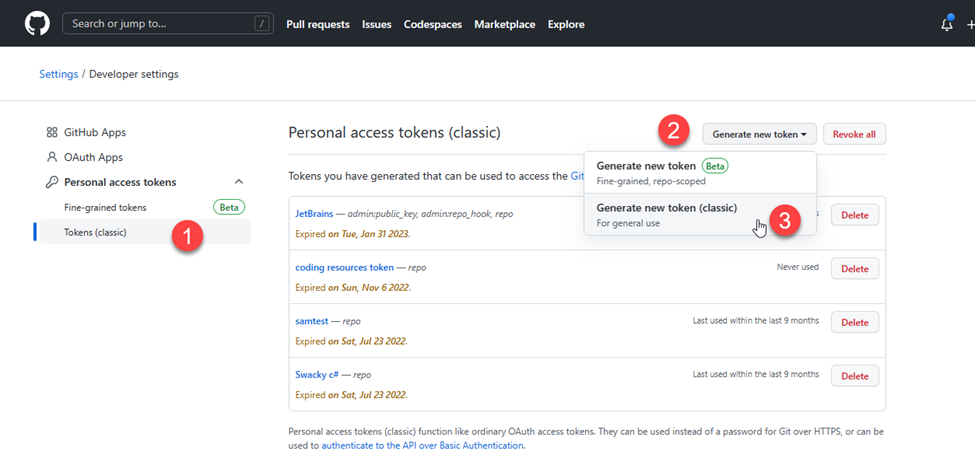

- I filled in the required Note field and selected the repo scope which would enable the token to have access to both my private and public repositories. After that, I scrolled down and selected the Generate token button.

- Next, I copied the generated token to use during my code development.

Creating a Microsoft 365 Developer Account

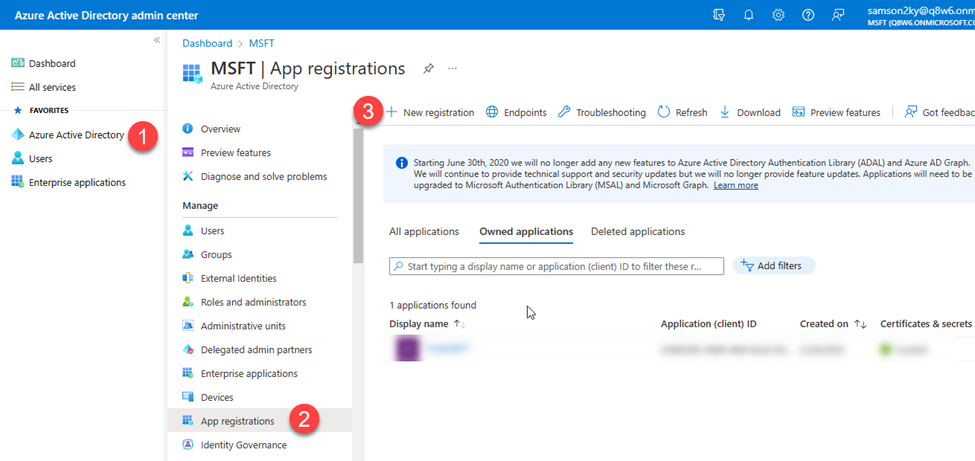

Registering an Application on Azure

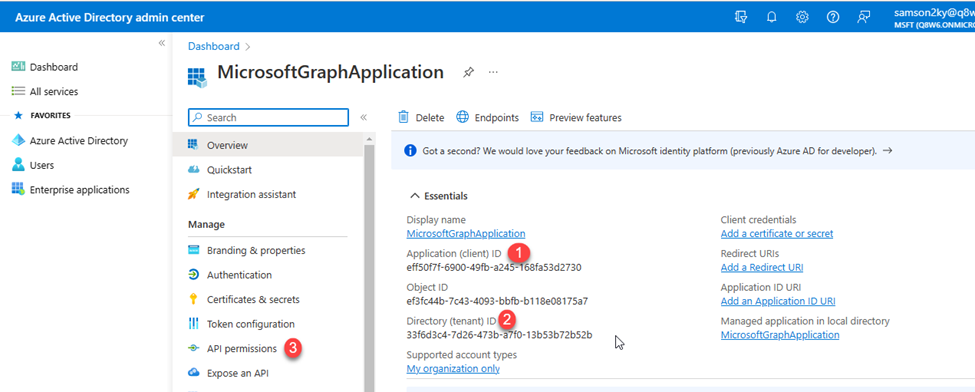

- To interact with Microsoft 365 applications via the graph API, I had to register an app on Azure Active Directory.

- I signed in to https://aad.portal.azure.com/ (Azure Active Directory Admin Center) using the developer email obtained from the Microsoft 365 developer subscription (i.e. samson2ky@q8w6.onmicrosoft.com).

- I clicked on the Azure Active Directory menu on the left menu pane.

- I clicked on the App Registration option.

- I clicked on the New registration option.

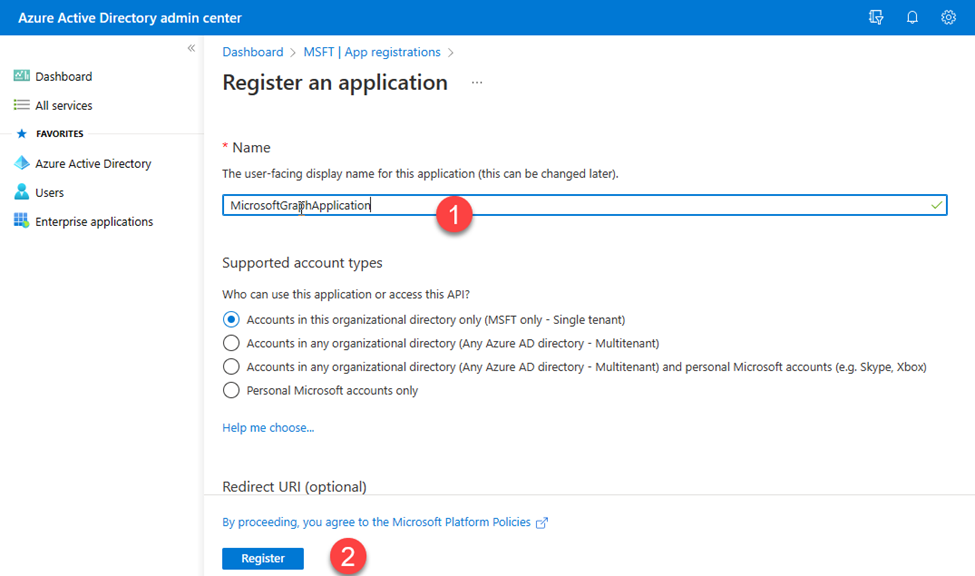

- I filled in the application name and clicked on the Register button.

- I copied the clientId and the tenantId

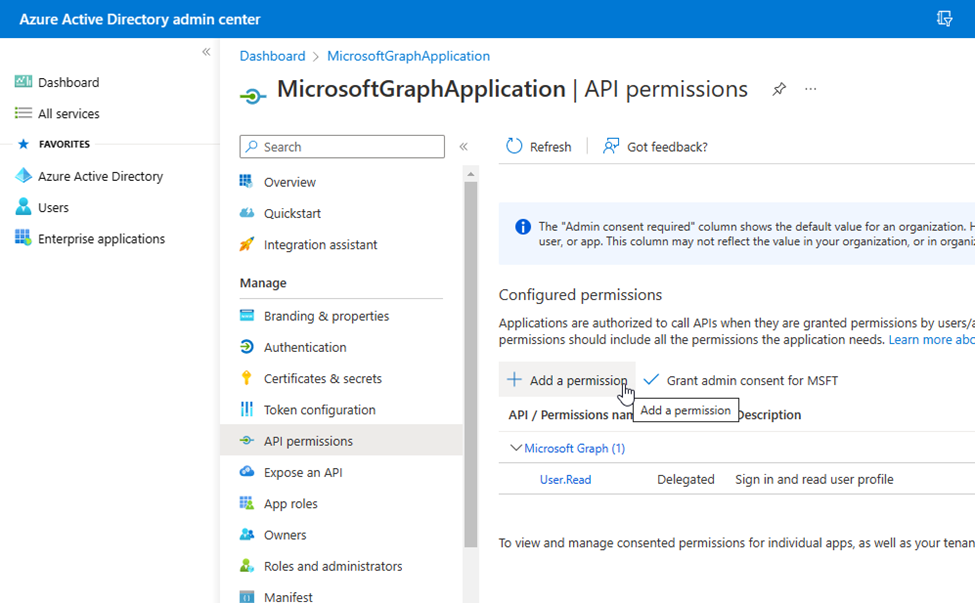

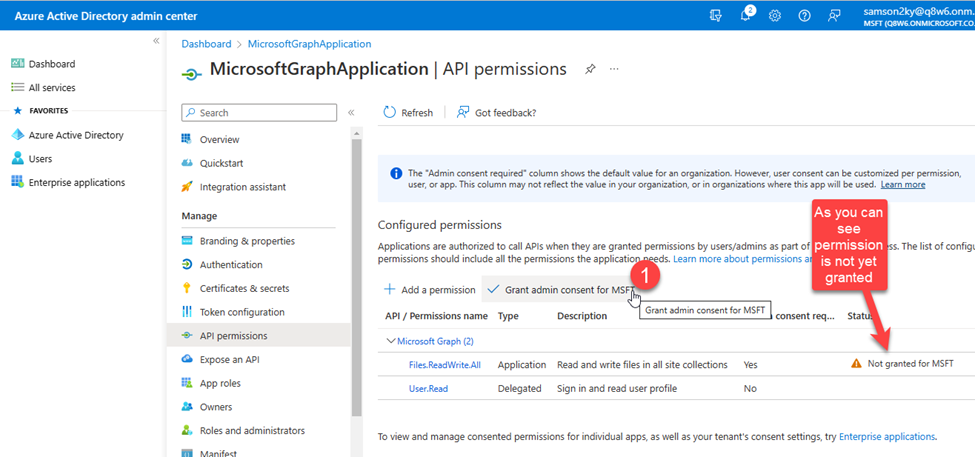

- I clicked on the API permissions menu on the left pane

- To grant the registered application access to manipulate files, I had to grant it read and write access to my files.

- I clicked on the Add a permission option.

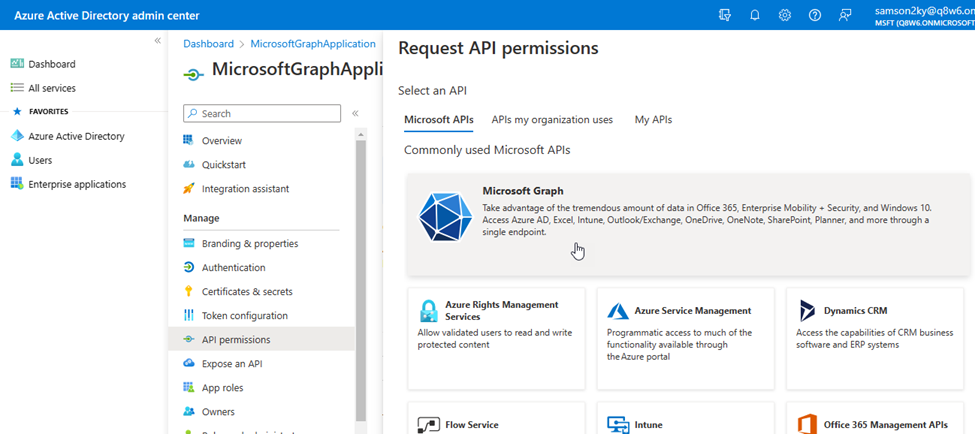

- I clicked on the Microsoft Graph API.

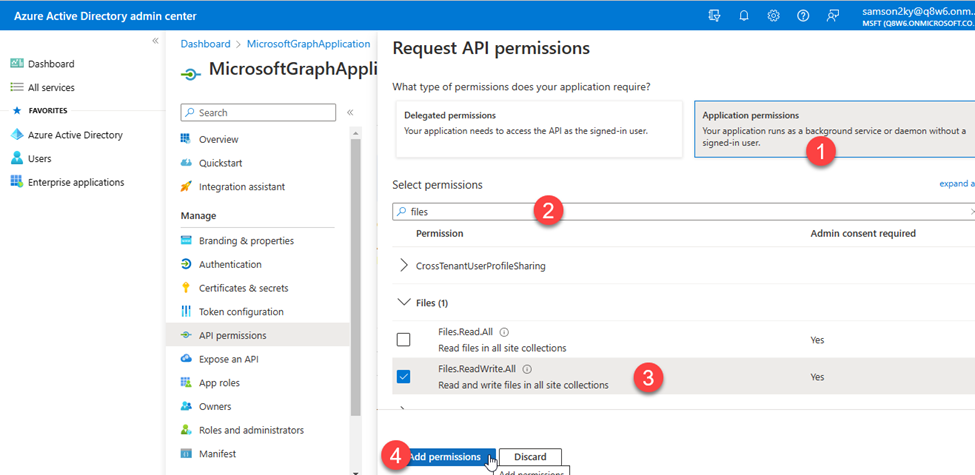

- Since I wanted the application to run in the background without signing in as a user, I selected the Application permissions option, typed files in the available field for easier selection, checked the Files.ReadWrite.All permission and clicked on the Add permissions button.

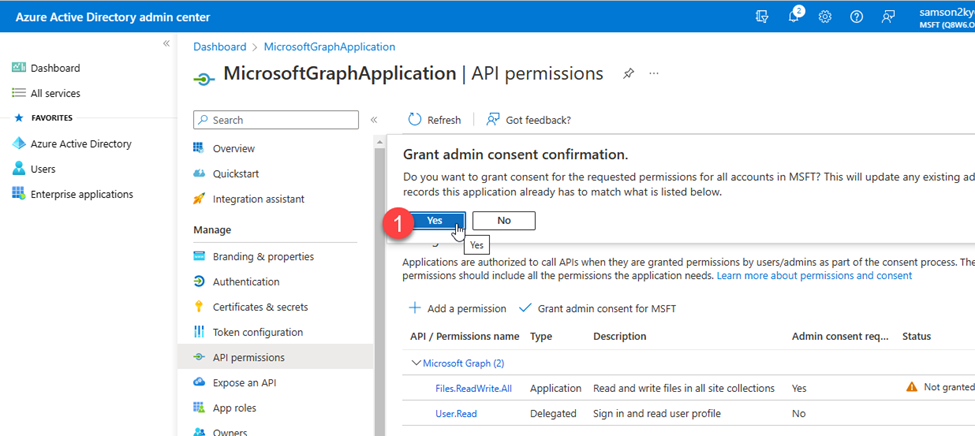

- At this point, I had to grant the application admin consent before it would be permitted to read and write to my files.

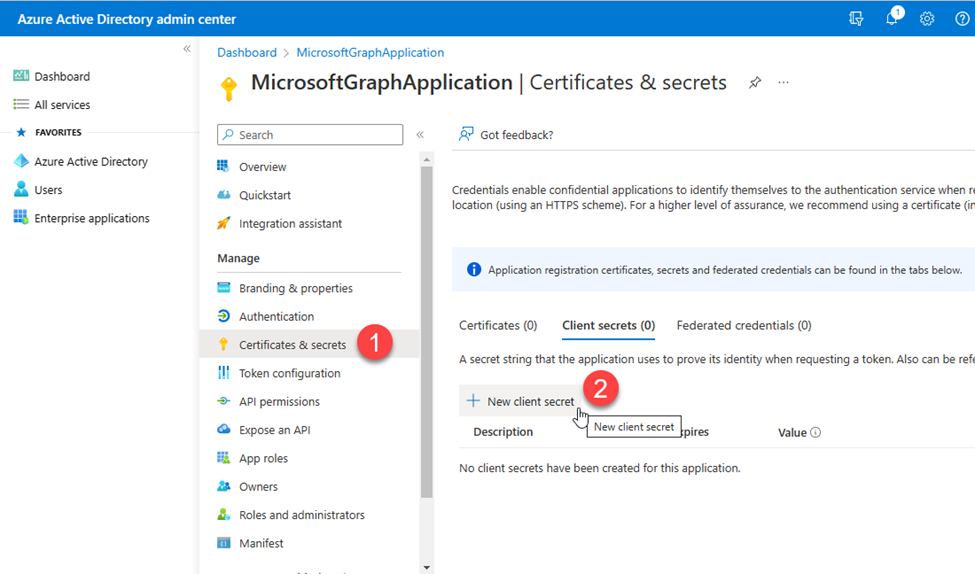

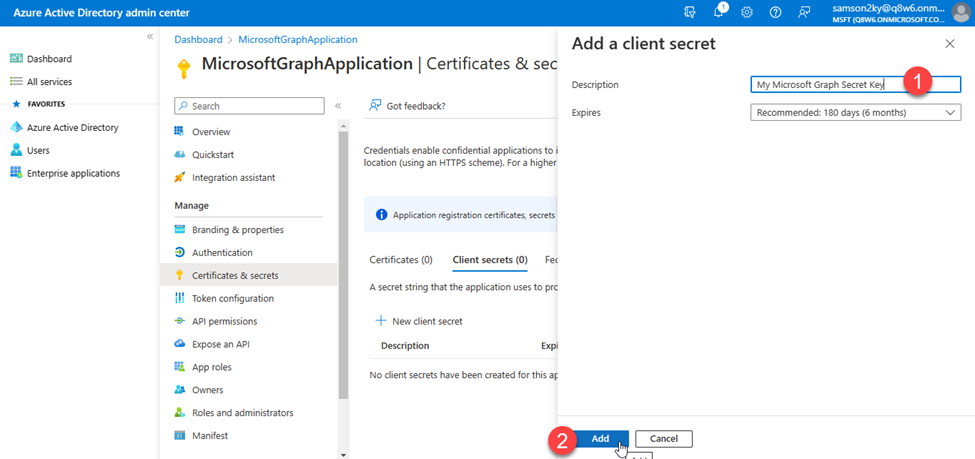

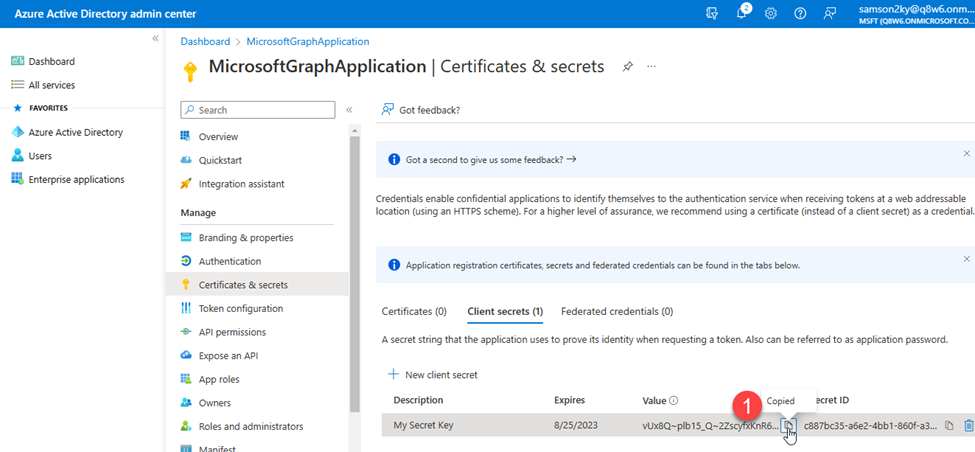

- Next, I had to generate the client secret by clicking on the Certificates & secrets menu on the left panel and clicking on the New client secret button.

- I filled in the client secret description and clicked on the Add button.

- Finally, I copied the Value of the generated secret key.

Writing the Code

- I created a folder, opened a terminal in the directory and executed the command below to bootstrap my console application.

dotnet new console

- I installed some packages which you can easily install by adding the item group below in your .csproj file within the Project tag.

- Next, execute the command below to install them

dotnet restore

- To have my necessary configuration in a single place, I created an appsettings.json file at the root of my project and added the JSON data below:

{

"AzureClientID": "eff50f7f-6900-49fb-a245-168fa53d2730",

"AzureClientSecret": "vUx8Q~plb15_Q~2ZscyfxKnR6VrWm634lIYVRb.V",

"AzureTenantID": "33f6d3c4-7d26-473b-a7f0-13b53b72b52b",

"GitHubClientSecret": "ghp_rtPprvqRPlykkYofA4V36EQPNV4SK210LNt7",

"NameOfNewFile": "chartFile.xlsx"

}

You would need to replace the credentials above with yours.

- In the above JSON, you can see that I populated the values of the keys with the saved secrets and IDs I obtained during the GitHub token registration and from the Azure Directory Application I created.

- To be able to bind the values in the JSON above to a C# class, I created a Config.cs file in the root of my project and added the code below:

using Microsoft.Extensions.Configuration;

namespace MicrosoftGraphDotNet

{

internal class Config

{

// Define properties to hold configuration values

public string? AzureClientId { get; set; }

public string? AzureClientSecret { get; set; }

public string? AzureTenantId { get; set; }

public string? GitHubClientSecret { get; set; }

public string? NameOfNewFile { get; set; }

// Constructor to read configuration values from appsettings.json file

public Config()

{

// Create a new configuration builder and add appsettings.json as a configuration source

IConfiguration config = new ConfigurationBuilder()

.SetBasePath(Directory.GetCurrentDirectory())

.AddJsonFile("appsettings.json")

.Build();

// Bind configuration values to the properties of this class

config.Bind(this);

}

}

}

In the Program.cs file I imported the necessary namespaces that I would be needing by adding the code below:

// Import necessary packages

using System.Text.Json;

using Octokit.GraphQL;

using Octokit.GraphQL.Core;

using Octokit.GraphQL.Model;

using Azure.Identity;

using Microsoft.Graph;

using MicrosoftGraphDotNet;

- Next, I instantiated my Config class:

// retrieve the config

var config = new Config();

- I made use of the Octokit GraphQL API to query all my GitHub repositories for repository names and the languages used in individual repositories. Then I created a variable to hold the list of distinct languages available in all my repositories. After that, I deserialized the response into an array of a custom class I created (Repository class).

// Define user agent and connection string for GitHub GraphQL API

var userAgent = new ProductHeaderValue("YOUR_PRODUCT_NAME", "1.0.0");

var connection = new Connection(userAgent, config.GitHubClientSecret!);

// Define GraphQL query to fetch repository names and their associated programming languages

var query = new Query()

.Viewer.Repositories(

isFork: false,

affiliations: new Arg<IEnumerable<RepositoryAffiliation?>>(

new RepositoryAffiliation?[] { RepositoryAffiliation.Owner })

).AllPages().Select(repo => new

{

repo.Name,

Languages = repo.Languages(null, null, null, null, null).AllPages().Select(language => language.Name).ToList()

}).Compile();

// Execute the GraphQL query and deserialize the result into a list of repositories

var result = await connection.Run(query);

var languages = result.SelectMany(repo => repo.Languages).Distinct().ToList();

var repoNameAndLanguages = JsonSerializer.Deserialize<Repository[]>(JsonSerializer.Serialize(result));

- Since I am using top-level statements in my code I decided to ensure the custom class I created would be the last thing in the Program.cs file.

// Define a class to hold repository data

class Repository

{

public string? Name { get; set; }

public List<string>? Languages { get; set; }

}

- Now that I’ve written the code to retrieve my repository data, the next step is to write the code to create an Excel File, Create a table, create rows and columns, populate the rows and columns with data and use that to plot a chart that visualizes the statistics of the top GitHub Programming languages used throughout my repositories.

- I initialized the Microsoft Graph .NET SDK using the code below:

// Define credentials and access scopes for Microsoft Graph API

var tokenCred = new ClientSecretCredential(

config.AzureTenantId!,

config.AzureClientId!,

config.AzureClientSecret!);

var graphClient = new GraphServiceClient(tokenCred);

- Next, I created an Excel file:

// Define the file name and create a new Excel file in OneDrive

var driveItem = new DriveItem

{

Name = config.NameOfNewFile!,

File = new Microsoft.Graph.File

{

}

};

var newFile = await graphClient.Drive.Root.Children

.Request()

.AddAsync(driveItem);

- I created a table that spans the length of the data I have horizontally and vertically:

// Define the address of the Excel table and create a new table in the file

var address = "Sheet1!A1:" + (char)('A' + languages.Count) + repoNameAndLanguages?.Count();

var hasHeaders = true;

var table = await graphClient.Drive.Items[newFile.Id].Workbook.Tables

.Add(hasHeaders, address)

.Request()

.PostAsync();

- I created a 2-Dimensional List that would represent my data in the format below

The code that represents the data above can be seen below:

// Define the first row of the Excel table with the column headers

var firstRow = new List<string> { "Repository Name" }.Concat(languages).ToList();

// Convert the repository data into a two-dimensional list

List<List<string>> totalRows = new List<List<string>> { firstRow };

foreach (var value in repoNameAndLanguages!)

{

var row = new List<string> { value.Name! };

foreach (var language in languages)

{

row.Add(value.Languages!.Contains(language) ? "1" : "0");

}

totalRows.Add(row);

}

// Add a new row to the table with the total number of repositories for each language

var languageTotalRow = new List<string>();

// Add "Total" as the first item in the list

languageTotalRow.Add("Total");

// Loop through each programming language in the header row

for (var languageIndex = 1; languageIndex < totalRows[0].Count; languageIndex++)

{

// Set the total count for this language to 0

var languageTotal = 0;

// Loop through each repository in the table

for (var repoIndex = 1; repoIndex < totalRows.Count; repoIndex++)

{

// If the repository uses this language, increment the count

if (totalRows[repoIndex][languageIndex] == "1")

{

languageTotal++;

}

}

// Add the total count for this language to the languageTotalRow list

languageTotalRow.Add(languageTotal.ToString());

}

// Add the languageTotalRow list to the bottom of the table

totalRows.Add(languageTotalRow);

- I added the rows of data to the table using the code below:

// Create a new WorkbookTableRow object with the totalRows list serialized as a JSON document

var workbookTableRow = new WorkbookTableRow

{

Values = JsonSerializer.SerializeToDocument(totalRows),

Index = 0,

};

// Add the new row to the workbook table

await graphClient.Drive.Items[newFile.Id].Workbook.

Tables[table.Id].Rows

.Request()

.AddAsync(workbookTableRow);

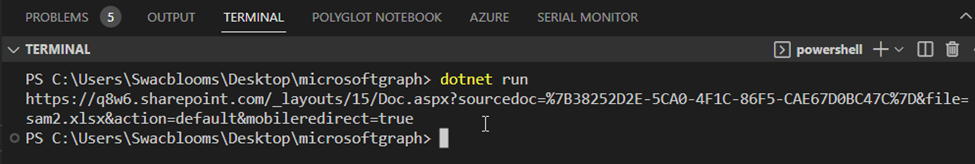

- Finally, I created a ColumnClustered chart using my data and logged the URL of the spreadsheet.

// Add a new chart to the worksheet with the language totals as data

await graphClient.Drive.Items[newFile.Id].Workbook.Worksheets["Sheet1"].Charts

.Add("ColumnClustered", "Auto", JsonSerializer.SerializeToDocument($"Sheet1!B2:{(char)('A' + languages.Count)}2, Sheet1!B{repoNameAndLanguages.Count() + 3}:{(char)('A' + languages.Count)}{repoNameAndLanguages.Count() + 3}"))

.Request()

.PostAsync();

// Print the URL of the new file to the console

Console.WriteLine(newFile.WebUrl);

- After executing the command dotnet run, I got the URL of the Excel file as output.

- On clicking the link I was able to view the awesome visualization of the languages used across my GitHub repositories.

And that’s the end of this article. I hope you enjoyed it and got to see how I used Microsoft Graph .NET SDK to automate this process.

To learn more about Microsoft Graph API and SDKs:

Microsoft Graph https://developer.microsoft.com/graph

Develop apps with the Microsoft Graph Toolkit – Training

Hack Together: Microsoft Graph and .NET

Hack Together is a hackathon for beginners to get started building scenario-based apps using .NET and Microsoft Graph. In this hackathon, you will kick-start learning how to build apps with Microsoft Graph and develop apps based on the given Top Microsoft Graph Scenarios, for a chance to win exciting prizes while meeting Microsoft Graph Product Group Leaders, Cloud Advocates, MVPs and Student Ambassadors. The hackathon starts on March 1st and ends on March 15th. Participants are encouraged to follow the Hack Together Roadmap for a successful hackathon.

Demo/Sample Code

You can access the code for this project at https://github.com/sammychinedu2ky/MicrosoftGraphDotNet

by Contributed | Mar 3, 2023 | Technology

Happy Friday, MTC’ers! I hope you’ve had a good week and that March is treating you well so far. Let’s dive in and see what’s going on in the community this week!

MTC Moments of the Week

First up, we are shining our MTC Member of the Week spotlight on a community member from Down Under, @Doug_Robbins_Word_MVP! True to his username, Doug has been a valued MVP for over 20 years and an active contributor to the MTC across several forums, including M365, Word, Outlook, and Excel. Thanks for being awesome, Doug!

Jumping to events, on Tuesday, we closed out February with a brand new episode of Unpacking Endpoint Management all about Group Policy migration and transformation. Our hosts @Danny Guillory and @Steve Thomas (GLADIATOR) sat down for a fun policy pizza party with guests @mikedano, @Aasawari Navathe, @LauraArrizza, and @Joe Lurie from the Intune engineering team. We will be back next month to have a conversation about Endpoint Data Loss Prevention and Zero Trust – click here to RSVP and add it to your calendar!

Then on Wednesday, we had our first AMA of the new month with the Microsoft Syntex team. This event focused on answering the community’s questions and listening to user feedback about Syntex’s new document processing pay-as-you-go metered services with a stacked panel of experts including @Ian Story, @James Eccles, @Ankit_Rastogi, @jolenetam, @Kristen_Kamath, @Shreya_Ganguly, and @Bill Baer. Thank you to everyone who contributed to the discussion, and stay tuned for more Syntex news!

And last but not least, over on the Blogs, @Hung_Dang has shared a skilling snack for you to devour during your next break, where you can learn how to make the most of your time with the help of Windows Autopilot!

Unanswered Questions – Can you help them out?

Every week, users come to the MTC seeking guidance or technical support for their Microsoft solutions, and we want to help highlight a few of these each week in the hopes of getting these questions answered by our amazing community!

In the Exchange forum, @Ian Gibason recently set up a Workspace mailbox that is not populating the Location field when using Room Finder, leading to user requests being denied. Have you seen this before?

Perhaps you can help @infoatlantic over in the Intune forum, who’s looking for a way to sync a list of important support contacts to company-owned devices.

Upcoming Events – Mark Your Calendars!

——————————–

For this week’s fun fact…

Did you know that the little wave-shaped blob of toothpaste you squeeze onto your toothbrush has a name? It’s called a “nurdle” (the exact origin of this term isn’t clear), but there was even a lawsuit in 2010 that Colgate filed against Glaxo (the maker of Aquafresh) to prohibit them from depicting the nurdle in their toothpaste packaging. Glaxo countersued, and the case was settled after a few months. The more you know!

Have a great weekend, everyone!

by Contributed | Mar 3, 2023 | Technology

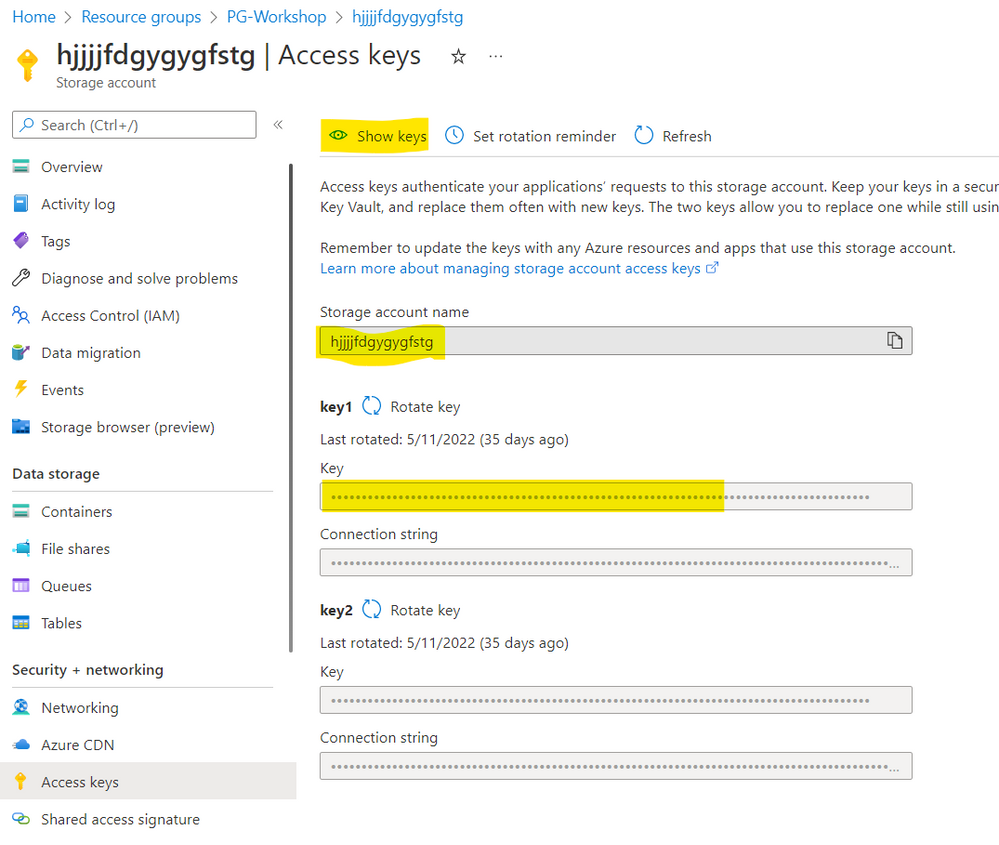

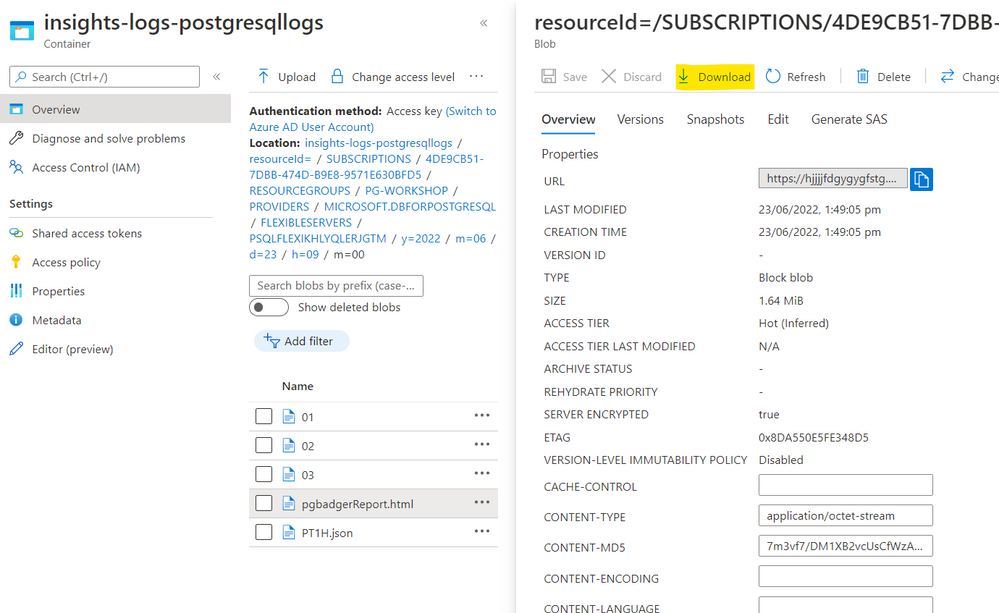

pgBadger is one of the most comprehensive Postgres troubleshooting tools available. It gives users insight into a wide variety of events happening in the database, including:

- (Vacuums tab) Autovacuum actions – how many ANALYZE and VACUUM actions were triggered by autovacuum daemon including number of tuples and pages removed per table.

- (Temp Files tab) Distribution of temporary files and their sizes, queries that generated temporary files.

- (Locks tab) Types of locks in the system, most frequent waiting queries, and queries that waited the most. Unfortunately, the report does not show which query is holding the lock; only the queries that are waiting are shown.

- (Top tab) Slow query log

- (Events tab) all the errors, fatals, warnings etc. aggregated.

- And many more

You can see a sample pgBadger report here.

You can generate a pgBadger report from Azure Database for PostgreSQL Flexible Server in multiple ways:

- Using Diagnostic Settings and redirecting logs to a storage account; mount storage account onto VM with BlobFuse.

- Using Diagnostic Settings and redirecting logs to a storage account; download the logs from storage account to the VM.

- Using Diagnostic Settings and redirecting logs to Log Analytics workspace.

- Using plain Server Logs*

*Coming soon!

In this article we will describe the first solution: using Diagnostic Settings, redirecting logs to a storage account, and mounting that storage account onto a VM with BlobFuse. At the end of the exercise we will have a storage account filled with logs from Postgres Flexible Server and an operational VM with direct access to the logs stored in the storage account, as shown in the picture below:

generate pgBadger report from Azure Database for PostgreSQL Flexible Server

To be able to generate the report you need to configure the following items:

- Adjust Postgres Server configuration

- Create storage account (or use existing one)

- Create Linux VM (or use existing one)

- Configure Diagnostic Settings in Postgres Flexible Server and redirect logs to the storage account.

- Mount storage account onto VM using BlobFuse

- Install pgBadger on the VM

- Ready to generate reports!

Step 1 Adjust Postgres Server configuration

Navigate to the Server Parameters blade in the portal and modify the following parameters:

log_line_prefix = '%t %p %l-1 db-%d,user-%u,app-%a,client-%h ' #Please mind the space at the end!

log_lock_waits = on

log_temp_files = 0

log_autovacuum_min_duration = 0

log_min_duration_statement = 0 # 0 is recommended only for test purposes; for production usage, please consider a much higher value, such as 60000 (1 minute), to avoid excessive resource usage

Adjust Postgres Server configuration

After making the changes, select “Save”:

Save changed Postgres parameters

Step 2 Create storage account (or use existing one)

Please keep in mind that the storage account needs to be created in the same region as Azure Database for PostgreSQL Flexible Server. Please find the instruction here.

Step 3 Create Linux VM (or use existing one)

In this blog post we will use Ubuntu 20.04 as an example, but nothing stops you from using an rpm-based system; the only difference will be in the way BlobFuse and pgBadger are installed.

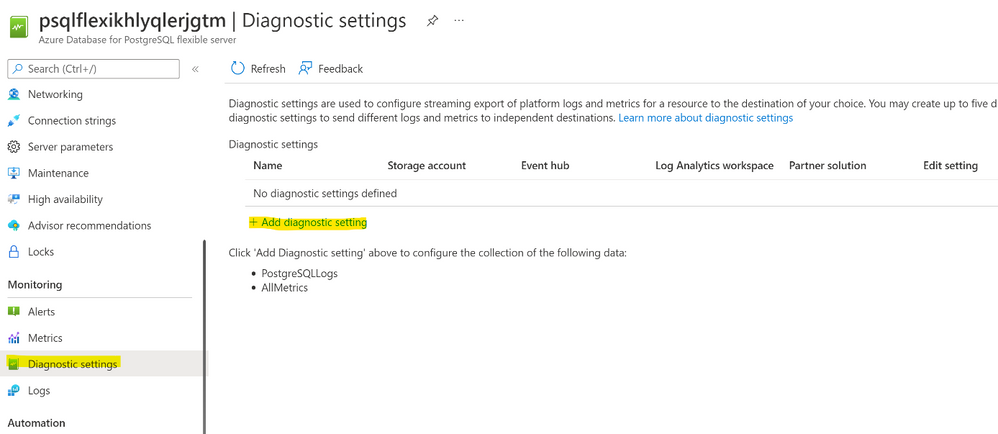

Step 4 Configure Diagnostic Settings in Postgres Flexible Server and redirect logs to the storage account.

Navigate to the Diagnostic settings page of your Azure Database for PostgreSQL Flexible Server instance in the Azure Portal and add a new diagnostic setting with the storage account as a destination:

Select the Save button.

Step 5 Mount storage account onto VM using BlobFuse

In this section you will mount the storage account onto your VM using BlobFuse. This way you will see the logs in the storage account as standard files on your VM. First, let’s download and install the necessary packages. Commands for Ubuntu 20.04 are as follows (feel free to simply copy and paste them):

wget https://packages.microsoft.com/config/ubuntu/20.04/packages-microsoft-prod.deb

sudo dpkg -i packages-microsoft-prod.deb

sudo apt-get update -y

sudo apt-get install -y blobfuse

For other distributions please follow the official documentation.

Use a ramdisk for the temporary path (Optional Step)

The following example creates a ramdisk of 16 GB and a directory for BlobFuse. Choose the size based on your needs. This ramdisk allows BlobFuse to open files up to 16 GB in size.

sudo mkdir /mnt/ramdisk

sudo mount -t tmpfs -o size=16g tmpfs /mnt/ramdisk

sudo mkdir /mnt/ramdisk/blobfusetmp

sudo chown <youruser> /mnt/ramdisk/blobfusetmp

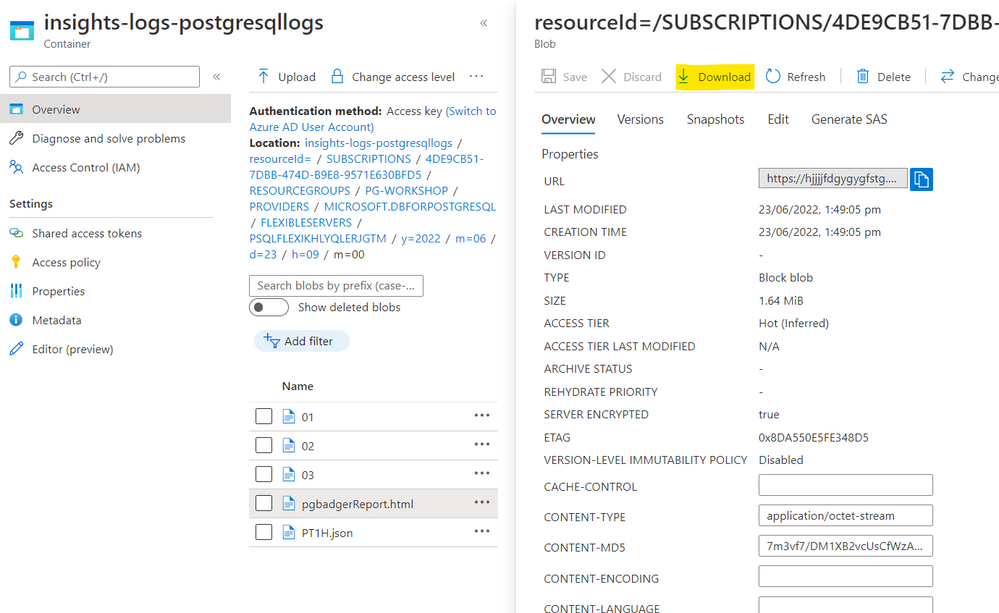

Authorize access to your storage account

You can authorize access to your storage account by using the account access key, a shared access signature, a managed identity, or a service principal. Authorization information can be provided on the command line, in a config file, or in environment variables. For details, see Valid authentication setups in the BlobFuse readme.

For example, suppose you are authorizing with the account access keys and storing them in a config file. The config file should have the following format:

accountName myaccount

accountKey storageaccesskey

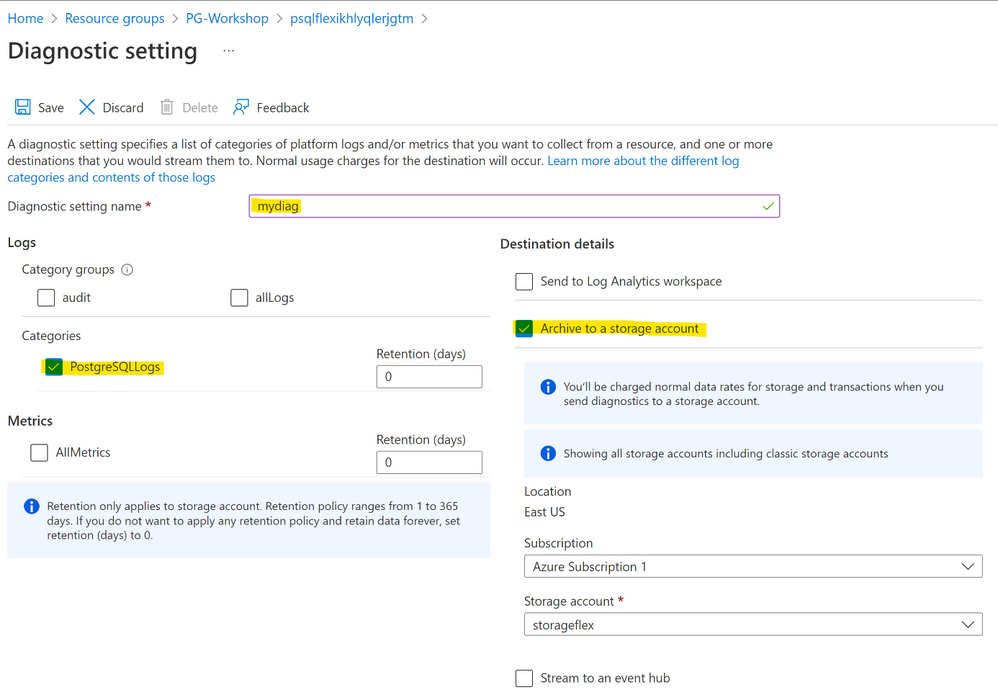

containerName insights-logs-postgresqllogs

Prepare this file in the editor of your choice. You will find the values for accountName and accountKey in the Azure Portal; the container name is the same as in the example above. The accountName is the name of your storage account, not the full URL.
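As a minimal sketch of this step, the helper below writes the three-line config file and restricts it to its owner. The function name write_fuse_config and its argument order are hypothetical and chosen only for illustration; the key names and the container name come from the example above.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of BlobFuse): write the config file described above.
# Arguments: <config path> <storage account name> <access key> <container name>
write_fuse_config() {
  local cfg_path="$1" account="$2" key="$3" container="$4"
  (
    umask 077  # a newly created file gets mode 600
    printf 'accountName %s\naccountKey %s\ncontainerName %s\n' \
      "$account" "$key" "$container" > "$cfg_path"
  )
  chmod 600 "$cfg_path"  # also tighten a file that already existed
}
```

It could be called, for example, as write_fuse_config ~/fuse_connection.cfg myaccount "$STORAGE_KEY" insights-logs-postgresqllogs, where STORAGE_KEY is an assumed environment variable holding the access key so that the key does not end up in the shell history.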

Navigate to your storage account in the portal and choose the Access keys page:

Copy the storage account name and key into the fuse_connection.cfg file in your home directory, then mount your storage account container onto a directory in your VM:

vi fuse_connection.cfg

chmod 600 fuse_connection.cfg

mkdir ~/mycontainer

sudo blobfuse ~/mycontainer --tmp-path=/mnt/resource/blobfusetmp --config-file=/home/<youruser>/fuse_connection.cfg -o attr_timeout=240 -o entry_timeout=240 -o negative_timeout=120

sudo -i

cd /home/<youruser>/mycontainer/

ls # check if you see container mounted

# Please use tab key for directory autocompletion; do not copy and paste!

cd resourceId=/SUBSCRIPTIONS/<your subscription id>/RESOURCEGROUPS/PG-WORKSHOP/PROVIDERS/MICROSOFT.DBFORPOSTGRESQL/FLEXIBLESERVERS/PSQLFLEXIKHLYQLERJGTM/y=2022/m=06/d=16/h=09/m=00/

head PT1H.json # to check if file is not empty

At this point you should be able to see some logs being generated.

Step 6 Install pgBadger on the VM

Now we need to install the pgBadger tool on the VM. On Ubuntu, simply use the command below:

sudo apt-get install -y pgbadger

For other distributions please follow the official documentation.

Step 7 Ready to generate reports!

Choose the file you want to generate the pgBadger report from and go to the directory where the chosen PT1H.json file is stored. For instance, to generate a report for 2022-05-23, 9 o’clock, go to the following directory:

cd /home/pgadmin/mycontainer/resourceId=/SUBSCRIPTIONS/***/RESOURCEGROUPS/PG-WORKSHOP/PROVIDERS/MICROSOFT.DBFORPOSTGRESQL/FLEXIBLESERVERS/PSQLFLEXIKHLYQLERJGTM/y=2022/m=05/d=23/h=09/m=00
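The last part of that path follows a fixed y=/m=/d=/h=/m=00 pattern, so it can be assembled from a date and an hour instead of typed by hand. The sketch below is one way to do that; the function name hourly_log_subpath is hypothetical, and the trailing m=00 is kept fixed only because both example paths in this article end that way.

```shell
#!/usr/bin/env sh
# Hypothetical helper: print the hourly sub-path used by the diagnostic logs,
# e.g. y=2022/m=05/d=23/h=09/m=00, from a year, month, day and hour.
hourly_log_subpath() {
  # awk reads "05" or "09" as decimal numbers, so zero-padded input is safe.
  awk -v y="$1" -v mo="$2" -v d="$3" -v h="$4" \
    'BEGIN { printf "y=%04d/m=%02d/d=%02d/h=%02d/m=00\n", y, mo, d, h }'
}
```

For the example above, hourly_log_subpath 2022 05 23 09 prints y=2022/m=05/d=23/h=09/m=00, which can be appended to the server-specific directory under your mount point.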

Since the PT1H.json file is a JSON file and the Postgres log lines are stored in the message and statement values of the JSON, we need to extract the logs first. The most convenient tool for the job is jq, which you can install on Ubuntu using the following command:

sudo apt-get install jq -y

Once jq is installed, we need to extract the Postgres log from the JSON file and save it in another file (PT1H.log in this example):

jq -r '.properties | .message + .statement' PT1H.json > PT1H.log
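If you want a report that covers more than one hour, the same jq filter can be applied to every PT1H.json file below a directory, for example a whole day. This is only a sketch: the function name extract_pg_logs and the choice of output file are assumptions, and it relies on the h=00 to h=23 directory names sorting in chronological order.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract Postgres log lines from every PT1H.json below a
# directory (for example a whole day, d=23) into a single log file.
extract_pg_logs() {
  local src_dir="$1" out_file="$2"
  : > "$out_file"  # start from an empty output file
  # Sorting the paths keeps the hourly files in chronological order.
  find "$src_dir" -type f -name 'PT1H.json' | sort | while IFS= read -r json_file; do
    jq -r '.properties | .message + .statement' "$json_file" >> "$out_file"
  done
}
```

The resulting file can be passed to pgbadger in place of a single PT1H.log.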

Finally, we are ready to generate the pgBadger report:

pgbadger --prefix='%t %p %l-1 db-%d,user-%u,app-%a,client-%h ' PT1H.log -o pgbadgerReport.html

Now you can download your report either from your storage account in the Azure Portal or by using the scp command.

Happy Troubleshooting!

by Contributed | Mar 2, 2023 | Technology

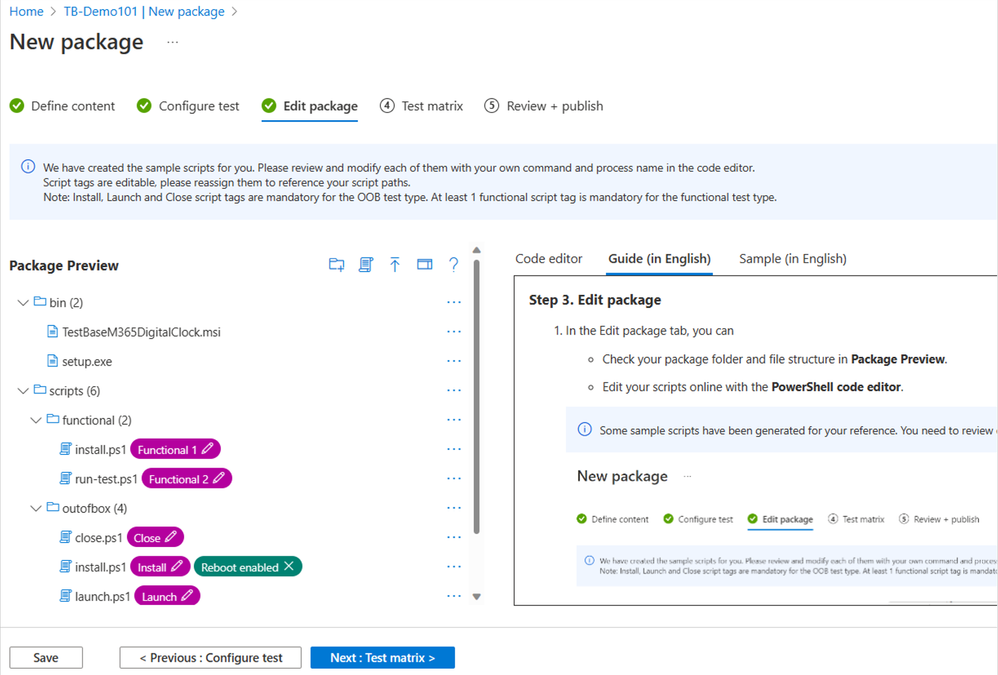

Expect your apps to work on Windows 11. If you still need extra reassurance, test only your most critical apps with Test Base for Microsoft 365. This efficient and cost-effective testing service is available today to all IT pros, along with developers and independent software vendors.

We hope you’re already experiencing the safer, smoother, and more collaborative work environment with the new Windows 11 features. We’re also here to help you ensure business continuity by maintaining high application compatibility rates and supporting you in testing the mission-critical applications that you manage. Many of you have traditional (read: legacy) testing systems that are provisioned in on-premises lab infrastructure. This setup has a myriad of challenges: limited capacity, manual setup and configuration, cost associated with third-party software, etc. That’s why we created Test Base for Microsoft 365. It’s a cloud-based testing solution that allows IT pros and developers to test their applications against pre-release and in-market versions of Windows and Office in a Microsoft-managed environment. Scale your testing, use data-driven insights, and ensure fewer deployment issues and helpdesk escalations with targeted testing of critical apps.

Let’s take a closer look at how you can leverage Test Base to optimize your testing with:

- Proactive issue discovery with insights

- Automated testing against a large matrix

- Worry-free interoperability assurance

- Support throughout the entire validation lifecycle

Proactive issue discovery with insights

If you’re on a mission to be more proactive with issue detection, give Test Base a try. Do you wish you had more time to test your apps before the monthly Windows updates go out? We know reactive issue detection is stressful once your end users have already been impacted. So why not use the prebuilt test scripts in our smooth onboarding experience? With them, Test Base performs the install, launch, close, and uninstall actions on your apps 30 times for extra assurance. Get helpful reports and know that your apps won’t have any foundational issues on the new version of Windows.
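The repeated install, launch, close, and uninstall cycle can be pictured as a small loop. The following is only an illustrative Python sketch, not the prebuilt Test Base scripts themselves: `run_cycles`, `PHASES`, and the callables passed in are hypothetical names, and each callable stands in for whatever command performs that action for your app.

```python
from collections import Counter

# The four actions named in the article, in the order they run.
PHASES = ("install", "launch", "close", "uninstall")


def run_cycles(actions, cycles=30):
    """Run every phase in order for `cycles` rounds; count failures per phase.

    `actions` maps a phase name to a zero-argument callable that returns
    True on success. An exception is counted as a failure.
    """
    failures = Counter()
    for _ in range(cycles):
        for phase in PHASES:
            try:
                ok = bool(actions[phase]())
            except Exception:
                ok = False
            if not ok:
                failures[phase] += 1
    return dict(failures)
```

An empty result means every phase succeeded in every round; a result such as `{"launch": 2}` points at the phase worth investigating first.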

Use prebuilt or personalized scripts to test updates

Here’s how you can use the prebuilt test scripts and get actionable insights:

- Access the Test Base portal.

- Select New Package from the left-hand side menu.

- Click on the Edit Package tab and select a pre-generated test script from the left-hand side pane.

- Follow the step-by-step onboarding experience from there!

The following image shows the Package Preview step of the onboarding experience, illustrating a custom script to test the installation of an application. You can also choose to write your own functional tests to validate the specific functionality of your applications.

Pre-generated template scripts available on the New Package page inside Test Base


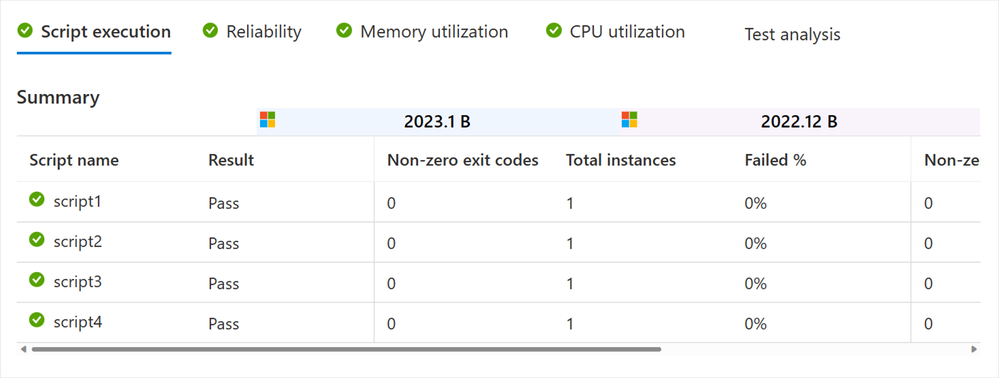

After you configure your test matrix on the following screen, we automatically test your applications against Windows updates. You’ll also get an execution report and summary of any failures. Use this report to see data on your applications’ reliability, memory, and CPU utilization across all your configured tests. At the end, see Event Trace Logs (ETLs) and a video recording of the test execution to easily debug any issues that you find.

Script execution report in the test results summary in Test Base


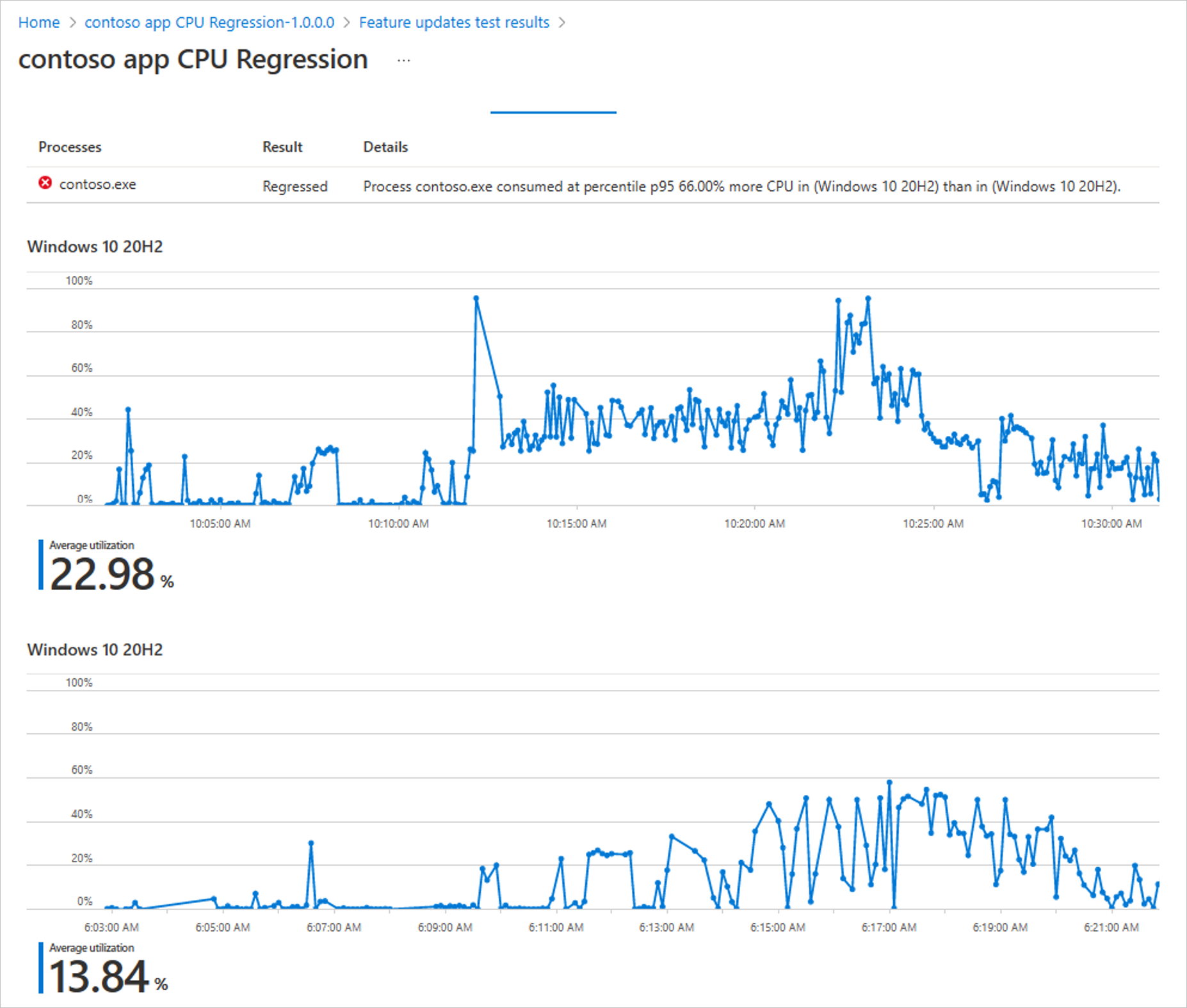

Compare CPU usage for feature updates

As part of the test results, view CPU utilization insights for your applications during Windows feature updates. Within the same onboarding experience in Test Base > New Package > Create New Package Online > Test Matrix tab, you can view a side-by-side analysis between your configured run and a baseline run. Select any of the familiar OSs as the baseline, and it will appear below your test graph. In the following example, the tested December monthly security build (2022.12 B) shows up on the top, while the baseline November monthly security build (2022.11 B) is listed on the bottom. The results are summarized above both charts with a regression status and details.

Side-by-side CPU utilization analysis of a test run against the baseline inside Test Base


How do we determine the regression status? We check for a statistically significant difference in CPU usage for processes between the baseline and target run. A run is regressed if one or more relevant processes experience a statistically significant increase at one of our observed percentiles. Use these insights for faster regression detection and to ensure enough lead time to fix any issues you might find. Learn more about the CPU regression analysis in our official documentation.
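To make the idea of a per-percentile significance check concrete, here is a rough Python sketch. The article does not say which statistical test Test Base uses, so this example swaps in a one-sided permutation test as a stand-in. The percentiles (50, 90, 95), the significance level, and every function name are assumptions chosen for illustration.

```python
import random


def percentile(samples, p):
    """Return the p-th percentile (0 <= p <= 100) with linear interpolation."""
    ordered = sorted(samples)
    rank = (len(ordered) - 1) * p / 100
    lo = int(rank)
    hi = min(lo + 1, len(ordered) - 1)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (rank - lo)


def increase_p_value(baseline, target, p, rounds=2000, seed=0):
    """One-sided permutation test for an increase at percentile p.

    Shuffles the pooled samples and counts how often a random split shows
    an increase at least as large as the observed one.
    """
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    observed = percentile(target, p) - percentile(baseline, p)
    pooled = list(baseline) + list(target)
    hits = 0
    for _ in range(rounds):
        rng.shuffle(pooled)
        fake_base = pooled[:len(baseline)]
        fake_target = pooled[len(baseline):]
        if percentile(fake_target, p) - percentile(fake_base, p) >= observed:
            hits += 1
    return (hits + 1) / (rounds + 1)


def run_is_regressed(baseline_by_proc, target_by_proc,
                     percentiles=(50, 90, 95), alpha=0.01):
    """Flag the run if any process shows a significant CPU increase."""
    for proc, base in baseline_by_proc.items():
        tgt = target_by_proc.get(proc)
        if not tgt:
            continue  # process absent from the target run; nothing to compare
        if any(increase_p_value(base, tgt, p) < alpha for p in percentiles):
            return True
    return False
```

Each dictionary maps a process name to its CPU utilization samples for one run. A production analysis would also need to account for checking several percentiles and processes at once, which this sketch ignores.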

Automated testing against a large matrix

Do you manage scores of devices that run multiple versions of Windows? Then you might worry about eventually facing an unexpected compatibility issue between Windows and another Microsoft first-party app update. Test Base is integrated with the Windows build system, so we can help you test your apps against builds as soon as they are available and before they are released to Windows devices. Use our comprehensive OS coverage and automated testing to detect potential issues as early as possible, improve your SLA, and reduce labor costs.

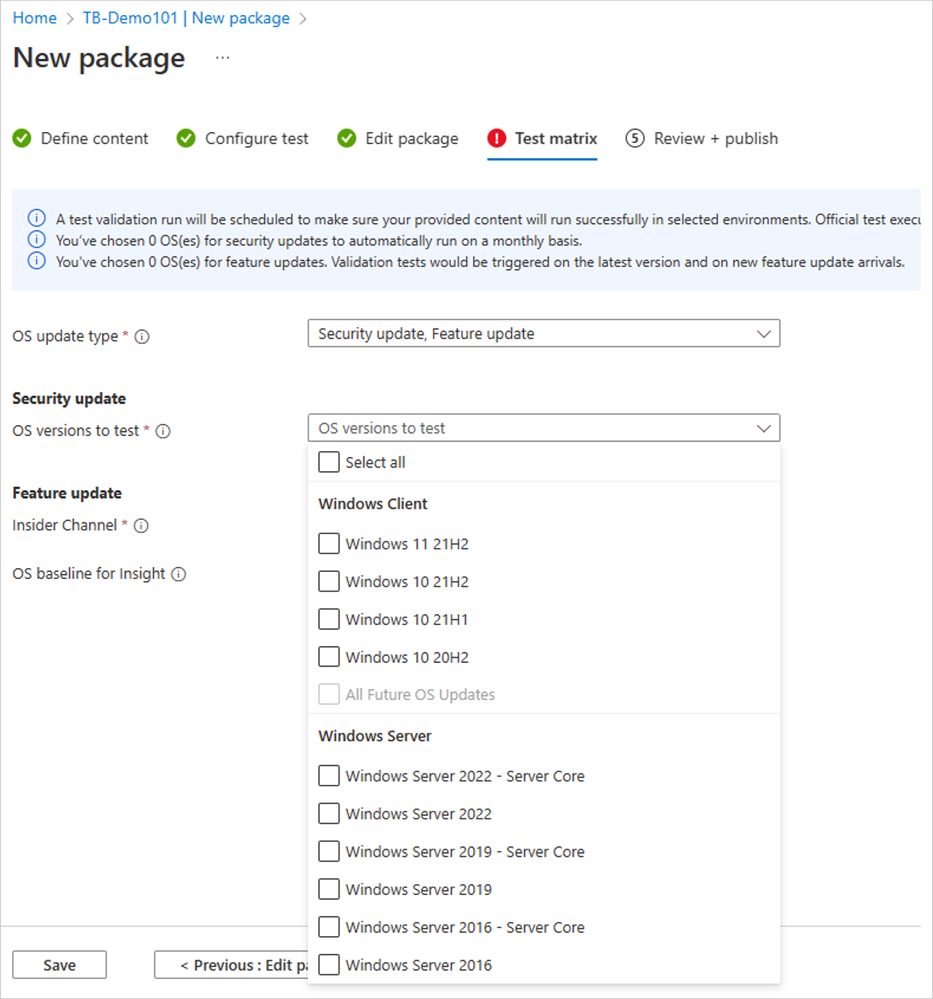

- Select New Package from the left-hand side menu.

- Within the onboarding guide, click on the Test Matrix page.

- Select the OS update type from among security update, feature update, or both.

- Use the drop-down menu to select all applicable OS versions (either in-market or pre-release) that you want to test.

- Optional: Select Insider Channel if you want to include tests on the latest available features.

- Choose your OS baseline for insight.

Note: To choose a baseline OS, evaluate which OS version your organization is currently using. This will offer you more regression insights. You can also leave it empty if you prefer to focus on new feature testing.
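The selections above amount to a cross product of update types and OS versions, each compared against an optional baseline. The Python sketch below only models that idea; it is not a Test Base API, and `build_test_matrix`, the dictionary keys, and the OS names in the usage note are illustrative assumptions.

```python
from itertools import product


def build_test_matrix(update_types, os_versions, baseline=None):
    """Return one test entry per (update type, OS version) pair.

    `baseline` is the OS version used for regression comparison, or None
    when the focus is on new feature testing only.
    """
    return [
        {"update_type": update, "os_version": version, "baseline": baseline}
        for update, version in product(update_types, os_versions)
    ]
```

For example, `build_test_matrix(["security", "feature"], ["Windows 11 22H2", "Windows 10 22H2"], baseline="Windows 10 22H2")` yields four entries, which shows how quickly the matrix grows as you add versions.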


The Test Base testing matrix of in-market and pre-release security and feature updates


That’s how you can easily automate testing against in-market and pre-release Windows security and feature updates. Start today with these considerations:

- To become a Full Access customer and start testing on pre-release updates, see Request to change access level.

- To always get the latest features available, select a Windows Insider channel to run your tests on.

- In case you still don’t find the OS you want to use as a baseline, let us know!

Worry-free interoperability assurance

We know your users have multiple applications running on their devices at the same time: mail app, browser, collaboration tools, etc. Sometimes, updates affect the interaction between applications and the OS, which could then affect the application’s performance.

Do you find yourself wishing for better predictability of regressions from Microsoft product updates or more lead time to fix occasional resulting issues? Use our interoperability tests with detailed reliability signals just for that. Find them within the same Test Base onboarding experience:

- Select the New Package flow.

- Navigate to the Configure test tab.

- Under Test type, turn on the Pre-install Microsoft apps toggle.

The Pre-install Microsoft apps option is toggled on in the New package configuration in Test Base


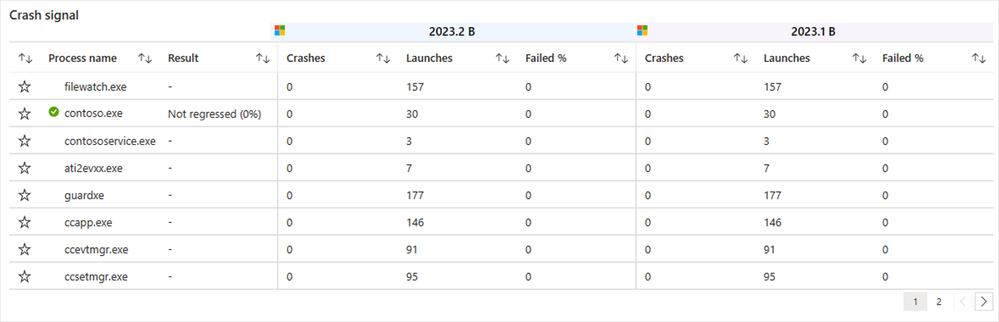

For interoperability assurance, you’d want to look for any signals of regressions after running the test. From the test summary, select the run you want to examine closer, and navigate to the Reliability tab. Here’s what a successful test would look like, comparing the number of crashes between the target test run and the baseline.

Detailed reliability signals for feature update test results in Test Base


With Test Base, you can conduct pre-release testing against monthly Microsoft 365 and Office 365 updates for extra confidence that you are covered across a broad range of Microsoft product updates. Leverage the automation in Test Base to schedule monthly tests whenever the monthly updates become available! For that, learn how to pick security testing options in the Set test matrix step in our official documentation.

Support throughout the entire validation lifecycle

The best part about Test Base is that you can use it throughout the entire Windows and Microsoft 365 apps validation lifecycle, supported by people and tools you trust. Use our support not only for the annual Windows updates, but also for the monthly security updates.

- If you’re a developer, take advantage of integrations with familiar development tools like Azure DevOps and GitHub to test as you develop. That way, you can catch regressions before they are introduced to end users. Check out our documentation on how you can integrate Test Base into your Continuous Integration/Continuous Delivery pipeline.

- Whether you’re an app developer or an IT pro, work with an App Assure engineer to get support with remediating any issues you find.

The table below outlines some of the key benefits that Test Base provides across the end-to-end validation lifecycle: from testing environment, to testing tools and software, and, finally, to testing services.

| Testing environment | Testing tools & software | Testing services |
| --- | --- | --- |
| Elastic cloud capacity | Low-code, no-code automation | Automated setup and configuration |
| Access to pre-release Windows and Microsoft 365 Apps builds | CPU/memory insights | Actionable notifications |
| | Automated failure analysis | Joint remediation with App Assure |

Get started today

Sign up for a free trial of Test Base to try out these features and optimize your testing process! To get started, simply sign up for an account via Azure or visit our website for more information.

Interested in learning more? Watch this contextualized walk-through of the Test Base service: How to build app confidence with Test Base. You can also leverage our series of how-to videos.

Check out more information and best practices on how to integrate Test Base into your Continuous Integration/Continuous Delivery pipeline in our GitHub documentation. We’ve also put together some sample packages that you can use to test out the onboarding process:

Finally, join our Test Base for Microsoft 365 Tech Community or GitHub Discussions Community to share your experiences and connect with other users.

Continue the conversation. Find best practices. Bookmark the Windows Tech Community and follow us @MSWindowsITPro on Twitter. Looking for support? Visit Windows on Microsoft Q&A.
