by Contributed | Jul 7, 2023 | Technology

This article is contributed. See the original author and article here.

To help you build new skills, we are hosting Maratona #AISkills, a free online immersion for people who want to learn to use AI in practice. It covers topics such as AI ethics, prompt engineering, GitHub Copilot, data analysis, machine learning, Azure OpenAI, and more.

July 18, 12:30 PM

Speaker: Danielle Monteiro

Microsoft Responsible AI principles in practice

Responsible AI is the application of ethical and social principles to the creation, development, and use of artificial intelligence (AI) technologies. These principles help ensure that AI systems are used responsibly and safely while also helping to protect human rights.

July 19, 12:30 PM

Speaker: Henrique Eduardo Souza

Boost your productivity with prompt engineering

Prompt engineering is the practice of giving an AI model specific instructions to produce the desired results. From ChatGPT to GitHub Copilot, you will learn to write effective prompts that generate text and even lines of code.

August 1, 12:30 PM

Speaker: Cynthia Zanoni

How to build a rock, paper, scissors game with GitHub Copilot

GitHub Copilot is transforming developers' productivity and learning journeys. In this talk, we will explore Codespaces and GitHub Copilot to build a rock, paper, scissors game!

August 3, 12:30 PM

Speaker: Livia Regina Bijelli Ferreira

Microsoft Fabric: data analytics for the era of AI

Today's world is awash with data, constantly streaming from the devices we use, the applications we build, and the interactions we have. Microsoft Fabric is an end-to-end, unified analytics platform that brings together all the data and analytics tools that organizations need. Fabric integrates technologies such as Azure Data Factory, Azure Synapse Analytics, and Power BI into a single unified product, empowering data and business professionals to unlock the potential of their data and lay the foundation for the era of AI.

August 15, 12:30 PM

Speaker: Pablo Lopes

Building an assistant with Azure OpenAI Service

Build a natural assistant with Azure OpenAI: take notes, capture the most important points, and get feedback on ideas as an expert would give it.

August 16, 7:00 PM

Speaker: Beatriz Matsui

Introduction to MLOps: concepts and practice

Machine learning is at the core of artificial intelligence, and many modern applications and services depend on predictive machine learning models. In this session we will cover MLOps (Machine Learning Operations) concepts and how to apply these practices using Microsoft's DevOps and machine learning tools.

The Cloud Skills Challenge is a platform integrated with Microsoft Learn, Microsoft's global portal of free courses, available 24 hours a day, 7 days a week. In addition to watching the free Maratona #AISkills sessions, you can join our Artificial Intelligence study group; just sign up for the Cloud Skills Challenge.

After completing a challenge, you will receive a Microsoft Learn AI Skills Challenge badge and a certificate of completion. Maratona #AISkills has four study challenge tracks. You can choose one or more!

Machine Learning Challenge

Machine learning is at the heart of artificial intelligence, and many modern services depend on predictive machine learning models. Learn how to use Azure Machine Learning to create and publish models without writing code. You will also explore the various developer tools you can use to interact with the workspace.

Cognitive Services Challenge

Azure Cognitive Services are building blocks of AI functionality that can be integrated into applications. You will learn to provision, secure, and deploy cognitive services. With these services, you can build intelligent solutions that extract semantic meaning from text and support common computer vision scenarios.

Machine Learning Operations (MLOps) Challenge

Machine learning operations (MLOps) applies DevOps principles to machine learning projects. You will learn to implement key concepts such as source control, automation, and CI/CD to build an end-to-end MLOps solution while using Python to train, save, and use a machine learning model.

AI Builder Challenge

This challenge introduces AI Builder, teaches you how to create models, and explains how you can use them in Power Apps and Power Automate. You will learn to create topics, custom entities, and variables to capture, extract, and store information in a bot.

The challenges start on July 17. To sign up early, visit the Cloud Skills Challenge page.

BONUS

Free benefits for students

GitHub tutorials for programming beginners

I want to highlight these resources because all the tutorials were translated into Portuguese with the help of our Microsoft Learn Student Ambassadors community. So check out the tutorials, and don't forget to leave a star!

Microsoft Learn courses with a certificate

by Contributed | Jul 6, 2023 | Technology

This article is contributed. See the original author and article here.

Microsoft Purview Data owner policies is a cloud-based service that helps you provision access to data sources and datasets securely and at scale. Data owner policies expose a REST API through which you can grant any Azure AD identity (user, group, or service principal) Read or Modify access to a dataset or data resource. The scope of the access can range from fine-grained (e.g., a table or file) to broad (e.g., an entire Azure resource group or subscription). This API provides a consistent interface that abstracts away the complexity of permissions for each type of data source.

More about Microsoft Purview Data policy app and the Data owner policies at these links:

If you would like to test-drive the API, sign up here to join the private preview.

by Contributed | Jul 5, 2023 | Technology

This article is contributed. See the original author and article here.

Today, we’re excited to announce the public preview of Exchange Online Role Based Access Control (RBAC) management in Microsoft Graph. The preview is designed for admins who want a consistent management interface, and for developers who want to programmatically control RBAC.

The public preview supports create, read, update, and delete APIs in Microsoft Graph which conform to a Microsoft-wide RBAC schema. Exchange Online RBAC role assignments, role definitions, and management scopes are supported through this new API.

With this preview, Exchange Online joins other RBAC systems in the Microsoft Graph Beta API, namely, Cloud PC, Intune, and Azure AD directory roles and entitlement management.

How Unified RBAC for Exchange Online works

Admins assigned the appropriate RBAC role in Exchange Online can access Unified RBAC using the Microsoft Graph beta endpoint or by using Microsoft Graph PowerShell. RBAC data remains stored in Exchange Online and can be configured using Exchange Online PowerShell.

In addition to Exchange RBAC permissions, you will also need one of these permissions:

- RoleManagement.Read.All

- RoleManagement.ReadWrite.All

- RoleManagement.Read.Exchange

- RoleManagement.ReadWrite.Exchange
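As a rough illustration of how a caller might choose among the four permissions listed above, here is a minimal Python sketch. The permission names come from the list; the mapping of each name to read versus write access and to Exchange-only versus tenant-wide scope is inferred from the names themselves and is an assumption, as is the helper name `pick_permission`.

```python
# Permission names are copied from the list above. How they map to
# (needs_write, exchange_only) is inferred from the names (assumption).
PERMISSIONS = {
    (False, True): "RoleManagement.Read.Exchange",
    (True, True): "RoleManagement.ReadWrite.Exchange",
    (False, False): "RoleManagement.Read.All",
    (True, False): "RoleManagement.ReadWrite.All",
}


def pick_permission(needs_write: bool, exchange_only: bool = True) -> str:
    """Return the narrowest listed permission for the requested access.

    Hypothetical helper for illustration; not part of any Microsoft SDK.
    """
    return PERMISSIONS[(needs_write, exchange_only)]


if __name__ == "__main__":
    # A read-only tool that only touches Exchange RBAC data.
    print(pick_permission(needs_write=False))
    # A tool that must also edit RBAC data in other providers.
    print(pick_permission(needs_write=True, exchange_only=False))
```

Defaulting to the Exchange-specific permission keeps the requested access as narrow as the task allows.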

Actions and entities supported in this preview:

| Entity | Endpoint | Read | Create | Update | Delete |
|---|---|---|---|---|---|
| Roles | graph.microsoft.com/beta/roleManagement/exchange/roleDefinitions | ✓ | X | X | ✓ |
| Assignments | graph.microsoft.com/beta/roleManagement/exchange/roleAssignments | ✓ | ✓ | ✓ | ✓ |
| Scopes | graph.microsoft.com/beta/roleManagement/exchange/customAppScopes | ✓ | ✓ | ✓ | ✓ |
| Role Groups | Not supported | X | X | X | X |
| Transitive Role Assignment | Not supported | X | X | X | X |

The Read, Create, Update, and Delete columns are the allowed API actions.
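The support matrix above can be encoded as data so that client code fails fast on an unsupported call. The sketch below is one way to do that in Python; the endpoint paths and the supported/unsupported flags are transcribed from the table, while the `https://` scheme, the dictionary layout, and the function name `endpoint_for` are illustrative assumptions.

```python
# Base URL from the table; the https:// scheme is added here.
BASE = "https://graph.microsoft.com/beta/roleManagement/exchange"

# Allowed actions per entity, transcribed from the table above.
# Role Groups and Transitive Role Assignment have no endpoint in this
# preview, so they are deliberately absent.
SUPPORT = {
    "roleDefinitions": {"read": True, "create": False, "update": False, "delete": True},
    "roleAssignments": {"read": True, "create": True, "update": True, "delete": True},
    "customAppScopes": {"read": True, "create": True, "update": True, "delete": True},
}


def endpoint_for(entity: str, action: str) -> str:
    """Return the collection URL if the preview allows `action` on `entity`.

    Hypothetical helper; raises ValueError for anything the table marks
    as unsupported or does not list.
    """
    actions = SUPPORT.get(entity)
    if actions is None:
        raise ValueError(f"{entity!r} is not supported in this preview")
    if not actions.get(action, False):
        raise ValueError(f"{action!r} is not allowed on {entity!r}")
    return f"{BASE}/{entity}"


if __name__ == "__main__":
    print(endpoint_for("roleAssignments", "create"))
```

Keeping the matrix in one dictionary means a later change to the preview only requires updating the data, not the calling code.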

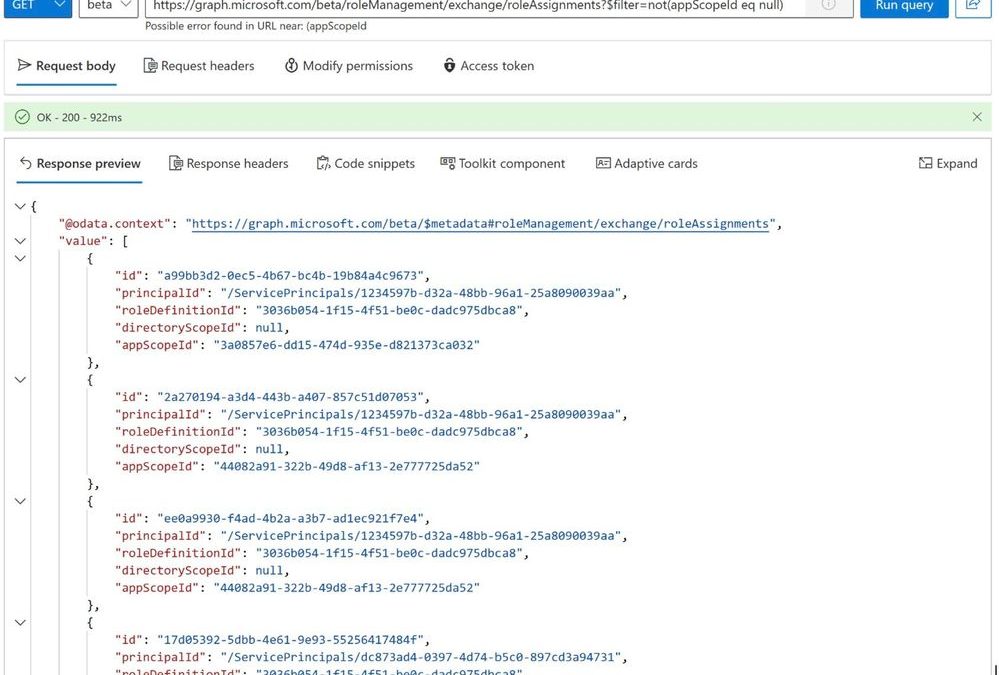

Reading the list of role assignments assigned with a management scope:

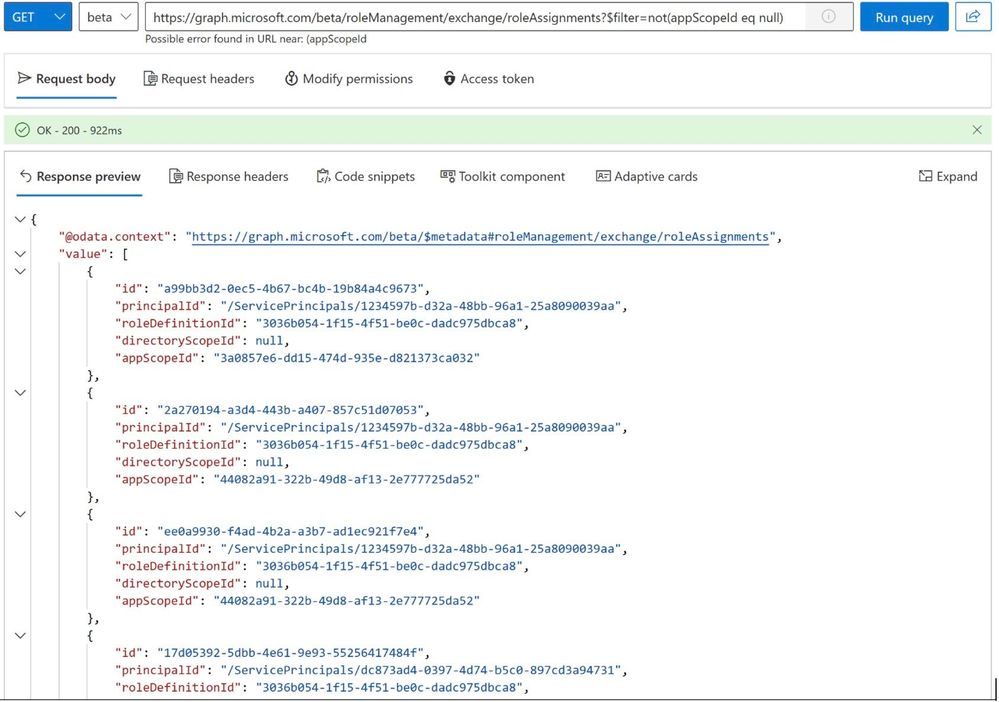

Reading the list of Management Scopes:

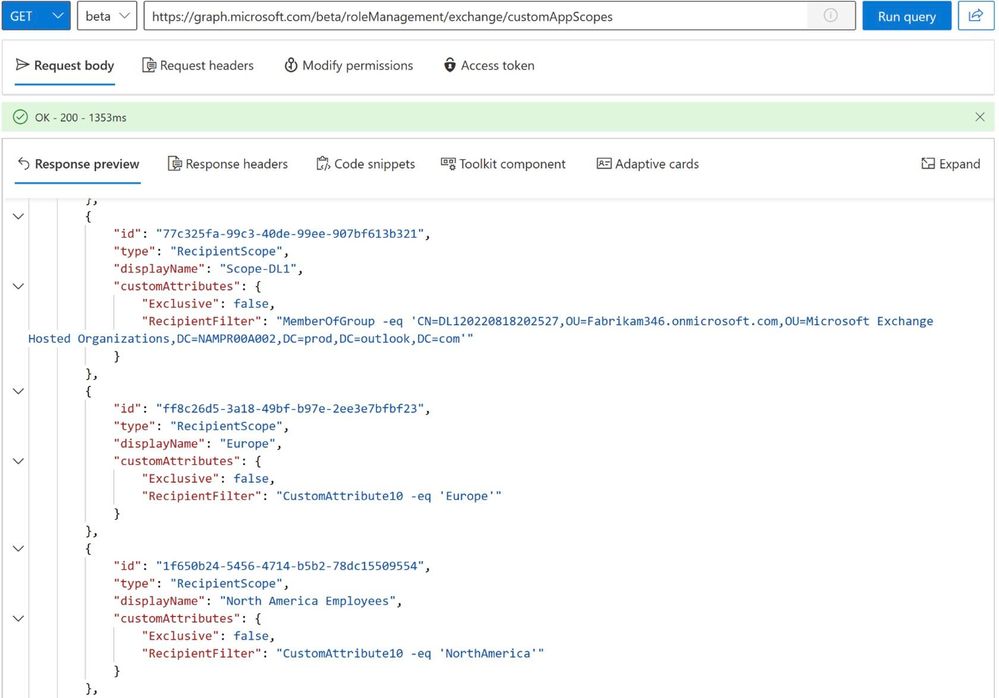

List roles using Microsoft Graph PowerShell:
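For readers who call the REST endpoint directly rather than through PowerShell, a minimal Python sketch of a list-roles request might look like the following. It swaps in the standard-library `urllib` in place of Microsoft Graph PowerShell and only builds the request without sending it. The function name is hypothetical, the token string is a placeholder, and acquiring a delegated access token is out of scope here.

```python
import urllib.request

# Role definitions endpoint from the supported-entities table,
# with the https:// scheme added.
ROLE_DEFINITIONS_URL = (
    "https://graph.microsoft.com/beta/roleManagement/exchange/roleDefinitions"
)


def build_list_roles_request(access_token: str) -> urllib.request.Request:
    """Build (but do not send) a GET request for Exchange role definitions."""
    return urllib.request.Request(
        ROLE_DEFINITIONS_URL,
        headers={
            "Authorization": f"Bearer {access_token}",
            "Accept": "application/json",
        },
        method="GET",
    )


if __name__ == "__main__":
    # Placeholder token; a real call needs a delegated token that carries
    # one of the RoleManagement permissions.
    req = build_list_roles_request("<delegated-access-token>")
    print(req.get_method(), req.full_url)
    # Sending is left to the caller, e.g. urllib.request.urlopen(req).
```

Separating request construction from sending makes the URL and headers easy to inspect before any call is made against a tenant.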

Try the Public Preview Today

Unified RBAC is available to all tenants today as a part of the public preview. See "Use the Microsoft Graph SDKs with the beta API" and "roleManagement resource type" for more information.

We’d love your feedback on the preview. You can leave a comment here or share it with us at exourbacpreview@microsoft.com.

FAQs

Does this API support app-only access?

Not yet. This will be added to the preview later.

Exchange Online Team

by Contributed | Jul 4, 2023 | Technology

This article is contributed. See the original author and article here.

People sometimes wish to review and change their form or quiz responses after submission, even days later. We’re happy to share that you can now review and edit responses when needed.

- First, save your response

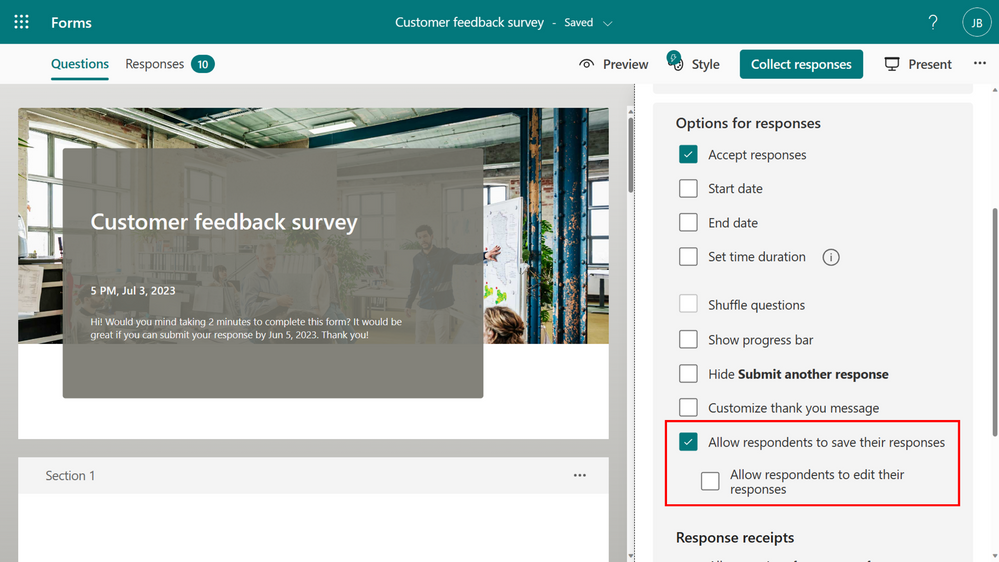

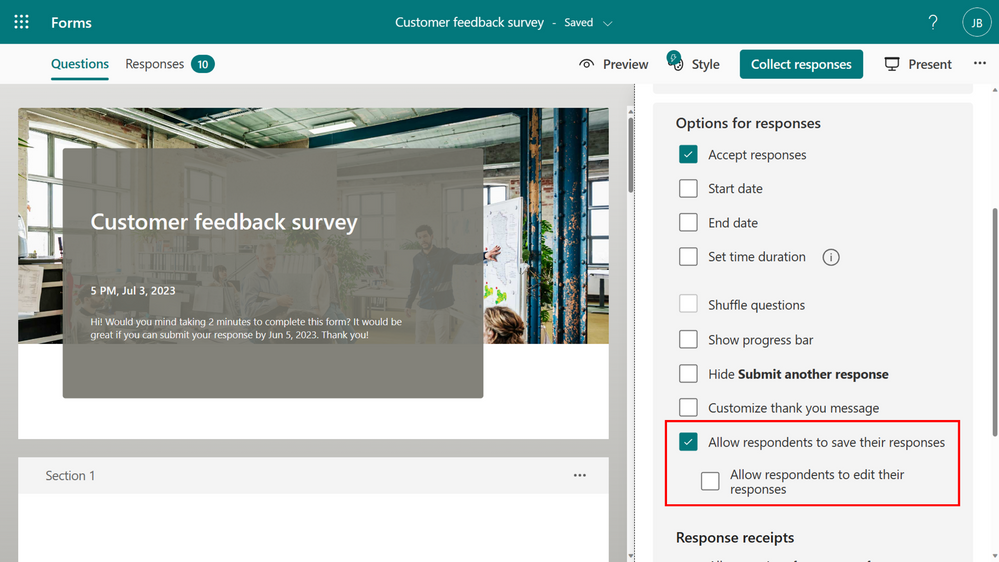

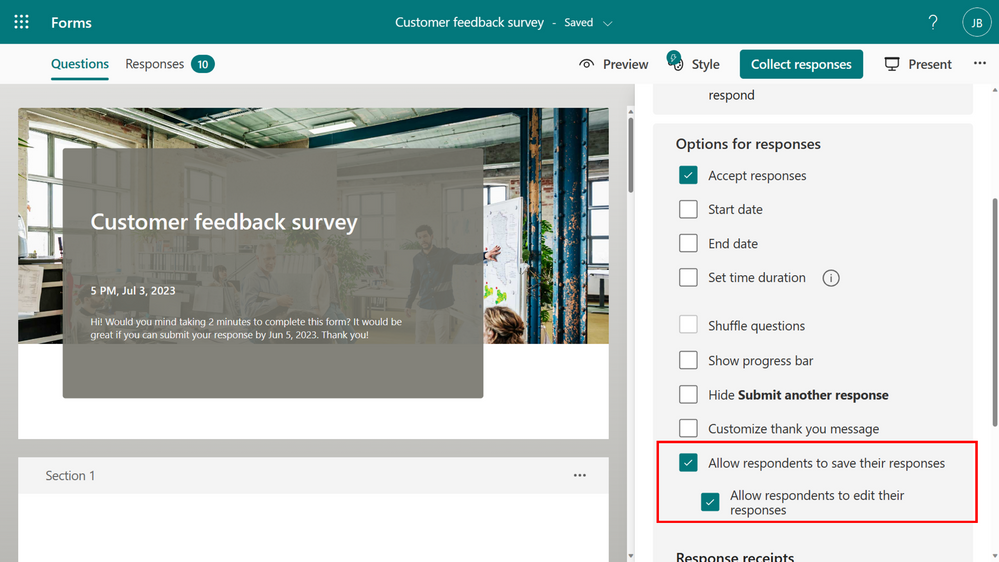

To save your response, ensure that the form creator has selected the “Allow respondents to save their responses” option in the Forms settings.

Forms setting – save response


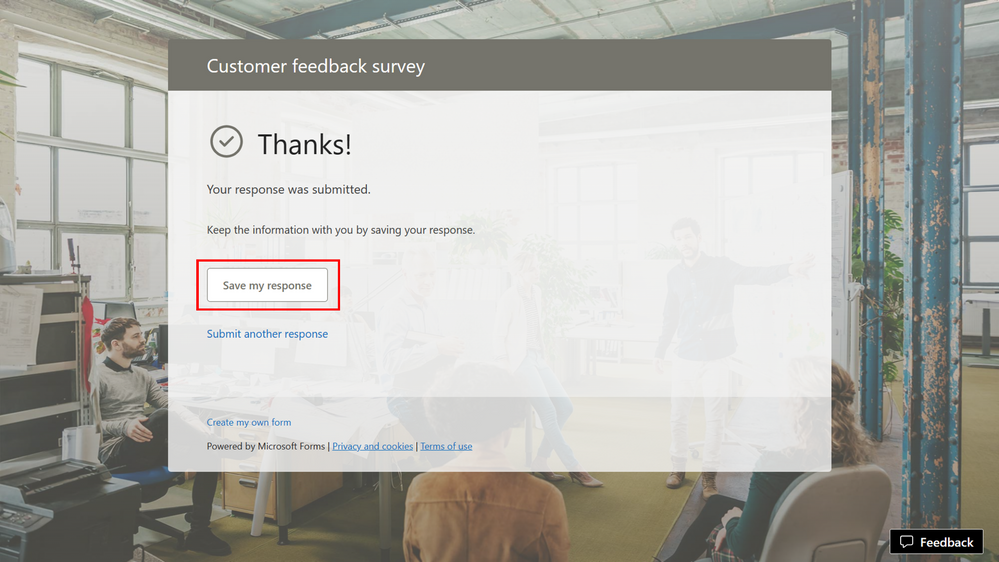

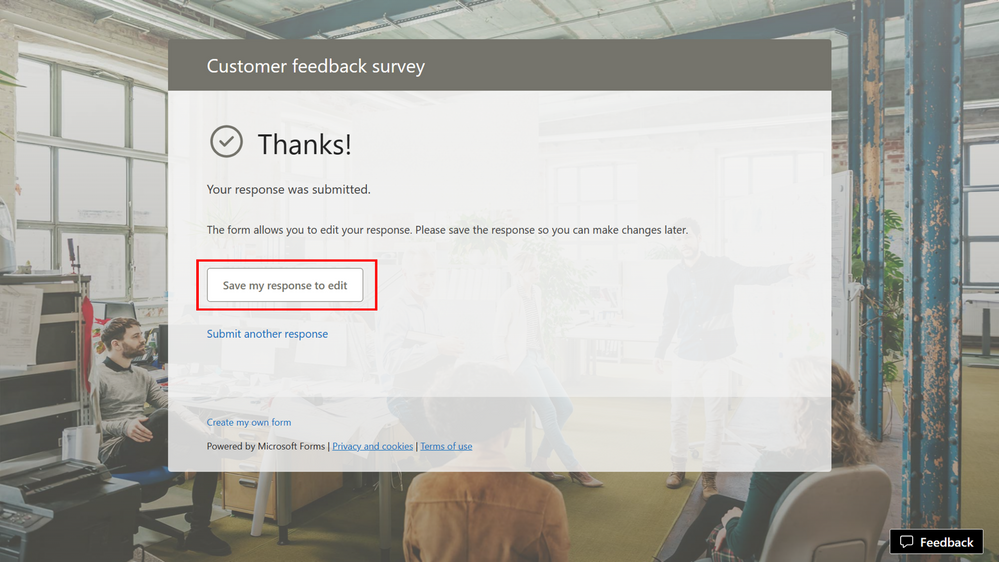

Once the setting is enabled, and you submit a form, you will have the option to save your response from the thank you page.

“Save my response” in Thank you page


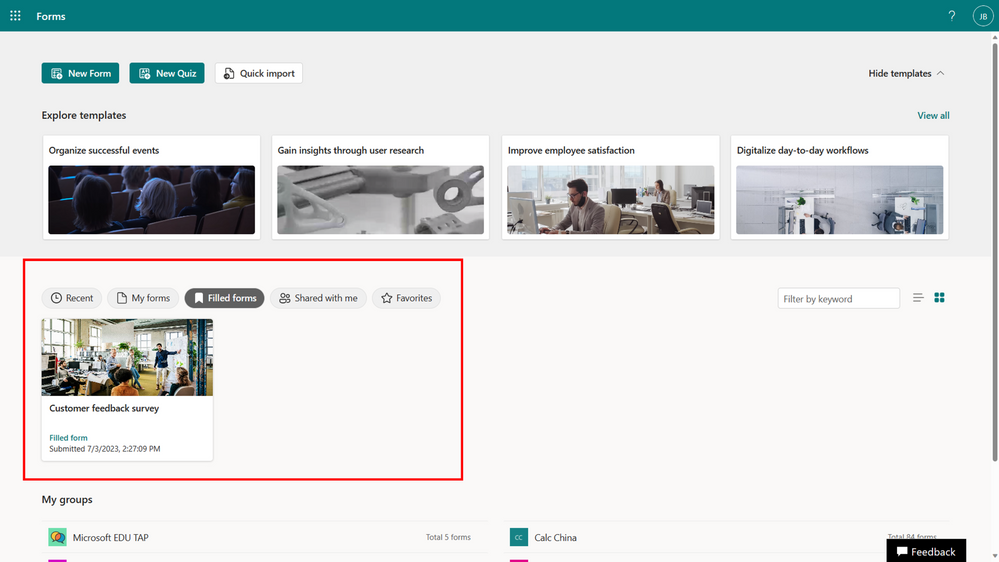

The response will be saved in “Filled forms” in Microsoft Forms.

Filled forms


- If enabled, you can then edit your response

The form’s creator must select “Allow respondents to edit their responses” in the form’s settings.

Forms setting – edit response


If enabled, you will have the option to “Save my response to edit” on the thank you page.

“Save my response to edit” in Thank you page


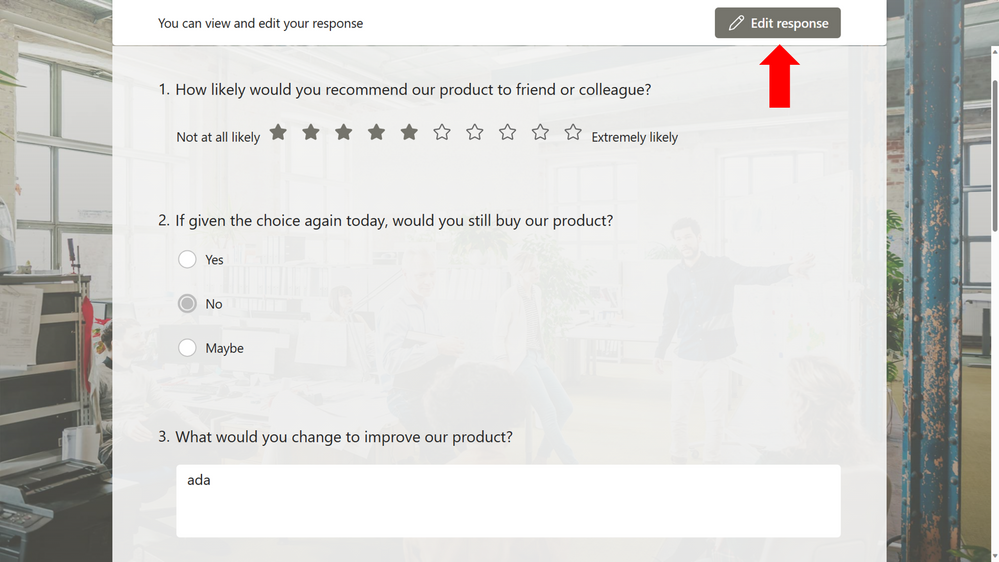

As long as the form is open, you have the flexibility to revisit the form at any time to edit your answers. However, edits cannot be made once a form has been closed or deleted.

Edit response


FAQ

Where is the data saved?

As with all forms, the response data is stored only in the form creator’s repository. Respondents can view only their own responses and cannot make any changes unless the form creator allows them to edit their response. If the form or the response is removed, the respondent will no longer have access to the response.

What are the limitations of this feature?

Updating a response does not trigger a Power Automate flow. This capability will be enabled soon.

We hope you find these new features helpful! We will continue working on enabling more options with Microsoft Forms. Let us know if you have questions or if there’s anything else we can help you with!

by Contributed | Jul 3, 2023 | Technology

This article is contributed. See the original author and article here.

In this issue:

Ace Aviator of the Month

What is your role and title? What are your responsibilities associated with your position?

I am a Senior Digital Architect with Sonata Software Europe Limited. I am responsible for delivering the right integration technologies to customers. I work with my team members to share the latest updates on various integrations and brainstorm the best fit for each client requirement. My manager gives me additional support in exploring new concepts and encourages us to build POCs with the latest updates and share what we learn with the wider forum.

Can you provide some insights into your day-to-day activities and what a typical day in your role looks like?

I plan my day beforehand, but it takes a different direction as it progresses. This is what my role demands. I am involved in multiple assignments and learn every day from various challenges. The more complex the work, the more I grow; that thought keeps me motivated throughout the day. I also offer guidance to other teams, and I get excited when the discussion or the task involves Logic Apps integration.

What motivates and inspires you to be an active member of the Aviators/Microsoft community?

My spouse, Santosh Ramamurthy, always motivates me to upgrade my skills. He supported my learning path and, noticing my passion, advised me to write blogs and share techniques via forums.

I started my career in 2006 and have been travelling with Microsoft technologies for close to 17 years. With the limited resources of those days, we had trouble getting proper guidance. We referred to books and often learnt from our mistakes. I felt that future generations should have a strong foundation in technology that they can pass on. So I started writing blogs, attending forums, and giving sessions to motivate people who are new to the technology or planning to switch domains. Resources are abundant nowadays. Knowing how to use them and where to apply them is the skill. Logic Apps Aviators is a community that binds people at all levels, be it beginner, intermediate, or expert.

Looking back, what advice do you wish you would have been told earlier on that you would give to individuals looking to become involved in STEM/technology?

As I said earlier, resources are abundant. Learn to use them, apply them, make mistakes, correct them, and keep moving. Gone are the days when a high-speed PC or a classroom was needed to start your learning path. Most technologies now offer free trials and lab environments to encourage individuals to attempt a new skill. It is only interest and passion that keep the learning spirit alive.

What has helped you grow professionally?

Passion for learning new skills and handling complexity are my best teachers. Expanding your network matters, and time management is an important skill because we have numerous distractions around us. Tiny drops make a mighty ocean, so learn something new every day (be it a simple concept or a complex one) and you are sure to see growth on the learning graph.

Imagine you had a magic wand that could create a feature in Logic Apps. What would this feature be and why?

Logic Apps can create wonders in integration if some minor features are incorporated to make it friendlier to beginners.

For instance, when suggesting recurring API integration with D365FO via Logic Apps, the question of creating a zipped package file comes up. Most connectors are missing this action, and even though Azure Functions, ADF, or a third-party tool can come to the rescue, integration would be simpler if it were available ready-made.

Also, a feature to track the state of the previous run. Integrations need this to guard against data overlap, so that a run can be cancelled automatically if the previous execution is still in progress.

News from our product group:

News from our community:

Microsoft Previews .NET Framework Custom Code for Azure Logic Apps Standard

Post by Steef-Jan Wiggers

Read more about the public preview for .NET Framework Custom Code from Aviator’s own Steef-Jan!

Resolving 401 “Forbidden” Error When Deploying Logic Apps ARM Template

Post by Harris Kristanto

Harris discusses the 401 Forbidden error that can occur when deploying Logic Apps ARM templates and provides steps to resolve it. He covers the potential causes of the error and suggests troubleshooting methods, including adjusting authentication settings and ensuring proper access permissions are set, so that the Logic Apps template deploys successfully.

Compare Azure Messaging Services | How to Chose | Azure Service Bus vs Event Hub vs Event Grid

Post by Srikanth Gunnala

In this video Srikanth discusses Azure Messaging Services’ three most utilized components: Azure Service Bus, Azure Event Grid, and Azure Event Hub. See real-world applications and demonstrations on how these services ensure smooth communication between different parts of a software program.

Mastering GraphQL Resolvers for Cosmos DB

Post by Ryan Langford

Developers looking for more cloud skills should read Ryan’s post on mastering GraphQL Resolvers in Azure Cosmos DB. He covers everything from setting up your API Manager Instance to querying and mutating the graph in Azure Cosmos DB.
