by Contributed | Dec 6, 2020 | Azure, Microsoft, Technology

This article is contributed. See the original author and article here.

Final Update: Monday, 07 December 2020 00:49 UTC

We’ve confirmed that all systems are back to normal with no customer impact as of 12/7, 00:32 UTC. Our logs show the incident started on 12/6, 23:46 UTC and that during the 46 minutes that it took to resolve the issue, customers may have experienced data access issues as well as failed or misfired alerts for Azure Log Analytics in North Europe.

- Root Cause: The failure was due to an upgrade of one of our back end systems.

- Incident Timeline: 46 minutes – 12/6, 23:46 UTC through 12/7, 00:32 UTC

We understand that customers rely on Azure Log Analytics as a critical service and apologize for any impact this incident caused.

-Ian

Update: Monday, 07 December 2020 00:17 UTC

We continue to investigate issues within Log Analytics. The root cause is not fully understood at this time. Some customers may experience data access issues as well as failed or misfired alerts for Azure Log Analytics in North Europe. We are working to establish the start time for the issue; initial findings indicate that the problem began at 12/06 23:46 UTC. We currently have no estimate for resolution.

- Work Around: none

- Next Update: Before 12/07 03:30 UTC

-Ian

by Contributed | Dec 6, 2020 | Azure, Microsoft, Technology

Symptoms:

Creating or importing a database fails because it violates the policy “Azure SQL DB should avoid using GRS backup”.

When you create a database, you need to specify the backup storage redundancy explicitly. The default is GRS (geo-redundant storage), which violates the policy.

When you import a database through the Azure portal, the REST API, SSMS or SqlPackage, you cannot specify the backup storage redundancy, because this option is not implemented yet.

This causes the import operation to fail with the following error:

Configuring backup storage account type to ‘Standard_RAGRS’ failed during Database create or update.

Solution:

You can either:

- create the database with local or zone-redundant backup storage, and then import the bacpac into that existing database, or

- use an ARM template that both creates the database with local or zone-redundant backup storage and imports the bacpac file.

Examples:

Option (A)

- Create the database using the Azure portal or T-SQL, for example:

```sql
CREATE DATABASE ImportedDB WITH BACKUP_STORAGE_REDUNDANCY = 'ZONE';
```

- Import the bacpac into the newly created database using SSMS or SqlPackage.

Option (B)

You can use an ARM template to create the database with a specific backup storage redundancy and import the bacpac at the same time.

The ARM template does this through an extension resource named “Import”.

Add the following JSON to the database resource section of your ARM template. Then either provide values for the parameters or hard-code the values in the template.

You need the following information:

- Storage account key used to access the bacpac file
- Bacpac file URL
- Azure SQL server admin username
- Azure SQL server admin password

```json
"resources": [
  {
    "type": "extensions",
    "apiVersion": "2014-04-01",
    "name": "Import",
    "dependsOn": [
      "[resourceId('Microsoft.Sql/servers/databases', parameters('ServerName'), parameters('databaseName'))]"
    ],
    "properties": {
      "storageKeyType": "StorageAccessKey",
      "storageKey": "[parameters('storageAccountKey')]",
      "storageUri": "[parameters('bacpacUrl')]",
      "administratorLogin": "[parameters('adminUser')]",
      "administratorLoginPassword": "[parameters('adminPassword')]",
      "operationMode": "Import"
    }
  }
]
```
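If you prefer to keep the values out of the template itself, you can supply them through a separate ARM deployment parameters file. The Python sketch below generates such a file for the six parameters the fragment above references: ServerName, databaseName, storageAccountKey, bacpacUrl, adminUser and adminPassword. It assumes these are declared in the template's parameters section. The function name `build_parameters_file` is hypothetical. For real deployments, declare the key and password as securestring and consider Key Vault references instead of plain-text secrets.

```python
import json

# Parameter names referenced by the "Import" extension fragment.
IMPORT_PARAMETER_NAMES = (
    "ServerName", "databaseName", "storageAccountKey",
    "bacpacUrl", "adminUser", "adminPassword",
)

# Schema URI used by ARM deployment parameter files.
PARAMETERS_SCHEMA = (
    "https://schema.management.azure.com/schemas/2019-04-01/"
    "deploymentParameters.json#"
)


def build_parameters_file(values: dict) -> str:
    """Return ARM deployment parameters (JSON text) covering every
    parameter the import extension references."""
    missing = [name for name in IMPORT_PARAMETER_NAMES if not values.get(name)]
    if missing:
        raise ValueError("missing values for: " + ", ".join(missing))
    document = {
        "$schema": PARAMETERS_SCHEMA,
        "contentVersion": "1.0.0.0",
        "parameters": {
            name: {"value": values[name]} for name in IMPORT_PARAMETER_NAMES
        },
    }
    return json.dumps(document, indent=2)
```

The function fails early when a value is missing, so an incomplete parameters file never reaches the deployment.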

by Contributed | Dec 6, 2020 | Azure, Microsoft, Technology

Final Update: Sunday, 06 December 2020 19:44 UTC

We’ve confirmed that all systems are back to normal with no customer impact as of 12/6, 19:30 UTC. Our logs show the incident started on 12/6, 18:08 UTC and that during the 1 hour 22 minutes that it took to resolve the issue, customers in Australia Central may have experienced data access issues, which could have resulted in missed or false alerts.

- Root Cause: The failure was due to an upgrade to a back end service that failed. The system was rolled back to mitigate the issue.

- Incident Timeline: 1 hour & 22 minutes – 12/6, 18:08 UTC through 12/6, 19:30 UTC

We understand that customers rely on Azure Log Analytics as a critical service and apologize for any impact this incident caused.

-Ian

Update: Sunday, 06 December 2020 19:17 UTC

Root cause has been isolated to an update to a backend service in Australia Central which is impacting data access. To address this issue we are rolling back the update. Some customers may experience data access issues along with failed or misfired alerts.

- Work Around: none

- Next Update: Before 12/06 22:30 UTC

-Ian

by Contributed | Dec 5, 2020 | Azure, Microsoft, Technology

Final Update: Saturday, 05 December 2020 14:50 UTC

We’ve confirmed that all systems are back to normal with no customer impact as of 12/05, 14:14 UTC. Our logs show the incident started on 12/05, 12:02 UTC and that during the 2 hours & 2 minutes that it took to resolve the issue, customers using Application Insights in US Gov regions might have experienced intermittent metric data gaps and incorrect alert activations.

- Root Cause: The failure was due to a configuration issue in Application Insights.

- Incident Timeline: 2 hours & 2 minutes – 12/05, 12:02 UTC through 12/05, 14:14 UTC

We understand that customers rely on Application Insights as a critical service and apologize for any impact this incident caused.

-Deepika

by Contributed | Dec 4, 2020 | Azure, Microsoft, Technology

Many developers want to stop their MySQL server over the weekend, or at the end of the business day after development or testing, to stop billing and save cost.

Azure Database for MySQL Single Server and Flexible Server (in preview) support stopping and starting standalone MySQL servers. These operations are not supported for servers that take part in replication through the read replica feature. By design, once you stop a server, you cannot run any other management operations on it.

In this blog post, I will share how you can use a PowerShell Automation runbook to stop or start your Azure Database for MySQL Single Server automatically on a time-based schedule. The example below is for Single Server, but the concept can easily be extended to the Flexible Server deployment option once PowerShell support is available.

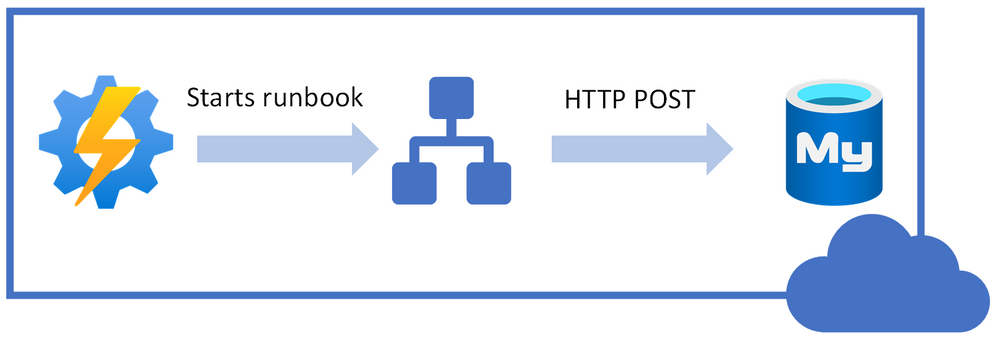

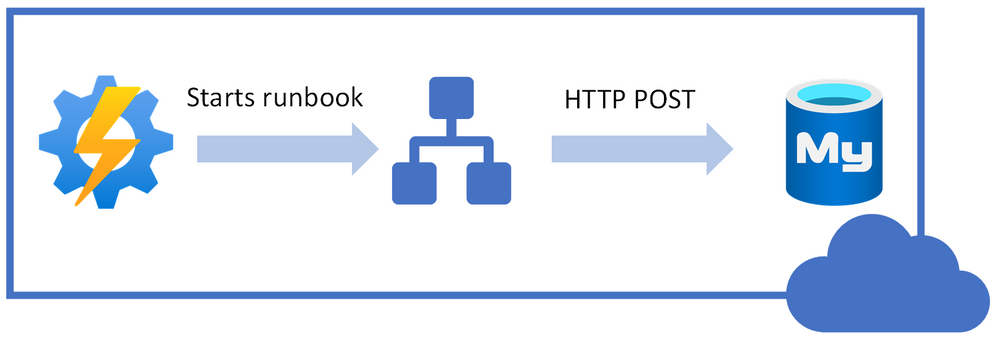

The high-level idea is to stop and start the Azure Database for MySQL Single Server by calling the REST API from a PowerShell runbook.

I will use an Azure Automation account to schedule the runbook that stops and starts the Azure Database for MySQL Single Server.
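The core of this approach is a single authenticated POST to the Azure Resource Manager endpoint. The Python sketch below reproduces it with the standard library only, which may help readers who prefer Python runbooks. The URI layout and the `2020-01-01` api-version are copied from the PowerShell runbook in this post. The function names are hypothetical. Acquiring the bearer token is assumed to happen elsewhere, for example with `az account get-access-token`.

```python
import urllib.request

# Values copied from the PowerShell runbook in this post.
ARM_ENDPOINT = "https://management.azure.com"
API_VERSION = "2020-01-01"
VALID_ACTIONS = ("start", "stop")


def build_mysql_action_uri(subscription_id: str, resource_group: str,
                           server_name: str, action: str) -> str:
    """Return the management URI that starts or stops a MySQL Single Server."""
    if action not in VALID_ACTIONS:
        raise ValueError(f"action must be one of {VALID_ACTIONS}, got {action!r}")
    return (f"{ARM_ENDPOINT}/subscriptions/{subscription_id}"
            f"/resourceGroups/{resource_group}"
            f"/providers/Microsoft.DBForMySQL/servers/{server_name}"
            f"/{action}?api-version={API_VERSION}")


def invoke_mysql_action(uri: str, access_token: str) -> int:
    """POST to the start/stop URI with a bearer token; return the HTTP status."""
    request = urllib.request.Request(
        uri,
        data=b"",  # no body needed: the action is part of the URI
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )
    # urlopen raises urllib.error.HTTPError for 4xx/5xx responses.
    with urllib.request.urlopen(request) as response:
        return response.status
```

To use it, call `build_mysql_action_uri` with your own subscription ID, resource group, server name and `"stop"` or `"start"`. Then pass the result and a valid token to `invoke_mysql_action`. Keeping URI construction separate from the network call makes the action validation easy to check without touching Azure.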

You can use the steps below to achieve this task:

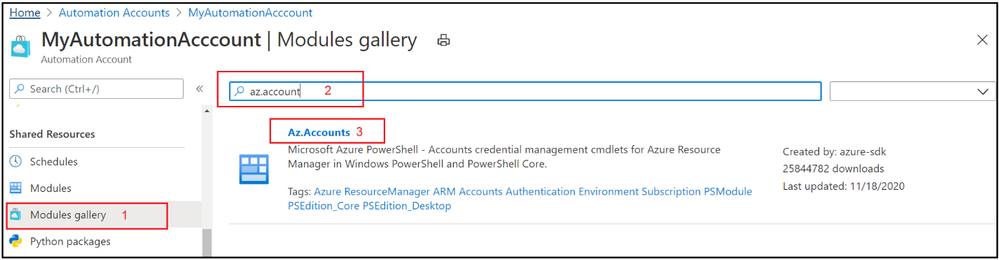

1. Navigate to the Azure Automation account and make sure that the Az.Accounts module is imported. If it is not listed under Modules, import it first:

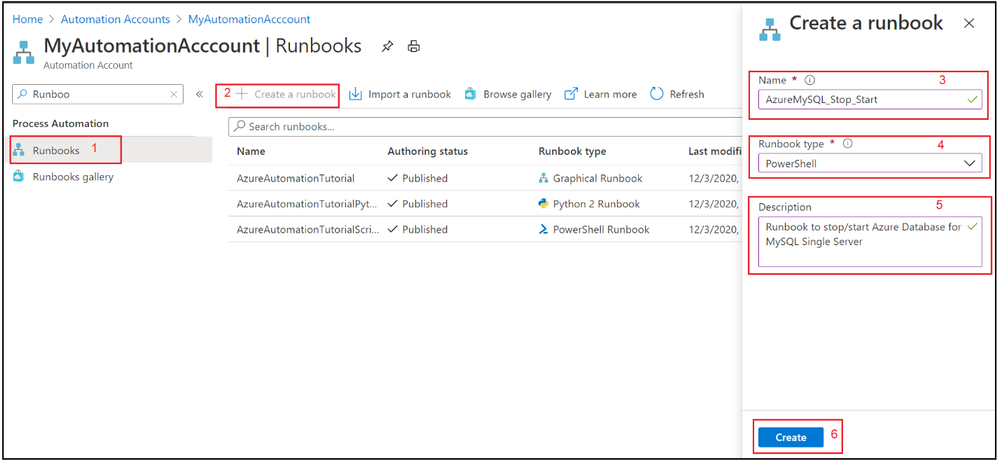

2. Select the Runbooks blade and create a new PowerShell runbook.

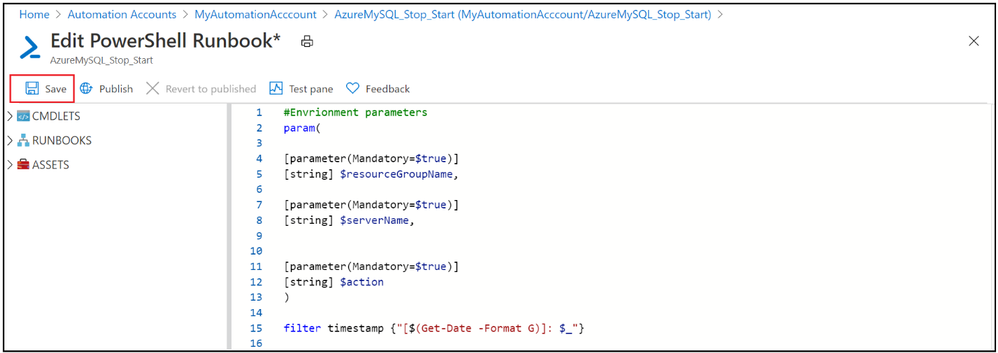

3. Copy and paste the following PowerShell code into the newly created runbook.

```powershell
# Runbook parameters
param(
    [parameter(Mandatory=$true)]
    [string] $resourceGroupName,

    [parameter(Mandatory=$true)]
    [string] $serverName,

    # Only 'stop' and 'start' are accepted
    [parameter(Mandatory=$true)]
    [ValidateSet('stop', 'start')]
    [string] $action
)

$action = $action.ToLower()

filter timestamp {"[$(Get-Date -Format G)]: $_"}

Write-Output "Script started." | timestamp

#$VerbosePreference = "Continue" ##enable this for verbose logging
$ErrorActionPreference = "Stop"

# Authenticate with the Azure Automation Run As account (service principal)
$connectionName = "AzureRunAsConnection"
try
{
    # Get the connection "AzureRunAsConnection"
    $servicePrincipalConnection = Get-AutomationConnection -Name $connectionName

    "Logging in to Azure..."
    Add-AzAccount `
        -ServicePrincipal `
        -TenantId $servicePrincipalConnection.TenantId `
        -ApplicationId $servicePrincipalConnection.ApplicationId `
        -CertificateThumbprint $servicePrincipalConnection.CertificateThumbprint | Out-Null
}
catch {
    if (!$servicePrincipalConnection)
    {
        $ErrorMessage = "Connection $connectionName not found."
        throw $ErrorMessage
    } else {
        Write-Error -Message $_.Exception
        throw $_.Exception
    }
}

Write-Output "Authenticated with Automation Run As Account." | timestamp

$startTime = Get-Date
Write-Output "Azure Automation local time: $startTime." | timestamp

# Get an access token for the current Azure context
$azContext = Get-AzContext
$azProfile = [Microsoft.Azure.Commands.Common.Authentication.Abstractions.AzureRmProfileProvider]::Instance.Profile
$profileClient = New-Object -TypeName Microsoft.Azure.Commands.ResourceManager.Common.RMProfileClient -ArgumentList ($azProfile)
$token = $profileClient.AcquireAccessToken($azContext.Subscription.TenantId)
$authHeader = @{
    'Content-Type'='application/json'
    'Authorization'='Bearer ' + $token.AccessToken
}

Write-Output "Authentication Token acquired." | timestamp

# Invoke the REST API for the specified action (stop or start).
# The subscription ID comes from the authenticated context instead of being hard-coded.
$subscriptionId = $azContext.Subscription.Id
$restUri = 'https://management.azure.com/subscriptions/' + $subscriptionId + '/resourceGroups/' + $resourceGroupName + '/providers/Microsoft.DBForMySQL/servers/' + $serverName + '/' + $action + '?api-version=2020-01-01'
$response = Invoke-RestMethod -Uri $restUri -Method POST -Headers $authHeader

if ($action -eq 'stop') {
    Write-Output "$serverName is stopping." | timestamp
}
else {
    Write-Output "$serverName is starting." | timestamp
}

Write-Output "Script finished." | timestamp
```

4. Save the runbook

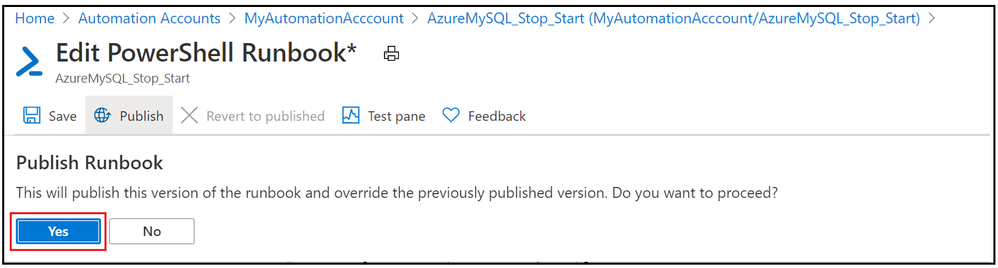

5. Publish the PowerShell runbook

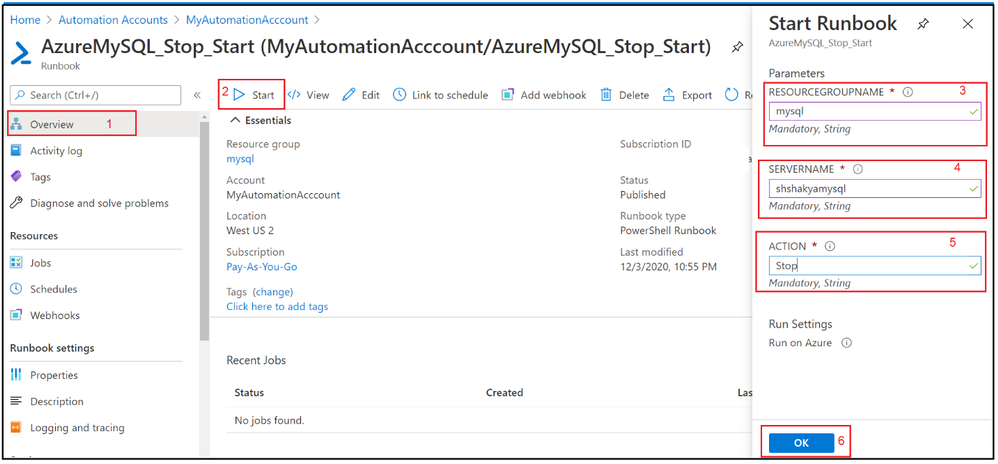

6. Test the PowerShell runbook by entering the mandatory parameters.

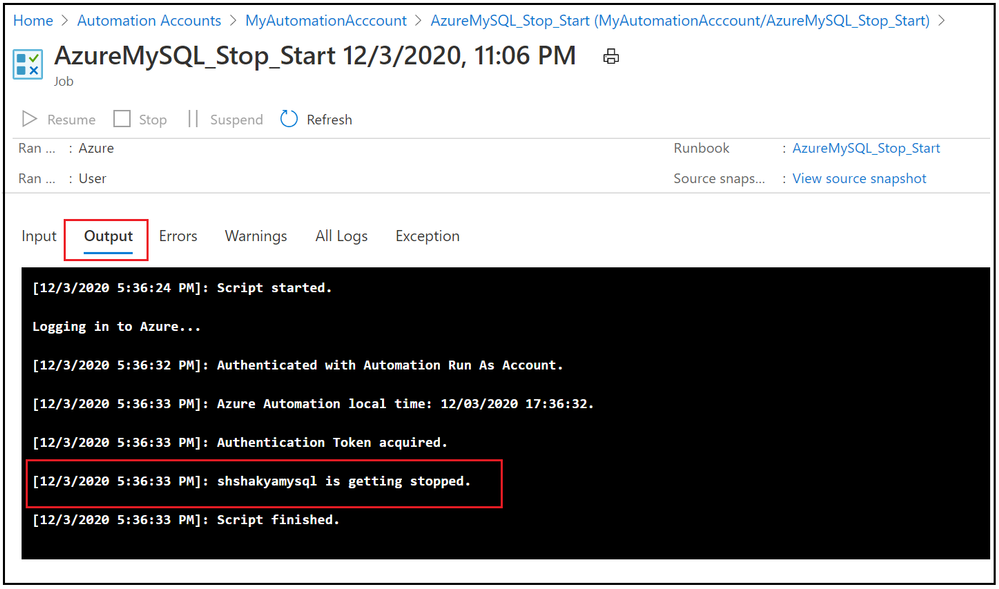

7. Verify the job output:

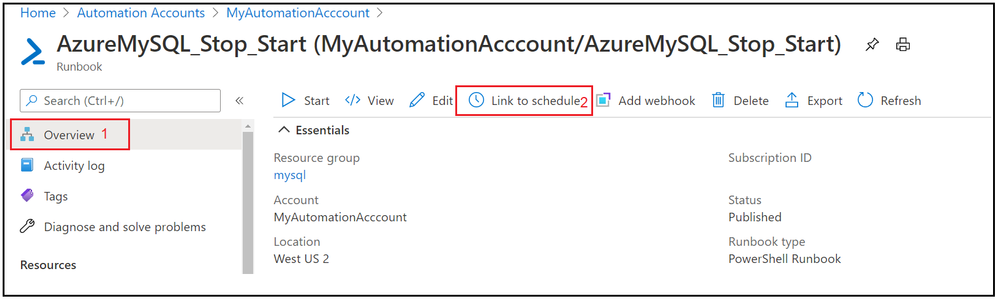

8. Now that the runbook works as expected, let's add schedules to stop and start the Azure Database for MySQL Single Server over the weekend. Go to the Overview tab and click Link to schedule.

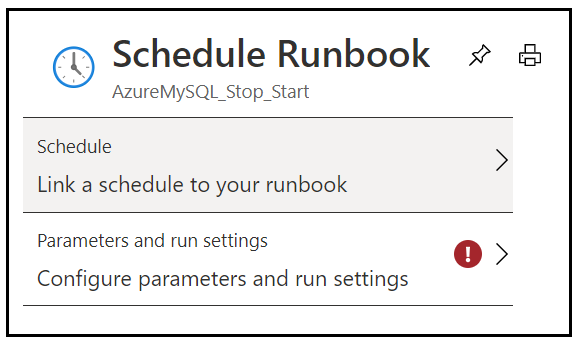

9. Add schedule and runbook parameters.

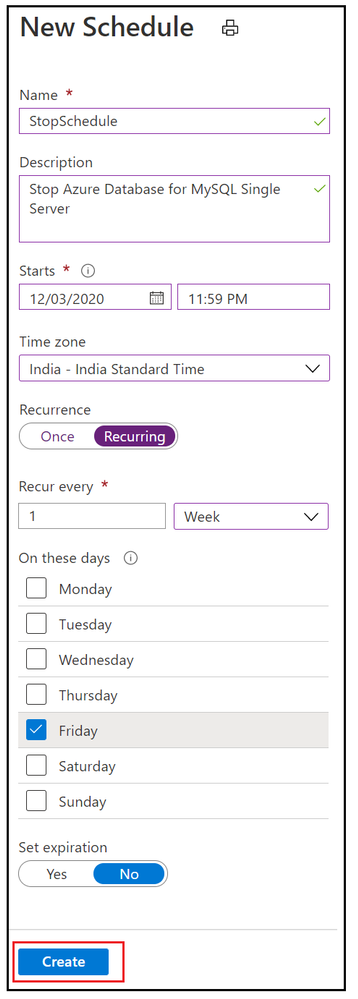

10. Add a weekly stop schedule for every Friday night.

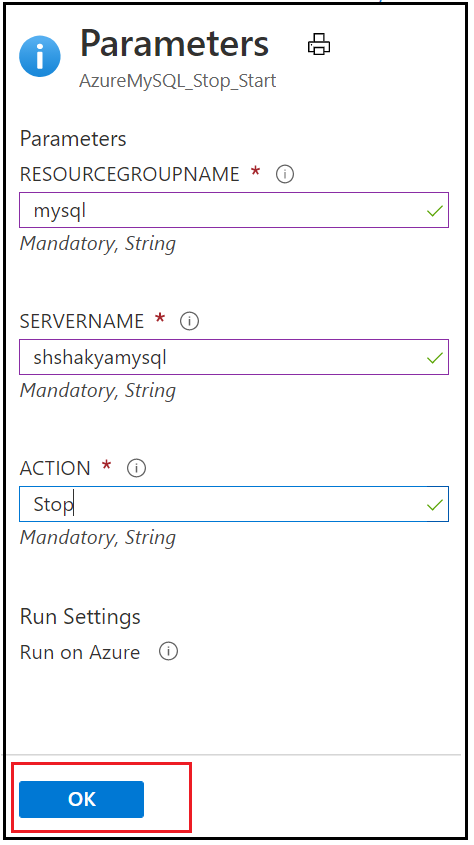

11. Add the parameters to stop Azure Database for MySQL Single Server.

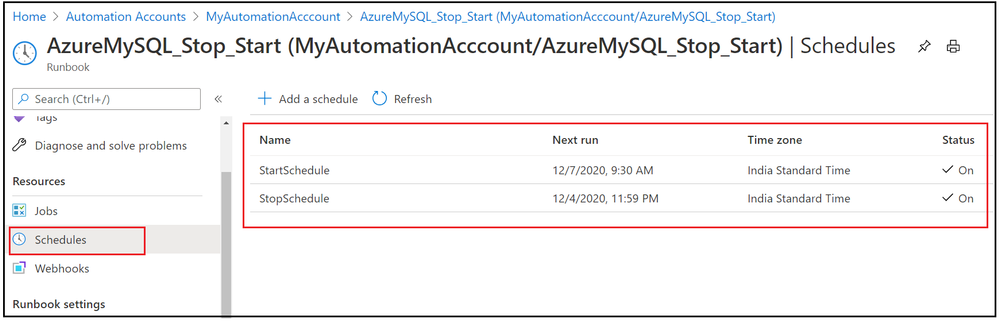

12. Similarly, add a weekly schedule that starts the Azure Database for MySQL Single Server every Monday morning. Then verify both the stop and start schedules on the runbook's Schedules blade.

In this activity, we added two schedules that stop and start the Azure Database for MySQL Single Server based on the given action.
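To see what the two schedules add up to, the Python sketch below computes when the start schedule next fires after a stop and how long the server stays stopped each week. The exact times are assumptions for illustration only: Friday at 20:00 for the stop schedule and Monday at 07:00 for the start schedule. Both use naive datetimes in the schedule's own time zone. The helper `next_weekly_occurrence` is hypothetical.

```python
from datetime import datetime, timedelta

MONDAY, FRIDAY = 0, 4  # datetime.weekday() numbering: Monday=0 ... Sunday=6


def next_weekly_occurrence(now: datetime, weekday: int, hour: int,
                           minute: int = 0) -> datetime:
    """Return the first time strictly after `now` on `weekday` at hour:minute."""
    days_ahead = (weekday - now.weekday()) % 7
    candidate = (now + timedelta(days=days_ahead)).replace(
        hour=hour, minute=minute, second=0, microsecond=0)
    if candidate <= now:
        candidate += timedelta(days=7)  # already passed this week; use next week
    return candidate


# Hypothetical schedule: stop on Friday 20:00, start on Monday 07:00.
stop_time = datetime(2020, 12, 4, 20, 0)  # a Friday
start_time = next_weekly_occurrence(stop_time, MONDAY, 7)
stopped_hours = (start_time - stop_time).total_seconds() / 3600
share_of_week = stopped_hours / (7 * 24)

print(f"Server restarts at {start_time:%A %Y-%m-%d %H:%M}")
print(f"Stopped for {stopped_hours:.0f} hours per week ({share_of_week:.0%} of the week)")
```

With these assumed times, the server restarts on Monday 2020-12-07 at 07:00 and stays stopped for 59 hours per week, about 35% of the week. The saving applies to compute only: storage continues to be billed while the server is stopped.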

I hope this helps you save cost, and that you enjoyed learning about it!
