by Contributed | Sep 30, 2020 | Azure, Technology, Uncategorized

This article is contributed. See the original author and article here.

Together with the Azure Stack Hub team, we are starting a journey to explore the ways our customers and partners use, deploy, manage, and build solutions on the Azure Stack Hub platform. Together with Tiberiu Radu (Azure Stack Hub PM, @rctibi), we created a new Azure Stack Hub Partner solution video series to show how our customers and partners use Azure Stack Hub in their hybrid cloud environments. In this series, we will meet customers that are deploying Azure Stack Hub for their own internal departments, partners that run managed services on behalf of their customers, and a wide range of others in between, as we look at how our various partners are using Azure Stack Hub to bring the power of the cloud on-premises.

Datacom is an Azure Stack Hub partner that provides both multi-tenant and dedicated environments. They focus on providing value to their customers and meeting them where they are by offering managed services as well as complete solutions. Datacom serves a range of customers, from large government agencies to enterprises. Join the Datacom team as we explore how they provide value and solve customer issues using Azure and Azure Stack Hub.

I hope this video was helpful and you enjoyed watching it. If you have any questions, feel free to leave a comment below. If you want to learn more about the Microsoft Azure Stack portfolio, check out my blog post.

by Contributed | Sep 30, 2020 | Azure, Technology, Uncategorized

At Microsoft Ignite, we announced the general availability of Azure Machine Learning designer, the drag-and-drop workflow capability in Azure Machine Learning studio that simplifies and accelerates the process of building, testing, and deploying machine learning models for the entire data science team, from beginners to professionals. We launched the preview in November 2019, and we have been excited by the strong customer interest. We listened to our customers and appreciated all the feedback. Your responses helped us reach this milestone. Thank you.

“By using Azure Machine Learning designer we were able to quickly release a valuable tool built on machine learning insights, that predicted occupancy in trains, promoting social distancing in the fight against Covid-19.” – Steffen Pedersen, Head of AI and advanced analytics, DSB (Danish State Railways).

Artificial intelligence (AI) is gaining momentum in all industries. Enterprises today are adopting AI at a rapid pace, with people of different skill sets involved, from business analysts, developers, and data scientists to machine learning engineers. The drag-and-drop experience in Azure Machine Learning designer can help your entire data science team speed up machine learning model building and deployment. Specifically, it is tailored for:

- Data scientists who are more familiar with visual tools than coding.

- Users who are new to machine learning and want to learn it in an intuitive way.

- Machine learning experts who are interested in rapid prototyping.

- Machine learning engineers who need a visual workflow to manage model training and deployment.

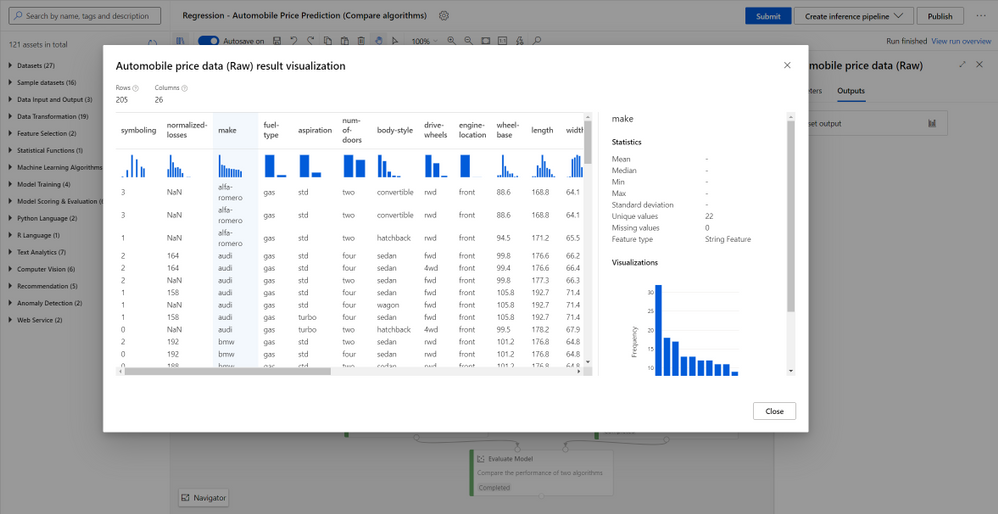

Connect and prepare data with ease

Azure Machine Learning designer is fully integrated with the Azure Machine Learning dataset service for versioning, tracking, and data monitoring. You can import data by dragging and dropping a registered dataset from the asset library, or by connecting to various data sources, including HTTP URL, Azure Blob, Azure Data Lake, Azure SQL, or a local file upload, with the Import Data module. You can right-click to preview and visualize the data profile, and preprocess data using a rich set of built-in modules for data transformation and feature engineering.

Build and train models with no-code/low-code

In Azure Machine Learning designer, you can build and train machine learning models with state-of-the-art machine learning and deep learning algorithms, including those for traditional machine learning, computer vision, text analytics, recommendation, and anomaly detection. You can also use customized Python and R code to build your own models. Each module can be configured to run on a different Azure Machine Learning compute cluster, so data scientists don’t need to worry about scaling limitations and can focus on their training work.

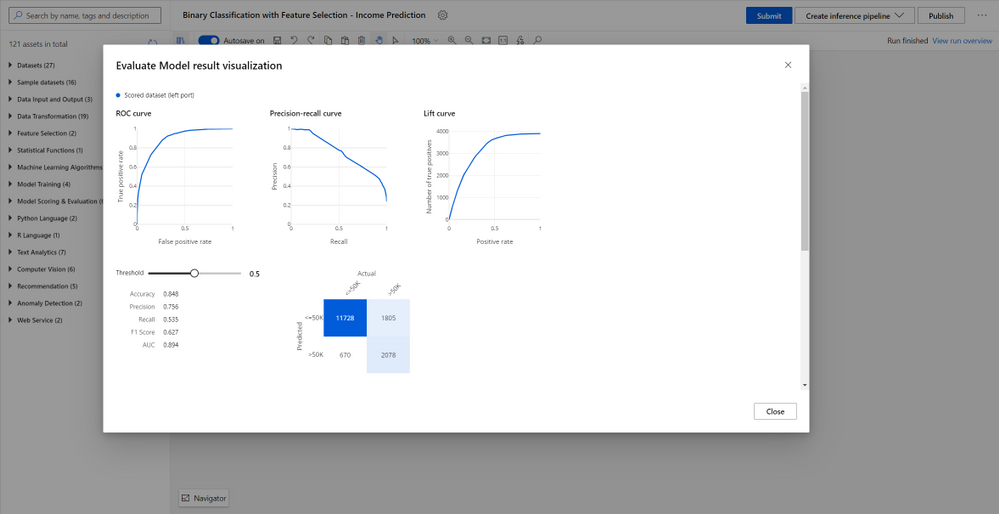

Validate and evaluate model performance

You can evaluate and compare the performance of your trained models with a few clicks using the built-in Evaluate Model modules, or use the Execute Python/R Script modules to log custom metrics and images. All metrics are stored in the run history and can be compared across different runs in the studio UI.

Root cause analysis with immersed debugging experience

While interactively running machine learning pipelines, you can perform quick root cause analysis: use graph search and navigation to narrow down to the failed step, preview logs and outputs for debugging and troubleshooting without losing the context of the pipeline, and find snapshots to trace the scripts and dependencies used to run the pipeline.

Deploy models and publish endpoints with a few clicks

Data scientists and machine learning engineers can deploy models for real-time and batch inferencing as versioned REST endpoints in their own environment. You don’t need deep knowledge of coding, model management, container services, etc., because scoring files and the deployment image are generated automatically with a few clicks. Models and other assets can also be registered in the central registry for MLOps tracking, lineage, and automation.

Get started today

Get started today with your new Azure free trial, and learn more about Azure Machine Learning designer.

by Contributed | Sep 30, 2020 | Azure, Technology, Uncategorized

Millions of people use Microsoft Teams as their secure, productive, and mobile collaboration and communication tool. Today, @Pete Bryan from the Microsoft Threat Intelligence Center and @Hesham Saad from the Microsoft CyberSecurity Global Black Belt team will detail the Microsoft Teams schema and data structure in Azure Sentinel. Let’s get started!

Microsoft Teams now has an official connector in Azure Sentinel:

- Easy deployment (a single checkbox)

- Data goes into the OfficeActivity table

- The activity logs are free

- Only keep the custom connector for other workloads

You can also check out a couple of hunting queries that have been shared on the Azure Sentinel GitHub.

Let’s now look at the Microsoft Teams schemas:

- Office 365 Management API

What’s in Teams Logs:

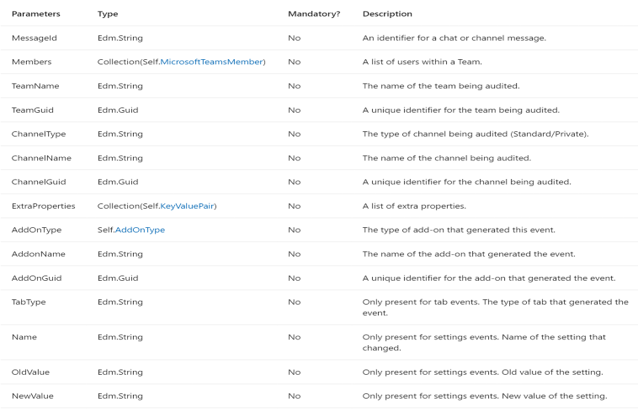

- TeamsSessionStarted – Sign-in to Teams Client (except token refresh)

- MemberAdded/MemberRemoved – User added/removed to team or group chat

- MemberRoleChanged – User’s permissions changed (1 = Owner, 2 = Member, 3 = Guest)

- ChannelAdded/ChannelRemoved – A channel is added/removed to a team

- TeamCreated/Deleted – A whole team is created or deleted

- TeamsSettingChanged – A change is made to a team setting (e.g. make it public/private)

- TeamsTenantSettingChanged – A change is made at a tenant level (e.g. enable product)

+ Bots, Apps, Tabs

https://docs.microsoft.com/en-us/microsoftteams/audit-log-events

-

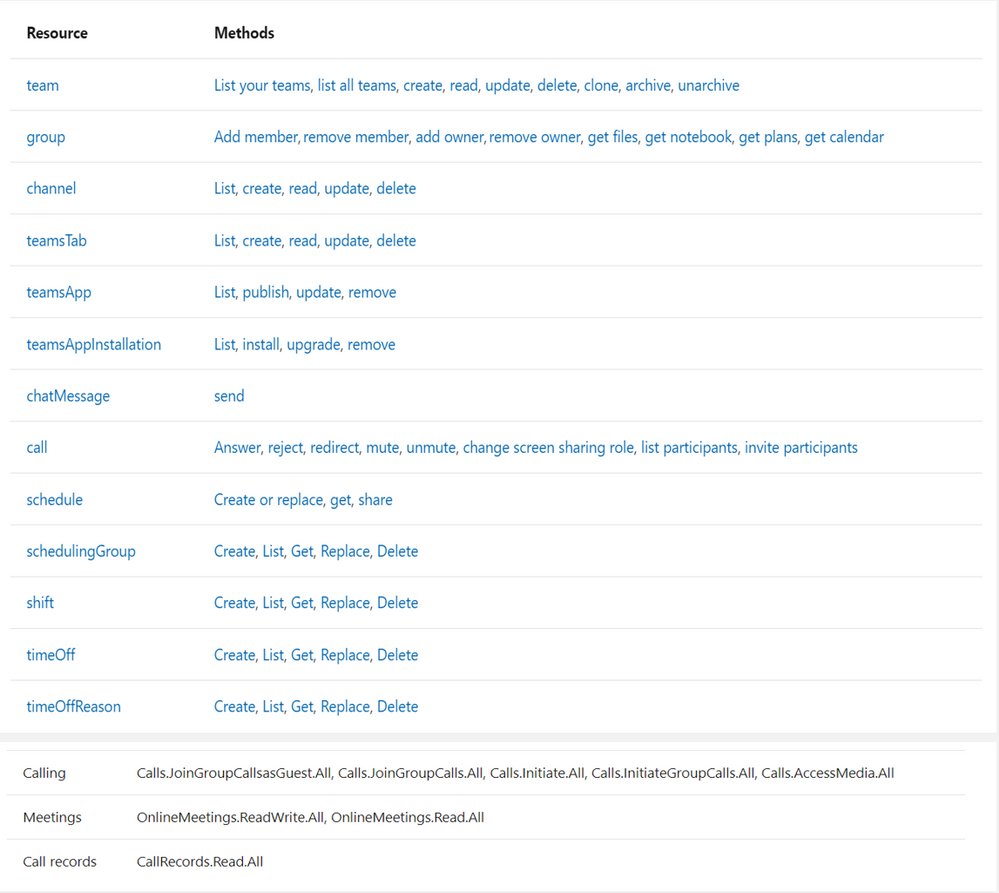

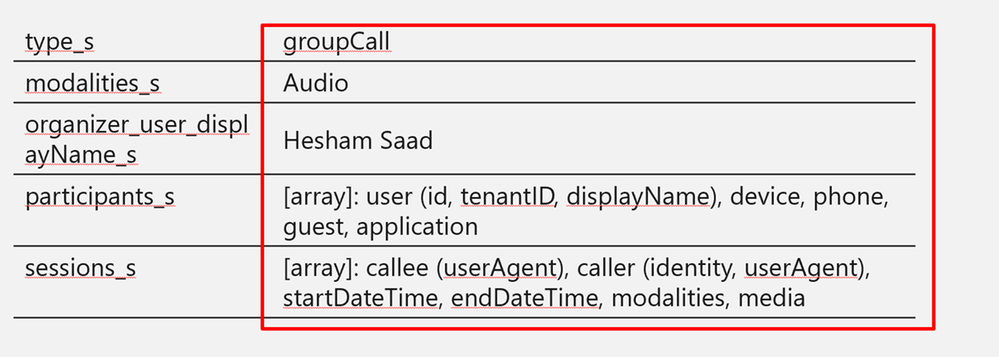

callRecord: Represents a single peer-to-peer call or a group call between multiple participants, sometimes referred to as an online meeting.

-

onlineMeeting: Contains information about the meeting, including the URL used to join a meeting, the attendees list, and the description.

-

callRecord/organizer – the organizing party’s identity

-

callRecord/participants – list of distinct identities involved in the call

-

callRecord/type – type of the call (group call, peer to peer,…etc)

-

callRecord/modalities – list of all modalities (audio, video, data, screen sharing, …etc)

-

callRecord/ (id – startDateTime – endDateTime – joinWebUrl )

-

onlineMeeting/ (subject, chatInfo, participants, startDateTime, endDateTime)

https://docs.microsoft.com/en-us/graph/api/resources/communications-api-overview?view=graph-rest-1.0

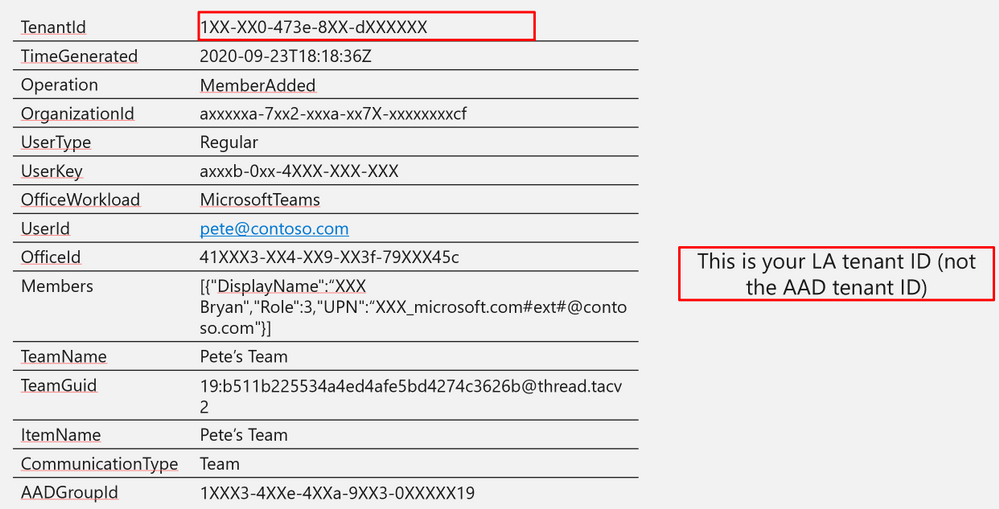

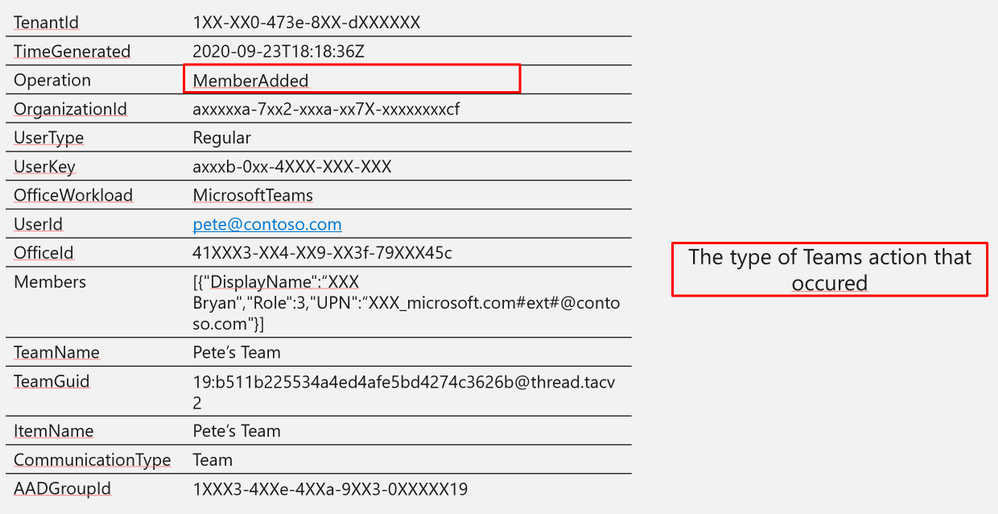

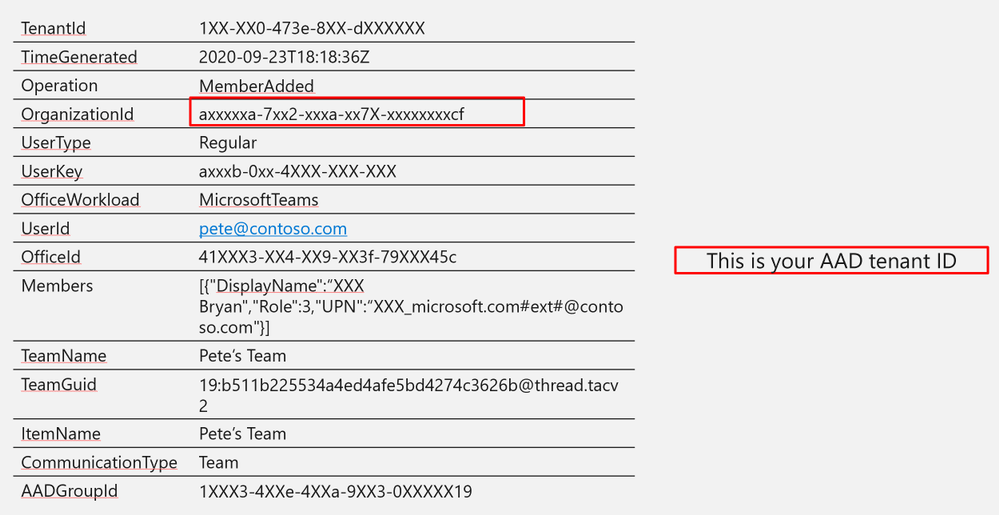

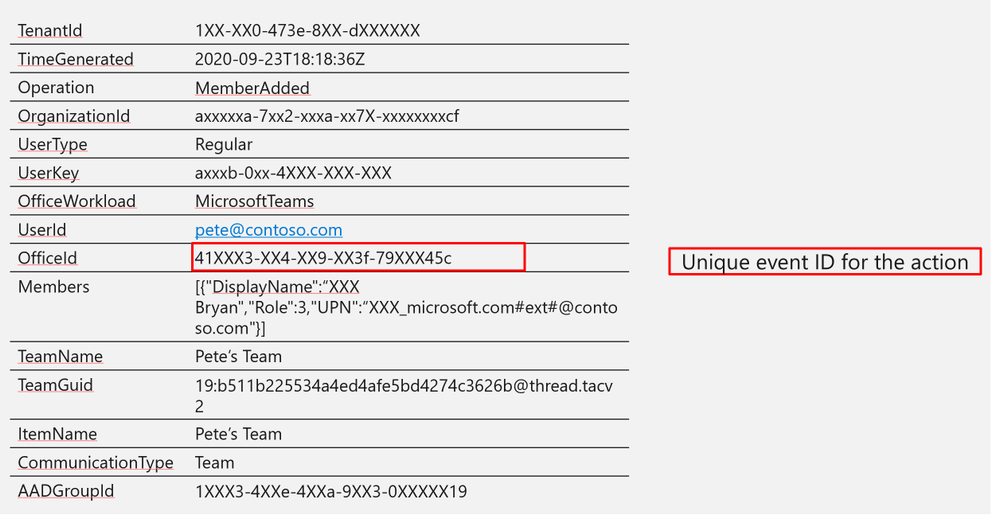

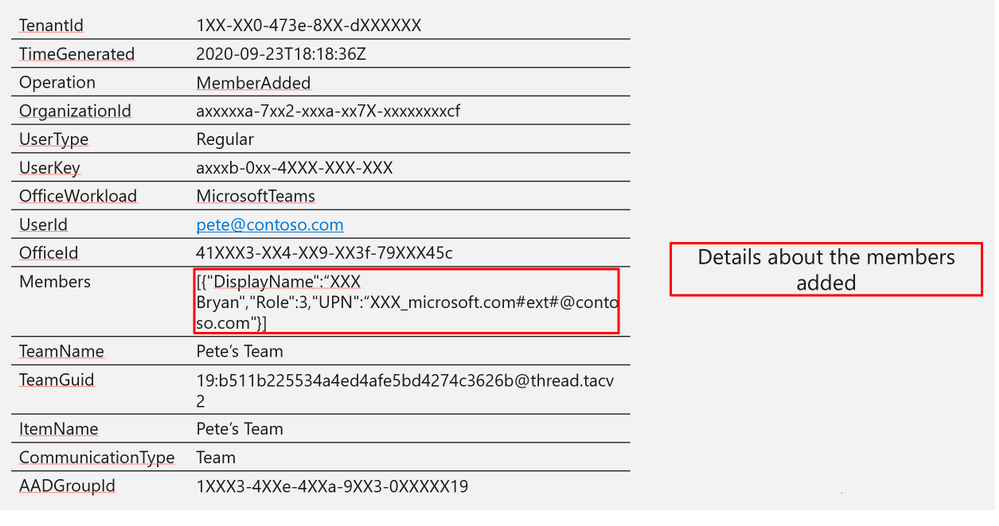

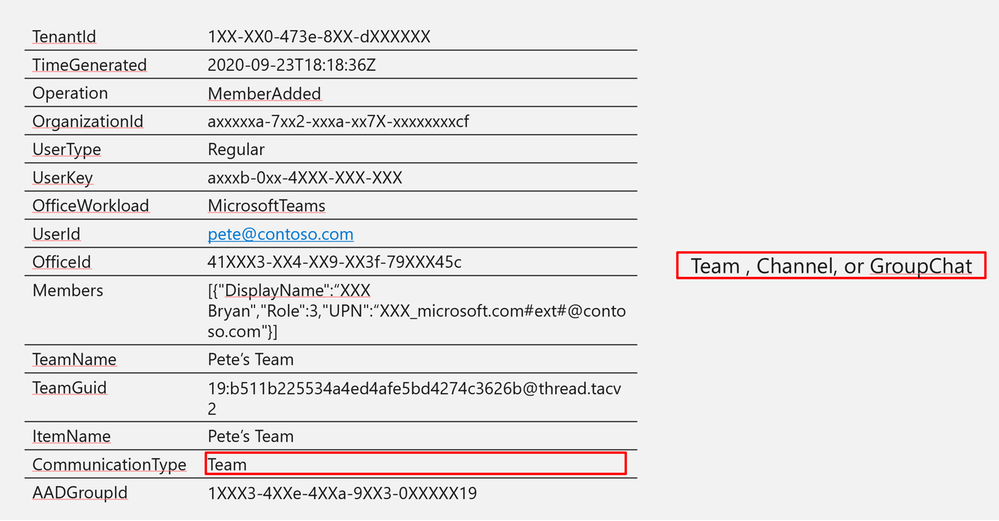

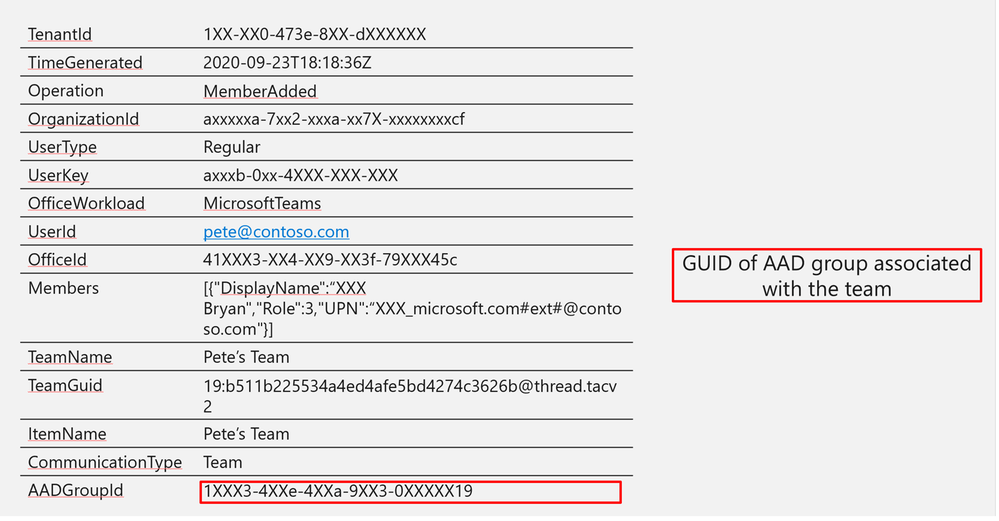

Log Structure:

|

TenantId

|

1XX-XX0-473e-8XX-dXXXXXX

|

|

TimeGenerated

|

2020-09-23T18:18:36Z

|

|

Operation

|

MemberAdded

|

|

OrganizationId

|

axxxxxa-7xx2-xxxa-xx7X-xxxxxxxxcf

|

|

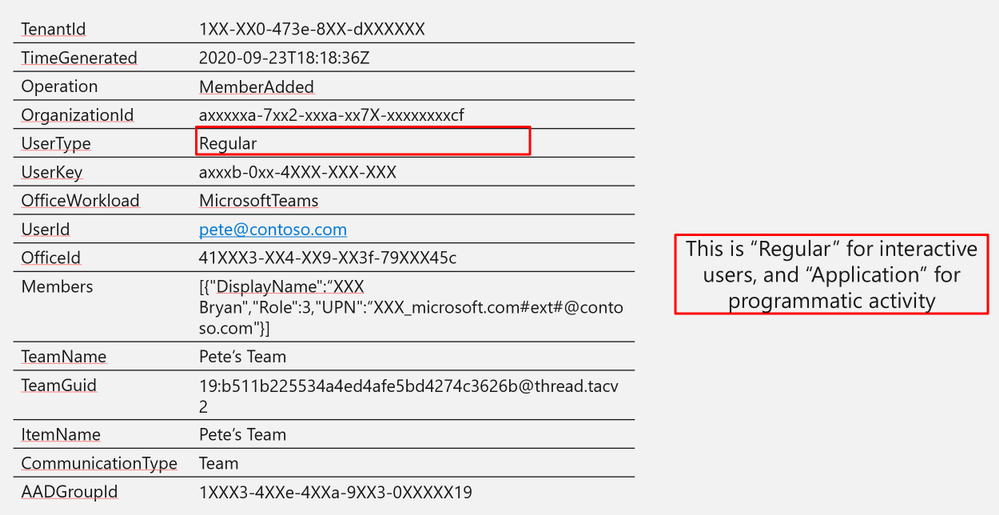

UserType

|

Regular

|

|

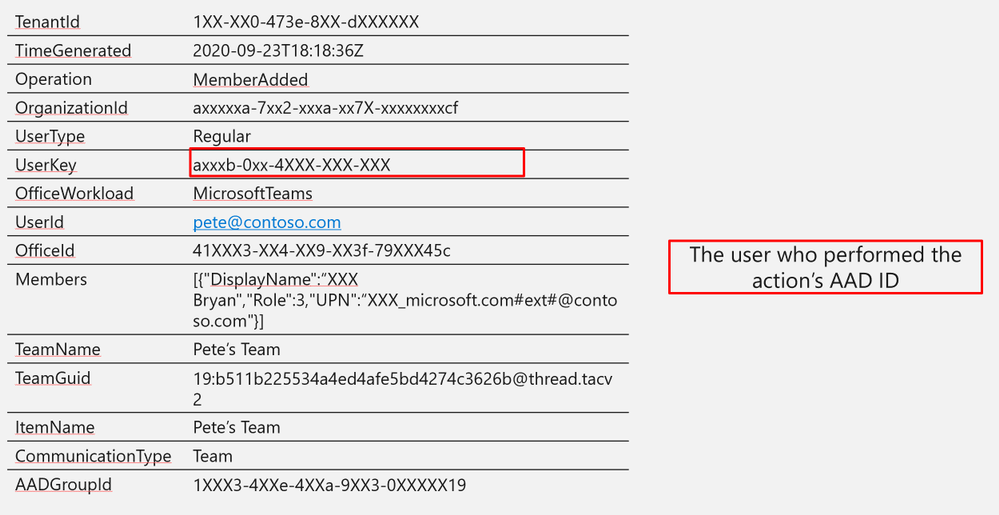

UserKey

|

axxxb-0xx-4XXX-XXX-XXX

|

|

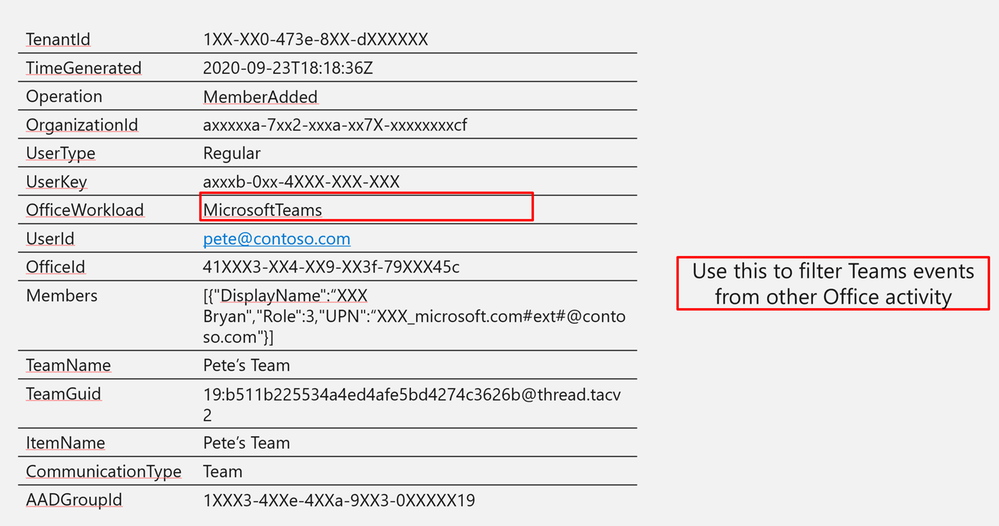

OfficeWorkload

|

MicrosoftTeams

|

|

UserId

|

pete@contoso.com

|

|

OfficeId

|

41XXX3-XX4-XX9-XX3f-79XXX45c

|

|

Members

|

[{“DisplayName”:“XXX Bryan”,”Role”:3,”UPN”:“XXX_microsoft.com#ext#@contoso.com”}]

|

|

TeamName

|

Pete’s Team

|

|

TeamGuid

|

19:b511b225534a4ed4afe5bd4274c3626b@thread.tacv2

|

|

ItemName

|

Pete’s Team

|

|

CommunicationType

|

Team

|

|

AADGroupId

|

1XXX3-4XXe-4XXa-9XX3-0XXXXX19

|
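
To make the Members field in the log structure above easier to read, here is a minimal Python sketch for working with exported records outside of Sentinel. It assumes the field arrives as a JSON string shaped like the sample record, and it reuses the role codes listed for MemberRoleChanged (1 = Owner, 2 = Member, 3 = Guest). The function name `describe_members` and the output keys are illustrative, not part of any Microsoft API, and treating `#ext#` in the UPN as a sign of an external account is a heuristic.

```python
import json

# Role codes as listed for MemberRoleChanged in this post.
ROLE_NAMES = {1: "Owner", 2: "Member", 3: "Guest"}


def describe_members(members_json):
    """Parse a Members JSON string and attach readable role names.

    Hypothetical helper for illustration only.
    """
    members = json.loads(members_json)
    described = []
    for member in members:
        upn = member.get("UPN", "")
        described.append(
            {
                "upn": upn,
                "role": ROLE_NAMES.get(member.get("Role"), "Unknown"),
                # Heuristic: Azure AD guest UPNs usually contain "#EXT#".
                "external": "#ext#" in upn.lower(),
            }
        )
    return described


# Sample value copied from the log structure shown in this post.
sample = '[{"DisplayName":"XXX Bryan","Role":3,"UPN":"XXX_microsoft.com#ext#@contoso.com"}]'
result = describe_members(sample)
print(result)
```

For the sample record, this reports one member with the Guest role whose UPN looks external, which is the kind of event the external-organization hunting query mentioned later is interested in.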

Log Structure (additional fields)

For a step-by-step guide on how to ingest CallRecords-Sessions Teams data into Azure Sentinel via the Microsoft Graph API, check out the blog post Secure your Calls - Monitoring Microsoft TEAMS CallRecords Activity Logs using Azure Sentinel.

Other Logs:

SigninLogs

AAD Signin for Teams

| where AppDisplayName contains "Microsoft Teams"

OfficeActivity

Files uploaded via Teams

| where SourceRelativeUrl contains "Microsoft Teams Chat Files"

Hunting:

Detection:

- Difficult due to usage variations between orgs

- SigninLogs analytics will protect against a lot of common attack types

- New external organization hunting query is a good candidate

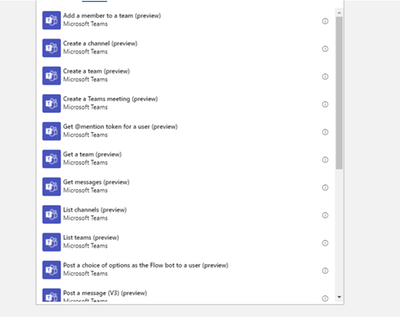

SOAR:

- Plenty of actions in Azure Sentinel Playbooks – LogicApps controls for Teams

- Use this to get additional context for alerts

- You can also post messages to teams

Get started today!

We encourage you to try it now and start hunting in your environment.

You can also contribute new connectors, workbooks, analytics and more in Azure Sentinel. Get started now by joining the Azure Sentinel Threat Hunters GitHub community.

by Contributed | Sep 30, 2020 | Azure, Technology, Uncategorized

Many customers require the ability to audit what happens in their SOC environment for both internal and external compliance requirements. It is important to understand the who, what, and when of activities within your Azure Sentinel instance. In this blog, we will explore how you can audit your organization’s SOC if you are using Azure Sentinel and how to get the visibility you need into the activities being performed within your Sentinel environment. The accompanying Workbook to this blog can be found here.

There are two tables we can use for auditing Sentinel activities:

- LAQueryLogs

- Azure Activity

In the following sections we will show you how to set up these tables and provide examples of the types of queries that you could run with this audit data.

LAQueryLogs table

The LAQueryLogs table containing log query audit logs provides telemetry about log queries run in Log Analytics, the underlying query engine of Sentinel. This includes information such as when a query was run, who ran it, what tool was used, the query text, and performance statistics describing the query’s execution.

Since this table isn’t enabled by default in your Log Analytics workspace you need to enable this in the Diagnostics settings of your workspace. Click here for more information on how to do this if you’re unfamiliar with the process. @Evgeny Ternovsky has written a blog post on this process that you can find here.

A full list of the audit data contained within these columns can be found here. Here are a few examples of the queries you could run on this table:

How many queries have run in the last week, on a per day basis:

LAQueryLogs

| where TimeGenerated > ago(7d)

| summarize events_count=count() by bin(TimeGenerated, 1d)

Number of queries where anything other than an HTTP 200 OK response code is received (i.e. the query failed):

LAQueryLogs

| where ResponseCode != 200

| count

Show which users ran the most CPU intensive queries based on CPU used and length of query time:

LAQueryLogs

| summarize arg_max(StatsCPUTimeMs, *) by AADClientId

| extend User = AADEmail, QueryRunTime = StatsCPUTimeMs

| project User, QueryRunTime, QueryText

| order by QueryRunTime desc

Summarize who ran the most queries in the past week:

LAQueryLogs

| where TimeGenerated > ago(7d)

| summarize events_count=count() by AADEmail

| extend UserPrincipalName = AADEmail, Queries = events_count

| join kind= leftouter (

SigninLogs)

on UserPrincipalName

| project UserDisplayName, UserPrincipalName, Queries

| summarize arg_max(Queries, *) by UserPrincipalName

| sort by Queries desc

AzureActivity table

As in other parts of Azure, you can use the AzureActivity table in Log Analytics to query actions taken on your Sentinel workspace. To list all the Sentinel-related Azure Activity logs in the last 24 hours, use this query:

AzureActivity

| where OperationNameValue contains "SecurityInsights"

| where TimeGenerated > ago(1d)

This will list all Sentinel-specific activities within the time frame. However, this is far too broad to use in a meaningful way so we can start to narrow this down some more. The next query will narrow this down to all the actions taken by a specific user in AD in the last 24 hours (remember, all users who have access to Azure Sentinel will have an Azure AD account):

AzureActivity

| where OperationNameValue contains "SecurityInsights"

| where Caller == "[AzureAD username]"

| where TimeGenerated > ago(1d)

Final example query – this query shows all the delete operations in your Sentinel workspace:

AzureActivity

| where OperationNameValue contains "SecurityInsights"

| where OperationName contains "Delete"

| where ActivityStatusValue contains "Succeeded"

| project TimeGenerated, Caller, OperationName

You can combine these filters and add more parameters to search the AzureActivity table further, depending on what your organization needs to report on. Below is a selection of some of the actions you can search for in this table:

- Update Incidents/Alert Rules/Incident Comments/Cases/Data Connectors/Threat Intelligence/Bookmarks

- Create Case Comments/Incident Comments/Watchlists/Alert Rules

- Delete Bookmarks/Alert Rules/Threat Intelligence/Data Connectors/Incidents/Settings/Watchlists

- Check user authorization and license

Alerting on Sentinel activities

You may want to take this one step further and use Sentinel audit logs for proactive alerts in your environment. For example, if you have sensitive tables in your workspace that should not typically be queried, you could set up a detection to alert you to this:

LAQueryLogs

| where QueryText contains "[Name of sensitive table]"

| where TimeGenerated > ago(1d)

| extend User = AADEmail, Query = QueryText

| project User, Query
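
To reason about what the detection above will and will not match, the same filter can be sketched in Python against exported LAQueryLogs rows. This is an illustration under assumptions: the rows are plain dictionaries using the column names from the query (AADEmail, QueryText, TimeGenerated), the table name `SecretTable` is hypothetical, and the helper `sensitive_queries` is not part of any SDK. It mirrors the fact that the KQL `contains` operator is a case-insensitive substring match, so a table whose name appears inside a longer identifier would also trigger the alert.

```python
from datetime import datetime, timedelta, timezone


def sensitive_queries(rows, table_name, now, window=timedelta(days=1)):
    """Return queries that mention table_name within the time window.

    Mimics `QueryText contains "<name>"` (case-insensitive substring)
    and `TimeGenerated > ago(1d)` from the detection query.
    """
    needle = table_name.lower()
    cutoff = now - window
    return [
        {"User": row["AADEmail"], "Query": row["QueryText"]}
        for row in rows
        if needle in row["QueryText"].lower() and row["TimeGenerated"] > cutoff
    ]


# Fixed reference time so the example is reproducible.
now = datetime(2020, 9, 30, 12, 0, tzinfo=timezone.utc)
rows = [
    {"AADEmail": "pete@contoso.com", "QueryText": "SecretTable | take 10",
     "TimeGenerated": now - timedelta(hours=2)},
    {"AADEmail": "pete@contoso.com", "QueryText": "SigninLogs | count",
     "TimeGenerated": now - timedelta(hours=1)},
    {"AADEmail": "pete@contoso.com", "QueryText": "secrettable | count",
     "TimeGenerated": now - timedelta(days=3)},
]
hits = sensitive_queries(rows, "SecretTable", now)
print(hits)
```

Only the first row is returned here: the second does not mention the table, and the third falls outside the one-day window even though it matches regardless of case.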

Sentinel audit activities Workbook

We have created a Workbook to assist you in monitoring activities in Sentinel. Please check it out here and if you have any improvements or have made your own version you’d like to share, please submit a PR to our GitHub repo!

With thanks to @Jeremy Tan, @Javier Soriano, @Matt_Lowe and @Nicholas DiCola (SECURITY JEDI) for their feedback and input on this article.

by Contributed | Sep 29, 2020 | Azure, Technology, Uncategorized

Additional certificate updates for Azure Sphere

Microsoft is updating Azure services, including Azure Sphere, to use intermediate TLS certificates from a different set of Certificate Authorities (CAs). These updates are being phased in gradually, starting in August 2020 and completing by October 26, 2020. This change is being made because existing intermediate certificates do not comply with one of the CA/Browser Forum Baseline requirements. See Azure TLS Certificate Changes for a description of upcoming certificate changes across Azure products. Azure IoT TLS: Changes are coming! (…and why you should care) provides details about the reasons for the certificate changes and how they affect the use of Azure IoT.

How does this affect Azure Sphere?

On October 13, 2020 we will update the Azure Sphere Security Service SSL certificates. Please read on to determine whether this update will require any action on your part.

What customer actions are required for the SSL certificate updates?

On October 13, 2020 the SSL certificate for the Azure Sphere Public API will be updated to a new leaf certificate that links to the new DigiCert Global Root G2 certificate. This change will affect only the use of the Public API. It does not affect Azure Sphere applications that run on the device.

For most customers, no action is necessary in response to this change because Windows and Linux systems include the DigiCert Global Root G2 certificate in their system certificate stores. The new SSL certificate will automatically migrate to use the DigiCert Global Root G2 certificate.

However, if you “pin” any intermediate certificates or require a specific subject, name, or issuer (“SNI pinning”), you will need to update your validation process. To avoid losing connectivity to the Azure Sphere Public API, you must make this change before we update the certificate on October 13, 2020.
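
As a rough illustration of why issuer pinning needs attention, the sketch below shows a validation step that accepts a certificate only if its issuer common name is on a hard-coded list. It is a simplified model, not the Azure Sphere validation logic: the certificate dictionaries follow the shape returned by Python's `ssl.SSLSocket.getpeercert()`, the issuer names are placeholders rather than the real intermediate names, and the helper functions are hypothetical.

```python
def issuer_common_name(cert):
    """Return the issuer commonName from a getpeercert()-style dict."""
    for rdn in cert.get("issuer", ()):
        for key, value in rdn:
            if key == "commonName":
                return value
    return ""


def issuer_is_pinned(cert, pinned_issuers):
    """True if the certificate's issuer is on the pinned list."""
    return issuer_common_name(cert) in pinned_issuers


# Placeholder certificates: only the issuer field matters for this sketch.
old_leaf = {"issuer": ((("organizationName", "Example Org"),),
                       (("commonName", "Example Old Intermediate CA"),))}
new_leaf = {"issuer": ((("organizationName", "Example Org"),),
                       (("commonName", "Example New Intermediate CA"),))}

pinned = {"Example Old Intermediate CA"}
before_change = issuer_is_pinned(old_leaf, pinned)  # accepted today
after_change = issuer_is_pinned(new_leaf, pinned)   # rejected after the update

# Adding the new issuer ahead of the change keeps both certificates valid.
pinned.add("Example New Intermediate CA")
after_update = issuer_is_pinned(new_leaf, pinned)
print(before_change, after_change, after_update)
```

If your code contains a check of this kind, the list of accepted issuers is what needs updating before the certificate change; code that relies on the system certificate store has no such list to maintain.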

What about Azure Sphere apps that use IoT and other Azure services?

Additional certificate changes will occur soon that affect Azure IoT and other Azure services. The update to the SSL certificates for the Azure Sphere Public API is separate from those changes.

Azure IoT TLS: Changes are coming! (…and why you should care) describes the upcoming changes that will affect IoT Hub, IoT Central, DPS, and Azure Storage Services. These services are not changing their Trusted Root CAs; they are only changing their intermediate certificates. Azure Sphere on-device applications that use only the Azure IoT and Azure Sphere application libraries should not require any modifications. When future certificate changes are required, we will update the IoT C SDK in the Azure Sphere OS and thus make the updated certificates available to your apps.

If your Azure Sphere on-device applications communicate with other Azure services, however, and pin or supply certificates for those services, you might need to update your image package to include updated certificates. See Azure TLS Certificate Changes for information about which certificates are changing and what changes you need to make.

We continue to test common Azure Sphere scenarios as other teams at Microsoft perform certificate updates and will provide detailed information if additional customer action is required.

If you encounter problems

For self-help technical inquiries, please visit Microsoft Q&A or Stack Overflow. If you require technical support and have a support plan, please submit a support ticket in Microsoft Azure Support or work with your Microsoft Technical Account Manager/Technical Specialist. If you would like to purchase a support plan, please explore the Azure support plans.
