by Scott Muniz | Jul 15, 2020 | Azure, Microsoft, Technology, Uncategorized

This article is contributed. See the original author and article here.

By: Gourav Bhardwaj (VMware), Trevor Davis (Microsoft) and Jeffrey Moore (VMware)

Challenge

When moving to Azure VMware Solution (AVS), customers may want to maintain operational consistency with their current 3rd party networking and security platforms, such as solutions from Cisco, Juniper, or Palo Alto Networks. The connectivity approach described here is independent of the NSX-T Service Insertion/Network Introspection certification process for vSphere or AVS. The following use case is based on an actual customer deployment.

Solution

The Azure VMware Solution environment allows access to the following VMware management components:

- vCenter User Interface (UI)

- NSX-T Manager User Interface (UI)

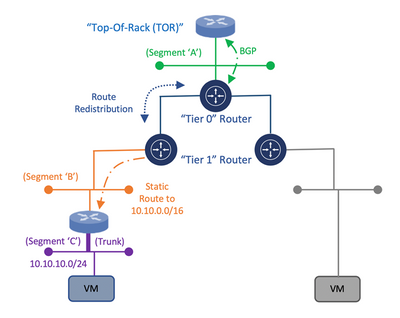

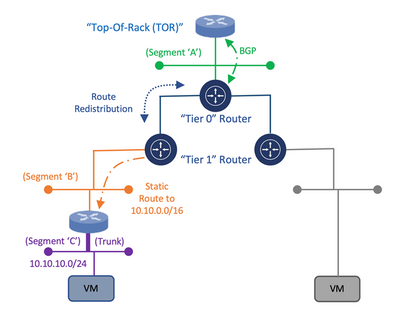

In this architecture, the uplink of the 3rd party appliance can be connected to a segment attached to the NSX-T “Tier 1” router, while the appliance's individual or trunked downlinks provide connectivity for the Virtual Machine (VM) workloads. This mirrors the On-Premises deployment model depicted in the following diagram:

Diagram 1: Deployment Model for Connectivity of a 3rd Party Appliance with NSX-T

Once the appliance is attached to the NSX-T “Tier 1” router, static routes need to be configured to direct traffic through the 3rd Party appliance. In the NSX-T Manager UI, the customer can create these static routes on the “Tier 1” router to steer network traffic through the 3rd Party appliance to the customer’s VM workloads within AVS. Once the static routes are created, they can be redistributed into the dynamic routing protocol, Border Gateway Protocol (BGP).

This allows the static route information to be advertised dynamically from the NSX-T “Tier 0” router through its uplink to the Top of Rack (ToR) platform and, eventually, to the Azure ExpressRoute backbone. The result is end-to-end connectivity from the customer’s On-Premises ExpressRoute connection to the AVS environment, which maintains operational consistency between what is currently On-Premises and the Azure VMware Solution environment.
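To make the static-route step more concrete, here is a minimal Python sketch of the longest-prefix-match lookup a router such as the “Tier 1” performs when static routes point workload subnets at the appliance. It is a generic model, not the NSX-T API: every address is hypothetical (uplink segment 10.10.0.0/24, appliance uplink 10.10.0.10, workload subnets 192.168.10.0/24 and 192.168.20.0/24), and treating "the static routes" as the prefixes handed to BGP is a simplification of NSX-T route advertisement and redistribution.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Route:
    prefix: ipaddress.IPv4Network  # destination network
    next_hop: str                  # next-hop IP, or a label such as "tier0"
    source: str                    # "static", "connected", or "default"


def lookup(routes: List[Route], destination: str) -> Optional[Route]:
    """Longest-prefix match: the most specific route containing destination wins."""
    addr = ipaddress.ip_address(destination)
    matches = [r for r in routes if addr in r.prefix]
    if not matches:
        return None
    return max(matches, key=lambda r: r.prefix.prefixlen)


def redistributable(routes: List[Route]) -> List[str]:
    """Simplification: treat only the static routes as the prefixes offered to BGP."""
    return sorted(str(r.prefix) for r in routes if r.source == "static")


# Hypothetical "Tier 1" routing table; all addresses are illustrative only.
APPLIANCE_UPLINK = "10.10.0.10"
routes = [
    Route(ipaddress.ip_network("0.0.0.0/0"), "tier0", "default"),
    Route(ipaddress.ip_network("10.10.0.0/24"), "connected", "connected"),
    Route(ipaddress.ip_network("192.168.10.0/24"), APPLIANCE_UPLINK, "static"),
    Route(ipaddress.ip_network("192.168.20.0/24"), APPLIANCE_UPLINK, "static"),
]

if __name__ == "__main__":
    for dst in ("192.168.10.25", "10.10.0.5", "203.0.113.7"):
        print(dst, "->", lookup(routes, dst).next_hop)
    print("redistributed:", redistributable(routes))
```

Traffic for a workload address such as 192.168.10.25 matches the more specific static route and is handed to the appliance, while anything unmatched falls through to the default route toward the “Tier 0” router.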

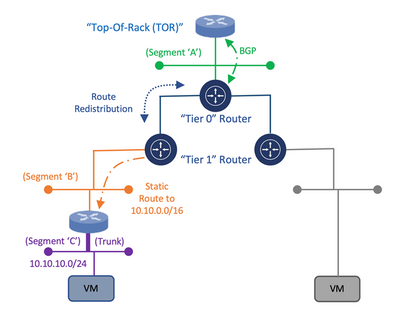

Diagram 2: Operational Consistency Deployment Model of 3rd Party Appliance in Azure VMware Solution

An additional consideration for this design is the placement of VMs within the cluster and potential vSphere Distributed Resource Scheduler (DRS) events, in which DRS load balances VMs across the ESXi hosts in a cluster via vMotion based on available resources. After such an event, the 3rd Party appliance may reside on one ESXi host while the VM workload resides on another. In a customer’s On-Premises data center, this is resolved by stretching the VLANs on the ToR switch that are associated with the uplinks and downlinks of the 3rd Party platform, which provides connectivity regardless of which ESXi host the platform and VMs reside on. Within Azure VMware Solution, the same must be achieved using NSX Segments or a vSphere Distributed Switch (VDS).

Once the solution has been verified against these considerations, the customer can consume the 3rd Party platform within Azure VMware Solution in the same way as in the On-Premises data center. This meets the requirement for operational consistency between On-Premises and AVS for services such as Disaster Recovery.

Author Bios:

Gourav Bhardwaj

Staff Cloud Solutions Architect (VMware)

VCDX 76 (VCDX-xx)

Digital and workspace transformation expert; Speaker at conferences and workshops.

LinkedIn: https://www.linkedin.com/in/vcdx076/

Trevor Davis

Azure VMware Solutions, Sr. Technical Specialist (Microsoft)

Twitter: @vTrevorDavis

LinkedIn: https://www.linkedin.com/in/trevorpdavis/

Jeffrey Moore

Staff Cloud Solutions Architect (VMware)

3xCCIE (#29735 – RS, SP, Wireless), CCDE (2013::20)

Focused on incubation and acceleration efforts for Azure VMware Solution (AVS).

LinkedIn: https://www.linkedin.com/in/jjtm/

Twitter: @Jeffrey_29735

by Scott Muniz | Jul 15, 2020 | Azure, Microsoft, Technology, Uncategorized


One of Azure’s largest online conferences returned even bigger this year as Brazil’s technical community rallied to “uncomplicate” all things cloud.

The fifth edition of Uncomplicating Azure – or Descomplicando o Azure – expanded from one to two weeks thanks to the support of more than 40 expert presentations on Cloud, Dev, Data, AI, IoT, Productivity and more.

The online conference, held June 15-26, reached beyond South America’s largest country to include speakers from 11 countries. So far, the event’s 35 hours of content have received 28,000 views and counting.

MVP and event organizer Rubens Guimarães said the conference sought to connect communities, people, cultures, and knowledge through digital channels in real-time.

“Participating in communities is something that requires effort, constant learning, time, and dedication, but it is very rewarding when colleagues come to you to talk about having earned a new certification through a lecture tip or getting a new job or even solving a project problem,” Rubens said. “Being part of communities is something that completes my daily life, strengthening me as an individual in society.”

The ability to connect people of different backgrounds was a particular highlight, Rubens said, with speakers hailing from Russia, India, USA, Argentina, Chile, and other countries. The event’s multi-platform nature enabled the addition of subtitles in several languages.

Especially in the context of Brazil’s ongoing battle against the pandemic, the conference served as a meeting ground for members of the technical community to reconnect with each other and the wider world.

“As usual, being part of the Microsoft Community is incredible – and even now with this complicated situation, Brazilians can keep warm and alive through webinars,” said Brazilian Sara Barbosa, who previously served as an MVP for Office Apps & Services before recently joining Microsoft as an FTE. “The energy and content each speaker shared were great. Even though Descomplicando o Azure has a focus on Azure, the diversity of presentations allowed customers, enthusiasts, and community folks to learn about a variety of Microsoft technologies such as SQL, Power Platform, and others.”

Similarly, Brazilian MVP for Data Platform Rodrigo Crespi reinforced the benefit of consistently learning from like minds at home and abroad. “Being part of a technical community allows me to meet and interact with the brightest minds in IT,” he said. “There were several important moments, but I highlight my great satisfaction in sharing the little of my knowledge with various people around the world.” Brazilian MVP for Data Platform Fabricio Lima agreed that the event further developed his professional skillset.

Ireland-based MVP speaker Alexandre Malavasi praised the sheer breadth and depth of the conference speakers, noting that the diversity of themes meant any audience, from students to company managers, would find something of use.

“It is extremely rare to see an event that involves so many speakers, including those with international participation, being completely free to the community,” Alexandre said. “I am sure that the event contributed for IT professionals, in general, to understand more accurately the potential, extent, and possibilities that Azure can provide for the development of robust, resilient, high-performance, and cost-effective applications.”

US-based MVP Gaston Cruz said the conference’s international perspective would be useful to many going forward. “This event not only allowed people to learn but also to get a lot of new ideas of how to implement cutting edge technologies and leverage them to support business needs and transform them in the worldwide context that we are in now.”

Argentina-based MVP Sebastián Pérez said he enjoyed the engagement of attendees, with some even following up after his presentation for more information. Meanwhile, Germany-based MVP Reconnect member Thiago Lunardi said he simply enjoyed being back in the Microsoft community with other thought leaders. “For me, [the highlight] was the minutes before going live. I was out of the spotlight for over 2 years, and the feeling to be back contributing was like I had never left it behind. It’s great to be part of Microsoft community, just great.”

While Uncomplicating Azure is an annual event, Rubens said the organizing team was already considering an adapted edition to be held every six months. Check out the YouTube channel to watch presentations from the conference.

by Scott Muniz | Jul 15, 2020 | Azure, Microsoft, Technology, Uncategorized


AI and Machine Learning techniques are changing the ways industries process data. One of the most exciting developments is the ability to process data at the edge, next to the cameras, sensors, or other systems generating it. This allows you to get insights right away, without needing to send everything to the cloud first, saving bandwidth and getting results faster.

We designed Azure Stack Edge for exactly these situations. It’s an extension of the Azure cloud that lets you run analysis locally while still being controlled and managed from the cloud. So, you can deploy and monitor from the cloud but have everything running at your site, right where your data is generated, whether that’s a grocery store improving operations, a hospital improving efficiency in the Operating Room, or a city looking to improve traffic safety and efficiency.

One of the cool things about Azure Stack Edge is that you can install it and then have it analyze data from your existing systems. This opens up all sorts of new opportunities you might not expect. For example, airports have big existing scanners checking luggage for dangerous items. But as part of conservation efforts, they also want to check for illegal animal parts, which can reduce poaching and improve environmental sustainability. With Azure Stack Edge, you can have those scanners do double duty: they continue their normal work looking for dangerous items and also send the scans to Azure Stack Edge to run AI models designed to detect other items. There’s a new Microsoft Mechanics video that highlights this use case and how it uses Azure Stack Edge as a platform to run locally. Definitely check out the video to get the full story.

If your business is looking to do AI analysis at the edge, Azure Stack Edge is a great solution. It’s part of Azure, so there’s nothing to buy. You sign up like any other Azure service, and we send you a server and bill you monthly on your normal Azure bill. It has built-in ML acceleration hardware and works with Microsoft’s container deployment systems. Read more about Azure Stack Edge here, or order one from the Azure Portal.

by Scott Muniz | Jul 15, 2020 | Azure, Microsoft, Technology, Uncategorized


High-performance computing (HPC) can be expensive and complex to set up and use efficiently. Traditionally, HPC has been restricted to academia, research institutes, and enterprises with the capacity to operate large datacenters. Furthermore, it has always been a challenge to deliver optimized HPC systems with complex workflows involving different applications in a user-friendly and accessible environment. Dev Subramanian, CTO at Drizti Inc., elaborates on the challenges and describes how the Toronto startup aims to democratize HPC with Microsoft Azure:

Even enterprises that can afford to build massive datacenters can deliver HPC from only a few locations, limiting the availability of HPC for a globally distributed workforce. Additionally, applications requiring HPC generate a lot of data, and a centrally located datacenter increases the strain on networks.

These inherent complexities have limited the use of HPC across industries, slowing down cutting-edge innovation and leading organizations to investigate ways to make HPC more accessible and easier to use.

Drizti offers a cloud-based HPC platform for “personal supercomputing.” The HPCBOX platform on Microsoft Azure Marketplace delivers turnkey vertical solutions with optimized HPC for accelerating innovation in advanced manufacturing, machine learning, artificial intelligence, and finance. Drizti’s mission is to make supercomputing technology accessible on the PC of every scientist, developer, and engineer, wherever they are and whenever they need it.

With 58 global regions and availability in 140 countries, Azure is one of the few public cloud infrastructure providers focused on implementing HPC capability in the cloud.

Various Azure instance sizes are built for handling HPC workloads and are offered alongside high-speed RDMA InfiniBand networking and high-performance file systems. Furthermore, the rich set of APIs that Azure exposes enables HPC to be combined with other services, and this is what Drizti has done with its HPCBOX platform.

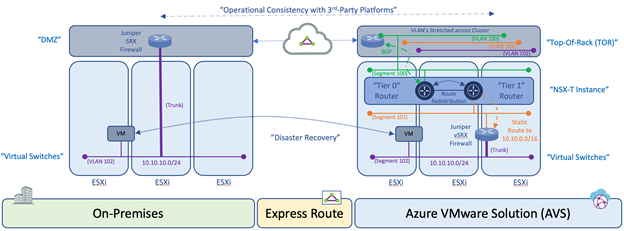

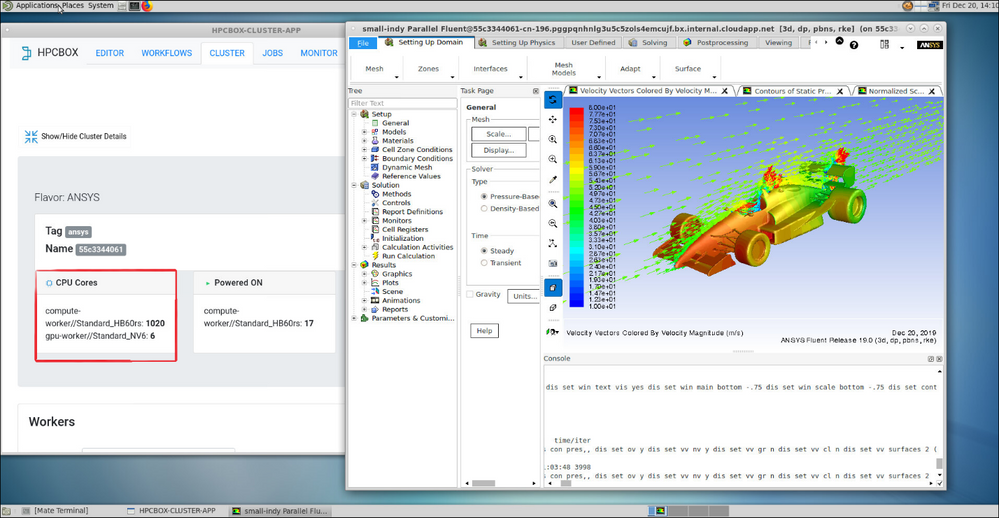

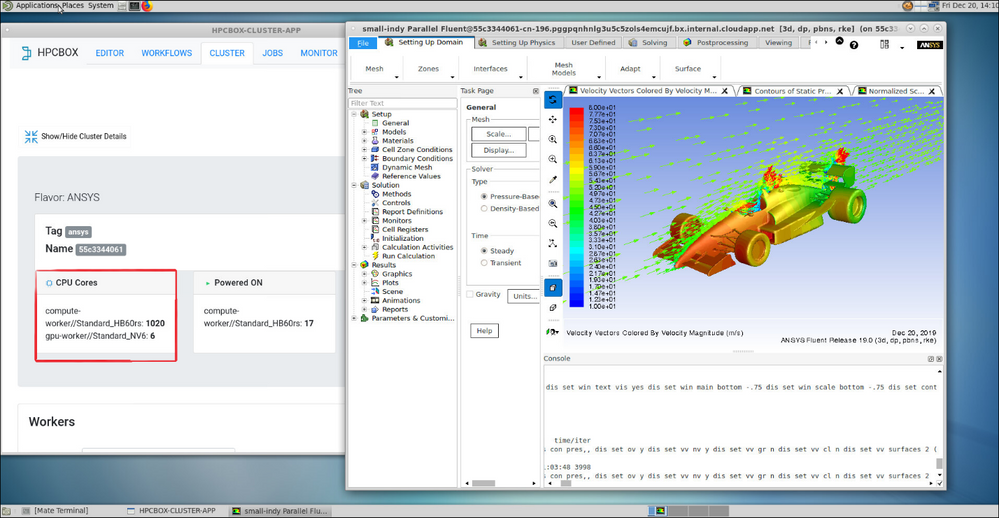

HPCBOX user interface


HPCBOX delivers a fully interactive, workflow-enabled HPC platform for automating complex application pipelines. It offers a PC-like user experience and delivers various turnkey vertical solutions optimized for applications such as ANSYS, Simcenter STAR-CCM+, Siemens Nastran, Abaqus, Horovod, SU2, OpenFOAM, and many others that can benefit from big compute on Azure. The HPCBOX platform uses the big compute capabilities Azure offers, including the state-of-the-art HBv2, HB, HC, H, N, and NC-Series instance sizes.

Through the reach of the Azure Marketplace, HPCBOX delivers turnkey HPC solutions directly within an end user’s subscription, whether it is an EA, web-direct, or CSP subscription. This approach lets users access HPC for their applications within minutes of deploying an HPCBOX cluster while remaining compliant with all the corporate policies set by their organization. Furthermore, by delivering the HPCBOX solution directly within a user’s subscription, Drizti can make sure all the data resides within the user’s subscription and in their region of choice.

Finally, being a Microsoft co-sell certified solution makes HPCBOX fully compatible with Azure services and infrastructure, giving users confidence they can utilize HPCBOX on Azure to dramatically cut costs, get significantly faster results, and spend more time on innovation rather than system setup and configuration.

To learn more about HPCBOX on Azure, watch this video and sign up for a personal supercomputing promo.

by Scott Muniz | Jul 14, 2020 | Azure, Microsoft, Technology, Uncategorized


In the past few months, the CLI team has been working diligently to improve the in-tool user experience (UX) for you. We listened to your feedback and designed the features so that they could be useful and applicable the moment you start interacting with the tool.

In this article, we share our latest updates: performance improvements in command execution and configurable output with the “--only-show-errors” flag.

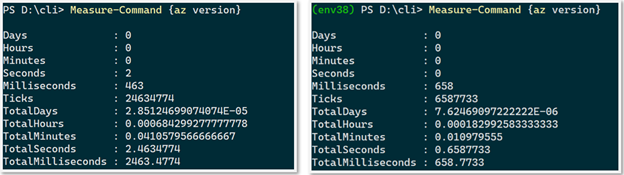

Significant performance improvements on client-side command execution

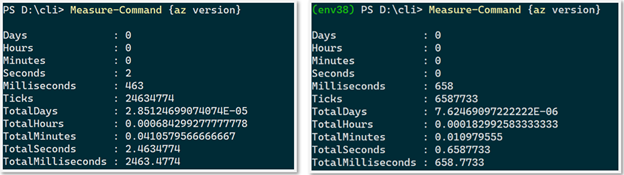

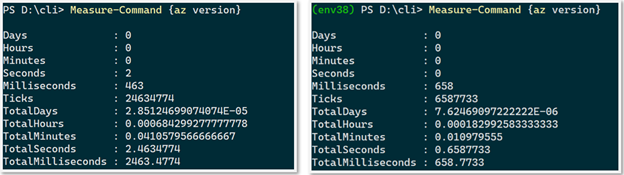

One of the top frustrations we’ve heard from both new and experienced CLI users is that command execution could be faster. Our team recognized this challenge and immediately followed up with optimizations, and the result was phenomenal: every client-side command is now 74% faster.

Figure 1: Comparison of the performance of client-side command execution, before vs. after

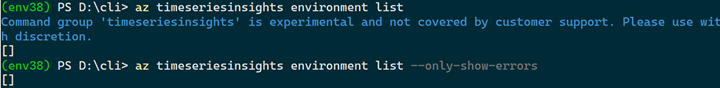

Interruption-free automation with the “--only-show-errors” flag:

For those of you who have been frustrated by Azure CLI’s warning messages interrupting your scripts, we’re delighted to share a simple resolution! Our team recently released the “--only-show-errors” flag, which suppresses all non-error output (i.e., warnings, info, and debug messages) in the stderr stream. This has been highly requested by our DevOps and Architect fans, and we hope you can take advantage of it as well. We support the feature both on a per-command basis and through the global configuration file, so you can easily use it either interactively or for scripting and automation.

To enable it, simply append --only-show-errors to the end of any az command. For instance:

az timeseriesinsights environment list --only-show-errors
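For scripted use, a small Python wrapper can append the flag to every call so warnings never reach stderr. This is a minimal sketch, assuming `az` is on PATH (as on Linux or macOS; on Windows the executable is typically `az.cmd`); the helper names `with_only_show_errors` and `run_az` are hypothetical and not part of Azure CLI.

```python
import shlex
import subprocess
from typing import List, Tuple

FLAG = "--only-show-errors"


def with_only_show_errors(command: str) -> List[str]:
    """Split an az command line and append --only-show-errors exactly once."""
    args = shlex.split(command)
    if not args or args[0] != "az":
        raise ValueError(f"expected an 'az' command, got: {command!r}")
    if FLAG not in args:
        args.append(FLAG)
    return args


def run_az(command: str) -> Tuple[int, str, str]:
    """Run the command; with the flag set, stderr should carry only errors."""
    result = subprocess.run(
        with_only_show_errors(command),
        capture_output=True,
        text=True,
        check=False,
    )
    return result.returncode, result.stdout, result.stderr


if __name__ == "__main__":
    print(with_only_show_errors("az timeseriesinsights environment list"))
```

Appending the flag per call keeps the suppression explicit on each command; setting it in the global configuration file instead applies it to every invocation without touching the scripts.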

Below is a before-and-after example of enabling the flag. We can see that the experimental-command message has been suppressed:

Fun fact: our team actually considered the verbiage “--no-warnings” and “--quiet” but eventually settled on “--only-show-errors” given its syntactic intuitiveness.

Call to action:

As last time, some of these improvements are in an early preview or experimental stage, but we look forward to improving them to serve you better. If you’re interested, here is where you can learn more.

We’d love for you to try out these new experiences and share your feedback on their usability and applicability to your day-to-day use cases. Also, please don’t hesitate to share any ideas and feature requests for improving your overall in-tool experience!

Stay tuned for our upcoming blog posts, and subscribe to the blog! See you soon.
