by Scott Muniz | Sep 4, 2020 | Azure, Technology, Uncategorized

This article is contributed. See the original author and article here.

JUST ENOUGH AZURE DATA EXPLORER FOR CLOUD ARCHITECTS

This article provides a high-level overview of all the key capabilities in Azure Data Explorer, positioning, and resources, and is intended to demystify Azure Data Explorer for cloud architects.

WHAT IS AN ANALYTICAL DATABASE?

Analytical databases are purpose-built and optimized to query and run advanced analytics on large volumes of data with extremely low response times. Modern analytical databases are generally distributed, scalable, fault-tolerant, and feature columnar, compressed formats, and intelligently use in-memory and disk caching technologies. In contrast, transactional databases are optimized for single digit millisecond point reads by key or intra-partition range query, support random CRUD and ACID semantics, and more.

WHAT IS AZURE DATA EXPLORER?

Azure Data Explorer, code name, Kusto, is a low latency, big data, Microsoft proprietary, append-only analytical database. It is feature and functionality rich as a platform – with durable storage, query engine, search engine, proprietary Kusto Query Language, support for ingestion of data in disparate formats from disparate sources, in batch and streaming, with advanced analytics/data science and geospatial analytical capabilities out of the box, server side programmability and support for visualization, scheduling, orchestration and automation.

Azure Data Explorer is mature and heavily used internally at Microsoft and powers many core Azure offerings such as Azure Monitor, Azure Sentinel, Azure Time Series Insights and more. It is fully managed, scalable, secure, robust, and enterprise ready and is a popular platform for log analytics, time series analytics, IoT, and general-purpose exploratory analytics. In a subsequent section, we cover, what Azure Data Explorer is not.

USE CASES FOR AZURE DATA EXPLORER

- Azure Data Explorer is a big data solution, ideal for-

- Analytics on IoT telemetry

- Analytics on all manners of time series data

- Analytics on all manners of logs

- Analytics on clickstream and any other form of live sources

- Text search

- Geo-spatial analytics

- Advanced analytics

- Exploratory environment for data scientists and business/data analysts

Reference architecture is covered further in this article, after the value proposition.

VALUE PROPOSITION AND SALIENT FEATURES

1. LOW LATENCY QUERY ENGINE

Azure Data Explorer leverages innovative, and contemporary compressed columnar and row stores along with a hierarchical cache paradigm, featuring configurable hot and cold caches backed by memory and local disk, with data persistency on Azure Storage. With this architecture, terabytes and petabytes of data can be queried, and results returned within milliseconds to seconds. Refer the white paper for more implementation details.

For applications where performance of analytical queries is critical, Azure Data Explorer is a great fit as a backend analytical database.

2. DISTRIBUTED BY DESIGN

Azure Data Explorer is a big data, cluster computing system comprised of engine nodes that serve out queries and data management service nodes that perform/orchestrate a variety of data related activities including ingestion and shard management. A few key capabilities of distributed systems are partitioning, consistency, replication, scalability and fault tolerance.

- Data persisted in Azure Data Explorer is durably backed by Azure Storage that offers replication out of the box, locally within an Azure Data Center, zonally within an Azure Region.

- An Azure Data Explorer cluster can be scaled up vertically or down as workload resource requirements change. Azure Data Explorer clusters can be scaled out horizontally and scaled in, manually, or with auto-scale.

- From a partitioning perspective, Azure Data Explorer natively partitions by ingestion time with a proprietary formula to prevent hot spotting, but also offers a user configurable partitioning based on data attributes/columns. Out of the box, the service offers options of strong and weak consistency, with strong consistency as the default. All data ingested into Azure Data Explorer is automatically indexed, allowing fast lookup queries.

For analytical applications where scale and reliability are a must, Azure Data Explorer is a great fit.

3. RICH INTEGRATION AND CONNECTOR ECOSYSTEM

Azure Data Explorer has a rich connector eco-system for batch and near real-time ingestion, with Microsoft first party services as well as open source distributed systems.

It supports open source integration systems and aggregator, connector frameworks, like Apache Kafka’s Kafka Connect and ELK stack’s Logstash. It supports Apache Spark for both read and persist, opening up numerous integration possibilities with open source big data systems, whether transactional or analytical.

From a streaming perspective, Azure Data Explorer supports Azure IoT, Azure Event Hub and Apache Kafka. For bulk ingestion or event-driven ingestion, Azure Data Explorer integrates with Azure Storage, with a queued ingestion functionality, and with Azure Event Grid for configurable event driven ingestion.

From an ingestion format perspective, Azure Data Explorer supports a number of formats from CSV and JSON to binary formats like Avro, Parquet and ORC, with support for multiple compression codecs.

It is a common pattern today to curate all enterprise information assets in a data lake. Azure Data Explorer supports the external table paradigm with an integration with Azure Data Lake Store. It also features continuous ingest and continuous export capabilities to Azure Data Lake Store.

Collectively, with the connector eco-system, support for disparate ingestion formats and compression, whether in batch or streaming modes, with support for business workflows, scheduling, and orchestration, Azure Data Explorer is enterprise-ready from an integration perspective.

4. QUERY LANGUAGE AND SERVER-SIDE PROGRAMMABILITY

Azure Data Explorer features a proprietary Kusto Query Language (KQL), that is expressive, and intuitive. KQL supports querying structured, semi-structured, and unstructured(text search) data, all the typical operators of a database query language, typical aggregation and sorting capability, relational query grammar with joins, and hints and more, cross-cluster and cross database queries, and is feature rich from a parsing (json, XML etc) perspective. It supports geospatial analytics and advanced analytics as well.

Azure Data Explorer supports server-side stored functions, continuous ingest and continuous export to Azure Data Lake store. It also supports ingestion time transformations on the server side with update policies, and precomputed scheduled aggregates with materialized views (preview).

Azure Data Explorer with Kusto Query Language capabilities, and its support for ingesting disparate formats, in batch or near real time, allows enterprises to gain insights instantly from data, in its raw form, in previously unthought of ways and proactively, reactively respond. With the server-side capabilities detailed above, Azure Data Explorer supports building common automated analytical application features.

5. ADVANCED ANALYTICS

Azure Data Explorer offers time series capabilities, including a large set of functions from basic element-wise operation (adding, subtracting time series) via filtering, regression, seasonality detection up to anomaly detection and forecasting. Time series functions are optimized for processing thousands of time series in seconds. Azure Data Explorer also offers clustering plugins for pattern detection that are very powerful for diagnosis of anomalies, and root cause analysis. You can extend Azure Data Explorer capabilities by embedding python code in KQL query, leveraging Python open source eco-system for ML, statistics, signal processing and a lot more. Using inline Python you can do in-place machine learning model training, leveraging Azure Data Explorer compute, against data stored within ADX, or train your model anywhere, export it to ADX and use ADX solely for scoring. You can also query ADX from Jupyter/Azure notebooks by using KqlMagic extension from within the notebook. To note, ADX supports ONNX models.

With its support for data science languages and libraries, training and scoring, both in batch and near real time, Azure Data Explorer makes a compelling modern advanced analytics platform.

6. VISUALIZATION

Azure Data Explorer offers visualization out of the box with its Web/Desktop based integrated development environment. It also offers native dashboarding out of the box, with support for a variety of charts, and direct query support. Further, it has native integration with Power BI with support for predicate and projection pushdown and native connectors for Grafana, Kibana and Redash (now Databricks), and ODBC support for Tableau, Sisense, Qlik and more. With KQL used in Jupyter notebooks along with Python, Python visualization libraries can be leveraged as well.

An analytical database platform is incomplete without a complementary and strong visualization story. With its own native dashboarding, charting, and support for popular BI/dashboarding ISV solutions, Azure Data Explorer makes it possible to build visualization rich analytical solutions.

7. AUTOMATED INFORMATION LIFECYCLE MANAGEMENT

Azure Data Explorer offers configurable time duration based hot cache policy with automated eviction upon expiration. It offers configurable time to live/retention policy with automated removal of data upon expiration.

Historical data can be persisted in Azure Data Lake Store, with the “External Table” feature to query via KQL. Azure Data Lake Store offers its own information lifecycle management with tiers, automated retention and archival.

8. SECURITY

Azure Data Explorer offers enterprise grade security and has adoption in security critical industry domains such as healthcare and finance, and governments.

PERIMETER PROTECTION

Azure Data Explorer is a Vnet injectable service and offers a perimeter protection solution with network isolation, with network security group rules and firewall for inbound and outbound access control respectively. It also supports service endpoint to storage to bypass the public internet and optimally route over the Azure backbone.

AUTHENTICATION

Azure Data Explorer supports Azure Active Directory (AAD) authentication out of the box.

AUTHORIZATION

Azure Data Explorer supports access control based on AAD security groups, with Role Based Access Control at a cluster, database, and table row level granularity.

DATA MASKING

Azure Data Explorer supports configurable data masking.

ENCRYPTION

Azure Data Explorer supports it supports encryption over the wire with TLS 1.2 by default, and AES256 bit “at rest” encryption with Microsoft Managed keys (default) or “Bring Your Own Keys” at its durable storage layer (cold cache), with user configurable “at rest” encryption for its hot cache (disks). Intra–cluster shuffle is encrypted with IPSec.

AUDITING

All operations are audited and persisted.

COMPLIANCE CERTIFICATIONS & GDPR

It is rich from a compliance certification perspective and has delete support for GDPR. Information in Azure Data Explorer can be catalogued in Azure Data Catalog to fulfill governance requirements. Azure Data Explorer audit/monitoring data can be ingested into Azure Sentinel or any other SIEM of choice.

9. WORKLOAD ISOLATION AND COST CENTER CHARGEABILITY

Azure Data Explorer supports centralizing ingestion and data engineering in a (leader) cluster and in-place querying federated to follower clusters made possible by Azure Data Share. This simplifies cost center chargeability, and sizing query clusters, preventing resource contention between data engineering and querying workloads with the separate cluster per workload model.

10. DEVELOPER AND OPERATIONS FRIENDLY

Azure Data Explorer has SDKs in C#, Java, Python, Node, R and Go, and a REST interface for management and data operations. From an IDE perspective it offers a Web user interface and a Desktop IDE with built-in powerful intellisense, visualization and query-sharing/collaboration.

Azure Data Explorer offers logging, monitoring, diagnostics and alerting with its Azure Monitor integration. Patching of infrastructure, health monitoring etc are fully managed by the service. Provisioning and deployment automation is simplified through support for Azure DevOps. Due to its managed nature, it does not require a database administrator.

The capabilities detailed above make the service both developer and operations friendly.

11. COST OPTIMIZATION

Azure Data Explorer offers reserved instance pricing, which is cost optimization with a term commitment model. It offers information lifecycle management by automatically evicting expired data from hot cache, and deleting expired data in cold cache as a cost optimization. It offers configurable auto-scale out and scale-in capability to right-size a cluster based on workload in execution. Azure Advisor recommendations are available for optimization of the cluster, including rightsizing. Azure Data Explorer offers a developer SKU and the ability to pause clusters when not in use as further cost optimizations. Review our

12. AVAILABILITY AND DISASTER RECOVERY

Azure Data Explorer is built natively highly available from the ground up, leverages the natively resilient Azure storage for data durability, supports zonal redundancy, and offers an SLA of 99.9% with configurable options for disaster recovery. Azure Data Explorer’s underlying services are provisioned highly available across compute nodes, load balanced as required and are self-healing.

Azure Data Explorer is available in all Azure regions and is one of the foundational services deployed after infrastructure and identity in a region.

For analytical applications that need to be resilient to all manners of failures, Azure Data Explorer is a great fit. Learn more about high availability and disaster recovery in Azure Data Explorer here.

13. AUTOMATION, SCHEDULING & ORCHESTRATION

Azure Data Explorer supports Azure Data Factory, Azure Logic Apps, and Power Automate (previously Microsoft Flow) for data movement/composing business workflows, for scheduling and orchestration.

REFERENCE ARCHITECTURE

RESOURCES

by Scott Muniz | Sep 4, 2020 | Uncategorized

This article is contributed. See the original author and article here.

Using Apache JMeter™ to perform load testing for the Azure App Service that requires Azure AD authentication

Kuniteru Asami is an Azure MVP and core staff member of the Japan Azure User Group. Kuniteru has been providing technical consulting for Microsoft Azure for more than 10 years since its initial release. Moreover, the Japanese Azure expert is the CEO of a company with six Azure MVPs on its staff. Follow him on Twitter @kunyami

How can I protect static files with authorization on ASP.NET Core?

Jun-ichi Sakamoto is a Japanese MVP for Developer Technologies. The 10-time MVP title holder specializes in C#, ASP.NET, Blazor, Azure Web Apps, TypeScript. Jun-ichi’s publication of NuGet packages – like Entity Framework Core, helper, testing tools, Blazor components, and more – have been downloaded more than 18 million times. For more on Jun-ichi, check out his Twitter @jsakamoto

ASP.NET MVC: LOAD JQUERY PLUGIN ON BOOTSTRAP MODAL

Asma Khalid is an Entrepreneur, ISV, Product Manager, Full Stack .Net Expert, Community Speaker, Contributor, and Aspiring YouTuber. Asma counts more than 7 years of hands-on experience in Leading, Developing & Managing IT-related projects and products as an IT industry professional. Asma is the first woman from Pakistan to receive the MVP award three times, and the first to receive C-sharp corner online developer community MVP award four times. See her blog here.

SQL Server Data Migration Tools and Services

Sergio Govoni is a graduate of Computer Science from “Università degli Studi” in Ferrara, Italy. Following almost two decades at Centro Software, a software house that produces the best ERP for manufacturing companies that are export-oriented, Sergio now manages the Development Product Team and is constantly involved on several team projects. For the provided help to technical communities and for sharing his own experience, since 2010 he has received the Microsoft Data Platform MVP award. During 2011 he contributed to writing the book: SQL Server MVP Deep Dives Volume 2. Follow him on Twitter or read his blogs in Italian and English.

Group SharePoint items by weekday (in the correct order)

Marijn Somers is an MVP for Office Apps and Services who has been active in various roles to help clients deliver successful collaboration and content management solutions for more than 14 years. These roles include project manager, presales engineer, evangelist, SPOC (Single-Point-Of-Contact), trainer, analyst and administrator. Marjin is the founder and owner of Balestra, an outfit which focuses on Microsoft Office 365 and specializes in governance and user adoption for collaboration and document management. Follow him on Twitter @MarjinSomers

by Scott Muniz | Sep 4, 2020 | Azure, Technology, Uncategorized

This article is contributed. See the original author and article here.

Working on a case I got to scenario where customer may would like to better understand the TEMPDB usage and isolation between databases in an elastic pool.

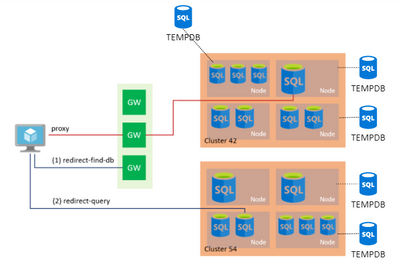

First speaking about isolation. Each DB even though they are below one logical instance, behind the scenes each Azure DB will leave on different node. So, each DB will have one isolated DB, except for Elastic Pool that share same hardware and share same TEMPDB. Even though there are some considerations to check reg isolation of data. More information below:

https://docs.microsoft.com/en-us/sql/relational-databases/databases/tempdb-database

Azure SQL Database single databases and elastic pools support global temporary tables and global temporary stored procedures that are stored in tempdb and are scoped to the database level. Global temporary tables and global temporary stored procedures are shared for all users’ sessions within the same Azure SQL database. User sessions from other Azure SQL databases cannot access global temporary tables. For more information, see Database scoped global temporary tables (Azure SQL Database). Azure SQL Managed Instance) supports the same temporary objects as does SQL Server. For Azure SQL Database single databases and elastic pools, only master database and tempdb database apply. For more information, see What is an Azure SQL Database server. For a discussion of tempdb in the context of Azure SQL Database single databases and elastic pools, see tempdb Database in Azure SQL Database single databases and elastic pools. For Azure SQL Managed Instance, all system databases apply.

One way to test the isolation you can create a global temp table, like sample below.

DROP TABLE IF EXISTS ##TEMP_COLUMNS

GO

SELECT * INTO ##TEMP_COLUMNS

FROM sys.columns

When trying to select from the global temp connected to another database you should get

SELECT * FROM ##TEMP_COLUMNS

Msg 208, Level 16, State 0, Line 1

Invalid object name '##TEMP_COLUMNS'.

On Elastic pool also same rule applies. Even though they are sharing the same space used, global temp tables are scoped to database level.

TEMPDB Space monitoring

First you need to check what is your database SLO. The max space for each DB or Pool will depends on SLO for DB

SELECT * FROM [sys].[database_service_objectives] DSO

database_id edition service_objective elastic_pool_name

----------- ------- ----------------- -----------------

8 Basic ElasticPool fonsecanetPool

DTU Model

vCore

Then you can use queries like sample below to monitor the TEMPDB usage

-- Determining the Amount of Space Used / free

SELECT

[Source] = 'database_files'

,[TEMPDB_max_size_MB] = SUM(max_size) * 8 / 1027.0

,[TEMPDB_current_size_MB] = SUM(size) * 8 / 1027.0

,[FileCount] = COUNT(FILE_ID)

FROM tempdb.sys.database_files

WHERE type = 0 --ROWS

SELECT

[Source] = 'dm_db_file_space_usage'

,[free_space_MB] = SUM(U.unallocated_extent_page_count) * 8 / 1024.0

,[used_space_MB] = SUM(U.internal_object_reserved_page_count + U.user_object_reserved_page_count + U.version_store_reserved_page_count) * 8 / 1024.0

,[internal_object_space_MB] = SUM(U.internal_object_reserved_page_count) * 8 / 1024.0

,[user_object_space_MB] = SUM(U.user_object_reserved_page_count) * 8 / 1024.0

,[version_store_space_MB] = SUM(U.version_store_reserved_page_count) * 8 / 1024.0

FROM tempdb.sys.dm_db_file_space_usage U

-- Obtaining the space consumed currently in each session

SELECT

[Source] = 'dm_db_session_space_usage'

,[session_id] = Su.session_id

,[login_name] = MAX(S.login_name)

,[database_id] = MAX(S.database_id)

,[database_name] = MAX(D.name)

,[elastic_pool_name] = MAX(DSO.elastic_pool_name)

,[internal_objects_alloc_page_count_MB] = SUM(internal_objects_alloc_page_count) * 8 / 1024.0

,[user_objects_alloc_page_count_MB] = SUM(user_objects_alloc_page_count) * 8 / 1024.0

FROM tempdb.sys.dm_db_session_space_usage SU

LEFT JOIN sys.dm_exec_sessions S

ON SU.session_id = S.session_id

LEFT JOIN sys.database_service_objectives DSO

ON S.database_id = DSO.database_id

LEFT JOIN sys.databases D

ON S.database_id = D.database_id

WHERE internal_objects_alloc_page_count + user_objects_alloc_page_count > 0

GROUP BY Su.session_id

ORDER BY [user_objects_alloc_page_count_MB] desc, Su.session_id;

-- Obtaining the space consumed in all currently running tasks in each session

SELECT

[Source] = 'dm_db_task_space_usage'

,[session_id] = SU.session_id

,[login_name] = MAX(S.login_name)

,[database_id] = MAX(S.database_id)

,[database_name] = MAX(D.name)

,[elastic_pool_name] = MAX(DSO.elastic_pool_name)

,[internal_objects_alloc_page_count_MB] = SUM(SU.internal_objects_alloc_page_count) * 8 / 1024.0

,[user_objects_alloc_page_count_MB] = SUM(SU.user_objects_alloc_page_count) * 8 / 1024.0

FROM tempdb.sys.dm_db_task_space_usage SU

LEFT JOIN sys.dm_exec_sessions S

ON SU.session_id = S.session_id

LEFT JOIN sys.database_service_objectives DSO

ON S.database_id = DSO.database_id

LEFT JOIN sys.databases D

ON S.database_id = D.database_id

WHERE internal_objects_alloc_page_count + user_objects_alloc_page_count > 0

GROUP BY SU.session_id

ORDER BY [user_objects_alloc_page_count_MB] desc, session_id;

https://github.com/FonsecaSergio/ScriptCollection/blob/master/SQL/AzureSQL%20-%20Monitor%20tempdb%20usage.sql

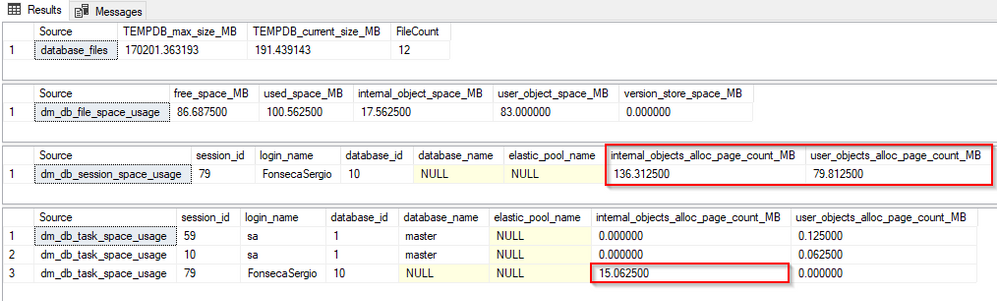

In this test we can see this DB is part of Basic Elastic Pool.

https://docs.microsoft.com/en-us/sql/relational-databases/databases/tempdb-database?view=sql-server-ver15#tempdb-database-in-sql-database

| SLO |

Max tempdb Data File Size (GBs) |

# of tempdb data files |

Max tempdb data size (GB) |

| Basic Elastic Pools (all DTU configurations) |

13.9 |

12 |

166.7 |

We can see in the results above

- TEMPDB max size / current size and File count

- That depends on the DB Service level as mentioned above

- TEMPDB file space used where

-

internal_object_reserved_page_count – Total number of pages in uniform extents allocated for internal objects in the file. Unused pages from an allocated extent are included in the count.

There is no catalog view or dynamic management object that returns the page count of each internal object.

- Internal objects are only in tempdb. The following objects are included in the internal object page counters:

- Work tables for cursor or spool operations and temporary large object (LOB) storage

- Work files for operations such as a hash join

- Sort runs

-

user_object_reserved_page_count – Total number of pages allocated from uniform extents for user objects in the database. Unused pages from an allocated extent are included in the count.

You can use the total_pages column in the sys.allocation_units catalog view to return the reserved page count of each allocation unit in the user object. However, note that the total_pages column includes IAM pages.

- The following objects are included in the user object page counters:

- User-defined tables and indexes

- System tables and indexes

- Global temporary tables and indexes

- Local temporary tables and indexes

- Table variables

- Tables returned in the table-valued functions

-

version_store_reserved_page_count – Total number of pages in the uniform extents allocated for the version store. Version store pages are never allocated from mixed extents.

For more information, see sys.dm_tran_version_store (Transact-SQL).

- TEMPDB usage per session

- We can see session 79 is using around 79 Mb of TEMPDB for user objects

- TEMPDB usage in running task per session

- In this view we can see all user requests currently running that are consuming tempdb, this can be also useful to do some specific troubleshooting. In this image we can see some internal objects being currently used. Could be used for sorting / hash join / etc or some other internal operation.

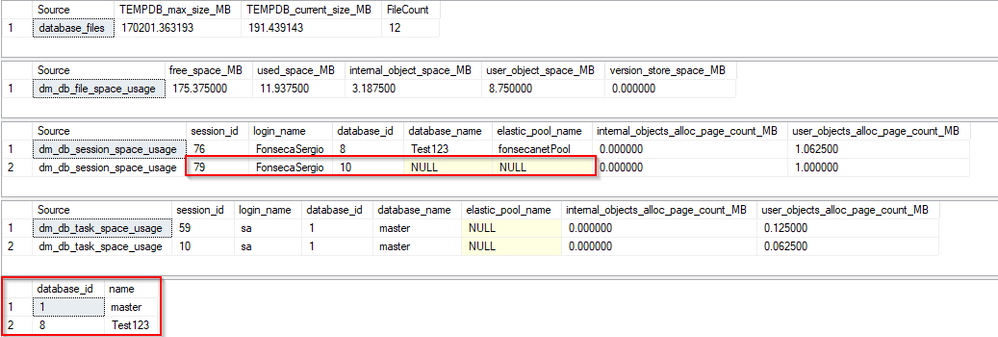

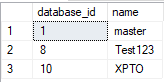

For this second test I created a global temp table in another DB in the pool, we can monitor the usage but by design we will miss some metadata like DB name as we cannot look on master.sys.databases and local user db sys.databases only includes master + current user db. With this we can see that all databases in the same elastic pool share same tempdb database space. Even though you still cannot access global temp table from other database in same pool as temp tables are scoped to database level.

We can still connect to master db and check sys.databases manually to match database id to name

SELECT database_id, name FROM sys.databases

Transactions using TEMPDB

You can also connect directly to user DB and check if there is any session ID that have a open transaction using TEMPDB.

SELECT

[Source] = 'database_transactions'

,[session_id] = ST.session_id

,[transaction_id] = ST.transaction_id

,[database_id] = DT.database_id

,[database_name] = CASE

WHEN D.name IS NULL AND DT.database_id = 2 THEN 'TEMPDB'

ELSE D.name

END

,[database_transaction_log_used_Kb] = CONVERT(numeric(18,2), DT.database_transaction_log_bytes_used / 1024.0 )

,[database_transaction_begin_time] = DT.database_transaction_begin_time

,[transaction_type_desc] = CASE database_transaction_type

WHEN 1 THEN 'Read/write transaction'

WHEN 2 THEN 'Read-only transaction'

WHEN 3 THEN 'System transaction'

WHEN 4 THEN 'Distributed transaction'

END

,[transaction_state_desc] = CASE database_transaction_state

WHEN 0 THEN 'The transaction has not been completely initialized yet'

WHEN 1 THEN 'The transaction has been initialized but has not started'

WHEN 2 THEN 'The transaction is active'

WHEN 3 THEN 'The transaction has ended. This is used for read-only transactions'

WHEN 4 THEN 'The commit process has been initiated on the distributed transaction. This is for distributed transactions only. The distributed transaction is still active but further processing cannot take place'

WHEN 5 THEN 'The transaction is in a prepared state and waiting resolution.'

WHEN 6 THEN 'The transaction has been committed'

WHEN 7 THEN 'The transaction is being rolled back'

WHEN 8 THEN 'The transaction has been rolled back'

END

FROM sys.dm_tran_database_transactions DT

INNER JOIN sys.dm_tran_session_transactions ST

ON DT.transaction_id = ST.transaction_id

LEFT JOIN sys.databases D

ON DT.database_id = D.database_id

ORDER BY ST.session_id

Recent Comments