by Scott Muniz | Sep 11, 2020 | Uncategorized

This article is contributed. See the original author and article here.

ADF Data Flows Expression Builder is getting some new enhancements next week. Here’s a sneak peek at some of what’s coming!

Join @daperlov and @Mark Kromer next week for our YouTube Live Stream where we’ll walk through these updates in detail.

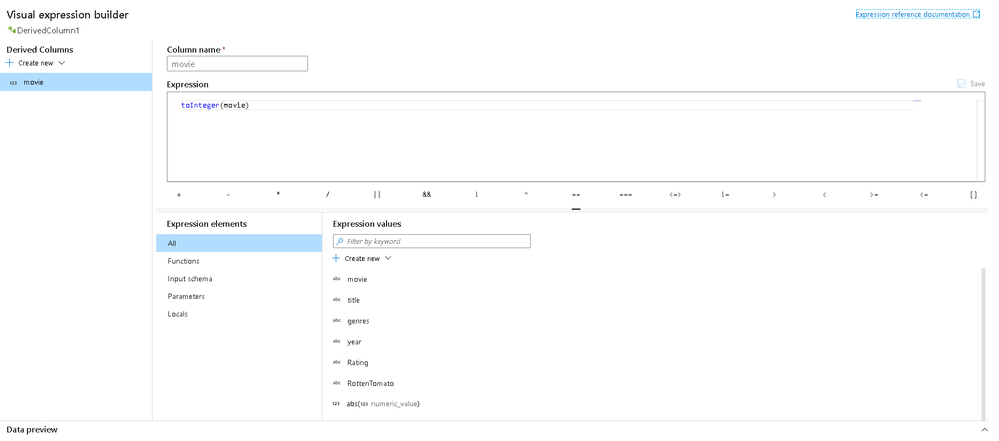

- New “Locals” feature: you can now define a variable scoped to the current transformation, so you can reuse logic that previously required copying the same formula multiple times inside your expressions. Another technique in use today is to add Derived Columns that generate synthetic columns, which are not meant to be loaded into your destination and serve only as “temporary” values. Now you can use locals to store data that won’t propagate outside the current scope.

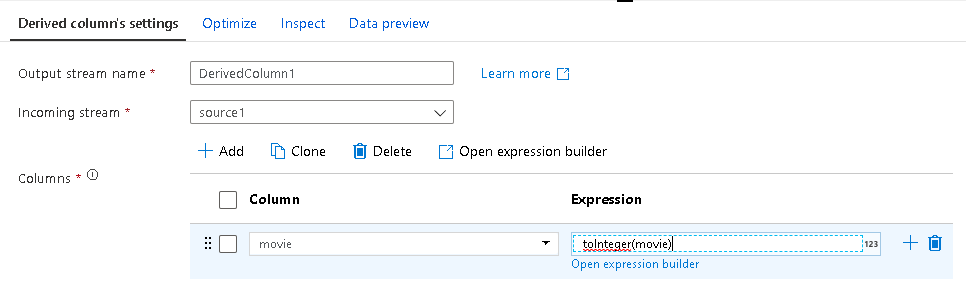

- You can now edit directly inside Derived Column transformation text boxes without the Expression Builder launching automatically. Typing or editing formulas directly still runs standard validation and enables copy and paste without opening the Expression Builder. As a result, the Clone button has moved to the top of the column list, alongside a new Open Expression Builder option.

- We are approaching the one-year anniversary of the general availability (GA) of Data Flows in ADF, and we consolidated a great deal of community feedback into these updates, including a full-screen experience and inline function help. We have recognized that our data flow ETL developers spend the bulk of their time in ADF inside the Expression Builder, and we hope these changes make that experience more productive and enjoyable.

by Scott Muniz | Sep 11, 2020 | Uncategorized

This article is contributed. See the original author and article here.

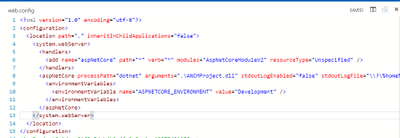

After upgrading an ASP.NET Core application to ASP.NET Core 3.1, the application can no longer read the “ASPNETCORE_ENVIRONMENT” variable from the local environment and instead falls back to the value in web.config.

In web.config, ASPNETCORE_ENVIRONMENT is set to Development.

In the Azure App Service application settings (the local environment), ASPNETCORE_ENVIRONMENT is set to Production.

The ASP.NET Core Module (ANCM) prefers the value set in web.config over the environment variable defined in the Azure App Service application settings.
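To confirm that a deployed web.config is the source of the override, one quick check is to look for ASPNETCORE_ENVIRONMENT among the `<environmentVariable>` entries that ANCM reads. The Python sketch below is illustrative only: it assumes a web.config without an XML namespace on these elements, and the sample file (including `MyApp.dll`) and the helper name are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Dict


def web_config_env_vars(xml_text: str) -> Dict[str, str]:
    """Collect name/value pairs from <environmentVariable> elements in web.config.

    These normally live under system.webServer/aspNetCore/environmentVariables;
    iter() finds them at any depth. Assumes no XML namespace on the elements.
    """
    root = ET.fromstring(xml_text)
    return {
        el.get("name"): el.get("value", "")
        for el in root.iter("environmentVariable")
        if el.get("name")
    }


# Hypothetical web.config matching the scenario above (Development in web.config).
SAMPLE_WEB_CONFIG = """<configuration>
  <system.webServer>
    <aspNetCore processPath="dotnet" arguments=".\\MyApp.dll">
      <environmentVariables>
        <environmentVariable name="ASPNETCORE_ENVIRONMENT" value="Development" />
      </environmentVariables>
    </aspNetCore>
  </system.webServer>
</configuration>"""
```

If ASPNETCORE_ENVIRONMENT shows up in the result, that is the web.config value ANCM applies in this scenario unless the ANCM_PREFER_ENVIRONMENT_VARIABLES switch is set.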

Solution:

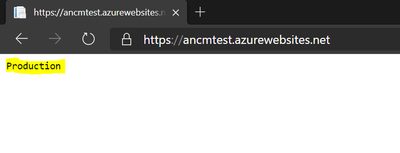

To make ANCM prefer the local environment over the web.config values, add the switch “ANCM_PREFER_ENVIRONMENT_VARIABLES” and set it to “true”. The setting is added in the App Service application settings, as shown in the screenshot.

If the switch is added in both web.config and the local environment, and both are set to “true”, the “ASPNETCORE_ENVIRONMENT” value from web.config takes precedence.

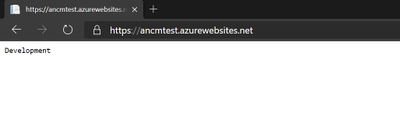

After setting “ANCM_PREFER_ENVIRONMENT_VARIABLES” to “true” in the App Service application settings, ANCM uses the local environment variable “ASPNETCORE_ENVIRONMENT”, which is set to Production.

For more information about these changes, see the following GitHub pull request and issue:

https://github.com/dotnet/aspnetcore/pull/20006

https://github.com/dotnet/aspnetcore/issues/19450

by Scott Muniz | Sep 11, 2020 | Azure, Technology, Uncategorized

This article is contributed. See the original author and article here.

Microsoft is updating Azure services in a phased manner to use TLS certificates from a different set of Certificate Authorities (CAs), beginning August 13, 2020 and concluding on approximately October 26, 2020. We expect that most Azure IoT customers will not be impacted; however, your application may be impacted if you explicitly specify a list of acceptable CAs (a practice known as “certificate pinning”). This change is limited to services in the public Azure cloud; sovereign clouds such as Azure China are not affected.

This change is being made because the current CA certificates do not comply with one of the CA/Browser Forum Baseline Requirements. The issue was reported on July 1, 2020, and affects multiple popular Public Key Infrastructure (PKI) providers worldwide. Today, most of the TLS certificates used by Azure services are issued from the “Baltimore CyberTrust Root” PKI.

The following services used by Azure IoT devices will remain chained to the Baltimore CyberTrust Root*, but their TLS server certificates will be issued by new Intermediate Certificate Authorities (ICAs) starting October 5, 2020:

- Azure IoT Hub

- Azure IoT Hub Device Provisioning Service (DPS)

- Azure Storage Services

If any client application or device has pinned to an Intermediate CA or leaf certificate rather than the Baltimore CyberTrust Root, immediate action is required to prevent disruption of IoT device connectivity to Azure.

* Other Azure service TLS certificates may be issued by a different PKI.

Certificate Renewal Summary

The table below provides information about the certificates that are being rolled. Depending on which certificate your device or gateway clients use for establishing TLS connections, action may be needed to prevent loss of connectivity.

| Certificate | Current | Post Rollover (Oct 5, 2020) | Action |
|---|---|---|---|
| Root | Thumbprint: d4de20d05e66fc53fe1a50882c78db2852cae474<br>Expiration: Monday, May 12, 2025, 4:59:00 PM<br>Subject Name: CN = Baltimore CyberTrust Root, OU = CyberTrust, O = Baltimore, C = IE | Not changing | None |
| Intermediates | Thumbprints:<br>CN = Microsoft IT TLS CA 1: 417e225037fbfaa4f95761d5ae729e1aea7e3a42<br>CN = Microsoft IT TLS CA 2: 54d9d20239080c32316ed9ff980a48988f4adf2d<br>CN = Microsoft IT TLS CA 4: 8a38755d0996823fe8fa3116a277ce446eac4e99<br>CN = Microsoft IT TLS CA 5: ad898ac73df333eb60ac1f5fc6c4b2219ddb79b7<br>Expiration: Friday, May 20, 2016 5:52:38 AM<br>Subject Name: OU = Microsoft IT, O = Microsoft Corporation, L = Redmond, S = Washington, C = US | Thumbprints:<br>CN = Microsoft RSA TLS CA 01: 703d7a8f0ebf55aaa59f98eaf4a206004eb2516a<br>CN = Microsoft RSA TLS CA 02: b0c2d2d13cdd56cdaa6ab6e2c04440be4a429c75<br>Expiration: Tuesday, October 8, 2024 12:00:00 AM<br>Subject Name: O = Microsoft Corporation, C = US | Required |
| Leaf (IoT Hub) | Thumbprint: 8b1a359705188c5577cb2dcd9a06331807c0bb97<br>Expiration: Friday, March 19, 2021 6:15:48 PM<br>Subject Name: CN = *.azure-devices.net | Thumbprint: Coming soon<br>Expiration: Coming soon<br>Subject Name: CN = *.azure-devices.net | Required |
| Leaf (DPS) | Thumbprint: f568f692f3274ecbb479c94272d6f3344a3f0247<br>Expiration: Friday, March 19, 2021 5:58:35 PM<br>Subject Name: CN = *.azure-devices-provisioning.net | Thumbprint: Coming soon<br>Expiration: Coming soon<br>Subject Name: CN = *.azure-devices-provisioning.net | Required |

Note: Both the intermediate and leaf certificates are expected to change frequently. We recommend not taking dependencies on them and instead pinning the root certificate, as it rolls less frequently.

Action Required

- If your devices depend on the operating system certificate store for getting these roots or use the device/gateway SDKs as provided, then no action is required.

- If your devices pin the Baltimore root CA among others, then no action is required related to this change.

- If your device interacts with other Azure services (e.g. IoT Edge -> Microsoft Container Registry), then you must pin additional roots as provided here.

- If your devices use a connection stack other than the ones provided in an Azure IoT SDK, and pin any intermediate or leaf TLS certificates instead of the Baltimore root CA, then immediate action is required:

  - To continue without disruption due to this change, Microsoft recommends that client applications or devices pin the Baltimore root:

    - Baltimore Root CA (Thumbprint: d4de20d05e66fc53fe1a50882c78db2852cae474)

  - To prevent future disruption, client applications or devices should also pin the following roots:

    - Microsoft RSA Root Certificate Authority 2017 (Thumbprint: 73a5e64a3bff8316ff0edccc618a906e4eae4d74)

    - DigiCert Global Root G2 (Thumbprint: df3c24f9bfd666761b268073fe06d1cc8d4f82a4)

  - To continue pinning intermediates, replace the existing certificates with the new intermediate CAs:

    - Microsoft RSA TLS CA 01 (Thumbprint: 703d7a8f0ebf55aaa59f98eaf4a206004eb2516a)

    - Microsoft RSA TLS CA 02 (Thumbprint: b0c2d2d13cdd56cdaa6ab6e2c04440be4a429c75)

  - To minimize future code changes, also pin the following ICAs:

    - Microsoft Azure TLS Issuing CA 01 (Thumbprint: 2f2877c5d778c31e0f29c7e371df5471bd673173)

    - Microsoft Azure TLS Issuing CA 02 (Thumbprint: e7eea674ca718e3befd90858e09f8372ad0ae2aa)

    - Microsoft Azure TLS Issuing CA 05 (Thumbprint: 6c3af02e7f269aa73afd0eff2a88a4a1f04ed1e5)

    - Microsoft Azure TLS Issuing CA 06 (Thumbprint: 30e01761ab97e59a06b41ef20af6f2de7ef4f7b0)

- If your client applications, devices, or networking infrastructure (e.g. firewalls) perform any sub-root validation in code, immediate action is required:

  - If you have hard-coded properties such as Issuer, Subject Name, Alternative DNS, or Thumbprint of any certificate other than the Baltimore Root CA, you will need to modify them to reflect the properties of the newly pinned certificates.

  - Note: This extra validation, if done, should cover all the pinned certificates to prevent future disruptions in connectivity.
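For a custom connection stack that does its own pinning, the check above reduces to comparing SHA-1 thumbprints (a certificate thumbprint is the SHA-1 hash of its DER encoding) against a set of pinned values. The Python sketch below is not an Azure IoT SDK API; the helper names are hypothetical and only the thumbprint values come from this post. It assumes your platform already gives you the DER-encoded certificates of the verified chain including the trust anchor, since servers often omit the root from the certificates they send.

```python
import hashlib
from typing import Iterable, Mapping

# Root thumbprints (SHA-1, hex) copied from the Action Required list in this post.
PINNED_ROOTS = {
    "d4de20d05e66fc53fe1a50882c78db2852cae474": "Baltimore CyberTrust Root",
    "73a5e64a3bff8316ff0edccc618a906e4eae4d74": "Microsoft RSA Root Certificate Authority 2017",
    "df3c24f9bfd666761b268073fe06d1cc8d4f82a4": "DigiCert Global Root G2",
}


def normalize(thumbprint_text: str) -> str:
    """Lowercase a thumbprint and strip ':' and space separators."""
    return thumbprint_text.replace(":", "").replace(" ", "").lower()


def thumbprint(der: bytes) -> str:
    """A certificate thumbprint is the SHA-1 hash of its DER encoding."""
    return hashlib.sha1(der).hexdigest()


def chain_is_pinned(chain_der: Iterable[bytes],
                    pinned: Mapping[str, str] = PINNED_ROOTS) -> bool:
    """True if any DER certificate in the chain matches a pinned thumbprint."""
    wanted = {normalize(t) for t in pinned}
    return any(thumbprint(der) in wanted for der in chain_der)
```

Keeping several roots in the pinned set, as recommended above, means the check keeps passing when a service moves to a chain under one of the other listed roots.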

Validation

We recommend performing some basic validation to mitigate any unintentional impact on your IoT infrastructure that connects to Azure IoT Hub and DPS. We will provide a test environment that you can try before we roll these certificates in production environments. The connection strings for this test environment will be posted here soon.

A successful TLS connection to the test environment indicates a positive test outcome: your infrastructure will work as is with these changes. The test connection strings contain invalid keys, so once the TLS connection has been established, any runtime operations performed against these services will fail. This is by design, since these resources exist solely for customers to validate their TLS connectivity. The test environment will be available until all public cloud regions have been updated.
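Because only the TLS handshake matters for this test, it can be checked without any IoT SDK. The Python sketch below uses only the standard `ssl` and `socket` modules; `make_context` and `tls_handshake_ok` are hypothetical helper names, and passing a PEM file of your pinned roots as `cafile` approximates a device that trusts only those roots.

```python
import socket
import ssl
from typing import Optional


def make_context(cafile: Optional[str] = None) -> ssl.SSLContext:
    """Client context that verifies the server chain and hostname.

    If cafile is given, only the CA certificates in that PEM file are trusted
    (for example, your pinned roots); otherwise the system trust store is used.
    """
    return ssl.create_default_context(cafile=cafile)


def tls_handshake_ok(host: str, port: int = 443, cafile: Optional[str] = None,
                     timeout: float = 10.0) -> bool:
    """Return True if a verified TLS handshake with host:port completes."""
    ctx = make_context(cafile)
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            # wrap_socket performs the handshake on an already-connected socket.
            with ctx.wrap_socket(sock, server_hostname=host):
                return True
    except (ssl.SSLError, OSError):
        # Certificate verification failures, DNS errors, refused connections, timeouts.
        return False
```

For example, `tls_handshake_ok("<test-hub>.azure-devices.net", 8883, cafile="pinned-roots.pem")` would check the MQTT port; the host and file names are placeholders until the test connection strings are published. Since the test keys are invalid by design, only the handshake result is meaningful.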

Support

If you have any technical questions about implementing these changes, open a support request with the options below, and a member of our engineering team will get back to you shortly.

- Issue Type: Technical

- Service: Internet of Things/IoT SDKs

- Problem type: Connectivity

- Problem subtype: Unable to connect

Additional Information

- IoT Show: To learn more about TLS certificates and PKI changes related to Azure IoT, check out the IoT Show.

- Microsoft-wide communications: To notify customers broadly, Microsoft sent a Service Health portal notification on Aug 3, 2020, and released a public document that includes timelines, required actions, and details about the upcoming changes to our Public Key Infrastructure (PKI).

by Scott Muniz | Sep 11, 2020 | Uncategorized

This article is contributed. See the original author and article here.

We will hold this month’s Service Fabric Community Q&A call on Sep 17 at 10am PDT.

Starting in August 2020, we introduced a new format for our monthly community sessions. In addition to the regular Q&A, each community call focuses on topics related to various components of the Service Fabric platform, provides roadmap updates and news about upcoming releases, and showcases customer-built solutions that benefit the community.

Agenda:

- Service Fabric Backup Explorer (Preview)

- Service Fabric Roadmap

- Q&A

Join us to learn about the roadmap and ask any questions related to Service Fabric, containers in Azure, and more. This month’s Q&A features one session on:

As usual, there is no need to RSVP – just navigate to the link to the call and you are in.

by Scott Muniz | Sep 11, 2020 | Uncategorized

This article is contributed. See the original author and article here.

Last week, I found an interesting issue. Our customer exported a database to a bacpac file using SQLPackage .NET Core.

To import the data into another SQL Server, our customer used SQLPackage for Windows, but got an error while reading the bacpac file: “File contains corrupted data.”

During our investigation, we found a known issue when using SQLPackage for Windows to import a bacpac file that was created with SQLPackage .NET Core. As a workaround, download SQLPackage .NET Core on your Windows Server machine and run it instead of SQLPackage for Windows or SQL Server Management Studio.
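The workaround amounts to pointing the import at the .NET Core build of SQLPackage. A small Python sketch that builds the import command line is shown below; the helper name, install path, file, server, and database names are all hypothetical, and you would add authentication parameters such as `/TargetUser` and `/TargetPassword` as your server requires.

```python
from typing import List


def build_import_args(sqlpackage_path: str, bacpac_path: str,
                      server: str, database: str) -> List[str]:
    """Command line for importing a .bacpac with SqlPackage (/Action:Import)."""
    return [
        sqlpackage_path,  # the sqlpackage.exe from the .NET Core download
        "/Action:Import",
        f"/SourceFile:{bacpac_path}",
        f"/TargetServerName:{server}",
        f"/TargetDatabaseName:{database}",
    ]


# Usage (all paths and names are hypothetical):
#   import subprocess
#   args = build_import_args(r"C:\sqlpackage-netcore\sqlpackage.exe",
#                            r"C:\backups\mydb.bacpac", "myserver", "MyDbCopy")
#   subprocess.run(args, check=True)
```

Passing the arguments as a list rather than a single string avoids shell quoting problems with paths that contain spaces.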

We hope to have the fix very soon.

Enjoy!

by Scott Muniz | Sep 11, 2020 | Uncategorized

This article is contributed. See the original author and article here.

This week I worked on an interesting service request about a deadlock that occurred when our customer ran 32 threads inserting data at the same time. I found an approach that can help prevent this kind of deadlock.

Background:

- Our customer has two tables with the following structure:

```sql
CREATE TABLE [dbo].[Header]
(
    [Id] UNIQUEIDENTIFIER NOT NULL,
    CONSTRAINT [PK_Header] PRIMARY KEY CLUSTERED ([Id])
);
GO

CREATE TABLE [dbo].[Detail]
(
    [Id] UNIQUEIDENTIFIER NOT NULL,
    [HeaderId] UNIQUEIDENTIFIER NOT NULL,
    CONSTRAINT [PK_Detail] PRIMARY KEY CLUSTERED ([Id]),
    CONSTRAINT [FK_Detail_Header]
        FOREIGN KEY ([HeaderId])
        REFERENCES [dbo].[Header] ([Id])
);
GO
```

- In the customer’s code, each transaction inserts a new row into the Header table and then inserts around 1,000 rows into the Detail table. This operation runs on 32 threads at the same time.

- During execution, we saw deadlocks: two transactions blocked each other because each had locked a database resource that the other needed. SQL Server resolves a deadlock by choosing one of the transactions as the deadlock victim (by default, the one that is least expensive to roll back, unless DEADLOCK_PRIORITY is set), terminating it with error 1205, and rolling it back. In this case, the contended resource was a key lock (KEY).

Solution:

- We saw that the deadlock happens when a transaction needs to lock a resource that the other transaction holds in exclusive mode.

- We have two options:

  - Retry the transaction that SQL Server chose as the deadlock victim.

  - Create a new partition key based on the number of running threads.
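The first option, retrying the deadlock victim, is usually implemented in the client. The Python sketch below is a minimal version under stated assumptions: `run_with_deadlock_retry` and `looks_like_deadlock` are hypothetical helpers, not part of any driver API, and the classifier assumes your driver includes SQL Server error number 1205 in the exception text, so check what your driver actually raises.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def looks_like_deadlock(exc: Exception) -> bool:
    """Hypothetical classifier: SQL Server reports deadlock victims as error 1205.

    Assumes the driver puts the number in the message text, e.g. "... (1205)".
    """
    return "(1205)" in str(exc)


def run_with_deadlock_retry(txn: Callable[[], T],
                            is_deadlock: Callable[[Exception], bool] = looks_like_deadlock,
                            max_attempts: int = 5,
                            base_delay: float = 0.05) -> T:
    """Run txn(); if it was chosen as a deadlock victim, back off and run it again.

    txn must perform the whole unit of work (begin, Header insert, Detail
    inserts, commit), because SQL Server has already rolled the victim back.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return txn()
        except Exception as exc:
            if attempt == max_attempts or not is_deadlock(exc):
                raise  # not a deadlock, or out of attempts: surface the error
            # Exponential backoff with jitter so the competing threads spread out.
            time.sleep(base_delay * (2 ** (attempt - 1)) * random.uniform(0.5, 1.5))
    raise AssertionError("unreachable: the loop always returns or raises")
```

Retrying keeps the schema unchanged but does not remove the contention; the partitioning approach targets the contention itself.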

My suggestion was to create a partitioned table based on the number of threads, for example:

- Create a partition function for the 32 threads by running the following command:

```sql
CREATE PARTITION FUNCTION [PartitioningByInt](int)
AS RANGE RIGHT FOR VALUES (1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16,
                           17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32);
```

- Create the partition scheme based on the function defined above, with all partitions on the PRIMARY filegroup:

```sql
CREATE PARTITION SCHEME [PartitionByinT]
AS PARTITION [PartitioningByInt] ALL TO ([PRIMARY]);
```

- For the Header table, make two changes:

  - First, add the partition key column (IdPartition).

  - Second, include the partition key in the primary key index (PK_Header) so that the Detail table can keep referential integrity with the Header table.

```sql
CREATE TABLE [dbo].[Header](
    [Id] [uniqueidentifier] NOT NULL,
    [IdPartition] [int] NOT NULL
);
GO

-- Cluster (and partition) the table on IdPartition.
CREATE CLUSTERED INDEX [ClusteredIndex_on_PartitionByinT_637343967904700811]
ON [dbo].[Header] ([IdPartition])
WITH (SORT_IN_TEMPDB = OFF, DROP_EXISTING = OFF, ONLINE = OFF)
ON [PartitionByinT]([IdPartition]);
GO

-- A unique partitioned index must include the partitioning column;
-- the Detail foreign key references this (Id, IdPartition) index.
CREATE UNIQUE NONCLUSTERED INDEX [PK_Header]
ON [dbo].[Header] ([Id] ASC, [IdPartition] ASC)
WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, SORT_IN_TEMPDB = OFF, IGNORE_DUP_KEY = OFF,
      ONLINE = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON)
ON [PartitionByinT]([IdPartition]);
GO
```

- In the Detail table, add the IdPartition column so that the foreign key to the Header table can reference the composite key (Id, IdPartition) and keep the same referential integrity:

```sql
CREATE TABLE [dbo].[Detail](
    [Id] [uniqueidentifier] NOT NULL,
    [HeaderId] [uniqueidentifier] NOT NULL,
    [IdPartition] [int] NOT NULL,
    CONSTRAINT [PK_Detail] PRIMARY KEY CLUSTERED ([Id] ASC)
        WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF,
              ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]
) ON [PRIMARY];
GO

ALTER TABLE [dbo].[Detail] WITH CHECK ADD CONSTRAINT [FK_Detail_Header]
FOREIGN KEY ([HeaderId], [IdPartition])
REFERENCES [dbo].[Header] ([Id], [IdPartition]);
GO

ALTER TABLE [dbo].[Detail] CHECK CONSTRAINT [FK_Detail_Header];
GO
```

What is the outcome?

- As we discussed previously, the deadlock occurs when transactions need exclusive locks on keys of the same index. With the partitioned table, each thread writes with its own IdPartition value, so instead of all transactions competing for locks in the same structure, each transaction locks keys only within its own partition. This greatly reduces contention and, in this scenario, prevented the deadlocks.
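The partitioning only helps if each thread writes with its own IdPartition value. The Python sketch below shows one way a client could assign values and build a batch; the round-robin mapping and the helper names are assumptions for illustration, not the customer’s code. Row tuples follow the column order of the Header and Detail tables above.

```python
import uuid
from typing import List, Tuple

PARTITIONS = 32  # matches the 32 boundary values of PartitioningByInt


def partition_for_worker(worker_index: int, partitions: int = PARTITIONS) -> int:
    """Map worker 0..N-1 to an IdPartition value in 1..partitions."""
    return worker_index % partitions + 1


def build_batch(worker_index: int, detail_rows: int = 1000
                ) -> Tuple[Tuple[uuid.UUID, int], List[Tuple[uuid.UUID, uuid.UUID, int]]]:
    """Header row (Id, IdPartition) plus Detail rows (Id, HeaderId, IdPartition).

    Every row in one transaction carries the same IdPartition, so the Header
    keys this worker inserts land in that worker's own partition.
    """
    part = partition_for_worker(worker_index)
    header_id = uuid.uuid4()
    header = (header_id, part)
    details = [(uuid.uuid4(), header_id, part) for _ in range(detail_rows)]
    return header, details
```

Using `worker_index % 32 + 1` keeps the values within 1..32, so with RANGE RIGHT boundaries of 1 through 32 each value maps to its own partition.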

Enjoy!
