- Increased auto-refresh rate options on dashboards

- Improvements to the ARM template deployment experience in the Azure portal

- Deployment of templates at the tenant, management group and subscription scopes

Database

- Configuration of Always On availability groups for SQL Server virtual machines

- New features in the Resource Graph Explorer

Storage

- Azure blob storage updates

Azure mobile app

- Azure alerts visualization

Intune

- Updates to Microsoft Intune

Let’s look at each of these updates in greater detail.

General

Increased Auto-Refresh Rate Options on Dashboards

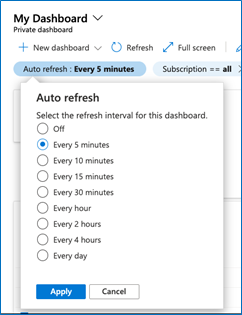

We’ve updated the auto-refresh rate options to include 5, 10, and 15 minutes.

- Go to the left navigation and choose “Dashboard”.

- Select the auto-refresh rate you’d like and click “Apply”. Your dashboard will now refresh at the interval you selected.

- You can check when your dashboard was last refreshed at the top right of the dashboard.

This Azure portal “how to” video will show you how to set up auto-refresh rates.

General

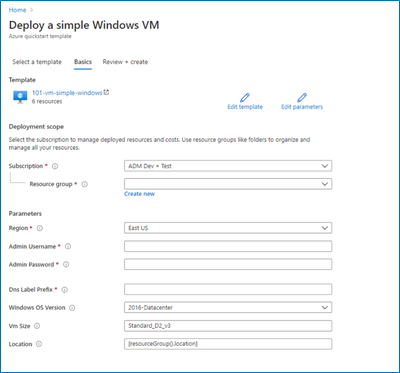

Improvements to the ARM template deployment experience in the Azure portal

The custom template deployment experience in the Azure portal allows customers to deploy an ARM template. This experience has been updated with the following improvements:

- Easier navigation – Multiple tabs now let users go back and select a different template without reloading the entire page.

- Review + create tab – The popular “Review + create” tab has been added to the custom deployment experience, allowing users to review parameters before starting the deployment.

- Multi-line JSON and comment support – The Edit template view now supports multi-line JSON and JSON with comments, in line with ARM’s capabilities.
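Standard JSON has no comment syntax, so many general-purpose JSON parsers reject a commented template outright. As a rough illustration only (this is not how the portal or ARM parses templates), here is a minimal Python sketch with a hypothetical `strip_json_comments` helper that removes `//` and `/* */` comments outside string literals, so the `//` inside the `$schema` URL is left intact.

```python
import json


def strip_json_comments(text: str) -> str:
    """Remove // line comments and /* */ block comments that appear outside strings."""
    out = []
    i, n = 0, len(text)
    in_string = False
    while i < n:
        ch = text[i]
        if in_string:
            out.append(ch)
            if ch == "\\" and i + 1 < n:
                # Copy the escaped character (for example \") without ending the string.
                out.append(text[i + 1])
                i += 2
                continue
            if ch == '"':
                in_string = False
            i += 1
        elif ch == '"':
            in_string = True
            out.append(ch)
            i += 1
        elif text.startswith("//", i):
            # Skip to the end of the line but keep the newline itself.
            end = text.find("\n", i)
            i = n if end == -1 else end
        elif text.startswith("/*", i):
            end = text.find("*/", i + 2)
            if end == -1:
                raise ValueError("unterminated block comment")
            i = end + 2
        else:
            out.append(ch)
            i += 1
    return "".join(out)


TEMPLATE = """{
  // Schema for a resource group deployment
  "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  /* Parameters and resources can span
     multiple lines */
  "parameters": {},
  "resources": []
}"""

if __name__ == "__main__":
    try:
        json.loads(TEMPLATE)
    except json.JSONDecodeError as err:
        print("plain json.loads fails:", err.msg)
    template = json.loads(strip_json_comments(TEMPLATE))
    print(template["contentVersion"])
```

The helper tracks whether it is inside a string so that comment markers in values such as URLs are never treated as comments.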

General

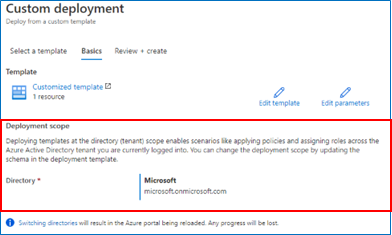

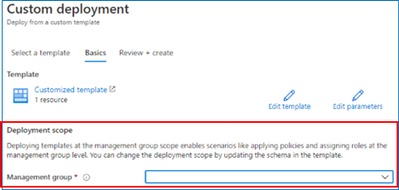

Deployment of templates at the tenant, management group and subscription scopes

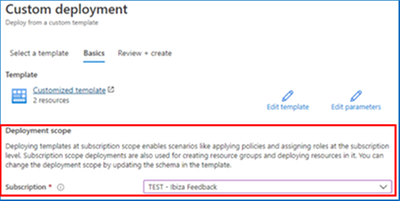

The custom template deployment experience in the Azure portal now supports deploying templates at the tenant, management group, and subscription scopes. The portal infers the scope of the deployment from the schema of the ARM template, and the Deployment scope section of the Basics tab updates automatically to reflect the inferred scope. The correct template schema for each deployment scope is listed in the ARM template documentation.

Deployment scope section of the Basics tab when the schema of the template indicates that it is a deployment at tenant scope.

Deployment scope section of the Basics tab when the schema of the template indicates that it is a deployment at management group scope.

Deployment scope section of the Basics tab when the schema of the template indicates that it is a deployment at subscription scope.

Steps:

- Navigate to the custom template deployment experience in the Azure portal.

- Choose the “Build your own template in the editor” option in the “Select a template” tab.

- Author an ARM deployment template that deploys at the tenant, management group, or subscription scope, and save it.

- Complete the Basics tab by providing values for deployment parameters.

- Review the parameters and trigger a deployment in the Review + create tab.
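The portal infers the scope from the template’s schema, but its exact logic isn’t described here. The following is a minimal Python sketch, assuming the scope can be read from the schema file name at the end of the `$schema` URL; `infer_scope` and `SCHEMA_SCOPES` are hypothetical names.

```python
from typing import Dict

# Schema file name -> deployment scope, following the schema URLs in the ARM template docs.
SCHEMA_SCOPES: Dict[str, str] = {
    "deploymentTemplate.json": "resource group",
    "subscriptionDeploymentTemplate.json": "subscription",
    "managementGroupDeploymentTemplate.json": "management group",
    "tenantDeploymentTemplate.json": "tenant",
}


def infer_scope(template: dict) -> str:
    """Guess the deployment scope from a template's $schema URL (illustrative only)."""
    schema = template.get("$schema", "")
    # Drop the trailing "#" fragment, then keep only the last path segment.
    file_name = schema.split("#", 1)[0].rsplit("/", 1)[-1]
    if file_name not in SCHEMA_SCOPES:
        raise ValueError(f"unrecognized template schema: {schema!r}")
    return SCHEMA_SCOPES[file_name]


if __name__ == "__main__":
    template = {
        "$schema": "https://schema.management.azure.com/schemas/2019-08-01/managementGroupDeploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "resources": [],
    }
    print(infer_scope(template))  # management group
```

Keying on the file name rather than the full URL keeps the sketch independent of the schema version date in the path.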

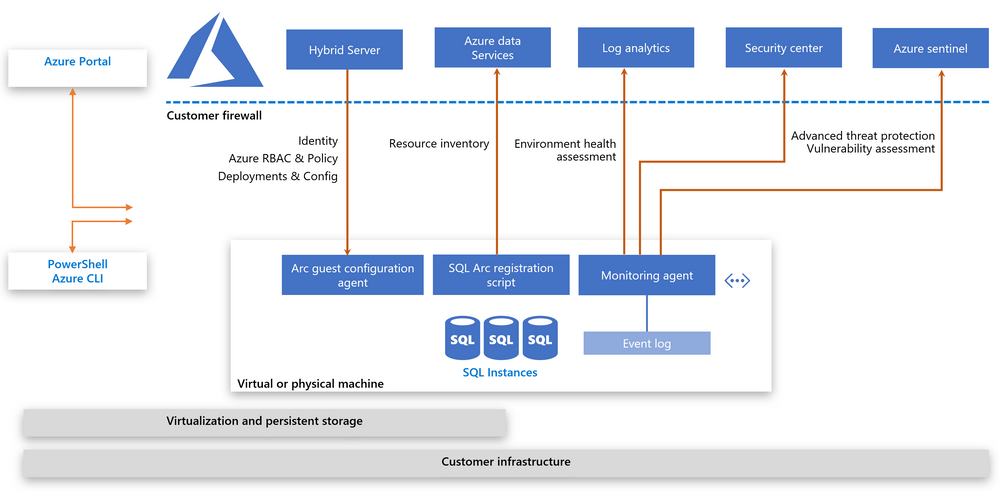

Databases > SQL Virtual Machines

Configuration of Always On availability groups for SQL Server virtual machines

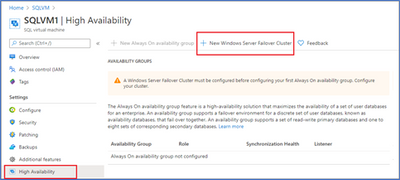

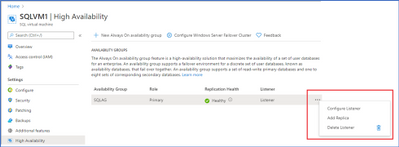

You can now set up an Always On availability group (AG) for SQL Server on Azure Virtual Machines (VMs) directly from the Azure portal. Already available through ARM templates and the Azure SQL VM CLI, this experience now comes to the portal and reduces the manual work of configuring availability groups to a few simple steps.

You can find this experience on any of your existing SQL virtual machines, as long as they are registered with the SQL VM resource provider and run SQL Server 2016 Enterprise or later. To get started, the experience outlines the prerequisites that must be completed outside the portal, including joining the VMs to the same domain. After meeting the prerequisites, you can create and manage the Windows Server Failover Cluster, the availability groups, and the listener, as well as manage the set of VMs in the cluster and the AGs, all from the same portal experience.

Follow the detailed step-by-step documentation to set up availability groups. Here we will outline a few of the steps to access this capability:

- Sign in to the Azure portal.

- In the top search bar, search for “Azure SQL” and select “Azure SQL” under the list of Services.

- In the Azure SQL browse list, find and select the SQL VM running SQL Server 2016 Enterprise or later that you would like to use as your primary VM.

- Select “High availability” in the left-side menu of the SQL VM resource.

- Make sure the VM is domain-joined, then select “New Windows Server Failover Cluster” at the top of the page.

- Once you have created or onboarded a Windows Server Failover Cluster, you can create your first availability group: name it, configure a listener, and select from a list of eligible VMs to include in the AG.

- From there you can add databases and other VMs to the availability group, create more availability groups, and manage the configurations of all related settings.
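The portal checks these prerequisites for you. To make them concrete, here is a hypothetical Python sketch that models each VM as a `SqlVm` record and lists what still blocks AG creation, based only on the requirements named above: registration with the SQL VM resource provider, SQL Server 2016 Enterprise or later, and membership in a shared domain. It does not call any Azure API, and all names are illustrative.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SqlVm:
    """Hypothetical record of the properties relevant to AG setup (not an Azure SDK type)."""
    name: str
    sql_version: int           # SQL Server release year, e.g. 2016 or 2019
    edition: str               # e.g. "Enterprise" or "Standard"
    registered_with_rp: bool   # registered with the SQL VM resource provider?
    domain: Optional[str]      # Active Directory domain, or None if not domain-joined


def ag_blockers(vms: List[SqlVm]) -> List[str]:
    """Return reasons the VMs cannot yet be used for an availability group."""
    problems = []
    for vm in vms:
        if not vm.registered_with_rp:
            problems.append(f"{vm.name}: not registered with the SQL VM resource provider")
        if vm.edition != "Enterprise" or vm.sql_version < 2016:
            problems.append(f"{vm.name}: needs SQL Server 2016 Enterprise or later")
        if vm.domain is None:
            problems.append(f"{vm.name}: not joined to a domain")
    # All VMs must share one domain.
    domains = {vm.domain for vm in vms if vm.domain is not None}
    if len(domains) > 1:
        problems.append("VMs are joined to different domains: " + ", ".join(sorted(domains)))
    return problems
```

An empty list means every modeled prerequisite is met; the real portal may check more than this sketch does.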

Management + Governance > Resource Graph Explorer

New features in the Resource Graph Explorer

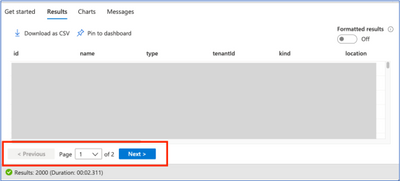

We’ve added three features to the Resource Graph Explorer:

- Keyboard shortcuts are now available, such as Shift+Enter to run a query. A list of the shortcuts can be found here.

- You can now see more than 1000 results by paging through the list.

- “Download as CSV” will now save up to 5000 results.

Storage > Storage account

Azure blob storage updates

Object Replication for Blobs now generally available

Object replication is a new capability for block blobs that lets you replicate data from a blob container in one storage account to a container in another storage account anywhere in Azure. This feature unblocks a new set of common replication scenarios:

- Minimize latency – Users consume the data locally rather than issuing cross-region read requests.

- Increase efficiency – Compute clusters process the same set of objects locally in different regions.

- Optimize data distribution – Data is consolidated in a single location for processing/analytics and resulting dashboards are then distributed to your offices worldwide.

- Minimize cost – Use lifecycle management policies to tier data down to archive once replication completes.

Learn more

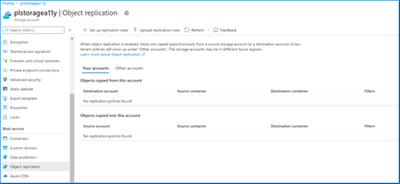

View any existing replication rules on your account by navigating to the Object replication resource menu item in your storage account. Here you can edit or delete existing rules or download them to share with others. This is also the entry point to either create a new rule or upload an existing rule that’s been shared with you.
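A replication rule pairs a source container with a destination container. The hypothetical Python model below (names are illustrative, not the Azure Storage SDK) shows which blobs a set of rules would copy. The optional prefix filter reflects that rules can be limited to blobs whose names start with a given prefix. The sketch only plans copies and moves no data; in the real feature the destination container lives in the destination storage account.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class ReplicationRule:
    """Hypothetical model of one object replication rule (not the Azure SDK type)."""
    source_container: str
    destination_container: str
    prefix_filters: List[str] = field(default_factory=list)  # empty list = every blob

    def matches(self, container: str, blob_name: str) -> bool:
        if container != self.source_container:
            return False
        if not self.prefix_filters:
            return True
        return any(blob_name.startswith(prefix) for prefix in self.prefix_filters)


def plan_copies(rules: List[ReplicationRule], blobs: List[Tuple[str, str]]) -> List[Tuple[str, str, str]]:
    """List (source container, blob name, destination container) copies implied by the rules."""
    return [
        (container, name, rule.destination_container)
        for container, name in blobs
        for rule in rules
        if rule.matches(container, name)
    ]
```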

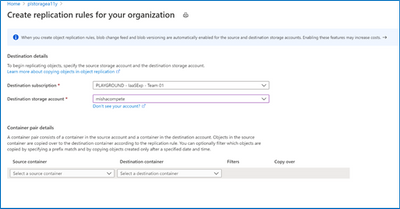

Create a replication rule by specifying the destination and source.

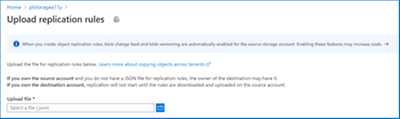

Upload a replication rule that has been shared with you.

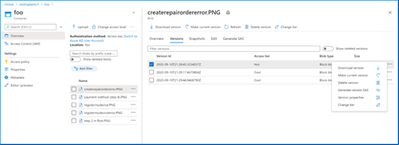

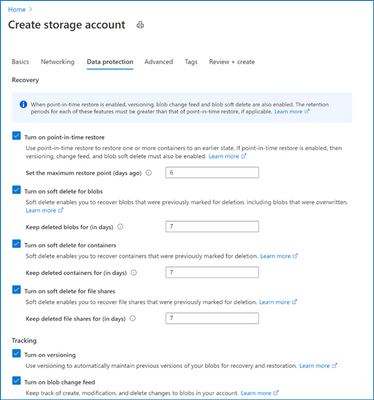

Blob Versioning now generally available

Blob Versioning for Azure Storage automatically maintains previous versions of an object and identifies them with version IDs. You can list both the current blob and its previous versions using their version ID timestamps. If your data is erroneously modified or deleted by an application or another user, you can access a previous version and restore it as the current version.

Together with our existing data protection features, Azure Blob storage provides the most complete set of user-configurable settings to protect your business-critical data.

Enabling versioning is free, but once versions are created, you pay for the additional storage they consume.
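To illustrate the behavior described above, here is a toy in-memory Python model (not the Azure SDK; `VersionedBlob` and its methods are hypothetical). Each write keeps the previous content as a version identified by a timestamp, and restoring copies an earlier version over the current one, which itself creates a new version. This also shows why versioning adds storage cost: every version is retained.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Tuple


class VersionedBlob:
    """Toy in-memory model of a blob with versioning enabled (not the Azure SDK)."""

    def __init__(self) -> None:
        self._versions: List[Tuple[str, bytes]] = []  # (version ID, content), oldest first

    def write(self, data: bytes, when: datetime) -> str:
        # Every write keeps the old content and adds a new version stamped with its time.
        version_id = when.isoformat()
        self._versions.append((version_id, data))
        return version_id

    def current(self) -> bytes:
        return self._versions[-1][1]

    def list_versions(self) -> List[str]:
        return [version_id for version_id, _ in self._versions]

    def restore(self, version_id: str, when: datetime) -> str:
        # Restoring copies an earlier version over the current one, which is itself a new write.
        data = dict(self._versions)[version_id]
        return self.write(data, when)


if __name__ == "__main__":
    t0 = datetime(2020, 9, 1, tzinfo=timezone.utc)
    blob = VersionedBlob()
    good = blob.write(b"good data", t0)
    blob.write(b"corrupted", t0 + timedelta(minutes=5))
    blob.restore(good, t0 + timedelta(minutes=10))
    print(blob.current(), blob.list_versions())
```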

Learn more by viewing documentation and the “how to” video.

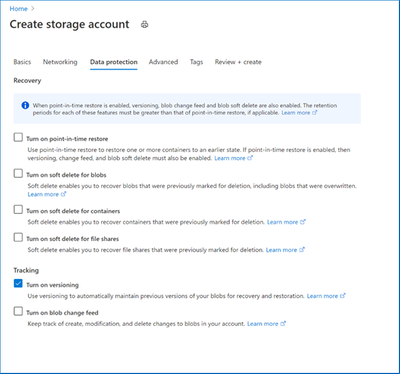

Enable versioning while creating your storage account. Versioning can also be enabled or disabled after creation under the Data Protection resource menu item for your storage account.

View and manage blob versions.

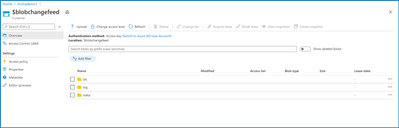

Change feed support for blob storage now generally available

Change feed provides a guaranteed, ordered, durable, read-only log of all the creation, modification, and deletion change events that occur to the blobs in your storage account.

Change feed is the ideal solution for bulk handling of large volumes of blob changes in your storage account, as opposed to periodically listing blobs and manually comparing them for changes. It enables cost-efficient recording and processing by providing programmatic access, so event-driven applications can simply consume the change feed log and process change events from the last checkpoint.
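The checkpoint pattern described above can be sketched in Python. This is a hypothetical model: the integer `position` stands in for whatever cursor a real consumer persists, and the code reads an in-memory list rather than the actual change feed log in the storage account.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class ChangeEvent:
    position: int      # hypothetical position in the ordered log
    event_type: str    # e.g. "BlobCreated" or "BlobDeleted"
    blob_name: str


def process_since(log: Iterable[ChangeEvent], checkpoint: int) -> Tuple[List[ChangeEvent], int]:
    """Handle events after `checkpoint` in log order and return (handled, new checkpoint)."""
    handled = []
    for event in log:
        if event.position <= checkpoint:
            continue  # already processed in an earlier run
        handled.append(event)
        checkpoint = event.position  # persist this so the next run resumes here
    return handled, checkpoint
```

Because the log is ordered and durable, a consumer only needs to store its last checkpoint between runs instead of re-listing and diffing the whole account.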

Learn more.

Enable change feed while creating your storage account. Change feed can also be enabled or disabled after creation under the Data Protection resource menu item for your storage account.

Change feed logs are written to the $blobchangefeed container in your storage account. See the change feed documentation to understand how the log is organized.

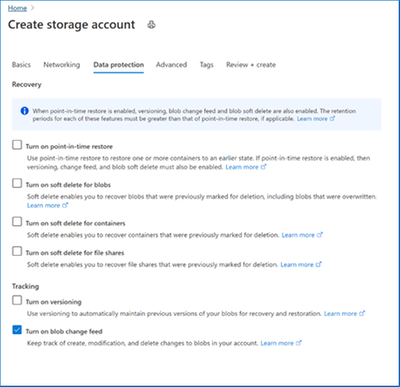

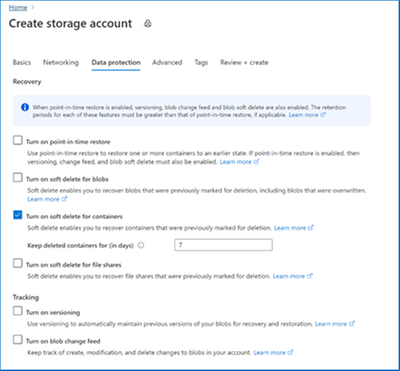

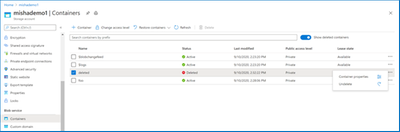

Soft delete for containers now in public preview

Soft delete for containers protects your data from being accidentally or erroneously modified or deleted. When container soft delete is enabled for a storage account, any deleted container and its contents are retained in Azure Storage for the period that you specify. During the retention period, you can restore previously deleted containers and any blobs within them by calling the Undelete container operation.
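A toy Python model of the retention rule just described (not the Azure Storage API; `SoftDeleteAccount` and its methods are hypothetical). A deleted container is kept along with its blobs, and undeleting it succeeds only within the retention period.

```python
from datetime import datetime, timedelta
from typing import Dict, Tuple


class SoftDeleteAccount:
    """Toy model of container soft delete; not the Azure Storage API."""

    def __init__(self, retention_days: int) -> None:
        self.retention = timedelta(days=retention_days)
        self.containers: Dict[str, Dict[str, bytes]] = {}
        self.deleted: Dict[str, Tuple[datetime, Dict[str, bytes]]] = {}

    def delete_container(self, name: str, now: datetime) -> None:
        # The container and its blobs are retained rather than destroyed.
        self.deleted[name] = (now, self.containers.pop(name))

    def undelete_container(self, name: str, now: datetime) -> None:
        deleted_at, blobs = self.deleted[name]
        if now - deleted_at > self.retention:
            raise ValueError(f"container {name!r} is past its retention period")
        del self.deleted[name]
        self.containers[name] = blobs
```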

Learn more.

Enable soft delete for containers while creating your storage account. Soft delete can also be enabled or disabled after creation under the Data Protection resource menu item for your storage account.

View and restore deleted containers.

Point-in-time restore for block blobs now in public preview

Point-in-time restore provides protection against accidental deletion or corruption by enabling you to restore block blob data to an earlier state. This feature is useful in scenarios where a user or application accidentally deletes data or where an application error corrupts data. Point-in-time restore also enables testing scenarios that require reverting a data set to a known state before running further tests.

Point-in-time restore requires the following features to be enabled:

- Soft delete

- Change feed

- Blob versioning
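As a mental model only (this post doesn’t describe how the service performs the restore), the Python sketch below checks the three prerequisites and computes what each blob looked like at a restore point, using a hypothetical per-blob change history. The last change at or before the restore point wins. Blobs that had been deleted by that time, or that were created after it, are absent. All names are illustrative.

```python
from datetime import datetime
from typing import Dict, List, Optional, Tuple

# Hypothetical change history per blob: (timestamp, content or None for a delete), oldest first.
History = Dict[str, List[Tuple[datetime, Optional[bytes]]]]

REQUIRED_FEATURES = ("soft delete", "change feed", "blob versioning")


def missing_prerequisites(enabled: Dict[str, bool]) -> List[str]:
    """Return the prerequisite features that are not enabled."""
    return [feature for feature in REQUIRED_FEATURES if not enabled.get(feature, False)]


def state_at(history: History, restore_point: datetime) -> Dict[str, bytes]:
    """Contents of each blob as of `restore_point`; blobs absent at that time are dropped."""
    state = {}
    for name, changes in history.items():
        latest: Optional[bytes] = None
        for timestamp, content in changes:
            if timestamp > restore_point:
                break  # later changes are rolled back
            latest = content
        if latest is not None:
            state[name] = latest
    return state
```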

Point-in-time restore is currently available in preview for general-purpose v2 storage accounts in the following regions:

- Canada Central

- Canada East

- France Central

Learn more.

Enable point-in-time restore while creating your storage account. Point-in-time restore can also be enabled or disabled after creation under the Data Protection resource menu item for your storage account.

Kick off a point-in-time restoration and roll back containers to a specified time and date.

Azure mobile app

Azure alerts visualization

The Azure mobile app now has a chart visualization for Azure alerts in the Home view. You can choose between a list view of your fired Azure alerts or a chart. The chart view arranges the alerts by severity so you can quickly check which alerts are active in your Azure environment.

In order to choose the Azure alerts chart visualization in the mobile app:

- On Android: Turn on the “Chart” toggle in the Latest alerts card in the Home view.

- On iOS: Tap the “Chart” tab in the Latest alerts card in the Home view.
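The chart groups fired alerts by severity. Here is a minimal Python sketch of that grouping, assuming each alert carries an Azure Monitor severity from Sev0 to Sev4. The app’s own implementation is not described in this post, and `alerts_by_severity` and `render_bars` are hypothetical names.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Azure Monitor alert severities, from most to least severe.
SEVERITIES = ["Sev0", "Sev1", "Sev2", "Sev3", "Sev4"]


def alerts_by_severity(alerts: List[Dict[str, str]]) -> List[Tuple[str, int]]:
    """Count fired alerts per severity, keeping severities with zero alerts."""
    counts = Counter(alert["severity"] for alert in alerts)
    return [(severity, counts[severity]) for severity in SEVERITIES]


def render_bars(rows: List[Tuple[str, int]]) -> str:
    """Draw one text bar per severity, a crude stand-in for the app's chart."""
    return "\n".join(f"{severity} {'#' * count} {count}" for severity, count in rows)
```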

Intune

Updates to Microsoft Intune

The Microsoft Intune team has been hard at work on updates as well. You can find the full list of updates to Intune on the What’s new in Microsoft Intune page, including changes that affect your experience using Intune.

Azure portal “how to” video series

Have you checked out our Azure portal “how to” video series yet? The videos highlight specific aspects of the portal so you can be more efficient and productive while deploying your cloud workloads from the portal. Check out our most recently published videos:

Next steps

The Azure portal has a large team of engineers that wants to hear from you, so please keep providing us your feedback in the comments section below or on Twitter @AzurePortal.

Sign in to the Azure portal now and see for yourself everything that’s new. Download the Azure mobile app to stay connected to your Azure resources anytime, anywhere. See you next month!
