by Contributed | Sep 30, 2020 | Azure, Technology, Uncategorized

This article is contributed. See the original author and article here.

Microsoft is committed to enabling the industry to move from ‘computing in the clear’ to ‘computing confidentially’. Why? Common scenarios confidential computing have enabled include:

- Multi-party rich and secure data analytics

- Confidential blockchain with secure key management

- Confidential inferencing with client and server measurements & verifications

- Microservices and secure data processing jobs

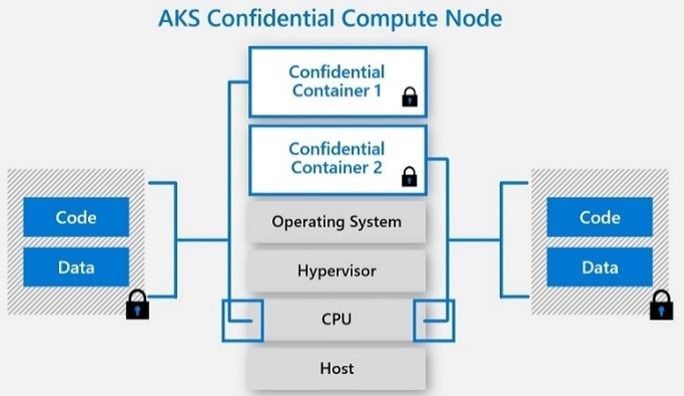

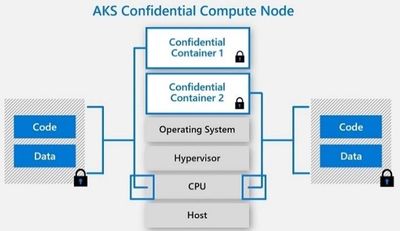

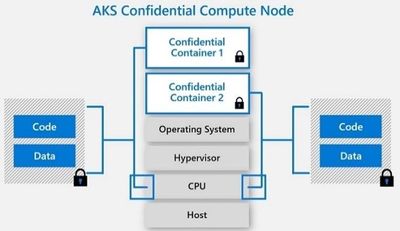

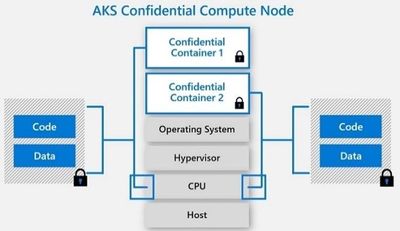

The public preview of confidential computing nodes powered by the Intel SGX DCsv2 SKU with Azure Kubernetes Service brings us one step closer by securing data of cloud native and container workloads. This release extends the data integrity, data confidentiality and code integrity protection of hardware-based isolated Trusted Execution Environments (TEE) to container applications.

Azure confidential computing, based on Intel SGX-enabled virtual machines, continues encrypting data while the CPU is processing it—that’s the “in use” part. This is achieved with a hardware-based TEE that provides a protected portion of the hardware’s processor and memory. Users can run software on top of the protected environment to shield portions of code and data from view or modification outside of the TEE.

Expanding Azure confidential computing deployments

Developers can choose different application architectures based on whether they prefer a model with a faster path to confidentiality or a model with more control. The confidential nodes on AKS support both architecture models and will orchestrate confidential application and standard container applications within the same AKS deployment. Also, developers can continue to leverage existing tooling and dev ops practices when designing highly secure end-to-end applications.

During our preview period, we have seen our customers choose different paths towards confidential computing:

-

Most developers choose confidential containers by taking an existing unmodified docker container application written in a higher programming language like Python, Java etc. and chose a partner like Scone, Fortanix and Anjuna or Open Source Software (OSS) like Graphene or Occlum in order to “lift and shift” their existing application into a container backed by confidential computing infrastructure. Customers chose this option either because it provides a quicker path to confidentiality or because it provides the ability to achieve container IP protection through encryption and verification of identity in the enclave and client verification of the server thumbprint.

-

Other developers choose the path that puts them in full control of the code in the enclave design by developing enclave aware containers with the Open Enclave SDK, Intel SGX SDK or chose a framework such as the Confidential Consortium Framework (CCF). AI/ML developers can also leverage Confidential Inferencing with ONNX to bring a pre-trained ML model and run it confidentially in a hardware isolated trusted execution environment on AKS.

One customer, Magnit, chose the first path. Magnit is one of the largest retail chains in the world and is using confidential containers to pilot a multi-party confidential data analysis solution through Aggregion’s digital marketing platform. The solution focuses on creating insights captured and computed through secured confidential computing to protect customer and partner data within their loyalty program.

We have aggregated more samples of real use cases and continue to expand this sample list here: https://aka.ms/accsamples.

How to get going

Confidential computing, through its isolated execution environment, has broad potential across use cases and industries; and with the added improvements to the overall security posture of containers with its integration to AKS, we are excited and eager to learn more about what business problems you can solve.

Get started today by learning how to deploy confidential computing nodes via AKS.

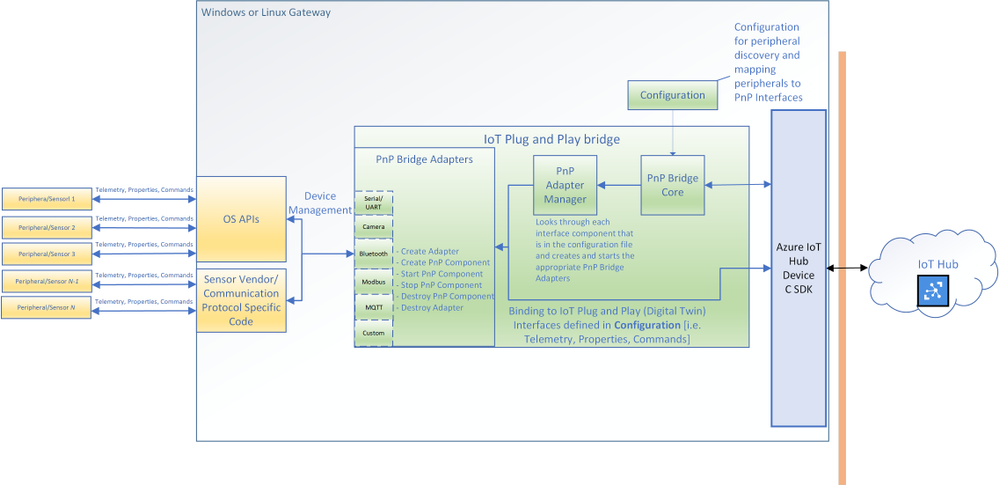

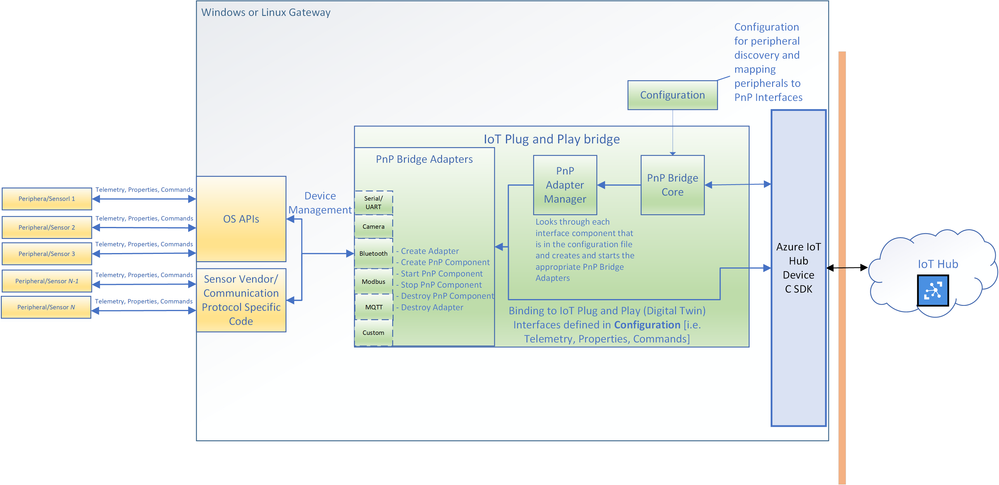

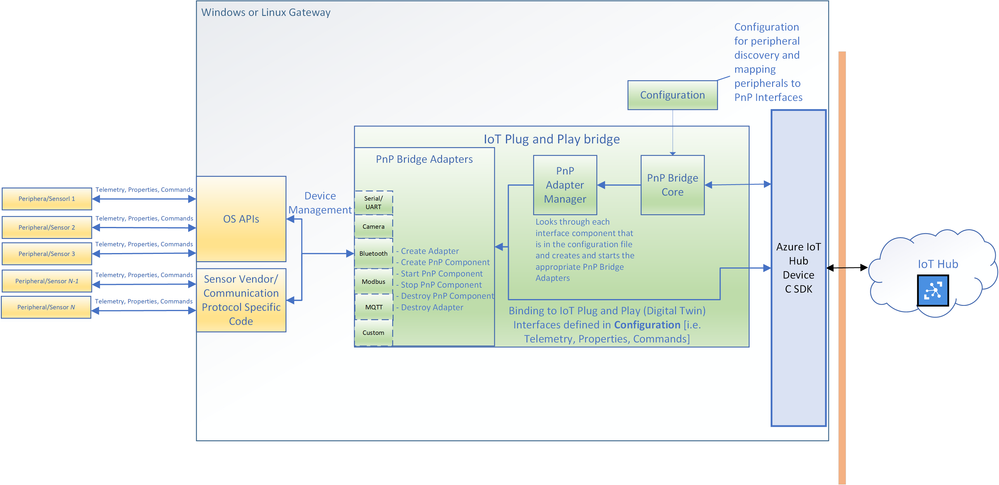

You can now connect existing sensors to Azure with little to no code using the IoT Plug and Play bridge! For developers who are building IoT solutions with existing hardware attached to a Linux or Windows gateway, the IoT Plug and Play bridge provides an easy way to connect these devices to IoT Plug and Play compatible services. For supported protocols, the bridge only requires modifying a simple JSON configuration file. The IoT Plug and Play bridge is open source and can be easily extended to support additional protocols. It supports the latest version of IoT Plug and Play and the Digital Twins Definition Language.

Are you an IoT device developer or IoT solution builder trying to work with existing sensors? Often the code on these sensors can’t be updated to run the latest Azure IoT Device SDK or these sensors are not able to connect directly to the internet. However, many of these sensors can connect to a Windows or Linux gateway with device drivers and support for standard protocols with OS APIs. The IoT Plug and Play bridge enables you to connect these existing sensors without modifying them. It is an open-source application that bridges the gap between the OS APIs and Azure IoT Device SDKs, expanding the reach of devices your IoT solution targets.

The architecture diagram of the IoT Plug and Play bridge.

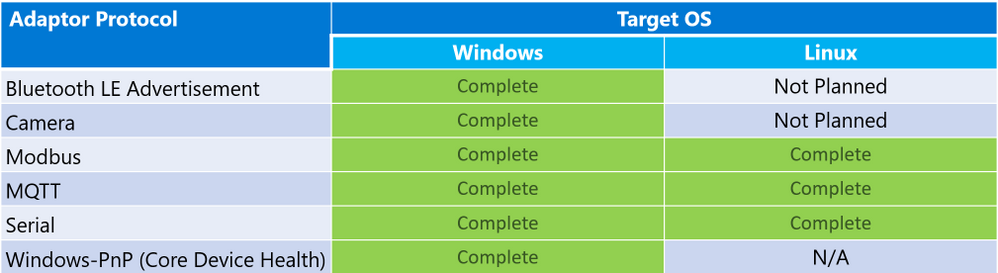

The IoT Plug and Play bridge supports multiple protocols through a set of existing “PnP Bridge Adapters”. Here’s what’s supported out of the box today:

Adaptor protocols currently supported by default for IoT Plug and Play bridge

For example, there is a Windows Bluetooth adapter that lets you connect Bluetooth device advertisements to IoT Plug and Play interfaces. All you need to do is modify a configuration JSON and then compile and run the IoT Plug and Play bridge. If you are only using the supported protocols, you can download a pre-compiled version of the bridge from our releases page: www.aka.ms/iot-pnp-bridge-releases

Configuring the IoT Plug and Play bridge

Here’s an example of what that configuration looks like. The first part provides the connection details to the bridge. You can use either a connection string or the IoT Hub Device Provisioning Service. You’ll also specify the device ID and the IoT Plug and Play model ID:

    "$schema": "../../../pnpbridge/src/pnpbridge_config_schema.json",
    "pnp_bridge_connection_parameters": {
      "connection_type": "dps",
      "root_interface_model_id": "dtmi:com:example:RootPnpBridgeBluetoothDevice;1",
      "auth_parameters": {
        "auth_type": "symmetric_key",
        "symmetric_key": "InNbAialsdfhjlskdflaksdDUMMYSYMETTRICKEY=="
      },
      "dps_parameters": {
        "global_prov_uri": "global.azure-devices-provisioning.net",
        "id_scope": "0ne00000000",
        "device_id": "bluetooth-sensor-3"
      }
    }
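
As a rough illustration of how these connection fields relate, the sketch below parses the connection section (wrapped in braces so it is valid JSON on its own) and checks that a `dps` connection carries the DPS fields shown above. The `check_connection` helper and its rules are assumptions made for illustration; the schema file referenced by `$schema` remains the authoritative definition.

```python
import json

# Connection section from the article, wrapped in braces to form valid JSON.
CONFIG_TEXT = """
{
  "pnp_bridge_connection_parameters": {
    "connection_type": "dps",
    "root_interface_model_id": "dtmi:com:example:RootPnpBridgeBluetoothDevice;1",
    "auth_parameters": {
      "auth_type": "symmetric_key",
      "symmetric_key": "InNbAialsdfhjlskdflaksdDUMMYSYMETTRICKEY=="
    },
    "dps_parameters": {
      "global_prov_uri": "global.azure-devices-provisioning.net",
      "id_scope": "0ne00000000",
      "device_id": "bluetooth-sensor-3"
    }
  }
}
"""


def check_connection(config):
    """Return a list of problems found (hypothetical checks, not the bridge's own)."""
    params = config.get("pnp_bridge_connection_parameters", {})
    problems = []
    if not params.get("root_interface_model_id", "").startswith("dtmi:"):
        problems.append("root_interface_model_id should be a DTMI")
    if params.get("connection_type") == "dps":
        dps = params.get("dps_parameters", {})
        for key in ("global_prov_uri", "id_scope", "device_id"):
            if not dps.get(key):
                problems.append(f"dps_parameters.{key} is missing")
    return problems


config = json.loads(CONFIG_TEXT)
problems = check_connection(config)
print(problems or "connection section looks complete")
```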

The second part of the configuration maps the hardware parameters of the device to IoT Plug and Play interfaces. Here we map Bluetooth-specific addresses and offsets to IoT Plug and Play interfaces. This is done in two parts: a global adapter configuration (think of these as global variables to configure each PnP Bridge Adapter) and an interface-specific configuration (mappings specific to each Plug and Play interface):

    "pnp_bridge_debug_trace": false,
    "pnp_bridge_config_source": "local",
    "pnp_bridge_interface_components": [
      {
        "_comment": "Component 1 - Bluetooth Device",
        "pnp_bridge_component_name": "Ruuvi",
        "pnp_bridge_adapter_id": "bluetooth-sensor-pnp-adapter",
        "pnp_bridge_adapter_config": {
          "bluetooth_address": "267541100483322",
          "blesensor_identity": "Ruuvi"
        }
      }
    ],
    "pnp_bridge_adapter_global_configs": {
      "bluetooth-sensor-pnp-adapter": {
        "Ruuvi": {
          "company_id": "0x499",
          "endianness": "big",
          "telemetry_descriptor": [
            {
              "telemetry_name": "humidity",
              "data_parse_type": "uint8",
              "data_offset": 1,
              "conversion_bias": 0,
              "conversion_coefficient": 0.5
            },
            ...
          ]
        }
      }
    }
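
To make the telemetry descriptor more concrete, here is a minimal sketch of how one entry could be applied to a raw advertisement payload. It assumes the converted value is `raw * conversion_coefficient + conversion_bias` and that `data_offset` counts bytes from the start of the payload; both are assumptions for illustration, not confirmed behavior of the bridge. The `decode_telemetry` helper, the `PARSE_FORMATS` table, and the sample payload are hypothetical.

```python
import struct

# struct format characters for a few parse types (assumed type names).
PARSE_FORMATS = {"uint8": "B", "int8": "b", "uint16": "H", "int16": "h"}


def decode_telemetry(payload: bytes, descriptor: dict, endianness: str = "big") -> float:
    """Read one value from payload as described by a telemetry_descriptor entry."""
    byte_order = ">" if endianness == "big" else "<"
    fmt = byte_order + PARSE_FORMATS[descriptor["data_parse_type"]]
    (raw,) = struct.unpack_from(fmt, payload, descriptor["data_offset"])
    # Assumed conversion: scale the raw reading, then add the bias.
    return raw * descriptor["conversion_coefficient"] + descriptor["conversion_bias"]


humidity = {
    "telemetry_name": "humidity",
    "data_parse_type": "uint8",
    "data_offset": 1,
    "conversion_bias": 0,
    "conversion_coefficient": 0.5,
}

# Hypothetical payload: byte 0 is a format marker, byte 1 holds the raw humidity (90).
sample_payload = bytes([0x03, 90, 0x17, 0x00])
print(humidity["telemetry_name"], decode_telemetry(sample_payload, humidity))
```

With this descriptor, a raw byte of 90 at offset 1 would be reported as a humidity of 45.0 under the assumed formula.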

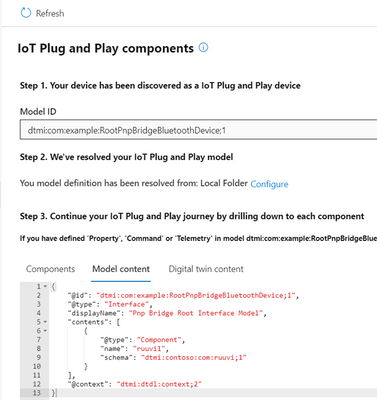

That’s it! You can now run the bridge with this configuration and start to see telemetry reported in IoT Hub or IoT Explorer. Each downstream device from the bridge appears as an interface of the root bridge device. In this example, the BLE sensor is exposed as a “Ruuvi” interface of the “RootPnpBridgeBluetoothDevice” root device interface:

The DTDL model content for IoT Plug and Play bridge

Both the “Ruuvi” and “RootPnpBridgeBluetoothDevice” interfaces are modeled using the Digital Twins Definition Language (DTDL). You can find more samples of these interfaces for the bridge in the schemas folder. You can also author your own models and use them with the bridge; new Visual Studio Code and Visual Studio extensions help you author these models.
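
For orientation, a DTDL v2 interface for a component like “Ruuvi” might look roughly like the structure built below. The model ID `dtmi:com:example:Ruuvi;1` and the `double` schema for humidity are assumptions made for illustration; the actual models are the ones in the schemas folder mentioned above.

```python
import json

# Hypothetical DTDL v2 interface for the "Ruuvi" component.
ruuvi_interface = {
    "@context": "dtmi:dtdl:context;2",
    "@id": "dtmi:com:example:Ruuvi;1",  # assumed ID, not taken from the article
    "@type": "Interface",
    "displayName": "Ruuvi",
    "contents": [
        # One telemetry entry matching the "humidity" descriptor in the config.
        {"@type": "Telemetry", "name": "humidity", "schema": "double"},
    ],
}

print(json.dumps(ruuvi_interface, indent=2))
```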

Calls to Action

Post by Vikas Bhatia (@Vikas Bhatia), Head of Product, Azure Confidential Computing

Microsoft is committed to enabling the industry to move from ‘computing in the clear’ to ‘computing confidentially’. Why? Common scenarios that confidential computing has enabled include:

- Multi-party rich and secure data analytics

- Confidential blockchain with secure key management

- Confidential inferencing with client and server measurements & verifications

- Microservices and secure data processing jobs

The public preview of confidential computing nodes powered by the Intel SGX DCsv2 SKU with Azure Kubernetes Service brings us one step closer by securing data of cloud native and container workloads. This release extends the data integrity, data confidentiality and code integrity protection of hardware-based isolated Trusted Execution Environments (TEE) to container applications.

Azure confidential computing, based on Intel SGX-enabled virtual machines, keeps data encrypted while the CPU is processing it; that is the “in use” part. This is achieved with a hardware-based TEE that provides a protected portion of the hardware’s processor and memory. Users can run software on top of the protected environment to shield portions of code and data from view or modification from outside the TEE.

Expanding Azure confidential computing deployments

Developers can choose different application architectures based on whether they prefer a model with a faster path to confidentiality or a model with more control. The confidential nodes on AKS support both architecture models and orchestrate confidential applications and standard container applications within the same AKS deployment. Developers can also continue to use existing tooling and DevOps practices when designing highly secure end-to-end applications.

During our preview period, we have seen our customers choose different paths towards confidential computing:

- Most developers choose confidential containers: they take an existing, unmodified Docker container application written in a higher-level programming language such as Python or Java and choose a partner such as Scone, Fortanix, or Anjuna, or open source software (OSS) such as Graphene or Occlum, to “lift and shift” the application into a container backed by confidential computing infrastructure. Customers choose this option either because it provides a quicker path to confidentiality or because it provides container IP protection through encryption, verification of identity in the enclave, and client verification of the server thumbprint.

- Other developers choose the path that puts them in full control of the code in the enclave by developing enclave-aware containers with the Open Enclave SDK or the Intel SGX SDK, or by choosing a framework such as the Confidential Consortium Framework (CCF). AI/ML developers can also use Confidential Inferencing with ONNX to bring a pre-trained ML model and run it confidentially in a hardware-isolated trusted execution environment on AKS.

One customer, Magnit, chose the first path. Magnit is one of the largest retail chains in the world and is using confidential containers to pilot a multi-party confidential data analysis solution through Aggregion’s digital marketing platform. The solution focuses on creating insights captured and computed through secured confidential computing to protect customer and partner data within their loyalty program.

We have aggregated more samples of real use cases and continue to expand this sample list here: https://aka.ms/accsamples.

How to get going

- “Lift and shift” your existing application by choosing a partner such as Anjuna, Fortanix, or Scone; or by using Open Source Software such as Graphene or Occlum; or

- Build a new confidential application from scratch using an SDK such as the Open Enclave SDK.

Confidential computing, through its isolated execution environment, has broad potential across use cases and industries; and with the added improvements to the overall security posture of containers with its integration to AKS, we are excited and eager to learn more about what business problems you can solve.

Get started today by learning how to deploy confidential computing nodes via AKS.
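
As a rough sketch of how an enclave-aware container and a standard container could sit side by side in one AKS deployment, the snippet below builds two pod manifests as plain Python dictionaries and prints them as JSON. The resource name `kubernetes.azure.com/sgx_epc_mem_in_MiB` is an assumption based on the AKS confidential nodes documentation from the preview period and should be verified against current documentation; the image names and the `make_pod` helper are hypothetical.

```python
import json

SGX_EPC_RESOURCE = "kubernetes.azure.com/sgx_epc_mem_in_MiB"  # assumed resource name


def make_pod(name, image, epc_mib=None):
    """Build a minimal pod manifest; request EPC memory only for enclave workloads."""
    container = {"name": name, "image": image}
    if epc_mib is not None:
        container["resources"] = {"limits": {SGX_EPC_RESOURCE: epc_mib}}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {"containers": [container], "restartPolicy": "Never"},
    }


# Hypothetical images: one enclave-aware workload and one ordinary container.
enclave_pod = make_pod("enclave-app", "example.azurecr.io/enclave-app:1.0", epc_mib=10)
standard_pod = make_pod("web-frontend", "example.azurecr.io/web-frontend:1.0")
print(json.dumps([enclave_pod, standard_pod], indent=2))
```

Because the enclave memory request is expressed as an ordinary resource limit, only the first pod needs a confidential node, while the second can be scheduled on any node in the cluster.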

Together with the Azure Stack Hub team, we are starting a journey to explore the ways our customers and partners use, deploy, manage, and build solutions on the Azure Stack Hub platform. Together with Tiberiu Radu (Azure Stack Hub PM, @rctibi), we created a new Azure Stack Hub Partner solution video series to show how our customers and partners use Azure Stack Hub in their hybrid cloud environments. In this series, we will meet customers that are deploying Azure Stack Hub for their own internal departments, partners that run managed services on behalf of their customers, and a wide range in between, as we look at how our various partners are using Azure Stack Hub to bring the power of the cloud on-premises.

Datacom is an Azure Stack Hub partner that provides both multi-tenant and dedicated environments. They focus on providing value to their customers and meeting them where they are by offering managed services as well as complete solutions. Datacom serves a range of customers, from large government agencies to enterprises. Join the Datacom team as we explore how they provide value and solve customer issues using Azure and Azure Stack Hub.

Links mentioned through the video:

I hope this video was helpful and you enjoyed watching it. If you have any questions, feel free to leave a comment below. If you want to learn more about the Microsoft Azure Stack portfolio, check out my blog post.

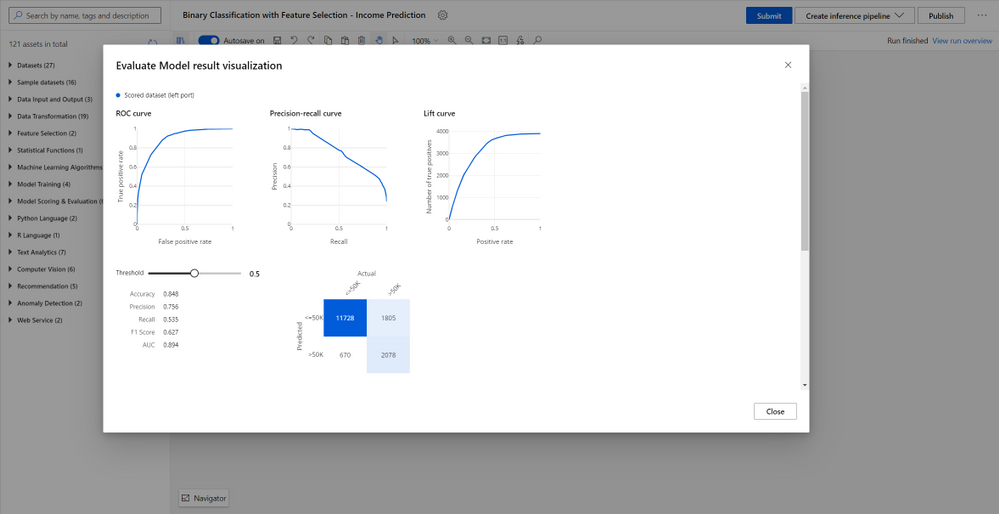

At Microsoft Ignite, we announced the general availability of Azure Machine Learning designer, the drag-and-drop workflow capability in Azure Machine Learning studio which simplifies and accelerates the process of building, testing, and deploying machine learning models for the entire data science team, from beginners to professionals. We launched the preview in November 2019, and we have been excited with the strong customer interest. We listened to our customers and appreciated all the feedback. Your responses helped us reach this milestone. Thank you.

“By using Azure Machine Learning designer we were able to quickly release a valuable tool built on machine learning insights, that predicted occupancy in trains, promoting social distancing in the fight against Covid-19.” – Steffen Pedersen, Head of AI and advanced analytics, DSB (Danish State Railways).

Artificial intelligence (AI) is gaining momentum in all industries. Enterprises today are adopting AI at a rapid pace, with people of different skill sets involved: business analysts, developers, data scientists, and machine learning engineers. The drag-and-drop experience in Azure Machine Learning designer can help your entire data science team speed up machine learning model building and deployment. Specifically, it is tailored for:

- Data scientists who are more familiar with visual tools than coding.

- Users who are new to machine learning and want to learn it in an intuitive way.

- Machine learning experts who are interested in rapid prototyping.

- Machine learning engineers who need a visual workflow to manage model training and deployment.

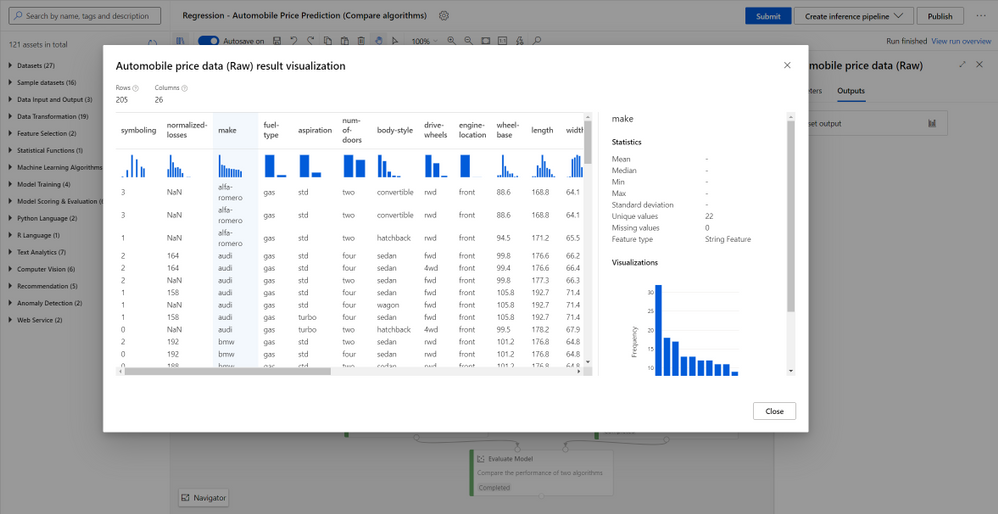

Connect and prepare data with ease

Azure Machine Learning designer is fully integrated with the Azure Machine Learning dataset service for versioning, tracking, and data monitoring. You can import data by dragging and dropping a registered dataset from the asset library, by connecting to various data sources (including HTTP URL, Azure Blob, Azure Data Lake, and Azure SQL), or by uploading a local file with the Import Data module. You can right-click to preview and visualize the data profile, and preprocess data using a rich set of built-in modules for data transformation and feature engineering.

Build and train models with no-code/low-code

In Azure Machine Learning designer, you can build and train machine learning models with state-of-the-art machine learning and deep learning algorithms, including those for traditional machine learning, computer vision, text analytics, recommendation, and anomaly detection. You can also use customized Python and R code to build your own models. Each module can be configured to run on a different Azure Machine Learning compute cluster, so data scientists don’t need to worry about scaling limitations and can focus on their training work.

Validate and evaluate model performance

You can evaluate and compare your trained model performance with a few clicks using the built-in evaluate model modules, or use execute Python/R script modules to log the customized metrics/images. All metrics are stored in run history and can be compared among different runs in the studio UI.

Root cause analysis with immersed debugging experience

While interactively running machine learning pipelines, you can perform quick root cause analysis: use graph search and navigation to quickly narrow down to the failed step, preview logs and outputs for debugging and troubleshooting without losing the context of the pipeline, and find snapshots to trace the scripts and dependencies used to run the pipeline.

Deploy models and publish endpoints with a few clicks

Data scientists and machine learning engineers can deploy models for real-time and batch inferencing as versioned REST endpoints to their own environment. You don’t need deep knowledge of coding, model management, or container services, because scoring files and the deployment image are generated automatically with a few clicks. Models and other assets can also be registered in the central registry for MLOps tracking, lineage, and automation.
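
To illustrate what consuming such a REST endpoint could look like from a client, the sketch below builds (but does not send) a scoring request using only the Python standard library. The payload shape with `Inputs`, `WebServiceInput0`, and `GlobalParameters`, the bearer-token header, the URL, the key, and the feature names are all assumptions for illustration; the deployed endpoint's own consumption details are the authoritative source.

```python
import json
import urllib.request


def build_scoring_request(endpoint_url, api_key, rows):
    """Build (but do not send) a POST request for a real-time scoring endpoint."""
    # Assumed payload shape; confirm it against the deployed endpoint's details.
    body = {"Inputs": {"WebServiceInput0": rows}, "GlobalParameters": {}}
    return urllib.request.Request(
        endpoint_url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


request = build_scoring_request(
    "https://example.invalid/score",         # hypothetical endpoint URL
    "REPLACE_WITH_KEY",                      # hypothetical key
    [{"feature_a": 1.0, "feature_b": "x"}],  # hypothetical input row
)
print(request.get_method(), request.full_url)
# To actually call the endpoint: urllib.request.urlopen(request).read()
```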

Get started today

Get started today with your new Azure free trial, and learn more about Azure Machine Learning designer.

Millions of people use Microsoft Teams as their secure, productive, and mobile collaboration and communication tool. Today, @Pete Bryan from the Microsoft Threat Intelligence Center and @Hesham Saad from Microsoft CyberSecurity Global Black Belt detail the Microsoft Teams schema and data structure in Azure Sentinel. Let’s get started!

Microsoft Teams now has an official connector in Azure Sentinel:

- Easy deployment (in a single checkbox)

- Data goes into the OfficeActivity table

- The activity logs are free

- Keep the custom connector only for other workloads

Here’s a quick demonstration:

You can also check out a couple of hunting queries that have been shared on the Azure Sentinel GitHub.

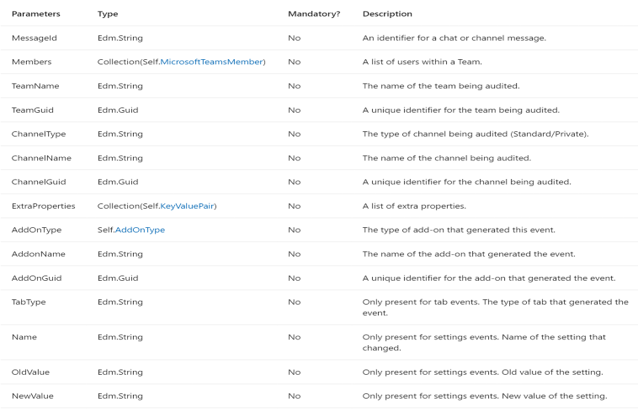

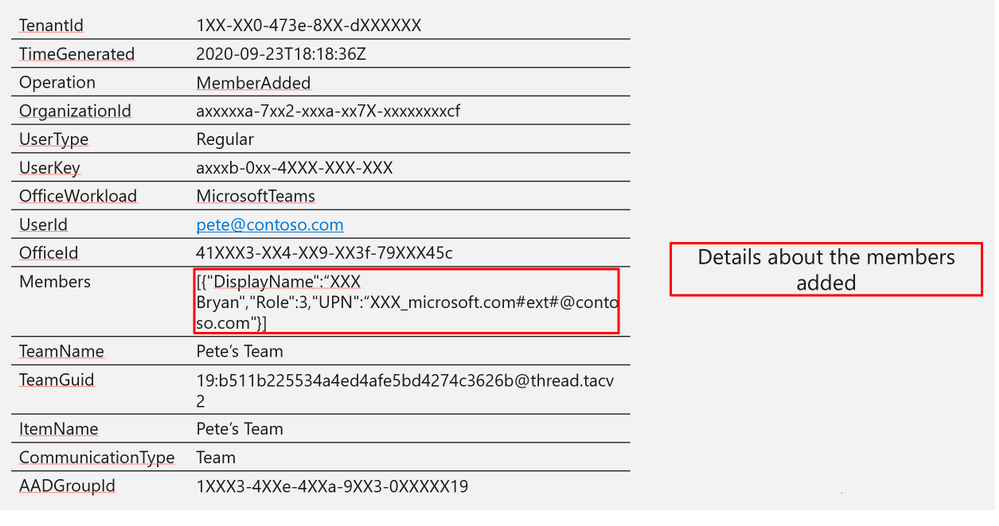

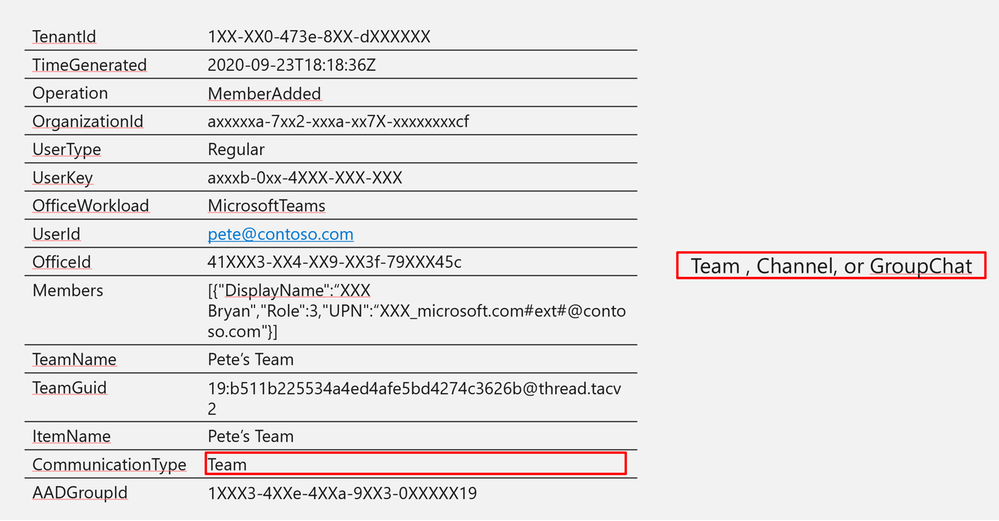

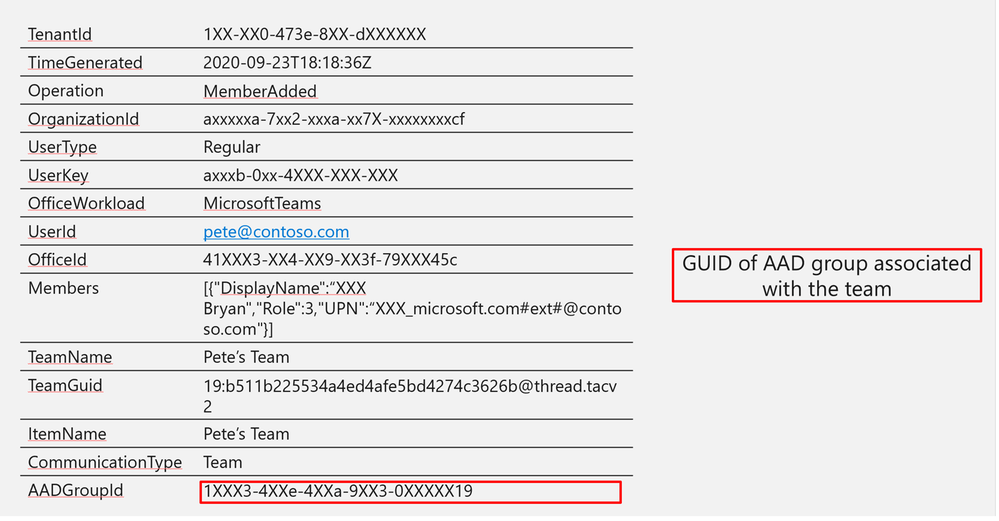

Let’s now look at the Microsoft Teams schemas:

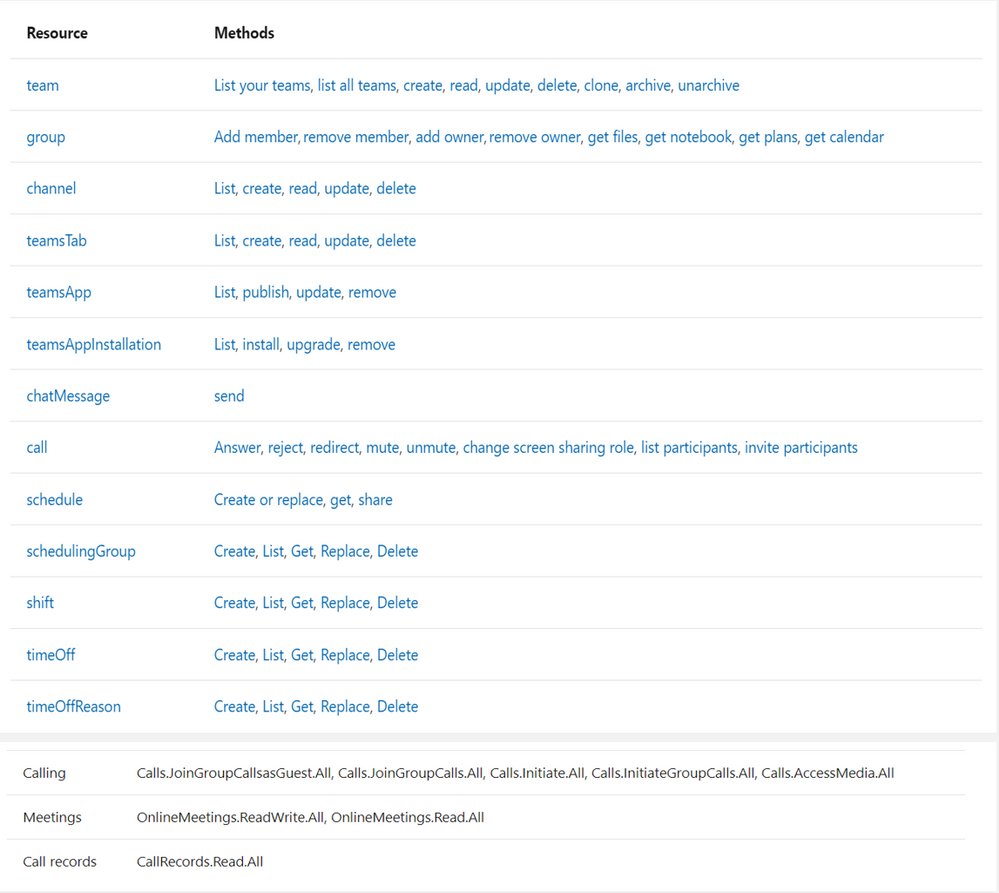

- Office 365 Management API

What’s in Teams Logs:

-

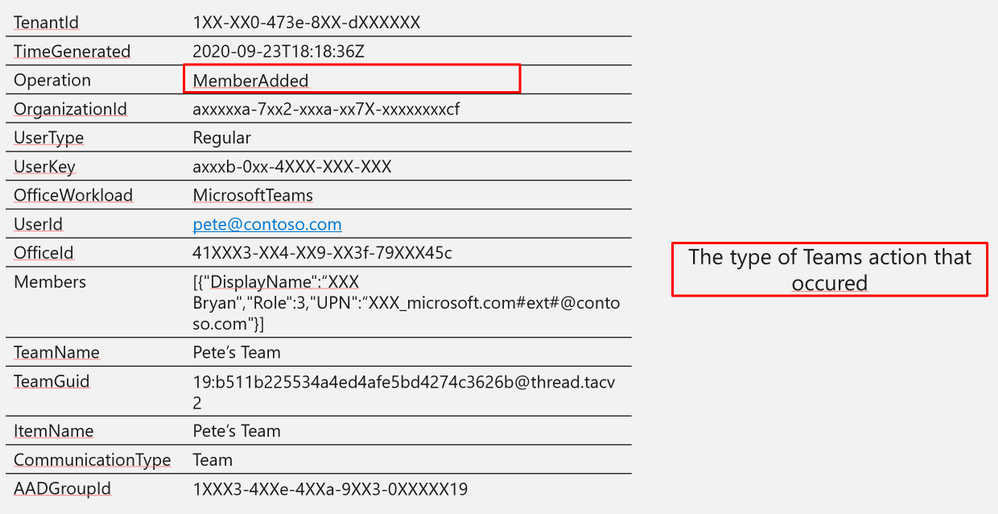

TeamsSessionStarted – Sign-in to Teams Client (except token refresh)

-

MemberAdded/MemberRemoved – User added/removed to team or group chat

-

MemberRoleChanged – User’s permissions changed 1 = Owner, 2 = Member, 3 = Guest

-

ChannelAdded/ChannelRemoved – A channel is added/removed to a team

-

TeamCreated/Deleted – A whole team is created or deleted

-

TeamsSettingChanged – A change is made to a team setting (e.g. make it public/private)

-

TeamsTenantSettingChanged – A change is made at a tenant level (e.g. enable product)

+ Bots, Apps, Tabs

https://docs.microsoft.com/en-us/microsoftteams/audit-log-events

-

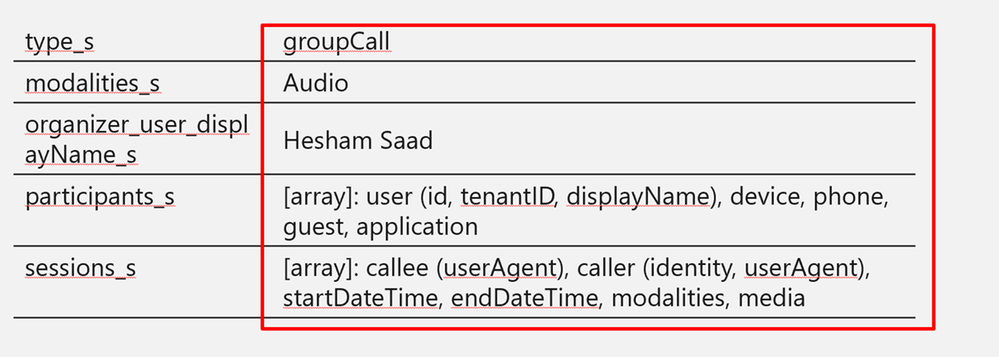

callRecord: Represents a single peer-to-peer call or a group call between multiple participants, sometimes referred to as an online meeting.

-

onlineMeeting: Contains information about the meeting, including the URL used to join a meeting, the attendees list, and the description.

-

callRecord/organizer – the organizing party’s identity

-

callRecord/participants – list of distinct identities involved in the call

-

callRecord/type – type of the call (group call, peer to peer,…etc)

-

callRecord/modalities – list of all modalities (audio, video, data, screen sharing, …etc)

-

callRecord/ (id – startDateTime – endDateTime – joinWebUrl )

-

onlineMeeting/ (subject, chatInfo, participants, startDateTime, endDateTime)

https://docs.microsoft.com/en-us/graph/api/resources/communications-api-overview?view=graph-rest-1.0

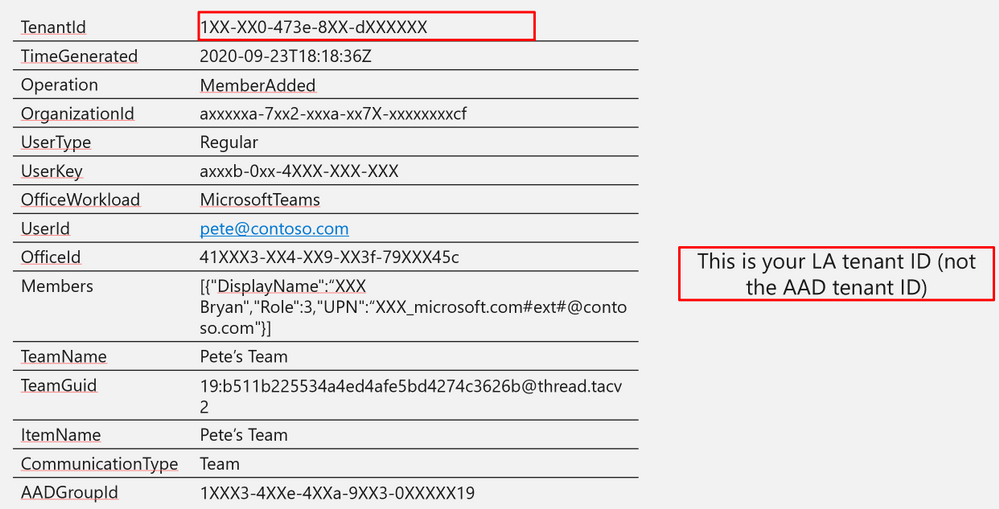

Log Structure:

|

TenantId

|

1XX-XX0-473e-8XX-dXXXXXX

|

|

TimeGenerated

|

2020-09-23T18:18:36Z

|

|

Operation

|

MemberAdded

|

|

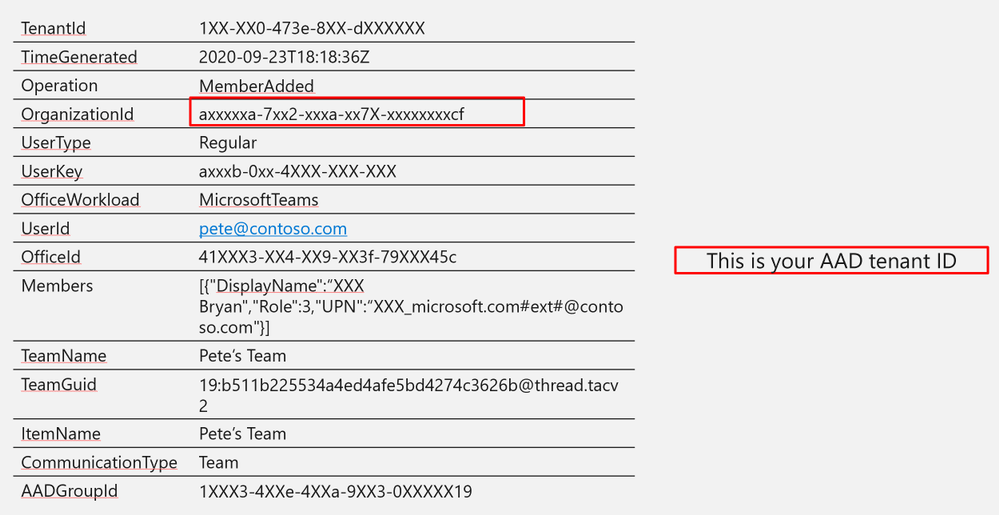

OrganizationId

|

axxxxxa-7xx2-xxxa-xx7X-xxxxxxxxcf

|

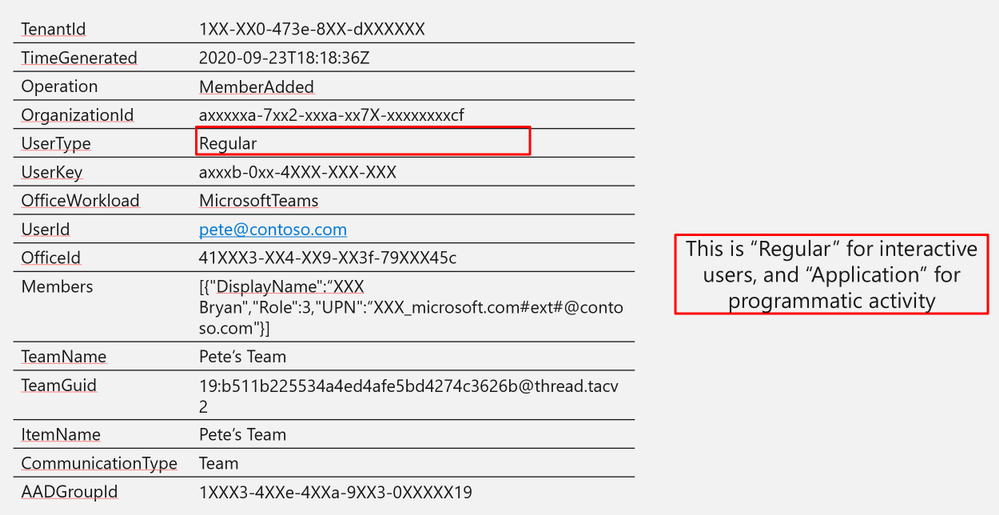

|

UserType

|

Regular

|

|

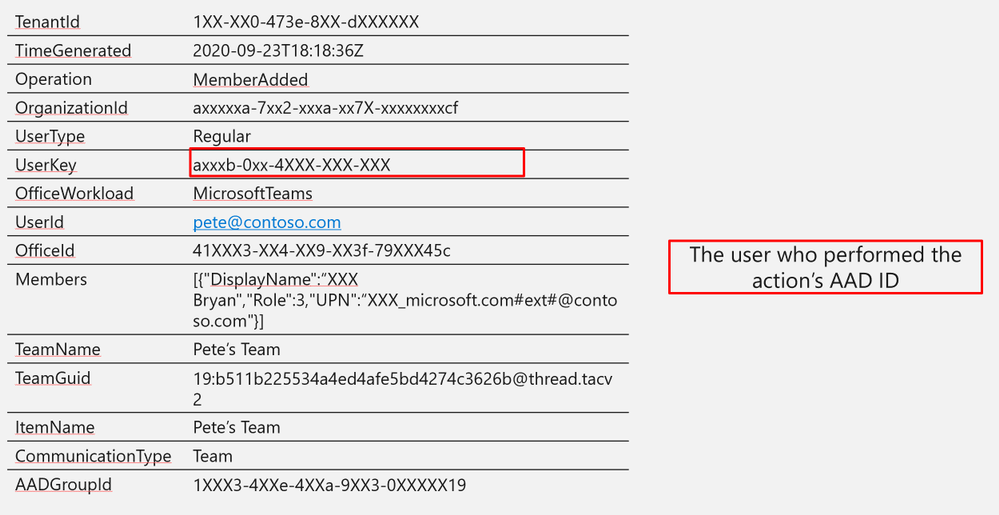

UserKey

|

axxxb-0xx-4XXX-XXX-XXX

|

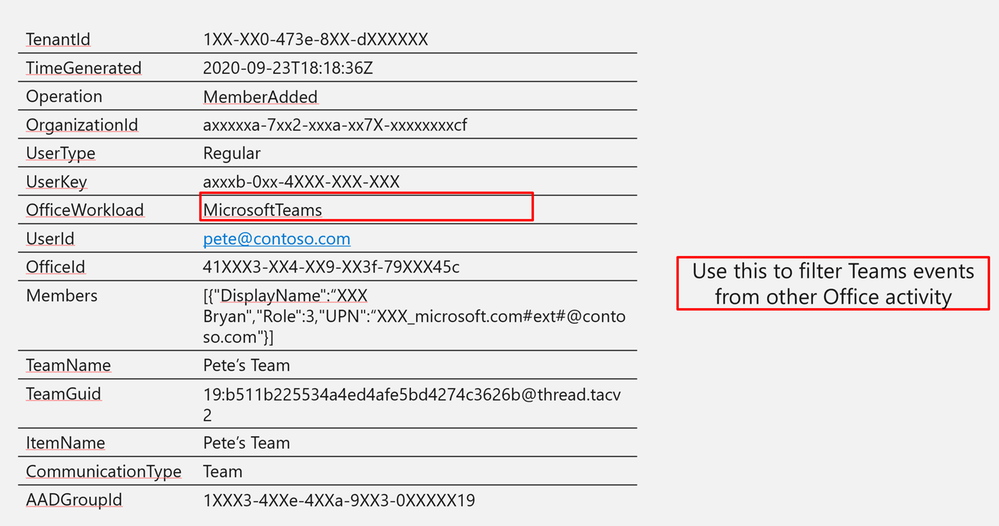

|

OfficeWorkload

|

MicrosoftTeams

|

|

UserId

|

pete@contoso.com

|

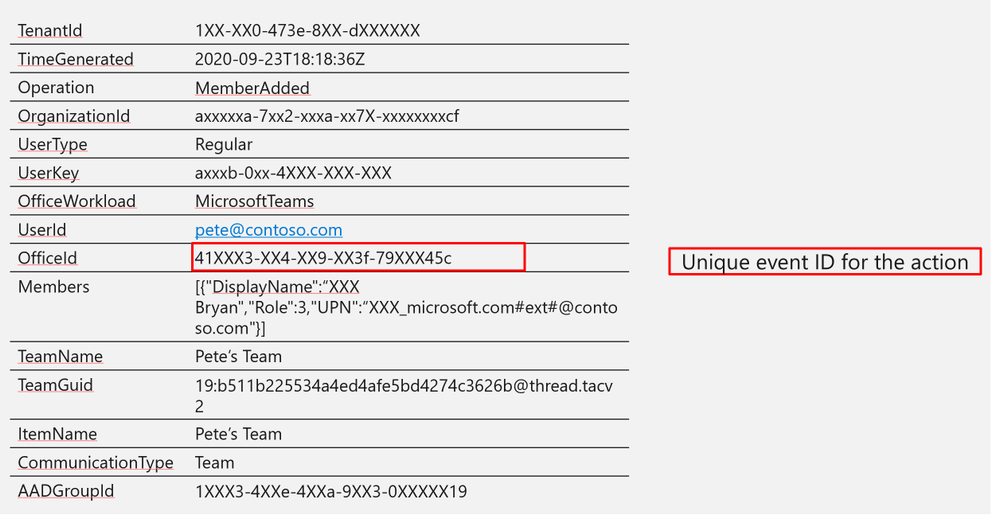

|

OfficeId

|

41XXX3-XX4-XX9-XX3f-79XXX45c

|

|

Members

|

[{“DisplayName”:“XXX Bryan”,”Role”:3,”UPN”:“XXX_microsoft.com#ext#@contoso.com”}]

|

|

TeamName

|

Pete’s Team

|

|

TeamGuid

|

19:b511b225534a4ed4afe5bd4274c3626b@thread.tacv2

|

|

ItemName

|

Pete’s Team

|

|

CommunicationType

|

Team

|

|

AADGroupId

|

1XXX3-4XXe-4XXa-9XX3-0XXXXX19

|
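
As a small illustration of working with this log structure, the sketch below parses a `Members` value like the one shown above and translates the numeric `Role` using the mapping listed for MemberRoleChanged (1 = Owner, 2 = Member, 3 = Guest). It assumes the field arrives as a JSON string with straight quotes and that the same role mapping applies to `Members`; the `describe_members` helper is hypothetical.

```python
import json

# Role codes as listed for MemberRoleChanged in the article.
ROLE_NAMES = {1: "Owner", 2: "Member", 3: "Guest"}


def describe_members(members_json: str) -> list:
    """Return (UPN, role name) pairs from a Members field value."""
    members = json.loads(members_json)
    return [(m["UPN"], ROLE_NAMES.get(m["Role"], "Unknown")) for m in members]


members_field = (
    '[{"DisplayName":"XXX Bryan","Role":3,'
    '"UPN":"XXX_microsoft.com#ext#@contoso.com"}]'
)
for upn, role in describe_members(members_field):
    print(f"{upn}: {role}")
```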

Log Structure (additional fields)

For a step-by-step guide on how to ingest CallRecords-Sessions Teams data into Azure Sentinel via the Microsoft Graph API, check out the blog post “Secure your Calls- Monitoring Microsoft TEAMS CallRecords Activity Logs using Azure Sentinel”.

Other Logs:

AAD sign-ins for Teams (SigninLogs):

    SigninLogs
    | where AppDisplayName contains "Microsoft Teams"

Files uploaded via Teams (OfficeActivity):

    OfficeActivity
    | where SourceRelativeUrl contains "Microsoft Teams Chat Files"
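
KQL’s `contains` operator is a case-insensitive substring match. The following sketch mirrors the two filters above on records held in memory, which can be handy for checking filter logic offline. The sample rows are invented for illustration; only the two filtered column names come from the queries above, and `kql_contains` is a hypothetical helper.

```python
def kql_contains(value, needle: str) -> bool:
    """Mimic KQL `contains`: case-insensitive substring match; missing values never match."""
    return value is not None and needle.lower() in str(value).lower()


# Invented sample rows standing in for SigninLogs and OfficeActivity exports.
signin_logs = [
    {"AppDisplayName": "Microsoft Teams Web Client"},
    {"AppDisplayName": "Azure Portal"},
]
office_activity = [
    {"SourceRelativeUrl": "Documents/Microsoft Teams Chat Files"},
    {"SourceRelativeUrl": "Shared Documents/General"},
]

teams_signins = [
    row for row in signin_logs
    if kql_contains(row.get("AppDisplayName"), "Microsoft Teams")
]
teams_uploads = [
    row for row in office_activity
    if kql_contains(row.get("SourceRelativeUrl"), "Microsoft Teams Chat Files")
]
print(len(teams_signins), len(teams_uploads))
```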

Hunting:

Detection:

- Difficult due to usage variations between orgs

- SigninLogs analytics will protect against a lot of common attack types

- New external organization hunting query is a good candidate

SOAR:

- Plenty of actions in Azure Sentinel Playbooks – LogicApps controls for Teams

- Use this to get additional context for alerts

- You can also post messages to Teams

Get started today!

We encourage you to try it now and start hunting in your environment.

You can also contribute new connectors, workbooks, analytics and more in Azure Sentinel. Get started now by joining the Azure Sentinel Threat Hunters GitHub community.
