by Contributed | Dec 6, 2020 | Azure, Microsoft, Technology

This article is contributed. See the original author and article here.

Final Update: Sunday, 06 December 2020 19:44 UTC

We’ve confirmed that all systems are back to normal with no customer impact as of 12/6, 19:30 UTC. Our logs show the incident started on 12/6, 18:08 UTC and that during the 1 hour 22 minutes that it took to resolve the issue, customers in Australia Central may have experienced data access issues, which could have resulted in missed or false alerts.

- Root Cause: The failure was caused by a failed upgrade to a backend service. The system was rolled back to mitigate the issue.

- Incident Timeline: 1 hour & 22 minutes – 12/6, 18:08 UTC through 12/6, 19:30 UTC

We understand that customers rely on Azure Log Analytics as a critical service and apologize for any impact this incident caused.

-Ian

Update: Sunday, 06 December 2020 19:17 UTC

Root cause has been isolated to an update to a backend service in Australia Central, which is impacting data access. To address this issue, we are rolling back the update. Some customers may experience data access issues along with failed or misfired alerts.

- Work Around: none

- Next Update: Before 12/06 22:30 UTC

-Ian

by Contributed | Dec 5, 2020 | Azure, Microsoft, Technology

Final Update: Saturday, 05 December 2020 14:50 UTC

We’ve confirmed that all systems are back to normal with no customer impact as of 12/05, 14:14 UTC. Our logs show the incident started on 12/05, 12:02 UTC and that during the 2 hours & 2 minutes that it took to resolve the issue, customers using Application Insights in US Gov regions might have experienced intermittent metric data gaps and incorrect alert activations.

- Root Cause: The failure was due to a configuration issue in Application Insights.

- Incident Timeline: 2 Hours & 2 minutes – 12/05, 12:02 UTC through 12/05, 14:14 UTC

We understand that customers rely on Application Insights as a critical service and apologize for any impact this incident caused.

-Deepika

by Contributed | Dec 4, 2020 | Azure, Microsoft, Technology

Many developers want to stop their MySQL instances over the weekend, or at the end of the business day once development or testing is done, to stop billing and save cost.

Azure Database for MySQL Single Server and Flexible Server (in Preview) support stopping and starting a standalone MySQL server. These operations are not supported for servers involved in replication through the read replica feature. By design, once you stop the server you cannot run any other management operation on it.

In this blog post, I will show how you can use a PowerShell Automation runbook to stop or start your Azure Database for MySQL Single Server automatically on a time-based schedule. While the example below is for Single Server, the concept can easily be extended to the Flexible Server deployment option once PowerShell support is available.

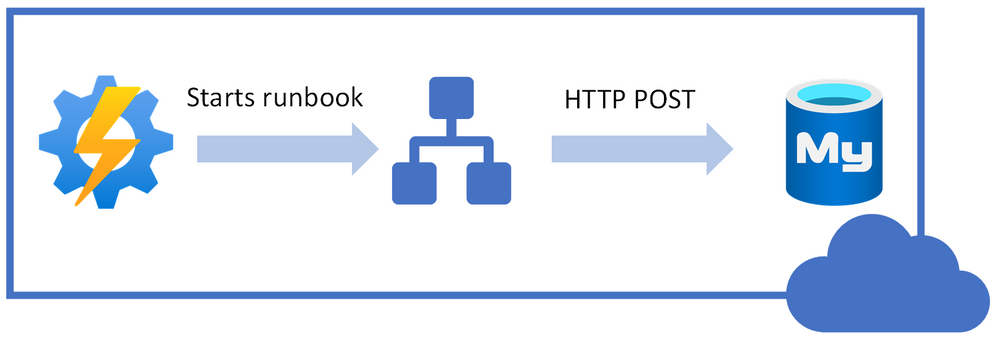

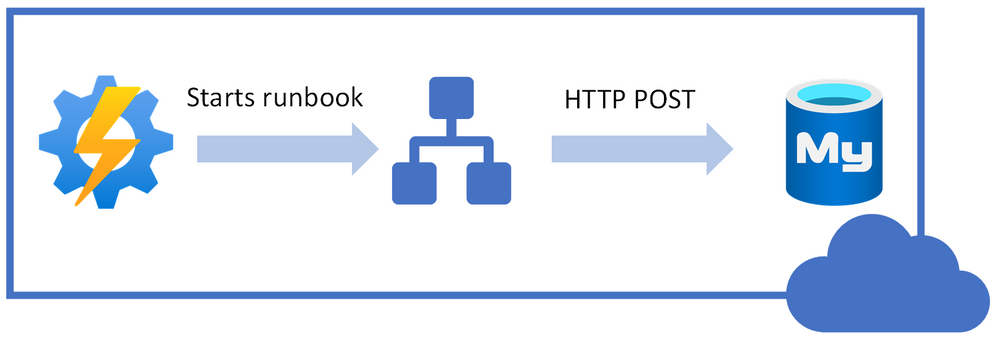

The following is the high-level idea of performing stop/start on Azure Database for MySQL Single Server using a REST API call from a PowerShell runbook.

I will be using an Azure Automation account to schedule the runbook that stops or starts our Azure Database for MySQL Single Server.
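As a minimal sketch of that idea, the stop and start operations are POST requests against an action path under the server resource. The helper below only builds the request URI; the function name `Get-MySqlServerActionUri` and the placeholder subscription, resource group, and server values are assumptions made for illustration and are not part of the original runbook. The URI shape and `api-version` are taken from the script later in this post.

```powershell
# Hypothetical helper (illustration only): builds the management URI for a
# stop or start request against an Azure Database for MySQL Single Server.
function Get-MySqlServerActionUri {
    param(
        [Parameter(Mandatory = $true)] [string] $SubscriptionId,
        [Parameter(Mandatory = $true)] [string] $ResourceGroupName,
        [Parameter(Mandatory = $true)] [string] $ServerName,
        # Only the two actions used in this post are accepted
        [Parameter(Mandatory = $true)] [ValidateSet('stop', 'start')] [string] $Action
    )

    # Same URI shape and api-version as the runbook in this post
    $path = "/subscriptions/$SubscriptionId/resourceGroups/$ResourceGroupName" +
            "/providers/Microsoft.DBForMySQL/servers/$ServerName/$($Action.ToLower())"

    return "https://management.azure.com$path" + '?api-version=2020-01-01'
}

# Placeholder values, not real resources
$uri = Get-MySqlServerActionUri -SubscriptionId '00000000-0000-0000-0000-000000000000' `
    -ResourceGroupName 'my-rg' -ServerName 'my-mysql' -Action 'stop'

Write-Output $uri
```

In the runbook, a URI like this is passed to `Invoke-RestMethod -Method POST` together with a bearer token header. Restricting the action with `ValidateSet` is one way to keep a mistyped value from reaching the API.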

You can use the steps below to achieve this task:

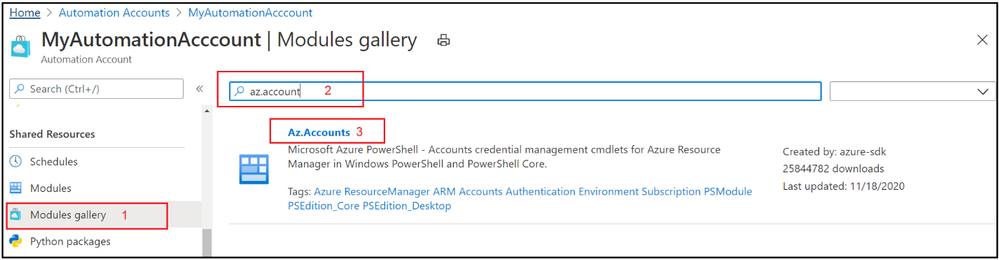

1. Navigate to the Azure Automation Account and make sure that you have the Az.Accounts module imported. If it’s not available under Modules, import the module first:

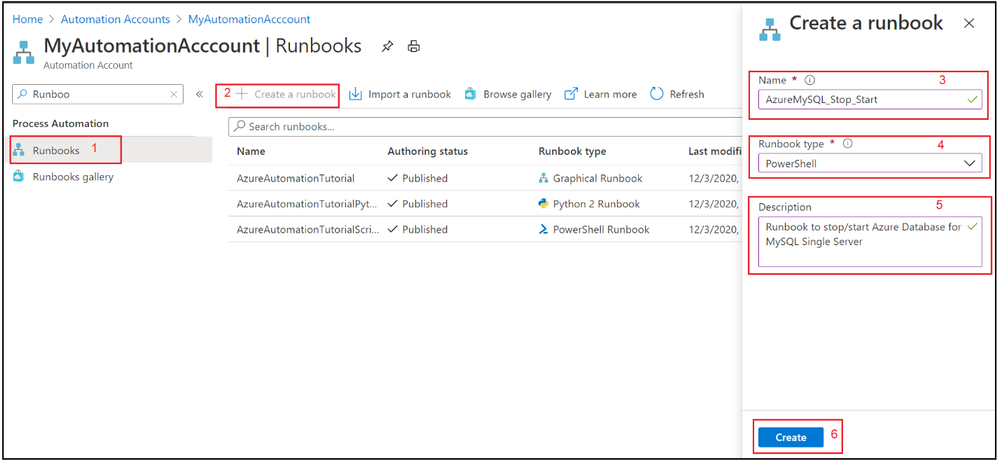

2. Select the Runbooks blade and create a new PowerShell runbook.

3. Copy and paste the following PowerShell code into the newly created runbook.

# Environment parameters

param(

[parameter(Mandatory=$true)]

[string] $resourceGroupName,

[parameter(Mandatory=$true)]

[string] $serverName,

[parameter(Mandatory=$true)]

[string] $action

)

filter timestamp {"[$(Get-Date -Format G)]: $_"}

Write-Output "Script started." | timestamp

#$VerbosePreference = "Continue" ##enable this for verbose logging

$ErrorActionPreference = "Stop"

#Authenticate with Azure Automation Run As account (service principal)

$connectionName = "AzureRunAsConnection"

try

{

# Get the connection "AzureRunAsConnection "

$servicePrincipalConnection=Get-AutomationConnection -Name $connectionName

"Logging in to Azure..."

Add-AzAccount `

-ServicePrincipal `

-TenantId $servicePrincipalConnection.TenantId `

-ApplicationId $servicePrincipalConnection.ApplicationId `

-CertificateThumbprint $servicePrincipalConnection.CertificateThumbprint | Out-Null

}

catch {

if (!$servicePrincipalConnection)

{

$ErrorMessage = "Connection $connectionName not found."

throw $ErrorMessage

} else{

Write-Error -Message $_.Exception

throw $_.Exception

}

}

Write-Output "Authenticated with Automation Run As Account." | timestamp

$startTime = Get-Date

Write-Output "Azure Automation local time: $startTime." | timestamp

# Get the authentication token

$azContext = Get-AzContext

$azProfile = [Microsoft.Azure.Commands.Common.Authentication.Abstractions.AzureRmProfileProvider]::Instance.Profile

$profileClient = New-Object -TypeName Microsoft.Azure.Commands.ResourceManager.Common.RMProfileClient -ArgumentList ($azProfile)

$token = $profileClient.AcquireAccessToken($azContext.Subscription.TenantId)

$authHeader = @{

'Content-Type'='application/json'

'Authorization'='Bearer ' + $token.AccessToken

}

Write-Output "Authentication Token acquired." | timestamp

## Invoke the REST API call for the requested action

# Accept only the two supported actions so that a mistyped value never reaches the API

if ($action -notin @('stop', 'start'))

{

throw "Unsupported action '$action'. Use 'stop' or 'start'."

}

# Build the URI from the subscription of the current context instead of a hard-coded subscription ID

$restUri='https://management.azure.com/subscriptions/'+$azContext.Subscription.Id+'/resourceGroups/'+$resourceGroupName+'/providers/Microsoft.DBForMySQL/servers/'+$serverName+'/'+$action+'?api-version=2020-01-01'

$response = Invoke-RestMethod -Uri $restUri -Method POST -Headers $authHeader

if ($action -eq 'stop')

{

Write-Output "$serverName is getting stopped." | timestamp

}

else

{

Write-Output "$serverName is starting." | timestamp

}

Write-Output "Script finished." | timestamp

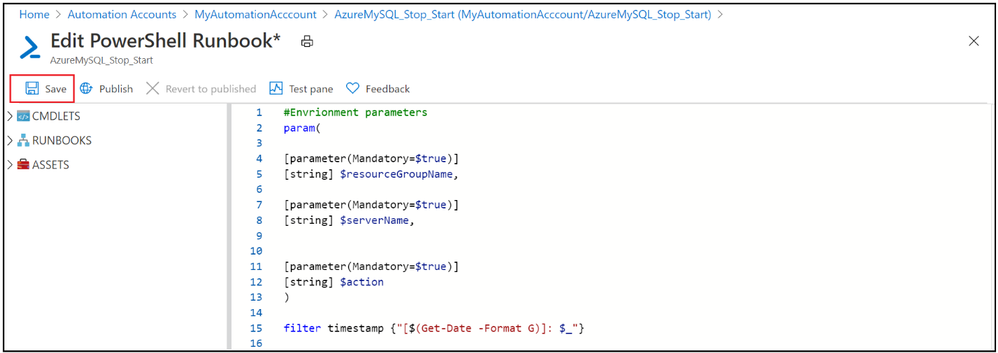

4. Save the runbook.

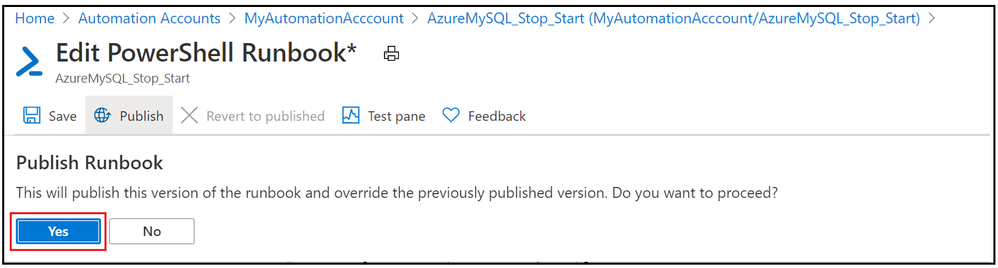

5. Publish the PowerShell runbook

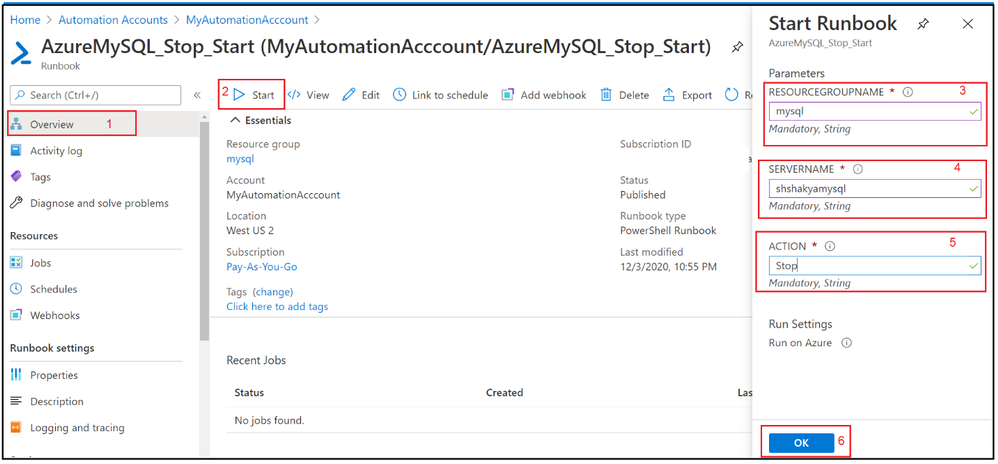

6. Test the PowerShell runbook by entering mandatory field.

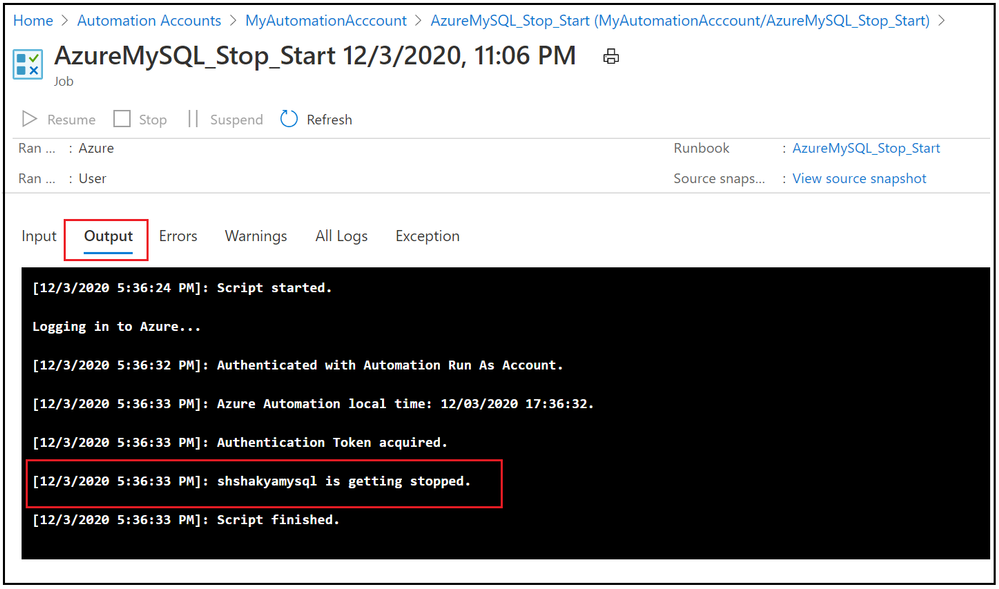

7. Verify the job output:

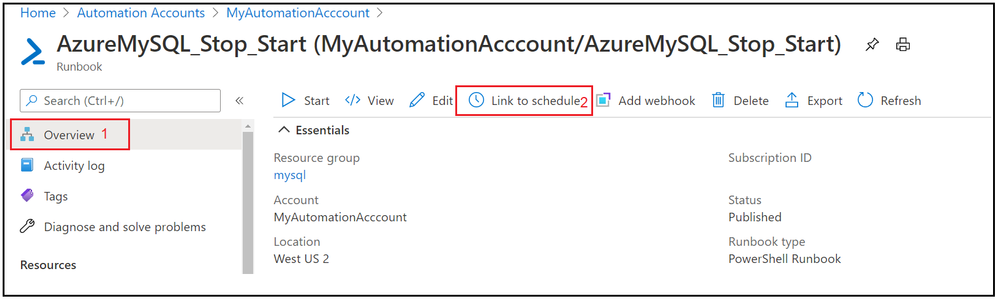

8. Now that the runbook works as expected, let’s add schedules to stop and start Azure Database for MySQL Single Server over the weekend. Go to the Overview tab and click Link to schedule.

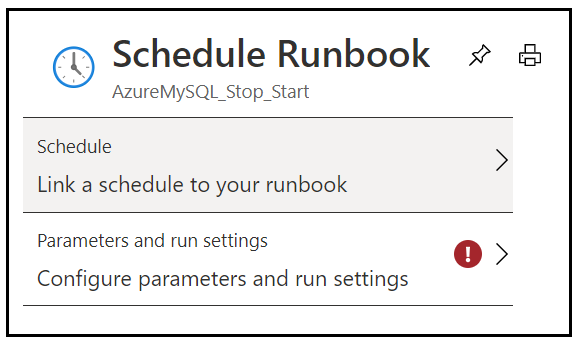

9. Add schedule and runbook parameters.

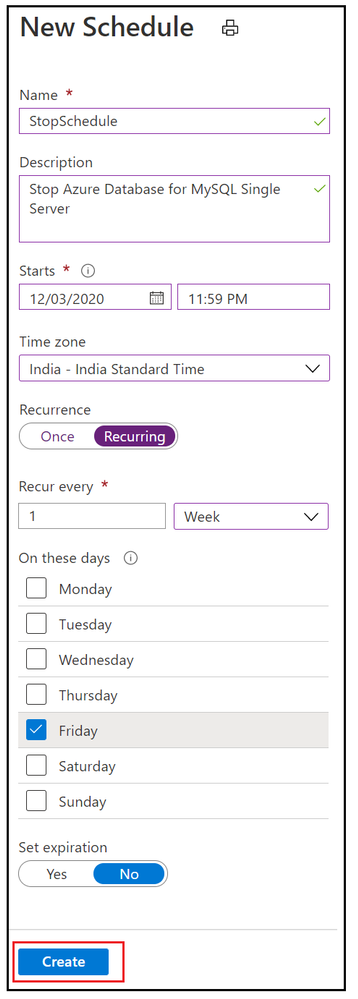

10. Add a weekly stop schedule for every Friday night.

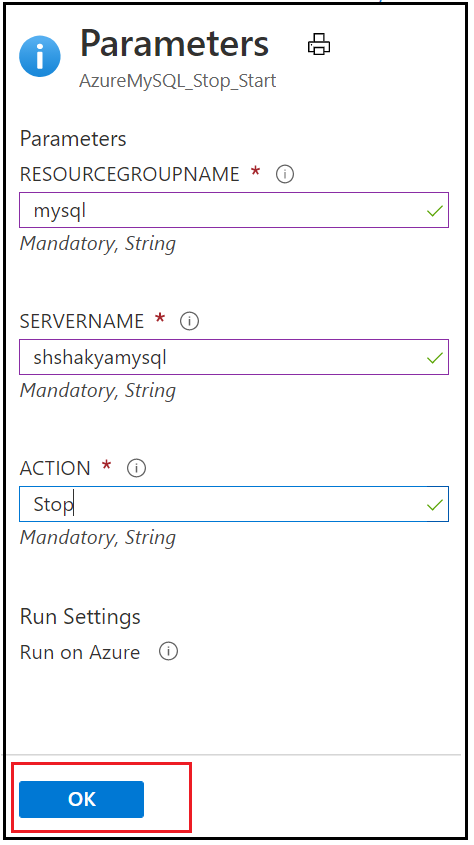

11. Add the parameters to stop Azure Database for MySQL Single Server.

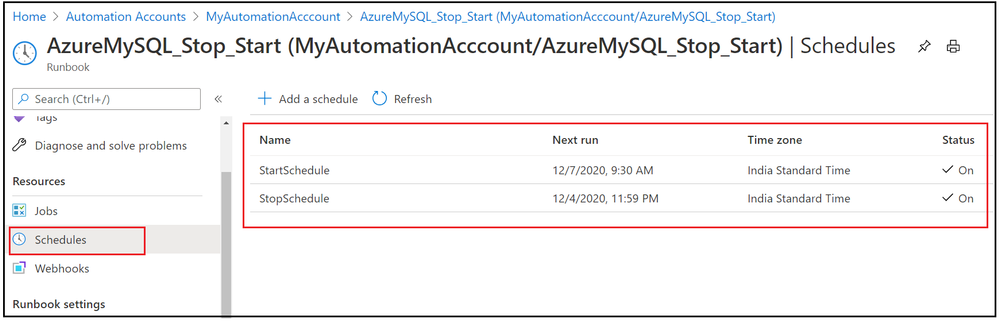

12. Similarly, add a weekly schedule to start Azure Database for MySQL Single Server every Monday morning, and verify both schedules by navigating to the runbook’s Schedules blade.

In this activity, we have added two schedules that stop and start Azure Database for MySQL Single Server based on the given action.

I hope this helps you save cost, and that you enjoyed learning about it!
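The two schedules can also be created from PowerShell rather than the portal. The sketch below is one possible way to do it, assuming the Az.Automation module is installed and the session is already signed in. The function names `Get-NextWeekday` and `Register-WeekendSchedules`, the schedule names, and the chosen times (Friday 22:00 and Monday 07:00, local time) are hypothetical and only illustrate the steps above.

```powershell
# Hypothetical helper: next occurrence of a weekday at a given hour, strictly after $From
function Get-NextWeekday {
    param(
        [Parameter(Mandatory = $true)] [System.DayOfWeek] $Day,
        [Parameter(Mandatory = $true)] [ValidateRange(0, 23)] [int] $Hour,
        [datetime] $From = (Get-Date)
    )

    # Days until the requested weekday, 0 when it is today
    $daysAhead = (([int]$Day - [int]$From.DayOfWeek) + 7) % 7
    $candidate = $From.Date.AddDays($daysAhead).AddHours($Hour)

    # If today's slot has already passed, move to next week
    if ($candidate -le $From) { $candidate = $candidate.AddDays(7) }
    return $candidate
}

# Hypothetical wrapper: creates a weekly stop and a weekly start schedule and
# links both to the runbook with the parameters the runbook expects.
function Register-WeekendSchedules {
    param(
        [Parameter(Mandatory = $true)] [string] $AutomationResourceGroup,
        [Parameter(Mandatory = $true)] [string] $AutomationAccountName,
        [Parameter(Mandatory = $true)] [string] $RunbookName,
        [Parameter(Mandatory = $true)] [string] $ServerResourceGroup,
        [Parameter(Mandatory = $true)] [string] $ServerName
    )

    # Assumed times: stop on Friday 22:00, start on Monday 07:00
    $plans = @(
        @{ Name = 'StopMySqlFridayNight';    Day = [System.DayOfWeek]::Friday; Hour = 22; Action = 'stop' },
        @{ Name = 'StartMySqlMondayMorning'; Day = [System.DayOfWeek]::Monday; Hour = 7;  Action = 'start' }
    )

    foreach ($plan in $plans) {
        New-AzAutomationSchedule -ResourceGroupName $AutomationResourceGroup `
            -AutomationAccountName $AutomationAccountName -Name $plan.Name `
            -StartTime (Get-NextWeekday -Day $plan.Day -Hour $plan.Hour) `
            -WeekInterval 1 -DaysOfWeek $plan.Day | Out-Null

        Register-AzAutomationScheduledRunbook -ResourceGroupName $AutomationResourceGroup `
            -AutomationAccountName $AutomationAccountName -RunbookName $RunbookName `
            -ScheduleName $plan.Name -Parameters @{
                resourceGroupName = $ServerResourceGroup
                serverName        = $ServerName
                action            = $plan.Action
            } | Out-Null
    }
}
```

Calling `Register-WeekendSchedules` with your own Automation account, runbook, and server names would create both schedules in one go. The schedule start time must lie in the future, which is why the helper rolls over to the following week when the requested slot has already passed.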

by Contributed | Dec 4, 2020 | Azure, Microsoft, Technology

Sarah Lean

Sarah is a Cloud Advocate for Microsoft. In this Discover STEM video, Sarah talks about cloud computing, explaining the basic principles behind the technology using terms like servers, data centres and computing power. She covers some advantages and disadvantages of the technology and also provides examples of how industry might use cloud technology in the future.

A Gentle Introduction to Using a Docker Container as a Dev Environment | CSS-Tricks

Burke Holland

Sarcasm disclaimer: This article is mostly sarcasm. I do not think that I actually speak for Dylan Thomas and I would never encourage you to foist a light

Green Energy Efficient Progressive Web Apps | Sustainable Software

Asim Hussain

As a web developer, can we adjust our code to participate in the global effort to reduce the carbon footprint? PWAs offer some solutions

How to Monitor an Azure virtual machine with Azure Monitor

Thomas Maurer

Here is how to use Azure Monitor to collect and analyze monitoring data from Azure virtual machines to maintain their health. Virtual machines can be monitor…

Working with Git Branches!

Sarah Lean

Let’s get to grips with Git Branches

5 things you should know about Real-Time Analytics | A Cloud Guru

Adi Polak

Running analytics on real-time data is a challenge many data engineers are facing today. But not all analytics can be done in real time! Many are dependent on the volume of the data and the processing requirements. Even logic conditions are becoming a bottleneck. For example, think about join operations on huge tables with more […]

AzUpdate: Azure portal updates, ARM Template support for file share backup and more

Anthony Bartolo

It might be snowing in parts of the Northern Hemisphere, but we won’t let that stop us from sharing Azure news with you. News covered this week includes: New Azure Portal updates for November 2020, Azure Resource Manager template support for Azure file share backup, How to use Windows Admin Center on-premises to manage Azure Windows Server VMs, Multiple new features for Azure VPN Gateway now Generally Available, and our Microsoft Learn Module of the Week.

Hybrid management. Where do I start?

Pierre Roman

Managing & maintaining servers on-premises or in multiple clouds, as well as Azure? Learn about management tools for your servers wherever they are.

#VisualizeIT: A free online series of workshops to build your visual storytelling skills!

Nitya Narasimhan

#VisualizeIT is a free online series of workshops for creative technologists, from @MSFTReactor, @azureadvocates and members of the @letssketchtech community.

Handle app button events in Microsoft Teams tabs

Waldek Mastykarz

Did you know that you can respond to a user clicking on the app button of your Microsoft Teams personal app?

Weekly Update #67 – Rebuilding laptops, filming videos and news!

Sarah Lean

In this week’s update I talk about rebuilding my laptop, talking at a user group, filming videos and the Azure news of the week. :red_circle: Azure Cloud Shell Update -…

Picking the Right Distributed Database [Create: Data]

Abhishek Gupta

“In God we trust, all others must bring data” William Edwards Deming Well…

Securing a Windows Server VM in Azure

Sonia Cuff

If you’ve built and managed Windows Servers in an on-premises environment, you may have a set of configuration steps as well as regular process and monitoring alerts, to ensure that server is as secure as possible. But if you run a Windows Server VM in Azure, apart from not having to manage the physical security of the underlying compute hardware, what on-premises concepts still apply, what may you need to alter and what capabilities of Azure should you include?

CLI for Microsoft 365 v3.3 – Microsoft 365 Developer Blog

Waldek Mastykarz

Connect to the latest conferences, trainings, and blog posts for Microsoft 365, Office client, and SharePoint developers. Join the Microsoft 365 Developer Program.

Microsoft 365 PnP Weekly – Episode 107 – Microsoft 365 Developer Blog

Waldek Mastykarz

Connect to the latest conferences, trainings, and blog posts for Microsoft 365, Office client, and SharePoint developers. Join the Microsoft 365 Developer Program.

What is Serverless SQL? And how to use it for Data Exploration | by Adi Polak | Dec, 2020 | Towards Data Science

Adi Polak

So, you are a data scientist, you work with data and need to explore it and run some analytics on the data before jumping into running extensive machine learning algorithms. According to Wikipedia…

Terraform for Java developers, part 1 of 4

Julien Dubois

An introduction to Terraform focusing on Java developers. In this first video (out of 4), we describe what Terraform is, and we fork the Spring Petclinic pro…

by Contributed | Dec 4, 2020 | Azure, Microsoft, Technology

The UP Podcast with Lisa Crosbie & Megan V. Walker

Lisa Crosbie and Megan V. Walker are Business Application MVPs. With Lisa in Australia and Megan in the United Kingdom, the pair thought it was a great idea to start a podcast together about Microsoft Business Applications, the Power Platform, Dynamics 365, and Microsoft technologies. The UP Podcast shares what is new and exciting, highlights community content, and seeks to share and learn with the audience. Follow on Twitter @LisaMCrosbie, @MeganVWalker, @the_UP_podcast.

How to automatically re-enable flow using Power Automate

Hiroaki Nagao is a Business Applications MVP from Japan. Currently working as a system administrator in an operating company, Hiroaki is a core member of the local Power Apps / Power Automate community. He is a regular blogger with more than 100 posts this year; find more on his blog or on Twitter @mofumofu_dance.

Engage Your Audience with Forms Polls in Microsoft Teams Meetings

Matti Paukkonen is an Office Apps & Services MVP and Modern Work Architect from Finland. He has more than 10 years’ experience with Microsoft collaboration solutions such as SharePoint, Microsoft Teams and Microsoft 365. He writes technical blog articles, organizes a local Teams User Group, participates in several communities and speaks at events. Follow him on Twitter @mpaukkon.

Azure Sentinel: Connecting the Enterprise Firewalls

John Joyner is an inventor, author, speaker, and professor on datacenter and enterprise cloud computing topics. John, who has been named as an MVP for the past twelve years, teaches a cloud computing management course at the University of Arkansas. For more, check out his Twitter @john_joyner

ML.NET Model Builder: Getting Started (using ASP.NET Core)

Syed Shanu is a Microsoft MVP, a two-time C# MVP and two-time Code project MVP. Syed is also an author, blogger and speaker. He’s from Madurai, India, and works as a Technical Lead in South Korea. With more than 10 years of experience with Microsoft technologies, Syed is active in the community and always happy to share his knowledge on topics related to ASP.NET, MVC, ASP.NET Core, Web API, SQL Server, UWP, and Azure, among others. You can see his contributions to MSDN and TechNet Wiki here. Follow him on Twitter @syedshanu3.

by Contributed | Dec 4, 2020 | Azure, Microsoft, Technology

Big announcements surrounding the general availability of Azure Synapse Analytics and the public preview release of the Azure Purview data catalog are covered this week on AzUpdate. Other news items covered include Azure AD Application Proxy now natively supporting apps that use header-based authentication, and the Microsoft Learn Module of the Week.

Azure Synapse now Generally Available

Solutions like data lakes and data warehouses have helped organizations collect and analyze several types of data. The process, however, created niches of expertise and specialized technology. Azure Synapse rearchitects operational and analytics data stores to take full advantage of a new, cloud-native architecture. The solution enables organizations to query data using either serverless or dedicated resources at scale while maintaining consistent tools and languages. Think of it as your organization’s single pane of glass for analyzing all its captured data. Azure Synapse combines capabilities spanning the needs of data engineering, machine learning, and BI without creating silos in processes and tools.

Further details can be found here: Harnessing the power of Azure Synapse for improved data and analytics

Details have also been shared on how Microsoft’s Modern Workplace team in partnership with CMS Medicare developed an end-to-end Azure Synapse and Power BI tutorial including over 120 million rows of real CMS Medicare Part D Data to help other organizations learn how to harness it.

The entire step-by-step tutorial including the demo public domain Part D data can be viewed here: How to Deploy an End-to-End Azure Synapse Analytics and Power BI Solution

Public Preview of Azure Purview data catalog

Announced alongside Azure Synapse, Azure Purview enters public preview and provides a comprehensive data governance solution that lets organizations know where all their data resides. The solution can easily create an up-to-date map of an organization’s data landscape with automated data discovery, sensitive data classification, and end-to-end data lineage wherever the data is stored, including on-premises, across multi-clouds and multi-edge, in SaaS apps, and in Microsoft Power BI. Azure Purview is integrated with Microsoft Information Protection, which makes it possible to apply the same sensitivity labels defined in Microsoft 365 Compliance Center.

Further details can be found here: Azure Purview

Azure AD Application Proxy now natively supports apps that use header-based authentication

Public preview of Application Proxy support for applications that use header-based authentication on standard claims issued by Azure AD is now available. Examples of such applications include NetWeaver Portal, PeopleSoft, and WebCenter, all of which can benefit from the full capabilities of Application Proxy, including single sign-on and enforcing pre-authentication and Conditional Access policies such as requiring Multi-Factor Authentication (MFA) or a compliant device before users can access these apps. What’s more, no additional software is required, as existing Application Proxy connectors can be used.

Steps on how to harness this can be found here: How to enable Azure AD Application Proxy to support apps using header-based authentication

Community Events

- Create: Data – A half day of conversations with experts and community to learn and discuss everything data – from the upcoming trends, to best practices and data for good.

- All Around Azure – A Beginners Guide to IoT – Focus on topics ranging from IoT device connectivity, IoT data communication strategies, use of artificial intelligence at the edge, data processing considerations for IoT data, and IoT solutioning based on the Azure IoT reference architecture

- Festive Tech Calendar – Continuing this month’s content from different Azure communities and people around the globe for the month of December

- Introduction to Cloud Adoption Framework – Sarah Lean investigates Microsoft’s Cloud Adoption Framework offering and what is available for organizations to take advantage of

MS Learn Module of the Week

Realize Integrated Analytical Solutions with Azure Synapse Analytics

This learning path provides details on how Azure Synapse Analytics enables you to perform different types of analytics through its components, which can be used to build anything from Modern Data Warehouses through to Advanced Analytical solutions.

This learning path can be completed here: Integrated Analytical Solutions via Azure Synapse Analytics

Let us know in the comments below if there are any news items you would like to see covered in next week’s show. AzUpdate streams live every Friday, so be sure to catch the next episode and join us in the live chat.