by Contributed | Oct 16, 2020 | Azure, Technology

This article is contributed. See the original author and article here.

Hi there.

Today, I am here again to present one possible solution for keeping the Microsoft Monitoring Agent (MMA) installed on your virtual machines up to date with almost zero effort.

I started working on this topic because I could not keep up, easily and in a time-saving way, with the latest MMA releases that come out as part of the Azure world.

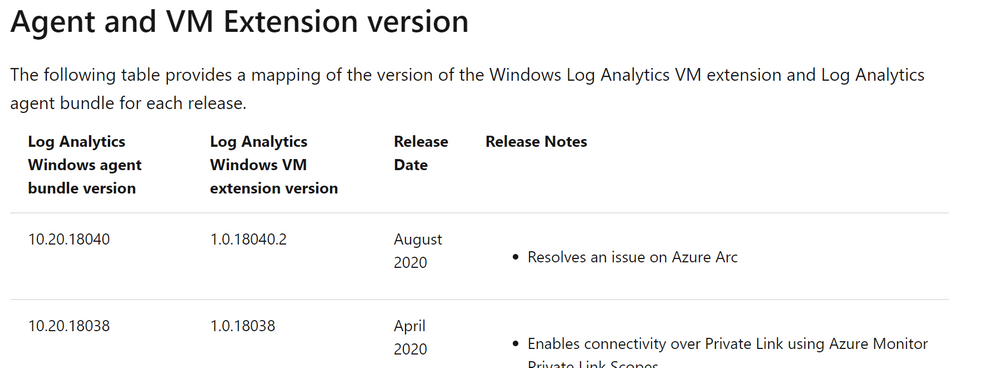

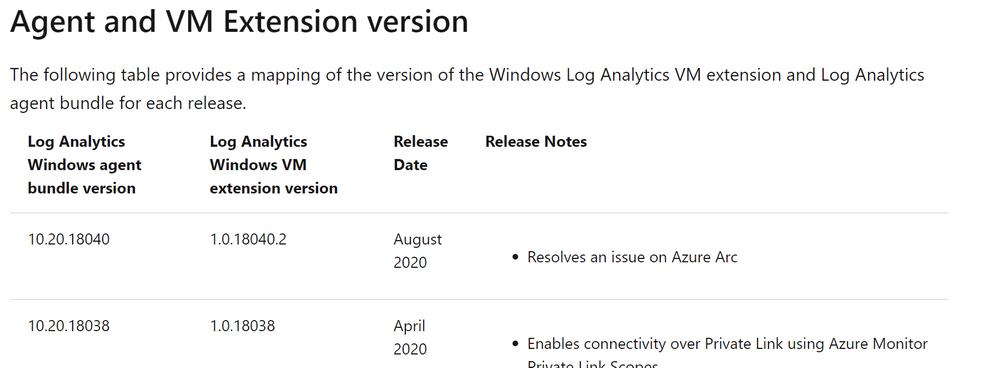

Everybody knows that, from time to time and thanks to the work and effort of our colleagues, a new version of the Log Analytics agent (the MMA) becomes available. These updates bring more stability and new features.

There are several ways to update the MMA once it is installed, and which one applies mostly depends on how the MMA was installed. If it was installed as a Virtual Machine (VM) extension, it is updated automatically as part of the infrastructure maintenance that Microsoft is responsible for (see Shared responsibility in the cloud).

The same is true for VMs that have been configured using Azure Policy (with Azure Security Center, for instance).

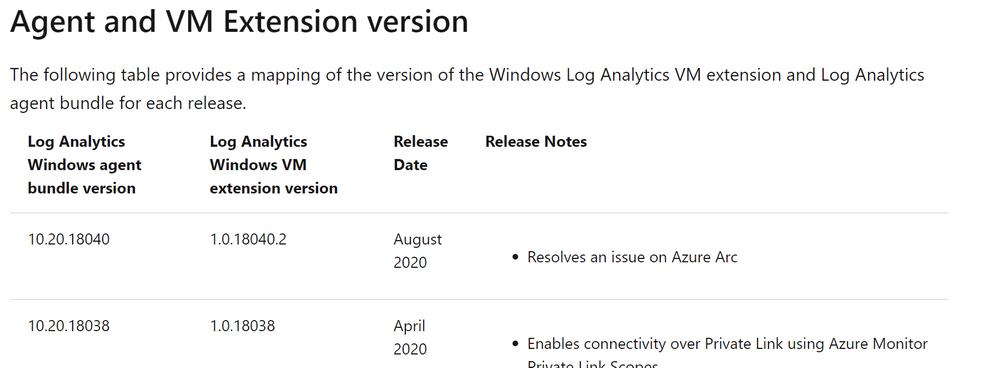

But what if you are managing a hybrid environment? What happens if you installed the agent manually? And do you get any update through Windows Update if you selected “I don’t want to …” in the agent setup window?

NOTE: If you selected “Use Microsoft Update …”, the agent update is offered together with your other updates, so you have to rely on your patch management process and tools.

In these cases, you need to keep the agent version up to date yourself, and this is where my idea comes in.

Azure offers lots of services, and Azure Automation is one of them, so why not use it?

Based on that, I created a very simple PowerShell runbook for Azure Automation that does the job for you. Of course, my approach is just an example, but you can reuse the idea or the script below in your own environment if you like.

I want to point out that:

- Always test the runbook first to make sure it works as expected in your environment.

- You might need to adjust the Invoke-WebRequest command lines to include your proxy server and the related credentials (see the commented-out lines in the script).

The script is straightforward. It downloads the installers from the links defined in its variables into the C:\Temp folder (creating the folder if it does not exist) and runs them.

# Setting variables

$setupFilePath = "C:\Temp"

# Setting variables specific for MMA

$setupMmaFileName = "MMASetup-AMD64.exe"

$argumentListMma = '/C:"setup.exe /qn /l*v C:\Temp\AgentUpgrade.log AcceptEndUserLicenseAgreement=1"'

$URI_MMA = "https://aka.ms/MonitoringAgentWindows"

# Setting variables specific for DependencyAgent

$setupDependencyFileName = "InstallDependencyAgent-Windows.exe"

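# The Dependency Agent installer takes /S and /RebootMode directly, without the /C:"..." wrapper used for MMASetup

$argumentListDependency = '/S /RebootMode=manual'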

$URI_Dependency = "https://aka.ms/DependencyAgentWindows"

# Checking if temporary path exists otherwise create it

if(!(Test-Path $setupFilePath))

{

Write-Output "Creating folder $setupFilePath since it does not exist ... "

New-Item -Path $setupFilePath -ItemType Directory | Out-Null

Write-Output "Folder $setupFilePath created successfully."

}

# Check whether the installer was already downloaded: if so, overwrite it, otherwise download it from scratch

if (Test-Path (Join-Path $setupFilePath $setupMmaFileName))

{

Write-Output "The file $setupMmaFileName already exists, overwriting with a new copy ... "

}

else

{

Write-Output "The file $setupMmaFileName does not exist, downloading ... "

}

# Downloading the file

try

{

$Response = Invoke-WebRequest -Uri $URI_MMA -OutFile (Join-Path $setupFilePath $setupMmaFileName) -UseBasicParsing -ErrorAction Stop

#$Response = Invoke-WebRequest -Uri $URI_MMA -Proxy "http://myproxy:8080/" -ProxyUseDefaultCredentials -OutFile (Join-Path $setupFilePath $setupMmaFileName) -UseBasicParsing -ErrorAction Stop

#$StatusCode = $Response.StatusCode

# This will only execute if the Invoke-WebRequest is successful.

Write-Output "Download of $setupMmaFileName, done!"

Write-Output "Starting the upgrade process ... "

Start-Process -FilePath (Join-Path $setupFilePath $setupMmaFileName) -ArgumentList $argumentListMma -Wait

Write-Output "Agent Upgrade process completed."

Write-Output "Checking if Microsoft Dependency Agent is installed ..."

try

{

Get-Service -Name MicrosoftDependencyAgent -ErrorAction Stop | Out-Null

Write-Output "Microsoft Dependency Agent is installed. Moving on with the upgrade."

if (Test-Path (Join-Path $setupFilePath $setupDependencyFileName))

{

Write-Output "The file $setupDependencyFileName already exists, overwriting with a new copy ... "

}

else

{

Write-Output "The file $setupDependencyFileName does not exist, downloading ... "

}

try

{

$Response = Invoke-WebRequest -Uri $URI_Dependency -OutFile (Join-Path $setupFilePath $setupDependencyFileName) -UseBasicParsing -ErrorAction Stop

#$Response = Invoke-WebRequest -Uri $URI_Dependency -Proxy "http://myproxy:8080/" -ProxyUseDefaultCredentials -OutFile (Join-Path $setupFilePath $setupDependencyFileName) -UseBasicParsing -ErrorAction Stop

Write-Output "Download of $setupDependencyFileName, done!"

Write-Output "Starting the upgrade process ... "

Start-Process -FilePath (Join-Path $setupFilePath $setupDependencyFileName) -ArgumentList $argumentListDependency -Wait

Write-Output "Dependency Agent Upgrade process completed."

}

catch

{

Write-Output "Error downloading the new Microsoft Dependency Agent installer"

}

}

catch

{

Write-Output "Dependency Agent is not installed."

}

}

catch

{

$StatusCode = $_.Exception.Response.StatusCode.value__

Write-Output "An error occurred during file download. The error code is ==$StatusCode==."

}

# Logging runbook completion

Write-Output "Runbook execution completed."

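As you can see, the script also takes care of the Microsoft Dependency Agent, which is used by both Azure Monitor for VMs and Service Map. The Dependency Agent is upgraded only if its service (MicrosoftDependencyAgent) is found on the machine.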

Provided that you already have an Automation account created and configured, as well as a Hybrid Runbook Worker deployed, all you need to do is import the runbook and schedule it to run on your Hybrid Runbook Worker group. Wait for the execution, and you are done.
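If you prefer to script the import and scheduling instead of using the portal, here is a minimal sketch based on the Az.Automation cmdlets `Import-AzAutomationRunbook`, `New-AzAutomationSchedule`, and `Register-AzAutomationScheduledRunbook`. It assumes the Az.Automation module is installed and that you are already signed in with `Connect-AzAccount`. The function name, runbook name, weekly Sunday 02:00 schedule, and worker group name are hypothetical placeholders. `-RunOn` sends the job to the Hybrid Runbook Worker group because the installers must run on the machine itself; keep in mind that each job runs on a single worker of the group.

```powershell
# Minimal sketch, assuming the Az.Automation module is installed and you are
# already signed in with Connect-AzAccount. All names are hypothetical placeholders.
function Publish-MmaUpgradeRunbook {
    param(
        [Parameter(Mandatory)][string]$ResourceGroupName,
        [Parameter(Mandatory)][string]$AutomationAccountName,
        [Parameter(Mandatory)][string]$RunbookPath,       # local copy of the script above
        [Parameter(Mandatory)][string]$HybridWorkerGroup, # group that contains the target machine
        [string]$RunbookName = 'Update-MMAAgent',
        [string]$ScheduleName = 'Weekly-MMA-Upgrade'
    )

    # Parameters shared by all three cmdlets.
    $account = @{
        ResourceGroupName     = $ResourceGroupName
        AutomationAccountName = $AutomationAccountName
    }

    # Import the script as a published PowerShell runbook (overwrite if it already exists).
    Import-AzAutomationRunbook @account -Path $RunbookPath -Name $RunbookName `
        -Type PowerShell -Published -Force | Out-Null

    # Assumed maintenance window: every Sunday, starting from tomorrow at 02:00 local time.
    $start = (Get-Date).Date.AddDays(1).AddHours(2)
    New-AzAutomationSchedule @account -Name $ScheduleName -StartTime $start `
        -WeekInterval 1 -DaysOfWeek Sunday | Out-Null

    # Link schedule and runbook; -RunOn sends the job to the Hybrid Runbook Worker group,
    # because the installers must run on the machine itself, not in an Azure sandbox.
    Register-AzAutomationScheduledRunbook @account -RunbookName $RunbookName `
        -ScheduleName $ScheduleName -RunOn $HybridWorkerGroup
}

# Example call (placeholder values):
# Publish-MmaUpgradeRunbook -ResourceGroupName 'rg-automation' -AutomationAccountName 'aa-ops' `
#     -RunbookPath '.\Update-MMAAgent.ps1' -HybridWorkerGroup 'hrw-onprem'
```

Wrapping the three calls in a function keeps the placeholders in one place; adjust the schedule to your own maintenance window.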

Thanks,

Bruno

Disclaimer

The sample scripts are not supported under any Microsoft standard support program or service. The sample scripts are provided AS IS without warranty of any kind. Microsoft further disclaims all implied warranties including, without limitation, any implied warranties of merchantability or of fitness for a particular purpose. The entire risk arising out of the use or performance of the sample scripts and documentation remains with you. In no event shall Microsoft, its authors, or anyone else involved in the creation, production, or delivery of the scripts be liable for any damages whatsoever (including, without limitation, damages for loss of business profits, business interruption, loss of business information, or other pecuniary loss) arising out of the use of or inability to use the sample scripts or documentation, even if Microsoft has been advised of the possibility of such damages.

by Contributed | Oct 15, 2020 | Azure, Technology


Databases > Azure Cosmos DB

- Azure Cosmos DB serverless capacity mode now in public preview

Intune

- Updates to Microsoft Intune

_________________________________________________________

Databases > Azure Cosmos DB

Azure Cosmos DB serverless capacity mode now in public preview

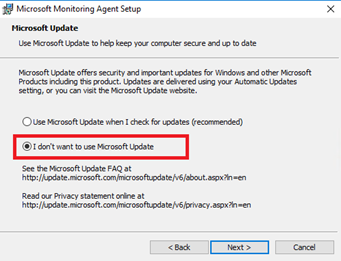

Serverless is a new consumption-based model that bills only for the resources used by database operations, with no minimum. It’s ideal for small to mid-sized workloads with sporadic traffic. To get started, select “Serverless (preview)” during account creation.

Intune

Updates to Microsoft Intune

The Microsoft Intune team has been hard at work on updates as well. You can find the full list of updates to Intune on the What’s new in Microsoft Intune page, including changes that affect your experience using Intune.

Azure portal “how to” video series

Have you checked out our Azure portal “how to” video series yet? The videos highlight specific aspects of the portal so you can be more efficient and productive while deploying your cloud workloads from the portal. Check out our most recently published video:

Next steps

The Azure portal has a large team of engineers that wants to hear from you, so please keep providing us your feedback in the comments section below or on Twitter @AzurePortal.

Sign in to the Azure portal now and see for yourself everything that’s new. Download the Azure mobile app to stay connected to your Azure resources anytime, anywhere. See you next month!

by Contributed | Oct 15, 2020 | Azure, Technology


Since the inception of Azure SQL more than ten years ago, we have been continuously raising the limits across all aspects of the service, including storage size, compute capacity, and IO. Today, we are pleased to announce that transaction log rate limits have been increased for General Purpose databases and elastic pools in the vCore purchasing model.

Increasing log rate

Resource governance in Azure SQL has specific limits on resource consumption including log rate, in order to provide a balanced Database-as-a-Service and ensure that recoverability SLAs (RPO, RTO) are met.

Maximum transaction log rate, or log write throughput, determines how fast data can be written to a database in bulk, and is often the main limiting factor in data loading scenarios such as data warehousing or database migration to Azure. In 2019, we doubled the maximum transaction log rate for Business Critical databases, from 48 MB/s to 96 MB/s. With the new M-series hardware at the high end of the Azure SQL Database SKU spectrum, we now support a log rate of up to 264 MB/s.

Our latest increases are for all hardware generations currently supported in the General Purpose service tier, and apply equally to both provisioned and serverless compute tiers.
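Since every byte of transaction log has to be written within the log rate limit, the limit also puts a floor under how long a load can take: roughly the log volume divided by the log rate. The sketch below only illustrates that arithmetic and is not an official sizing formula. The function name is hypothetical, it assumes 1 GB = 1024 MB, and the log volume is something you have to estimate yourself, because it is not the same as the size of the data being loaded.

```powershell
# Lower bound on load time imposed by the log rate limit (hypothetical helper).
function Get-MinimumLoadSeconds {
    param(
        [Parameter(Mandatory)][double]$LogVolumeGB,  # estimated log generated by the load
        [Parameter(Mandatory)][double]$LogRateMBps   # log rate limit of the database or pool
    )
    # Assumption: 1 GB = 1024 MB. Real loads also spend time on CPU, reads, and so on,
    # so this is a floor, not a prediction.
    return $LogVolumeGB * 1024 / $LogRateMBps
}

# Compare the log rates mentioned above for a hypothetical 100 GB of log.
foreach ($rate in 48, 96, 264) {
    $minutes = (Get-MinimumLoadSeconds -LogVolumeGB 100 -LogRateMBps $rate) / 60
    '{0,3} MB/s: at least {1:N1} minutes' -f $rate, $minutes
}
```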

For single databases, log rate limit increases are:

| Cores | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 and higher |
|---|---|---|---|---|---|---|---|---|
| Old limit (MB/s) | 3.75 | 7.5 | 11.25 | 15 | 18.75 | 22.5 | 26.3 | 30 |
| New limit (MB/s) | 4.5 | 9 | 13.5 | 18 | 22.5 | 27 | 31.5 | 36 |

For elastic pools, log rate limit increases are:

| Cores | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 and higher |
|---|---|---|---|---|---|---|---|---|
| Old limit (MB/s) | 4.7 | 9.4 | 14.1 | 18.8 | 23.4 | 28.1 | 32.8 | 37.5 |
| New limit (MB/s) | 6 | 12 | 18 | 24 | 30 | 36 | 42 | 48 |

All up-to-date resource limits are documented for single databases and elastic pools.
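Both tables follow the same pattern: the limit is a fixed rate per core (3.75 MB/s old and 4.5 MB/s new for single databases, 4.6875 MB/s old and 6 MB/s new for elastic pools) that stops growing at 8 cores. That works out to a 20% increase for single databases and a 28% increase for elastic pools at every size; the 26.3 MB/s entry is 7 × 3.75 = 26.25, rounded. Here is a minimal sketch that reproduces the tables, assuming that pattern holds. The function is hypothetical, and the published resource limits remain the authoritative source.

```powershell
# Log rate limit (MB/s) for a General Purpose database or elastic pool, by vCores.
# Assumption: a flat per-vCore rate that stops growing at 8 vCores (the pattern in the tables).
function Get-GpLogRateLimit {
    param(
        [Parameter(Mandatory)][ValidateRange(1, [int]::MaxValue)][int]$VCores,
        [Parameter(Mandatory)][ValidateSet('SingleDatabase', 'ElasticPool')][string]$Deployment,
        [ValidateSet('Old', 'New')][string]$Limit = 'New'
    )
    $perVCoreRate = @{
        SingleDatabase = @{ Old = 3.75;   New = 4.5 }
        ElasticPool    = @{ Old = 4.6875; New = 6.0 }
    }
    return $perVCoreRate[$Deployment][$Limit] * [math]::Min($VCores, 8)
}

# Old vs new limits and the relative increase for a few sizes.
foreach ($deployment in 'SingleDatabase', 'ElasticPool') {
    foreach ($vCores in 2, 4, 8, 16) {
        $old = Get-GpLogRateLimit -VCores $vCores -Deployment $deployment -Limit Old
        $new = Get-GpLogRateLimit -VCores $vCores -Deployment $deployment
        '{0,-14} {1,2} vCores: {2,6:N2} -> {3,5:N1} MB/s (+{4:N0}%)' -f $deployment, $vCores, $old, $new, (($new / $old - 1) * 100)
    }
}
```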

Impact at scale

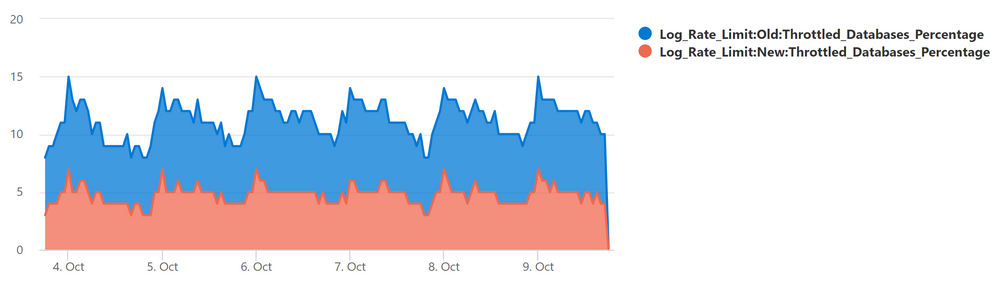

To see the impact of log rate increases on customer workloads, we analyzed telemetry data for the period when the change was being deployed in one Azure region. During that time, the region had a mix of databases and elastic pools with old and new limits.

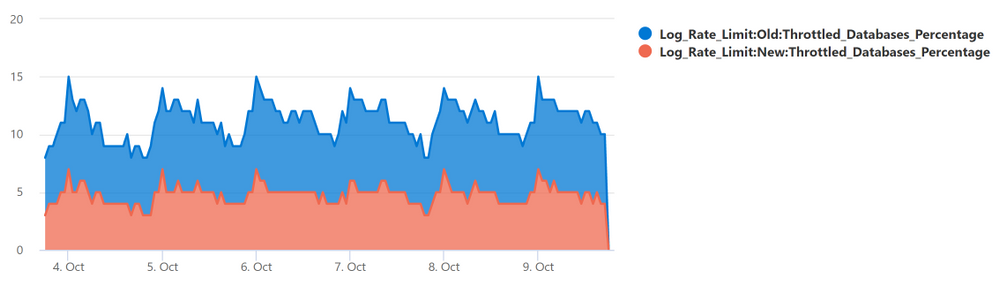

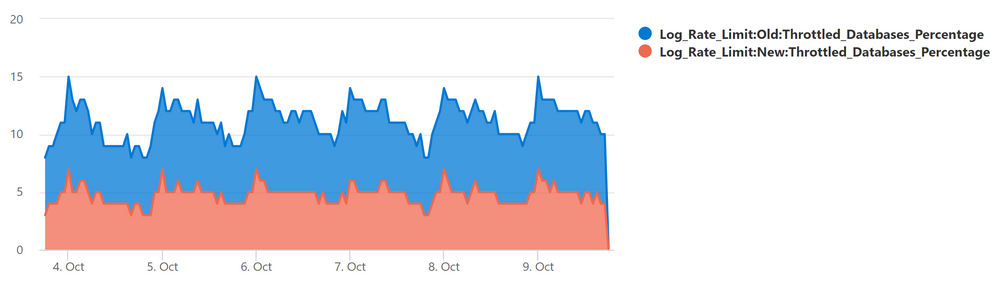

The chart below plots the percentage of General Purpose databases that reached the log rate limit and experienced log rate throttling (defined as at least one LOG_RATE_GOVERNOR wait in a 1-hour interval). The higher (light blue) area is for databases with the old limits, and the lower (red-orange) area is for databases with the new limits.

The positive impact is clear: the change reduced the average percentage of General Purpose databases experiencing log rate throttling from over 10% to less than 5%.
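The throttling metric behind the chart can be computed from per-hour wait counts. Here is a minimal sketch, assuming you already have one row per database per 1-hour interval with the number of LOG_RATE_GOVERNOR waits in that hour; the object shape, function name, and sample values are hypothetical. To compare old and new limits, you would run it separately for each group of databases.

```powershell
# Share of databases throttled in each 1-hour interval, where "throttled" means
# at least one LOG_RATE_GOVERNOR wait in that interval.
function Get-ThrottledPercentByInterval {
    param([Parameter(Mandatory)][object[]]$Samples)
    foreach ($group in ($Samples | Group-Object -Property Interval)) {
        $throttled = @($group.Group | Where-Object { $_.LogRateGovernorWaits -ge 1 }).Count
        [pscustomobject]@{
            Interval         = $group.Name
            ThrottledPercent = 100.0 * $throttled / $group.Count
        }
    }
}

# Hypothetical sample: one row per database per hour.
$samples = @(
    [pscustomobject]@{ Interval = '10:00'; Database = 'db1'; LogRateGovernorWaits = 0 }
    [pscustomobject]@{ Interval = '10:00'; Database = 'db2'; LogRateGovernorWaits = 12 }
    [pscustomobject]@{ Interval = '10:00'; Database = 'db3'; LogRateGovernorWaits = 0 }
    [pscustomobject]@{ Interval = '10:00'; Database = 'db4'; LogRateGovernorWaits = 0 }
    [pscustomobject]@{ Interval = '11:00'; Database = 'db1'; LogRateGovernorWaits = 0 }
    [pscustomobject]@{ Interval = '11:00'; Database = 'db2'; LogRateGovernorWaits = 0 }
    [pscustomobject]@{ Interval = '11:00'; Database = 'db3'; LogRateGovernorWaits = 0 }
    [pscustomobject]@{ Interval = '11:00'; Database = 'db4'; LogRateGovernorWaits = 0 }
)

# Per-interval percentages, then their average over the whole period.
$perInterval = Get-ThrottledPercentByInterval -Samples $samples
$average = ($perInterval | Measure-Object -Property ThrottledPercent -Average).Average
$perInterval
"Average throttled: $average%"
```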

Data load example

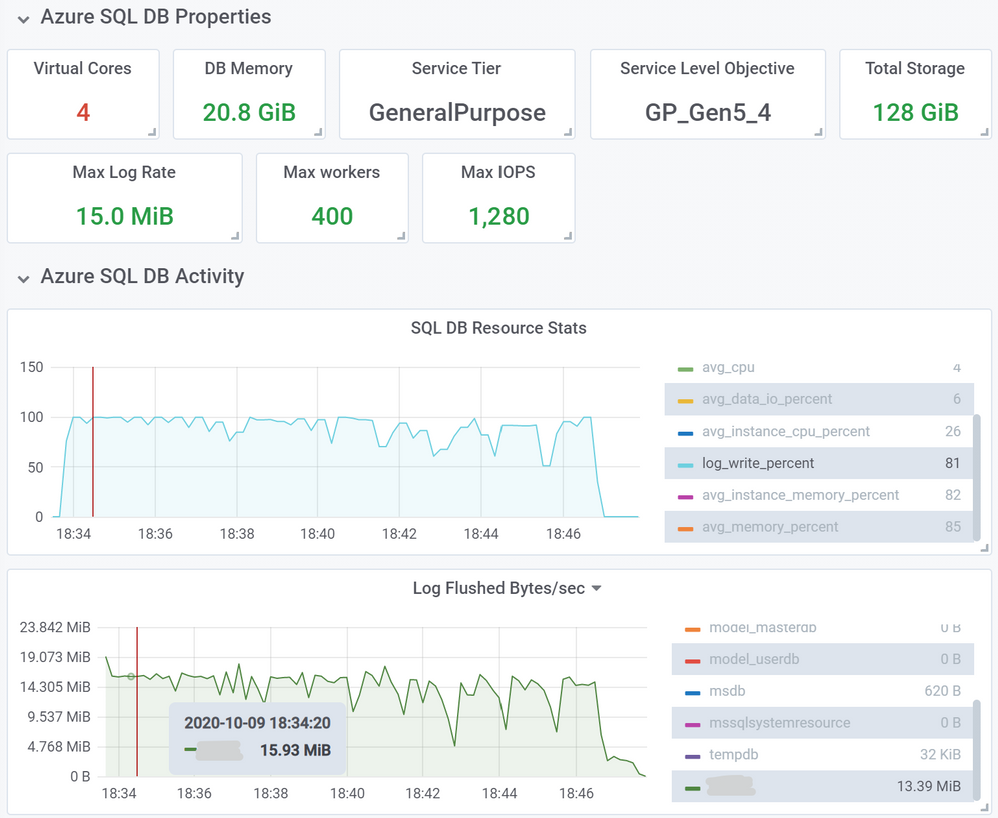

As an example of an operation benefiting from higher log rate limits, we inserted 10 million rows into a heap table with a SELECT … INTO … statement on a 4-core General Purpose database on Gen5 hardware. Row size was 1,255 bytes, and the total inserted data size was 11.7 GB.

First, we used a database with the old log rate limit of 15 MB/s. This load took 13 minutes and 2 seconds.

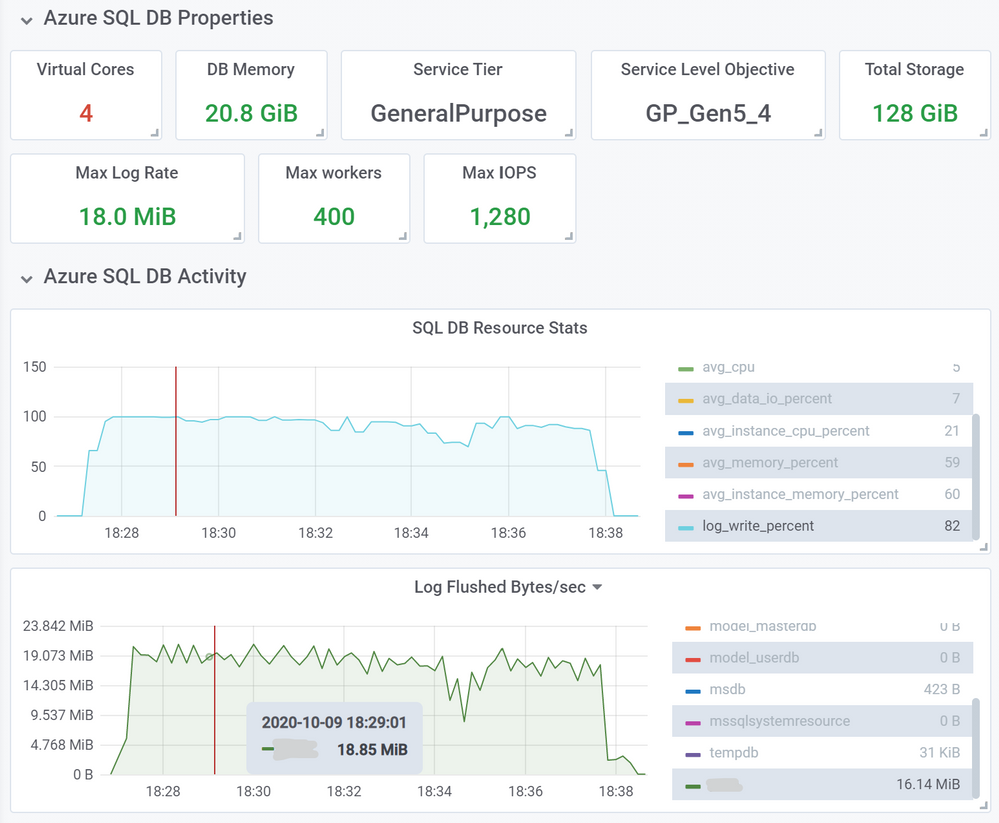

Next, we repeated the same load, but using a database with the new 18 MB/s limit.

This load took proportionally less time, 10 minutes and 33 seconds, a 19% decrease in load time.

You can expect this kind of improvement for most operations that used to consume close to 100% of log write throughput under the old limit. Similarly, for databases in elastic pools that generate high volumes of log writes at the same time, delays due to throttling are now shorter thanks to the higher log rate limit at the elastic pool level.
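The decrease in the data load example can be checked from the two reported durations. This minimal sketch converts 13:02 and 10:33 to seconds and computes the measured reduction, plus, for comparison, the reduction you would get if load time scaled exactly with the inverse of the log rate (1 - 15/18).

```powershell
# Reported durations for the same load (4-core General Purpose database, Gen5).
$oldDuration = New-TimeSpan -Minutes 13 -Seconds 2   # old 15 MB/s limit
$newDuration = New-TimeSpan -Minutes 10 -Seconds 33  # new 18 MB/s limit

# Measured reduction in load time, in percent.
$measuredDecrease = (1 - $newDuration.TotalSeconds / $oldDuration.TotalSeconds) * 100

# Reduction expected if load time scaled exactly with 1 / log rate.
$expectedDecrease = (1 - 15 / 18) * 100

'Measured: {0:N1}%, expected from the log rate ratio alone: {1:N1}%' -f $measuredDecrease, $expectedDecrease
```

With these inputs, the measured reduction comes out at about 19%, somewhat more than the roughly 16.7% that the log rate ratio alone would predict.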

Conclusion

This increase in log rate limits helps our customers using General Purpose databases and elastic pools improve performance of bulk data loading and data modification operations. In some cases, customers may be able to scale down to a lower service objective and maintain the same data load performance.
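To see where scaling down might be possible, you can look for the smallest core count whose new limit still matches the old limit at your current size. Here is a minimal sketch using the per-core rates from the tables above; the function name is hypothetical. It considers log rate only: in the vCore model, CPU, memory, and other resource limits also shrink when you scale down, so the result only tells you how far log rate alone would allow you to go.

```powershell
# Smallest vCore count whose new General Purpose log rate limit is at least
# the old limit at the current size (log rate only; hypothetical helper).
function Get-SmallestVCoresForOldLogRate {
    param(
        [Parameter(Mandatory)][ValidateRange(1, [int]::MaxValue)][int]$CurrentVCores,
        [Parameter(Mandatory)][ValidateSet('SingleDatabase', 'ElasticPool')][string]$Deployment
    )
    # Per-vCore rates (MB/s) inferred from the tables; limits stop growing at 8 vCores.
    $rates = @{
        SingleDatabase = @{ Old = 3.75;   New = 4.5 }
        ElasticPool    = @{ Old = 4.6875; New = 6.0 }
    }
    $rate = $rates[$Deployment]
    $target = $rate.Old * [math]::Min($CurrentVCores, 8)

    # Walk up from 1 vCore and stop at the first size that meets the old limit.
    for ($cores = 1; $cores -le $CurrentVCores; $cores++) {
        if ($rate.New * [math]::Min($cores, 8) -ge $target) { return $cores }
    }
    return $CurrentVCores
}

foreach ($size in 4, 5, 8) {
    $single = Get-SmallestVCoresForOldLogRate -CurrentVCores $size -Deployment SingleDatabase
    $pool = Get-SmallestVCoresForOldLogRate -CurrentVCores $size -Deployment ElasticPool
    "$size vCores today: single database -> $single, elastic pool -> $pool"
}
```

For example, a single database at 8 cores had a 30 MB/s limit, which 7 cores now exceed (31.5 MB/s).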

This improvement is another step in our journey to improve Azure SQL performance and scalability to help our customers achieve their goals more efficiently and at lower cost, while maintaining Database-as-a-Service principles and existing recoverability SLAs.

by Contributed | Oct 15, 2020 | Azure, Technology


This covers broad resources to help you get started with Microsoft Azure. To find resources on a specific element of Azure, please re-try your search with those keywords or use filters to refine your results.

Getting Started

- Microsoft Azure: Sign in to your Azure account. The site also includes technical documentation, pricing, training, and news for Microsoft Azure. Start turning your ideas into solutions with Azure products and services.

- Microsoft Learn: Microsoft Azure: Find learning paths, training, and information on how to obtain a Microsoft Certification on Azure. Grow your skills to build and manage applications in the cloud, on-premises, and at the edge.

- LinkedIn Learning: Azure: See the LinkedIn Learning courses on Azure. Get the training you need to stay ahead with expert-led courses on Azure.

Working with Azure

- Azure Architecture Center: Find everything you need about Azure architecture, from application architecture guides, to reference architectures, cloud design patterns, and more. Guidance for architecting solutions on Azure using established patterns and practices.

- Azure Portal: Find the available Azure services, as well as tools and links to technical documentation.

by Contributed | Oct 15, 2020 | Azure, Business, Microsoft, Technology


If you are as interested in Windows Virtual Desktop as many are, Christiaan Brinkhoff has a complete walkthrough of how to prepare and deploy Windows Virtual Desktop. At this point, it is the best place to learn how to build it from the ground up: https://www.christiaanbrinkhoff.com/2020/05/01/windows-virtual-desktop-technical-2020-spring-update-arm-based-model-deployment-walkthrough/

I used this post back in early May as a guide, following it step-by-step, and found it to be very effective. Christiaan talks about the why and how and has a fantastic viewpoint (and is connected to the product).

For more information or assistance with deploying Windows Virtual Desktop for your business, contact us.

by Contributed | Oct 15, 2020 | Azure, Technology


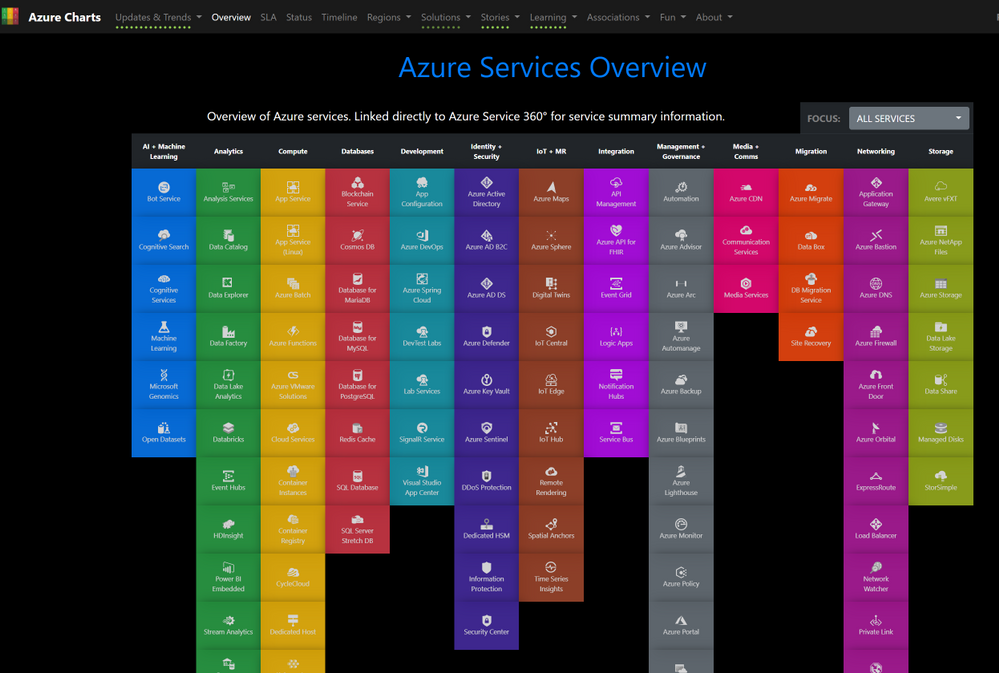

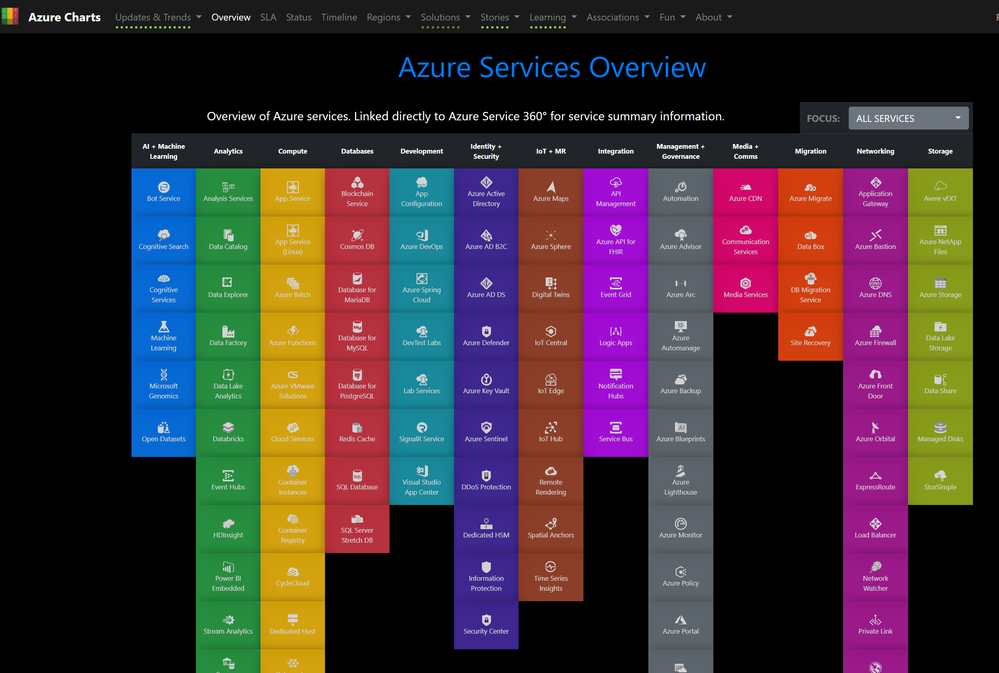

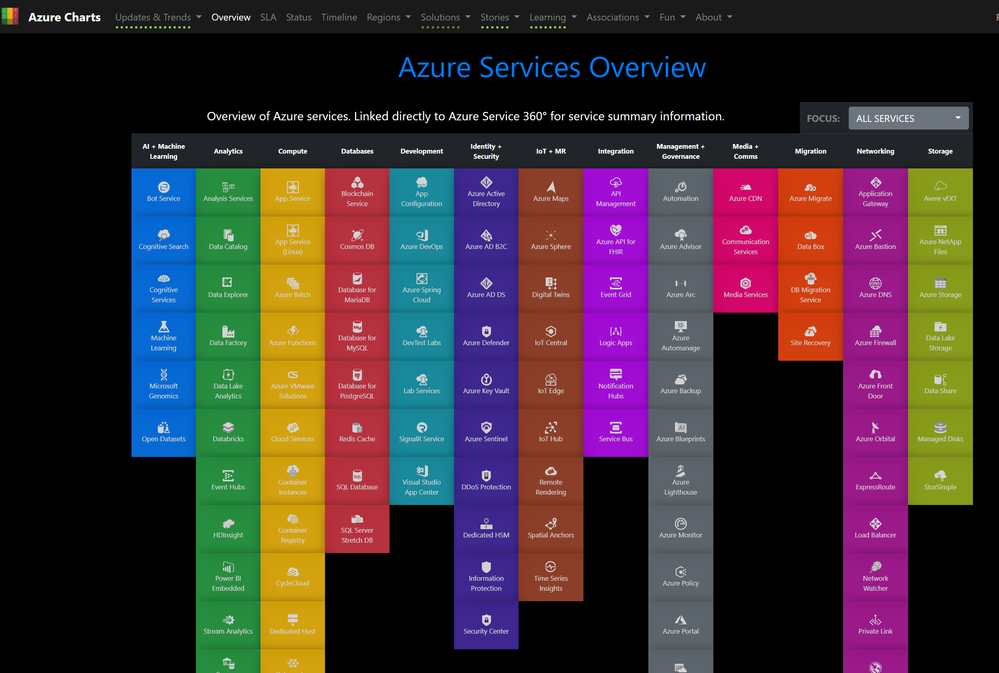

The rate of change in cloud service offerings is astounding. How do you keep up? Fortunately, there is a fantastic visual guide to tracking things here: https://azurecharts.com/

This site is continuously updated with new service offerings and Azure updates, as well as new features and functionality of the Azure Charts site itself. If you do anything in Azure, this is an excellent resource to identify what services are available where, what’s coming next, and a multitude of other things. Azure Charts: “Communicating Azure’s current state, structure and updates in a compact digestible way.”
