What is shutting down my Azure Virtual Machine?


Recently I came across a scenario where someone had changed the time on a scheduled Azure virtual machine shut down, but the VM was not adhering to the new shut down time. Learn how asking the wrong question can cause you to miss the answer!

Background – the change

The systems administrator had an Azure Automation Runbook in place that told the Azure Windows Server virtual machine to shut down at 10pm each night. After changing the script to 11pm, the admin noticed that the server was still shutting down at 10pm.

So they started to investigate the wrong question …

“Why isn’t the VM acknowledging the changed time in the updated schedule?”

The importance of broader questions

With this question, we’re assuming part of the cause – that the VM is controlled by the Azure Automation Runbook but somehow doesn’t realize the schedule has changed. We could rack our brains and comb through logs for days without answering this question. Why? Because it’s the wrong question to ask.

A better question

Instead, let’s rephrase the problem a little more broadly.

“WHY is the VM shutting down at 10pm?”

Notice I didn’t say “why is the VM still shutting down at 10pm”. I want to set aside for a moment any past behavior versus expected new behavior and instead go exploring some of the reasons why a virtual machine would shut down.

Shut down causes/triggers

Let’s brainstorm a few “usual suspects” that might shut down a VM:

1. Azure Automation – yes, that’s what we were first looking at. Has it saved correctly? Are there any other runbooks executing first?

2. Windows Update – settings on the server itself, Azure Update Management, or even a third party management tool (though in none of those scenarios would I expect it to happen every day, but I have seen stranger things!).

3. Azure DevTest Labs – These pre-configured Azure Resource Manager templates let you specify auto shutdown (and auto start) times and policies for your Azure VMs.

4. Something else controlling that server – think of a local script, application or third party management tool. Could the cause of the shut down be inside the VM itself and not related to Azure?

See if you can identify something I’ve left off this list, on purpose!

Analyzing the shut down event

Now that I have a few ideas outside the scope of just that one script, it’s time to go and look at the facts.

Starting with the Windows Server event log, it tells me that a shut down event was initiated at 2200hrs. Yeah, no kidding. But it’s not very good at telling me what initiated it. This gives me a clue that the cause may be a factor outside of the server OS.

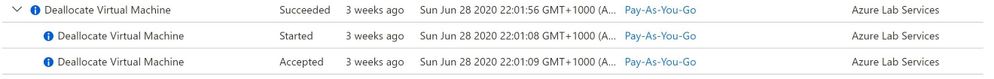

Next, I’ll check the VM’s Activity log in the Azure portal. This logs subscription-level events, including those triggered by Azure Policy. Now we can see that “Azure Lab Services” initiates our shut down events at 10:00pm daily. That is not our Azure Automation Runbook.

This server is not part of an Azure Dev/Test Lab though, so what have we missed?
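If the Activity log is long, the same "who initiated it?" check can be scripted. The following is a minimal sketch, assuming the log has been exported as JSON where each entry has a `caller` field and an `operationName.value` field. The sample entries are hypothetical, and in a real export the caller may appear as an object ID rather than the friendly name the portal shows.

```python
from collections import Counter

# Operations that stop a VM. Matching is case-insensitive.
STOP_OPERATIONS = {
    "microsoft.compute/virtualmachines/deallocate/action",
    "microsoft.compute/virtualmachines/poweroff/action",
}


def shutdown_callers(events):
    """Count stop/deallocate events per caller.

    `events` is assumed to be a list of dicts shaped like exported
    Activity log entries (keys `caller` and `operationName.value`).
    """
    counts = Counter()
    for event in events:
        operation = (event.get("operationName") or {}).get("value", "")
        if operation.lower() in STOP_OPERATIONS:
            counts[event.get("caller") or "unknown"] += 1
    return counts


# Hypothetical entries, for illustration only.
sample = [
    {"caller": "Azure Lab Services",
     "operationName": {"value": "Microsoft.Compute/virtualMachines/deallocate/action"}},
    {"caller": "admin@example.com",
     "operationName": {"value": "Microsoft.Compute/virtualMachines/start/action"}},
    {"caller": "Azure Lab Services",
     "operationName": {"value": "Microsoft.Compute/virtualMachines/deallocate/action"}},
]

for caller, count in shutdown_callers(sample).most_common():
    print(f"{caller}: {count} shut down event(s)")
```

Grouping by caller first, rather than searching for the runbook's identity, keeps the question broad: it shows whatever is stopping the VM, not just the suspect we already had in mind.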

Auto-shutdown support for Azure VMs

One place we didn’t look was the Operations section of the Azure VM, in the Azure portal. Nestled in with Azure Bastion connection, Backup, Policies etc. (relevant to this machine), is the Auto-shutdown section!

And here we’ve found the cause of our shutdowns.

The properties of the Virtual Machine had been configured to shut down the VM daily at 10pm.
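This also helps explain the “Azure Lab Services” name in the Activity log. As far as I’m aware, the VM Auto-shutdown setting is stored as a `Microsoft.DevTestLab/schedules` resource named `shutdown-computevm-<vm name>`, with the daily time held as a four-digit string such as `2200`. Below is a minimal sketch, under that assumption, of building the resource ID to look for and reading the time. The function names and the example subscription, resource group and VM names are hypothetical.

```python
def auto_shutdown_schedule_id(subscription_id, resource_group, vm_name):
    """Build the resource ID where a VM's auto-shutdown schedule is assumed to live."""
    return (
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.DevTestLab/schedules/shutdown-computevm-{vm_name}"
    )


def format_shutdown_time(hhmm):
    """Turn a four-digit schedule time such as '2200' into '22:00'."""
    if len(hhmm) != 4 or not hhmm.isdigit():
        raise ValueError(f"expected a four-digit time like '2200', got {hhmm!r}")
    hours, minutes = int(hhmm[:2]), int(hhmm[2:])
    if hours > 23 or minutes > 59:
        raise ValueError(f"not a valid time of day: {hhmm!r}")
    return f"{hours:02d}:{minutes:02d}"


# Hypothetical values, for illustration only.
print(auto_shutdown_schedule_id("my-subscription-id", "my-resource-group", "my-vm"))
print(format_shutdown_time("2200"))
```

If a resource with that ID exists in the VM’s resource group, it is worth checking before blaming any runbook.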

Summary

If you’ve ever scratched your head over a problem, only to have someone else quickly find the cause … welcome to the human race! Sometimes our troubleshooting questions lead us in a defined direction, missing the clues that we actually need. So the next time you’re faced with a problem, step back and look at what questions you are asking to try and solve it, and what assumptions they may contain.

Learn more with our Introduction to Azure Virtual Machines, on Microsoft Learn.
