by Contributed | Jul 20, 2023 | Technology

This article is contributed. See the original author and article here.

There are several actions government IT admins can take to increase their organization’s security resilience and continue to deliver citizen services, including building the right team to assess your current security environment and using a comprehensive security portfolio to maximize protection and reduce data exposure.

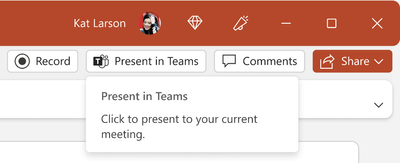

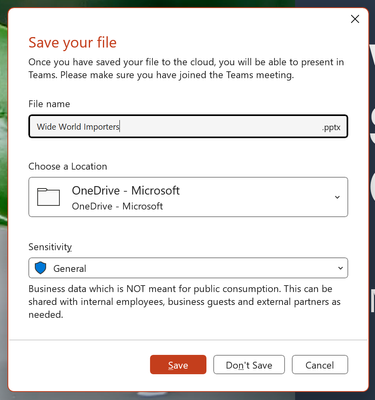

The Microsoft Teams Product Group is excited to introduce Teams Premium for Government Community Cloud (GCC). On Wednesday, July 19, at 10:00 AM PT / 1:00 PM ET, Teams engineers will walk you through the details of the new Teams Premium SKU.

Microsoft To Do is a task management tool that helps you stay productive, manage your day, and collaborate with your colleagues. We are pleased to announce that it’s now available to customers in GCC High and DoD tenants via the web app and through its integration in Outlook on the web. Other platforms (desktop and mobile) are not yet available in GCC High and DoD.

We’re excited to announce that customers using the US Government Community Cloud (GCC) environment and Microsoft Azure can now purchase Windows 365 Enterprise and deploy their Cloud PCs in Microsoft Azure commercial regions.

Join Jay Leask and other members of the Government Community live on LinkedIn!

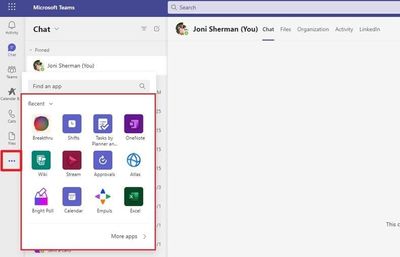

Customers in our Office 365 government clouds, GCC, GCCH, and DoD, are continuing to evolve how they do business in the hybrid workplace. Because Microsoft Teams is their primary tool for communication and collaboration, customers are looking to improve productivity by integrating their business processes directly into Microsoft Teams via third-party (3P) applications or line-of-business (LOB)/homegrown application integrations.

Empowering US public sector organizations to transition to Microsoft 365

We have a great line-up of new features to announce.

Exchange Online

Teams

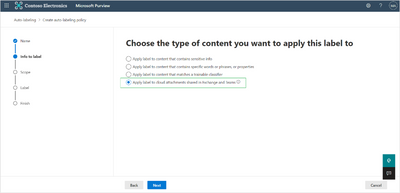

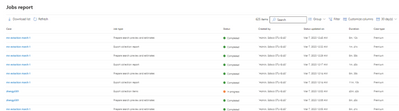

Purview

Microsoft 365

References and Information Resources


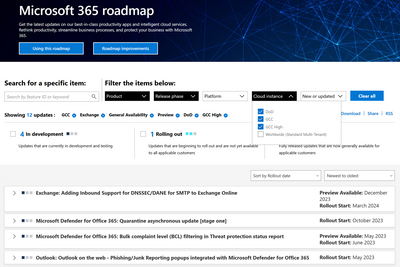

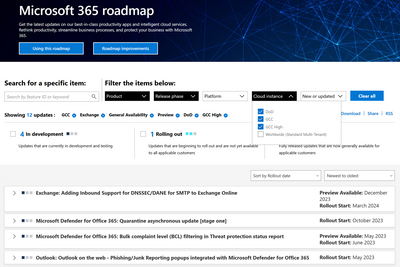

Microsoft 365 Public Roadmap

This link is filtered to show GCC, GCC High, and DoD-specific items. For more general information, uncheck these boxes under “Cloud Instance.”

Stay on top of Microsoft 365 changes

Here are a few ways that you can stay on top of the Office 365 updates in your organization.

Microsoft Tech Community for Public Sector

Your community for discussions about the public sector, including state and local governments.

Microsoft 365 for US Government Service Descriptions

· Office 365 Platform (GCC, GCCH, DoD)

· Office 365 U.S. Government GCC High endpoints

· Office 365 U.S. Government DoD endpoints

· Microsoft Purview (GCC, GCCH, DoD)

· Enterprise Mobility & Security (GCC, GCCH, DoD)

· Microsoft Defender for Endpoint (GCC, GCCH, DoD)

· Microsoft Defender for Cloud Apps (GCC, GCCH, DoD)

· Microsoft Defender for Identity (GCC, GCCH, DoD)

· Azure Information Protection Premium

· Exchange Online (GCC, GCCH, DoD)

· SharePoint (GCC, GCCH, DoD)

· OneDrive (GCC, GCCH, DoD)

· Teams (GCC, GCCH, DoD)

· Office 365 Government (GCC, GCCH, DoD)

· Power Apps (GCC, GCCH, DoD)

· Power Automate US Government (GCC, GCCH, DoD)

· Power BI (GCC, GCCH, DoD)

· Planner (GCC, GCCH, DoD)

· Outlook Mobile (GCC, GCCH, DoD)

· Viva Insights (GCC)

· Dynamics 365 US Government

Public Sector Center of Expertise

We bring together thought leadership and research relating to digital transformation and innovation in the public sector. We highlight the stories of public servants around the globe, while fostering a community of decision makers. Join us as we discover and share the learnings and achievements of public sector communities.


Microsoft Teams for US Government Adoption Guide


Message Center Posts and Updates for Microsoft Teams in GCC


Looking for what’s on the roadmap for Microsoft Teams, and only Teams, in GCC?

Go right to the GCC Teams Feature Communications Guide

Message Center Highlights


SharePoint Online

MC616550 — InfoPath 2013 client and InfoPath Forms Services in SharePoint Online will reach end of support in July 2026

>365 Days

Industry trends and feedback from our customers and partners make it clear that today’s businesses demand an intelligent, integrated forms experience that spans devices, which InfoPath does not provide. As announced earlier, InfoPath Client 2013 will reach the end of its extended support period on July 14, 2026, and to keep an aligned experience across Microsoft products, InfoPath Forms Services will be retired from SharePoint Online. We’re sending this message to bring it to your early attention and minimize the potential impact on your organization.

Key Points:

- Major: Retirement

- Timeline: Starting from July 14, 2026, Microsoft will remove InfoPath Forms Services for existing tenants.

- Action: Review and assess impact

How this will affect your organization:

After July 14, 2026, users will no longer be able to use InfoPath forms in SharePoint Online.

What you need to do to prepare:

To understand how InfoPath is used in your organization, you can run the Microsoft 365 Assessment tool to scan the tenant for InfoPath usage. Using the Power BI InfoPath Report generated by the scanner tool, you can:

- Identify all InfoPath Forms usage in the tenant, per site collection and site.

- Evaluate the recency and volume of usage of InfoPath Forms.

- Understand lists, libraries and content types that use InfoPath.
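Once the scan results are available, a short script can help rank which site collections to migrate first by combining recency and volume. The sketch below is hypothetical: this message does not describe the Assessment tool’s export schema, so the `InfoPathUsage` fields (`site_collection`, `site`, `list_name`, `last_used`, `item_count`) and the sample rows are illustrative stand-ins that you would map from your actual report columns.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass
class InfoPathUsage:
    """One row of InfoPath usage.

    HYPOTHETICAL shape: map these fields from whatever columns your
    Microsoft 365 Assessment tool export actually contains.
    """
    site_collection: str
    site: str
    list_name: str
    last_used: date
    item_count: int


def summarize_by_site_collection(rows):
    """Aggregate usage per site collection: total volume, latest use, and lists."""
    summary = defaultdict(
        lambda: {"item_count": 0, "last_used": date.min, "lists": set()}
    )
    for row in rows:
        entry = summary[row.site_collection]
        entry["item_count"] += row.item_count
        entry["last_used"] = max(entry["last_used"], row.last_used)
        entry["lists"].add((row.site, row.list_name))
    # One possible prioritization: most recently used first, then by volume.
    return sorted(
        summary.items(),
        key=lambda kv: (kv[1]["last_used"], kv[1]["item_count"]),
        reverse=True,
    )


# Illustrative sample rows only; not real scan output.
sample_rows = [
    InfoPathUsage("/sites/hr", "/sites/hr", "Leave Requests", date(2023, 6, 1), 40),
    InfoPathUsage("/sites/hr", "/sites/hr/benefits", "Claims", date(2023, 7, 1), 10),
    InfoPathUsage("/sites/it", "/sites/it", "Service Requests", date(2022, 1, 1), 500),
]

for site_collection, info in summarize_by_site_collection(sample_rows):
    print(site_collection, info["last_used"], info["item_count"], len(info["lists"]))
```

Sorting by recency first reflects the idea that forms still in active use carry the most migration risk; swapping the key order would instead surface the highest-volume forms first.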

We recommend communicating to the impacted site owners/teams inside your organization now, so they are aware of the coming change.

For scenarios where InfoPath or InfoPath Forms Services are currently being used, we recommend migrating to Power Apps, Power Automate, or Forms. Because no migration tool is provided, please allow adequate time to migrate any use of InfoPath/InfoPath Forms Services in your organization ahead of this date. Additional instructions on how to migrate can be found in this blog.

Please plan appropriately, as there will not be an option to extend InfoPath Forms Services beyond the InfoPath retirement date of July 14, 2026.

MC602601 — We’re making changes to promoted results in Microsoft Search

30-60 Days

To improve the Microsoft Search experience, promoted results will no longer be supported in Microsoft Search for organization-level scoped searches and will be replaced by the Bookmarks and Q&A features. This aligns with a similar retirement in the general (commercial) clouds in October 2021.

Note: Promoted results will continue to be supported for hub- and site-level scoped searches in Microsoft Search, and the deprecation will not affect any classic search experiences or experiences based on the SharePoint Search API.

When this will happen:

We will roll out this change beginning in early August and expect to complete by mid-August.

How this will affect your organization:

Promoted results will no longer trigger in Microsoft Search for SharePoint Home or office.com when this change is implemented.

What you need to do to prepare:

Microsoft Search provides an alternative to promoted results through either bookmarks or Q&A answers.

If your organization has set up promoted results in SharePoint, you can import them into Microsoft Search and make the imported content available to your users. This is an easy way to quickly populate search results as soon as you set up Microsoft Search and make it more effective for your users. We recommend using promoted results from SharePoint as a reference to understand how to name and create relevant search results.

Microsoft Search allows a search administrator to import promoted results as Bookmarks. See Manage bookmarks for more information.

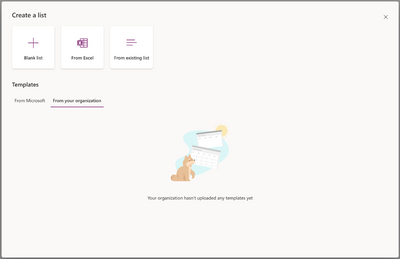

MC600726 — Microsoft Lists User Experience Update

<30 Days

Microsoft 365 Roadmap ID 124867

This update improves the overall performance of Microsoft Lists and introduces new features, including the ability to add ratings to any list, drag and/or paste images directly into a list, see who is collaborating with you in real-time, switch views by clicking tabs, and more.

When this will happen:

Targeted Release: We will begin rollout in mid-July and expect to complete rollout by late July.

Standard Release: We will begin rollout in late July and expect to complete rollout by late September.

How this will affect your organization:

Users in affected tenants will see Lists feature updates as described in this blog post. These improvements will reach all Lists, except those that have been configured with the following features:

- SharePoint Framework extensions

- PowerApps forms

- Approvals

- The Playlist template

Lists that are using these features will not receive the new user experience with this feature rollout. Future rollouts will bring support for Lists that are using these features.

Lists with the new experience will always open inside the Lists app, whether those lists are opened from Lists Home, a sharing link, a direct link, or a link in SharePoint navigation. This means SharePoint site elements like the header and left navigation will not be shown on initial load. There are two ways to show these site elements in a List:

- Users can click the “Open in Site” button in the upper right corner of the list. This causes the List to reload inside the parent SharePoint site with those site elements visible on the page.

- Admins can set a property on a site collection to make all lists in that site collection always load with the site elements intact. This property is called ListsShowHeaderAndNavigation. Here is an example PowerShell command that sets this property for a site, given its URL: Set-SPOSite -Identity https://contoso.sharepoint.com/sites/site1 -ListsShowHeaderAndNavigation $true

When this change rolls out, there is one known issue to be aware of: gallery views that have a Group By specified will be displayed in list mode, because gallery views and Group By are currently mutually exclusive. To get gallery mode back in such a view, remove the Group By. This issue will be resolved in August, after which you will no longer need this workaround to see gallery cards properly.

What you need to do to prepare:

You don’t need to do anything to prepare for this change.

The ListsShowHeaderAndNavigation setting is currently available if you wish to proactively set this setting to prepare for this rollout. Doing so will mean that lists inside those sites will always be shown with SharePoint site elements like the header and left navigation.

MC590117 — IPv6 by default for SharePoint Online – GCCH

30-60 Days

SharePoint Online will begin enabling IPv6 by default for all SharePoint tenant URL endpoints. The scope of this change includes all URLs under *.sharepoint.us, including both the tenant’s .sharepoint.us and -my.sharepoint.us hosts.

When this will happen:

Rollout will begin at the beginning of July and is expected to be complete by the beginning of August.

How this will affect your organization:

When the change is complete, SharePoint will advertise both AAAA and A DNS records, allowing clients to connect over either IPv4 or IPv6. This dual-stack configuration is expected to be transparent to users, as many Office 365 products are already configured this way.
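To see which address families a SharePoint host advertises from a given client, you can resolve it and group the results by IPv4 (A) and IPv6 (AAAA). This is a minimal sketch using only the Python standard library; it assumes the client’s local resolver is what you want to test, and `contoso` in the commented example is a placeholder tenant name.

```python
import ipaddress
import socket


def split_by_family(addresses):
    """Split address strings into IPv4 (A record) and IPv6 (AAAA record) lists.

    Addresses are normalized (e.g. IPv6 lower-cased and compressed) and
    de-duplicated before sorting.
    """
    v4, v6 = set(), set()
    for text in addresses:
        ip = ipaddress.ip_address(text)
        (v4 if ip.version == 4 else v6).add(str(ip))
    return sorted(v4), sorted(v6)


def resolve_dual_stack(hostname, port=443):
    """Resolve hostname with the local resolver and group results by family.

    Each getaddrinfo() entry is (family, type, proto, canonname, sockaddr);
    sockaddr[0] is the address string for both IPv4 and IPv6.
    """
    infos = socket.getaddrinfo(hostname, port, type=socket.SOCK_STREAM)
    return split_by_family(info[4][0] for info in infos)


# Example (requires network access; "contoso" is a placeholder tenant):
# ipv4, ipv6 = resolve_dual_stack("contoso.sharepoint.us")
# print("A:", ipv4, "AAAA:", ipv6)
```

An empty IPv6 list after the rollout completes would point to a local resolver or network configuration that is not returning AAAA records, rather than to the service itself.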

What you need to do to prepare:

This is for your information.

For additional information, see IPv6 support in Microsoft 365 services.

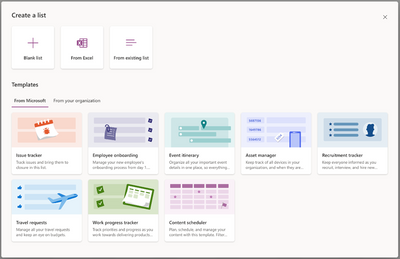

MC588324 — Microsoft Lists: Custom List Template Updates

30-60 Days

The Custom List Templates feature will give organizations the ability to create their own custom list templates with custom formatting and schema. It will also empower organizations to create repeatable solutions within the same Microsoft Lists infrastructure (including list creation in SharePoint, Teams, and within the Lists app itself).

We have made some updates to the feature we are releasing:

- We have updated the extraction cmdlet, Get-SPOSiteScriptFromList, to generate list scripts with bindings.

- In the CreateSPList action, you will see key-value pairs containing the tokens and an identity, where identity is a unique string that identifies the CreateSPList action.

- UI updates to the list creation dialog for the end-user

When this will happen:

Rollout will begin in mid-June 2023 and is expected to be complete by late August 2023.

How this will affect your organization:

End-user impact: Visual updates to the list creation dialog and the addition of a From your organization tab when creating a new list. This new tab is where your custom list templates appear alongside the ready-made templates from Microsoft.

Admin impact: Custom list templates can only be uploaded by a SharePoint administrator for Microsoft 365 by using PowerShell cmdlets. For consistency, the process of defining and uploading custom list templates is similar to the custom site templates experience and reuses some of the existing site-related PowerShell APIs.

To define and upload custom list templates, admins will use the following site template PowerShell cmdlets:

- Use the Get-SPOSiteScriptFromList cmdlet to extract the site script from any list

- Run Add-SPOSiteScript and Add-SPOListDesign to add the custom list template to your organization.

- Scope who sees the template by using Grant-SPOSiteDesignRights (Optional).

What you need to do to prepare:

If you are already using the feature in First Release, please re-extract the list script using Get-SPOSiteScriptFromList to get the latest version. Next, update the existing custom list template by using Set-SPOSiteScript.

For all other customers, the visual updates for this feature will be seen by end-users in the updated user interface (UI) when creating a list.

Note: The From your organization tab will be empty until your organization defines and publishes custom list templates.

PowerShell Cmdlets documentation for custom list templates is available here. Public facing documentation for creating custom list templates can be found here.

MC564196 — (Updated) Microsoft Stream: Playlist View in SharePoint List Webpart

<30 Days

Microsoft 365 Roadmap ID 124808

Updated June 20, 2023: We have updated the rollout timeline below. Thank you for your patience.

Users will soon be able to select a playlist to display in the List web part, in the full playlist view along with video playback.

When this will happen:

Standard Release: We will begin rolling out in early June 2023 and expect to complete rollout by late July 2023 (previously late June).

How this will affect your organization:

- Users will be able to select a playlist from the list picker while configuring the list webpart.

- Users will be able to see the full-blown playlist view as the default view for playlists.

What you need to do to prepare:

There is no action required at this time; this change will be enabled automatically.

MC540126 — (Updated) Applied Filters in Microsoft Lists

Rolled Out

Microsoft 365 Roadmap ID 117585

Updated June 7, 2023: We have updated the rollout timeline below. Thank you for your patience.

Microsoft Lists is rolling out a new way to visualize and clear the filters that are present in a view.

When this will happen:

Targeted Release: Rollout will begin in late April and is expected to be complete by early May.

Standard Release: Rollout will begin in early May and is expected to be complete by late June (previously late May).

How this will affect your organization:

As you add and adjust filters to narrow or expand what appears in a view inside a list, you will see which filters have been applied and you can remove them without having to go back into the column filter pane.

What you need to do to prepare:

You don’t have to do anything to prepare for this change.

MC525663 — (Updated) SPO Site Sharing will now use Azure B2B Invitation Manager

<30 Days

Microsoft 365 Roadmap ID 117557

Updated June 30, 2023: We have updated the rollout timeline below. Thank you for your patience.

We are updating the SharePoint Online Site Sharing backend to use Azure B2B Invitation Manager instead of the legacy SharePoint Invitation Manager.

When this will happen:

This change began rolling out in late March and is expected to complete by late July (previously late June).

How this will affect your organization:

Today, sharing only the SharePoint site goes through the original SharePoint Invitation Manager, which always creates guest users in your organization’s directory. We are now updating this flow to use the Azure B2B Invitation Manager instead. The sharing flow will remain identical to what users see today, but external recipients will now go through your organization’s B2B Invitation Manager experience.

Note: This change will only impact sharing of sites. Users who share files or folders will continue to receive the same experience they have today.

What you need to do to prepare:

You may want to review your Azure B2B Invitation Manager policies.

OneDrive for Business

MC597037 — (Updated) New UI to view OneDrive files excluded from Sync

<30 Days

Microsoft 365 Roadmap ID 124868

Updated June 27, 2023: We have updated the rollout timeline below. Thank you for your patience.

We are introducing a UI in OneDrive Sync Advanced Settings that lets users view the admin configuration of files excluded (ignored) from sync.

When this will happen:

Standard Release: We have started rolling out and expect to complete by mid-July (previously mid-June).

How this will affect your organization:

Commercial tenants will see no other changes to their experience; commercial users will now be able to see the sync-excluded files in their OneDrive settings.

What you need to do to prepare:

No prep is needed.

MC559933 — (Updated) OneDrive: Restore files to original location when turning off folder backup

<30 Days

Updated June 27, 2023: We have updated the rollout timeline below. Thank you for your patience.


This feature provides new functionality for users who turn off folder backup (also known as “PC folder backup” and “known folder move”). When users turn off folder backup, they will have the option to restore the files to their original location. Note: When a folder contains files stored only in the cloud, those files will not be moved; they will remain in the cloud.

When this will happen:

Standard Release: This is currently rolling out and is expected to complete in mid-July (previously late June).

Note: Some users may see this feature before others in your organization.

What you need to do to prepare:

There is no action needed to prepare for this change. You may want to notify your users about this change and update any relevant documentation as appropriate.

MC545906 — (Updated) SharedWith and SharedWithDetails column in OneDrive and SharePoint will no longer be updated

TBD

Updated June 30, 2023: We are not proceeding with this change at this time. We will communicate via Message center when we are ready to proceed. Thank you for your patience.

We will be retiring the SharedWith and SharedWithDetails columns from OneDrive and SharePoint starting in early June. These columns have been hidden by default in OneDrive and SharePoint for several years and were replaced by the Manage Access experience and the “Shared With” section of the Share dialog.

When this will happen:

We will communicate via Message center when we are ready to proceed.

How this will affect your organization:

As described in this documentation, the SharedWith and SharedWithDetails columns do not accurately reflect the permissions and people who have access to an item. As such, these columns have been hidden by default in OneDrive and SharePoint for 3+ years. With this change, we will no longer update the SharedWith fields during sharing operations. The data within these columns will be considered customer content and will not be updated or changed by Microsoft. In a future update, we will stop provisioning these columns entirely.

Most users already use Manage Access and the Sharing dialog to manage and understand who has access to their content, and these experiences are not affected by this change. We are continuing to develop and improve the Manage Access experience to make permissions easy for users to understand.

What you need to do to prepare:

If you use the SharedWith or SharedWithDetails columns, we recommend using the Manage Access experience to understand which users have permission to an item.

Please click Additional Information to learn more.

See who a file is shared with in OneDrive or SharePoint

MC529434 — (Updated) Microsoft Syntex: Split PDF Pages into new PDF files with the Extract action in OneDrive for iOS

Rolled Out

Microsoft 365 Roadmap ID 117548

Updated June 7, 2023: We have updated the rollout timeline below. Thank you for your patience.

Users of Microsoft Syntex will soon be able to split a PDF file into multiple PDFs with the Extract file action in OneDrive for iOS. The Extract file action lets you quickly split and extract multiple pages of a PDF into a new PDF file stored in SharePoint or OneDrive.

When this will happen:

We will begin rolling out in late April and expect to complete rollout by mid-June (previously late May).

How this will affect your organization:

Syntex users won’t have to worry about sharing unnecessary information from a large PDF file. Splitting a large PDF file into multiple, smaller files lets users tailor the information they want to view or share with others.

To split a PDF file in Syntex using the Extract action:

- Open the PDF file you want to split in OneDrive for iOS.

- At the bottom of the screen, tap the horizontal bar or drag the bottom menu up to expand the new menu.

- Under File actions, select Extract.

- Choose the page(s) you want to separate and tap Extract.

- Select a location to save the extracted PDF.

- After the new PDF is done processing, a Your PDF is ready banner will appear at the bottom of the screen. Tap Open from the banner to access your new file.

Note: Some users may see this feature before others within your organization. PDF extraction is available only for Microsoft Syntex licensed users; users without a Syntex license will not see the feature. We will soon bring the capability to other clients, including OneDrive, SharePoint, and Syntex in the browser.

What you need to do to prepare:

There is nothing you need to do to prepare for this feature. You may want to notify your users about this new capability and update your training and documentation as appropriate.

Power Platform

MC611565 — The Modern Security Role Editor User Interface is now available

Rolled Out

We are pleased to announce that the Modern Security Role Editor User Interface is now available for the Power Platform admin center (PPAC). The rollout has started and will be fully completed by June 30, 2023.

How will this affect me?

The new Modern Security Role Editor is only available to PPAC administrators and will include the same functionality as the classic interface that was used to support Dynamics 365 Apps. In addition to carrying over the functionality of the classic interface, the Modern User Interface provides an additional set of rich features that will enhance an Administrator’s governance experience in PPAC.

Where can I learn more?

For more information on the Modern Security Role Editor User Interface and its capabilities, click here.

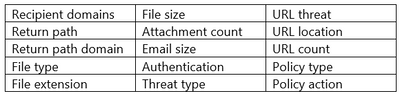

MC589752 — Action Required: Allow list Azure Front Door service tags

Rolled Out

We are updating the recommendation for controlling access to Power Platform URLs and IP address ranges. Our current guidance is to configure your allow-lists using the “AzureCloud” service tag in the Azure IP Ranges and Service Tags JSON files. If you have created a customized implementation of allow-list entries, you will need to update your allow-list to avoid disruption of service.

New Service Tag: AzureFrontDoor.Frontend

When this will happen:

We will begin using the IP address ranges included in the new service tag starting June 16, 2023, and will roll them out globally according to our safe deployment process.

This change is applicable to all customers in the Public and Government cloud offerings.

What action do I need to take?

Please update your allow-lists to include either the AzureCloud service tag, or add the AzureFrontDoor.Frontend service tag to existing rule sets to prevent potential disruption of service.
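If you maintain a customized allow-list, one way to refresh it is to pull the AzureFrontDoor.Frontend prefixes out of the downloaded Service Tags JSON and check addresses against them. This minimal sketch uses the Python standard library and assumes the file layout `{"values": [{"name": ..., "properties": {"addressPrefixes": [...]}}]}`; the `EXAMPLE_TAGS` data is an illustrative stand-in using reserved documentation ranges, not real Azure Front Door addresses.

```python
import ipaddress
import json


def load_service_tags(path):
    """Load a downloaded Azure IP Ranges and Service Tags JSON file."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def networks_for_tag(service_tags, tag_name):
    """Return one service tag's address prefixes as ip_network objects.

    Assumes the layout
    {"values": [{"name": ..., "properties": {"addressPrefixes": [...]}}]}.
    """
    for entry in service_tags.get("values", []):
        if entry.get("name") == tag_name:
            prefixes = entry.get("properties", {}).get("addressPrefixes", [])
            return [ipaddress.ip_network(p) for p in prefixes]
    raise KeyError(f"service tag {tag_name!r} not found")


def is_allowed(ip, networks):
    """True if ip falls inside any allowed network.

    An IPv4 address tested against an IPv6 network (or vice versa) is
    simply not contained, so mixed lists are safe.
    """
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in networks)


# Illustrative stand-in for the real file; these are reserved documentation
# ranges, NOT actual Azure Front Door addresses.
EXAMPLE_TAGS = {
    "values": [
        {
            "name": "AzureFrontDoor.Frontend",
            "properties": {"addressPrefixes": ["192.0.2.0/24", "2001:db8::/32"]},
        }
    ]
}

frontdoor = networks_for_tag(EXAMPLE_TAGS, "AzureFrontDoor.Frontend")
print(is_allowed("192.0.2.10", frontdoor))    # True
print(is_allowed("198.51.100.7", frontdoor))  # False
```

In practice you would call `load_service_tags` on the current download and regenerate your firewall rules from the returned prefixes, since the published ranges change over time.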

If you require further assistance, please contact Microsoft Support.

MC584218 — Manage email notifications to admins

Rolled Out

Direct email notifications are sent to users who are assigned a pre-configured administrator role in an impacted Dynamics 365 or Power Platform environment, or Microsoft Dynamics 365 Lifecycle Services (LCS) project or environment.

To ensure that administrators receive email notifications in alignment with our existing notification policy, make sure that the correct roles are assigned or that additional email addresses have been added to the “Additional Notification” list via PowerShell.

What actions do I need to take?

Please review the “Manage email notifications to admins” article and ensure your admins and additional users are assigned the appropriate roles.

If you want additional recipients who are not System administrators in Dataverse or assigned administrator roles in LCS to receive direct email communications, use the PowerShell cmdlets to add those email addresses to the “Additional Notification” field of the environment.

Additional resources:

Service incident and change communications are primarily posted to the Microsoft 365 Service health dashboard and the Message center. More information about our service communications can be found here: Policies and Communications for Power Platform and Dynamics 365 Services.

MC579277 — AI Builder – “Create text with GPT” model (preview) is now available

Rolled Out

We are excited to introduce a new AI model in AI Builder: Create text with GPT. This model leverages generative AI, powered by Azure OpenAI Service, to help users create new text content, summarize existing text, extract information from text, and more. This model preview is now available in all environments hosted in the United States.

With this model, users:

- Can use prompt engineering to guide the model to perform various tasks, such as summarizing, creating text, classifying, translating, and more.

- Can embed the model in Power Automate flows to automate workflows with generative AI.

- Will be able to use PowerFx to embed and invoke the model within Power Apps.

Like all new AI Builder models, this model is automatically available in all environments within the tenant.

How do I disable “Create text with GPT”?

If you are still evaluating generative AI and want to disable this model, you can do so at the environment or tenant level through the Power Platform admin center.

To disable the model at the environment level:

- Sign in to the Power Platform admin center.

- Click on Environments in the left navigation pane.

- Select the desired environment and click on Settings.

- Select Product and Features, find the Copilot setting, and click the toggle to switch off the generative AI copilot features.

To disable the model at the tenant level:

- Sign in to the Power Platform admin center.

- Click on Settings in the left navigation pane.

- Select the Copilot setting, and in the right pane, click the toggle to switch off the generative AI copilot features.

For more information about this model and how to use it, please visit the Azure OpenAI in AI Builder documentation.

We hope this new capability enhances user experience, and we look forward to hearing feedback.

MC576046 — Power Automate – Immediate change to universal search

Rolled Out

Effective immediately, Microsoft Learn and Documentation search category results are no longer shown by default in the Power Automate universal search bar.

How does this affect me?

Power Automate users who are accustomed to seeing search category results in Microsoft Learn and Documentation will no longer see those results if PowerPlatform.Search.disableDocsSearch is set to True.

What action do I need to take?

If you want to enable Microsoft Learn and Documentation search category results across the Power Platform, you will need to set PowerPlatform.Search.disableDocsSearch to False. You can find more information in this article: Manage search providers.

Please communicate as appropriate with your Power Automate users. If you have questions, please contact Microsoft Support.

Microsoft Viva

MC611679 — Microsoft Viva: Topic card expanded view shows more details about a topic

<30 Days

Microsoft 365 Roadmap ID 118590

We are rolling out the expanded view of the topic card for Viva Topics tenants that brings more information on the topic to users. Users can click on the topic name to view the expanded view of the card and learn more about the topic.

When this will happen:

Standard Release: We will begin rolling out in early July and expect to complete rollout by early July 2023.

How this will affect your organization:

No impact. This is a default feature that will be available to all Viva Topics licensed users.

What you need to do to prepare:

There is no action needed to prepare for this change.

MC590126 — Viva Insights Manager, Leader, and Advanced Insights Available for GCC

<30 Days

Microsoft 365 Roadmap ID 88843

Premium Viva Insights capabilities will soon be available for Government Community Cloud environments.

When this will happen:

Rollout will begin in early July and is expected to be complete by late July.

How this will affect your organization:

In addition to the personal insights and experiences available with a Microsoft 365 subscription, users with a Viva Insights license will also have access to premium personal features through the Viva Insights app in Microsoft Teams and on the web. Premium personal features, which include additional time management features, shared plans, schedule send in Teams, and more, further enable individuals to build better work habits and improve their effectiveness at work. More details here: Personal insights in Viva Insights.

Manager and leader insights, available through the Viva Insights app in Teams and on the web, provide visibility into work patterns that can lead to burnout and erode productivity. The metrics presented to managers and leaders have built-in safeguards to protect the privacy of individuals, such as de-identification, aggregation, and differential privacy. More details here: Team and Organization insights.

The advanced insights app is used for deep-dive analysis. Viva Insights analysts can use flexible, out-of-the-box report templates as well as advanced tools to generate custom insights tailored to specific business challenges. More details here: Introduction to advanced insights.

The following controls will be available to IT admins to configure the rollout:

- Manager and leader insights and premium personal insights require a Viva Insights license for every user. Admins can assign the Microsoft Viva Insights service plan to individual users or to specific groups in your organization to enable these enhanced experiences in the Viva Insights app in Teams and on the web.

- For advanced insights, every individual included in the analyzed population must be licensed and assigned the Microsoft Viva Insights service plan. Admins can set up advanced insights using these instructions: Microsoft Viva Advanced Insights setup.

What you need to do to prepare:

Review and assess the impact for your organization. Consider updating documentation as appropriate.

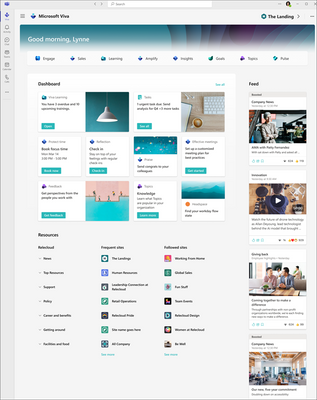

MC533820 — (Updated) New Home Experience for Viva Connections desktop for GCC

>60 Days

Updated June 20, 2023: We have updated the rollout timeline below. Thank you for your patience.

A new desktop experience is being released for Viva Connections that’s easier and faster to set up and optimizes content to deliver a modern employee experience. The new Viva Connections desktop design serves as a new home experience option that centers essential job tasks, personalized content, and easy access to other Viva experiences, and it better aligns with the mobile experience. The new home experience for Viva Connections desktop will begin rolling out to GCC tenants.

When this will happen:

Rollout to GCC tenants will begin in late September and is expected to be complete by late October.

How this will affect your organization:

For customers who are currently using Viva Connections, the new home experience will automatically update in Microsoft Teams. Customers with existing home sites can choose to keep the existing desktop experience using a new PowerShell command that will become available to all customers by mid-November. For more details, please visit the customization guidance documentation.

If you’ve already set up Viva Connections, existing content will pre-populate into the new home experience.

If you haven’t set up Viva Connections yet, the default experience includes starter cards on the Dashboard, but otherwise does not impact the current mobile experience.

What you need to do to prepare:

With the new desktop experience, admins and editors will be able to edit content and manage permissions from Microsoft Teams. To prepare for this change, admins and editors for Viva Connections can learn more about how to customize the new home experience and how permissions work by visiting this documentation.

End users in your organization will use the same entry point in Teams as the previous desktop experience. When they view the new experience for the first time, visual prompts will walk them through the main functions of the design and how to interact with different elements.

Microsoft Teams

MC611685 — Click Through Collaborative Annotations Layer in Teams Meetings

30-60 Days

Microsoft 365 Roadmap ID 127900

We are rolling out an enhancement to the current Collaborative Annotations experience whereby screen sharers can click through the annotations layer and interact with underlying content without stopping the existing annotation session. After a sharer initiates annotations from their presenter toolbar, sharers will be able to interact with their background to edit their content by default.

When this will happen:

Standard Release: We will begin rolling out late July and expect to complete by mid-August.

GCC-High and DoD: We will begin rolling out early August and expect to complete by mid-August.

How this will affect your organization:

Click through is available on desktop (Windows and Mac) and web. When users share their screen in Teams meetings and select Start annotation in the presenter toolbar, they will see a new button, Interact with background, in the Microsoft Whiteboard toolset, which allows them to interact with underlying content without stopping the existing annotation session.

What you need to do to prepare:

No preparation is needed. You may want to update your training materials indicating that the feature is now supported.

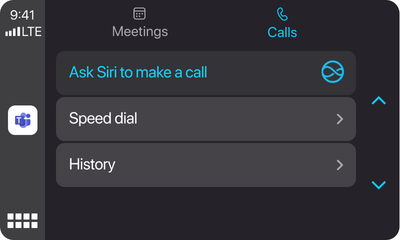

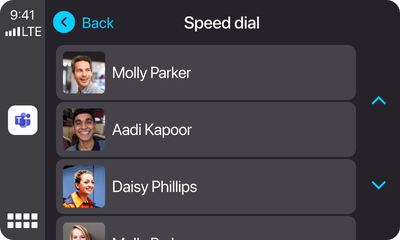

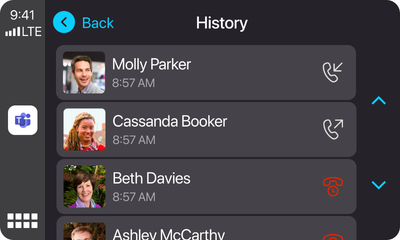

MC611680 — Make calls through speed dial and call history on Apple CarPlay

>60 Days

Microsoft 365 Roadmap ID 125556

Users can now easily call their speed dial contacts and access their recent calls from Teams on Apple CarPlay after connecting their iPhone to a vehicle that supports Apple CarPlay.

When this will happen:

Production and GCC: We will begin rollout in mid-July and expect to complete rollout by early August.

GCC-High and DoD: We will begin rollout in early September and expect to complete by early October.

How this will affect your organization:

Users in your organization can now call their speed dial contacts and access their recent calls in Teams on Apple CarPlay using their iPhone.

After tapping the Teams icon and navigating to the Calls tab (next to the Meetings tab), users can choose to access either their speed dial contacts or their call history.

What you need to do to prepare:

No specific action is required as this will be available to all Teams users and not gated by an admin policy.

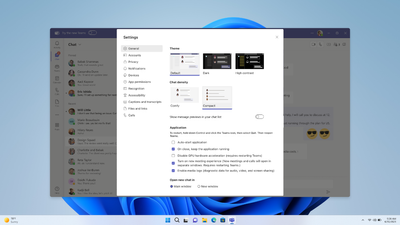

MC609889 — Microsoft Teams Compact Chat List

>60 Days

Microsoft 365 Roadmap ID: 126428

Compact chat list is a feature addition that is coming to compact mode. Users in compact mode will now have hidden message previews in their chat list. This new design will enable users to keep messages more private, view more chats in their chat list and navigate the chat list more easily. This feature addition to the existing compact mode will be rolling out across Microsoft Teams Desktop and Web and will provide a condensed view of the chat list for compact mode users.

When this will happen:

Standard Release: We will begin rolling out to commercial tenants in early June and expect to complete rollout by late June.

GCC: We will begin rolling out in July.

GCCH: We will begin rolling out in August.

DoD: We will begin rolling out in September.

This feature addition will be available in the new Teams desktop experience later this calendar year.

How this will affect your organization:

Users can access this feature addition by switching to compact mode. To do so, users must navigate to Settings and go to the “Chat density” section under the “General” tab. From there, users can select “Compact”.

Upon exiting Settings, users will see a more condensed view of messages in their message pane (existing feature) as well as a compacted chat list (feature addition). The compacted chat list includes hidden message previews and allows the user to see more chats on their screen at a given time.

What you need to do to prepare:

You may want to notify your users about this new design in compact mode. Users can switch to compact mode in classic Teams (T1) under Settings > General.

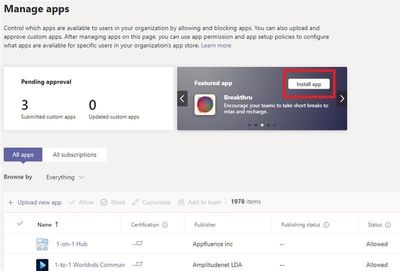

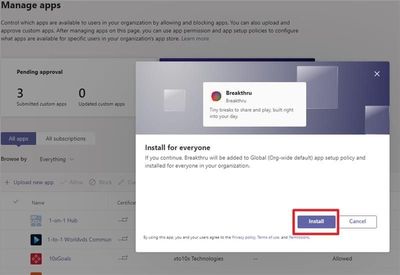

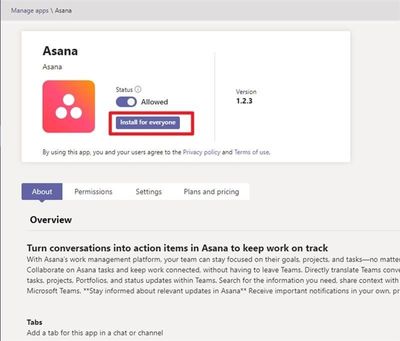

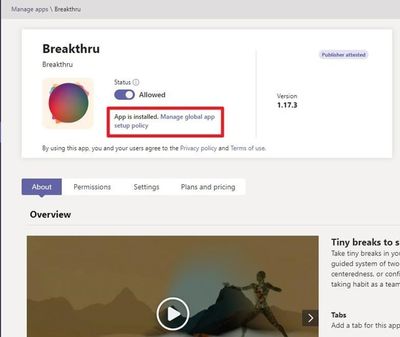

MC604885 — Teams admin center: Simplified App Pre-Install Experience

<30 Days

We are excited to announce a new feature designed to improve the app pre-installation experience in the Teams Admin Center.

When this will happen:

This feature will begin rolling out at the end of June 2023 and is expected to be fully complete by early July 2023.

How this will affect your organization:

Pre-installed apps can enhance the productivity of your users in their daily work. With this new feature, you’ll be able to install useful apps for all your end users with just a few simple clicks.

For Admins:

- Pre-install an app from the banner

- Pre-install an app from the app details page

- Manage a pre-installed app

For users: once an app is pre-installed by admins, it will appear in the app flyout of the Teams client.

What you need to do to prepare:

At this time, no action is required from admins.

MC602604 — Microsoft Teams: New App Sharing Experience

Rolled Out

Microsoft 365 Roadmap ID 122536

We are introducing a new app sharing feature in Microsoft Teams, enabling users to share apps directly from the app details dialog to a chat, group chat, or channel.

When this will happen:

Rollout has begun and is expected to be complete by late June.

How this will affect your organization:

This new feature streamlines the app sharing process, eliminating the need to copy and paste app links across different locations on Teams. Users will be able to share apps directly from the app details dialog, enhancing collaboration and communication within the organization.

What you need to do to prepare:

There is no action required from you at this time as this feature will be enabled automatically. You may want to notify your users of this update.

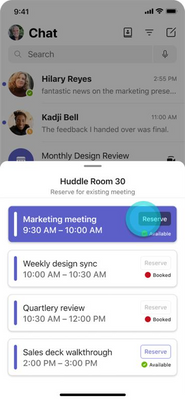

MC602596 — Microsoft Teams Panels Update

>60 Days

Microsoft 365 Roadmap ID 129366

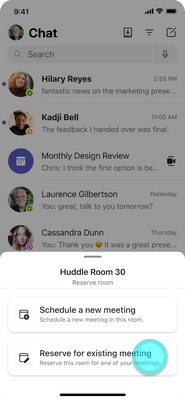

With this update, Teams Panels will support reserving a room using a QR code. This feature lets Microsoft Teams users book a room for meetings now, in the future, or add the room to an existing meeting by scanning a QR code on the scheduling panel and using the Teams app on their Android or iOS mobile phones.

All Teams Panels will have a QR code shown in the top left of the home screen. Panels users can either schedule a new meeting with the room pre-populated for them or easily see the room’s availability for their meetings and book the room with one click.

When this will happen:

Rollout is expected to begin in early August and is expected to be complete by early September.

How this will affect your organization:

In order to access this feature, be sure to have the most up-to-date release of Teams Panels and check that your users have access to the latest version of the Teams app on their mobile phones.

Please note the following:

- External tenants are currently not able to use this feature.

- This feature will be on by default.

You can disable this feature on Teams Panels under Settings > Device settings > Teams Admin Settings > Meetings.

To reserve the room:

- Users need to scan the QR code using their mobile phone.

- In the Teams app on their mobile phone, users should select if they’d like to ‘Schedule a new meeting’ or ‘Reserve for existing meeting’.

- If the user schedules a new meeting, the room will be automatically added as the meeting location. Users can then fill out the other meeting details.

- If the user selects to reserve the room for an existing meeting that is happening between now and midnight the next day, they will be able to see whether the room is available or booked at those times and can reserve with one click.

What you need to do to prepare:

To prepare for this feature, we strongly recommend communicating it to your users and sharing instructions if needed.

The recommended scanner for this feature is the phone’s built-in system/OS scanner. However, on Android, scanning may not work if your users have both work and personal profiles on their phones. In this case, the system/OS scanner needs to be added to the work profile. To do that, follow the steps below:

- In Intune, go to Apps > Android and select Add.

- Select Android Enterprise system app.

- Enter the type of Android phone and Google, and paste the OS camera package name.

- Assign the app to a user or group of users.

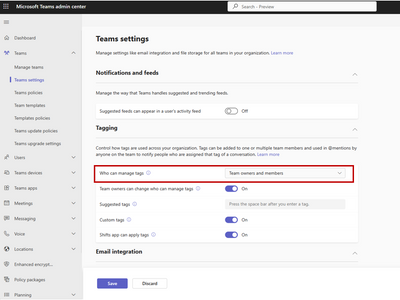

MC591878 — Introducing “Microsoft Default” setting to Teams Tag Management Settings

<30 Days

Microsoft 365 Roadmap ID 88318

We are introducing a Microsoft Default setting to Teams Tag Management settings in Teams Admin Center.

- If you have already explicitly made a selection in your Tag Management settings, your tenant will not be affected by this change.

- If you have not changed your Tag Management settings, Microsoft Default settings will apply to your tenant. Microsoft Default settings currently map to the “Team Owners” value but are subject to change in the future.

- As an admin, set the tag management setting to reflect your tenant-level preference. Select “Team Owners” to limit tag management to team owners, or select “Team owners and members” to allow all team members to create and edit tags.

In Teams client, users will still see the same options as they do today: “Team Owners” and “Team owners and members”.

When this will happen:

- Preview: We will begin rolling out late June 2023 and expect to complete by mid-July 2023.

- Targeted Release: We will begin rolling out early July 2023 and expect to complete by mid-July 2023.

- Standard Release: We will begin rolling out early July 2023 and expect to complete by mid-July 2023.

How this will affect your organization:

If you are an EDU tenant, non-owner students cannot manage tags as long as the tenant-level setting is Team Owners or Microsoft Default. We will continue to respect explicit tenant settings over Microsoft Default settings. Select your preferred tag management setting for your tenant to ensure you are not affected by future changes.
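The resolution rules in this message can be summarized in a few lines. The following Python sketch models them; the enum and function names are hypothetical, and the Microsoft Default mapping reflects only what this message states today (it maps to Team Owners and may change).

```python
from __future__ import annotations

from enum import Enum
from typing import Optional


class TagSetting(Enum):
    TEAM_OWNERS = "Team Owners"
    TEAM_OWNERS_AND_MEMBERS = "Team owners and members"
    MICROSOFT_DEFAULT = "Microsoft Default"


# Per this announcement, Microsoft Default currently maps to "Team Owners";
# Microsoft notes the mapping is subject to change.
MICROSOFT_DEFAULT_MAPS_TO = TagSetting.TEAM_OWNERS


def effective_tag_setting(explicit: Optional[TagSetting]) -> TagSetting:
    """Resolve the tenant's effective tag management setting.

    An explicit admin choice wins; if none was made (or Microsoft Default
    is selected), the current Microsoft Default mapping applies.
    """
    if explicit is None or explicit is TagSetting.MICROSOFT_DEFAULT:
        return MICROSOFT_DEFAULT_MAPS_TO
    return explicit


def can_manage_tags(is_team_owner: bool, explicit: Optional[TagSetting]) -> bool:
    # Owners can manage tags under either setting; non-owners (including
    # EDU students) only when the effective setting includes members.
    if is_team_owner:
        return True
    return effective_tag_setting(explicit) is TagSetting.TEAM_OWNERS_AND_MEMBERS
```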

What you need to do to prepare:

As a tenant admin, change your tag management setting to your preferred value:

- Sign in to the Microsoft Teams admin center at https://admin.teams.microsoft.com.

- In the left navigation pane, under the Teams section, select Teams settings.

- Under the Tagging section, select your preference for “Who can manage tags”.

MC584833 — Group chat message copy links are now available!

30-60 Days

Microsoft 365 Roadmap ID 122522

This feature allows group chat members to create and share links to specific messages within the chat, with the intention of improving communication and organization of information in group chats.

When this will happen:

Targeted Release: Rollout will start in early June and is expected to complete by early July.

Standard Release: We will begin rolling out early July and expect to complete by end of August.

How this will affect your organization:

Links work only for members already in the chat.

What you need to do to prepare:

There is no action needed to prepare for this change. You may want to notify your users about this change and update any relevant documentation as appropriate.

MC579613 — (Updated) Net Promoter Score survey coming to Teams Rooms on Windows

30-60 Days

Microsoft 365 Roadmap ID 121117

Updated June 30, 2023: We have updated the content below with additional information. Thank you for your patience.

In a continuous effort to gather feedback from our Teams Rooms on Windows customers, a new Net Promoter Score (NPS) survey will appear at the end of some meetings in your conference rooms starting at the end of June.

When this will happen:

Standard Release: We will begin rolling out in late June and expect to complete by early August.

How this will affect your organization:

After this release is completed, when a user ends a meeting in a conference room, they will sometimes be presented with a survey on the room console asking a few questions about their experience in the room.

Answering the survey is optional, and the user can answer none, one, two, or all three of its questions.

The NPS for Teams Rooms is governed by the same rules as any other NPS survey from M365 in your organization:

- No PII (Personally Identifiable Information), EUPI (End User Pseudonymized Information), or OII (Organizational Identifiable Information) is gathered as part of the survey.

- The information collected corresponds exclusively to the choices selected by the user.

- The survey results are solely for Microsoft’s consumption and can be shared with the IT admin if needed via a support ticket.

Rooms are selected at random. In the future, we will add a control for IT admins to turn the survey on or off.

What you need to do to prepare:

If desired, inform users they may see a short survey at the end of some meetings and encourage them to give feedback.

The survey will be presented once every 5 days and for only one meeting in that day. If the survey is not answered, it will disappear from the console after a few seconds.
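The display cadence above can be read as a simple per-room throttle. This Python sketch models one interpretation (a room becomes eligible again once at least 5 days have passed since the survey was last shown); the function name and the per-room `last_shown` record are hypothetical, and the random room selection described earlier is not modeled.

```python
from __future__ import annotations

from datetime import date, timedelta
from typing import Optional

# Stated cadence: the survey appears at most once every 5 days per room.
SURVEY_INTERVAL = timedelta(days=5)


def survey_eligible(today: date, last_shown: Optional[date]) -> bool:
    """Return True if this room may show the NPS survey after a meeting today.

    `last_shown` is the date the survey was last displayed in this room,
    or None if it has never been shown (hypothetical bookkeeping).
    """
    if last_shown is None:
        return True
    # Covers "only one meeting in that day": if it was shown today, the
    # difference is 0 days, so no later meeting that day qualifies.
    return today - last_shown >= SURVEY_INTERVAL
```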

If you want to disable the survey, you can follow this guide Manage feedback policies in Microsoft Teams – Microsoft Teams | Microsoft Learn.

MC579612 — Admin Policy for Collaborative Annotations

Rolled Out

To address privacy and security requirements, we have implemented a user-level IT admin policy that lets admins choose whether some or all users in the organization can use Collaborative Annotations. Currently, Annotations can be turned on or off through the -IsWBFluidEnabled parameter of the Set-SPOTenant cmdlet, which also affects Whiteboard.

When this will happen:

We will begin rolling out in mid-June and expect to complete rollout by late June.

How this will affect your organization:

IT admins will be able to turn Collaborative Annotations on or off from the Teams admin center under Meetings > Meeting policies.

What you need to do to prepare:

There is nothing you need to do to prepare.

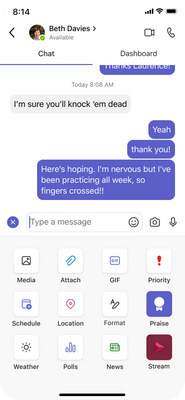

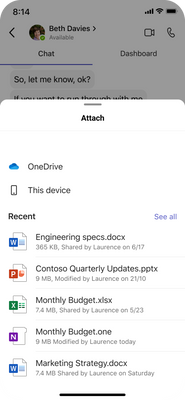

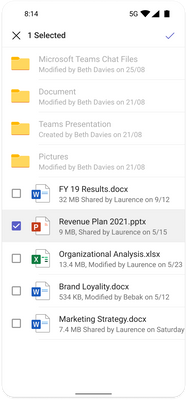

MC579610 — (Updated) Microsoft Teams: Attach Cloud Files in Chat and Channel from Teams Mobile

30-60 Days

Microsoft 365 Roadmap IDs 98321 and 98327

Updated June 30, 2023: We have updated the rollout timeline below. Thank you for your patience.

We are introducing the capability to attach files from OneDrive in Teams mobile chats and channels on iOS and Android. Additionally, users will be able to view their Recent files and attach their most-used files directly from the Recent section.

When this will happen:

We will begin rolling out in late June 2023 and expect rollout to be complete by mid-August (previously mid-July).

How this will affect your organization:

To attach files, tap the ‘+’ icon while composing a message in any chat or channel, and then tap the paperclip icon.

What you need to do to prepare:

There is no action required from you at this time. You may want to notify your users of this update.

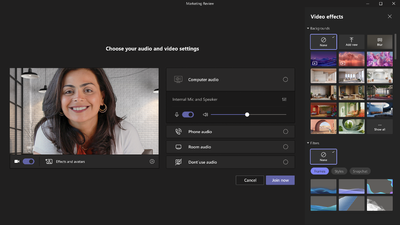

MC578280 — (Updated) Animated Backgrounds in Teams Meetings

>60 Days

Microsoft 365 Roadmap ID 122513

The animated background feature in Teams Meetings allows users to replace their existing background with a dynamic animation for a more immersive virtual environment. It offers various options to enhance meeting experience with creativity and personalization according to users’ preferences. Currently, only pre-defined backgrounds from Microsoft are supported. Animated backgrounds are identified by the small video icon in the bottom-left corner of the preview picture.

When this will happen:

Targeted Release: We will begin rolling out in mid-June and expect to complete rollout by late June.

Public Preview: We will begin rolling out in mid-June and expect to complete rollout by late June.

Standard Release: We will begin rolling out in early July and expect to complete rollout by late July.

GCC-High and DoD: We will begin rolling out in late September and expect to complete rollout by late October.

How this will affect your organization:

Before a meeting starts, users can go to the pre-join screen > Effects and Avatars > Video effects and select a new animated background.

Users can also change their animated background during a meeting by going to More in the meeting toolbar > Effects and Avatars and selecting an animated background.

- Animated backgrounds are not available on low-end devices; they require at least 8 GB of RAM and a CPU with at least 4 logical processors.

- Only pre-defined backgrounds from Microsoft are currently supported.

- Applying additional video filters might slow down the background animation when the machine is under a heavy workload.
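If you want to anticipate which devices will offer animated backgrounds, the stated minimums translate into a simple check. This Python sketch is a hypothetical inventory helper, not how Teams itself decides. The Python standard library has no portable way to read installed RAM, so the caller supplies it, while the logical processor count defaults to `os.cpu_count()`.

```python
from __future__ import annotations

import os
from typing import Optional

# Minimums stated for animated backgrounds.
MIN_RAM_GB = 8
MIN_LOGICAL_PROCESSORS = 4


def supports_animated_backgrounds(ram_gb: float, logical_processors: Optional[int] = None) -> bool:
    """Check a device against the stated minimums (8 GB RAM, 4 logical processors).

    `logical_processors` defaults to os.cpu_count(), which reports logical
    CPUs on the current machine and may return None if it cannot tell.
    """
    if logical_processors is None:
        logical_processors = os.cpu_count() or 0
    return ram_gb >= MIN_RAM_GB and logical_processors >= MIN_LOGICAL_PROCESSORS
```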

What you need to do to prepare:

No preparation is needed. You may want to update your training materials to indicate that animated backgrounds are now supported in Teams meetings.

MC567504 — (Updated) Updated companion mode for Android meetings

<30 Days

Microsoft 365 Roadmap ID 109606

Updated June 20, 2023: We have updated the rollout timeline below. Thank you for your patience.

This feature allows you to add your Android device to an ongoing meeting, making it easy to chat, react, share, and more.

When this will happen:

Standard Release: We will begin rolling out early June 2023 and expect to complete by early July 2023 (previously mid-June).

How this will affect your organization:

The feature introduces Companion Mode for Android users.

What you need to do to prepare:

Nothing is needed to prepare. However, feel free to test this feature once it has rolled out.

MC565156 — (Updated) Teams Room Windows: Simplified Device Registration Process for Microsoft Teams Rooms Pro Management Portal

Rolled Out

Updated June 9, 2023: Pro portal enrollment works only for devices in the commercial cloud, not GCC-H. For those devices, once the Pro Management agent is successfully installed and connected, devices with the Teams Rooms Pro license will be automatically enrolled and visible on the Pro Management portal.

We are pleased to announce an enhancement to the device registration process for Windows-based Teams Rooms devices on the Pro Management portal, aimed at making it more convenient for IT admins.

With the upcoming Teams Rooms app update (v. 4.17) scheduled for release by the end of June 2023, we have streamlined the registration process for Windows-based Teams Rooms devices. IT admins will no longer need to manually download and install the Pro Management agent on each device.

Instead, the Pro Management agent will be automatically downloaded and installed onto the Windows-based Teams Rooms devices as part of the Teams Rooms app update. This improvement simplifies the agent deployment and device enrollment tasks for IT admins, reducing their workload.

Once the Pro Management agent is successfully installed and connected, devices with the Teams Rooms Pro license will be automatically enrolled and visible on the Pro Management portal. This eliminates the need for any additional user action, making the process more efficient.

We understand the importance of a seamless and hassle-free device registration experience for IT admins. This update aims to improve the overall management process and reduce friction when connecting Windows-based Teams Rooms devices to the Pro Management portal.

Stay tuned for the upcoming Teams Rooms app update (v. 4.17) to take advantage of the simplified device registration process and enhance your device management capabilities with the Pro Management service.

How this will affect your organization:

Teams Rooms on Windows (MTRW) devices with a Teams Rooms Pro license will automatically enroll in the Pro Management portal, which provides monitoring and remote management capabilities for Microsoft Teams Rooms.

What you need to do to prepare:

No action needed.

MC564198 — (Updated) Microsoft Teams: Select Together Mode for everyone on Microsoft Teams Rooms on Windows

<30 Days

Microsoft 365 Roadmap ID 126105

Updated June 30, 2023: Breakout room support (95680) will not be rolling out at this time, and we will communicate via Message center when we are ready to proceed. Thank you for your patience.

The latest version of Teams Rooms on Windows app 4.17 update includes new in-meeting experiences including:

- Choose your Together Mode scene and select it for everyone.

When this will happen:

We will begin rolling out in late June (previously mid-June) and expect to complete rollout by late July.

How this will affect your organization:

Choose your Together Mode scene and select it for everyone:

- Teams Rooms on Windows devices can now choose a Together Mode scene that fits your meeting type, using the view switcher on the console.

- When your Teams Rooms on Windows is an organizer or presenter, you can have all of the participants see the same scene by clicking the ‘Select for everyone’ checkbox and ‘Apply’ button.

What you need to do to prepare:

Kindly notify your users about this new experience and update your training and documentation as appropriate.

MC556772 — (Updated) Microsoft Teams: A new default home screen and enhanced custom background feature for Teams Rooms on Windows

<30 Days

Microsoft 365 Roadmap ID 124774

Updated June 27, 2023: We have updated the content below with additional information. Thank you for your patience.

The latest version of Teams Rooms on Windows app 4.17 update includes new and improved home screen features, including:

- New default home screen experience with modern UI update

- Enhanced custom background feature for Teams Rooms Pro users.

When this will happen:

Rollout begins in late June and is expected to be completed by late July.

How this affects your organization:

New default home screen

The refreshed home screen with modern UI/UX updates for Teams Rooms on Windows was released as ‘opt-in’ in 4.16 app version to allow IT Admins to prepare for changes (M365 Public Roadmap #117952).

In June with the 4.17 app release, the modernized home screen becomes the default experience. Please see this documentation for important checks and changes.

Note that the calendar on the new Teams Rooms UI aligns with how Teams desktop, web, and mobile communicate with Exchange, none of which support purely on-premises Exchange deployments. Only on-premises Exchange servers with Hybrid Configuration and AutoDiscover v2 published externally are supported in the 4.17 app and later versions. For a complete list of requirements, see Microsoft Teams and on-premises mailboxes and How Exchange and Microsoft Teams interact.
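To triage which rooms may be affected, the two on-premises conditions named above can be expressed as a pre-check. This Python sketch is hypothetical and covers only those conditions, not the full requirements in the linked articles; the `MailboxSetup` fields are illustrative names, and treating Exchange Online mailboxes as supported is an assumption based on how Teams clients work with Exchange.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class MailboxSetup:
    on_premises: bool                        # mailbox hosted on on-premises Exchange
    hybrid_configured: bool = False          # Exchange Hybrid Configuration in place
    autodiscover_v2_external: bool = False   # AutoDiscover v2 published externally


def new_ui_calendar_supported(setup: MailboxSetup) -> bool:
    """Rough pre-check for the calendar on the new Teams Rooms UI (4.17+).

    Assumption: Exchange Online mailboxes pass. On-premises mailboxes pass
    only with Hybrid Configuration and externally published AutoDiscover v2.
    """
    if not setup.on_premises:
        return True
    return setup.hybrid_configured and setup.autodiscover_v2_external
```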

If you don’t meet all the requirements listed in the links above, your Teams Rooms calendar will stop working once the 4.17 app version rolls out, and you will need to roll back to the legacy UI by setting the corresponding XML setting to false to restore your calendar. The value of this setting will carry over in 4.18 and later app updates until the legacy UI is deprecated. More information on the timeline for the retirement of the legacy UI will be announced soon.

Enhanced custom background (Teams Rooms Pro SKU only)

On Teams Rooms with the new home screen experience and Teams Rooms Pro license: IT admins will have the option to specify up to 3 background images and identify which image is applied on the main room display, extended room display, and room console, using the following XML settings:

Custom

file1.jpg

file2.jpg

file3.jpg

Please carefully review the following input requirements for this feature to work as expected:

- Images must be in PNG, JPG, JPEG, or BMP format.

- Main room display setting: specifies the background for the right side of the dual display, which shows the calendar. Input in this setting is required regardless of whether the device is in single or dual display mode.

- Extended room display setting: specifies the background for the left side of the dual display, which shows the time and room info. Input in this setting is required when the dual display setting is ON.

- Room console setting: specifies the background for the room console. Input in this setting is optional.
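Before pushing the XML settings to devices, it may be worth checking the input rules above. This Python sketch validates a proposed configuration; the parameter names are hypothetical stand-ins for the three settings (main room display, extended room display, and room console), not the actual XML element names, and only the file extension is checked, not the real image format.

```python
from __future__ import annotations

from pathlib import PurePath
from typing import Optional

ALLOWED_EXTENSIONS = {".png", ".jpg", ".jpeg", ".bmp"}


def background_config_errors(
    main_display: Optional[str],
    extended_display: Optional[str],
    console: Optional[str],
    dual_display: bool,
) -> list[str]:
    """Return problems with a custom background configuration (empty if OK)."""
    errors: list[str] = []
    # Main display image is required in both single and dual display mode.
    if not main_display:
        errors.append("main room display image is required")
    # Extended display image is required only when dual display is ON.
    if dual_display and not extended_display:
        errors.append("extended room display image is required in dual display mode")
    # Console image is optional, but any supplied file must use a supported extension.
    for label, name in (("main", main_display), ("extended", extended_display), ("console", console)):
        if name and PurePath(name).suffix.lower() not in ALLOWED_EXTENSIONS:
            errors.append(f"{label} image {name!r} must be PNG, JPG, JPEG, or BMP")
    return errors
```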

To avoid cropping of background images, use the following image dimensions (based on 1080p display standards) for each screen aspect ratio respectively:

- 16:9 – 1920 x 1080 px (minimum image dimension required for room displays)

- 21:9 – 2560 x 1080 px

- 3:2 – 1920 x 1280 px

- 16:10 – 1280 x 800 px (minimum image dimension required for room consoles)
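The dimension guidance above can be kept as a lookup table alongside a minimum-size check. This Python sketch uses hypothetical names, with values copied from the list above. Note that "21:9" is a nominal label: 2560 x 1080 px is about 2.37:1, not exactly 21:9.

```python
from __future__ import annotations

# Recommended image dimensions (width, height) per screen aspect ratio,
# copied from the guidance above.
RECOMMENDED_DIMENSIONS = {
    "16:9": (1920, 1080),
    "21:9": (2560, 1080),
    "3:2": (1920, 1280),
    "16:10": (1280, 800),
}

# Minimum image dimensions stated above.
MIN_ROOM_DISPLAY = (1920, 1080)
MIN_ROOM_CONSOLE = (1280, 800)


def recommended_dimensions(aspect_ratio: str) -> tuple[int, int]:
    """Look up the recommended image size for a screen aspect ratio label."""
    return RECOMMENDED_DIMENSIONS[aspect_ratio]


def meets_minimum(width: int, height: int, for_console: bool) -> bool:
    """Check an image against the stated minimum for a room display or console."""
    min_w, min_h = MIN_ROOM_CONSOLE if for_console else MIN_ROOM_DISPLAY
    return width >= min_w and height >= min_h
```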

If a 4K image with a 3840 x 2160 px dimension is applied to a 4K display, the full image will be shown. However, if the dimensions of the image and the display do not match, the image scales to fill the frame while preserving the image aspect ratio (without letterboxing) and/or the image is cropped from the center. For example:

- If a 1080p image (1920 x 1080 px) with 16:9 aspect ratio is applied on a 21:9 display, the image scales to fill the frame without distorting the image, and is cropped from the center

- If a 4K image (3840 x 2160 px) is applied on a 1080p display, the image is cropped from the center
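The two examples above can be reproduced with a small calculation. This Python sketch assumes, based only on those examples, that an image smaller than the display is enlarged just enough to cover it (preserving aspect ratio), that a larger image is not shrunk, and that any overflow is trimmed equally from both edges. The actual Teams Rooms rendering may differ, and the function name is hypothetical.

```python
from __future__ import annotations


def fit_to_display(img_w: int, img_h: int, disp_w: int, disp_h: int) -> tuple[float, float, float]:
    """Return (scale, crop_x, crop_y) for showing an image on a display.

    scale  -- factor applied to the image (never below 1.0 under our assumption)
    crop_x -- pixels trimmed from each of the left and right edges after scaling
    crop_y -- pixels trimmed from each of the top and bottom edges after scaling
    """
    # Enlarge just enough to cover both dimensions; don't shrink larger images.
    scale = max(1.0, disp_w / img_w, disp_h / img_h)
    scaled_w, scaled_h = img_w * scale, img_h * scale
    # Center crop: split the overflow equally between opposite edges.
    crop_x = (scaled_w - disp_w) / 2
    crop_y = (scaled_h - disp_h) / 2
    return scale, crop_x, crop_y


if __name__ == "__main__":
    # 1080p 16:9 image on a 2560 x 1080 (21:9) display: scaled up, cropped top and bottom.
    print(fit_to_display(1920, 1080, 2560, 1080))
    # 4K image on a 1080p display: not scaled, cropped equally on all sides.
    print(fit_to_display(3840, 2160, 1920, 1080))  # (1.0, 960.0, 540.0)
```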

Note that once these new custom background XML settings are applied, the legacy custom theme settings will be disregarded. For more information, see Set up and manage Teams Rooms on Windows custom backgrounds.

What you need to do to prepare:

Kindly notify your users about this new experience and update your training and documentation as appropriate.

MC556150 — (Updated) Microsoft Teams: Collaborative Stageview

30-60 Days

Microsoft 365 Roadmap ID 93769

Updated June 30, 2023: We have updated the rollout timeline below. Thank you for your patience.

Users can now open app content in a separate Teams window that’s been built for collaboration.

When this will happen:

Standard Release: We will begin rolling out mid-July (previously mid-June) and expect to complete by late August (previously late June).

How this will affect your organization:

Collaborative Stageview is a new type of Teams window that allows users to engage with content and conversation side by side. For apps built for Collaborative Stageview, it allows users to:

- Open their content from eligible adaptive cards directly into a new Teams window

- Engage with their app content in a large Teams canvas

- Continue the conversation they came from, within the Collaborative Stageview side panel

What you need to do to prepare:

Apps built for Collaborative Stageview will pop out into new Teams windows. Users opening apps in the Teams web client will be unaffected by this change; in the web client, Collaborative Stageview falls back to the current Stageview modal. No action is required from admins.

MC555188 — (Updated) Device State Change and Submit to Approve Admin Notifications Available in Teams admin center

Rolled Out

Updated June 8, 2023: Device state monitoring notifications have rolled out and are now available to all GCC customers.

Please note: line-of-business (LoB) app submission notifications will be available around the July/August timeframe.

In public cloud, Teams admin center has monitoring and alert functionality where admins can get notified if a device’s state has changed or if a custom app is submitted for admin approval.

In the GCC cloud, admins can now use different sets of rules available under the Notifications and alerts section of the Teams admin center to monitor Teams capabilities and receive alerts. For example, you can actively monitor the health of Teams devices such as IP phones and Teams Rooms on Android, and be alerted if they unexpectedly go offline.

When this will happen:

We will begin rolling out in mid-May and expect to complete rollout by late May.

How this will affect your organization:

Your organization can use Teams monitoring and alerting to do the following items:

- Automatically manage Teams capabilities

- Be alerted if they show something unexpected.

- Take corrective actions to get things back on track.

You must be a global admin in Microsoft 365 or a Teams service admin to configure alerting rules. To use this feature:

- Sign into the Teams admin center.

- From the left navigation, select Notifications and alerts.

- Choose the rule you want to configure from Rules.

What you need to do to prepare:

There is nothing required from you at this time. If you would like to use this feature, please configure rules appropriately for your organization. For additional information, please visit this documentation.

MC554158 — (Updated) A new experience to search within chat and channels.

>60 Days

Microsoft 365 Roadmap ID 122550

Updated June 30, 2023: We have updated the rollout timeline below. Thank you for your patience.

We are excited to introduce a new and improved experience to search within chats and channels.

When this will happen:

- Standard: We will begin rolling this out in late July (previously mid-June) and expect to complete rollout by early August (previously early July)

- GCC: We will begin rolling this out in early August (previously mid-July) and complete rollout by late August (previously early August).

- GCC-H: We will begin rolling this out in late August (previously late July) and complete rollout by early September (previously mid-August).

- DoD: We will begin rolling this out in mid-September (previously mid-August) and complete rollout by early October (previously early September).

How this will affect your organization:

A few key enhancements include:

- The new in-chat and in-channel search experience will now be available in the right-hand rail, so you can see your chat or channel list and your search results in a single view. You can start a search with the keyboard shortcut Ctrl+F (Windows) or Cmd+F (Mac).

Note: In the new channels experience, search is currently available in the main view; we will extend it to the conversation view soon.

- In addition to the keyboard shortcut, you can start a contextual search with the dedicated Find in Channel button on the channel info pane.

Note: We are working on bringing the search entry point to the chat surface soon.

- We have introduced enhanced captioning and keyword highlighting to make results in chats and channels easier to distinguish.

- In-chat search will now also be available in pop-out chats.

What you need to do to prepare:

No preparation needed. The new search experience is set up by default, but you may want to draw attention to these new capabilities in Teams.

MC554154 — (Updated) Teams Rooms on Android support for GCCH

<30 Days

Microsoft 365 Roadmap ID 121548

Updated June 24, 2023: We have updated the content below with additional information. Thank you for your patience.

Teams Rooms on Android and the Teams Admin Center will fully support GCC-H customers. This feature is available only with the Teams Rooms Pro license.

When this will happen:

We will begin rolling out in late June (previously mid-June) and expect to complete by late July (previously mid-July).

How this will affect your organization:

Customers with a Pro license in GCCH will be able to sign in to Teams Rooms on Android and use the Teams Admin Center to manage their Microsoft Teams Rooms on Android (MTRA) devices.

Note: The following features are not available in GCC-High.

- People counting in a meeting

- Starting a whiteboard

- Report a problem

- 1 on 1 call recording

MC552789 — (Updated) Microsoft Teams: Block Anonymous Users’ Meeting Chat Read Access in Addition to Current Write Access

30-60 Days

This message is associated with Microsoft 365 Roadmap ID 123974

Updated June 20, 2023: We have updated the content below for clarity. Thank you for your patience.

Microsoft Teams IT admins will soon be able to block anonymous users from accessing chat in internally hosted meetings by disabling their read access, in addition to the write access that is already disabled. This updated meeting chat setting will roll out across Teams desktop, mobile, and web, and will give tenants an additional way to manage external users’ chat access in meetings hosted by internal users.

When this will happen:

GA: We will begin rollout in mid-June and expect to complete rollout by late June.

GCC: We will begin rollout in early July and expect to complete rollout by mid-July.

GCCH: We will begin rollout in late July and expect to complete rollout by early August.

DoD: We will begin rollout in early August and expect to complete rollout by mid-August.

How this will affect your organization:

IT admins can turn on this setting for internal users in the Teams Admin Center under Meeting chat, or in PowerShell with the MeetingChatEnabledType parameter. When an internal organizer has Meeting chat set to On for everyone but anonymous users in the Teams Admin Center, or MeetingChatEnabledType set to EnabledExceptAnonymous in PowerShell, anonymous participants who join that organizer’s meetings will have neither read nor write access to meeting chat on any Teams platform. Participants in the same meeting who do have chat access will be notified that some external participants might not see the chat while this setting is in effect.

What you need to do to prepare:

You may want to notify your users about this new setting and the potential effect on participants’ chat access in their meetings with external users. For additional information, please visit this documentation.

MC552782 — (Updated) Microsoft Teams: Default Meeting Layout Controls for Teams Rooms on Android

<30 Days

This message is associated with Microsoft 365 Roadmap ID 121663

Updated June 7, 2023: We have updated the rollout timeline below. Thank you for your patience.

IT admins for Microsoft Teams Rooms on Android can choose the default meeting layout. When a meeting begins, room users will see the default meeting layout, and they can switch to other views using the view switcher during the meeting.

When this will happen:

We will begin rolling out in late June (previously mid-June) and expect to complete by late July (previously mid-July).

Note: There can be a delay between when features are released by Microsoft and when they become available on a device make and model. If an update isn’t available on your device, check with your device manufacturer for information on the availability timeline.

How this will affect your organization:

IT admins can choose the default layout used when content sharing begins by selecting Content only or Content + Gallery. Standard, Premium, and Pro customers can also choose Front Row as the default layout.

What you need to do to prepare:

Please plan to update all Microsoft Teams Rooms on Android devices in your tenant to the latest version through the Teams Admin Center. Once devices are updated, you can control the new functionality through the device settings, notify your users about these new capabilities, and update your training and documentation as appropriate.

MC552334 — (Updated) Creation of Default Notes tab in new standard channels in Teams

TBD

This message is associated with Microsoft 365 Roadmap ID 101962

Updated June 13, 2023: We will not be rolling out this change to GCC, GCC-High, DoD, or Microsoft 365 operated by 21Vianet. Organizations in these environments can safely disregard this message, and we will communicate the new timeline via Message center when we are ready to proceed. Additionally, MC496248 (January ’23) is not applicable to these environments. We apologize for any inconvenience.

MC550533 — (Updated) Microsoft Teams: Virtual Appointments for GCC

Rolled Out

This message is associated with Microsoft 365 Roadmap ID 121360

Updated June 13, 2023: We have updated the rollout timeline below. Thank you for your patience.

Advanced Virtual Appointments features in Teams Premium launched to general availability for commercial customers in early February 2023 and are currently supported in Microsoft Teams.

The Virtual Appointments app provides a central hub for all your virtual appointment needs in Microsoft Teams. You can schedule, view, and manage virtual appointments, get real-time status updates in a queue view, view analytics and reports to gain insight into virtual appointments activity, and configure calendar, staff, and booking page settings.

Virtual appointments are held through Microsoft Teams meetings, which offer robust video conferencing capabilities. For example, a user can share their screen and review data with the end user, or an advisor can request electronic signatures on documents, allowing transactions to be closed remotely.

Virtual appointments analytics will also be available as part of the release. With our reports, organizations can view and analyze usage trends and history. With individual or department analytics, organizations can focus on key metrics, such as no-shows, wait times, and calendar-level analytics. Organizational analytics allow administrators or operations supervisors to view aggregated analytics across their department and entire organization.

When this will happen:

Rollout for GCC will begin in mid-June (previously early June) and is expected to be complete by late June 2023.

How this will affect your organization:

With any Microsoft 365 license, you can use basic Virtual Appointments capabilities to schedule and join business-to-customer meetings. For example, you can schedule appointments in the Bookings calendar and external attendees can join through the Virtual Appointments Teams app. Teams Premium unlocks advanced Virtual Appointments capabilities that your organization can use to manage and personalize the experience. These include a queue view of scheduled and on-demand appointments, custom logo, and analytics.

The following advanced features are currently not available in GCC:

- Mobile browser join: Joining Virtual Appointments from a mobile browser is not supported in GCC. Attendees will instead be routed to the native Teams app.

- Two-way lobby chat from queue

- SMS notifications: SMS notifications will not be supported in GCC when appointments are scheduled in the Virtual Appointments app.

- Virtual Appointments Graph APIs

What you need to do to prepare:

We recommend preparing your users to use Virtual Appointments.

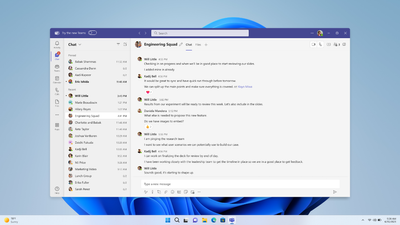

MC550081 — (Updated) Microsoft Teams: New Channels Experience

30-60 Days

This message is associated with Microsoft 365 Roadmap ID 91683

Updated June 24, 2023: We have updated the rollout timeline below. Thank you for your patience.

A new channels experience in Microsoft Teams has been built with an intuitive design that helps teams focus and stay on task, bring everyone up to speed, and actively engage in real time. The following features will be rolling out:

- The compose box and recent posts will appear at the top of the page. It’ll now be much easier to start a new post, keep up with the latest conversations, and participate, giving users confidence that they haven’t missed anything.

- Every post is now a true conversation that users can focus on. Users can navigate to a conversation view that makes the discussion more engaging and synchronous, just like a chat. Users can also pop out a post to keep an eye on the discussion while continuing to work on other topics.

- A streamlined information pane will include the important contextual information, such as the channel’s members and pinned posts, so that new team members can onboard quickly and all members can find the information they need right in the channel.

- Pin posts to make it easy for everyone to know what’s important in the channel and quickly reference it.

- Simplified badging makes it easier for users to tell when there is new unread activity in teams. In addition, the simplified design helps users focus on the channels that require the most attention, such as channels in which the user was @mentioned.

When this will happen:

Targeted Release: Started rolling out in May and is expected to complete in early July.

GA and GCC: We will begin rollout in late July (previously early June) and expect to complete rollout by early August (previously mid-June).

GCC-H and DoD: We will begin rollout in early August (previously mid-July) and expect to complete rollout by late August (previously late July).

How this will affect your organization:

Users in your organization will not need to make any changes and will get the new channels experience by default.

What you need to do to prepare:

Admins will not need to make any changes.

MC549348 — (Updated) Webinar email feature updates: customize content and time to send reminders

30-60 Days

This message is associated with Microsoft 365 Roadmap ID 119391

Updated June 8, 2023: We have updated the rollout timeline below. Thank you for your patience.

Webinar emails are critical for engaging registrants, and organizers often need to customize their content. To enable this, we are rolling out two features:

- The content of the emails (i.e., all webinar-related attendee emails) can be edited by event organizers. This is reserved for Teams Premium licensed organizers.

- The time at which reminder emails are sent can be configured by event organizers. This is reserved for Teams Premium licensed organizers.

When this will happen:

Standard Release: Rollout will start in mid-July and is expected to be complete by the end of July.

GCC: Rollout will start at the end of July and is expected to be complete by mid-August.

How this will affect your organization:

Email customization is governed by an IT admin policy that lets admins prevent organizers with a Teams Premium license from editing the content of the emails. This setting is available in the Teams events policy and can be managed with the Set-CsTeamsEventsPolicy PowerShell cmdlet.

What you need to do to prepare:

Both of these features are reserved for users who have a Teams Premium license. The policy that allows content customization is enabled by default in the commercial SKU.

MC543386 — (Updated) Microsoft Teams: Mark All As Read for Activity Feed

Rolled Out

This message is associated with Microsoft 365 Roadmap ID 102238

Updated June 20, 2023: We have updated the rollout timeline below. Thank you for your patience.