Unleashing the Power of Deferred processing receiving in Dynamics 365 SCM (supply chain management) Warehouse Management

This article is contributed. See the original author and article here.

Editor: Denis Conway

Introduction

In the relentless pursuit of streamlining warehouse operations and empowering organizations to achieve more, perfecting the receiving process is a cornerstone. This blog post delves into the transformative role of Dynamics 365 SCM Warehouse Management’s feature “Deferred Processing”, a strategic framework designed to increase operational efficiency by effectively deferring work creation, allowing rapid registration of incoming inventory without waiting for each put-away work to be generated.

The Supermarket Analogy

Imagine you are at the checkout in your favourite supermarket. Each customer who visits the store needs to pay for their groceries. Today, most of us pay using a debit or credit card, which transfers money from our account to the supermarket’s account. But what if you had to wait for your bank transfer to go through and the supermarket to receive their money before they could move on to the next customer and you could exit the store? That would be tedious, right?

Fortunately, in the world of Dynamics 365 warehousing, the process is not as tedious. We don’t wait long for work to be created during receiving or inventory movement, but we still need to wait a couple of seconds for it.

The waiting time is a consequence of the various database calls that a warehouse process needs, such as on-hand inventory updates when receiving.

That’s where the deferred processing feature comes into play. Deferred processing creates work in the background, allowing the warehouse worker managing the process to continue without interruption. In effect, it tells the user: “The work will be created, but in the background, so you don’t have to wait.” The worker can then continue receiving other items.

Returning to the supermarket example, deferred processing lets the cashier and the customer treat the payment for the groceries as complete, even though the actual bank transfer has not taken place yet. Our warehouse system works the same way: we are told that the step is complete and we can continue with the inventory movement or receiving process, while the work creation has in fact been deferred and is processed in the background.

Warehouse Scenarios: Regular vs. Deferred Receiving

The difference between regular receiving and deferred receiving is best explained with a fictional scenario. Imagine a load entering the warehouse that holds multiple purchase orders.

Scenario 1: Regular Receiving

In this scenario, the warehouse workers responsible for receiving scan the license plate or the items for each order. Before continuing with the next item, a worker might need to wait briefly while the related work is created. Here, that work moves the purchase order items from the receiving area to their “Put” location in the warehouse, and how long it takes to create depends on the complexity of the put-away configuration. For most put-away cases this is fast, but a more complex set of put-away rules can cause noticeable waiting. Even though workers wait only briefly for each item, the waits add up and lead to more idle time. Deferred processing, described in Scenario 2, avoids this.

Scenario 2: Using Deferred Processing for Receiving

In this scenario, we use the deferred receiving feature, which is intended for cases where the put-away rules in the warehouse management (WMS) configuration are complex and can cause a short wait on devices running the Warehouse Management mobile app (WMA) while put-away work is created. The warehouse worker managing inbound receiving scans the license plate or items for each order. Because deferred processing is enabled, the put-away work for the received inventory is created in the background, and the worker can keep scanning without interruption. From the worker’s point of view, the step is complete. As a result, the worker spends less time getting the same amount of work done.

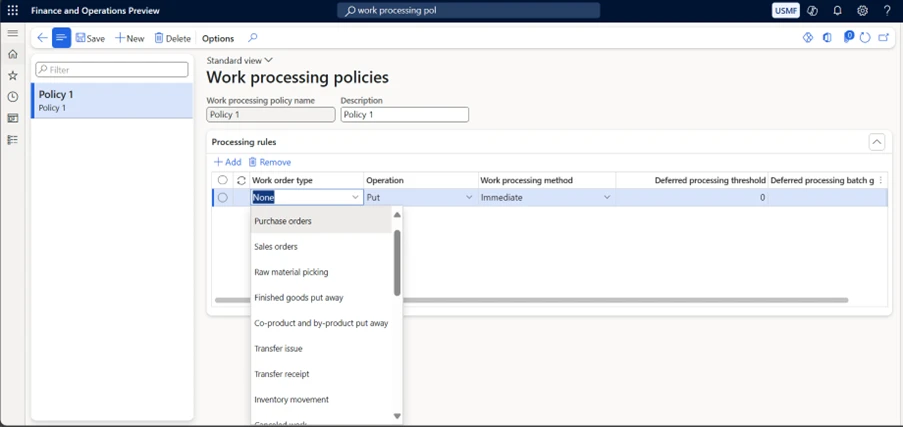

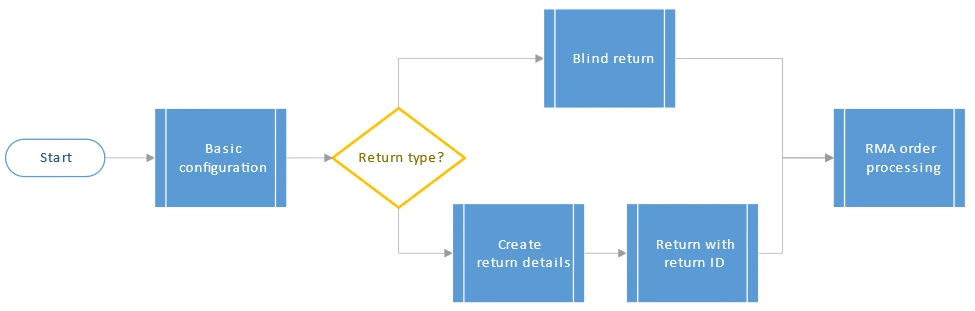
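To make the contrast between the two scenarios concrete, here is a minimal Python sketch. It is a conceptual model only, not how Dynamics 365 implements the feature: the product runs deferred work through the batch framework, whereas this sketch uses the standard-library `queue.Queue` and a background `threading.Thread` as stand-ins. The functions `create_putaway_work`, `regular_receiving` and `deferred_receiving`, the license plate IDs, and the simulated delay are all hypothetical.

```python
import queue
import threading
import time


def create_putaway_work(license_plate, delay):
    """Hypothetical stand-in for put-away work creation.

    In the product this step evaluates the put-away configuration; here a
    sleep simulates how long that evaluation takes.
    """
    time.sleep(delay)
    return f"WORK-{license_plate}"


def regular_receiving(plates, delay):
    """Scenario 1: the worker waits for each work before the next scan."""
    return [create_putaway_work(lp, delay) for lp in plates]


def deferred_receiving(plates, delay):
    """Scenario 2: the worker only registers receipts; a background thread
    (standing in for the batch framework) creates the work afterwards."""
    pending = queue.Queue()
    created = []

    def background_processor():
        while True:
            lp = pending.get()
            if lp is None:  # sentinel: receiving is finished
                break
            created.append(create_putaway_work(lp, delay))

    processor = threading.Thread(target=background_processor)
    processor.start()

    scan_start = time.perf_counter()
    for lp in plates:
        pending.put(lp)  # register the receipt and move straight on
    worker_busy = time.perf_counter() - scan_start

    pending.put(None)
    processor.join()  # only so the example can inspect the finished work
    return created, worker_busy


plates = [f"LP{i:03}" for i in range(5)]

start = time.perf_counter()
regular = regular_receiving(plates, delay=0.05)
regular_busy = time.perf_counter() - start

deferred, deferred_busy = deferred_receiving(plates, delay=0.05)

print(f"regular receiving:  worker busy {regular_busy:.3f} s")
print(f"deferred receiving: worker busy {deferred_busy:.6f} s")
```

Both runs produce the same five work IDs in the same order. The work still takes just as long to create in the deferred case; it simply no longer holds up the scanning loop.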

Configuration of deferred warehouse work processing

The picture below gives an idea of what the configuration looks like. On this page, users select the work order type that the policy applies to, the type of operation that is processed by using the policy, and the method that is used to process the work line. If the method is set to Immediate, the behavior resembles the behavior when no work processing policies are used to process the line. If the method is set to Deferred, deferred processing that uses the batch framework is used.

The policy also includes a threshold. A threshold value of 0 (zero) means that there is no threshold; in this case, deferred processing is used whenever it can be used. Otherwise, if the threshold calculation for the work is below the threshold, the Immediate method is used; if not, the Deferred method is used if it can be used. For sales and transfer-related work, the threshold is calculated as the number of associated source load lines being processed for the work. For replenishment work, it is calculated as the number of work lines being replenished by the work. For example, with a threshold of 5 for sales, smaller pieces of work with fewer than five initial source load lines won’t use deferred processing, but larger ones will. The threshold has an effect only if the work processing method is set to Deferred.
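The threshold rules above can be restated as a small decision function. This is a minimal Python sketch of the documented behavior, not the product's code: the class and field names (`WorkProcessingPolicyLine`, `threshold`, `deferred_possible`) are hypothetical, and treating "Deferred cannot be used" as a fallback to Immediate is an assumption. The `WorkOrderType` enum lists only the three work order types the article names as supported.

```python
from dataclasses import dataclass
from enum import Enum


class Method(Enum):
    IMMEDIATE = "Immediate"
    DEFERRED = "Deferred"


class WorkOrderType(Enum):
    # The three work order types the article lists for deferred processing.
    SALES = "Sales orders"
    TRANSFER_ISSUE = "Transfer order issues"
    REPLENISHMENT = "Replenishment orders"


@dataclass
class WorkProcessingPolicyLine:
    """Hypothetical model of one policy line (names are illustrative)."""
    work_order_type: WorkOrderType
    method: Method
    threshold: int = 0  # 0 means there is no threshold


def threshold_measure(order_type, source_load_lines, replenished_work_lines):
    """Value compared against the threshold for a given piece of work."""
    if order_type is WorkOrderType.REPLENISHMENT:
        return replenished_work_lines  # work lines replenished by the work
    return source_load_lines  # sales and transfer work: source load lines


def resolve_method(policy, measure, deferred_possible=True):
    """Return the method actually used for the work."""
    if policy.method is Method.IMMEDIATE:
        return Method.IMMEDIATE  # the threshold only matters for Deferred
    if policy.threshold > 0 and measure < policy.threshold:
        return Method.IMMEDIATE  # small work stays below the threshold
    # Assumption: when deferred processing cannot be used, fall back to Immediate.
    return Method.DEFERRED if deferred_possible else Method.IMMEDIATE


sales_policy = WorkProcessingPolicyLine(WorkOrderType.SALES, Method.DEFERRED, threshold=5)
small = resolve_method(sales_policy, threshold_measure(WorkOrderType.SALES, 3, 0))
large = resolve_method(sales_policy, threshold_measure(WorkOrderType.SALES, 8, 0))
print(small, large)
```

With a threshold of 5, sales work with three source load lines resolves to Immediate and work with eight resolves to Deferred, matching the example in the text. Exactly five lines is not "fewer than five", so it also resolves to Deferred.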

Implementation and Supported Processes

This feature is available for these ways of receiving into your warehouse:

- Purchase order item receiving.

- Purchase order line receiving.

- Transfer order item receiving.

- Transfer order line receiving.

- Load item receiving.

- License plate receiving.

For deferred put-away processing, the following work order types are supported:

- Sales orders.

- Transfer order issues.

- Replenishment orders.

When to Use Deferred Processing

Deferred processing is the answer when warehouse workers experience a slight wait on each received item while the system determines where that item should be placed, and that processing slows down inventory registration for the worker.

Conclusion

Deferred processing enables high productivity on inbound docks even when put-away logic is complex and processing would otherwise cost workers unnecessary time. Deferred receiving lets workers handle incoming inventory effectively, regardless of how long work creation takes. It’s a powerful tool for supporting productivity and preventing unnecessary delays.

By deferring work creation and processing it in the background, we allow warehouse workers to focus on their tasks without interruptions.

Ultimately, deferred processing is not just a feature but a strategic framework that empowers organizations to achieve more, making it an excellent tool for modern warehouse operations. It enables customers to keep productivity high regardless of circumstances or put-away configuration.

If your warehouse needs it, embrace deferred processing and let your operations reach new heights of success.

Get started with Dynamics 365

Drive more efficiency, reduce costs, and create a hyperconnected business that links people, data, and processes across your organization—enabling every team to quickly adapt and innovate.

Business Applications | Microsoft Dynamics 365

Join our Yammer Group:

You are welcome to join the Yammer group: Dynamics 365 and Power Platform Preview Programs : Dynamics AX WHS TMS

Learn more:

Mixed license plate receiving – Supply Chain Management | Dynamics 365 | Microsoft Learn

Deferred processing of warehouse work – Supply Chain Management | Dynamics 365 | Microsoft Learn

Deferred processing of manual inventory movement – Supply Chain Management | Dynamics 365 | Microsoft Learn

The post Unleashing the Power of Deferred processing receiving in Dynamics 365 SCM (supply chain management) Warehouse Management appeared first on Microsoft Dynamics 365 Blog.
