by Contributed | May 14, 2021 | Technology

This article is contributed. See the original author and article here.

With the return to work, and hybrid work becoming a reality, familiarity with physical locations is again coming into play for many organizations. This “back to work” scenario seems to be playing out in three primary ways:

- New hires may have never seen an organization’s buildings.

- Returning workers may be going to new locations where building consolidation has taken place.

- Existing spaces may now be set up for hybrid work with non-dedicated spaces.

Thankfully, organizations can leverage the tremendous power of Microsoft Viva Connections paired with the rich immersive experiences of SharePoint Spaces to:

- Bring impactful, educational virtual tours to employees that immerse them in the new environments from the comfort of home. SharePoint Spaces provides immersive 360° tours that can simulate the onsite experience, with additional contextual information to enrich the experience.

- Light up these experiences through Microsoft Viva Connections, which brings the next-generation intranet to employees where they already work: Microsoft Teams.

- Use high-quality, low-cost, consumer-grade devices such as the Insta360 One X2 to capture stunning 360° images and videos that can be used within SharePoint Spaces.

Resources:

Thanks for visiting – Michael Gannotti LinkedIn | Twitter

Michael Gannotti

by Contributed | May 14, 2021 | Technology

This article is contributed. See the original author and article here.

Hello everyone, we are starting a new video tutorial series focusing on Endpoint Protection integration with Configuration Manager. This series is recorded by @Steve Rachui, a Microsoft principal premier field engineer. The first session is an introduction and previews what is coming in the remainder of the series.

Next in the series, Steve focuses on how Configuration Manager can be used to manage antimalware policy settings for the Endpoint Defender client built into Windows.

Posts in the series

- Introduction (this post)

- Antimalware policies

- BitLocker integration and management

- Firewall policies

- Windows Defender Advanced Threat Protection (ATP) policies

- Windows Defender Exploit Guard policies

- Windows Defender Application Guard policies

- Windows Defender Application Control (WDAC) policies

Go straight to the playlist

by Contributed | May 14, 2021 | Technology

This article is contributed. See the original author and article here.

Sharif Nasser grew up with the dream of becoming an inventor.

As a teenager, he became interested in AI and machine learning and came to believe strongly in technology as a powerful tool for equity and progress. Now studying Robotics at Tecnológico de Monterrey, in Monterrey, Mexico, he uses his technical knowledge and expertise to bridge the digital divide across Latin America.

Through large-scale online teaching ventures of up to 5,000 students, he is making technology more accessible to all. Learn more about his journey and his belief in low-code platforms as a way to lower the barrier to entry: aka.ms/power-students.

If you would like to sharpen your own technical skills, check out: aka.ms/trainingandcertification.

by Contributed | May 14, 2021 | Technology

This article is contributed. See the original author and article here.

Last updated on May 14, 2021

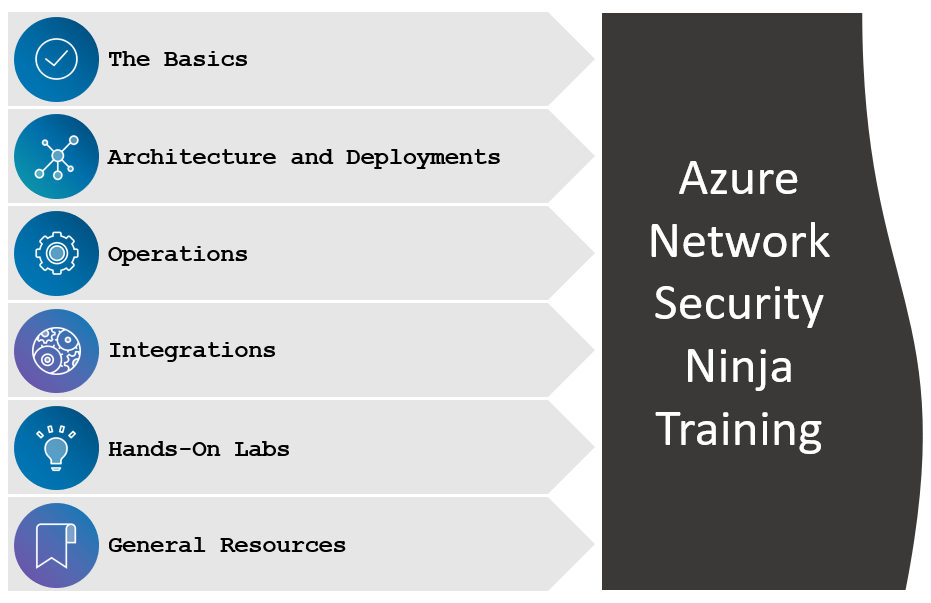

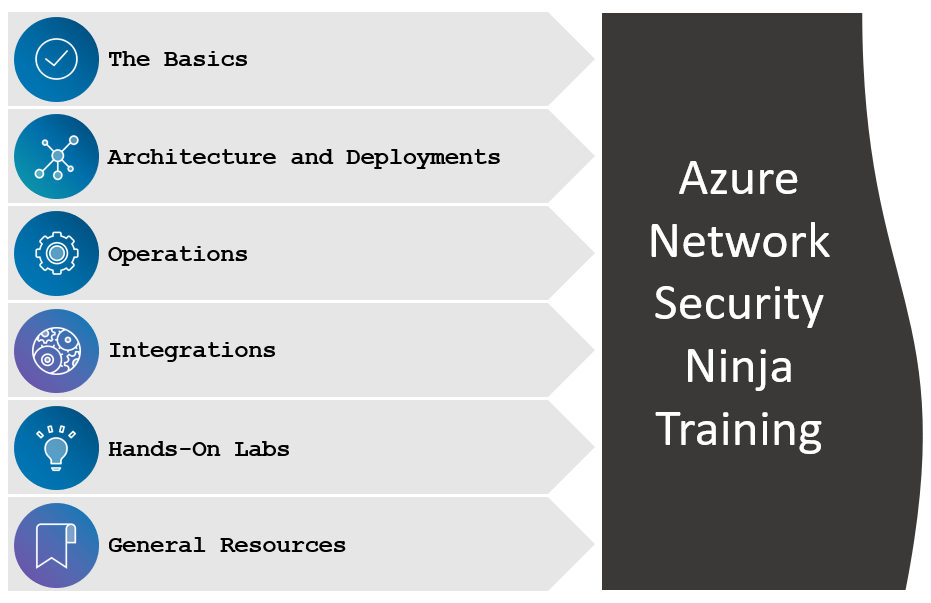

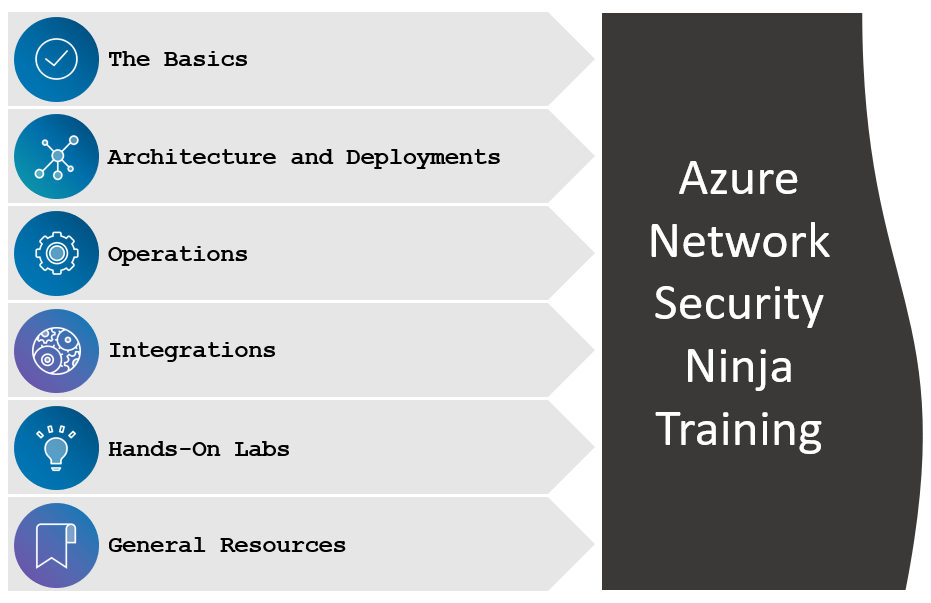

In this blog post, we will walk you through basic to advanced scenarios for Azure network security. Ready to become an Azure NetSec ninja? Dive right in!

Check back here routinely, as we will keep updating this blog post with new content as it becomes available.

Anything in here that could be improved or may be missing? Let us know in the comments below, we’re looking forward to hearing from you.

Azure Network Security Ninja Training Sections

1 The Basics

1.1 Introduction to network security concepts

This module introduces general concepts of network and web application security.

1.1.1 Network security in Azure

Become familiar with network security concepts and the ways you can achieve a secure network deployment in the Azure cloud.

1.1.2 Web application protection in Azure

Become familiar with web application protection concepts and the ways you can achieve a secure web application deployment in the Azure cloud.

1.2 Introduction to Azure network security products

Do you prefer videos? Check out the Introduction to Azure Network Security (50 minutes) webinar, which covers all products listed individually below. You can also quickly browse through the contents of the presentation deck.

1.2.1 Azure DDoS Protection Standard

Azure DDoS Protection Standard, combined with application design best practices, provides enhanced DDoS mitigation features to defend against DDoS attacks.

For more information, check the Azure DDoS Protection Standard documentation.

MS Learn Training Material: Azure DDoS Protection Standard (35 minutes)

This MS Learn module will show you how to guard your Azure services from a denial-of-service attack using Azure DDoS Protection Standard.

1.2.2 Azure Firewall and Azure Firewall Manager

Azure Firewall is a managed, cloud-based network security service that protects your Azure Virtual Network resources. It’s a fully stateful firewall as a service with built-in high availability and unrestricted cloud scalability.

For more information, check the Azure Firewall documentation.

Azure Firewall Manager is a security management service that provides central security policy and route management for cloud-based security perimeters.

For more information, check the Azure Firewall Manager documentation.

MS Learn Training Material: Azure Firewall and Azure Firewall Manager (40 minutes)

This MS Learn module will describe how Azure Firewall protects Azure Virtual Network resources, including the Azure Firewall features, rules, deployment options, and administration with Azure Firewall Manager.

1.2.3 Azure Web Application Firewall (WAF)

Web Application Firewall (WAF) provides centralized protection of your web applications from common exploits and vulnerabilities. You can deploy WAF on Azure Application Gateway or WAF on Azure Front Door.

For more information, check the Azure Web Application Firewall (WAF) documentation.

MS Learn Training Material: Azure Web Application Firewall (WAF) (40 minutes)

This MS Learn module will show how Azure Web Application Firewall protects Azure web applications from common attacks, including its features, how it’s deployed, and its common use cases.

2 Architecture and Deployments

2.1 Standalone Deployments

2.1.1 Azure DDoS Protection Standard

When deploying Azure DDoS Protection Standard, keep in mind that public IPs in ARM-based VNETs are currently the only type of protected resource. PaaS services (multitenant) are not supported for Azure DDoS Protection Standard SKU at this time. For these services, the default DDoS Protection Basic SKU applies.

The main steps to deploy Azure DDoS Protection Standard are:

Do you prefer videos? Check out the Getting started with Azure Distributed Denial of Service (DDoS) Protection (60 minutes) webinar. You can also quickly browse through the contents of the presentation deck.

2.1.2 Azure Firewall

You can choose to deploy Azure Firewall Standard SKU or Azure Firewall Premium SKU (currently in Public Preview). Check the documentation below to get an understanding of their feature differences:

During your planning stages, it’s also a good idea to refer to the known issues for these products. Being aware of these known issues will save you time and stress when deploying your Azure Firewall.

Deploy and configure Azure Firewall using the Azure portal

Azure Firewall logs and metrics

Integrate Azure Firewall with Azure Standard Load Balancer

Use Azure Firewall to protect Azure Kubernetes Service (AKS) Deployments

Azure Firewall DNS settings

Azure Firewall in forced tunneling mode

Do you prefer videos? Check out the Manage application and network connectivity with Azure Firewall (50 minutes) webinar. You can also quickly browse through the contents of the presentation deck.

2.1.3 Azure Web Application Firewall (WAF)

2.2 Advanced Deployments

2.2.1 On-Prem Hybrid

2.2.2 vWAN (Secured Virtual Hub)

2.2.3 vWAN (Secured Virtual Hub) with 3rd party SECaaS

2.2.4 Hub and Spoke

2.2.5 Forced Tunneling with 3rd party NVAs

2.2.6 Multi-product combination in Azure

2.2.7 TLS Inspection on Azure Firewall

Do you prefer videos? Check out the Content Inspection Using TLS Termination with Azure Firewall Premium (50 minutes) webinar. You can also quickly browse through the contents of the presentation deck.

2.2.8 Per-Site or Per-URI WAF policies on Azure Application Gateway

Do you prefer videos? Check out the Using Azure WAF Policies to Protect Your Web Application at Different Association Levels (50 minutes) webinar. You can also quickly browse through the contents of the presentation deck.

3 Operations

3.1 Centralized Management

3.1.1 Azure Firewall Manager and Firewall Policy

Do you prefer videos? Check out the Getting started with Azure Firewall Manager (35 minutes) webinar. You can also quickly browse through the contents of the Azure Firewall Manager presentation deck.

3.1.2 Web Application Firewall (WAF) Policy

3.2 Optimizing

3.2.1 Web Application Firewall (WAF) tuning

Do you prefer videos? Check out the Boosting your Azure Web Application (WAF) deployment (45 minutes) webinar. You can also quickly browse through the contents of the presentation deck.

3.3 Governance

3.3.1 Built-in Azure Policies for Azure DDoS Protection Standard

3.3.2 Built-in Azure Policies for Azure Web Application Firewall (WAF)

3.3.3 Restrict creation of Azure DDoS Protection Standard plans with Azure Policy

If you are looking to prevent unplanned or unapproved costs associated with the creation of multiple DDoS plans within the same tenant, check out this Azure Policy template. This policy denies the creation of Azure DDoS Protection Standard plans in any subscription except the ones defined as allowed.

3.4 Responding

3.4.1 Azure Web Application Firewall (WAF)

This Logic App Playbook for Sentinel will add the source IP address passed from the Sentinel Incident to a custom WAF rule blocking the IP. For a more comprehensive description of this use case, check our blog post Integrating Azure Web Application Firewall with Azure Sentinel.

3.4.2 Azure DDoS Protection Standard

During an active attack, Azure DDoS Protection Standard customers have access to the DDoS Rapid Response (DRR) team, who can help with attack investigation during an attack and with post-attack analysis.

This DDoS Mitigation Alert Enrichment template will alert administrators of a DDoS event, while adding essential information in the body of the email for a more detailed notification.

4 Integrations

Using Azure Sentinel with Azure Web Application Firewall

You can integrate Azure WAF with Azure Sentinel for security information and event management (SIEM). By doing this, you can use Azure Sentinel’s security analytics, playbooks and workbooks with your WAF’s log data.

In this blog post, we cover in further detail how to configure the log connector, query logs, generate incidents, and automate responses to incidents.

5 Hands-on Labs

Network Security Demo lab: An Azure pre-configured test deployment kit for POCs is available in this repository. You can use this lab to validate proofs of concept for the different network security products. You can find more information on setup and demos in the NetSec POC blog post.

WAF Attack test lab: Set up a Web Application Firewall lab environment to verify how you can identify, detect and protect against suspicious activities in your environment. This blog post provides steps to protect against potential attacks, and you can deploy the template from GitHub.

6 Resource References

Register for upcoming webinars or watch recordings of past webinars in our Microsoft Security Community!

Check out and be sure to contribute with our Azure Network Security samples in GitHub!

Check out our Azure Network Security blog posts in our Tech Community!

Provide feedback and ideas about Azure products and features in our Azure Feedback portal!

by Contributed | May 14, 2021 | Technology

This article is contributed. See the original author and article here.

Starting in August 2020, we introduced a new framework for our monthly community sessions. In addition to our usual Q&A in each community call, we focus on topics related to various components of the Service Fabric platform, provide roadmap updates and news on upcoming releases, and showcase solutions developed by our customers that benefit the community.

Agenda:

Join us to learn more about one of the most awaited GA announcements, Service Fabric Managed Clusters, which we announced this week: the new enhancements introduced in the GA milestone and how it reduces complexity in cluster management operations. Give us feedback and ask us any questions related to Service Fabric. This month’s Q&A features one session on:

As usual, there is no need to RSVP – just navigate to the link to the call and you are in.

We have posted recordings of all our past Service Fabric community calls here.

by Contributed | May 14, 2021 | Technology

This article is contributed. See the original author and article here.

Introduction

For large databases with hundreds of GBs and a significant number of database objects, where transactional consistency is required and the migration must be done online with short downtime, transactional replication is currently the most robust and recommended way to migrate from Azure SQL Managed Instance to SQL Server, whether SQL Server is hosted on IaaS or on-premises.

Transactional replication has been around for many years, and there is plenty of documentation and other kinds of resources where you can learn more about it. This blog post focuses on using transactional replication to migrate a database from Managed Instance to SQL Server.

Transactional replication overview

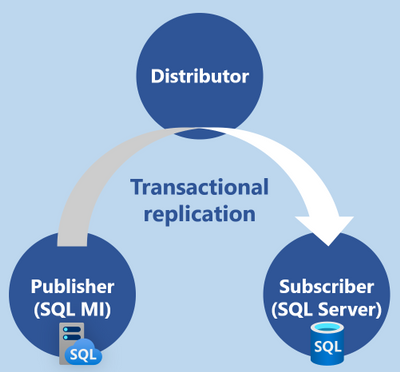

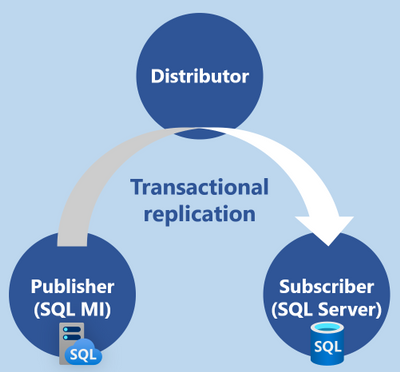

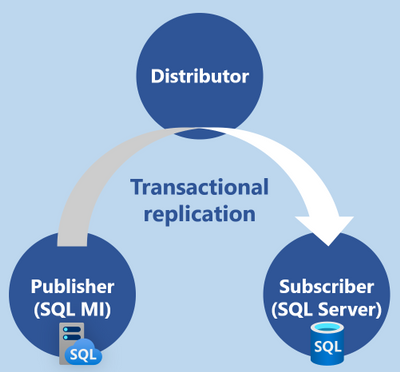

If you haven’t worked with transactional replication before, here is a brief overview of this technology from a database migration point of view. Three roles participate in the replication process:

- Publisher is the source of the replication; for us, this is Managed Instance.

- Distributor coordinates replication and can be either Managed Instance or SQL Server.

- Subscriber is the replication target; this is SQL Server, since we are migrating to SQL Server.

Transactional replication is based on three SQL Agent jobs:

- Snapshot Agent (gets the initial database snapshot).

- Log Reader Agent (tracks source database changes after the snapshot is taken).

- Distribution Agent (applies the database changes to target).

Replication can use a Push or a Pull model. In the Push model, the distributor is on the Publisher side, pushing changes to the Subscriber. In the Pull model, the distributor is on the Subscriber side, pulling changes from the Publisher. Each approach has its pros and cons. The distributor needs resources to run, so it has some performance impact on the side where it is deployed. With the distributor on the Publisher side, changes are delivered continuously; with it on the Subscriber side, changes are delivered periodically, on a schedule. In rare cases when neither the source nor the target has enough resources to run the distributor, a third option is available: host the distributor on a third server, which can be either a Managed Instance or SQL Server. In this case, changes are also delivered periodically.

Transactional replication setup

In this section we will set up transactional replication to migrate a database from Managed Instance to SQL Server 2019 hosted on an Azure VM. Managed Instance will be both Publisher and Distributor, while SQL Server will be the Subscriber. This topology enables an online migration, because changes are delivered continuously from publisher to subscriber.

Prerequisites

For replication to work, there must be network connectivity between Managed Instance and SQL Server. In our case, this is ensured by having both servers on the same virtual network. If that were not the case, VNet peering would be required.

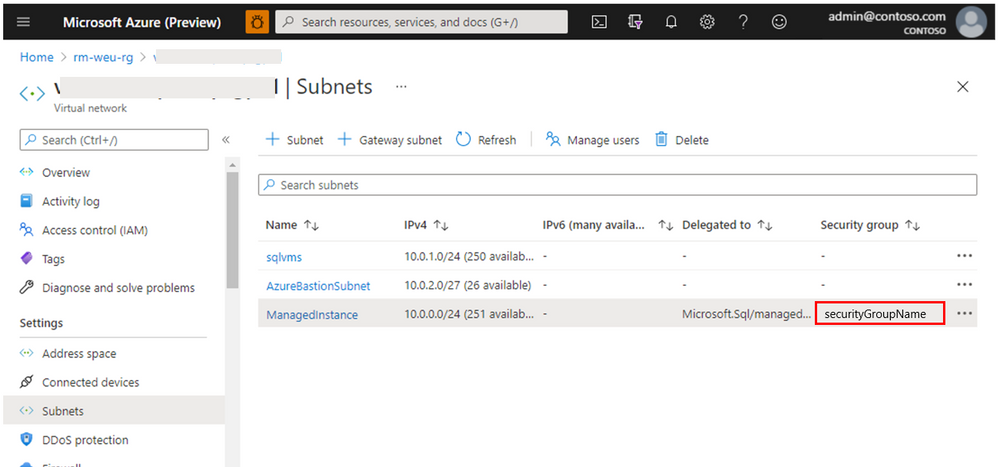

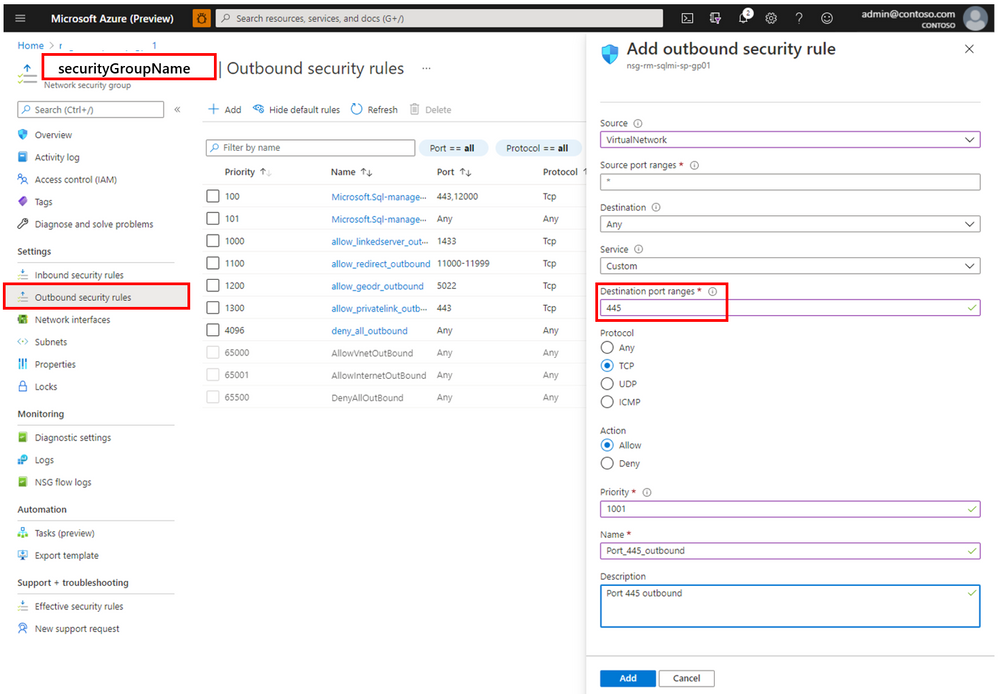

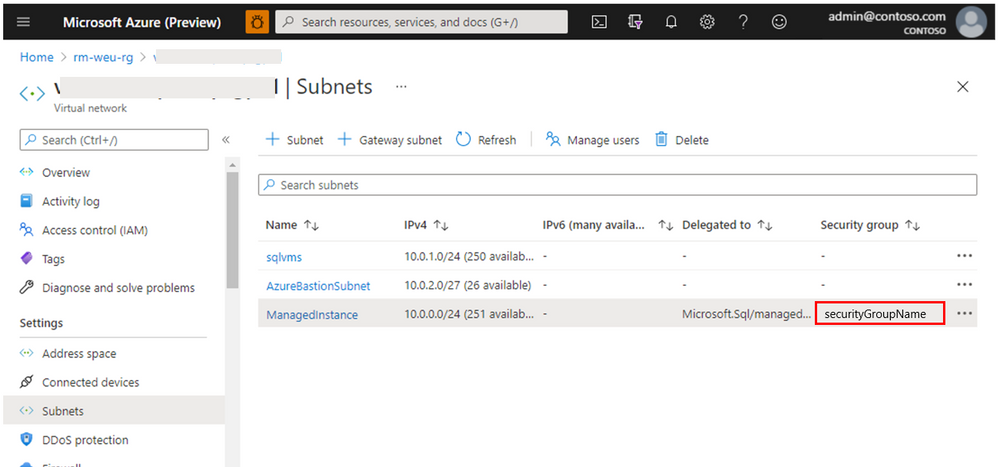

Outbound TCP port 445 needs to be open for transactional replication, so if you use a network security group (NSG), make sure this port is allowed. Allowing this port enables Managed Instance to access the Azure Storage account, which is covered a bit later. To allow the port, go to the virtual network of your Managed Instance and identify the security group name.

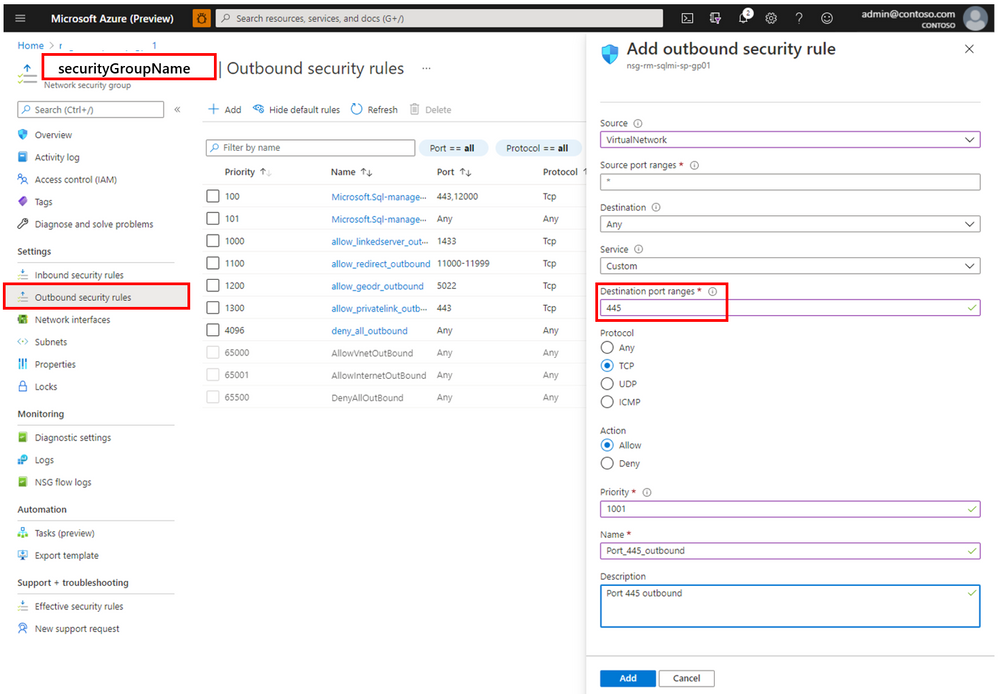

Then find that security group in the Azure portal, and in its Settings section open Outbound security rules. Click Add and create an outbound security rule for port 445.

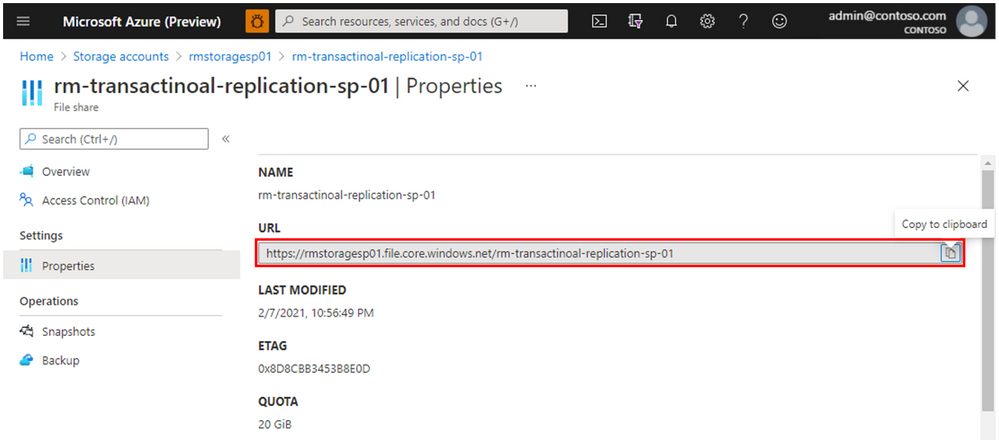

Transactional replication requires a storage account, so if you don’t have one yet, use the Azure portal to create it. Alternatively, you can use one of your existing storage accounts. Within the storage account, create a file share with a quota of 20 GiB.

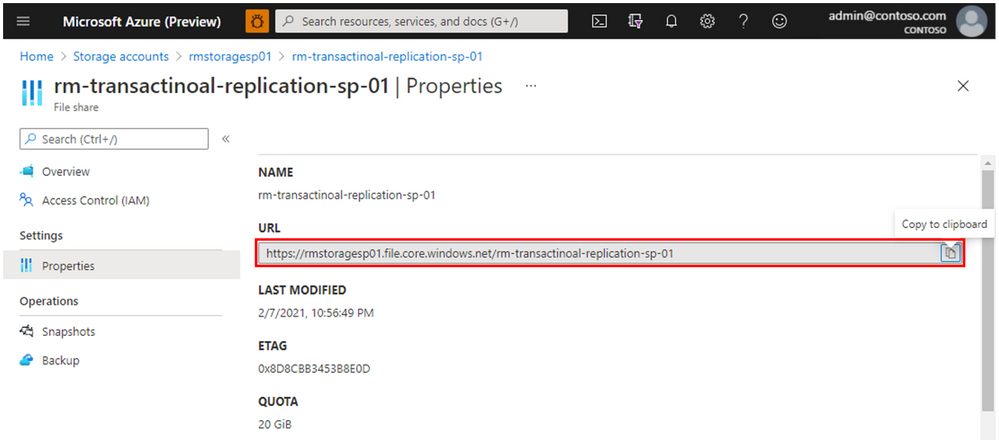

Go to the File share Properties and copy the storage URL because this value will be needed in the following T-SQL script.

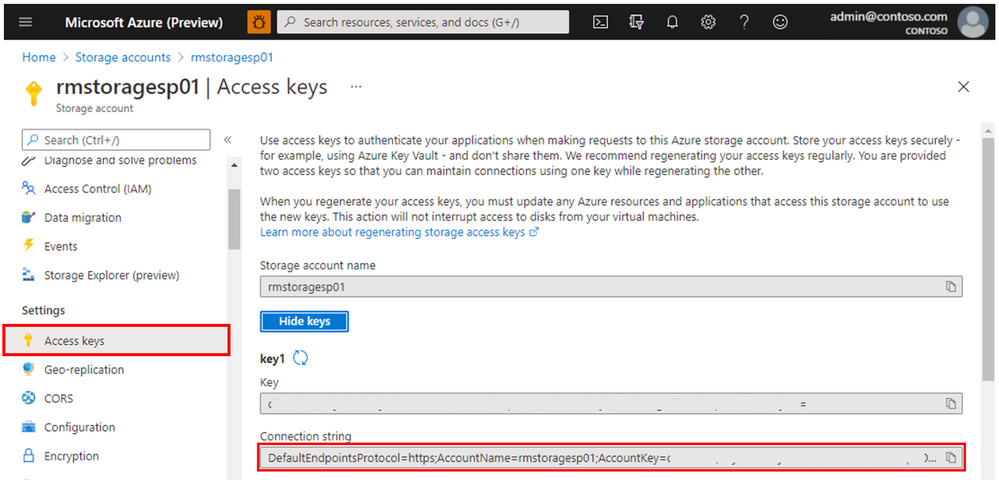

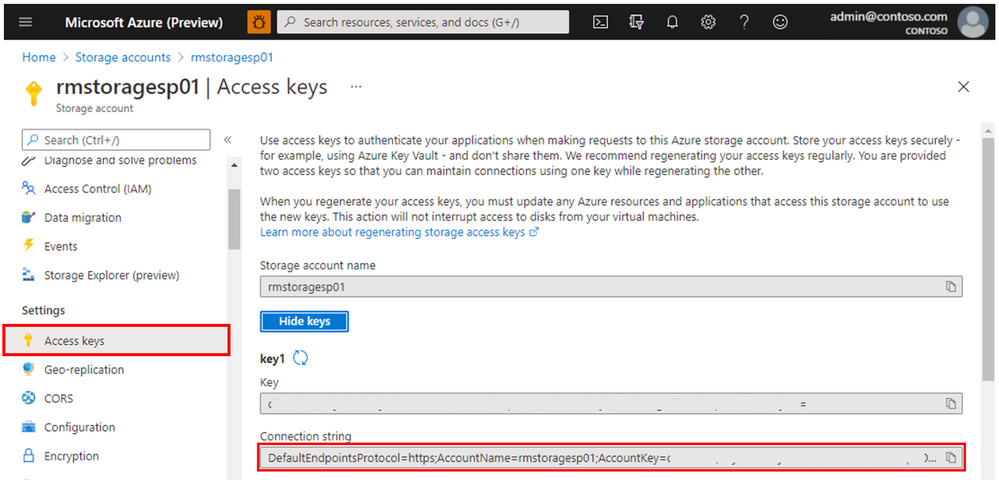

Also, in the storage account’s Settings, open the Access keys section and copy the Connection string, which is also needed for the T-SQL script.

Once the prerequisites are met, we will set up transactional replication by creating the distribution, the publication and finally a subscription.

Configure replication distribution

The storage URL and connection string you copied earlier are used in the following T-SQL script.

DECLARE @distribution_db_name NVARCHAR(50) = N'distribution'

-- Assign the storage connection string you got from the Azure Portal.

DECLARE @storage_conn_string NVARCHAR(MAX) = N'DefaultEndpointsProtocol=https;AccountName=rmstoragesp01;AccountKey=asdf000000000000000000000000000000000000000000000000000000000000000000000000000000000a==;EndpointSuffix=core.windows.net'

-- Assign the storage URL you got from the Azure Portal.

DECLARE @storage_url NVARCHAR(MAX) = N'https://rmstoragesp01.file.core.windows.net/rm-transactinoal-replication-sp-01'

-- Adjust storage URL format.

DECLARE @working_dir NVARCHAR(MAX) = SUBSTRING(@storage_url, 9, LEN(@storage_url) - 8)

SET @working_dir = '\\' + REPLACE(@working_dir, '/', '\')

PRINT @working_dir

-- With the following three commands, set the distributor server and the distribution database name, and configure the publisher to use the specified distribution database.

--

EXEC sp_adddistributor @distributor = @@SERVERNAME

EXEC sp_adddistributiondb @database = @distribution_db_name

EXEC sp_adddistpublisher @publisher = @@SERVERNAME,

@distribution_db = @distribution_db_name,

@security_mode = 0,

@login = N'login_name',

@password = N'password',

@working_directory = @working_dir,

@storage_connection_string = @storage_conn_string
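For readers who find the `SUBSTRING`/`REPLACE` step hard to follow: the working directory handed to `sp_adddistpublisher` is meant to be the file share’s UNC path, that is, the storage URL without `https://` and with backslashes instead of forward slashes. The Python sketch below reproduces that conversion outside SQL purely for illustration; `to_working_directory` is a hypothetical helper, and it assumes the URL always begins with `https://`.

```python
def to_working_directory(storage_url: str) -> str:
    """Convert an Azure Files share URL into the UNC path form used as
    @working_directory, e.g. \\account.file.core.windows.net\share."""
    prefix = "https://"
    if not storage_url.startswith(prefix):
        raise ValueError("expected an https:// storage URL")
    # Mirrors SUBSTRING(@storage_url, 9, LEN(@storage_url) - 8): drop the 8-character scheme.
    host_and_path = storage_url[len(prefix):]
    # Mirrors '\\' + REPLACE(@working_dir, '/', '\'): UNC prefix plus backslash separators.
    return "\\\\" + host_and_path.replace("/", "\\")


if __name__ == "__main__":
    url = "https://rmstoragesp01.file.core.windows.net/rm-transactinoal-replication-sp-01"
    print(to_working_directory(url))
    # prints \\rmstoragesp01.file.core.windows.net\rm-transactinoal-replication-sp-01
```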

Once this is set, you can run the following three commands to verify the settings.

EXEC sp_helpdistributor

GO

EXEC sp_helpdistributiondb

GO

EXEC sp_helpdistpublisher

GO

If you want to drop any of these settings, use the stored procedures sp_dropdistpublisher, sp_dropdistributiondb and sp_dropdistributor; if you need to reset the settings entirely, run EXEC sp_dropdistributor @no_checks = 1, @ignore_distributor = 1.

Configure replication publication

To create the publication, use SSMS and connect to the Managed Instance through the private link (not the public endpoint!). In Object Explorer, go to Replication, Local Publications, and finally New Publication, as in the screenshot below.

This will open a New Publication Wizard. Click Next, select the database you want to publish and again click Next.

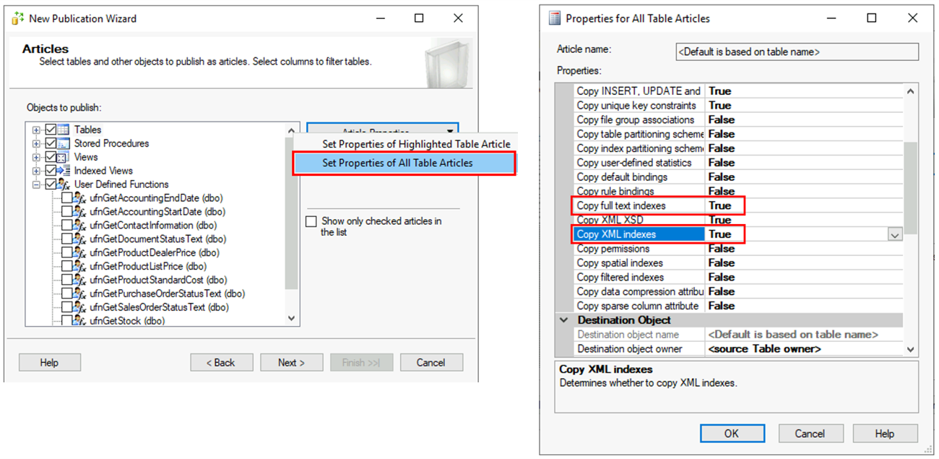

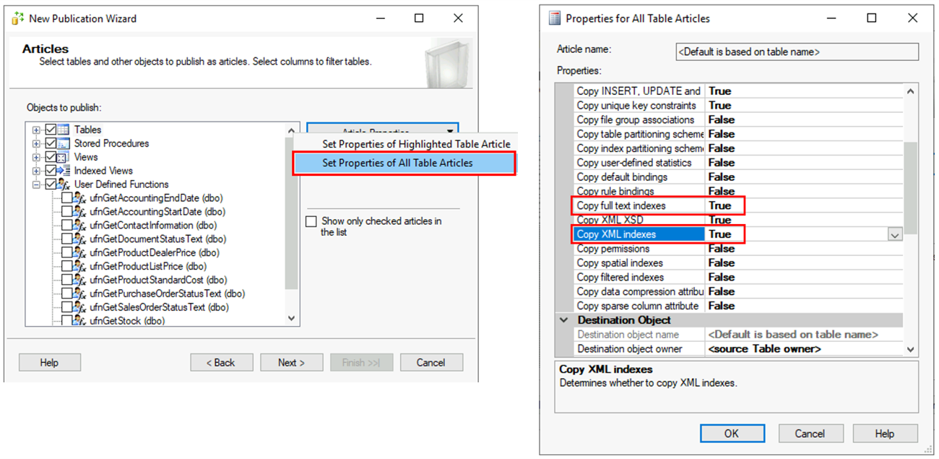

As in the screenshot below, for Publication Type choose Transactional publication and click Next. Then, in the Articles window, choose the objects you want to replicate. In this example, we choose all Tables, Stored Procedures, Views and Indexed Views, and only ufnLeadingZeros from User Defined Functions. Once this is selected, click the Article Properties button.

In Article Properties, select Set Properties of All Table Articles, then find the properties Copy full text indexes and Copy XML indexes and set their values to True.

The wizard will warn about potential problems in the Article Issues window. We will ignore these and click Next. In the Filter Table Rows window we don’t add any filters; just click Next.

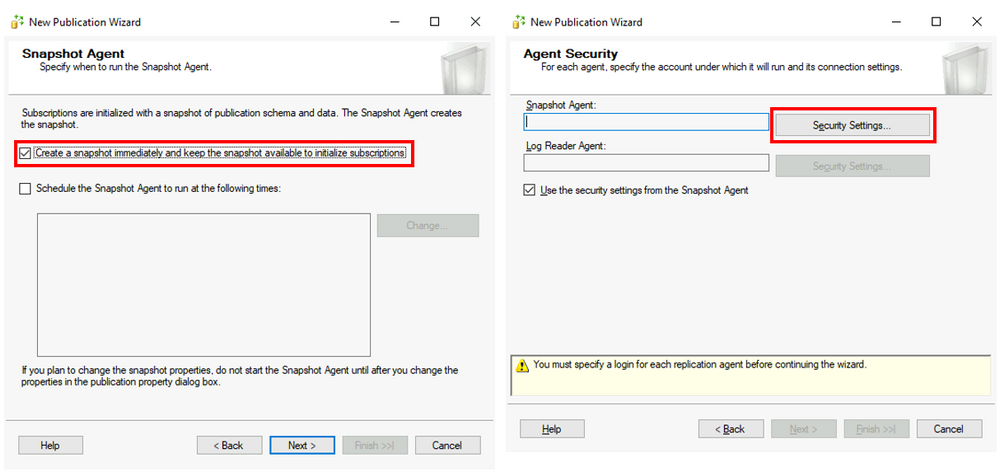

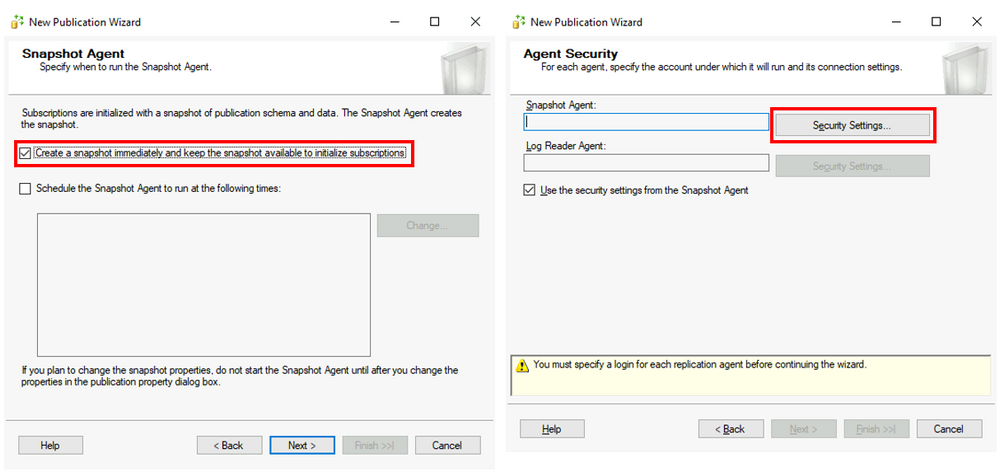

In the Snapshot Agent window, check “Create a snapshot immediately…” and click Next. In the Agent Security window, click Security Settings to provide credentials for both the Snapshot Agent and the connection to the Publisher.

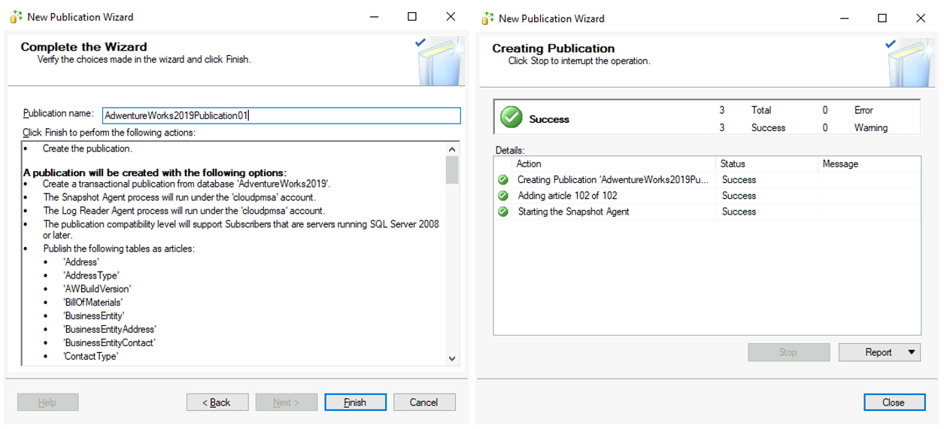

On the Wizard Actions window check Create the Publication and click Next.

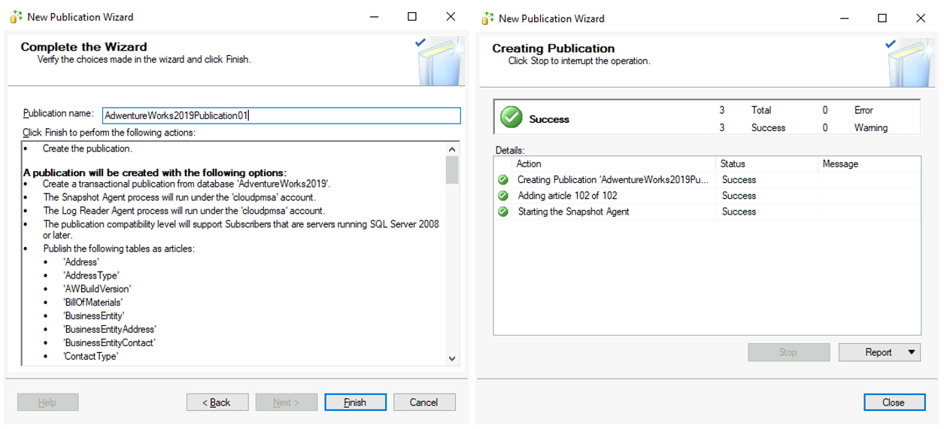

Finally, in Complete the Wizard, provide a name for the publication and click Finish. The wizard shows its progress, and once the process is done you should see a Success status with 0 Errors and 0 Warnings. Click Close. The publication is now created.

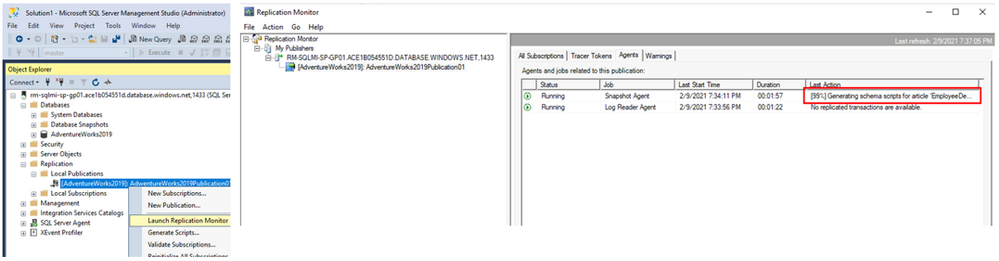

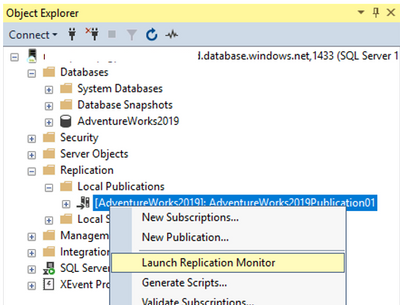

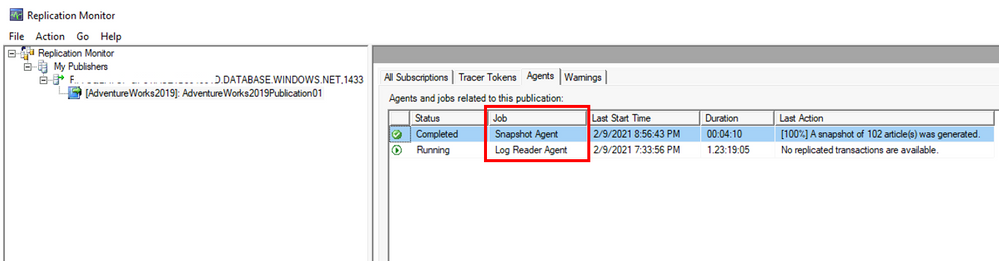

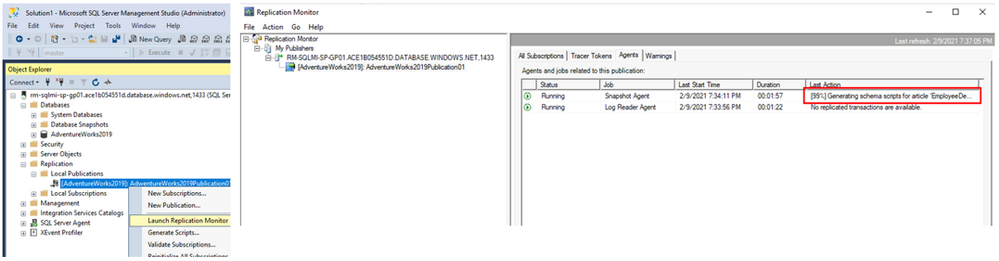

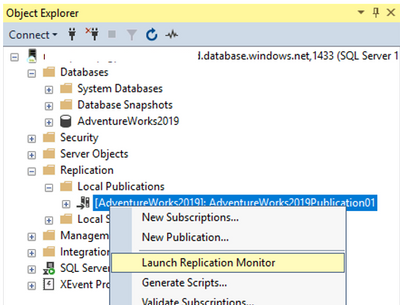

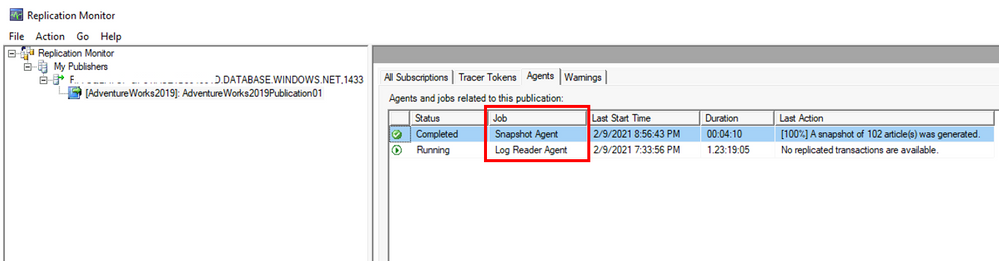

To check the status of the publication, refresh Local Publications in SSMS and you will find the newly created publication. From the context menu of the new publication, click Launch Replication Monitor and find the publisher you just created. On the Agents tab, you can monitor the progress of the Snapshot Agent and the Log Reader Agent. The time needed to create a snapshot varies and depends on the size of the data participating in the replication. Once the Snapshot Agent’s Last Action is “[100%] A snapshot of x article(s) was generated.”, the publication is ready.

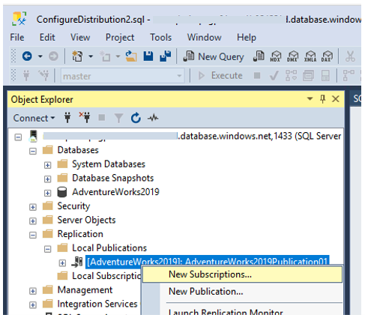

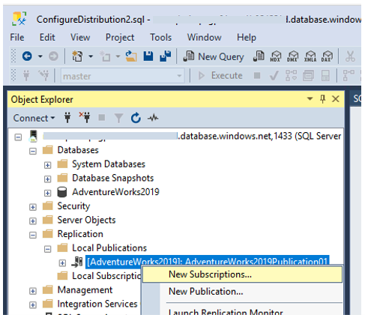

Configure replication subscription

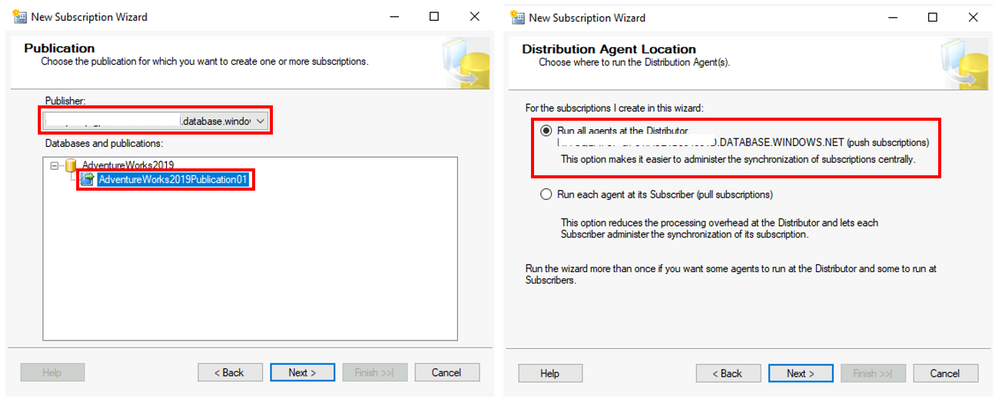

Now that distribution and publication are configured, we need to create a subscription as the last piece of the transactional replication setup. In the context menu of the publication we created, click New Subscriptions. The New Subscription Wizard opens; let’s follow its steps.

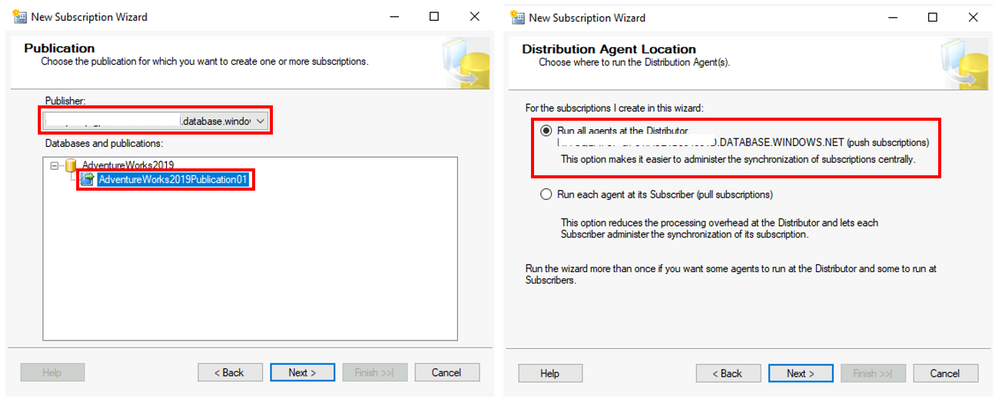

In the Publication window, select the Publisher, the database and the publication, and click Next. In Distribution Agent Location, select the option Run all agents at the Distributor and click Next.

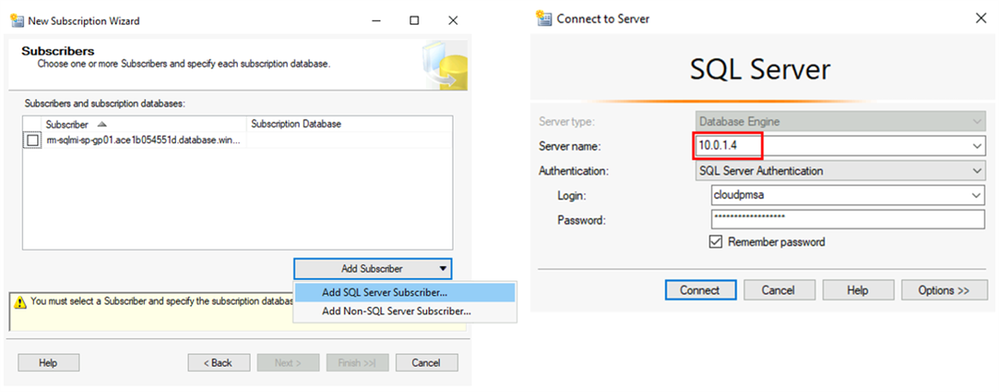

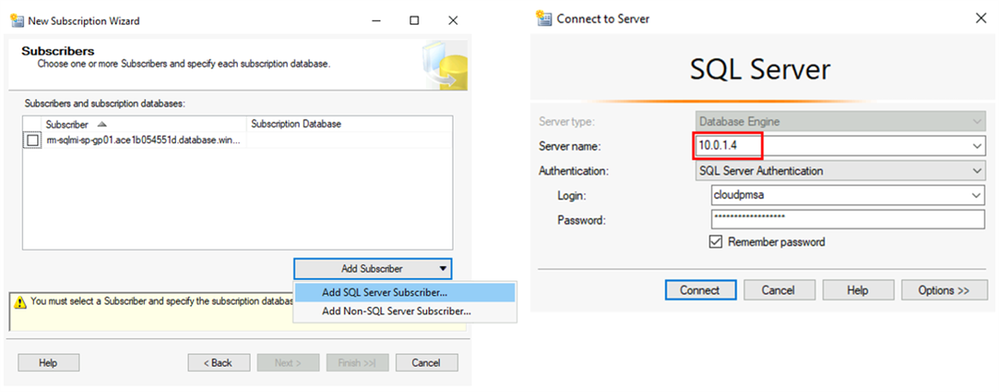

On the Subscriber window, click on Add Subscriber and then Add SQL Server Subscriber. Then connect to the SQL Server that will be the Subscriber. This SQL Server will be the target for the database migration. Use the server’s local IP address for this connection.

For the newly added Subscriber, in Subscription Database choose New database from the drop-down menu, and in the New Database window provide the database name. This will be the name of the target database for the migration. Then click OK and then Next.

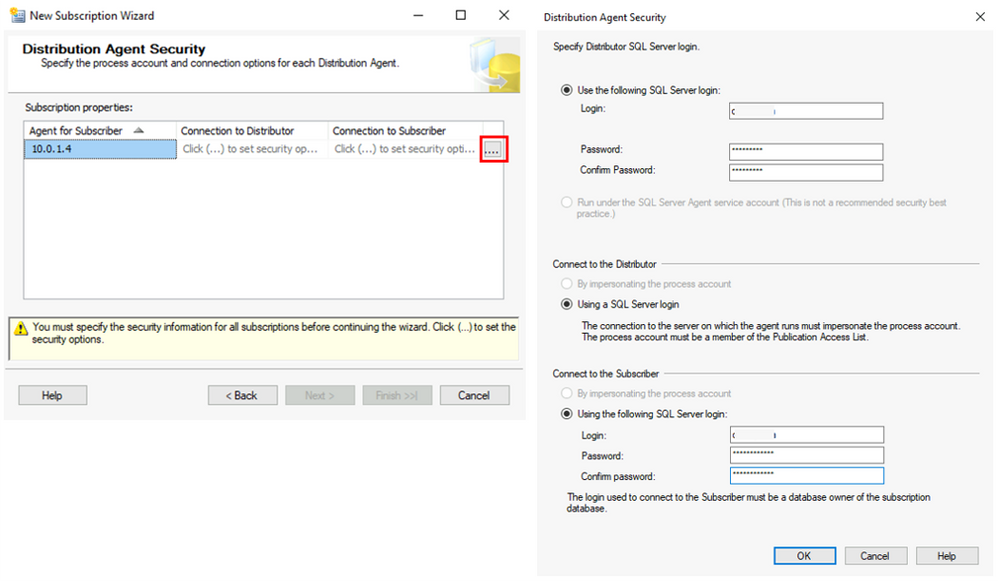

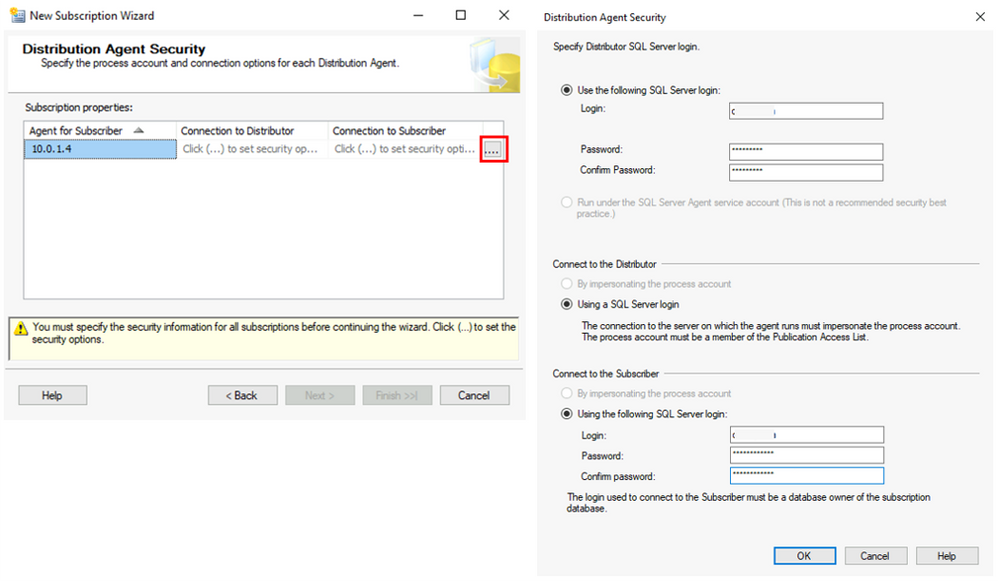

In the Distribution Agent Security window, click the button with three dots, and in the Distribution Agent Security dialog provide the login and password for the Distributor and the Subscriber.

Follow through the wizard. In Synchronization Schedule, leave Agent Schedule set to Run continuously. This enables continuous data flow from the source database to the destination and supports the online migration. In the Initialize Subscription window, leave Initialize When set to Immediately. Then, in the next window, Wizard Actions, leave Create the subscription(s) checked and click Next. In Complete the Wizard, click Finish and wait for the Success status on the final Creating Subscription(s) step.

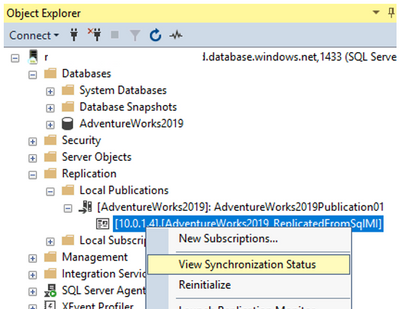

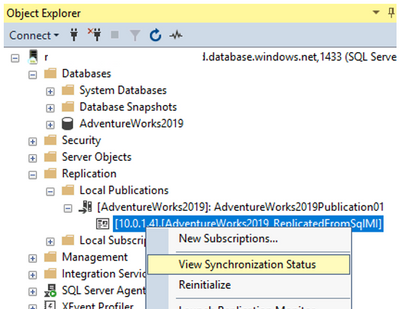

Once this is done, from the context menu of the newly created subscription, open the View Synchronization Status. You will see its progress and eventually all transactions will be replicated.

From the context menu of the Publication click Launch Replication Monitor.

In Replication Monitor, on the Agents tab, you will see the progress of the Snapshot Agent and the Log Reader Agent. Once the Snapshot Agent reaches 100%, the database is replicated, and ongoing transactions will be replicated as well.

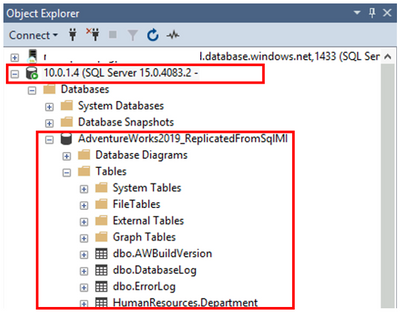

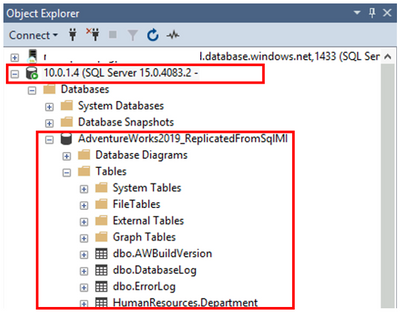

Finally, connect to the SQL Server that is the Subscriber, and you will see that the database has been replicated.

With this, your source database is migrated from Managed Instance to SQL Server. If the workload on the source database is still running, any changes it makes will be replicated to the target database. When you want to finalize the migration, set the source database to read-only mode, wait for replication to complete (in Replication Monitor, the Log Reader Agent’s last action will be “No replicated transactions are available”), and stop transactional replication. This is done by disabling the corresponding SQL Agent jobs and deleting the subscriber, distributor and publisher.
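The cutover order described above matters: writes are frozen first, replication is allowed to drain, and only then is it torn down. The following Python sketch captures that ordering under stated assumptions: `set_source_read_only`, `get_log_reader_last_action` and `stop_replication` are hypothetical callables you would implement yourself (for example with T-SQL, or by reading the status shown in Replication Monitor), and the polling interval and timeout are arbitrary defaults, not recommended values.

```python
import time
from typing import Callable

# Log Reader Agent status that, per the steps above, signals replication has drained.
DRAINED_MESSAGE = "No replicated transactions are available"


def finalize_migration(
    set_source_read_only: Callable[[], None],
    get_log_reader_last_action: Callable[[], str],
    stop_replication: Callable[[], None],
    poll_seconds: float = 30.0,
    timeout_seconds: float = 3600.0,
) -> bool:
    """Freeze writes, wait for the Log Reader Agent to drain, then tear down replication.

    Returns False (and leaves replication running) if draining does not finish in time.
    All three callables are hypothetical hooks supplied by the caller.
    """
    set_source_read_only()  # e.g. put the source database into read-only mode
    deadline = time.monotonic() + timeout_seconds
    while DRAINED_MESSAGE not in get_log_reader_last_action():
        if time.monotonic() >= deadline:
            return False  # investigate before retrying; nothing has been dropped yet
        time.sleep(poll_seconds)
    stop_replication()  # disable agent jobs, delete subscriber, distributor and publisher
    return True
```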

Transactional replication limitations and workarounds

The Managed Instance documentation contains a list of known errors related to transactional replication.

In case you want to know more, take a look at the official Managed Instance transactional replication documentation.

Primary key requirement

All tables that participate in transactional replication need to have a primary key. If a table does not have one, you will have to create it. Alternatively, you can create an indexed (materialized) view over the table that is missing a primary key and replicate that view. Once the data is in the target database, you can create the missing table and insert the data from the replicated view into the newly created table.
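To find the tables that would block publication, you can query the catalog views on the source database. The sketch below is one way to do it, assuming a Python client with a PEP 249 (DB-API 2.0) cursor, such as one from pyodbc; the function name `tables_without_primary_key` is hypothetical. It relies on `OBJECTPROPERTY(object_id, 'TableHasPrimaryKey')`, which returns 1 for tables that have a PRIMARY KEY constraint.

```python
from typing import Any, List, Tuple

# T-SQL to run against the source (publisher) database: user tables with no PRIMARY KEY.
TABLES_WITHOUT_PK_SQL = """
SELECT SCHEMA_NAME(t.schema_id) AS schema_name, t.name AS table_name
FROM sys.tables AS t
WHERE t.is_ms_shipped = 0
  AND OBJECTPROPERTY(t.object_id, 'TableHasPrimaryKey') = 0
ORDER BY schema_name, table_name;
"""


def tables_without_primary_key(cursor: Any) -> List[str]:
    """Return 'schema.table' names that cannot be published as they are.

    `cursor` is any DB-API 2.0 cursor already connected to the source database.
    """
    cursor.execute(TABLES_WITHOUT_PK_SQL)
    rows: List[Tuple[str, str]] = cursor.fetchall()
    return [f"{schema}.{table}" for schema, table in rows]
```

Each name returned is a candidate for either adding a primary key or the indexed-view workaround described above.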

Conclusion

Transactional replication offers a performant and robust way to migrate a database from Managed Instance to SQL Server, with some limitations mentioned in this blog. For more information on the migration options, see Moving databases from Azure SQL Managed Instance to SQL Server.

by Contributed | May 14, 2021 | Technology

This article is contributed. See the original author and article here.

Introduction

A BACPAC is a ZIP file with a .bacpac extension that contains both the metadata and the data of a SQL Server database. For more information, refer to Data-tier Applications – SQL Server | Microsoft Docs. A BACPAC file can be exported to Azure Blob storage or to a local file system in an on-premises location, and later imported into SQL Server, Azure SQL Managed Instance, or Azure SQL Database. Although a BACPAC file contains data and metadata and can be used to deploy a copy of the source database, it is not a backup and shouldn’t be considered as such. However, in situations where a database backup can’t be used, a BACPAC may be an acceptable substitute.
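Because a BACPAC is a ZIP archive, standard ZIP tooling can open it. The Python sketch below lists its entries with the standard `zipfile` module; the helper names are hypothetical, and the check for a root-level `model.xml` entry (where the schema model is typically stored) reflects the usual BACPAC layout rather than anything stated in this article, so treat it as an assumption.

```python
import zipfile
from typing import List


def list_bacpac_entries(path: str) -> List[str]:
    """List the files packed inside a BACPAC, which opens as an ordinary ZIP archive."""
    with zipfile.ZipFile(path) as archive:
        return archive.namelist()


def has_schema_model(path: str) -> bool:
    # Assumption: the metadata (schema model) lives in a model.xml entry at the archive root.
    return "model.xml" in list_bacpac_entries(path)
```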

Note: With BACPACs, data consistency is guaranteed only at the point of execution against each individual database object, meaning that in-flight transactions are missed. In other words, a BACPAC does not guarantee transactional consistency. You will find more details about this later in the Transactional consistency section.

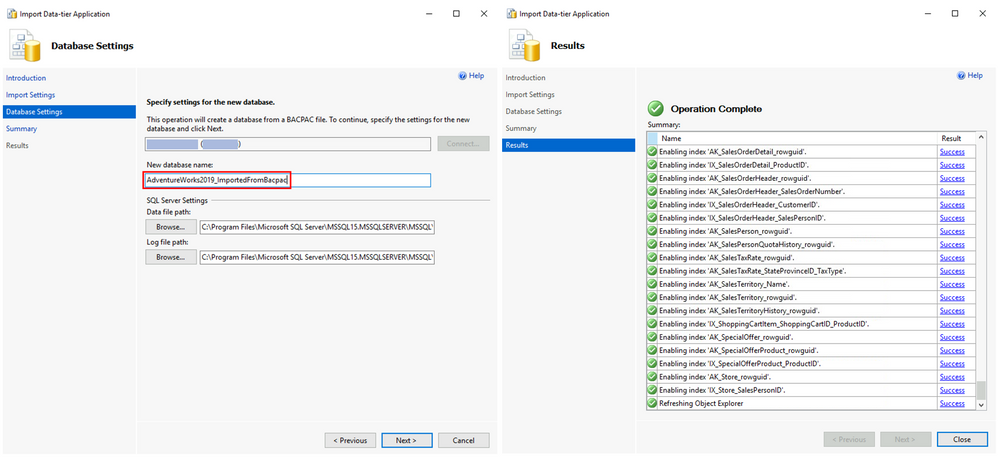

Performing export/import via SSMS and BACPAC

To migrate a user database from Managed Instance to SQL Server, you first need to export the database to a BACPAC file. This can be done with a variety of tools: the Azure portal, the SqlPackage command-line utility, SSMS, Azure Data Studio or PowerShell. The export process is explained in the Azure documentation. The second step is creating a user database on SQL Server by importing the BACPAC you created. That process is also covered in the documentation.

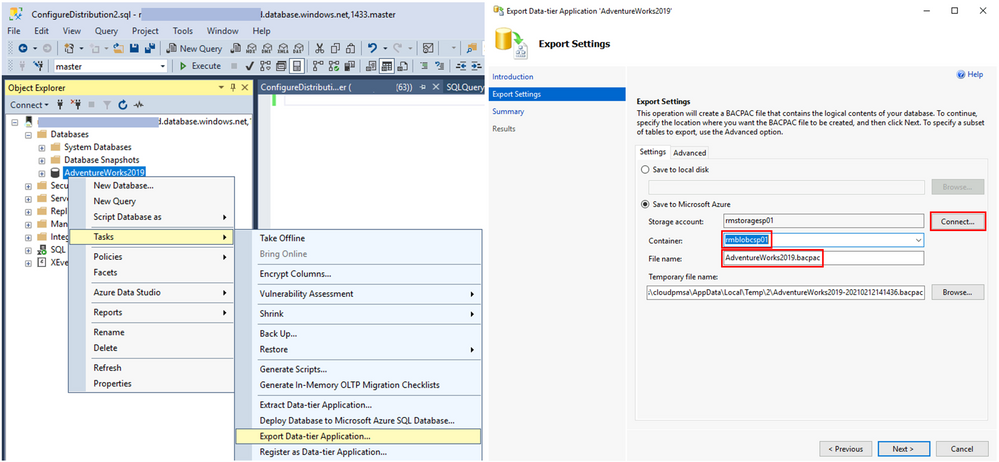

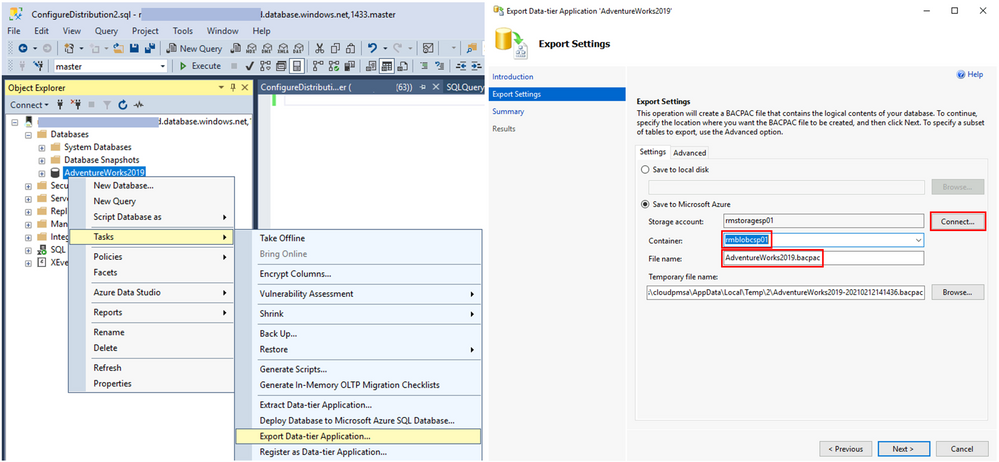

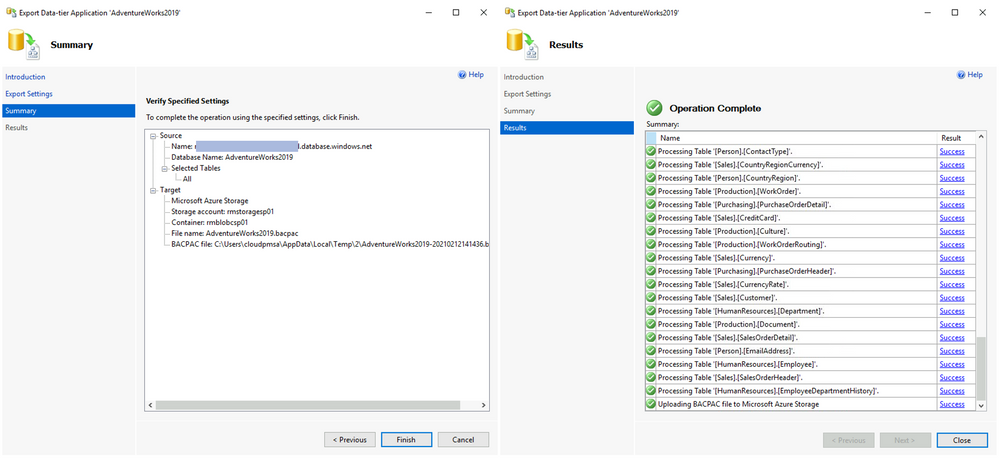

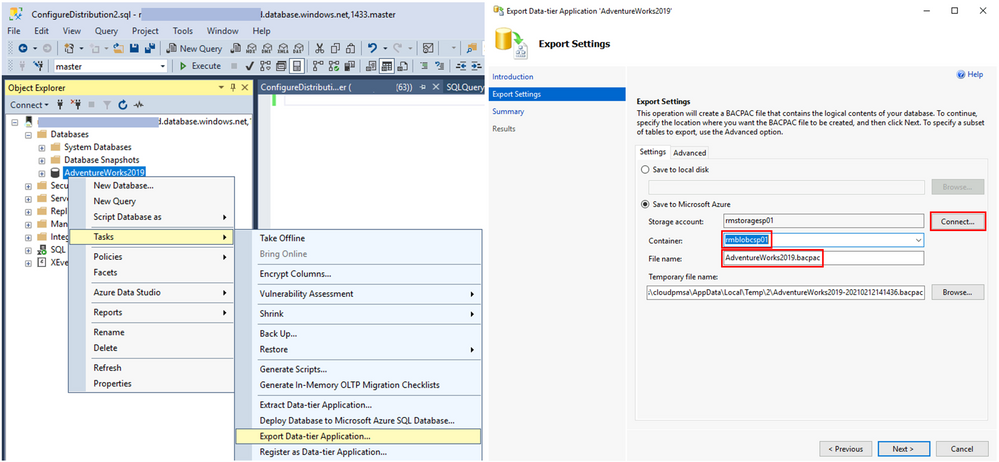

Let’s quickly go through the steps in SSMS. To migrate the database, we will export a BACPAC to Azure Storage (other options are available as well) and then import it from Azure Storage into SQL Server. Here is what it looks like. In SSMS, from the context menu of the database you want to migrate, choose Tasks, Export Data-tier Application. In Export Settings, click Connect, connect with your Azure account, then choose the target container and file name and click Next.

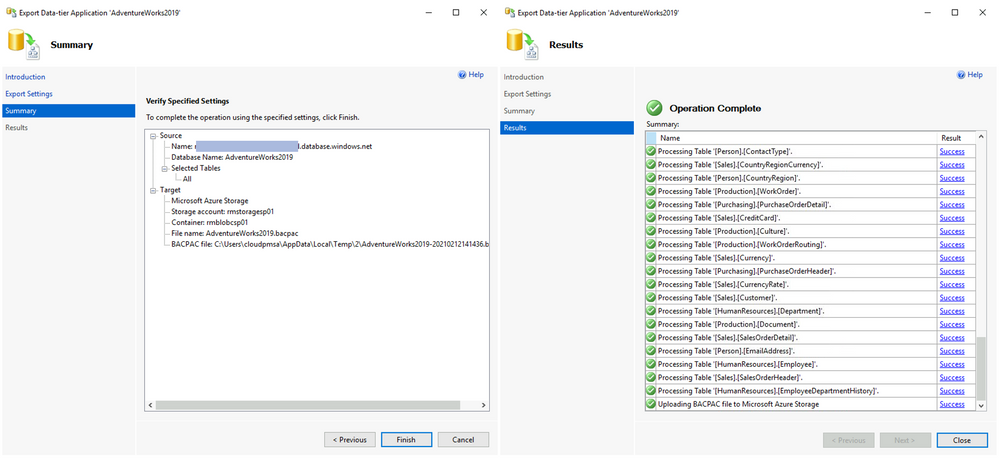

Follow through the Wizard. The final step will show the operation progress. When it reaches Operation Complete, your BACPAC will be saved in the Azure Storage account.

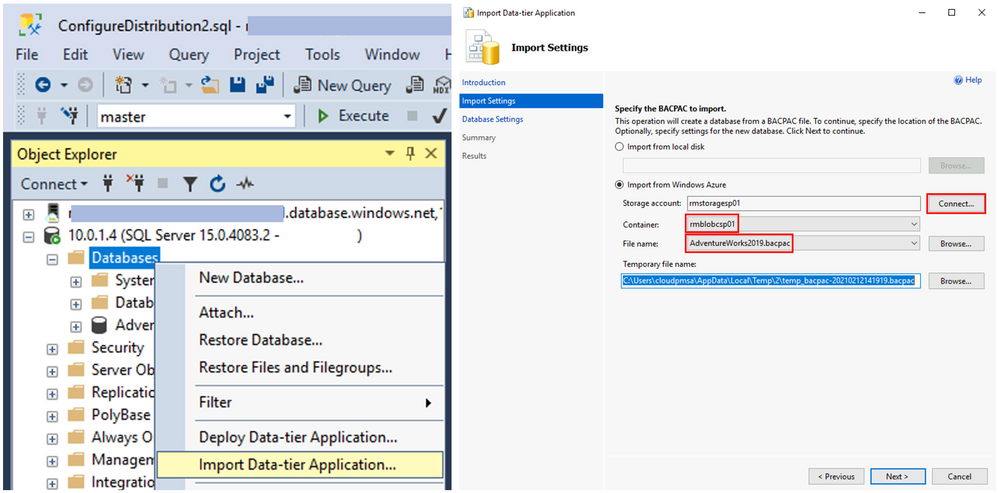

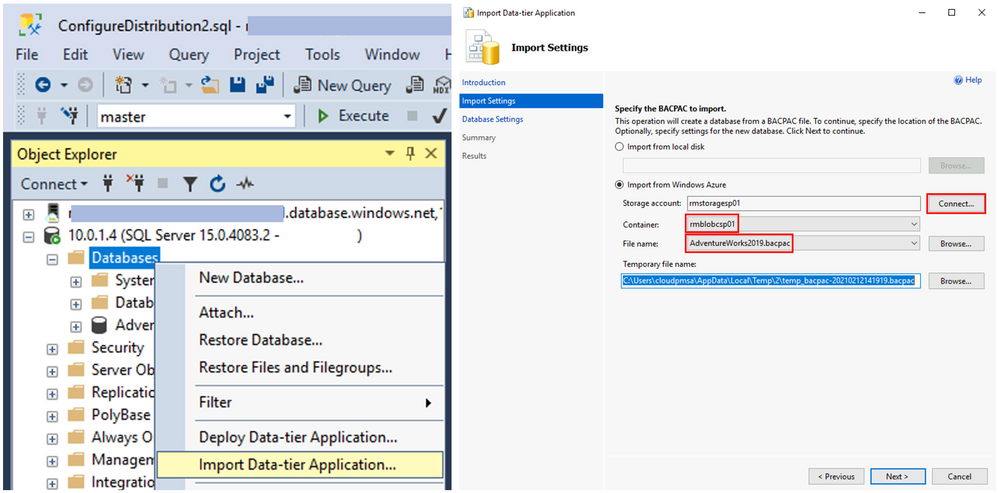

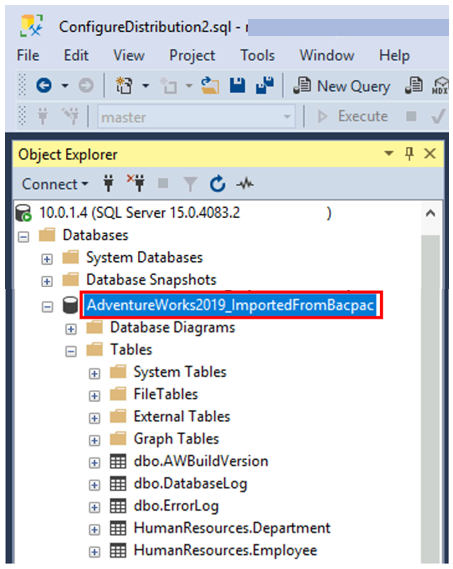

Now connect to the target SQL Server, and from the Databases context menu choose Import Data-tier Application. In the Import Settings window, click Connect, connect to your Azure account, then select the container and file name of the previously saved BACPAC and click Next.

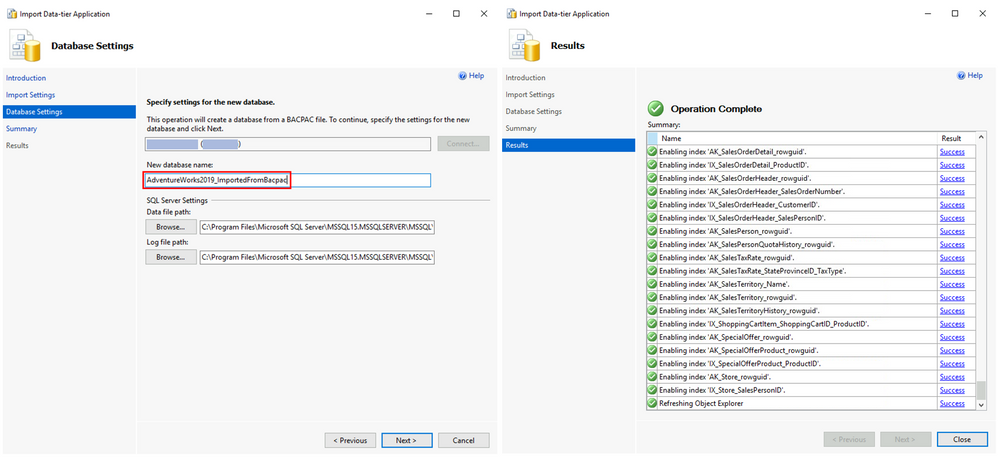

On Database Settings window, set the New database name. Follow the Wizard. The final step will show the operation progress and once it reaches Operation Complete the database will be ready.

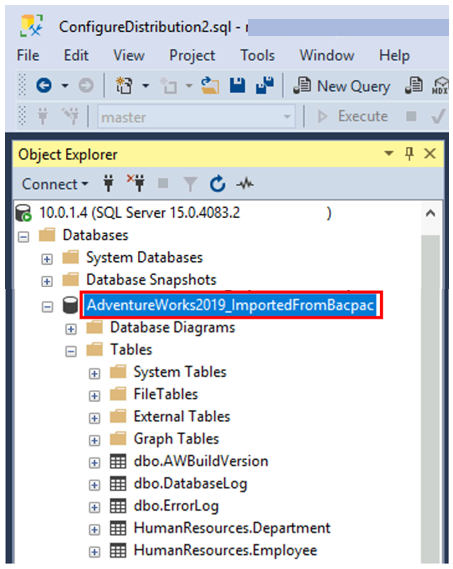

In SSMS, connect to the target SQL Server and you will find the database with schema and data.

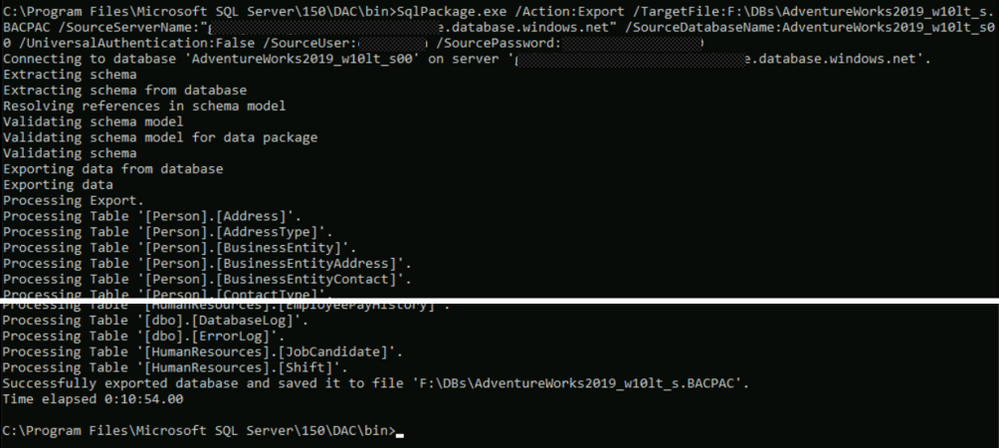

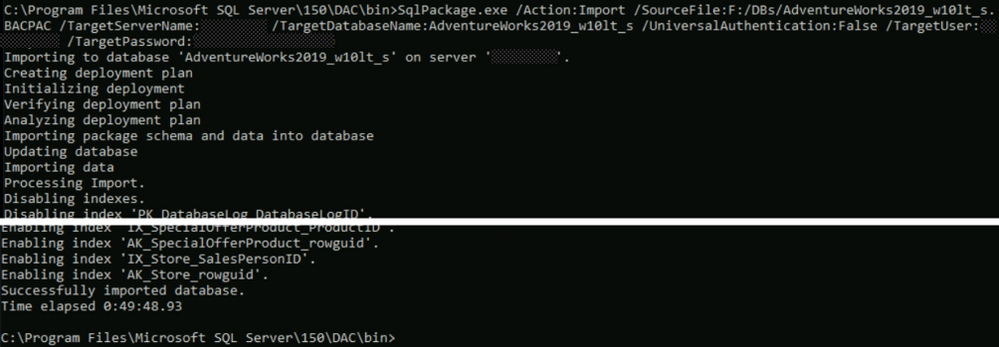

Performing BACPAC export/import via SqlPackage utility

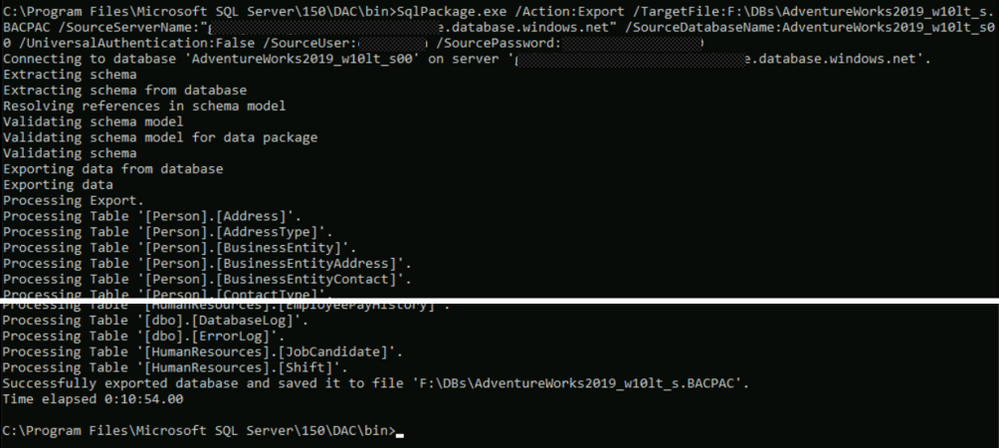

If you need to migrate multiple databases, a command-line tool might be more convenient than SSMS. The utility also offers more parameters than SSMS, giving you more flexibility and options for fine-tuning. The SqlPackage utility can be downloaded from here. Once you install it on a client machine, you can run commands to export a BACPAC from Managed Instance and then import it into SQL Server. Here is an example of that process.

Export command:

C:\Program Files\Microsoft SQL Server\150\DAC\bin>SqlPackage.exe /Action:Export /TargetFile:F:\DBs\AdventureWorks2019_w10lt_s.BACPAC

/SourceServerName:"****.****.database.windows.net" /SourceDatabaseName:AdventureWorks2019_w10lt_s00

/UniversalAuthentication:False /SourceUser:******* /SourcePassword:*******

Import command:

C:\Program Files\Microsoft SQL Server\150\DAC\bin>SqlPackage.exe /Action:Import /SourceFile:F:\DBs\AdventureWorks2019_w10lt_s.BACPAC

/TargetServerName:******* /TargetDatabaseName:AdventureWorks2019_w10lt_s

/UniversalAuthentication:False /TargetUser:******* /TargetPassword:*******
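When several databases need to move, the two commands above can be generated in a loop. The following Python sketch builds the same SqlPackage arguments shown in the examples (and no others) and runs them with `subprocess.run`. The helper names, the assumption that each target database keeps its source name, and the install path are assumptions for illustration; like the original commands, it passes passwords on the command line.

```python
import subprocess
from typing import Iterable, List, Tuple

# Assumed install location, taken from the prompt in the examples above; adjust as needed.
SQLPACKAGE = r"C:\Program Files\Microsoft SQL Server\150\DAC\bin\SqlPackage.exe"


def export_args(server: str, database: str, bacpac: str, user: str, password: str) -> List[str]:
    """Arguments for exporting one database from Managed Instance to a BACPAC file."""
    return [
        SQLPACKAGE, "/Action:Export", f"/TargetFile:{bacpac}",
        f"/SourceServerName:{server}", f"/SourceDatabaseName:{database}",
        "/UniversalAuthentication:False", f"/SourceUser:{user}", f"/SourcePassword:{password}",
    ]


def import_args(server: str, database: str, bacpac: str, user: str, password: str) -> List[str]:
    """Arguments for importing a BACPAC file into a new database on SQL Server."""
    return [
        SQLPACKAGE, "/Action:Import", f"/SourceFile:{bacpac}",
        f"/TargetServerName:{server}", f"/TargetDatabaseName:{database}",
        "/UniversalAuthentication:False", f"/TargetUser:{user}", f"/TargetPassword:{password}",
    ]


def migrate_all(databases: Iterable[str], source_server: str, target_server: str,
                folder: str, source_login: Tuple[str, str], target_login: Tuple[str, str]) -> None:
    """Export then import each database in turn (hypothetical helper)."""
    for db in databases:
        bacpac = f"{folder}\\{db}.bacpac"
        # check=True raises CalledProcessError and stops the loop at the first failure.
        subprocess.run(export_args(source_server, db, bacpac, *source_login), check=True)
        subprocess.run(import_args(target_server, db, bacpac, *target_login), check=True)
```

Running the databases sequentially keeps the load on the source instance predictable; parallelizing would be possible but competes for the same CPU, IO and network resources discussed below.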

Limitations and workarounds

Export/Import performance

Overall performance of the export/import process depends on the resources involved. The source Managed Instance’s service tier (GP or BC), number of cores, available IOPS and resource utilization during the export all affect performance, as does the network bandwidth between the source Managed Instance and the target storage. Finally, the network bandwidth between the BACPAC storage and the target SQL Server, along with the target server’s resources (CPU and storage IO), determines the import duration.

Examples of expected export performance from a GP Managed Instance with default settings:

- The StackOverflow 10 GB sample database was exported in ~20 min (the exported BACPAC was 1.7 GB).

- The StackOverflow 50 GB sample database was exported in ~65 min (the exported BACPAC was 9.2 GB).

Note: If the export operation exceeds 20 hours, it may be canceled, so optimizing for performance can make the difference between failure and success.
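To get a rough feel for whether an export might approach the 20-hour limit, you can extrapolate from the two sample exports above. This is a minimal sketch that assumes export time grows linearly with database size, which is a simplification: performance degrades for large databases, so real exports are likely to be slower than this estimate. The function names are hypothetical.

```python
# Sample exports from a GP Managed Instance with default settings, as quoted above:
# (database size in GB, export time in minutes, BACPAC size in GB)
SAMPLES = [(10, 20, 1.7), (50, 65, 9.2)]

# Exports that run longer than about 20 hours may be canceled.
EXPORT_LIMIT_MINUTES = 20 * 60


def throughput_gb_per_min(size_gb: float, minutes: float) -> float:
    """Average export rate for one sample."""
    return size_gb / minutes


def estimated_minutes(size_gb: float) -> float:
    """Naive linear estimate based on the slowest sample rate (an assumption)."""
    slowest = min(throughput_gb_per_min(size, mins) for size, mins, _ in SAMPLES)
    return size_gb / slowest


def at_risk_of_cancellation(size_gb: float) -> bool:
    return estimated_minutes(size_gb) > EXPORT_LIMIT_MINUTES
```

At the slower sample rate of 0.5 GB/min, 20 hours corresponds to roughly 600 GB. Treat that as an optimistic upper bound rather than a guarantee, and measure on your own instance before relying on it.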

Here are some options for improving performance of export/import:

- If you’re exporting from a General Purpose Managed Instance (remote storage), you can increase the size of the database files on remote storage, because remote-storage IO performance depends on file size. This improves IO performance and speeds up the export.

- Temporarily increase your compute size.

- Limit usage of the database during the export (as in the Transactional consistency scenario, consider using a dedicated copy of the database to perform the export operation).

- Consider using a clustered index with non-null values on all large tables. With a clustered index, the export can be parallelized and is therefore much more efficient. Without clustered indexes, the export service has to perform a full scan of each table to export it, which can lead to time-outs after 6-12 hours for very large tables.

Hint: A good way to determine if your tables are optimized for export is to run DBCC SHOW_STATISTICS and make sure that the RANGE_HI_KEY is not null and its value has good distribution. For details, see DBCC SHOW_STATISTICS.
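If you collect the histogram for a large table's clustered index (for example with `DBCC SHOW_STATISTICS ('dbo.Posts', PK_Posts) WITH HISTOGRAM`, where the table and index names are hypothetical), a small script can apply the hint above across many tables. This is a minimal sketch: the RANGE_HI_KEY, RANGE_ROWS, and EQ_ROWS columns come from the histogram output, but the 25% "good distribution" threshold is an assumption, not a documented rule.

```python
from typing import NamedTuple, Optional, Sequence


class Step(NamedTuple):
    range_hi_key: Optional[object]  # upper bound of the histogram step; None if NULL
    range_rows: float               # rows strictly inside the step
    eq_rows: float                  # rows equal to range_hi_key


def histogram_looks_exportable(steps: Sequence[Step], max_share: float = 0.25) -> bool:
    """Heuristic: no NULL step keys, and no single step holds more than `max_share` of rows."""
    if not steps or any(s.range_hi_key is None for s in steps):
        return False
    rows = [s.range_rows + s.eq_rows for s in steps]
    total = sum(rows)
    if total == 0:
        return False
    return max(rows) / total <= max_share
```

A table that fails this check is a candidate for a closer look at its clustered index before you start a long export.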

Database size

Although exporting to a BACPAC file is one of the easiest options, it’s not possible to use it in every scenario. When exporting to blob storage, the maximum size of a BACPAC file is 200 GB. To produce a larger BACPAC file, export to local storage.
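The 200 GB limit can be turned into a simple pre-check when planning many exports. This minimal sketch assumes you estimate the BACPAC size from the database size; the default ratio of 0.2 is a hypothetical value loosely based on the StackOverflow samples above (1.7 GB from 10 GB, 9.2 GB from 50 GB), and actual compressibility varies with the data.

```python
# Maximum BACPAC size when exporting to blob storage, per the limitation above.
BLOB_LIMIT_GB = 200


def estimate_bacpac_gb(database_gb: float, ratio: float = 0.2) -> float:
    """Rough BACPAC size; the default ratio is an assumption, not a measured constant."""
    return database_gb * ratio


def export_destination(bacpac_gb: float) -> str:
    """'blob' if the file should fit under the blob-storage limit, otherwise 'local'."""
    return "blob" if bacpac_gb <= BLOB_LIMIT_GB else "local"
```

For example, `export_destination(estimate_bacpac_gb(2000))` returns `"local"`, because the estimated 400 GB BACPAC would exceed the blob-storage limit.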

Transactional consistency

As mentioned in the introduction, BACPAC does not guarantee transactional consistency: if a child table is modified after its parent table has been exported, the exported database won’t be consistent. To avoid this, you must ensure that no write activity occurs during the export. You can achieve this by setting your source database to read-only mode, or by restoring your database to the same instance (under a different name) or to a different instance and using that copy as the source for the export.
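If you choose the read-only approach, it is worth making sure the database is switched back to read-write even when the export fails. This is a minimal sketch of that pattern; `execute_sql` and `export` are hypothetical callables you would supply (for example, a connection to `master` in autocommit mode, since ALTER DATABASE cannot run inside a user transaction, and a SqlPackage call). Check that your instance allows changing the read-only option before relying on it.

```python
from typing import Callable


def export_read_only(database: str,
                     execute_sql: Callable[[str], None],
                     export: Callable[[str], None]) -> None:
    """Keep `database` read-only for the export, and always restore read-write afterwards."""
    name = "[" + database.replace("]", "]]") + "]"  # quote the identifier safely
    # ROLLBACK IMMEDIATE rolls back open transactions so the option change can proceed.
    execute_sql(f"ALTER DATABASE {name} SET READ_ONLY WITH ROLLBACK IMMEDIATE;")
    try:
        export(database)
    finally:
        # Runs whether the export succeeded or raised an exception.
        execute_sql(f"ALTER DATABASE {name} SET READ_WRITE WITH ROLLBACK IMMEDIATE;")
```

Note that `ROLLBACK IMMEDIATE` disconnects active sessions, so plan the export for a maintenance window.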

Cross-database references

DacFx (framework used to create and manage BACPAC files) was designed to block Export/Import when object definitions like views, procedures, etc. contain external references. This includes blocking Export/Import for databases with three-part references to themselves.

Possible resolutions for this situation are:

- Modify your database schema, removing all self-referencing three-part name references, reducing them to a two-part name.

- There are many tools and mechanisms, including third-party ones, that can be used to fix the schema and remove these external references. One option is SQL Server Data Tools (SSDT). In SSDT, you can create a database project from your Managed Instance database, setting the target platform of the resulting project to “SQL Azure”. This enables Azure-specific validation of your schema, which flags all three-part name and external references as errors. Once all external-reference errors in the error list have been remedied, you can publish your project back to your Managed Instance database and export/import your database.

- Finally, if you have only a few database objects using three-part names, you can script them out, delete them, use BACPAC to migrate the database and in the end, use created scripts to manually recreate objects that are using three-part names.
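For the first option, a quick script can help find and rewrite self-referencing three-part names in scripted-out object definitions. This is a minimal regex-based sketch, swapped in for illustration; it is not SSDT and it does not parse T-SQL, so always review the output. The function name is hypothetical.

```python
import re


def strip_self_references(definition: str, database: str) -> str:
    """Rewrite self-referencing three-part names (db.schema.object) as two-part names.

    Heuristic only: it handles bare and [bracketed] database names, is case-insensitive,
    and does not parse T-SQL, so matches inside comments or string literals are changed too.
    Forms like db..object (default schema) are left untouched.
    """
    db = re.escape(database)
    pattern = re.compile(
        # Not preceded by an identifier character, dot or closing bracket (so four-part
        # names such as Server.db.schema.object and longer names are skipped) ...
        rf"(?<![\w.\]])"
        # ... then the database name, bare or bracketed, followed by a dot ...
        rf"(?:\[{db}\]|{db})\."
        # ... and a schema name starting right after the dot.
        rf"(?=\[|\w)",
        re.IGNORECASE,
    )
    return pattern.sub("", definition)
```

For example, with `database="AdventureWorks"`, `FROM AdventureWorks.dbo.Person` becomes `FROM dbo.Person`, while references to other databases such as `OtherDb.dbo.T` are left alone and still need to be handled separately.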

Conclusion

Data-tier applications (BACPAC) offer a simple way to migrate a database from Managed Instance to SQL Server, with some remaining limitations. For more information on the migration options, see Moving databases from Azure SQL Managed Instance to SQL Server.

by Contributed | May 14, 2021 | Technology

This article is contributed. See the original author and article here.

Introduction

Let’s move our database to the cloud! This is one of the common scenarios for solution modernization and database migration. There are many ways of migrating SQL Server databases to Microsoft Azure, and plenty of material covers this topic. This blog is about the ability to go back from Azure SQL Managed Instance to a physical or virtual SQL Server environment. At this stage, at least two questions could come up. First, what’s the challenge of going back? Isn’t it just a matter of backup and restore? Well, it isn’t, because the backup-restore strategy does not work in this case today; you will find out more about this below. Second, why would anyone move back from Azure, or the cloud in general, to another place? Our customer and partner cases shed some light on this. One reason, for example, is a policy or general requirement to have a “cloud exit” plan.

Why isn’t backup-restore the way to go?

To better understand this problem, let’s first look at what Managed Instance is. It’s a database product offered as a platform as a service (PaaS), meaning that Microsoft, as the service provider, takes care of various platform aspects for you, such as:

- Providing resources (compute, memory, networking) and setting up the system.

- Built-in high availability and disaster recovery capabilities.

- Regular OS and SQL upgrades.

- Automatic backups, monitoring, etc.

Because SQL bits are upgraded regularly (every few months), Managed Instance runs on “evergreen” SQL bits. This means that the current Managed Instance version is, most of the time, actually newer than the latest SQL Server release. SQL Server backups are not backward compatible, so a backup from Managed Instance cannot be restored to, e.g., SQL Server 2019, for the same reason that a backup from SQL Server 2019 cannot be restored to SQL Server 2017. Specifically, the internal database version mismatch is one of the main technical reasons why a database from Managed Instance cannot be backed up and then restored to SQL Server. Upgrading the internal database version works, but downgrading it has never been supported in SQL Server. So, by design, backups from lower versions of SQL Server can be restored to higher versions, but not vice versa.

Note: You can use this query to check the internal database version on the server instances you’re using:

```sql
select name as database_name, version as internal_database_version from sys.sysdatabases
```
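The compatibility rule described above reduces to a single comparison of the internal versions returned by that query on the source and the target. This is a minimal sketch; the two constants are internal database versions commonly reported by SQL Server 2017 and 2019, but confirm them with the query on your own servers, and expect Managed Instance to report a higher value than your target.

```python
# Internal database versions as reported in sys.sysdatabases.version.
# Commonly seen values; confirm them with the query above on your own servers.
SQL_SERVER_2017 = 869
SQL_SERVER_2019 = 904


def can_restore(backup_version: int, target_version: int) -> bool:
    """Backups restore only to the same or a higher internal database version."""
    return backup_version <= target_version
```

If the value from your Managed Instance is higher than the value from your target SQL Server, `can_restore` returns `False`, which is why the options below are needed instead of backup and restore.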

The lack of backup-restore functionality from higher to lower versions is what makes moving a database from Managed Instance to SQL Server a non-trivial effort, and that’s why migration to Managed Instance is often considered a one-way journey. Making Managed Instance backups portable to on-premises SQL Server is one of the most popular Managed Instance feature requests on Azure Feedback. The team is investigating ways to address this request and make two-way migration, both to and from Managed Instance, easy and simple. Until a solution arrives, we’ll discuss the currently available options for migrating user databases from Managed Instance to SQL Server: Export/Import and Transactional Replication.

Why move away from Managed Instance?

While we’re striving to make Azure SQL Managed Instance the best PaaS database solution, fully compatible with SQL Server, and we want you to like it and use it for your databases, sometimes having an option to easily migrate from Managed Instance to another database system is a necessity. The motivation can come from compliance or legal reasons. Sometimes our customers have contractual obligations to provide database backups to their clients, who might not want to use our cloud solutions. In other cases, development practices require using production schemas and data in development environments, which are often purely on-premises. Finally, you might find that you require more flexibility than PaaS can offer, so you need to go back to IaaS (e.g., managed VMs) or on-premises solutions.

Available options

There are two main SQL technologies that can be used today for moving data from Managed Instance to SQL Server. Those are:

- Export/Import, also known as BACPAC.

- Transactional replication.

Both technologies have different options and some limitations, and these will be discussed later, or in subsequent blog posts.

Here is a brief comparison of the two technologies and their pros and cons.

Export/Import

Using export/import is very simple, either through SSMS or SqlPackage. It’s best suited for offline migration of small and medium databases.

This technology has some limitations:

- It does not handle databases that reference external objects (schemas with three or four-part names).

- Its performance degrades with large databases or databases with a large number of objects (hundreds of GBs, or tens of thousands of objects in a database).

- Produced BACPAC is not transactionally consistent.

If you’d like to read more about this option, see how to migrate database from Managed Instance to SQL Server with export/import.

Transactional replication

This is a more robust way to migrate databases from Managed Instance to SQL Server. It’s best suited for online or offline migration of large and complex databases.

Some outstanding limitations that apply to transactional replication are:

- Tables must have primary keys.

- Setup is not simple.

To find out more about this, see how to migrate database from Managed Instance to SQL Server with transactional replication.
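The trade-offs listed above can be summarized as a rough decision helper for a list of databases. This is a minimal sketch of that comparison only; the size and object-count cut-offs are hypothetical stand-ins for "hundreds of GBs" and "tens of thousands of objects", and `DatabaseProfile` and `suggest_method` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class DatabaseProfile:
    size_gb: float
    object_count: int
    has_external_references: bool      # three- or four-part names in object definitions
    all_tables_have_primary_keys: bool
    needs_online_migration: bool       # source must stay in use during the move


def suggest_method(db: DatabaseProfile,
                   max_bacpac_gb: float = 100,         # hypothetical cut-off
                   max_bacpac_objects: int = 10_000) -> str:  # hypothetical cut-off
    """Pick a migration method from the trade-offs described above."""
    bacpac_fits = (not db.has_external_references
                   and not db.needs_online_migration
                   and db.size_gb <= max_bacpac_gb
                   and db.object_count <= max_bacpac_objects)
    if bacpac_fits:
        return "export/import"
    if db.all_tables_have_primary_keys:
        return "transactional replication"
    # Neither method applies as-is: remove external references or add primary keys first.
    return "remediate schema first"
```

Adjust the thresholds after a trial export on your own instance; the helper only encodes the qualitative guidance above.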

by Contributed | May 14, 2021 | Technology

This article is contributed. See the original author and article here.

Blazor is here – and Chinese Developer Technologies MVP Guangpo Zhang is determined to make sure the technology reaches the heights that it deserves.

The free and open-source web framework, which enables developers to create web apps using C# and HTML, launched in 2018, and Guangpo was inspired to support online learners with a resource he created during the pandemic.

The result is Blazor.Zone, an educational hub that in one year has grown into China’s top Blazor destination with more than 100,000 monthly page views.

“Creating Blazor.Zone is a meaningful thing,” Guangpo says. “When I went to Beijing on a business trip, I was quarantined in a hotel for a month. I took advantage of this month to conceive and complete more than 40 Blazor components and create the open-source website.”

“Since Blazor technology was newly launched by Microsoft, the entire ecosystem was blank, without any easy-to-use UI component library. I wanted to contribute something to the .NET community, so I made this open-source UI component library.”

Blazor lets users build interactive web UIs using C# instead of JavaScript, with Blazor apps composed of reusable web UI components implemented using C#, HTML, and CSS. Both client and server code are written in C#, allowing users to share code and libraries.

In addition to the website, Guangpo is active in helping people learn the framework with Microsoft Docs. After one year of using and fine-tuning the content, Guangpo regards Docs as “a knowledge base camp”.

“We have witnessed the continued improvement and update of the [Docs] website … I always recommend this technology treasure to community members, no matter what level they are, as there are always learning paths and modules for them.”

Today, Blazor.Zone is both a place to develop projects with other coders and a platform to learn and discuss technology. Every Thursday, for example, Guangpo simultaneously updates his open-source projects and MS Learn and Docs content so that users see the learning materials as they read the project update news.

Guangpo also organizes several WeChat groups for Blazor developers and enables administrators to send out MS Learn materials in these groups. Members report that these learning materials are valuable, and they share screenshots of completed modules and problems they encountered in the WeChat learning group.

For more information on Guangpo’s story, check out his blog.

by Contributed | May 14, 2021 | Technology

This article is contributed. See the original author and article here.

Hello! I’m Sue Bohn, Partner Director of Program Management for Identity and Access Management. In this Voice of the Partner blog post, we’ve invited Prakash Narayanamoorthy, Principal Microsoft Security Architect for Wipro, and Terence Oliver Jayabalan, Practice Partner and Global Solutions Lead for IAM at Wipro, to share how their company envisioned, engineered, and brought to market a one-of-a-kind solution for automatically migrating third-party apps to Azure Active Directory—shrinking the migration process from months to hours.

Seamlessly and automatically migrate SSO applications to Azure AD

By Terence Oliver Jayabalan, Practice Partner, Global Solutions Lead for Identity and Access Management

Wipro Limited is a leading global information technology, consulting, and business process services company. We harness the power of cognitive computing, hyper-automation, robotics, cloud, analytics, and emerging technologies to help our clients succeed in the digital world. With over 180,000 employees serving clients across six continents, we’ve been recognized for our comprehensive portfolio of services, commitment to sustainability, and good corporate citizenship. With a staff of more than 8,000 security professionals, Wipro has been helping customers in the Identity and Access Management (IAM) domain for more than two decades through our consulting, advisory, and implementation solutions.

Moving a mountain—app migrations and IAM

Our customers come to us from across industry verticals, but a common pain point for most of them involves user provisioning and access management for single sign-on (SSO) software-as-a-service (SaaS) apps. With Zero Trust now the gold standard for enterprise security, identity has become the new perimeter. Many of our customers are looking to modernize their identity and access management (IAM) landscape by bringing advanced platforms like Azure Active Directory (Azure AD) into their environment, so they can connect and secure all their apps with a single identity solution. With Azure AD, Conditional Access, multifactor authentication, SSO, and automatic user provisioning make IAM easier and more secure across the enterprise. Azure AD also saves money by reducing admin overhead for on-premises user provisioning and authentication; Forrester estimates the value of IT efficiency gains at USD 3.0 million over three years.

However, moving to a new IAM solution often requires the time-consuming task of manually migrating hundreds of SaaS applications from the existing IAM solution. This typically involves the admin getting the connection parameters from the existing tool and manually bringing them into Azure AD, usually by typing the information in or with some form of export-import function. Then the admin has to validate those settings and complete the application-side configuration before the end-to-end integration or migration is finally complete. For a typical business, this process can require several hours for just one app.

Wipro sought to change that. We set out to build a solution that could automate migrating applications from one IAM platform to another while addressing the biggest IAM app-migration challenges:

- Large number of applications needing to be migrated.

- Need for a specialized skillset to carry out the migration.

- Extensive manual effort needed to migrate applications to a new platform.

- No centralized view of the vast IAM landscape.

- Lack of centralized monitoring, reporting, and management for IAM.

- No centralized repository for documents, best practices, templates, or delivery kits.

- Lack of IAM tasks and process automations.

- No simplified view of IAM operations (user details, who has access to what).

Wipro’s solution—Identity Management Center (IMC)

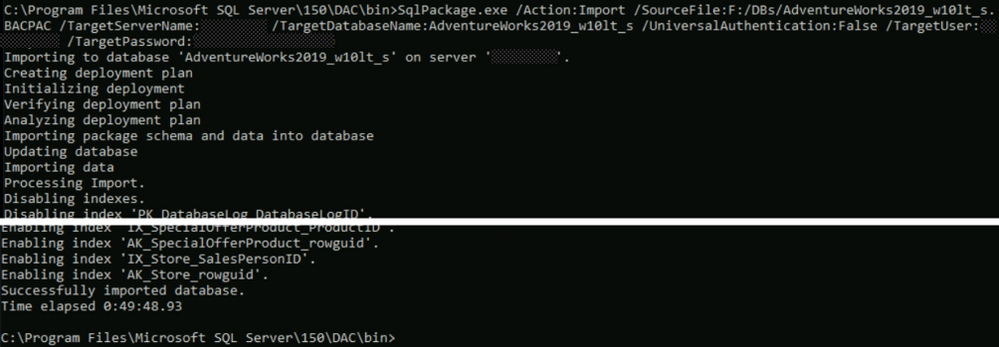

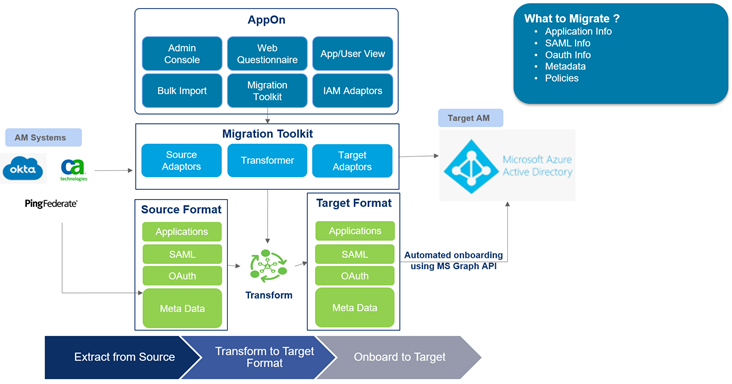

To solve this pain point for our customers, Wipro worked closely with the Microsoft Identity engineering team to enable a seamless solution for onboarding SSO apps to Azure AD. Our new accelerator solution, Identity Management Center (IMC), automates and accelerates the app migration and onboarding process from end to end. IMC supports migrating OIDC and SAML applications and supports multiple IAM systems as both source and target, including new functionality that speeds up migration of SSO apps from Okta to Azure AD.

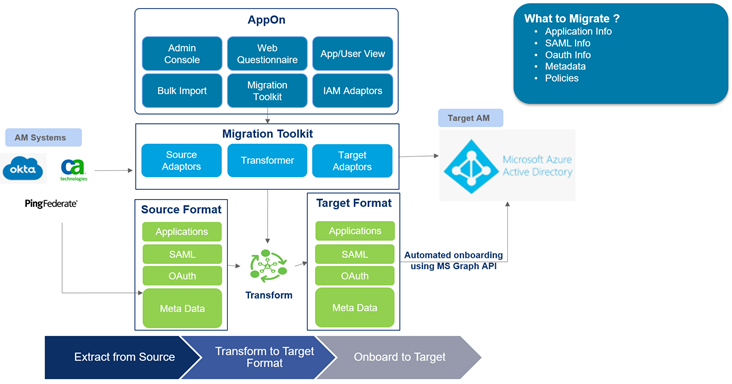

We use the customer’s Okta instance APIs to pull information about each application into IMC, such as SAML settings and related metadata, URLs, and policy information. As that information is pulled in, we transform it into a format that Microsoft Azure AD understands. Once it’s in that format within IMC, we use the Microsoft Graph API to push it into Azure AD.

Figure 1: IMC for Azure AD: Reference architecture
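To make the transform step in this architecture more concrete, here is a minimal sketch of mapping one SAML app definition into request bodies for Microsoft Graph. It is not Wipro’s IMC implementation. The field names on both sides (Okta’s `label`, `signOnMode`, and `settings.signOn`; Graph’s `identifierUris`, `web.redirectUris`, and `preferredSingleSignOnMode`) are assumptions to verify against the Okta Apps API and Microsoft Graph documentation, and a real migration also has to handle signing certificates, claims, and user assignments.

```python
from typing import Any, Dict, Tuple


def okta_saml_to_graph(okta_app: Dict[str, Any]) -> Tuple[Dict[str, Any], Dict[str, Any]]:
    """Map an Okta SAML app definition to two Microsoft Graph PATCH bodies.

    Returns (application_patch, service_principal_patch). Field names on both sides
    are assumptions to verify against the Okta Apps API and Microsoft Graph docs.
    """
    if okta_app.get("signOnMode") != "SAML_2_0":
        raise ValueError("this sketch only handles SAML 2.0 apps")
    sign_on = okta_app["settings"]["signOn"]
    application_patch = {
        "displayName": okta_app["label"],
        # SAML Entity ID (audience) becomes the application's identifier URI.
        "identifierUris": [sign_on["audience"]],
        # Assertion Consumer Service URL becomes the reply (redirect) URI.
        "web": {"redirectUris": [sign_on["ssoAcsUrl"]]},
    }
    # Tells Azure AD to use SAML-based SSO for the enterprise app.
    service_principal_patch = {"preferredSingleSignOnMode": "saml"}
    return application_patch, service_principal_patch
```

Keeping the transform as a pure function, separate from the API calls that pull and push the data, makes it easy to review and test the mapping before anything is written to Azure AD.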

Once the application configuration is loaded into the IMC platform, migrating from one environment to another (Dev to QA, QA to Prod, etc.) requires just the click of a button. It begins with the discovery process in the Okta platform, followed by bringing the required configuration into IMC. The intuitive IMC interface helps users gather the applications’ onboarding details effortlessly via web-form questionnaires. Once the app configurations are onboarded, IMC automatically provisions the apps to Azure AD. Our IMC solution also integrates with IT service management (ITSM) tools like ServiceNow (SNOW), helping to incorporate change-management processes into automated onboarding to Azure AD as well.

Figure 2: IMC accelerated process for SaaS app migration

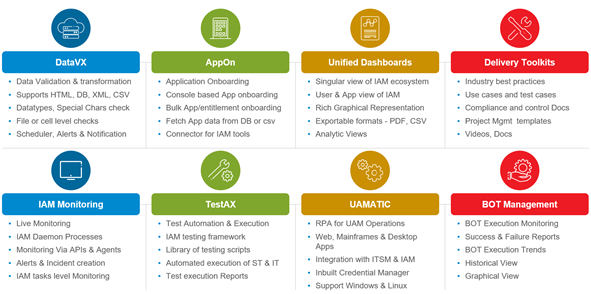

Wipro’s IMC solution is a web-tiered architecture that can be quickly set up on customers’ on-premises or cloud infrastructure. And because IMC is not a multi-tenant solution, data residency and control remain completely in the customer’s hands. IMC provides a single pane of glass for monitoring IAM solutions across your enterprise: a single, holistic service-management platform that provides compliance visibility and includes accelerators and automation toolkits.

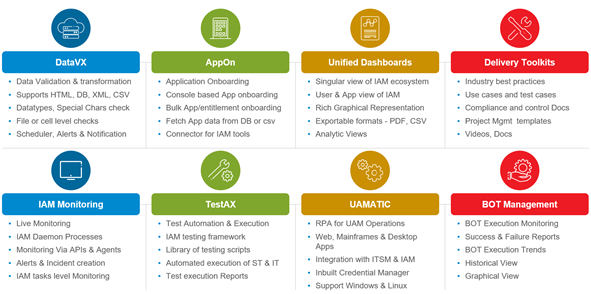

IMC contains eight modules covering enterprise IAM:

- Data VX: Data validation and transformation

- AppOn: Application onboarding

- Unified dashboards: Singular view of IAM ecosystem

- Delivery toolkits: Industry best practices and tool kits

- IAM monitoring: Live monitoring via APIs and agents

- TestAX: Test automation and execution

- UAmatic: Unified access management

- Bot management: Bot execution monitoring

Figure 3: IMC modules

The dashboard can be configured to answer questions such as how many orphan accounts you have, or how many concurrent logins are happening in your access-management system. Validation is another example: if you have 100 applications integrated with your Azure AD, validating them is normally a huge manual effort. Instead, TestAX runs the scripts for you at the click of a button, executing all the use cases in series and providing you with a PDF report.

Results—fast, easy app migration

If a typical manual migration of 500 applications takes around 10 months, our IMC solution can reduce app migration efforts by 60 to 70 percent—dropping migration timelines from months to hours. Working closely with the Azure AD engineering team on the Microsoft Graph APIs and IMC integration, we’ve been able to automate the entire SSO app migration to deliver one-click onboarding from Okta to Azure AD, including:

- Live auto discovery of Okta apps

- No Okta or Azure AD admins required for SSO application onboarding activities

- Automatic transformation of Okta configuration into Azure AD

- One-click migration of configurations

- Automated ticketing with integrated ITSM

- Easily assign applications to users in Azure AD

- Provides Azure AD certificate

Teamwork brings IMC to market

We have a deep connection with the Microsoft Identity engineering team, and they’re really excited about our IMC solution because it’s the only tool of its kind that provides a seamless migration from Okta to Azure AD. We’ve presented IMC to multiple customers, and they’re excited too. This is the only tool that solves their specific pain points around application migration and IAM. Our team at Wipro believes that IMC has the potential for migrating thousands of applications, including deeper integrations with other ecosystems. The results have been so promising that we’re now building migration capabilities for more IAM solutions, such as Ping Identity and Oracle Access Management. We’re expecting IMC’s Okta-to-Azure AD migration feature to enter general availability in Q2 2021.

For additional information about Wipro and their IMC SaaS app-migration solution, please contact cybersecurity.services@wipro.com.

Learn more about Microsoft identity:
