by Contributed | May 18, 2021 | Technology

This article is contributed. See the original author and article here.

Microsoft Build, our free digital event, starts next week and runs from May 25-27, 2021. We thought you might be interested to learn ways you can plan to experience the latest set of developer tools, platforms, and services helping you build amazing things on your terms, anywhere, with Microsoft and Azure. You’ll also have a chance to speak with Microsoft experts and have the opportunity to continue your technical learning journey.

Register to gain full access to everything Microsoft Build has to offer. It’s easy and comes at no cost to you.

Create the perfect event schedule

Explore the session catalog to find expert speakers, interactive sessions, and more. After registering, get started on your journey using the Build session catalog.

Below is a set of featured Technical Sessions that kick off the key themes of Build 2021, along with deeper, related Breakout Sessions that you won’t want to miss:

Increase Developer Velocity with Microsoft’s end-to-end developer platform:

Deliver new intelligent cloud-native applications by harnessing the power of Data and AI:

Build cloud-native applications your way and run them anywhere:

Follow #MSBuild

Explore the latest event news, trending topics, and share your point of view in real time with your community. Join us on Twitter and LinkedIn by using #MSBuild.

Join us on social >

Connection Zone

Only at #MSBuild can you strengthen your network with local connections and meet with Microsoft product teams to help influence the future of products and services.

Connect today>

Learning Zone

The Learning Zone is the center for training, development, and certification with Microsoft. Whatever your style of learning happens to be, you can find content and interactive opportunities to boost and diversify your cloud skills.

Explore trainings >

One-on-one consultation

Schedule your 45-minute, one-on-one consultation with a Microsoft engineer to architect, design, implement or migrate your solutions.

Schedule today >

Continue your learning journey

Discover more in-depth learning paths, training options, communities, and certification details across all Microsoft cloud solutions from one place.

Explore trainings >

by Contributed | May 18, 2021 | Technology


Final Update: Tuesday, 18 May 2021 20:15 UTC

We’ve confirmed that all systems are back to normal with no customer impact as of 05/18, 16:00 UTC. Our logs show that the incident started on 05/18, 12:00 UTC. During the 4 hours it took to resolve the issue, customers using Log Analytics in UK South may have experienced intermittent data latency and incorrect alert activation for resources in this region.

- Root Cause: After our investigation, we found that a backend scale unit became unhealthy due to an ingestion error. The affected ingestion processes logging data for Log Analytics, and its failure caused alert systems to fail.

- Incident Timeline: 4 Hours – 05/18, 12:00 UTC through 05/18, 16:00 UTC

We understand that customers rely on Azure Log Analytics as a critical service and apologize for any impact this incident caused.

-Vincent

by Contributed | May 18, 2021 | Dynamics 365, Microsoft 365, Technology


Microsoft Build is just around the corner! To help you make the most of the three-day digital event, we’ve rounded up some must-see sessions and speakers: more than two dozen technical sessions to help developers across roles and skill levels create amazing experiences with Microsoft Power Platform and Microsoft Dynamics 365.

The post Your guide to Dynamics 365 and Power Platform at Microsoft Build appeared first on Microsoft Dynamics 365 Blog.

Brought to you by Dr. Ware, Microsoft Office 365 Silver Partner, Charleston SC.

by Contributed | May 18, 2021 | Technology


We are pleased to announce the final release of the Windows 10, version 21H1 (a.k.a. May 2021 Update) security baseline package!

Please download the content from the Microsoft Security Compliance Toolkit, test the recommended configurations, and customize / implement as appropriate.

This Windows 10 feature update brings very few new policy settings. At this point, no new 21H1 policy settings meet the criteria for inclusion in the security baseline. We are, however, refreshing the package to ensure the latest content is available to you. The refresh contains an updated administrative template, SecGuide.admx/.adml (which we released with the Microsoft 365 Apps for Enterprise baseline), new spreadsheets, a new .PolicyRules file, and a script change: the Windows Server options in the Baseline-LocalInstall.ps1 script are now commented out.

Windows 10, version 21H1 is a client-only release. Windows Server, version 20H2 is the current Windows Server Semi-Annual Channel release and, per our lifecycle policy, is supported until May 10, 2022.

As a reminder, our security baselines for the endpoint also include Microsoft 365 Apps for Enterprise, which we recently released, as well as Microsoft Edge and Windows Update.

Please let us know your thoughts by commenting on this post or via the Security Baseline Community.

by Contributed | May 18, 2021 | Technology


Windows 10, version 21H1 is now available through Windows Server Update Services (WSUS) and Windows Update for Business, and can be downloaded today from Visual Studio Subscriptions, the Software Download Center (via Update Assistant or the Media Creation Tool), and the Volume Licensing Service Center[1]. Today also marks the start of the 18-month servicing timeline for this H1 (first half of the calendar year) Semi-Annual Channel release.

Windows 10, version 21H1 (also referred to as the Windows 10 May 2021 Update) offers a scoped set of improvements in the areas of security, remote access, and quality to ensure that your organization and your end users stay protected and productive. Just as we did for devices updating from Windows 10, version 2004 to version 20H2, we will be delivering Windows 10, version 21H1 via an enablement package to devices running version 2004 or version 20H2—resulting in a fast installation experience for users of those devices. For those updating to Windows 10, version 21H1 from Windows 10, version 1909 and earlier, the process will be similar to previous updates.


What is an enablement package?

Simply put, an enablement package is a great option for installing a scoped feature update like Windows 10, version 21H1 because it enables devices to update with a single restart, reducing downtime. This works because Windows 10, version 21H1 shares a common core operating system, with an identical set of system files, with versions 2004 and 20H2. As a result, the scoped set of features in version 21H1 was included in the May 2021 monthly quality updates for version 2004 and version 20H2, but was delivered in a disabled/dormant state. These features remain dormant until they are turned on with the Windows 10, version 21H1 enablement package, a small, quick-to-install “switch” that activates them. With an enablement package, installing the Windows 10, version 21H1 update should take approximately the same amount of time as a monthly quality update.

Note: If you are connected to WSUS and running Windows 10, version 2004 or version 20H2, but have not installed the May 2021 updates (or later), you will not see the version 21H1 enablement package offered to your device. Devices running version 2004 or version 20H2 connecting directly to Windows Update will be able to install the enablement package, but will also install the Latest Cumulative Update (LCU) at the same time (if needed), which may increase the overall installation time slightly.
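The eligibility rules described above can be sketched as a small decision function. This is only an illustration, not an official detection method: the `Device` type, the `update_path` function, and the return strings are hypothetical, and the minimum revision value is an assumption that should be checked against the Windows 10 update history before use.

```python
from dataclasses import dataclass

# OS build numbers: version 2004 = 19041, version 20H2 = 19042, version 21H1 = 19043.
ENABLEMENT_SOURCE_BUILDS = {19041: "2004", 19042: "20H2"}

# ASSUMPTION: revision (the ".NNN" suffix of the build string) that corresponds
# to the May 2021 cumulative update. Verify this value before relying on it.
ASSUMED_MIN_REVISION = 985


@dataclass(frozen=True)
class Device:
    """Hypothetical inventory record for one device."""
    name: str
    build: int      # e.g. 19042
    revision: int   # e.g. 985 for build string 19042.985


def update_path(device: Device, min_revision: int = ASSUMED_MIN_REVISION) -> str:
    """Classify how a device would reach version 21H1."""
    if device.build >= 19043:
        return "already on 21H1 or later"
    if device.build in ENABLEMENT_SOURCE_BUILDS:
        if device.revision >= min_revision:
            return "enablement package"
        # On WSUS the enablement package is not offered until the device has
        # the May 2021 (or later) updates installed.
        return "install latest cumulative update first"
    # Version 1909 and earlier follow the regular feature update process.
    return "full feature update"
```

For example, `update_path(Device("pc-01", 19042, 985))` falls into the enablement package branch, while a version 1909 device (build 18363) falls through to the full feature update branch.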


Which tools are being updated for version 21H1?

To support the release of Windows 10, version 21H1, we have released updated versions of the following tools:

What about other tools?

As Windows 10, version 21H1 shares a common core and an identical set of system files with version 2004 and 20H2, the following tools do not need to be updated to work with version 21H1:

- Windows Assessment and Deployment Kit (Windows ADK) for Windows 10, version 2004 – Customize Windows images for large-scale deployment or test the quality and performance of your system, added components, and applications with tools like the User State Migration Tool, Windows Performance Analyzer, Windows Performance Recorder, Windows System Image Manager (SIM), and the Windows Assessment Toolkit.

- Windows PE add-on for the Windows ADK, version 2004 – Small operating system used to install, deploy, and repair Windows 10 for desktop editions (Home, Pro, Enterprise, and Education). (Note: Prior to Windows 10, version 1809, WinPE was included in the ADK. Starting with Windows 10, version 1809, WinPE is an add-on. Install the ADK first, then install the WinPE add-ons to start working with WinPE.)

Any resources being updated?

To support Windows 10, version 21H1, we are updating the key resources you rely on to effectively manage and deploy updates in your organization, including:

- Windows release health hub – The quickest way to stay up to date on update-related news, announcements, and best practices; important lifecycle reminders, and the status of known issues and safeguard holds.

- Windows 10 release information – A list of current Windows 10 versions by servicing option along with release dates, build numbers, end of service dates, and release history.

- Windows 10, version 21H1 update history – A list of all updates (monthly and out-of-band) released for Windows 10, version 21H1 sorted in reverse chronological order.

New features to explore

As noted above, Windows 10, version 21H1 offers a scoped set of features focused on the core experiences that you rely on the most as you support both in-person and remote workforces. Here are the highlights for commercial organizations:

- Windows Hello multi-camera support. For devices with a built-in camera and an external camera, Windows Hello would previously use the built-in camera to authenticate the user, while apps such as Microsoft Teams were set to use the external camera. In Windows 10, version 21H1, Windows Hello and Windows Hello for Business now default to the external camera when both built-in and external Windows Hello-capable cameras are present on the device. When multiple cameras are available on the same device, Windows Hello will prioritize as follows:

- SecureBio camera

- External FrameServer camera with IR + Color sensors

- Internal FrameServer camera with IR + Color sensors

- External camera with IR only sensor

- Internal camera with IR only sensor

- Sensor Data Service or other old cameras
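The priority order above amounts to picking the highest-ranked camera from whatever is attached. The sketch below illustrates that selection logic only; the category labels, the `Camera` type, and `pick_camera` are hypothetical names invented for this example and do not correspond to any Windows API.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical labels for the six categories listed above.
# Position in the list encodes priority: index 0 is preferred first.
PRIORITY = [
    "securebio",
    "external_frameserver_ir_color",
    "internal_frameserver_ir_color",
    "external_ir_only",
    "internal_ir_only",
    "sensor_data_service_or_legacy",
]


@dataclass(frozen=True)
class Camera:
    name: str
    category: str  # one of the labels in PRIORITY


def pick_camera(cameras: List[Camera]) -> Optional[Camera]:
    """Return the highest-priority camera, or None if none is recognized."""
    known = [c for c in cameras if c.category in PRIORITY]
    if not known:
        return None
    return min(known, key=lambda c: PRIORITY.index(c.category))
```

With a built-in IR-only camera and an external IR + Color camera attached, this selection returns the external camera, which matches the new default behavior described above.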

- Microsoft Defender Application Guard enhancements. With Windows 10, version 21H1, end users can now open files faster while Application Guard checks for possible security concerns.

- Security updates. Windows 10, version 21H1 provides security updates for Windows App Platform and Frameworks, Windows Apps, Windows Input and Composition, Windows Office Media, Windows Fundamentals, Windows Cryptography, the Windows AI Platform, Windows Kernel, Windows Virtualization, Internet Explorer, and Windows Media.

- Windows Management Instrumentation (WMI) Group Policy Service (GPSVC) performance improvements to support remote work scenarios. Previously, when an administrator changed user or computer group membership, the changes propagated slowly. Although the access token eventually updated, the changes were not reflected when the gpresult /r or gpresult /h commands were run during troubleshooting. This was most noticeable in remote work scenarios and has been addressed.

What else have we been up to?

Aside from Windows 10, version 21H1, we’ve been busy with other new, exciting features and solutions that you may have heard about! (Note that some of these may require additional licensing or services.) Check out the links for details:

- Passwordless authentication – Speaking of Windows Hello for Business, I wanted to make sure you didn’t miss our March announcement that passwordless authentication is now generally available for hybrid environments! This is a huge milestone in our zero-trust strategy, helping users and organizations stay secure with features like Temporary Access Pass.

- Windows Update for Business deployment service – Approve and schedule content approvals directly through a service-to-service architecture. Use Microsoft Graph APIs to gain rich control over the approval, scheduling, and protection of content delivered from Windows Update.

- Expedite updates – Expediting a security update overrides Windows Update for Business deferral policies so that the update is installed as quickly as possible. This can be useful when critical security events arise and you need to deploy an update more rapidly than normal.

- Known Issue Rollback – Quickly return an impacted device back to productive use if an issue arises during a Windows update. Known Issue Rollback supports non-security bug fixes, enabling us to quickly revert a single, targeted fix to a previously released behavior if a critical regression is discovered.

- News and interests – For devices running Windows 10, version 1909 or later, news and interests in the taskbar enables users to easily see local weather and traffic as well as favorite stocks and the latest news on topics related to professional or personal interests. To learn how to manage news and interests via Group Policy or Microsoft Endpoint Manager, see Manage news and interests on the taskbar with policy.

- Universal Print – Now generally available, Universal Print is ready for your business! Universal Print is the premier cloud-based printing solution, run entirely in Microsoft Azure, and requires no on-premises print infrastructure.

- …and so much more! Follow the Windows IT Pro Blog (and @MSWindowsITPro on Twitter) to keep up-to-date on Windows announcements and new feature releases, and the Microsoft Endpoint Manager Blog (and @MSIntune on Twitter) for announcements and features new to Intune and Configuration Manager.

Deployment recommendations

With today’s release, you can begin targeted deployments of Windows 10, version 21H1 to validate that the apps, devices, and infrastructure used by your organization work as expected with the new features. If you will be updating devices used in remote or hybrid work scenarios, I recommend reading or revisiting Deploying a new version of Windows 10 in a remote world. For insight into our broader rollout strategy for this release, see John Cable’s post, How to get the Windows 10 May 2021 Update.

If you need a refresher on Windows update fundamentals, see:

For step-by-step online learning to help you optimize your update strategy and deploy updates more quickly across your device estate, see:

To get an early peek at some of the new features before we release them, join the Windows Insider Program for Business! Insiders can test new deployment, management, and security features, and provide feedback before they become generally available. Learn about managing the installation of Windows 10 Insider builds across multiple devices and get started today!

Join us for Office Hours

And finally, make sure you join our monthly Windows Office Hours, where you can ask your deployment, servicing, and updating questions and get answers, support, and help from our broad team of experts. Submit questions live during the monthly one-hour event or post them in advance if that schedule does not work for your time zone. Our next event is Thursday, May 20, 2021, so add it to your calendar and join us!

[1] It may take a day for downloads to be fully available in the VLSC across all products, markets, and languages.

by Contributed | May 18, 2021 | Technology


Azure Databricks is trusted by organizations such as the U.S. Department of Veterans Affairs (VA), Centers for Medicare and Medicaid Services (CMS), Department of Transportation (DOT), DC Water, Unilever, Daimler, Credit Suisse, Starbucks, AstraZeneca, McKesson, ExxonMobil, ABN AMRO, Humana and T-Mobile for mission-critical data and AI use cases.

Security and compliance using Azure Databricks

Databricks maintains the highest level of data security by incorporating industry leading best practices into our cloud computing security program. Azure Government general availability provides customers the assurance that Azure Databricks is designed to meet United States Government security and compliance requirements to support sensitive analytics and data science use cases. Azure Government is a gold standard among public sector organizations and their partners who are modernizing their approach to information security and privacy.

FedRAMP High authorization provides customers the assurance that Azure Databricks meets U.S. Government security and compliance requirements to support their sensitive analytics and data science use cases. Azure Databricks has also received a Provisional Authorization (PA) by the Defense Information Systems Agency (DISA) at Impact Level 5 (IL5). The authorization further validates Azure Databricks security and compliance for higher sensitivity Controlled Unclassified Information (CUI), mission-critical information and national security systems across a wide variety of data analytics and AI use cases.

Cloud-native security

In addition to core Databricks security features, Azure Databricks provides native integration with Azure security features to safeguard your most sensitive data and enhance compliance. Azure Databricks provides Azure Active Directory (Azure AD) integration, role-based access controls and service-level agreements (SLAs) that protect your data and your business. Azure AD native integration enables customers to provide a secure, zero-touch experience from the moment an Azure Databricks workspace is deployed. Azure AD authentication enables customers to securely and seamlessly connect to other services from Azure Databricks using their Azure AD account, dramatically reducing the time to deploy a solution.

Learn more about Azure Databricks security

Join us at Data + AI Summit for a technical deep-dive on security best practices to help you deploy, manage and operate a secure analytics and AI environment using Azure Databricks. Visit the new Azure Databricks Security and Compliance page for additional resources to help you implement security best practices, and attend an Azure Databricks Quickstart Lab to get hands-on experience.

by Contributed | May 18, 2021 | Technology


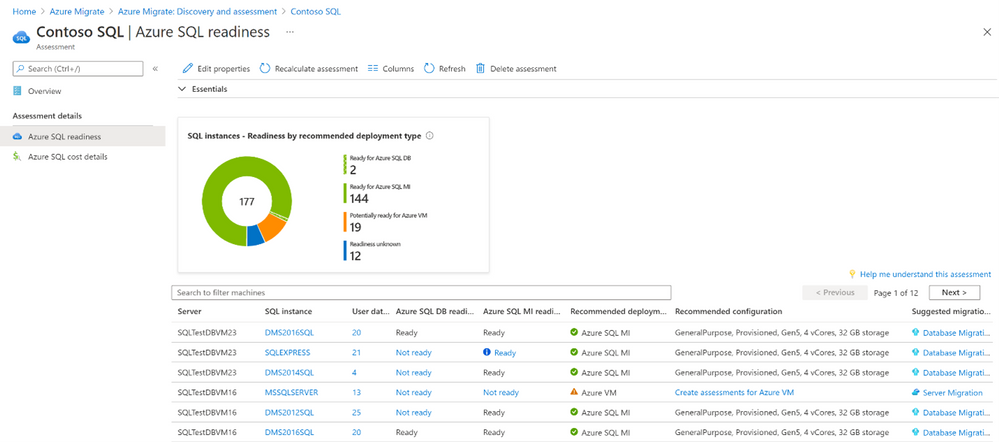

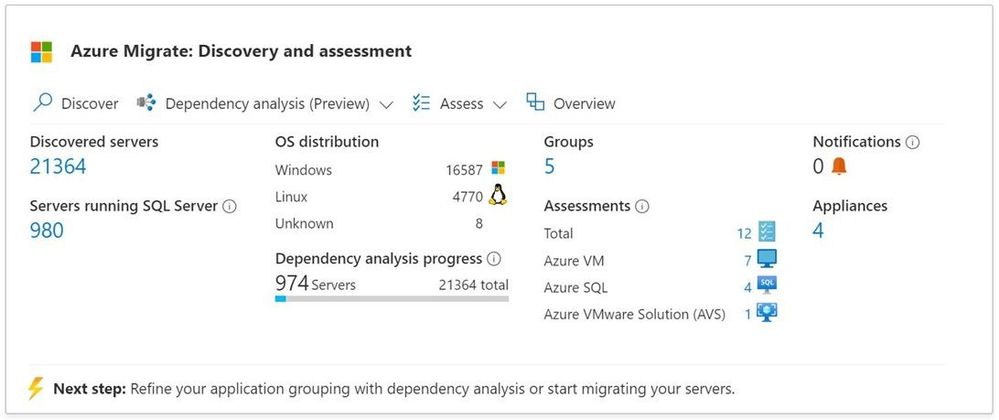

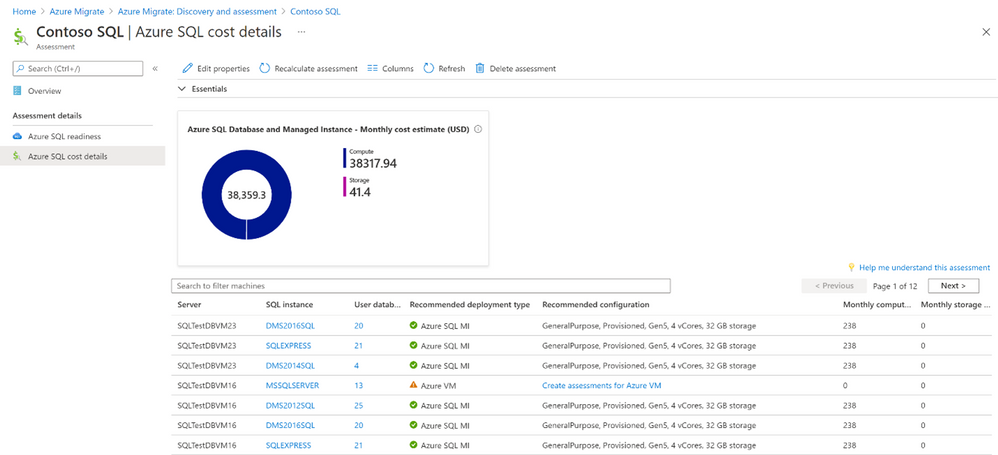

Cloud migration projects can be complex, and it can be hard to know where to start the migration journey. Migration assessments are crucial to successful migrations because they help you build a high-confidence migration plan. Microsoft is committed to supporting your migration needs with discovery, assessment, and migration capabilities for all your key workloads. In this blog post, we will look at how Azure Migrate’s preview of unified, at-scale discovery and assessment for SQL Server instances and databases can help you plan and migrate your data estate to Azure.

With Azure Migrate, you can now create a unified view of your entire datacenter, across Windows, Linux and SQL Server running in your VMware environment. This makes Azure Migrate a one-stop-shop for all your discovery, assessment, and migration needs, across a breadth of infrastructure, application, and database scenarios. It has already helped thousands of customers in their cloud migration journey, and you can use it for free with your Azure subscription. With Azure Migrate’s integrated discovery and assessment capabilities, you can:

- Discover your infrastructure (Windows, Linux, and SQL Server [preview]) at scale.

- Identify installed applications running in your servers.

- Perform agentless dependency mapping to identify dependencies between your servers.

- Create assessments for migration to Azure VM, Azure VMware Solution, and Azure SQL (preview).

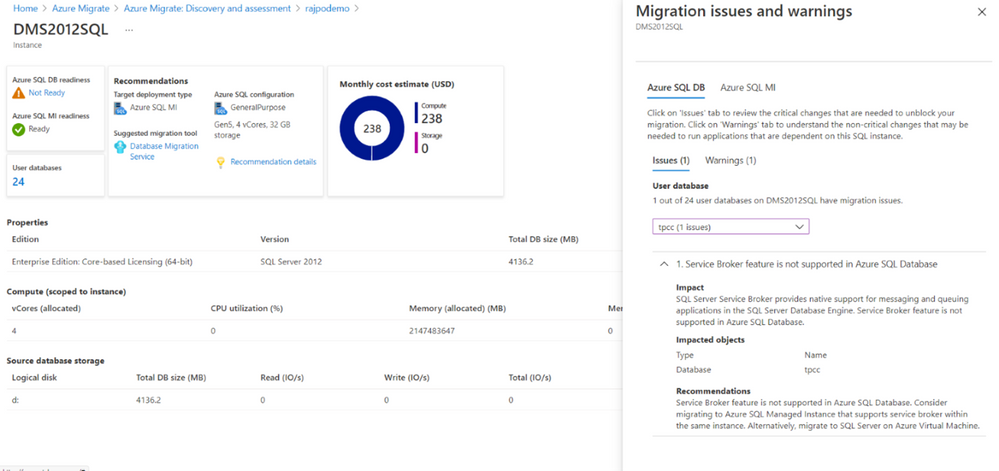

With this preview of unified discovery and assessment of SQL Server, you get a more comprehensive assessment of your on-premises datacenter. It lets you understand the following with respect to your SQL Server instances and databases running in VMware environments:

- Readiness for migrating to Azure SQL.

- Microsoft-recommended deployment type in Azure – Azure SQL Database, Azure SQL Managed Instance, or SQL Server on Azure VMs.

- Migration issues and mitigation steps.

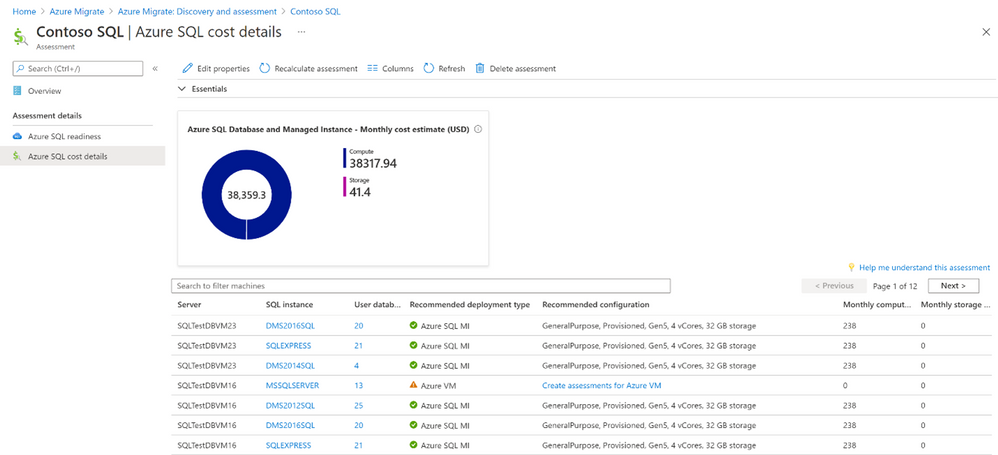

- Right-sized Azure SQL Tier-SKUs that can meet the performance requirements of your databases, and the corresponding cost estimates.

How to get started?

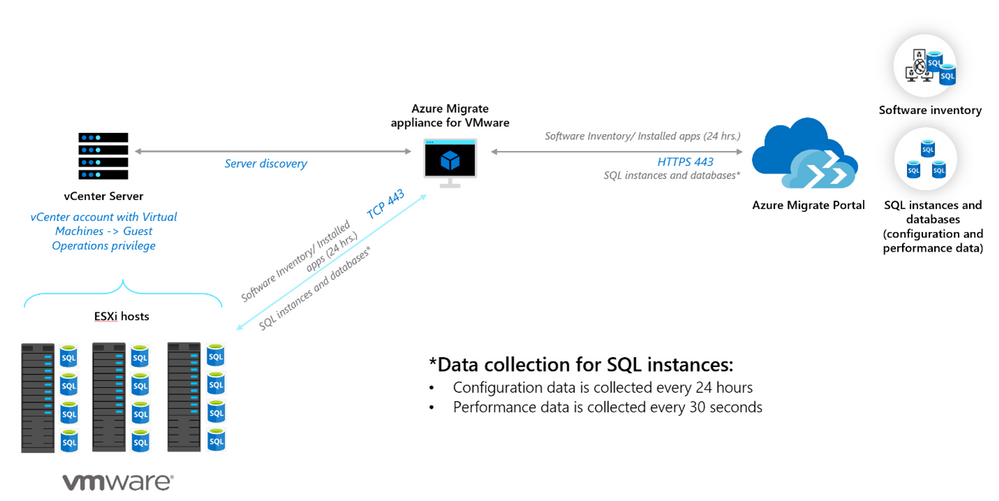

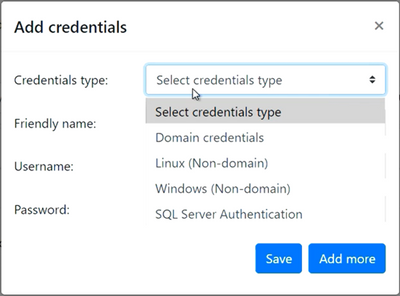

You will first need to set up an Azure Migrate project. Once you have created a project, you will have to deploy and configure the Azure Migrate appliance. This appliance enables you to perform discovery and assessment of your SQL Servers. After the assessment, you can review the reports which include Azure SQL readiness details, Microsoft recommendations, and monthly estimates.

- Create an Azure Migrate project from the Azure Portal, and download the Azure Migrate appliance for VMware. Customers who already have an active Azure Migrate project can upgrade their project to enable this preview.

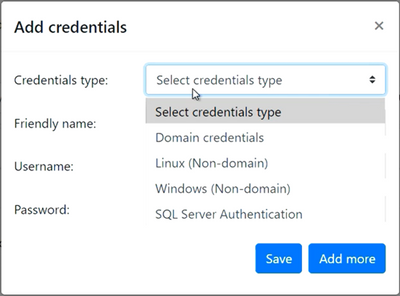

- Deploy a new Azure Migrate appliance for VMware or upgrade your existing VMware appliances to discover on-premises servers, installed applications, SQL Server instances and databases, and application dependencies. To enable you to discover your datacenter easily, the appliance lets you enter multiple credentials – Windows authentication (both Domain and non-Domain) and SQL Server authentication. The Azure Migrate appliance will automatically map each server to the appropriate credential when multiple credentials are specified.

These credentials are encrypted and stored on the deployed appliance locally and are never sent to Microsoft.

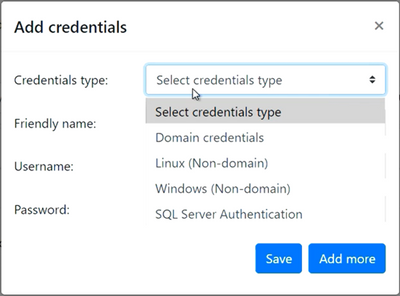

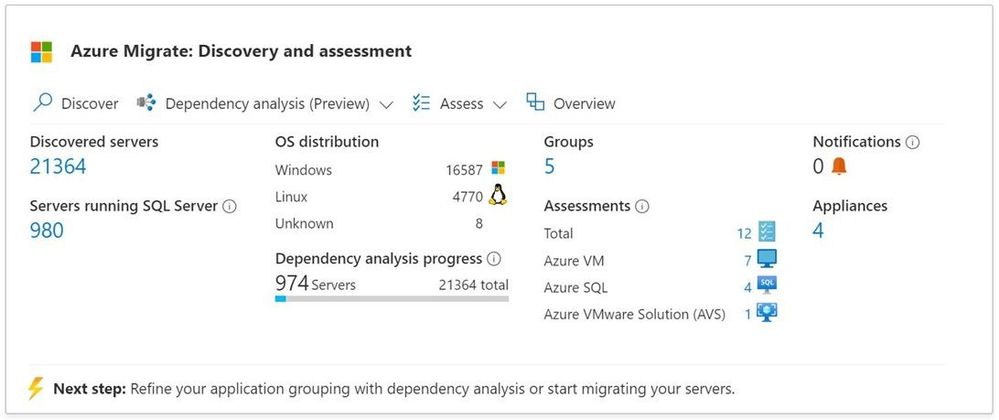

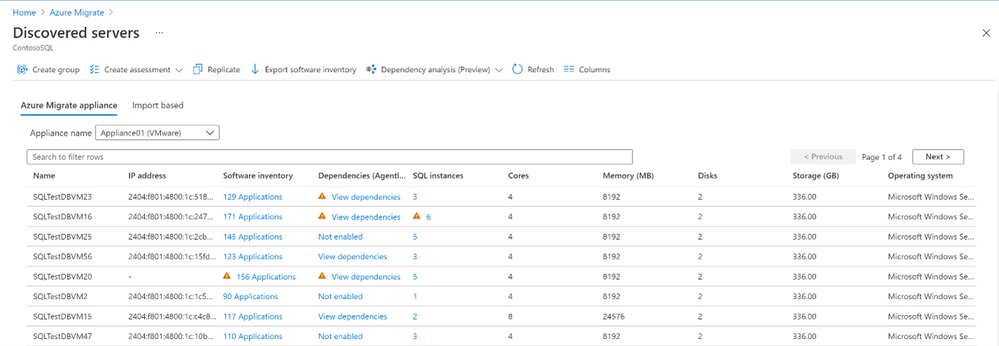

- View the summary of the discovered IT estate from your Azure Migrate project

a. You can view details of the discovered servers such as their configurations, software inventory (installed apps), dependencies, count of SQL instances, etc.

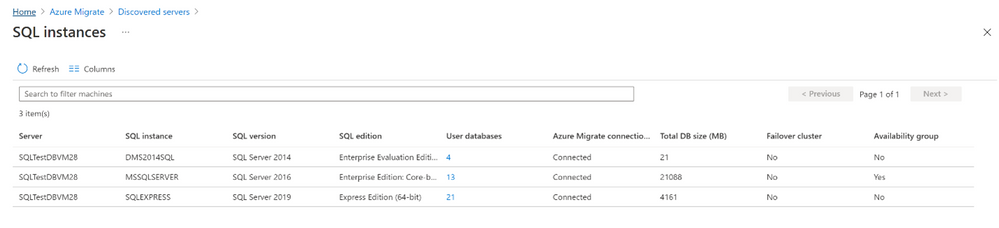

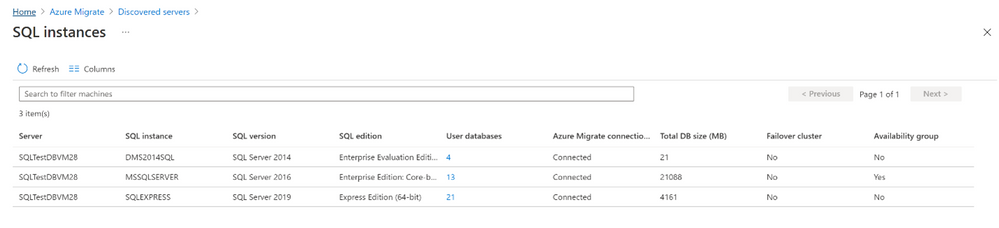

b. You can delve deeper into a server to view the SQL Server instances running on it and their properties such as version, edition, allocated memory, features enabled, etc.

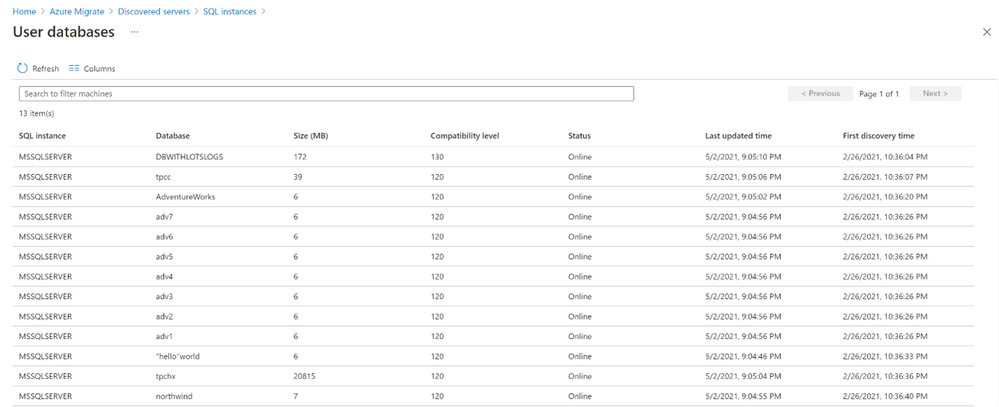

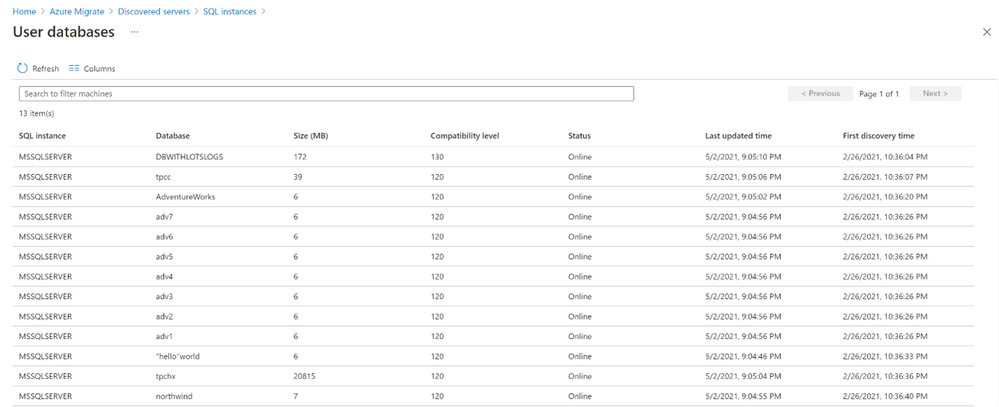

c. You can dive deeper into each instance to view the databases running on it and their properties, such as status and compatibility level.


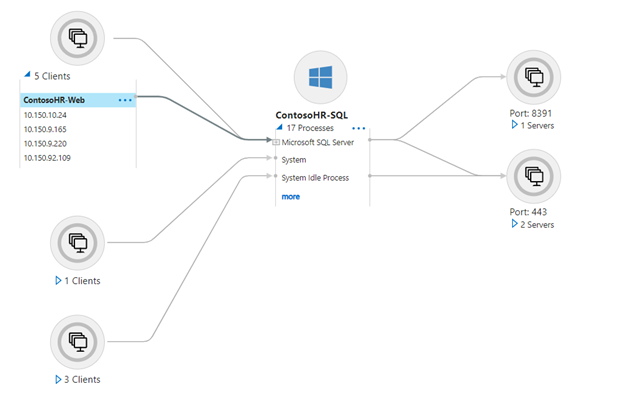

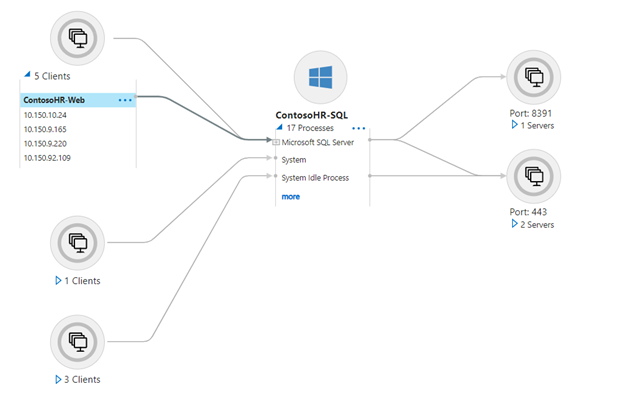

- Use the agentless dependency mapping feature to identify application tiers or interdependent workloads.

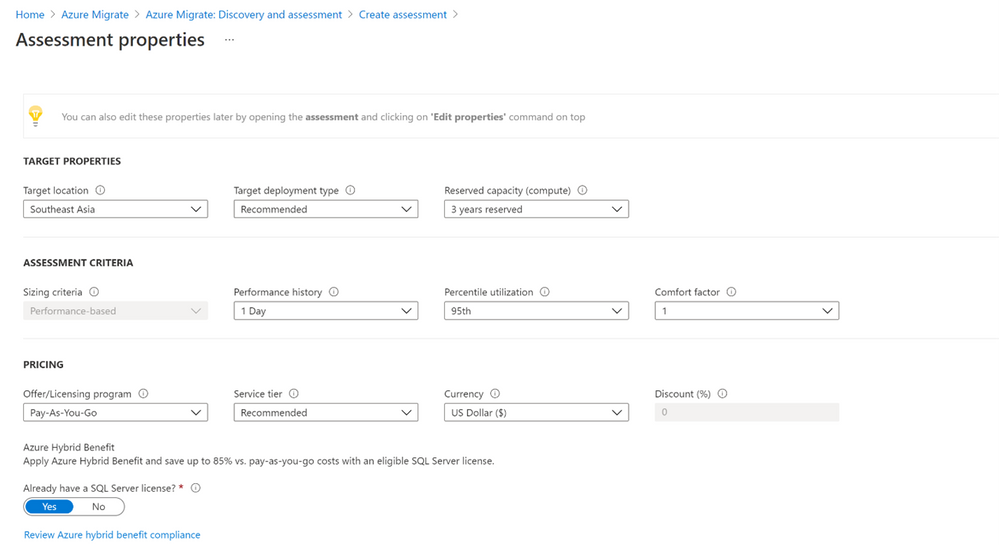

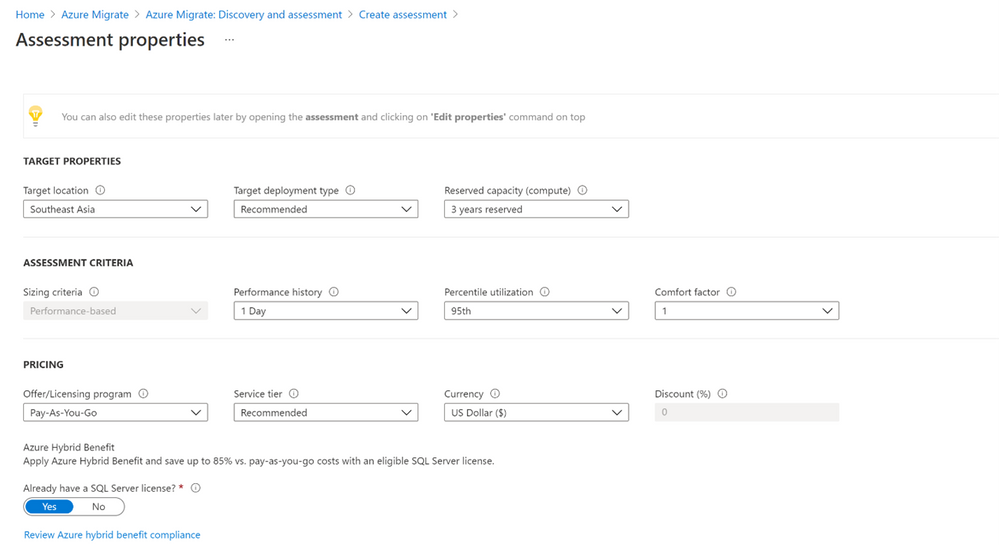

- Create an assessment for Azure SQL by specifying key properties such as the target Azure region, Azure SQL deployment type, reserved capacity, service tier, performance history, etc.

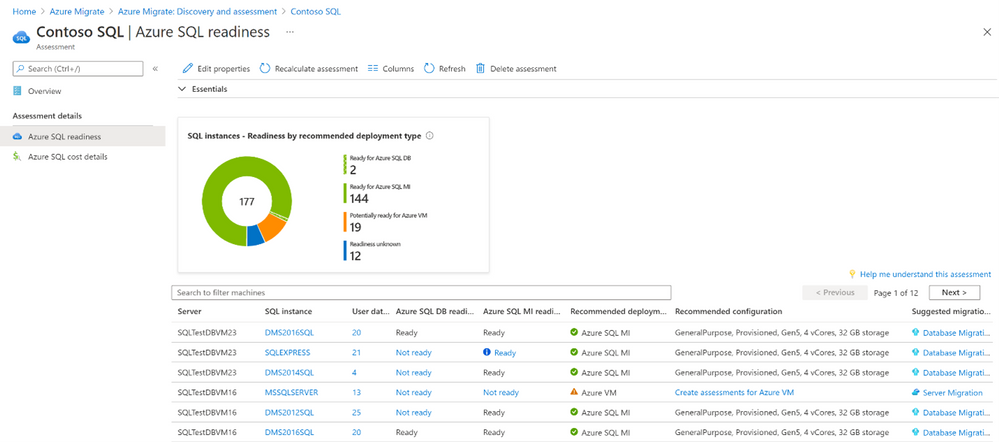

- View the assessment report to identify suitable Azure SQL deployment options, right-sized SKUs that can provide the same or superior performance as your on-premises SQL deployments, and recommended migration tools.

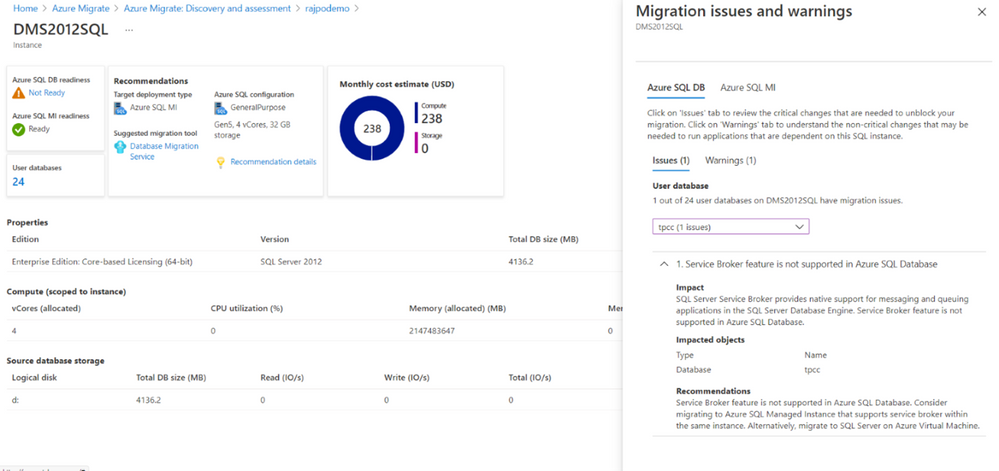

a. You can drill down to a SQL Server instance, and then to its databases, to understand its readiness for Azure SQL and any specific migration issues that prevent migration to a particular Azure SQL deployment option.


b. You can also understand the estimated cost of running your on-premises SQL deployments in Azure SQL.

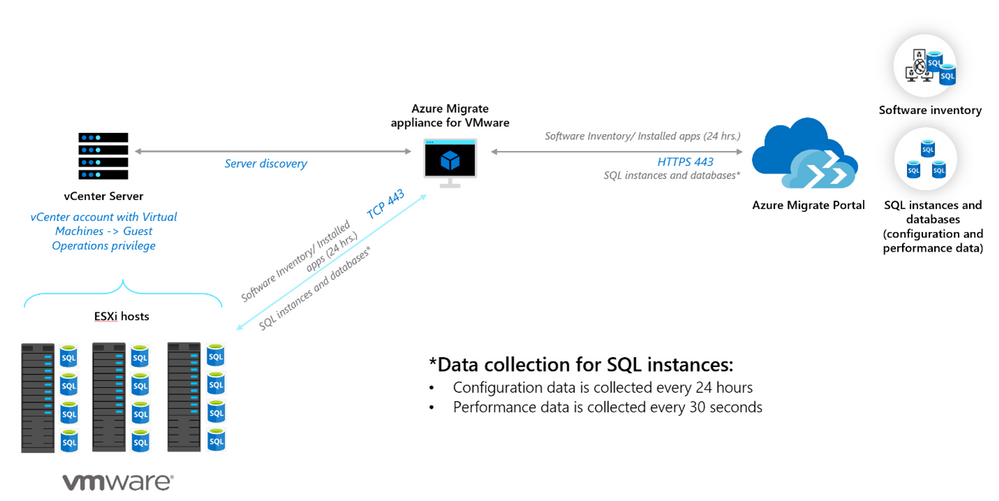

Workflow and architecture

The architecture diagram below outlines the flow of data once the Azure Migrate appliance is deployed and credentials are entered. The appliance:

- Uses the vCenter credentials to connect to the vCenter Server to discover the configuration and performance (resource utilization) data of the Windows and Linux servers it manages.

- Collects software inventory (installed apps) and dependency (netstat) information from servers that were discovered and identifies the SQL Server instances running on them.

- Connects to the discovered SQL Server instances and collects configuration and performance data of the SQL estate. The configuration data is used to identify migration blockers, and the performance data (IOPS, latency, CPU utilization etc.) is used to right-size the SQL instances and databases for migration to Azure SQL.
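To make the right-sizing idea in the last step concrete, here is a minimal sketch of one common approach: take a high percentile of observed CPU utilization, add headroom, and pick the smallest target size that covers it. This is not Azure Migrate's actual algorithm. The function names, the 95th percentile default, the `comfort_factor` default, and the list of available vCore sizes are all assumptions made for illustration.

```python
import math
from typing import Optional, Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list of samples."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def right_size(
    cpu_util_pct: Sequence[float],   # observed CPU utilization samples, 0-100
    on_prem_cores: int,              # cores allocated on-premises
    sku_vcores: Sequence[int],       # hypothetical list of available vCore sizes
    pct: float = 95.0,               # assumed percentile of utilization to size for
    comfort_factor: float = 1.3,     # assumed headroom multiplier
) -> Optional[int]:
    """Return the smallest vCore size that covers the adjusted demand, or None."""
    needed = percentile(cpu_util_pct, pct) / 100 * on_prem_cores * comfort_factor
    for vcores in sorted(sku_vcores):
        if vcores >= needed:
            return vcores
    return None  # no listed size is large enough
```

An instance with 8 cores that peaks at 50% utilization needs roughly 8 × 0.5 × 1.3 = 5.2 cores under these assumptions, so the sketch selects the 8 vCore size from a list of 2, 4, 8, and 16.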

Scale and support

You can discover up to 6000 databases (or 300 instances) with a single appliance, and you can scale discovery further by deploying more appliances. The preview supports discovery of SQL instances and databases running on SQL Server 2008 through SQL Server 2019. All SQL editions (Developer, Enterprise, Express, and Web) are supported. Additionally, as indicated in the diagram, discovery of configuration and performance data is a continuous process; that is, the discovered inventory is refreshed periodically, so you always see up-to-date details of your environment.
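As a quick planning aid, the per-appliance limits quoted above can be turned into a rough appliance count. The sketch assumes that whichever limit is reached first is the binding one and that servers can be split evenly across appliances. Both are simplifying assumptions, and `appliances_needed` is a hypothetical helper, not part of Azure Migrate.

```python
import math

# Per-appliance limits quoted in the text above.
MAX_DATABASES_PER_APPLIANCE = 6000
MAX_INSTANCES_PER_APPLIANCE = 300


def appliances_needed(instances: int, databases: int) -> int:
    """Estimate how many appliances are needed (always at least one)."""
    if instances < 0 or databases < 0:
        raise ValueError("counts must be non-negative")
    by_databases = math.ceil(databases / MAX_DATABASES_PER_APPLIANCE)
    by_instances = math.ceil(instances / MAX_INSTANCES_PER_APPLIANCE)
    return max(1, by_databases, by_instances)
```

For instance, an estate of 301 instances needs two appliances under this estimate even if it holds only a few hundred databases, because the instance limit is reached first.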

Conclusion

Once you have completed your SQL Server assessment and identified the desired targets in Azure, you can use the Server Migration tool in Azure Migrate to migrate to Azure VMs, or Azure Database Migration Service to migrate to Azure SQL Managed Instance or Azure SQL Database.

Migrating to Azure can help you realize substantial cost savings and operational efficiencies. Azure enables you to move efficiently and with high confidence through a mix of services like Azure Migrate, best practice guides, and programs. Once in the cloud, you can scale on-demand to meet your business needs.

Get started today

by Contributed | May 18, 2021 | Dynamics 365, Microsoft 365, Technology


Today, companies operate facilities and warehouses in multiple locations, across regions, and across time zones to meet consumer demand in the best way possible. Warehouses and manufacturing facilities are spread out to match global operations with shipping needs.

Therefore, any supply chain solution that supports warehouse and manufacturing facilities needs to provide a high level of resilience that delivers reliability, availability, and scale.

Dynamics 365 Supply Chain Management meets these needs for your organization with cloud and edge scale units. Supply Chain Management is a tailored offering that helps businesses of all sizes build resilience.

The hybrid topology for Supply Chain Management using scale units became available for public preview in October 2020, and since then we have added numerous incremental enhancements to the platform and to the warehouse and manufacturing execution workloads for scale units. Now the scale unit platform with its first workload is generally available, and you can purchase the scale units add-in for Supply Chain Management environments in the cloud.

Warehouse execution workloads in cloud scale units generally available

The hybrid topology for Supply Chain Management includes the following elements:

- A platform for a distributed hybrid topology, which is composed of a hub environment in the cloud and subordinate cloud scale unit environments that perform dedicated workload execution and increase your supply chain resilience.

- The warehouse execution workload, which is the first distributed supply chain management workload in general availability.

- The Scale Unit Manager portal, which helps businesses to configure their distributed topology using scale units and workloads.

The first release of the warehouse execution workload provides support for inbound operations, outbound operations, and internal warehouse movement. It utilizes the concept of warehouse orders, which have been added to enable the system to transfer warehouse work to scale units. For more information about the workload capabilities now generally available with version 10.0.17 of Supply Chain Management, see Warehouse management workloads for cloud and edge scale units.

Hybrid topology enhancements available for preview

In addition to the features for scale units, there are several preview features for building hybrid topologies.

Warehouse execution workload capabilities

Version 10.0.19 of Supply Chain Management is now in early access. It provides enhancements for warehouse workloads that have been requested by our customers.

In version 10.0.19, warehouse workload capabilities reach into the shipping processes and can now confirm shipments, send advance shipping notices (ASNs), and generate bills of lading on the scale unit.

One of the top demands of our customers is the ability to create provisional packing slips on the scale units, which would provide full end-to-end resiliency for outbound warehouse processes. For most of the standard operations, this will allow you to ship right from the scale unit, even if there is no active connection to your cloud hub. This is great if you run an edge scale unit at a remote facility where the external network is not always available.

In future releases, we want to extend the process responsibility of the warehouse execution workload even more and include parts of the planning phase for outbound processes like shipment creation and ship consolidation.

Manufacturing execution workload

The manufacturing workload continues in preview. In version 10.0.19, now in early access, we are introducing iterative enhancements for our customers validating the capabilities in preview.

In version 10.0.19, the manufacturing workload gains access to inventory information, which means that inventory relevant to the facility workloads is now available on the scale unit. This adds support for putaway work following manufacturing work and lets workers report as finished on the scale unit.

Future iterative enhancements will move raw material picking to the scale unit and extend the use of scale units to support more types of manufacturing processes, such as when production orders are not converted into jobs.

Edge scale units

With the release of version 10.0.19 for early access, we have opened the ability to set up edge scale units on local business data (LBD) deployments. You can now set up LBD deployments on your local hardware appliance and configure the environment as a scale unit on the edge, connected to your cloud hub.

We have released a new version of the infrastructure scripts that are used in LBD deployments and support the specific requirements for edge scale units.

During the preview phase, you will use the deployment tools provided on GitHub to experience edge scale units in a deployment of one-box development environments.

Next steps

To learn more about the distributed hybrid topology for Supply Chain Management and how you can increase the resilience of your supply chain management implementation, check out the documentation.

The post Enable business resilience with cloud and edge scale units in Dynamics 365 Supply Chain Management appeared first on Microsoft Dynamics 365 Blog.

Brought to you by Dr. Ware, Microsoft Office 365 Silver Partner, Charleston SC.

by Contributed | May 18, 2021 | Technology

This article is contributed. See the original author and article here.

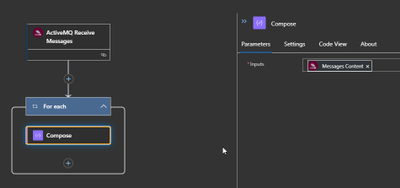

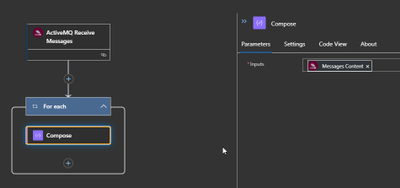

In this article I will show how to build an ActiveMQ trigger using the service provider capability in the new logic app. The project was inspired by Praveen's article Azure Logic Apps Running Anywhere: Built-in connector extensibility – Microsoft Tech Community.

This project is only a POC and it is not fully tested.

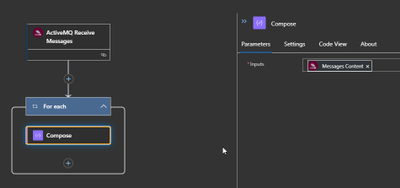

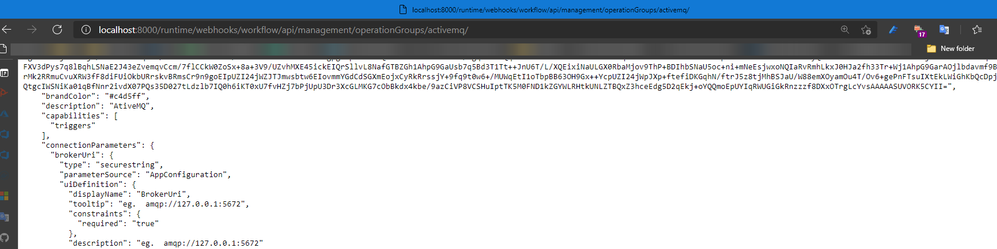

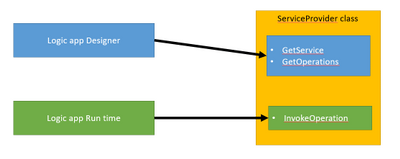

The ServiceProvider serves two consumers: the logic app designer and the logic app runtime.

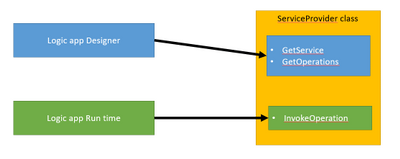

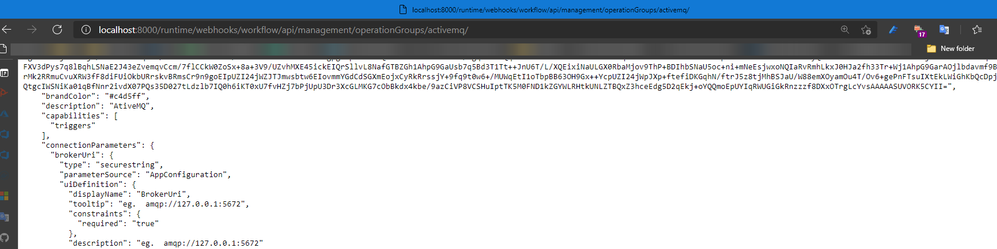

The designer can be VS Code or the portal. It requests the skeleton (Swagger) of the trigger by calling a REST API hosted on the function app runtime, as shown below.

The runtime reads the logic app JSON definition and executes the invoke operation.

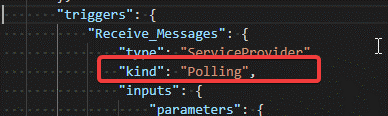

The developed trigger is based on a polling mechanism, so the logic app runtime calls InvokeOperation at the time interval configured in the trigger request.

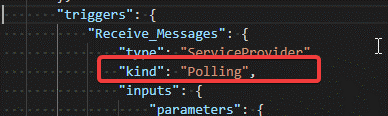

"triggers": {

"Receive_Messages": {

"type": "ServiceProvider",

"kind": "Polling",

"inputs": {

"parameters": {

"queue": "TransactionQueue",

"MaximumNo": 44

},

"serviceProviderConfiguration": {

"connectionName": "activemq",

"operationId": "ActiveMQ : ReceiveMessages",

"serviceProviderId": "/serviceProviders/activemq"

}

},

"recurrence": {

"frequency": "Second",

"interval": 15

}

}

},
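To make the recurrence settings concrete, the following Python sketch reads a trigger definition like the one above and works out how often the runtime would poll. It is only an illustration: the helper name `polling_interval_seconds` and the unit lookup table are assumptions made for this example and are not part of the Logic Apps runtime or its API.

```python
import json

# Trigger definition copied from the workflow JSON above.
TRIGGER_JSON = """
{
  "triggers": {
    "Receive_Messages": {
      "type": "ServiceProvider",
      "kind": "Polling",
      "inputs": {
        "parameters": {"queue": "TransactionQueue", "MaximumNo": 44},
        "serviceProviderConfiguration": {
          "connectionName": "activemq",
          "operationId": "ActiveMQ : ReceiveMessages",
          "serviceProviderId": "/serviceProviders/activemq"
        }
      },
      "recurrence": {"frequency": "Second", "interval": 15}
    }
  }
}
"""

# Hypothetical lookup table, used by this sketch only.
SECONDS_PER_UNIT = {"Second": 1, "Minute": 60, "Hour": 3600, "Day": 86400}


def polling_interval_seconds(trigger: dict) -> int:
    """Return the polling period in seconds for a polling trigger."""
    if trigger.get("kind") != "Polling":
        raise ValueError("not a polling trigger")
    recurrence = trigger["recurrence"]
    return SECONDS_PER_UNIT[recurrence["frequency"]] * recurrence["interval"]


trigger = json.loads(TRIGGER_JSON)["triggers"]["Receive_Messages"]
print(polling_interval_seconds(trigger))  # prints 15
```

With the definition shown, the invoke operation would be called roughly every 15 seconds, and each call may receive up to 44 messages.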

To mark the trigger as a polling trigger, first set the Recurrence setting to Basic so that the designer knows it is a polling trigger:

    Recurrence = new RecurrenceSetting
    {
        Type = RecurrenceType.Basic,
    },

The designer then adds the keyword kind = Polling to the trigger definition.

If the kind keyword is not added, then add it manually.

InvokeOperation operation steps

The operation does the following:

- Reads the connection properties as well as the trigger request properties.

- If there are no messages in the queue, returns System.Net.HttpStatusCode.Accepted, which the logic app runtime engine interprets as a skipped trigger.

- Otherwise, returns the received messages with System.Net.HttpStatusCode.OK.

    public Task<ServiceOperationResponse> InvokeOperation(string operationId, InsensitiveDictionary<JToken> connectionParameters,
        ServiceOperationRequest serviceOperationRequest)
    {
        //System.IO.File.AppendAllText("c:templalogdll2.txt", $"rn({DateTime.Now}) start InvokeOperation ");
        string Error = "";
        try
        {
            ServiceOpertionsProviderValidation.OperationId(operationId);
            // Read the connection properties and the trigger request properties.
            triggerPramsDto _triggerPramsDto = new triggerPramsDto(connectionParameters, serviceOperationRequest);
            var connectionFactory = new NmsConnectionFactory(_triggerPramsDto.UserName, _triggerPramsDto.Password, _triggerPramsDto.BrokerUri);
            using (var connection = connectionFactory.CreateConnection())
            {
                connection.ClientId = _triggerPramsDto.ClientId;
                using (var session = connection.CreateSession(AcknowledgementMode.Transactional))
                {
                    using (var queue = session.GetQueue(_triggerPramsDto.QueueName))
                    {
                        using (var consumer = session.CreateConsumer(queue))
                        {
                            connection.Start();
                            List<JObject> receiveMessages = new List<JObject>();
                            // Receive at most MaximumNo messages per poll.
                            for (int i = 0; i < _triggerPramsDto.MaximumNo; i++)
                            {
                                // Wait up to one second for the next message.
                                var message = consumer.Receive(new TimeSpan(0,0,0,1)) as ITextMessage;
                                //System.IO.File.AppendAllText("c:templalogdll2.txt", $"rn({DateTime.Now}) message != null {(message != null).ToString()} ");
                                if (message != null)
                                {
                                    receiveMessages.Add(new JObject
                                    {
                                        { "contentData", message.Text },
                                        { "Properties",new JObject{ { "NMSMessageId", message.NMSMessageId } } },
                                    });
                                }
                                else
                                {
                                    // Exit the loop when there are no more messages.
                                    break;
                                }
                            }
                            session.Commit();
                            session.Close();
                            connection.Close();
                            if (receiveMessages.Count == 0)
                            {
                                // No messages: Accepted tells the runtime to skip this trigger run.
                                //System.IO.File.AppendAllText("c:templalogdll2.txt", $"rn({DateTime.Now}) Skip { JObject.FromObject(new { message = "No messages"} ) }");
                                return Task.FromResult((ServiceOperationResponse)new ActiveMQTriggerResponse(JObject.FromObject(new { message = "No messages" }), System.Net.HttpStatusCode.Accepted));
                            }
                            else
                            {
                                //System.IO.File.AppendAllText("c:templalogdll2.txt", $"rn({DateTime.Now}) Ok {JArray.FromObject(receiveMessages)}");
                                return Task.FromResult((ServiceOperationResponse)new ActiveMQTriggerResponse(JArray.FromObject(receiveMessages), System.Net.HttpStatusCode.OK));
                            }
                        }
                    }
                }
            }
        }
        catch (Exception e)
        {
            Error = e.Message;
            //System.IO.File.AppendAllText("c:templalogdll2.txt", $"rn({DateTime.Now}) error {e.Message}");
        }
        // Reached only when an exception was caught above.
        return Task.FromResult((ServiceOperationResponse)new ActiveMQTriggerResponse(JObject.FromObject(new { message = Error }), System.Net.HttpStatusCode.InternalServerError));
    }
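The control flow of the operation can be easier to follow when it is separated from the messaging library. The Python sketch below models only the polling decision: receive up to a maximum number of messages, stop at the first empty receive, and map the outcome to a status code. `FakeConsumer` and `poll` are hypothetical names invented for this illustration; they do not exist in Apache NMS or in the Logic Apps SDK, and the sketch leaves out sessions, transactions, and error handling.

```python
from collections import deque
from typing import Deque, List, Optional, Tuple

HTTP_OK = 200
HTTP_ACCEPTED = 202  # read by the runtime as "trigger skipped", per the text above


class FakeConsumer:
    """In-memory stand-in for a queue consumer (hypothetical, sketch only)."""

    def __init__(self, messages: List[str]) -> None:
        self._queue: Deque[str] = deque(messages)

    def receive(self) -> Optional[str]:
        # Return the next message, or None when the queue is empty.
        return self._queue.popleft() if self._queue else None


def poll(consumer: FakeConsumer, maximum_no: int) -> Tuple[int, object]:
    """Receive up to maximum_no messages and map the result to a status code."""
    received = []
    for _ in range(maximum_no):
        text = consumer.receive()
        if text is None:
            break  # queue drained, stop early
        received.append({"contentData": text})
    if not received:
        return HTTP_ACCEPTED, {"message": "No messages"}
    return HTTP_OK, received


print(poll(FakeConsumer(["a", "b", "c"]), 2))
print(poll(FakeConsumer([]), 2))
```

The first call returns status 200 with two messages and leaves the third in the queue for the next poll. The second call returns status 202, which corresponds to the skipped-trigger case.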

Development environment

To let the designer recognize the new service provider, the information for the DLL should be added to the extensions.json file, which can be found at the following path:

C:\Users\...\.azure-functions-core-tools\Functions\ExtensionBundles\Microsoft.Azure.Functions.ExtensionBundle.Workflows\1.1.7\bin\extensions.json

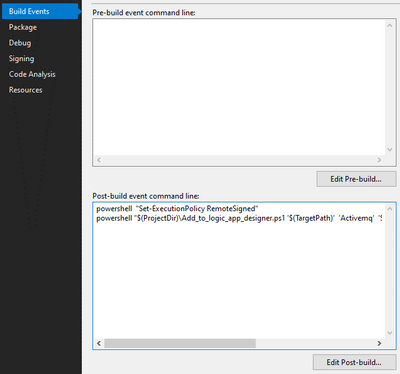

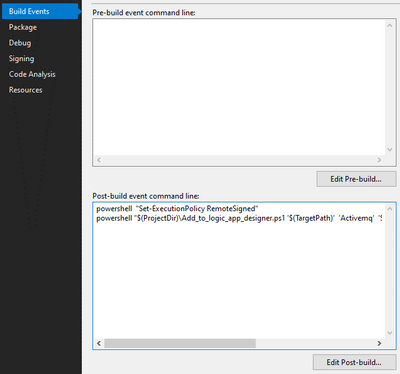

We also need to copy the DLL and its dependencies to the bin folder next to the extensions.json file. This is done by a PowerShell script that runs after the build.

The PowerShell script can be found in the C# project folder.

After building the ServiceProvider project, you can switch to VS Code with the logic app designer installed. More information can be found at Azure Logic Apps (Preview) – Visual Studio Marketplace.

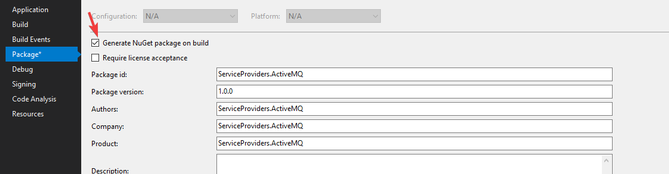

To add the service provider package to the logic app

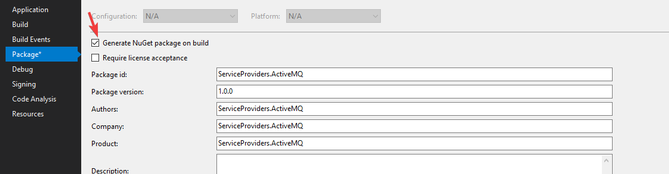

First, convert the logic app project to a NuGet-based project as described in Create Logic Apps Preview workflows in Visual Studio Code – Azure Logic Apps | Microsoft Docs.

Then, to get the nupkg file, you can enable the checkbox below.

Then, to add the package, you can run the PowerShell file Common/tools/add-extension.ps1:

1- run Import-Module "C:\path to the file\add-extension.ps1"

2- add-extension Path "ActiveMQ"

You may run into difficulties with the NuGet package cache, so keep in mind that you may need to manually delete the package file from the cache.

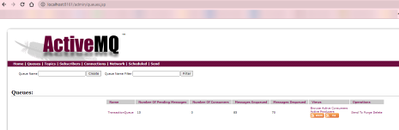

Set up the ActiveMQ server

I used the docker image rmohr/activemq (docker.com)

Then create messages using the management page http://localhost:8161/

The source code is available on GitHub

https://github.com/Azure/logicapps-connector-extensions

by Contributed | May 18, 2021 | Technology

This article is contributed. See the original author and article here.

Serverless Synapse SQL pools enable you to read Parquet/CSV files or Cosmos DB collections and return their content as a set of rows. In some scenarios, you would need to ensure that a reader cannot access some rows in the underlying data source. This way, you are limiting the result set that will be returned to the users based on some security rules. In this scenario, called Row-level security, you would like to return a subset of data depending on the reader’s identity or role.

Row-level security is supported in dedicated SQL pools, but it is not supported in serverless pools (you can propose this feature on the Azure feedback site). In some cases, you can implement your own custom row-level security rules using standard T-SQL code.

In this post you will see how to implement RLS by specifying the security rules in the WHERE clause or by using an inline table-valued function (iTVF).

Scenario

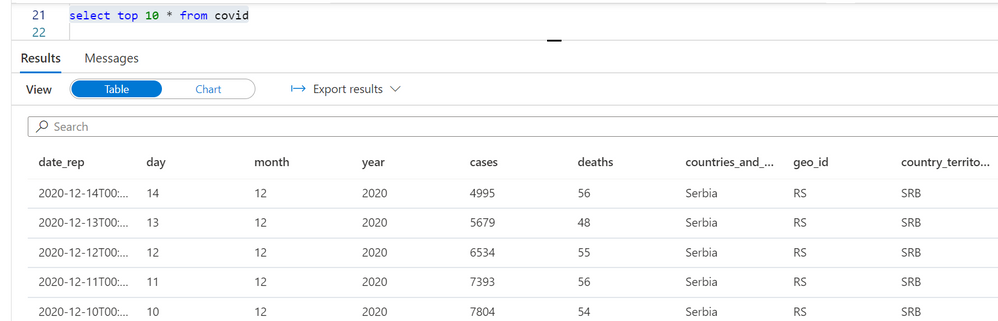

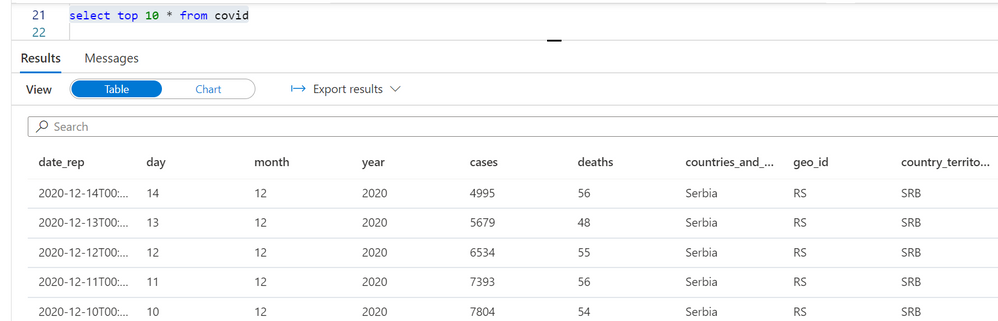

Let’s imagine that we have a view or external table created on top of the COVID data set placed in the Azure Data Lake storage:

    create or alter view dbo.covid as
    select *
    from openrowset(
            bulk 'https://pandemicdatalake.blob.core.windows.net/public/curated/covid-19/ecdc_cases/latest/ecdc_cases.parquet',
            format = 'parquet') as rows

The readers will get all COVID cases that are written in the Parquet file. Let’s imagine that the requirement is to restrict the user access and allow them to see just a subset of data based on the following rules:

- Azure AD user jovan@adventureworks.com can see only the COVID cases reported in Serbia.

- The users in ‘AfricaAnalyst’ role can see only the COVID cases reported in Africa.

We can represent these security rules using the following predicate (this is T-SQL pseudo-syntax):

    ( USER_NAME = 'jovan@adventureworks.com' AND geo_id = 'RS' )
    OR
    ( USER IS IN ROLE 'AfricaAnalyst' AND continent_exp = 'Africa' )

You can use system functions like SUSER_SNAME() or IS_ROLEMEMBER() to identify the caller and check whether a given row should be returned to the current user. We just need to express this condition in T-SQL and add it as a filtering condition in the view.
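Before writing the T-SQL, it can help to see the rule as a plain boolean function of the caller and the row. The Python sketch below is only a model of the pseudo-predicate and does not run against Synapse. The `Caller` class and the `can_see` function are hypothetical names introduced for this illustration: `Caller.name` plays the part of SUSER_SNAME() and `Caller.roles` plays the part of the IS_ROLEMEMBER() check.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class Caller:
    """Hypothetical stand-in for the current login and its database roles."""
    name: str
    roles: Set[str] = field(default_factory=set)


def can_see(caller: Caller, geo_id: str, continent_exp: str) -> bool:
    """Mirror of the pseudo-predicate: a row is visible if either rule matches."""
    serbia_rule = caller.name == "jovan@adventureworks.com" and geo_id == "RS"
    africa_rule = "AfricaAnalyst" in caller.roles and continent_exp == "Africa"
    return serbia_rule or africa_rule


jovan = Caller("jovan@adventureworks.com")
analyst = Caller("petar@contoso.com", {"AfricaAnalyst"})

print(can_see(jovan, "RS", "Europe"))    # True: the Serbia rule matches
print(can_see(jovan, "KE", "Africa"))    # False: no role, wrong country
print(can_see(analyst, "KE", "Africa"))  # True: the role rule matches
```

A caller who matches neither rule sees no rows at all, which is the behavior you generally want from a default-deny security predicate.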

In this post you will see two methods for filtering the rows based on security rules:

- Filtering rows directly in the WHERE condition.

- Applying security predicates coded in an iTVF.

As a prerequisite, you should set up a database role that has limited access to the underlying data set.

    CREATE ROLE AfricaAnalyst;
    CREATE USER [jovan@contoso.com] FROM EXTERNAL PROVIDER;
    CREATE USER [petar@contoso.com] FROM EXTERNAL PROVIDER;
    CREATE USER [nikola@contoso.com] FROM EXTERNAL PROVIDER;
    ALTER ROLE AfricaAnalyst ADD MEMBER [jovan@contoso.com];
    ALTER ROLE AfricaAnalyst ADD MEMBER [petar@contoso.com];
    ALTER ROLE AfricaAnalyst ADD MEMBER [nikola@contoso.com];

In this code we have added a role and added three users to this role.

Filtering rows based on security rule

The easiest way to implement row-level security is to directly embed security predicates in the WHERE condition of the view:

    create or alter view dbo.covid as
    select *
    from openrowset(
            bulk 'https://pandemicdatalake.blob.core.windows.net/public/curated/covid-19/ecdc_cases/latest/ecdc_cases.parquet',
            format = 'parquet') as rows
    WHERE
        ( SUSER_SNAME() = 'jovan@adventureworks.com' AND geo_id = 'RS')
        OR
        ( IS_ROLEMEMBER('AfricaAnalyst', SUSER_SNAME()) = 1 AND continent_exp = 'Africa')

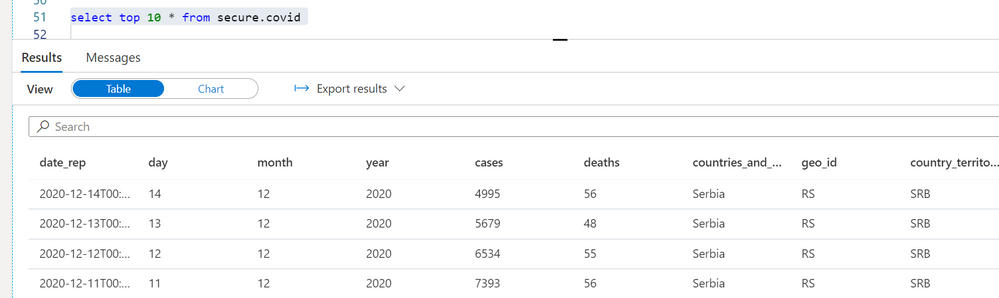

The Azure AD principal jovan@adventureworks.com will see only the COVID cases reported in Serbia:

Azure AD principals that belong to the ‘AfricaAnalyst’ role will see the cases reported in Africa when running the same query:

Without any change in the query, the readers will be able to access only the rows that they are allowed to see based on their identity.

Operationalizing row-level-security with iTVF

In some cases, it would be hard to maintain security rules if you put them directly in the view definition. The security rules might change, and you don’t want to track all possible views to update them. The WHERE condition in the view may contain other predicates, and some bug in AND/OR logic might change or disable the security rules.

A better idea is to put the security predicates in a separate function and apply the function to the views that should return limited data.

We can create a separate schema called secure, and put the security rules in an inline table-valued function (iTVF):

    create schema secure
    go
    create or alter function secure.geo_predicate(@geo_id varchar(20),
                                                  @continent_exp varchar(20))
    returns table
    return (
        select condition = 1
        WHERE
            (SUSER_SNAME() = 'jovanpop@adventureworks.com' AND @geo_id = 'RS')
            OR
            (IS_ROLEMEMBER('AfricaAnalyst', SUSER_SNAME()) = 1 AND @continent_exp = 'Africa')
    )

This predicate is very similar to the predicates used in native row-level security in the T-SQL language. It evaluates a security rule based on the geo_id and continent_exp values, which are provided as the function parameters, and internally uses the SUSER_SNAME() and IS_ROLEMEMBER() functions to identify the caller. If the current user can see a row with the provided values, the function will return the value 1. Otherwise, it will not return any value.

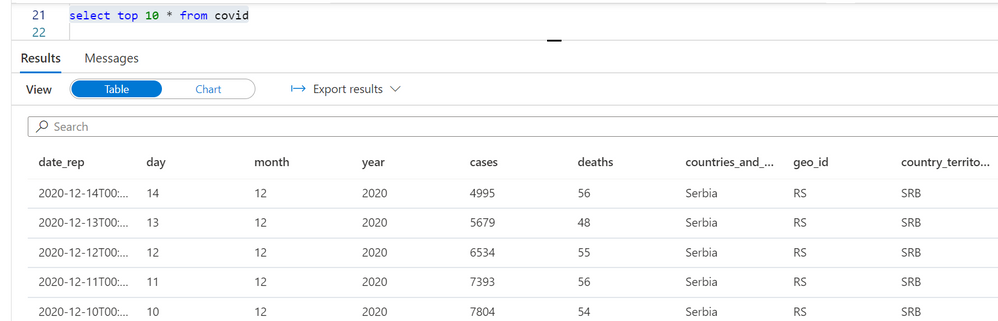

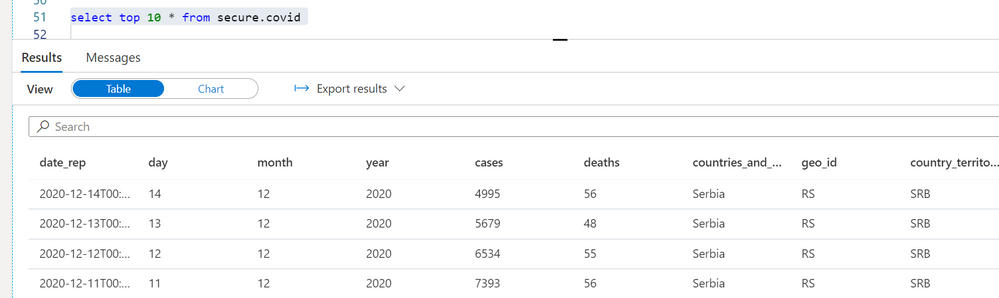

Instead of modifying every view by adding new conditions in the WHERE clause, we can create secure wrapper views where we apply this predicate. In the following example, I’m creating a view secure.covid where I apply the predicate and pass the columns that the predicate uses to evaluate whether a row should be returned:

    create or alter view secure.covid
    as
    select *
    from dbo.covid
        cross apply secure.geo_predicate(geo_id, continent_exp)
    go

Note that in this case the view dbo.covid will not have the WHERE clause that contains the security rule. Security rules filter rows by applying the secure.geo_predicate() iTVF on the original view. For every row in the dbo.covid view, the query provides the values of the geo_id and continent_exp columns to the iTVF predicate. The predicate removes the row from the output if these values do not satisfy the criterion.
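The filtering effect of CROSS APPLY comes from the fact that a row is emitted once for every row the applied function returns, so a function that returns nothing removes the row. The Python sketch below models that behavior with plain lists. The names `cross_apply` and `geo_predicate_for` are hypothetical helpers written for this illustration, and the three sample rows are made-up stand-ins for the contents of dbo.covid.

```python
from typing import Callable, Dict, Iterable, Iterator, List

Row = Dict[str, str]


def cross_apply(rows: Iterable[Row], predicate: Callable[[Row], List[int]]) -> Iterator[Row]:
    """Emit each row once per value the predicate returns; no values drops the row."""
    for row in rows:
        for _ in predicate(row):
            yield row


def geo_predicate_for(user: str, roles: set) -> Callable[[Row], List[int]]:
    """Build a predicate for one caller; returns [1] when the row is allowed, else []."""
    def predicate(row: Row) -> List[int]:
        allowed = (
            (user == "jovanpop@adventureworks.com" and row["geo_id"] == "RS")
            or ("AfricaAnalyst" in roles and row["continent_exp"] == "Africa")
        )
        return [1] if allowed else []
    return predicate


# Illustrative sample rows, not real data.
covid = [
    {"geo_id": "RS", "continent_exp": "Europe"},
    {"geo_id": "KE", "continent_exp": "Africa"},
    {"geo_id": "FR", "continent_exp": "Europe"},
]

predicate = geo_predicate_for("jovanpop@adventureworks.com", set())
visible = list(cross_apply(covid, predicate))
print(visible)  # prints [{'geo_id': 'RS', 'continent_exp': 'Europe'}]
```

Because the predicate returns at most one value per input row, the wrapper never duplicates rows; it only keeps or drops them.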

A user jovanpop@adventureworks.com will see the filtered results based on his context:

Placing the security rules in separate iTVFs and creating secure wrapper views will make your code more maintainable.

Conclusion

Although native row-level security is not available in serverless SQL pool, you can implement similar security rules using standard T-SQL functionality.

You just need to ensure that a reader cannot bypass the security rules.

- You need to ensure that the readers cannot directly query the files or collections in the underlying data source using the OPENROWSET function or the views/external tables. Make sure that you restrict access to the OPENROWSET/credentials and DENY SELECT on the base views and external tables that read original un-filtered data.

- You need to ensure that users cannot directly access the underlying data source and bypass serverless SQL pool. You would need to make sure that underlying storage is protected from random access using private endpoints.

- Make sure that the readers cannot use some other tool, such as another Synapse workspace, Apache Spark, or another application or service, to directly access the data that you secured on your workspace.

This method uses core T-SQL functionality and will not introduce additional overhead compared to native row-level security. If this workaround doesn’t work in your scenario, you can propose this feature on the Azure feedback site.
