by Contributed | May 21, 2021 | Technology

This article is contributed. See the original author and article here.

SharePoint Framework Special Interest Group (SIG) bi-weekly community call recording from May 20th is now available from the Microsoft 365 Community YouTube channel at http://aka.ms/m365pnp-videos. You can use SharePoint Framework for building solutions for Microsoft Teams and for SharePoint Online.

Call summary:

Preview the new Microsoft 365 Extensibility look book gallery co-developed by Microsoft Teams and SharePoint engineering. Register now for the May/June Sharing-is-caring trainings. View the Microsoft Build sessions guide (Modern Work Digital Brochure): keynotes, breakouts, on-demand sessions, roundtables, and 1:1 consultations. PnPjs Client-Side Libraries v2.5.0 GA and CLI for Microsoft 365 v3.10.0 preview were released this month. The latest updates on the SPFx roadmap (v1.13.0 Preview in summer) and on Viva Connections extensibility were also shared.

Four new or updated PnP SPFx web part samples were delivered in the last 2 weeks. Great work!

Latest project updates include: (Bold indicates update from previous report 2 weeks ago)

| PnP Project | Current version | Release/Status |
|---|---|---|
| SharePoint Framework (SPFx) | v1.12.1 | v1.13.0 Preview in summer |
| PnPjs Client-Side Libraries | v2.5.0 | v3.0.0 developments underway |
| CLI for Microsoft 365 | v3.9.0 | v3.10.0 preview released |
| Reusable SPFx React Controls | v2.7.0, v3.1.0 | v2.7.0 (SPFx v1.11), v3.1.0 (SPFx v1.12.1) |
| Reusable SPFx React Property Controls | v2.6.0, v3.1.0 | v2.6.0 (SPFx v1.11), v3.1.0 (SPFx v1.12.1) |
| PnP SPFx Generator | v1.16.0 | Angular 11 support |
| PnP Modern Search | v3.19 and v4.1.0 | April and March 20th |

The host of this call is Patrick Rodgers (Microsoft) @mediocrebowler. Q&A takes place in chat throughout the call.

Thanks, everybody, for being part of the Community and helping make things happen. You are absolutely awesome!

Actions:

- Register for Sharing is Caring Events:

  - First Time Contributor Session – May 24th (EMEA, APAC & US friendly times available)

  - Community Docs Session – May

  - PnP – SPFx Developer Workstation Setup – June

  - PnP SPFx Samples – Solving SPFx version differences using Node Version Manager – May 20th

  - AMA (Ask Me Anything) – Microsoft Graph & MGT – June 8th

  - AMA (Ask Me Anything) – Microsoft Teams Dev – June

  - First Time Presenter – May 25th

  - More than Code with VSCode – May 27th

  - Maturity Model Practitioners – May 18th

  - PnP Office Hours – 1:1 session – Register

- Download the recurrent invite for this call – https://aka.ms/spdev-spfx-call

Demos:

Using Microsoft Graph Toolkit to easily access files in Sites and in OneDrive – a.k.a. OneDrive finder – find and explore OneDrives, folders, and files using Microsoft Graph Toolkit (MGT). Graph queries retrieve the hostname, the Sites on that hostname, the OneDrive item-id, and the Sites root item-id. Two beta MGT controls, mgt-file-list and mgt-file, power the search, and results can be refined by file extension, items per page, and more. The display style supports light and dark mode. The demo also shares recommendations on managing the file list cache and permissions.
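The Graph queries mentioned above can be sketched as plain URL builders. This is a minimal sketch, not the demo's code, assuming the Microsoft Graph v1.0 endpoint; the helper names and the example hostname are hypothetical. `/sites/root` returns the root site (its `siteCollection.hostname` carries the tenant hostname), `/sites/{hostname}:/{path}` addresses a site by server-relative path, and the `drive/root` endpoints return a root folder item whose `id` is the item-id.

```python
from urllib.parse import quote

GRAPH_BASE = "https://graph.microsoft.com/v1.0"


def root_site_url() -> str:
    # The response's siteCollection.hostname holds the tenant hostname.
    return f"{GRAPH_BASE}/sites/root"


def site_by_path_url(hostname: str, server_relative_path: str) -> str:
    # Address a site by hostname plus server-relative path, e.g. "sites/hr".
    path = quote(server_relative_path.strip("/"))
    return f"{GRAPH_BASE}/sites/{hostname}:/{path}"


def my_onedrive_root_url() -> str:
    # The "id" of the returned driveItem is the OneDrive root item-id.
    return f"{GRAPH_BASE}/me/drive/root"


def site_drive_root_url(site_id: str) -> str:
    # The "id" of the returned driveItem is the site's default library root item-id.
    return f"{GRAPH_BASE}/sites/{site_id}/drive/root"
```

For example, `site_by_path_url("contoso.sharepoint.com", "/sites/hr/")` yields a URL ending in `/sites/contoso.sharepoint.com:/sites/hr`; each URL still needs an authenticated GET, which in SPFx or MGT is handled by the provider.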

Building Microsoft Teams user bulk membership update tool with SPFx and Microsoft Graph – a.k.a. the Teams Membership Updater tool – a web part for Teams owners that applies membership updates from a selected CSV file. On load, the web part calls Graph to get the list of Teams of which you are a member or owner. It uses Graph batch requests to remove orphaned members and then to add new members, and it is built with the SPFx Reusable Controls and react-papaparse.
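The remove-then-add flow can be sketched with Microsoft Graph JSON batching, which accepts at most 20 requests per `$batch` call. This is a minimal sketch under stated assumptions, not the tool's implementation: it assumes membership is managed through the team's underlying Microsoft 365 group (`/groups/{id}/members/$ref`), that the CSV has already been parsed into a set of user IDs, and the helper names are hypothetical.

```python
from typing import Dict, Iterable, List, Set, Tuple

# Microsoft Graph JSON batching accepts at most 20 requests per $batch call.
GRAPH_BATCH_LIMIT = 20
DIRECTORY_OBJECT_URL = "https://graph.microsoft.com/v1.0/directoryObjects/{}"


def diff_members(current: Set[str], desired: Set[str]) -> Tuple[List[str], List[str]]:
    """Return (orphaned, new): members to remove and members to add."""
    return sorted(current - desired), sorted(desired - current)


def removal_requests(group_id: str, user_ids: Iterable[str]) -> List[Dict]:
    return [{"method": "DELETE", "url": f"/groups/{group_id}/members/{uid}/$ref"}
            for uid in user_ids]


def addition_requests(group_id: str, user_ids: Iterable[str]) -> List[Dict]:
    return [{
        "method": "POST",
        "url": f"/groups/{group_id}/members/$ref",
        # Requests with a body must declare their Content-Type inside the batch.
        "headers": {"Content-Type": "application/json"},
        "body": {"@odata.id": DIRECTORY_OBJECT_URL.format(uid)},
    } for uid in user_ids]


def to_batches(requests: List[Dict], size: int = GRAPH_BATCH_LIMIT) -> List[Dict]:
    """Split requests into $batch payloads, numbering ids uniquely within each batch."""
    batches = []
    for start in range(0, len(requests), size):
        chunk = requests[start:start + size]
        batches.append({"requests": [dict(req, id=str(i + 1)) for i, req in enumerate(chunk)]})
    return batches


def plan_batches(group_id: str, current: Set[str], desired: Set[str]) -> List[Dict]:
    """Removal batches first, then addition batches, so the two phases never mix."""
    orphaned, new = diff_members(current, desired)
    return (to_batches(removal_requests(group_id, orphaned))
            + to_batches(addition_requests(group_id, new)))
```

Each payload would then be POSTed to `https://graph.microsoft.com/v1.0/$batch`. Keeping removals and additions in separate batches mirrors the two-phase order described in the demo.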

Building Microsoft Graph get client web part with latest Graph Client SDK – use the Microsoft Graph Toolkit SharePoint provider to access and leverage new functionality of the Graph JS SDK in SPFx. The web part sample, built with React, showcases how to use the latest microsoft-graph-client in SPFx. The client provides throttling management (automatic retries on 429 responses), chaos handling, up-to-date types, access to raw API responses, a Content-Type that is already set for PUT, and a lot more!
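The throttling management mentioned above boils down to retrying throttled requests after the delay the server asks for. The following is a minimal sketch of that idea, not the Graph SDK's retry middleware: it assumes `send` is any callable returning a status code and headers, treats 429, 503, and 504 as retryable, and assumes `Retry-After` is given in seconds.

```python
import time
from typing import Callable, Mapping, Tuple

Response = Tuple[int, Mapping[str, str]]  # (status code, response headers)
RETRYABLE = {429, 503, 504}


def send_with_retry(send: Callable[[], Response], max_retries: int = 3,
                    base_delay: float = 1.0,
                    sleep: Callable[[float], None] = time.sleep) -> Response:
    """Call send() and retry throttled or unavailable responses."""
    attempt = 0
    while True:
        status, headers = send()
        if status not in RETRYABLE or attempt >= max_retries:
            return status, headers
        retry_after = headers.get("Retry-After")
        # Prefer the server's Retry-After (assumed to be in seconds);
        # otherwise back off exponentially: 1s, 2s, 4s, ...
        delay = float(retry_after) if retry_after is not None else base_delay * 2 ** attempt
        sleep(delay)
        attempt += 1
```

Injecting `sleep` keeps the function testable without real waiting; in the SDK this behavior comes from its middleware rather than hand-written loops.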

SPFx web part samples: (https://aka.ms/spfx-webparts)

Thank you for your great work. Samples are often showcased in Demos.

Agenda items:

Demos:

Demo: Using Microsoft Graph Toolkit to easily access files in Sites and in OneDrive – André Lage (Datalynx AG) | @aaclage – 18:27

Demo: Building Microsoft Teams user bulk membership update tool with SPFx and Microsoft Graph – Nick Brown (Cardiff University) | @techienickb – 34:06

Demo: Building Microsoft Graph get client web part with latest Graph Client SDK – Sébastien Levert (Microsoft) | @sebastienlevert – 47:30

Resources:

Additional resources around the covered topics and links from the slides.

General Resources:

Other mentioned topics:

Upcoming calls | Recurrent invites:

PnP SharePoint Framework Special Interest Group bi-weekly calls are targeted at anyone who is interested in the JavaScript-based development towards Microsoft Teams, SharePoint Online, and also on-premises. SIG calls are used for the following objectives.

- SharePoint Framework engineering update from Microsoft

- Talk about PnP JavaScript Core libraries

- Office 365 CLI Updates

- SPFx reusable controls

- PnP SPFx Yeoman generator

- Share code samples and best practices

- Possible engineering asks for the field – input, feedback, and suggestions

- Cover any open questions on the client-side development

- Demonstrate SharePoint Framework in practice in Microsoft Teams or SharePoint context

- You can download a recurrent invite from https://aka.ms/spdev-spfx-call. Welcome and join the discussion!

“Sharing is caring”

Microsoft 365 PnP team, Microsoft – 21st of May 2021

by Contributed | May 21, 2021 | Technology

This article is contributed. See the original author and article here.

This is a continuation of Troubleshooting Node down Scenarios in Azure Service Fabric here.

Scenario#6:

Check the Network connectivity between the nodes:

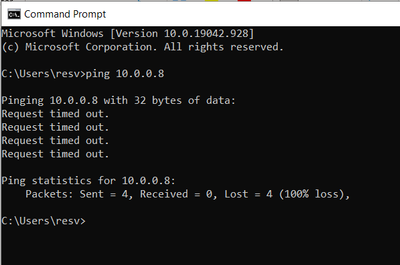

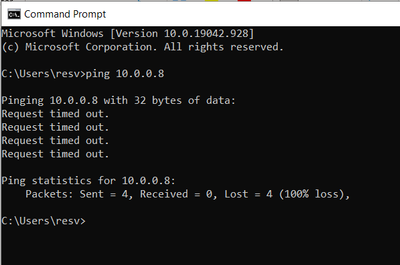

- Open a command prompt.

- Run ping <IP Address Of Other Node>.

If the request times out:

Mitigation:

Check whether a network security group (NSG) is blocking the connectivity.
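Ping relies on ICMP, which may be blocked even when other traffic flows, so a TCP connection test to the ports the nodes actually use can complement it. This is a minimal sketch; the port numbers are assumptions (the defaults used in standalone Service Fabric cluster configurations), so check the endpoints configured for your node types before relying on them.

```python
import socket
from typing import Dict

# Assumed default node-to-node ports; verify against your cluster configuration.
DEFAULT_PORTS = {"clusterConnectionEndpoint": 9025, "leaseDriverEndpoint": 9026}


def tcp_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, ...
        return False


def check_node(host: str, ports: Dict[str, int] = DEFAULT_PORTS,
               timeout: float = 3.0) -> Dict[str, bool]:
    """Test each named port on the other node and report reachability."""
    return {name: tcp_reachable(host, port, timeout) for name, port in ports.items()}
```

Run `check_node("<IP Address Of Other Node>")` from the affected node; a `False` for a port that should be open points at an NSG rule or a guest firewall.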

Scenario#7:

Node-to-node communication failure for any of the following reasons could lead to a node down issue.

- The cluster certificate has expired.

- The SF extension on the VMSS resource points to an expired certificate. On VM reboot, the node may go down because of this expired certificate. The certificate is referenced in the extension settings, for example:

```json
"extensionProfile": {
  "extensions": [
    {
      "properties": {
        "autoUpgradeMinorVersion": true,
        "settings": {
          "clusterEndpoint": "https://xxxxx.servicefabric.azure.com/runtime/clusters/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx",
          "nodeTypeRef": "sys",
          "dataPath": "D:\\SvcFab",
          "durabilityLevel": "Bronze",
          "enableParallelJobs": true,
          "nicPrefixOverride": "10.0.0.0/24",
          "certificate": {
            "thumbprint": "XXXXXXXXXXXXXXXXXXXXXXXXXXXXX",
            "x509StoreName": "My"
          }
```

- Make sure the certificate is ACL’d to the Network Service account.

- The reverse proxy certificate has expired.

- If all of the above are taken care of, go to Scenario#8.
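The expiry checks in Scenario#7 can be scripted once you have a certificate's notAfter timestamp. This is a minimal sketch using Python's `ssl.cert_time_to_seconds`, assuming the notAfter value is in the OpenSSL text form that function accepts (for example, the `notAfter` field of the dictionary returned by `SSLSocket.getpeercert()` for a validated connection). Reading the cluster certificate from the Windows certificate store by thumbprint is not shown.

```python
import ssl
import time
from typing import Optional


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Days left before not_after, e.g. "Jan  5 09:34:43 2018 GMT" (negative once expired)."""
    expires = ssl.cert_time_to_seconds(not_after)  # seconds since the epoch, UTC
    current = time.time() if now is None else now
    return (expires - current) / 86400


def is_expired(not_after: str, now: Optional[float] = None) -> bool:
    return days_until_expiry(not_after, now) <= 0
```

Running the same check against the cluster certificate, the certificate referenced in the SF extension settings, and the reverse proxy certificate covers the three expiry cases listed above.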

Scenario#8:

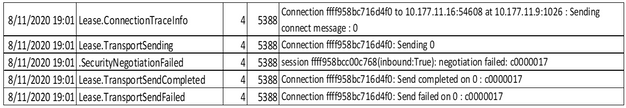

If Node1 is not able to establish a lease with a neighboring node (Node2), Node1 can go down.

From the SF traces:

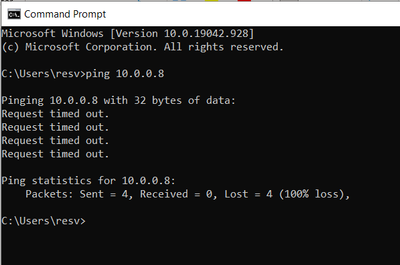

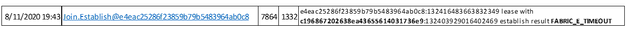

For example, in the logs we see that a node with Node ID “e4eac25286f23859b79b5483964ab0c8” (Node1) failed to establish a lease with a node with Node ID “c196867202638ea43655614031736e9” (Node2).

Now the focus should be on the node with which the lease connectivity is failing, rather than on the node that is down.

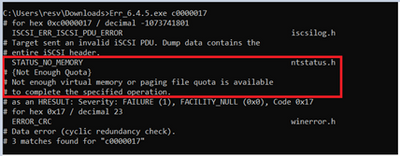

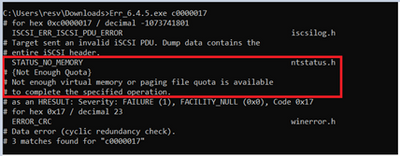

From the traces above, we get the error code c0000017.

To understand what this error code means, download the Microsoft Error Lookup Tool and run the executable with the error code as its parameter.
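The Error Lookup Tool is the authoritative way to translate the code, but its structure can also be read directly: c0000017 is an NTSTATUS value, whose top two bits are the severity, bit 29 the customer flag, bits 16 to 27 the facility, and the low 16 bits the code. This minimal sketch decodes those fields; its lookup table is hypothetical and contains only the one code discussed here.

```python
from typing import Dict

# Only the status discussed in this article; use the Error Lookup Tool for anything else.
KNOWN_STATUS = {0xC0000017: "STATUS_NO_MEMORY"}
SEVERITY = {0: "Success", 1: "Informational", 2: "Warning", 3: "Error"}


def decode_ntstatus(code: str) -> Dict[str, object]:
    """Split a hex NTSTATUS value such as "c0000017" into its bit fields."""
    value = int(code, 16)
    return {
        "severity": SEVERITY[(value >> 30) & 0x3],
        "customer": bool((value >> 29) & 0x1),
        "facility": (value >> 16) & 0xFFF,
        "code": value & 0xFFFF,
        "name": KNOWN_STATUS.get(value, "unknown"),
    }
```

`decode_ntstatus("c0000017")` reports an Error-severity status named STATUS_NO_MEMORY (not enough virtual memory or paging file quota), which is why the mitigation below focuses on freeing memory on Node2.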

Mitigation:

Restart the node (Node2). This can free up virtual memory so that Node2 starts establishing the lease with Node1 again, which brings Node1 back up.

by Contributed | May 21, 2021 | Technology

This article is contributed. See the original author and article here.

This is a continuation of Troubleshooting Node down Scenarios in Azure Service Fabric here.

Scenario#5:

The virtual machine associated with the node can be healthy while the Service Fabric extension is unhealthy, and this can cause the node to go down in the Service Fabric cluster.

Analysis:

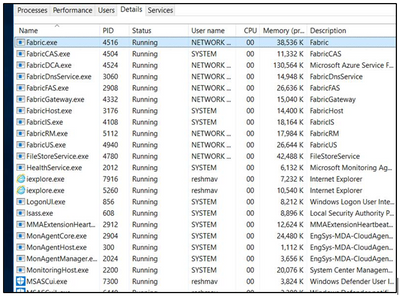

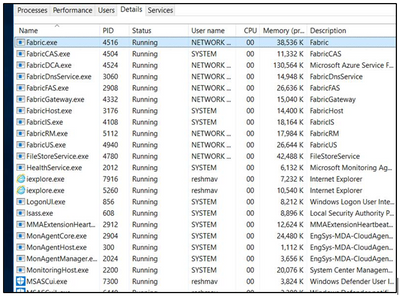

RDP into the node that is down. Open Task Manager and observe the Fabric processes.

If Fabric.exe and FabricHost.exe are crashing and restarting often, check Mitigation#1.

If ServiceFabricNodeBootStrapAgent.exe is crashing and restarting often, check Mitigation#2.

If FabricInstallerSvc.exe is crashing and restarting often, check Mitigation#3.

Mitigation#1:

- Check <path>/ClusterManifest.current.xml (the full path is given below).

- Does it match the manifest for the cluster? Compare it with the one shown in Service Fabric Explorer (SFX).

  - If not, does SFX indicate that an upgrade is in progress?

    - If no upgrade is in progress:

      - Go to <Path>.

      - Open ClusterManifest.current.xml.

      - Replace the contents of ClusterManifest.current.xml with the contents of the manifest in SFX.

      - Save the file.

      - In Task Manager, select Fabric.exe if it is running and click the “End Task” button.

      - If Fabric.exe is not running, reboot the VM.

      - It will take a few minutes for the node to become healthy.

      - If the node does not become healthy, start again from the beginning.

Path: `D:\SvcFab\_Nodename_\Fabric\ClusterManifest.current.xml`
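The "does it match SFX?" comparison in Mitigation#1 is easy to get wrong by eye, because indentation and attribute order can differ between two copies of the same manifest. This is a minimal sketch that compares two manifests after XML canonicalization with Python's `xml.etree.ElementTree.canonicalize` (Python 3.8+); it assumes you have saved the manifest from SFX as text, and the function name is hypothetical.

```python
import xml.etree.ElementTree as ET


def manifests_match(local_xml: str, sfx_xml: str) -> bool:
    """Compare two cluster manifests after C14N 2.0 canonicalization."""
    # strip_text=True drops leading/trailing whitespace in text and tail content,
    # so indentation differences do not count; C14N also sorts attributes.
    local = ET.canonicalize(xml_data=local_xml, strip_text=True)
    remote = ET.canonicalize(xml_data=sfx_xml, strip_text=True)
    return local == remote
```

To read straight from disk, `ET.canonicalize(from_file=path, strip_text=True)` accepts a file path instead of a string. A `False` result means the node's copy differs and the replacement steps above apply.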

Mitigation#2:

Check whether this process is listed among the processes in Task Manager.

- If “Yes”:

  - Wait a while to see if the node heals itself.

  - This process tries to heal the failure at a coarse level by restarting the VM and reinstalling the SF runtime.

  - It waits for 15 minutes after an attempt to heal before taking the next action.

  - Check ServiceFabricNodeBootstrapAgent.InstallLog on the node, at this path: `C:\Packages\Plugins\Microsoft.Azure.ServiceFabric.ServiceFabricNode\<version>\Service\ServiceFabricNodeBootstrapAgent.InstallLog`

  - If the node did not heal, check the Event Viewer logs for error details.

- If “No”:

  - Go to the Services tab in Task Manager and click the Open Services link at the bottom.

  - Check the startup mode of the bootstrap service and make sure it is Automatic.

  - Start the service.

  - If it stays running, follow the “Yes” section above.

Mitigation#3:

Check whether the node's network connectivity is working.

For more details, refer to Part III – Troubleshooting Node down Scenarios.

by Contributed | May 20, 2021 | Technology

This article is contributed. See the original author and article here.

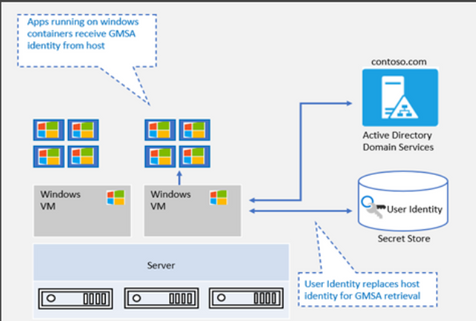

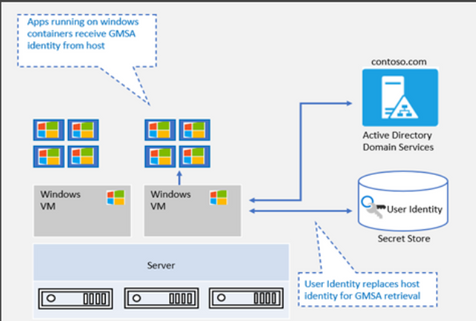

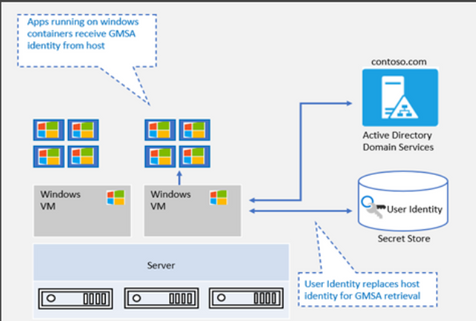

We’re very pleased to announce that Group Managed Service Account (gMSA) for Windows containers with non-domain joined host solution is now available in the recently announced AKS on Azure Stack HCI Release Candidate!

The Journey

Since the team started the journey of bringing containers to Windows Server several years ago, we have heard from customers that the majority of traditional Windows Server apps rely on Active Directory (AD). We have made a lot of investments in our OS platform, such as leveraging Group Managed Service Accounts (gMSA) to give containers an identity that can be authenticated with Active Directory. For example, this blog showcased improvements in the Windows Server 2019 release wave: What’s new for container identity. We have also partnered with the Kubernetes community and enabled gMSA for Windows pods and containers in Kubernetes v1.18. This is extremely exciting news. But this solution needs Windows worker nodes to be joined to an Active Directory domain. In addition, multiple steps need to be executed to install the webhook and configure gMSA credential spec resources to make the scenario work end to end.

To ease the complexities, as announced in this blog on What’s new for Windows Containers on Windows Server 2022 – Microsoft Tech Community, improvements are made in the OS platform to support gMSA with a non-domain joined host. We have been working hard to light up this innovation in AKS and AKS on Azure Stack HCI. We are very happy to share that AKS on Azure Stack HCI is the first Kubernetes based container platform that supports this “gMSA with non-domain joined host” end-to-end solution. No domain joined Windows worker nodes anymore, plus a couple of cmdlets to simplify an end-to-end user experience!

“gMSA with non-domain joined host” vs. “gMSA with domain-joined host”

| gMSA with non-domain joined host | gMSA with domain-joined host |
|---|---|
| Credentials are stored as Kubernetes secrets, and authenticated parties can retrieve the secrets. These credentials are used to retrieve the gMSA identity from AD. | Updates to the Windows container host can pose considerable challenges. |
| This eliminates the need for the container host to be domain joined and solves challenges with container host updates. | All previous settings need to be reconfigured to domain join the new container host. |

Simplified end-to-end gMSA configuration process with built-in cmdlets

In AKS on Azure Stack HCI, even though you don’t need to domain join Windows worker nodes anymore, there are other configuration steps that you can’t skip. These steps include installing the webhook, the custom resource definition (CRD), and the credential spec, as well as enabling role-based access control (RBAC). We provide a few PowerShell cmdlets to simplify the end-to-end experience. Please refer to Configure group Managed Service Accounts with AKS on Azure Stack HCI.

Getting started

We have provided detailed documentation on how to integrate your gMSA with containers in AKS-HCI with non-domain joined solution.

- Prepare the gMSA in the domain controller.

- Prepare the gMSA credential spec JSON file (This is a one-time action. Please use the gMSA account in your domain.)

- Install webhook, Kubernetes secret and add Credential Spec.

- Deploy your application.
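For the "Deploy your application" step, a pod opts into gMSA by naming a credential spec resource in its security context. This is a minimal sketch that builds such a manifest as a Python dictionary (kubectl accepts JSON manifests). The `securityContext.windowsOptions.gmsaCredentialSpecName` field and the `kubernetes.io/os` node label are part of Kubernetes; the pod name, image, and credential spec name are hypothetical, and the RBAC mentioned above must allow the pod's service account to use that credential spec.

```python
import json
from typing import Dict


def gmsa_pod_manifest(pod_name: str, image: str, credspec_name: str) -> Dict:
    """Build a Windows pod manifest that references a GMSACredentialSpec by name."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            # Pod-level setting: every container in the pod uses this credential spec.
            "securityContext": {"windowsOptions": {"gmsaCredentialSpecName": credspec_name}},
            # Schedule only onto Windows worker nodes.
            "nodeSelector": {"kubernetes.io/os": "windows"},
            "containers": [{"name": pod_name, "image": image}],
        },
    }


if __name__ == "__main__":
    # Hypothetical names; write the output to a file and apply it with kubectl.
    print(json.dumps(gmsa_pod_manifest("webapp", "contoso/webapp:1.0", "webapp-gmsa"), indent=2))
```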

If you are looking for this support on AKS, you can follow this entry on AKS Roadmap [Feature] gMSA v2 support on Windows AKS · Issue #1680.

As always, we love to see you try it out and give us feedback. You can share your feedback at our GitHub community Issues · microsoft/Windows-Containers, or contact us directly at win-containers@microsoft.com.

Jing

Twitter: https://twitter.com/JingLi00465231

by Contributed | May 20, 2021 | Technology

This article is contributed. See the original author and article here.

It’s hard to believe but it’s this time of the year again when we get to connect and you get to learn at Microsoft Build.

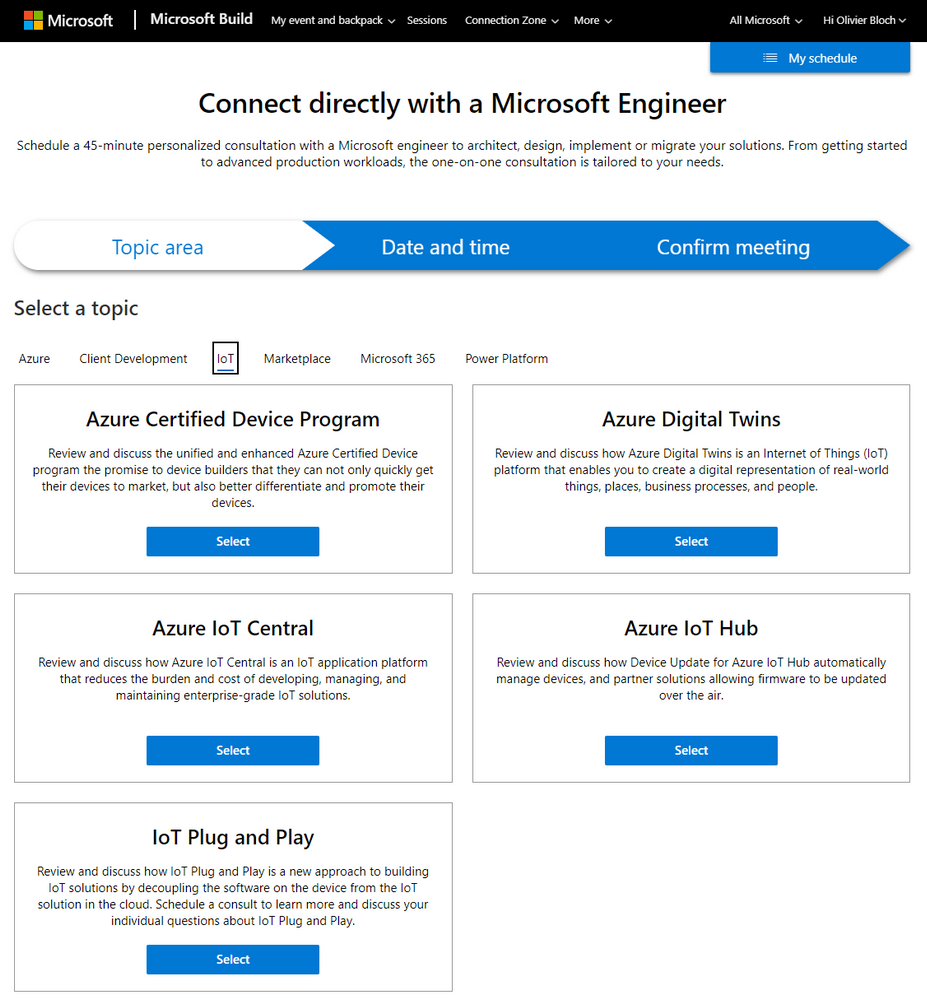

For this edition, you will be able to engage with the IoT team even more closely than in the past, through a series of Product Round Table sessions as well as 1:1 consultations. RSVP quickly, as seats need to be reserved for these.

If you only watch one session, we highly recommend Sam George’s keynote: Building Digital Twins, Mixed Reality and Metaverse Apps. It will be played twice: Wednesday, May 26, 2:00 PM – 2:30 PM Pacific Daylight Time (PDT), and Thursday, May 27, 6:00 AM – 6:30 AM PDT.

In addition to these opportunities to connect with the team, we will deliver some sessions.

Here is a list of all IoT sessions going on at Build this year:

| Title | Speaker(s) | Type of session |
|---|---|---|
| Building Digital Twins, Mixed Reality and Metaverse Apps | Sam George | Breakout |
| Ask the Experts: Bringing Azure Linux workloads to Windows | Terry Warwick | Connection Zone |
| Connect IoT data to HoloLens 2 with Azure Digital Twins and Unity | Brent Jackson, Adam Lash | Connection Zone |
| Ask the Experts: Building Digital Twins, Mixed Reality and Metaverse Apps | Kence Anderson, Chafia Aouissi, Ines Khelifi, Christian Schormann, Simon Skaria, Scott Stanfield | Connection Zone |
| Build Secured IoT solutions for Azure Sphere with IoT Hub | David Glover, Mike Hall, Daisuke Nakahara | On-Demand |
| Round table: Simplifying IoT solution development | John Strohschein, Lori Birtley, Samantha Neufeld, Sarah Grover | Product round table |
| Round table: Azure Sphere: securing IoT devices and lowering your costs | Gregg Boer, Megha Tiwari, Rebecca Holt, Sudhanva Huruli, Vladimir Petrosyan | Product round table |
| Round table: Build connected environment solutions – Architecture patterns | Basak Mutlum, Chafia Aouissi, Christian Schormann, Ines Khelifi, Steve Busby | Product round table |
| Round table: Industrial IoT analytics with Azure Time Series Insights | Chris Novak, Ellick Sung | Product round table |
| Round table: Verified Telemetry – enhancing data quality of IoT devices | Ajay Manchepalli, Akshay Nambi, Ryan Winter | Product round table |
| Round table: IoT semiconductor ecosystem: building and connecting secured devices | Bill Lamie, James Scott, Joseph Lloyd, Mahti Daliparthi, Marc Goodner, Mike Hall, Pamela Cortez, Rebecca Holt, Steve Patrick, Sudhanva Huruli | Product round table |

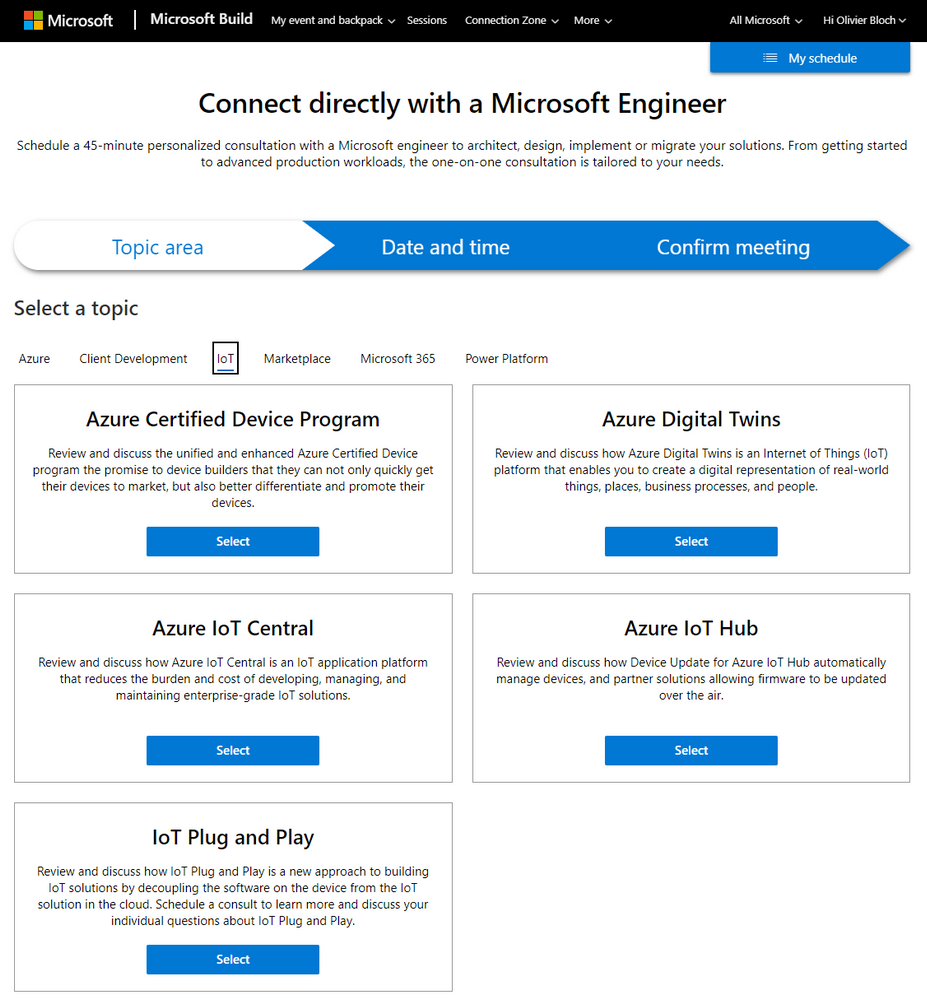

For 1:1 consultation with Microsoft engineers, you can find the IoT ones on this page under the IoT tab:

As usual we will update this blog post with more content, pointers and resources.

Have a great Microsoft Build 2021!

by Contributed | May 20, 2021 | Technology

This article is contributed. See the original author and article here.

Go hybrid or go home. Wait, you can either stay home or join in-person. Win/Win!

The Microsoft 365 Collaboration Conference is a unique ‘hybrid’ event in Orlando, Florida. ‘Hybrid’ means that speakers and attendees participate both in person and virtually: in person for those who can travel safely as the vaccine rollout continues, and virtually for those who are unable to join us in person safely.

The event brings together business leaders, IT pros, developers, and consultants to learn how technology can power teamwork, employee engagement and communications, and organizational effectiveness. Each session is delivered by acclaimed presenters – thought leaders, engaged MVPs and product members from Microsoft working on Microsoft 365, Microsoft Teams, SharePoint, and Power Platform.

The Microsoft 365 Collaboration Conference is a unique ‘hybrid’ event in Orlando, Florida with three unique Microsoft keynotes.


You’ll find over 200 sessions, panels, and workshops for everyone who works with Microsoft 365, presented by Microsoft’s leaders and experts from around the world. Below, you can review the subset of keynotes and sessions delivered by Microsoft employees from the product groups.

The Microsoft 365 Collaboration Conference embraces all of Microsoft 365: Microsoft Teams, SharePoint, Power Platform, OneDrive, Yammer, Microsoft Stream, Outlook, Office applications, Power Apps, Power BI, Power Automate and more.

| Package | Virtually: Early Bird (April 15, 2021 – May 10, 2021) | Virtually: Regular (after May 10, 2021) | In-person: Early Bird (April 15, 2021 – May 10, 2021) | In-person: Regular (after May 10, 2021) |
|---|---|---|---|---|
| Full Conference Only | $599 | $599 | $1799 | $1899 |
| Virtual Show Package 1 | $898 | $898 | $2248 | $2348 |
| Virtual Show Package 2 | $1197 | $1197 | $2697 | $2797 |
| Virtual Show Package 3 | $1496 | $1496 | $3146 | $3246 |
| Pre-conference OR Post-conference | $399 | $399 | $699 | $699 |

Microsoft keynotes and sessions (all times listed in the US EST time zone)

Microsoft keynote sessions:

- Day One Keynote | “Microsoft 365: Your key to delivering on employee wellbeing and productivity goals” by @Karuana Gatimu | June 8th, 9am-10am

- Day Two Keynote | “The future of work: productivity and employee experience” by @Dan Holme | June 9th, 9am-10am

- Day Three Keynote | “What’s new and what’s next for the Microsoft Power Platform” by Charles Lamanna | June 10th, 12pm-1pm

Microsoft breakout sessions:

- “Practical guidance for driving Microsoft 365 adoption” by Karuana Gatimu (6/8 10:30am – 11:30am)

- “Meet Microsoft Viva: a new kind of employee experience” by John Mighell (6/8 12pm – 1pm)

- “Building a vibrant community – from inclusive campaigns to empowering your groups” by Laurie Pottmeyer and Josh Leporati (6/8 12pm – 1pm)

- “Governance best practices for Office 365, including Microsoft Teams guidance” by Karuana Gatimu (6/8 2:15pm – 3:15pm)

- “Get to know Microsoft Lists” by Mark Kashman and Harini Saladi (6/8 3:45pm – 4:45pm)

- “Roadmap to end user learning with Microsoft 365” by Josh Leporati (6/8 3:45pm – 4:45pm)

- “What’s new for intelligent file experiences across Microsoft 365” by Ankita Kirti and Stephen Rice (6/9 10:30am – 11:30am)

- “How Visio integrates with Microsoft 365 apps to enhance virtual collaboration” by Nishant Kumar (6/9 12pm – 1pm)

- “Meeting & virtual event best practices” by Karuana Gatimu (6/9 12pm – 1pm)

- “The Latest in Microsoft Teams” by Karuana Gatimu (6/9 2:15pm – 3:15pm)

- “Tasks, Planner, & To-Do: Decrease stress and increase productivity” by TBA (6/9 3:45pm – 4:45pm)

- “Modern Calling – How Teams changes the way we communicate” by Sean Wilson (6/10 9am – 10am)

- “IT Pro deep dive – Microsoft Teams” by Stephen Rose (6/10 10:30am – 11:30am)

- “What’s new and next for Microsoft Search” by Bill Baer (6/10 10:30am – 11:30am)

- “SharePoint + Teams: Powering content collaboration” by Cathy Dew (6/10 2pm – 3pm)

- “Architecting your intelligent intranet” by DC Padur and Melissa Torres (6/10 3:30pm – 4:30pm)

View all Microsoft 365 Collaboration Conference (Orlando, FL) sessions.

The event brings together business leaders, IT pros, developers, and consultants: in person for those who can travel safely as the vaccine rollout continues, and virtually for those who are unable to join in person.


BONUS | A word from @Jeff Teper about the broader value of the event (previously known as SharePoint Conference):

Thanks, Mark Kashman, senior product manager – Microsoft

by Contributed | May 20, 2021 | Technology

This article is contributed. See the original author and article here.

Overview

Throughout 2021, a monthly blog post will cover the webinar of the month for the Low-code application development (LCAD) on Azure solution.

LCAD on Azure is a solution that integrates the robust development capabilities of low-code Microsoft Power Apps with Azure products such as Azure Functions, Azure Logic Apps, and more.

This month’s webinar is ‘Increase Efficiency with Azure Functions and Power Platform’.

This blog will briefly recap Low-code application development on Azure, provide an overview of Azure Functions reusability, Durable Functions, and how to integrate Functions across the Power Platform.

This is a helpful blog for those new to Azure Functions and those who want to start integrating Azure Functions into their Power Platform build cases.

What is Low code application development on Azure?

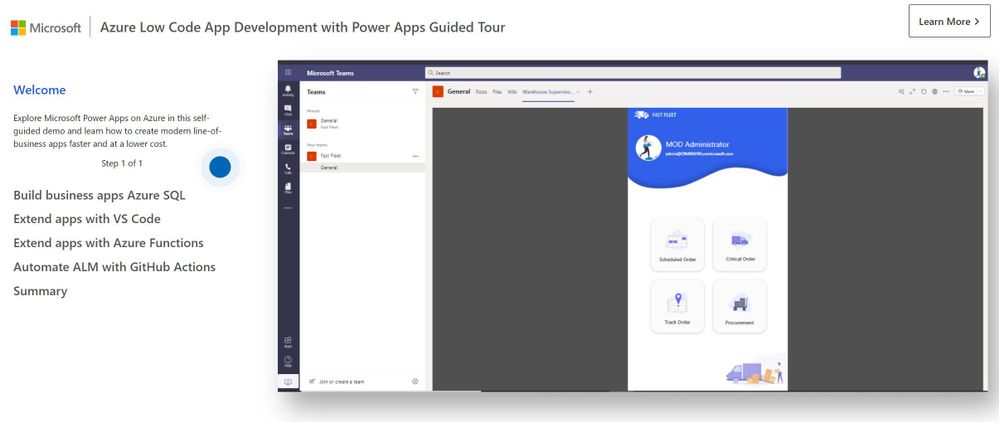

Low-code application development (LCAD) on Azure was created to help developers build business applications faster with less code.

It leverages the Power Platform, and more specifically Power Apps, while helping developers scale and extend their Power Apps with Azure services.

For example, a pro developer who works for a manufacturing company needs to build a line-of-business (LOB) application to help warehouse employees track incoming inventory.

That application would take months to build, test, and deploy. Using Power Apps, it can take hours to build, saving time and resources.

However, say the warehouse employees want the application to place procurement orders for additional inventory automatically when current inventory hits a determined low.

In the past that would require another heavy lift by the development team to rework their previous application iteration.

Thanks to the integration of Power Apps and Azure, a professional developer can build an API in Visual Studio (VS) Code, publish it to Azure, and export the API to Power Apps, integrating it into their application as a custom connector.

Afterwards, that same API is reusable indefinitely in Power Apps Studio for other applications, saving the company and developers more time and resources.
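A custom connector is described by an API definition, and Power Apps custom connectors can be created by importing an OpenAPI 2.0 (Swagger) document. This is a minimal sketch of such a document for the reorder scenario above, built as a Python dictionary; the host, path, operation ID, and request schema are hypothetical, and `/api` is assumed because it is the default route prefix for HTTP-triggered Azure Functions.

```python
import json
from typing import Dict


def connector_definition(host: str, path: str, operation_id: str) -> Dict:
    """Minimal OpenAPI 2.0 document describing one POST operation."""
    return {
        "swagger": "2.0",
        "info": {"title": "Inventory API", "version": "1.0"},
        "host": host,
        "basePath": "/api",  # default Azure Functions HTTP route prefix
        "schemes": ["https"],
        "paths": {
            path: {
                "post": {
                    # Power Apps surfaces the operationId as the connector action name.
                    "operationId": operation_id,
                    "consumes": ["application/json"],
                    "produces": ["application/json"],
                    "parameters": [{
                        "name": "body",
                        "in": "body",
                        "required": True,
                        "schema": {
                            "type": "object",
                            "properties": {"sku": {"type": "string"},
                                           "quantity": {"type": "integer"}},
                        },
                    }],
                    "responses": {"200": {"description": "Order placed"}},
                }
            }
        },
    }


if __name__ == "__main__":
    doc = connector_definition("contoso-inventory.azurewebsites.net", "/reorder", "PlaceReorder")
    print(json.dumps(doc, indent=2))
```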

To learn more about possible scenarios with LCAD on Azure go through the self-guided tour.

Azure Functions Reusability

Why should you reuse functionality? There are four key reasons: shorter development time, consistency, easier testing, and live proven code.

The shorter development time is driven by not having to build code again.

For example, if you’re validating a phone number with your application you don’t want to have to re-write the code for each nuanced small scenario, such as rebuilding a web app, then a portal, etc.

Not re-writing code even if it is being plugged into a new app enables shorter development time.
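To make the phone-number example concrete: the validation logic is written once and then called from the web app, the portal, and a Power App (for instance, behind an Azure Function). This is a minimal sketch under an assumed rule set, namely E.164-style numbers ("+" followed by up to 15 digits, no leading zero) with common separators allowed; the function names are hypothetical.

```python
import re

# Assumption: valid numbers are E.164-style, "+" then 2-15 digits, first digit 1-9.
E164 = re.compile(r"\+[1-9]\d{1,14}")


def normalize_phone(raw: str) -> str:
    """Strip spaces, parentheses, dots, and dashes: "+1 (425) 555-0100" -> "+14255550100"."""
    return re.sub(r"[\s().-]", "", raw)


def is_valid_phone(raw: str) -> bool:
    return E164.fullmatch(normalize_phone(raw)) is not None
```

Because every caller shares `is_valid_phone`, a rule change happens in one place and one set of automated tests covers all the apps, which is the consistency and testing benefit described below.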

Additionally, reuse leads to greater consistency in your code, creating a much cleaner user experience across platforms and devices.

Reuse of functionality also enables easier testing.

When reusing functionality, you can automate tests. If you write new code each time, however, you must manually test each iteration, which increases development time.

However, if you reuse functionality, once set up and spun up, every time you test apps down the line, all you need to do is check the Azure Function connection rather than starting from scratch.

Lastly, there is the advantage of live proven code, which can’t be overstated: the separate pieces of functionality are already proven to work, which speeds up the application development lifecycle.

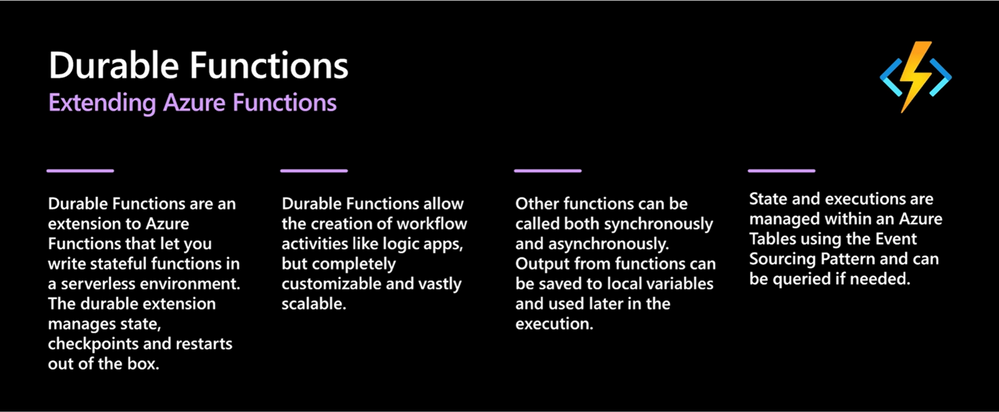

Durable Functions

Durable Functions is an extension of Azure Functions that lets you write stateful functions in a serverless environment. The extension manages state, checkpoints, and restarts out of the box.

Durable Functions allow the creation of workflow activities like Logic Apps but are completely customizable and scalable.

Durable Functions can be called both synchronously and asynchronously. Output from Functions can be saved to local variables and used later in execution.

State and execution history are stored in Azure Table storage using the Event Sourcing pattern and can be queried if needed.
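In the Event Sourcing pattern, the system appends a record of every event rather than overwriting a "current state" row, and rebuilds the state by replaying that history. This is a minimal sketch of the idea only; the event names and state fields are hypothetical and are not the Durable Task framework's actual history schema.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Event = Tuple[str, str]  # (hypothetical event type, payload)


@dataclass
class OrchestrationState:
    status: str = "Pending"
    completed_activities: List[str] = field(default_factory=list)


def replay(events: List[Event]) -> OrchestrationState:
    """Rebuild the current state by applying every recorded event in order."""
    state = OrchestrationState()
    for kind, payload in events:
        if kind == "OrchestrationStarted":
            state.status = "Running"
        elif kind == "ActivityCompleted":
            state.completed_activities.append(payload)
        elif kind == "OrchestrationCompleted":
            state.status = "Completed"
    return state
```

Because the history is append-only, it doubles as an audit trail, which is what makes the state queryable after the fact.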

For example, if you want to fill in a field on a form and need to check input information across multiple databases, the orchestration capabilities of Durable Functions enable that functionality.

Moreover, if you need all the tasks to happen at the same time, or in different patterns, you could build that functionality in Power Automate or Logic Apps, but leveraging Durable Functions gives you finer-grained control and more options.

Lastly, these functions scale rapidly to meet demand and, when inactive, rest until they are called again.
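The form-field example above (check one input against several databases at once) is the fan-out/fan-in pattern. This is a minimal sketch of the pattern in plain `asyncio`, not the Durable Functions API; in a Durable Functions orchestrator the checks would be activity functions and the orchestrator would wait on all of them (for example, `context.df.Task.all` in JavaScript). The two lookup functions are hypothetical stand-ins for database queries.

```python
import asyncio
from typing import Awaitable, Callable, Dict

Check = Callable[[str], Awaitable[bool]]


async def validate_everywhere(value: str, checks: Dict[str, Check]) -> Dict[str, bool]:
    """Fan out one lookup per data source concurrently, then fan in the results."""
    names = list(checks)
    results = await asyncio.gather(*(checks[name](value) for name in names))
    return dict(zip(names, results))


# Hypothetical stand-ins for lookups against two different databases.
async def exists_in_crm(value: str) -> bool:
    await asyncio.sleep(0.01)  # simulate I/O latency
    return value.startswith("C")


async def exists_in_erp(value: str) -> bool:
    await asyncio.sleep(0.01)
    return len(value) == 6
```

For example, `asyncio.run(validate_everywhere("C12345", {"crm": exists_in_crm, "erp": exists_in_erp}))` runs both lookups concurrently and returns one result per source.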

Functions Integration across the Power Platform

Power Apps

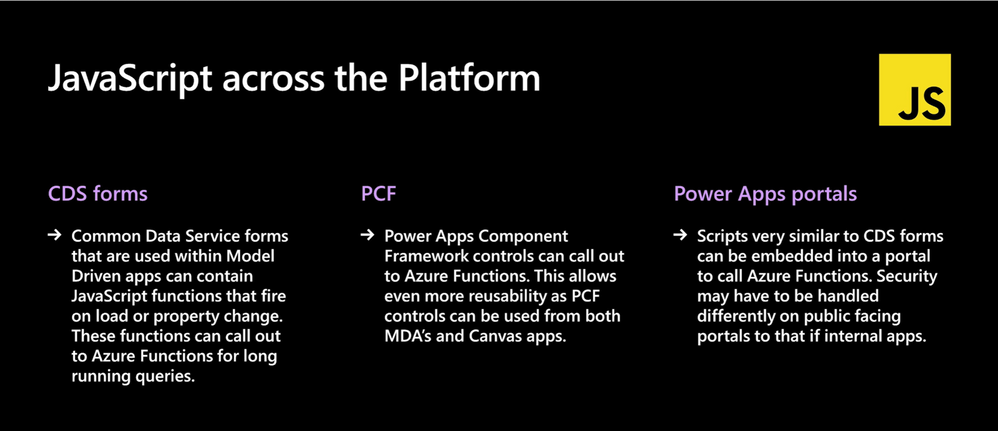

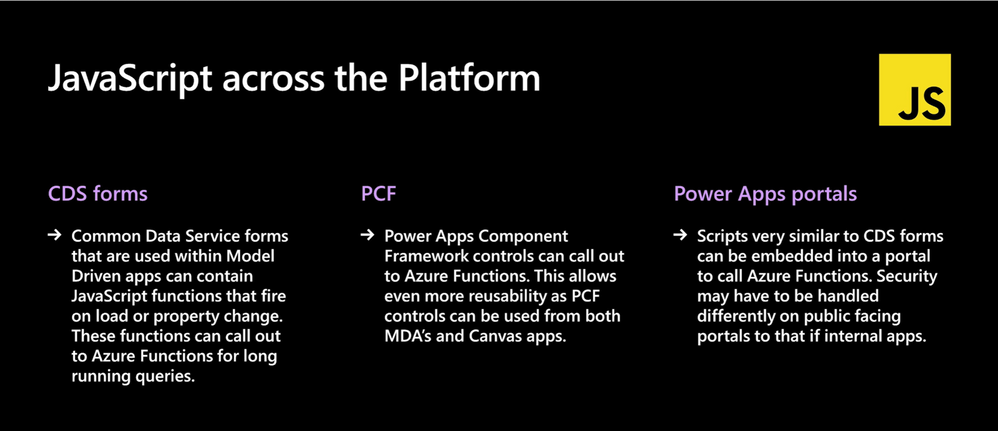

There are 3 types of Power Apps available to integrate with Azure Functions. Note that this blog will be covering JavaScript, however you can write Azure Functions in any language.

First, there are Dataverse forms. Dataverse forms are used within model-driven applications and can contain JavaScript functions that fire on load or on property change. These functions can call out to Azure Functions for long-running queries, enabling your colleagues to leverage your Azure Function.

Second, there are Power Apps component framework (PCF) controls. These are code components that you can put in both model-driven app forms and canvas apps, and the code can be called from either place. If a PCF control calls out to an Azure Function, it creates a double layer of reusability, and the two can be deployed separately for use across your business.

Third, there are Power Apps portals. Portal scripts are very similar to Dataverse form scripts and can be embedded into a portal to call any web API, including an Azure Function. Security has to be handled differently for public-facing portals than for internal applications.

Power Automate

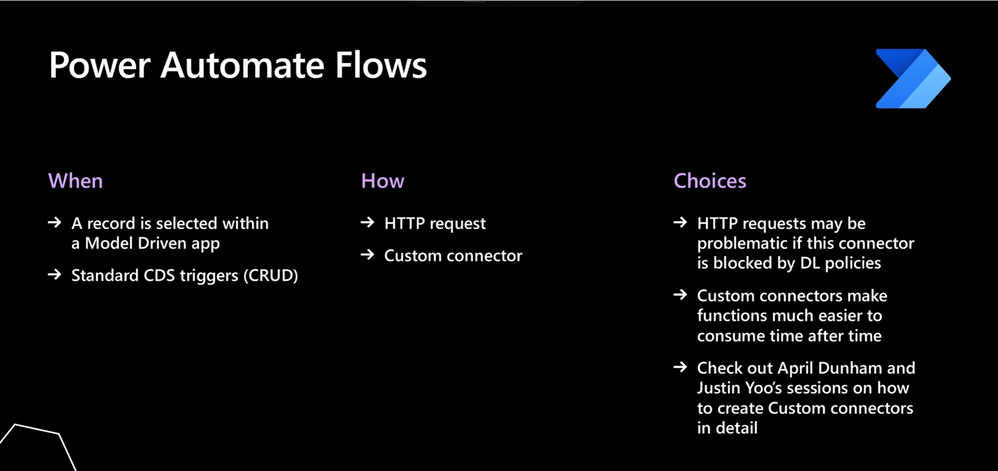

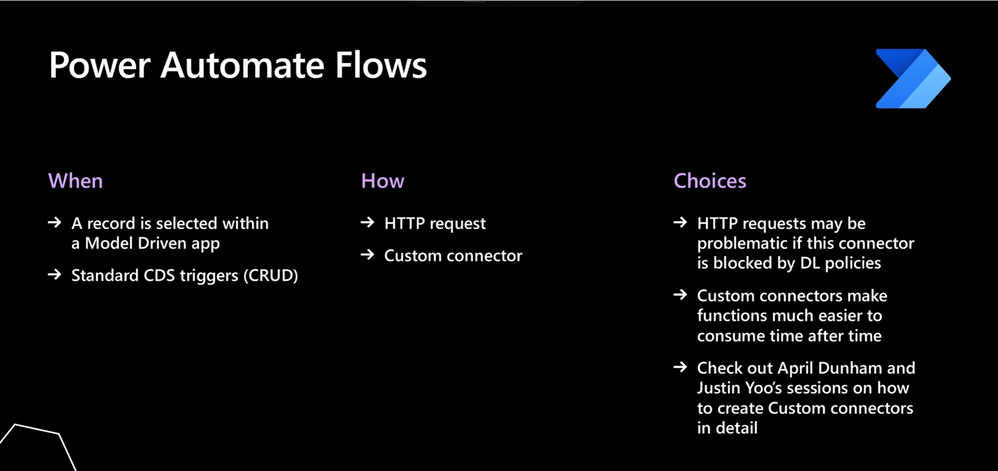

In the webinar Lee Baker covers the stages of when and how to connect a Power Automate flow to an Azure Function.

When? A flow can start when you select records in a model-driven app and hit the on-demand flow button, which pushes those records to Power Automate. Or you can use standard Dataverse triggers on create, read, update, and delete (CRUD) operations.

How? HTTP request actions in Power Automate or Logic Apps can send data or URLs to an Azure Function and get payloads back, or you can build a custom connector.

You would build a custom connector because HTTP request actions are often blocked by data loss prevention (DLP) policies in Power Platform environments. Custom connectors can be created in accordance with those policies while still bringing the HTTP request, via Azure Functions, directly into the canvas application, for a secure and streamlined approach.

Conclusion

This is just the beginning of what is possible with the integration of APIs into Power Apps via Azure Functions. To learn more about the integration of Azure Functions and Power Apps watch the webinar covered in this blog titled “Increase Efficiency with Azure Functions and Power Platform”.

To get hands on experience creating a custom connector and extending a Power App with custom code as covered in this blog, start with the new learning path “Transform your business applications with fusion development”.

After completing the learning path, if you want to learn even more about how to extend your low-code applications with Azure and how to establish a fusion development team in your organization, read the accompanying e-book “Fusion development approach to building apps using Power Apps”.

by Contributed | May 20, 2021 | Technology

This article is contributed. See the original author and article here.

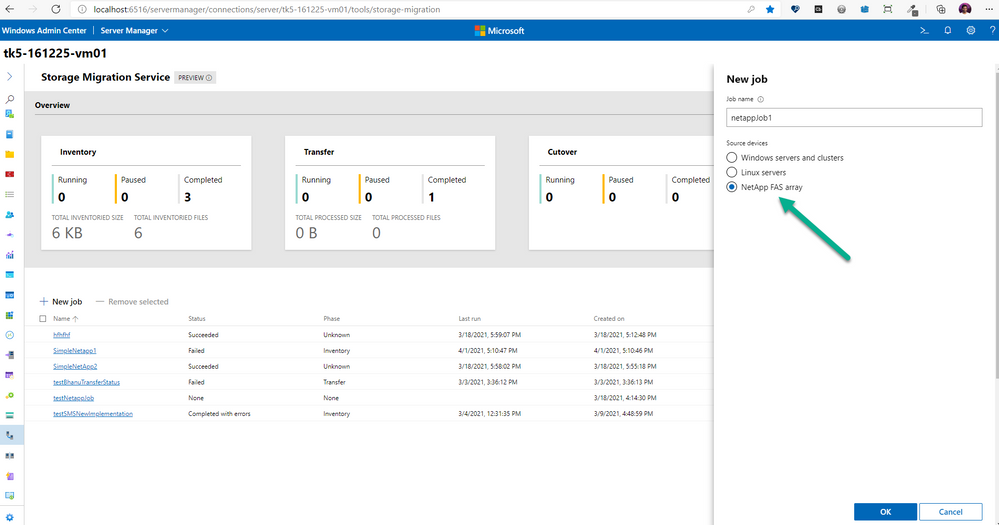

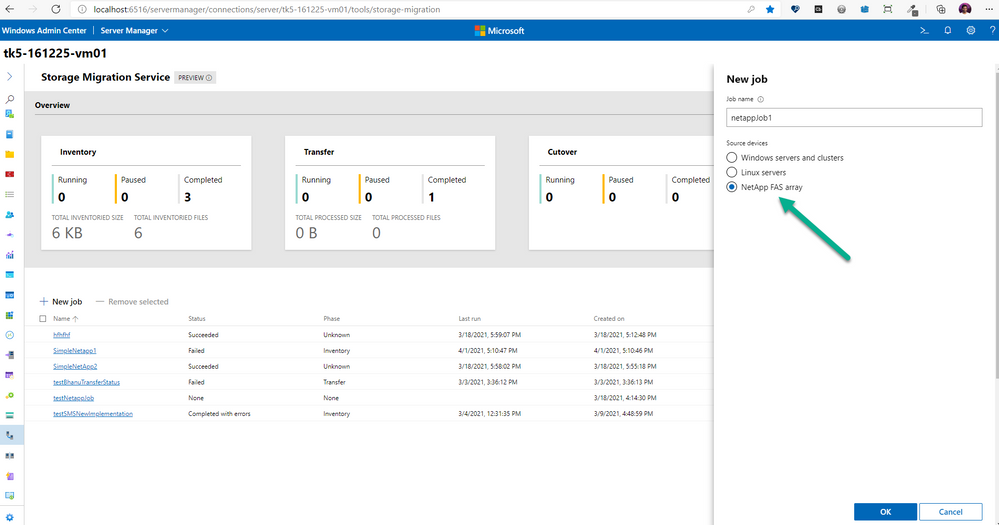

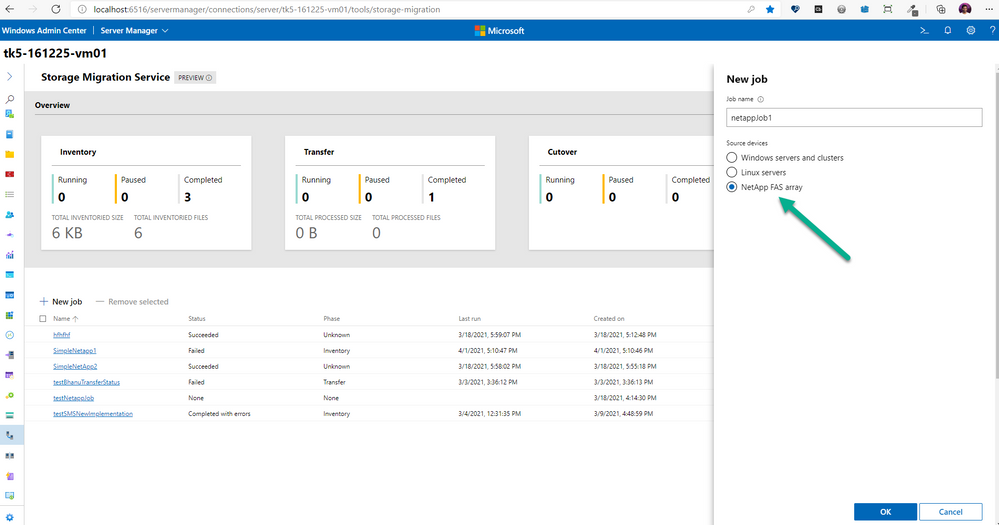

Heya folks, Ned here again. Eagle-eyed readers may have noticed in the April 22, 2021-KB5001384 monthly update for Windows Server 2019 – and now in the May 2021 Patch Tuesday – we added support for migrating from NetApp FAS arrays onto Windows Servers and clusters. I already updated all our SMS documentation on https://aka.ms/storagemigrationservice so you’re good-to-go on steps. There’s a new version of the Windows Admin Center SMS extension that will also automatically update to support the scenario.

We tried to make this experience change as little as possible from the previous scenarios of migrating from Windows Server and Samba. The one big thing to know – and we reiterate this in the docs and WAC – is that you must have a NetApp support contract, because you need to install the NetApp PowerShell Toolkit, which is only available behind that licensed customers-only support site.

You’ll see the new option once you patch your orchestrator server with the May monthly update, update your WAC SMS extension, and install the NetApp PowerShell toolkit on your orchestrator:

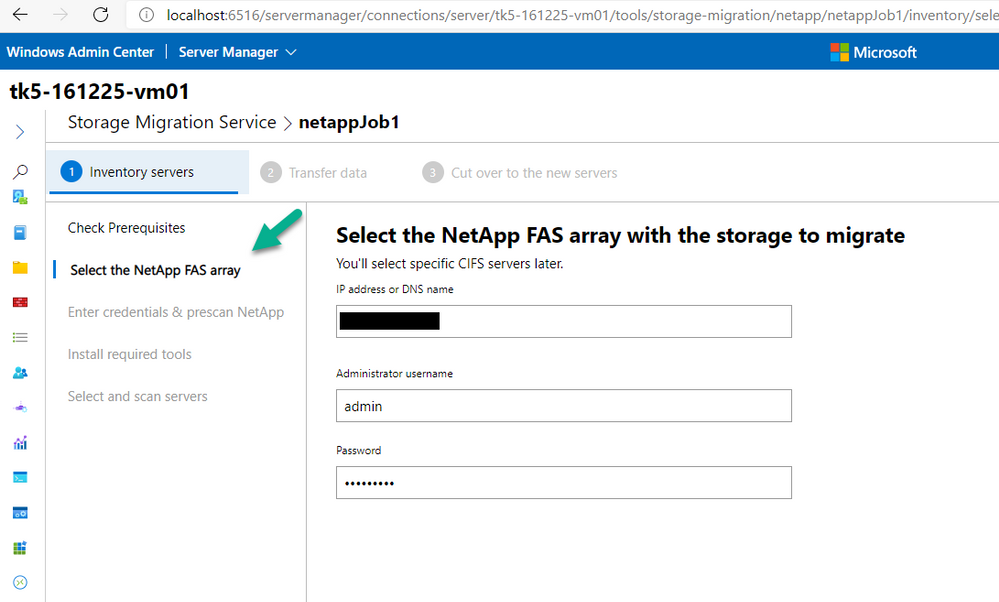

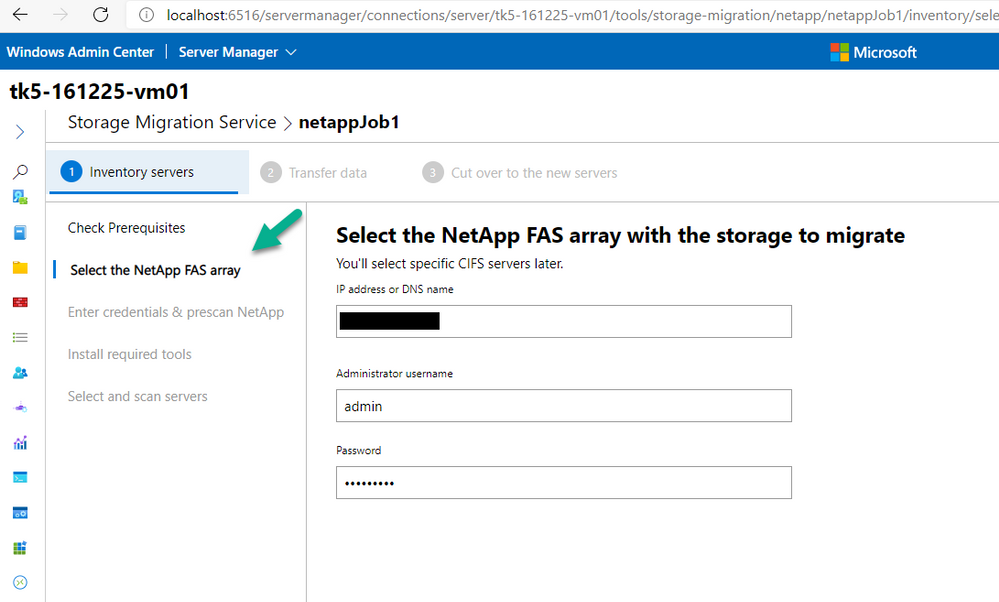

After you create a new job and see the new Prerequisites helper screen, you simply give the network info and creds for your NetApp FAS array and we’ll find all the CIFS (SMB) SVMs running. It’s actually a bit easier than Windows Server sources; since there is a known host array to enumerate, we save you typing in all the SMB servers.

Then you provide the Windows admin source credentials just like usual, and pick which CIFS (virtual) servers you want to migrate.

After that, the migration experience is almost exactly the same as you’re used to with Windows and Samba source migrations. You still perform inventory, transfer, and cutover with exactly the same steps as before. The one difference is that since NetApp CIFS servers don’t use DHCP, you choose either to assign static IP addresses or to use NetApp “subnets” before you start cutover. Voila.

I’ll get a video demo of the experience up here when I find the time. Busy bee with many new things coming soon, eating up my blogging time. :D

– Ned “NedApp” Pyle

by Contributed | May 20, 2021 | Technology

This article is contributed. See the original author and article here.

If you’ve started down the path of developing applications for Microsoft Teams, you may have seen a tool called ngrok as a prerequisite in various tutorials and lab exercises. It’s also integrated with a number of tools such as the Microsoft Teams Toolkit and the yo teams generator; even the Bot Framework Emulator has an ngrok option.

This article will explain what ngrok is, why it’s useful, and what to do instead if you or your company are uncomfortable using it. Also, please check out the companion video for this article, which includes live demos of Teams development with and without ngrok.

Grokking ngrok

First, the shocking truth: Microsoft Teams applications don’t run in Teams. OK, maybe it’s not shocking, but it’s true. Teams applications are made up of regular web pages and web services that you can host anywhere on the Internet. Teams tabs and task modules (dialog boxes) are just embedded web pages; bots and messaging extensions are web services. This allows developers to use their choice of web development stack, and to reuse code and skills.

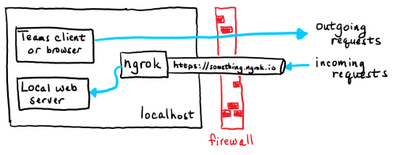

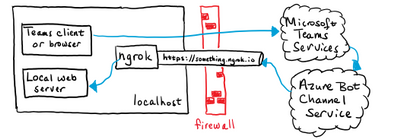

Web developers typically host a small web server on the same computer where they edit their code to run and debug their applications. For Microsoft Teams developers in particular, ngrok is very handy in this situation. Here’s why:

ngrok provides the encryption needed for https, which is required for Teams applications. It’s set up using a trusted TLS certificate so it just works immediately in any web browser.

ngrok provides a tunnel from the Internet to your local computer which can accept incoming requests that are normally blocked by an Internet firewall. The requests in this case are HTTP POSTs that come from the Azure Bot Service. Though tunneling is not required per se, there has to be some way for the Azure Bot Service to send requests to the application.

ngrok provides name resolution with DNS names ending in ngrok.io, so it’s easy to find the public side of the Internet tunnel.

ngrok makes mobile device testing easier since any Internet-connected phone or tablet can reach your app via the tunnel. There’s no need to mess with the phone’s network connections; it just works.

These conveniences have made ngrok the darling of many Microsoft Teams developers. It lets them compile, run, and debug software locally without worrying about any of these details.

The tunneling part, however, lacks the guard rails expected by many IT professionals, especially if they’re managing a traditional corporate network that relies on a shared firewall or proxy server for security. While ngrok only provides access to the local computer where it’s run, an insider “bad actor” could launch attacks from such a machine. For that reason, some IT departments block all access to ngrok.

Many colleagues have suggested other tunneling applications such as Azure Relay or localtunnel. While they may do the job, they still open a tunnel from the public Internet to your development computer, and thus the same security concerns usually arise. This article will only consider approaches that don’t involve tunneling from the Internet.

Tunneling explained

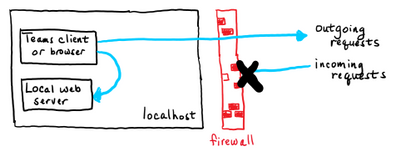

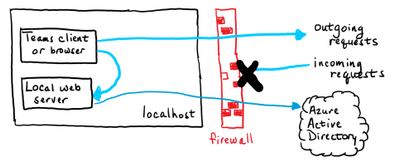

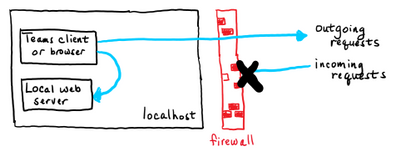

Most computers that are connected to the Internet aren’t connected directly. Network traffic passes through some kind of firewall or NAT router to reach the actual Internet. The firewall allows outgoing requests to servers on the Internet but blocks all incoming requests. This is largely a security measure, but it has other advantages as well.

A typical web developer runs some kind of web server on their local computer. They can access that web server using the hostname localhost, which routes messages through a “loopback” service instead of sending them out on the network. As you can see in the picture, these messages don’t go through the Internet at all.

But what if, in order to test the application, it needs to get requests from the Internet? Bots are an example of this; the requests come from the Azure Bot Service in the cloud, not from a local web browser or other client program. Another place this comes up is when implementing webhooks such as Microsoft Graph change notifications; the notifications are HTTP(s) requests that come from the Internet.

The problem is these incoming requests are normally blocked by the firewall. If you’ve ever opened a port on your home router to allow a gadget to receive incoming connections, this is the same situation.

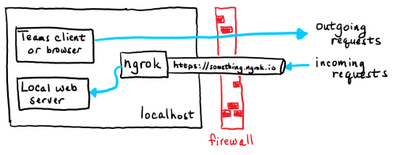

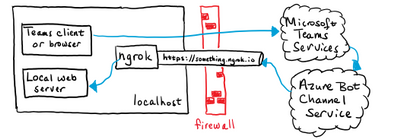

With ngrok running, the incoming requests go through the ngrok service and into your locally running copy of the ngrok application. The ngrok application calls the local server, allowing the developer to run and debug the web server locally.

In the case of Teams development, tabs and task modules only require a local loopback connection, whereas bots and messaging extensions have to handle incoming requests from the Internet. Each is explained in more detail later in this article.

Using ngrok

ngrok is a command line program that works on Windows, Mac OS, Linux and FreeBSD.

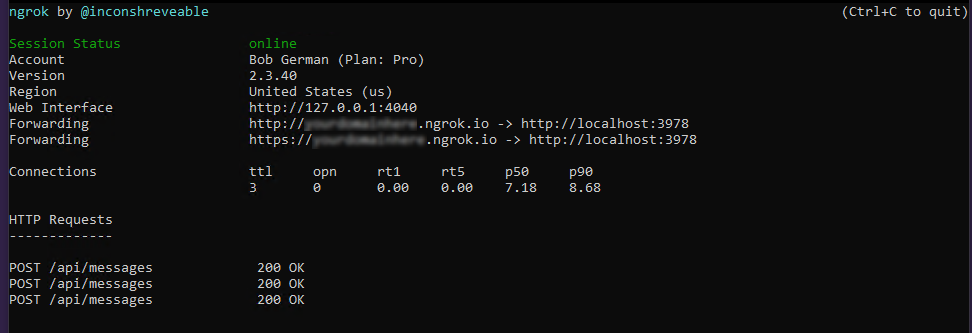

Suppose your local web server is at http://localhost:3978 (the default for bots). Then run this ngrok command line:

ngrok http 3978

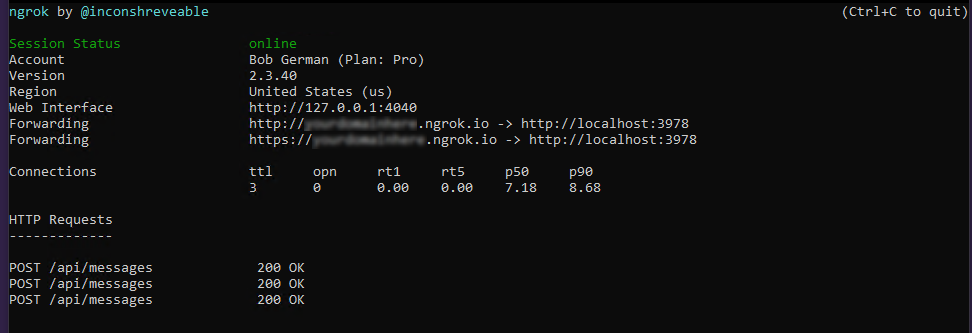

You will then see a screen like this:

The “Forwarding” lines show what’s happening. Requests arriving at http://(something).ngrok.io or https://(something).ngrok.io will be forwarded to http://localhost:3978 where your bot code is running. At this point you would put the “something.ngrok.io” address into your Azure bot configuration, Teams app manifest etc. as the location, and leave the command running while you debug your application.

ngrok URL

The external ngrok url will always look like this:

https://(something).ngrok.io

With the free ngrok service, the value of (something) is different every time you run ngrok, and you’re limited to an 8-hour session. Every time you get a new hostname you need to update your configuration; depending on what you’re doing and how many places you had to enter the hostname, this can be a chore. The paid plans allow you to reserve names that persist, so you can just start ngrok and you’re ready to go.

Hint: If you’re doing a tutorial using the free version of ngrok, make a note of every place you use the ngrok URL. That way you can easily remember where to update it when it changes.
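Once you have that list, a small script can swap the old hostname in one pass. The sketch below is a minimal Python example, assuming every old value matches the (something).ngrok.io pattern described above (for example a bot endpoint URL or a manifest `validDomains` entry); which files you pass in is up to you, and none of this is part of ngrok itself.

```python
import re
from pathlib import Path

# Host names of the form (something).ngrok.io, as described above.
NGROK_HOST = re.compile(r"\b[A-Za-z0-9-]+\.ngrok\.io\b")


def replace_ngrok_host(text: str, new_host: str) -> str:
    """Replace every ngrok.io host name in text, keeping any scheme and path around it."""
    return NGROK_HOST.sub(lambda _match: new_host, text)


def update_files(paths: list[str], new_host: str) -> None:
    """Rewrite each listed file in place, swapping in the new ngrok host name."""
    for p in paths:
        path = Path(p)
        original = path.read_text(encoding="utf-8")
        path.write_text(replace_ngrok_host(original, new_host), encoding="utf-8")
```

For example, `update_files(["manifest.json"], "new-name.ngrok.io")` would update a manifest in the current folder (the file name here is only an illustration). Matching on the host name rather than the full URL also catches bare domain entries that have no `https://` prefix.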

The example above assumes that your local server is running http on the specified port. If your local server is running https you need a different command or it won’t connect:

ngrok http https://localhost:3978

Host header rewriting

The HTTP(s) protocol includes a header called Host, which should contain the host (domain) name and port of the web server. This is used for routing requests to the right server, and for allowing a single web server to serve multiple web sites and services.

If your debug server ignores the host header, you can ignore this section. But some servers will require the header to match the host name, such as localhost:3978. The challenge is that if the request was sent to 12345.ngrok.io, the originator will probably put that in the host header when the server is expecting localhost:3978. To handle this, ngrok provides a command line argument that replaces the host headers with the local host name. Simply add the -host-header command switch to enable this.

For example,

ngrok http -host-header=localhost:3978 3978

If you see the messages go through to your local web server but it doesn’t respond, it may be expecting a specific host header.

All the command options are detailed in the ngrok documentation.
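To illustrate the failure mode, here is a minimal Python sketch of a local server that insists on the Host header, assuming it expects `localhost:3978`. It is a made-up example rather than the behavior of any particular framework: a request forwarded from 12345.ngrok.io without `-host-header` rewriting would be refused, while the rewritten request would be accepted.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Optional

EXPECTED_HOST = "localhost:3978"  # assumed local address from the examples above


def host_is_allowed(host_header: Optional[str], expected: str = EXPECTED_HOST) -> bool:
    """Return True if the Host header matches the expected host:port (case-insensitive)."""
    return host_header is not None and host_header.lower() == expected.lower()


class StrictHostHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        if not host_is_allowed(self.headers.get("Host")):
            # A strict server refuses requests addressed to another host name,
            # such as 12345.ngrok.io when ngrok does not rewrite the header.
            self.send_error(421, "Misdirected Request")
            return
        self.send_response(200)
        self.end_headers()


def serve(port: int = 3978) -> None:
    """Run the demo server on localhost until interrupted."""
    HTTPServer(("localhost", port), StrictHostHandler).serve_forever()
```

Real servers differ in how they react (some return an error, some appear not to respond), which is why the symptom described above can be confusing.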

Network tracing

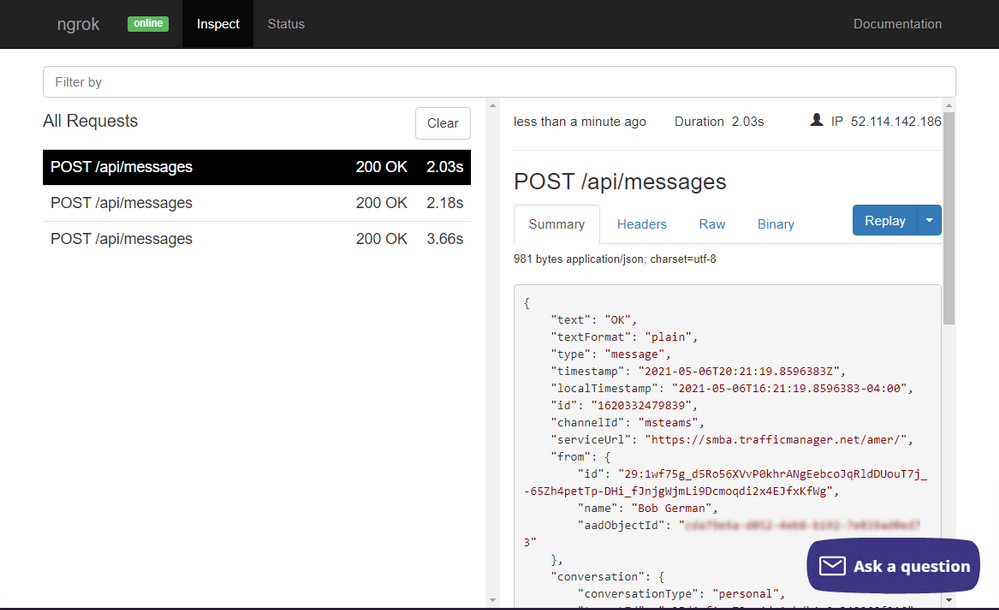

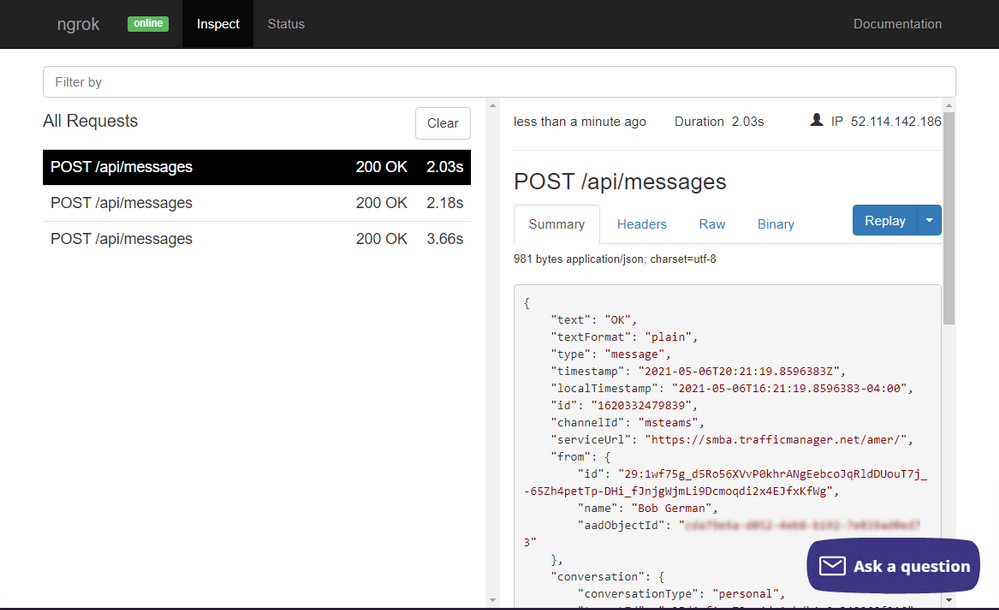

You might notice that the ngrok screen shows a trace of requests that went through the tunnel; in this case they’re HTTP POST requests from the Azure Bot Service, and the local server returned a 200 (OK) response. This is handy because you can see a 500 error from your server code by just glancing at the ngrok screen.

You might also notice the “Web interface” URL on the ngrok screen. It provides a full network trace of what went through the tunnel, which can be very helpful in debugging.
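The ngrok agent also exposes a small JSON API alongside that web interface, which is handy if you want a script to pick up the current forwarding address. The sketch below assumes the ngrok v2 agent defaults (`http://127.0.0.1:4040/api/tunnels`, returning a `tunnels` list whose entries carry `proto` and `public_url`); check the ngrok documentation for the version you run.

```python
import json
from typing import Optional
from urllib.request import urlopen

# Assumed default address of the ngrok agent's local API.
NGROK_API = "http://127.0.0.1:4040/api/tunnels"


def pick_https_url(tunnels_doc: dict) -> Optional[str]:
    """Return the first https public_url in an /api/tunnels response, or None."""
    for tunnel in tunnels_doc.get("tunnels", []):
        if tunnel.get("proto") == "https":
            return tunnel.get("public_url")
    return None


def current_public_url(api: str = NGROK_API) -> Optional[str]:
    """Ask the running ngrok agent for its https forwarding URL."""
    with urlopen(api, timeout=5) as resp:
        return pick_https_url(json.load(resp))
```

With ngrok running, `current_public_url()` should return the same https address shown on the “Forwarding” line.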

Developing Tabs and Web-Page based Teams features

Some Teams application features are based on web pages provided by your application:

- Tabs and Tab Configuration Pages

- Task Modules (dialog boxes)

- Connector Configuration Pages

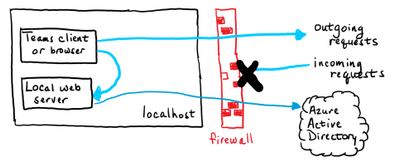

These features are backed by ordinary web pages that are displayed in an IFrame within the Microsoft Teams user interface. Tabs using the Azure Active Directory Single Sign-On (SSO) option also need to implement a web service to do a token exchange. Accessing these via localhost is no problem; no tunnel is required.

However, Teams does require the web server to use a trusted https connection or it won’t display. ngrok translates trusted https requests into local http requests, so it just works. But if you’d rather not have a tunnel to the Internet as part of your setup, you can do this all locally. It’s just more work.

Setting up a trusted https server

On a NodeJS server, you can usually enable https by editing the .env file and setting the HTTPS property to true. For .NET projects in Visual Studio, under project properties on the “Debug” tab, check the “Enable SSL” box to enable https.

But alas, just turning on the https protocol is generally not enough to satisfy this requirement; the connection must be trusted. Trust is established by a digital certificate; if the certificate comes from a trusted authority, is up-to-date, and matches the hostname in the URL, the little padlock in your web browser lights up and all is well. If not, you get errors that you can bypass in most web browsers, but not in Microsoft Teams.
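A quick way to test whether your machine trusts a local server’s certificate is to attempt a verified TLS handshake. In this Python sketch, `ssl.create_default_context()` checks the same three conditions described above: a trusted issuer, valid dates, and a matching host name. Treat a pass as a hint rather than proof, since the certificate store Python consults varies by platform and may not be the one Teams uses.

```python
import socket
import ssl


def check_trusted(host: str, port: int, timeout: float = 3.0) -> tuple[bool, str]:
    """Try a verified TLS handshake with host:port and report the outcome.

    The default context verifies the certificate chain against trusted
    authorities, rejects expired certificates, and requires the host name to match.
    """
    context = ssl.create_default_context()
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            with context.wrap_socket(sock, server_hostname=host):
                return True, "certificate is trusted"
    except ssl.SSLCertVerificationError as err:
        # Untrusted issuer, expired certificate, or host name mismatch.
        return False, f"certificate rejected: {err.verify_message}"
    except OSError as err:
        # Nothing listening, connection refused, timeout, or another TLS failure.
        return False, f"could not connect: {err}"
```

Pointed at a development server that still uses a default self-signed certificate, `check_trusted("localhost", 3000)` would typically come back `False` with a verification message.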

The people over at ngrok acquired their certificate from a trusted authority, and it’s set to match hostnames ending in ngrok.io, so it just works without any fuss. The local web server, on the other hand, will most likely have a self-signed, untrusted certificate. So the trick is to get your browser and/or Microsoft Teams to trust it.

An option that often works is to browse to the local server from a regular web browser, click the security error, and tell the browser to trust the certificate. You can then run Teams in the same browser and bypass the issue. If the Teams client shares the same certificate store as your browser, it will also work. However, these default certificates generally expire after a month or so, and the process will need to be repeated.

A better option is to generate your own certificate and tell your computer to trust it. That way you can control the expiration date and reuse the certificate on multiple projects, so you only need to do the setup once. This is explained in the article, Setting up SSL for tabs in the Teams Toolkit for Visual Studio Code. The instructions are for a Create React App application using the Teams Toolkit but they shouldn’t be too difficult to adapt to other tool chains since the certificate creation and trust parts are the same regardless.
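Once you have a certificate and key your computer trusts, serving over https takes only a few lines in most stacks. This Python sketch assumes hypothetical PEM files named `localhost.crt` and `localhost.key` from whatever certificate process you followed; NodeJS and .NET tool chains have their own settings for loading the same files.

```python
import ssl
from http.server import HTTPServer, SimpleHTTPRequestHandler


def build_tls_context(certfile: str, keyfile: str) -> ssl.SSLContext:
    """Create a server-side TLS context from a PEM certificate and private key."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.load_cert_chain(certfile=certfile, keyfile=keyfile)
    return context


def serve_https(port: int = 3000,
                certfile: str = "localhost.crt",  # hypothetical file name
                keyfile: str = "localhost.key") -> None:  # hypothetical file name
    """Serve the current directory at https://localhost:<port>/ until interrupted."""
    httpd = HTTPServer(("localhost", port), SimpleHTTPRequestHandler)
    httpd.socket = build_tls_context(certfile, keyfile).wrap_socket(
        httpd.socket, server_side=True)
    httpd.serve_forever()
```

If the padlock lights up when you browse to the page, that is a good sign the certificate trust is set up, though Teams will only agree if it uses the same certificate store.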

Mobile device testing

It’s prudent to test Teams applications using the mobile versions of Teams (iOS and Android) to make sure everything looks good and works properly. ngrok makes this a breeze; since your local service is exposed on the public Internet, you can test using any device with an Internet connection, no special setup required.

If you’d prefer not to expose your local server to the Internet, you can always connect your phone locally using wifi and leave the Internet out of it. This picture shows two phones; phone 1 is connected via ngrok and phone 2 is connected locally.

To set up local access you’ll need a server name other than localhost, and you’ll need to open a path on the local network from your phone to your local web server. Here are the high-level steps; the details vary depending on your phone OS, development computer OS, and network configuration.

- Connect your mobile device via wifi to the same network as your development computer.

- Open an incoming port on your development computer’s built-in firewall (port 3000, 3978, 8080, or whatever your local web server is using).

- Determine the local IP address of your development computer; ideally reserve it so it doesn’t change. This can be accomplished in the DHCP section of most home routers or by using a fixed IP address.

- Set up a hosts entry (phones have them too!) or local DNS name to point to your development computer. Again, many home routers have the ability to register a local DNS name so you don’t have to configure it in each device.

- Make sure the https certificate is for this same hostname and not just localhost, and install it as a trusted certificate on your phone.
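For the IP address and hosts-entry steps, a helper like the following can find the address your computer uses on the local network and format a hosts line. It is a Python sketch; the host name `teamsdev.lan` below is a hypothetical example, and connecting a UDP socket only asks the operating system which interface it would route through, so no packet is sent.

```python
import ipaddress
import socket


def local_ip(probe: str = "192.0.2.1") -> str:
    """Return the IPv4 address of the interface the OS would use to reach `probe`.

    192.0.2.1 is a reserved documentation address; connecting a UDP socket
    selects a route without transmitting anything.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as s:
        s.connect((probe, 9))
        return s.getsockname()[0]


def hosts_entry(ip: str, hostname: str) -> str:
    """Format a hosts-file line mapping hostname to a private (LAN) address."""
    if not ipaddress.ip_address(ip).is_private:
        raise ValueError(f"{ip} is not a private LAN address")
    return f"{ip}\t{hostname}"
```

For example, `print(hosts_entry(local_ip(), "teamsdev.lan"))` prints a line you could add to the hosts file on your phone or register on your router. Remember that the https certificate must be issued for that same name.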

While this might not seem easy, it is possible! And it’s a one-time setup that you can use over and over. But you can see that ngrok makes it a whole lot easier.

Developing Bots and Messaging Extensions with and without ngrok

Several features of Teams applications, notably bots and messaging extensions, are based on a web service within your application that is called from the cloud.

All of these are implemented as REST services and could be built with any tool chain, but the requests will come from the cloud, so you need to have a port listening on the Internet to receive those requests.

For this reason, there’s currently no local debugging option in Microsoft Teams that doesn’t involve opening a port on the Internet or using some sort of tunnel, ngrok or otherwise. The same is true for outgoing webhooks (which are outgoing from Teams to your app).
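To make the requirement concrete, here is a bare Python web service that receives those cloud-originated POSTs. It assumes the `/api/messages` path and port 3978 that bot samples commonly use, and it only reads the Bot Framework activity’s `type` and `text` fields and answers 200. It deliberately skips the token validation and reply handling that the Bot Framework SDK provides, so it is an illustration of the plumbing, not a working bot.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Optional


def summarize_activity(body: bytes) -> tuple[str, Optional[str]]:
    """Extract the activity type and text from a Bot Framework activity JSON body."""
    activity = json.loads(body or b"{}")
    return activity.get("type", "unknown"), activity.get("text")


class MessagesHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        if self.path != "/api/messages":  # assumed endpoint path
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        kind, text = summarize_activity(self.rfile.read(length))
        print(f"incoming {kind} activity: {text!r}")
        # A real bot must validate the Authorization header and reply through
        # the Bot Framework; this sketch only acknowledges receipt.
        self.send_response(200)
        self.end_headers()


def serve(port: int = 3978) -> None:
    """Listen on all interfaces; the cloud still needs an open port or tunnel to reach it."""
    HTTPServer(("0.0.0.0", port), MessagesHandler).serve_forever()
```

Running this locally changes nothing about the constraint above: the Azure Bot Service can only deliver requests if something makes port 3978 reachable from the Internet.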

If ngrok isn’t on your road map, don’t worry, there are still options available!

Option 1. Use the Bot Framework Emulator

If you’re building a bot, consider using the Bot Framework Emulator, which allows you to run bots locally without using the Azure Bot Service. Instead of running your bot in Teams, you run it in the emulator. The drawback is that the emulator doesn’t currently understand some Teams-specific features such as messaging extensions or other Invoke activities. However, it does a great job running conversational bots! Adaptive cards work as well, though Invoke card actions do not.

If your bot isn’t too Teams-specific, consider using the Bot Framework Emulator for most debugging and just do final integration testing in Teams, perhaps with the bot deployed to a staging environment that is set up to handle incoming requests.

Option 2. Don’t debug locally

Another approach is to move away from the strategy of local debugging entirely. For example, you could publish your app to Microsoft Azure app service and use the remote debugger. Here are the instructions for Visual Studio Code (NodeJS) and Visual Studio 2019 (.NET).

You could even set up a development virtual machine (VM) in Azure or your cloud service of choice and open an incoming port for the Azure Bot Service. Or just run ngrok in the VM, away from the concerns of a corporate network.

How many services do you have?

Teams applications generated by the yo teams generator have a single web server, so if your app has a combination of features (say, tabs and a bot), you can use a single ngrok tunnel for all your application features.

Teams applications created with the Microsoft Teams Toolkit include multiple web servers (one for tabs, another for bots, and a third for the SSO web service if you use it). If you want to use ngrok, you’ll need a tunnel for each one. This requires the paid service, and you’ll have to start up multiple copies each time you begin debugging. Another option is to use a single ngrok tunnel for your bot, potentially with the free service, and then use local connections for the tab and SSO web service.

Of course, you don’t have to use either of these tools; they’re really just a convenience! You can use any tools you wish and create the Teams application package in App Studio or by editing your own manifest.json file and zipping it up with a couple of icons.
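Zipping the package yourself amounts to placing manifest.json and its icons at the top level of a zip file, since Teams looks for the manifest at the root rather than inside a folder. This Python sketch assumes the icon names `color.png` and `outline.png`; use whatever file names your manifest.json actually references.

```python
import zipfile
from pathlib import Path

# Assumed icon file names; match them to the ones your manifest.json references.
DEFAULT_FILES = ("manifest.json", "color.png", "outline.png")


def read_package_files(source_dir: str, names: tuple[str, ...] = DEFAULT_FILES) -> dict[str, bytes]:
    """Load the manifest and icon files from source_dir."""
    return {name: (Path(source_dir) / name).read_bytes() for name in names}


def build_app_package(contents: dict[str, bytes], output_zip: str) -> list[str]:
    """Write each file at the root of a zip (no folders) and return the archive's entry names."""
    with zipfile.ZipFile(output_zip, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for name, data in contents.items():
            zf.writestr(name, data)
    with zipfile.ZipFile(output_zip) as zf:
        return zf.namelist()
```

For instance, `build_app_package(read_package_files("appPackage"), "app.zip")` would package a hypothetical `appPackage` folder into a file you can upload to Teams.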

Summary

While ngrok isn’t a requirement for Teams development, it does make it a lot easier. However if you can’t or don’t want to open up a tunnel from the Internet, there are other strategies. Many of these, such as local https servers with self-signed certificates, have been around for a long time and may already be familiar.

There is nothing magic about Teams development! It was designed to use standard web tooling, and you can use the same tools that you’d use for any web development project. Trust your knowledge of web development to come up with a configuration that works for you. Just be aware of which services will be called from the Internet and plan accordingly.

by Contributed | May 20, 2021 | Dynamics 365, Microsoft 365, Technology

This article is contributed. See the original author and article here.

Many of the forces driving rapid change in B2C commerce are now propelling transformation in the B2B space. Buyers of all types demand an easy, convenient online shopping experience, a requirement that has been accelerated by the pandemic and as Millennials take the decision-making reins.

Over time, the demand for an omnichannel, user-centric e-commerce experience will grow in every space, including B2B. The good news is that with B2B e-commerce for Microsoft Dynamics 365 Commerce, we’re delivering the same integrated, secure, and seamless experience for B2B e-commerce as we do for B2C companies. Plus, businesses who compete in both spaces can use the same holistic commerce solution in both scenarios.

1. B2B buying is moving online

When examining how the pandemic has accelerated e-commerce, it’s clear that this rapid transformation has touched every industry, every sector, and every business type. Online shopping hit new records before the pandemic and surged more than 209 percent in April 2020 alone, according to ACI.

In the B2B arena, more buyers are shopping online than ever before, too. According to McKinsey & Company, just 20 percent of B2B buyers say they hope to return to in-person sales. This proves to be especially true in sectors where field-sales have long dominated, including pharma and medical products. And this shift is not just for small purchases. B2B consumers are making large purchases online. The same McKinsey & Company research found that 70 percent of B2B decision-makers are open to making remote or self-service purchases over $50,000, and 27 percent would spend more than $500,000.

According to Digital Commerce 360, US B2B e-commerce sales increased by 11.8 percent to $2.19 trillion in 2020, up from $1.3 trillion in 2019. This market is nearly double the opportunity of B2C e-commerce, which Digital Commerce 360 put at $861.12 billion in 2020.

Kent Water Sports have been actively working with Microsoft to bring Dynamics 365 Commerce to both consumers and business partners to improve buying experiences.

“Our customers are thrilled to see inventory levels and receive automatic shipping notifications from our system now that we’ve added the Dynamics 365 B2B e-commerce capabilities. Altogether, it’s a much better experience for our B2B customers.” – Rhett Thompson, IT Corporate Director, Kent Water Sports

2. Supply chain agility is a necessity

The pandemic has highlighted just how critical supply chain agility is, as demonstrated by the headline-making supply chain shocks. In addition to the toilet paper shortages in the pandemic’s early days, other supply chains, such as medical supplies, silicon chips, appliances, and even bicycles, have buckled. A year later, supply chains have still not fully recovered.

According to a November 2020 commissioned study conducted by Forrester Consulting on behalf of Microsoft, supply chain agility is one of the three major areas that retailers and consumer packaged goods companies are focused on as part of a successful digital commerce strategy. These businesses are addressing agility by increasing visibility throughout their product’s journey and embracing technologies such as machine learning and AI to drive automation. Companies must be able to connect supply chain technology with their digital commerce systems to gain access to real-time inventory visibility and leverage AI to optimize fulfillment and ensure inventory availability at the right time and place.

3. User experience is at the forefront

In recent years, consumers have come to expect certain conveniences when shopping online that improve their experiences. From depositing checks through a smartphone app to sending money instantly without a fee to grocery delivery, companies have increasingly made our lives easier through integrated technologies and a focus on the customer experience. When shopping online, B2C consumers have come to expect easy-to-use digital storefronts, customizable products, package tracking, and simplified checkout and payment options.

As Millennials become more senior in business, they’re questioning why B2B should be any different, citing a disconnect between an archaic spreadsheet-driven B2B buying experience and how they conduct their personal online activities. Back in 2017, Forrester already noted that Millennials have taken over B2B buying power, with 73 percent of Millennials involved in B2B purchasing decisions. A more recent study by Demand Gen found that 44 percent of Millennial respondents indicated they are a primary decision-maker at their company for purchases of $10,000 or more.

Creating a seamless customer experience is a must for all businesses. B2B e-commerce is no exception, and companies like Britax Römer have been working with Dynamics 365 Commerce to provide more robust experiences and discoverability for retailers and streamline the customer’s buying journey.

4. Personalization requires integration

Personalization is a growing trend in B2C retail that is making its way to the B2B commerce space, too. B2B buyers are sophisticated. They’re the tech-savvy Millennials and buyers of all ages who are used to seeing personalized recommendations, purchase history for easier reordering, chat options for instant customer service, and more.

Delivering this personalization requires a more integrated customer experience, from in-person to online and back again, which relies upon unified data and greater transparency. In a special blog series for retailers, we discussed how to achieve visibility through Dynamics 365 Commerce in part two of the series. The takeaways from this series can be applied to B2B, too.

Although e-commerce is booming, it’s expected that in-person shopping for both B2C and B2B customers will rebound. Before the pandemic, Capgemini Research Institute found that 59 percent of consumers worldwide had high levels of interaction with brick-and-mortar stores. As things return to the new normal, a lower number, 39 percent, expect a high level of interaction with physical stores. B2B retailers need to deliver seamless omnichannel experiences that tie together online and offline interactions.

5. Security, privacy, and fraud prevention are critical

In our Forrester study, security and privacy were highlighted as the third critical component for successful digital commerce, and expanding e-commerce only widens the scope of risk. In addition to protecting customer data, businesses must protect themselves from both fraud and security breaches. According to Retail TouchPoints, online fraud losses reached $40 billion globally in 2018. The study also showed that 52 percent of retail and CPG decision-makers consider the increasing level of sophistication of online threats or attacks as a major security challenge for digital commerce.

A robust system is required for combating fraud, which is why we’ve made it easy to integrate Microsoft Dynamics 365 Fraud Protection into Dynamics 365 Commerce. It provides tools and capabilities to decrease fraud and abuse, reduce operational expenses, and increase acceptance rates while safeguarding user accounts.

How to get started with B2B e-commerce for Dynamics 365 Commerce

We’ve created B2B e-commerce for Dynamics 365 Commerce to help you streamline and simplify self-service buying for your B2B customers. It provides a unified omnichannel solution that can meet organizational needs for both B2C and B2B e-commerce in a single platform. It also helps companies provide more personalized e-commerce experiences while ramping up security, privacy, and fraud prevention.

Learn more about B2B e-commerce for Dynamics 365 Commerce today or contact us to join a demo or to speak with a specialist about Dynamics 365 Commerce.

The post 5 trends transforming B2B buying and e-commerce appeared first on Microsoft Dynamics 365 Blog.

Brought to you by Dr. Ware, Microsoft Office 365 Silver Partner, Charleston SC.
